LPIC-2 (202-450) Complete System Administration Guide: Professional Linux Network Services Mastery

Pursuing Linux Professional Institute LPIC-2 certification marks a significant milestone for experienced system administrators seeking to demonstrate advanced Linux expertise. This specialized examination, coded 202-450, constitutes the second component of LPIC-2 certification, concentrating on network service management, security frameworks, and enterprise system administration competencies.

The LPIC-2 202-450 exam tests your capability to manage complex Linux environments, encompassing DNS infrastructure, web service deployment, file sharing systems, authentication protocols, and comprehensive security architectures. This certification track requires substantial hands-on experience alongside theoretical mastery of contemporary Linux technologies and administrative practices.

Obtaining LPIC-2 certification establishes you as an expert practitioner equipped to design, deploy, and maintain sophisticated Linux solutions in enterprise settings. This qualification confirms your proficiency in addressing complex system integration challenges, enhancing network efficiency, and maintaining strong security frameworks across varied organizational infrastructures.

Complete Overview of LPIC-2 Certification Framework

The Linux Professional Institute LPIC-2 credential represents a prestigious advanced qualification within the internationally recognized LPI certification pathway, specifically engineered to validate sophisticated system administration skills in Linux environments. This certification targets experienced IT professionals who have shown extensive competency in managing complex Linux infrastructures, emphasizing crucial areas including network service administration, security implementation, kernel optimization, and enterprise-grade system management.

The LPIC-2 certification consists of two complementary examinations. Exam 201-450 covers capacity planning, the Linux kernel, system startup, filesystems and devices, advanced storage administration, networking configuration, and system maintenance. Exam 202-450 covers DNS, HTTP services, file sharing, network client management, e-mail services, and system security. Candidates must pass both examinations to earn LPIC-2 certification, which ensures a comprehensive understanding of advanced Linux system administration.

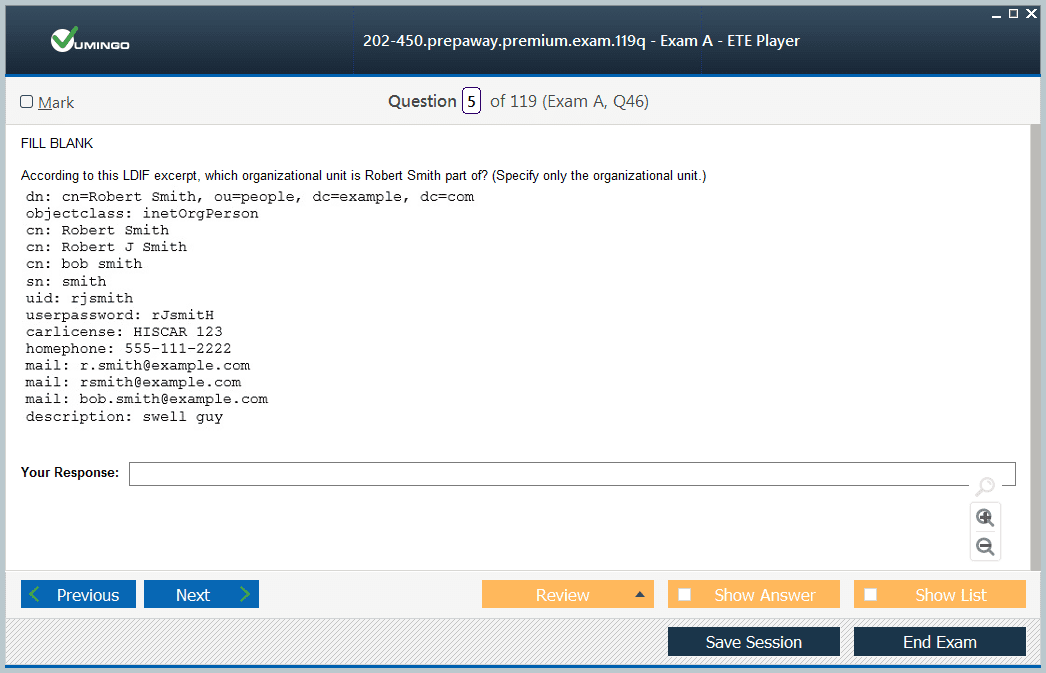

Each examination contains 60 questions to be answered within 90 minutes. Questions are either multiple-choice (with one or several correct answers) or fill-in-the-blank, where the candidate types a command, file name, or option; many multiple-choice items are framed as short administrative scenarios. This mix is designed to test both conceptual understanding and the practical, hands-on knowledge needed to manage enterprise Linux systems.

Detailed Analysis of LPIC-2 202-450 Network Infrastructure and Security

The LPIC-2 202-450 examination places significant emphasis on network service implementation and security deployment—essential competencies for administrators operating within dynamic, enterprise-level Linux environments. Candidates face evaluation on their capacity to configure and maintain critical services including Domain Name System (DNS), Dynamic Host Configuration Protocol (DHCP), Lightweight Directory Access Protocol (LDAP), and web services featuring Apache and Nginx.

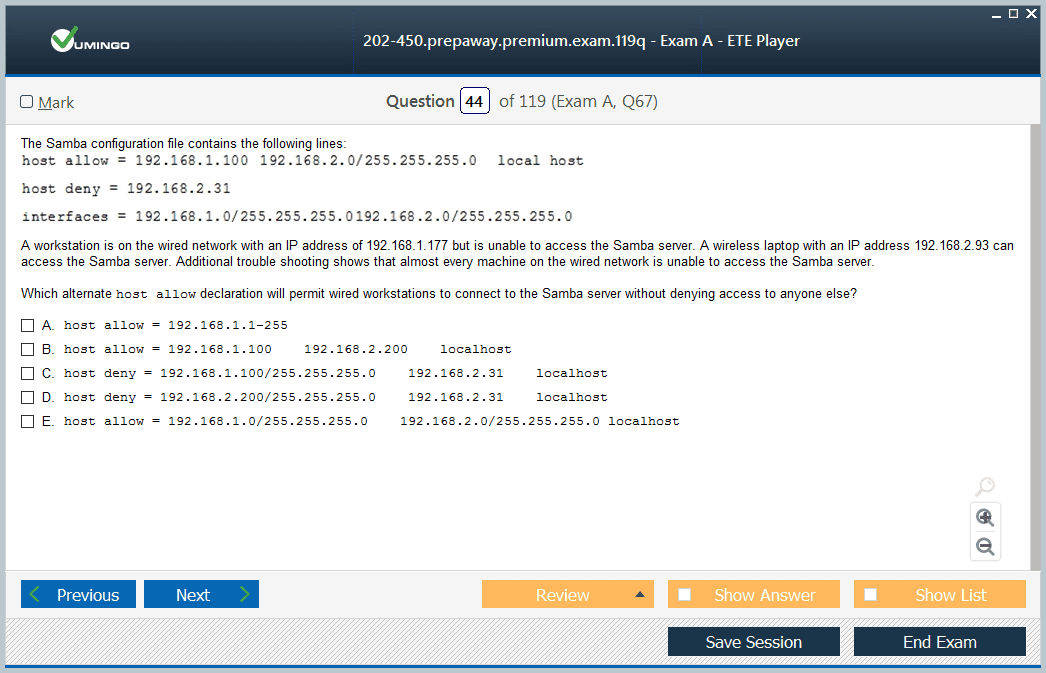

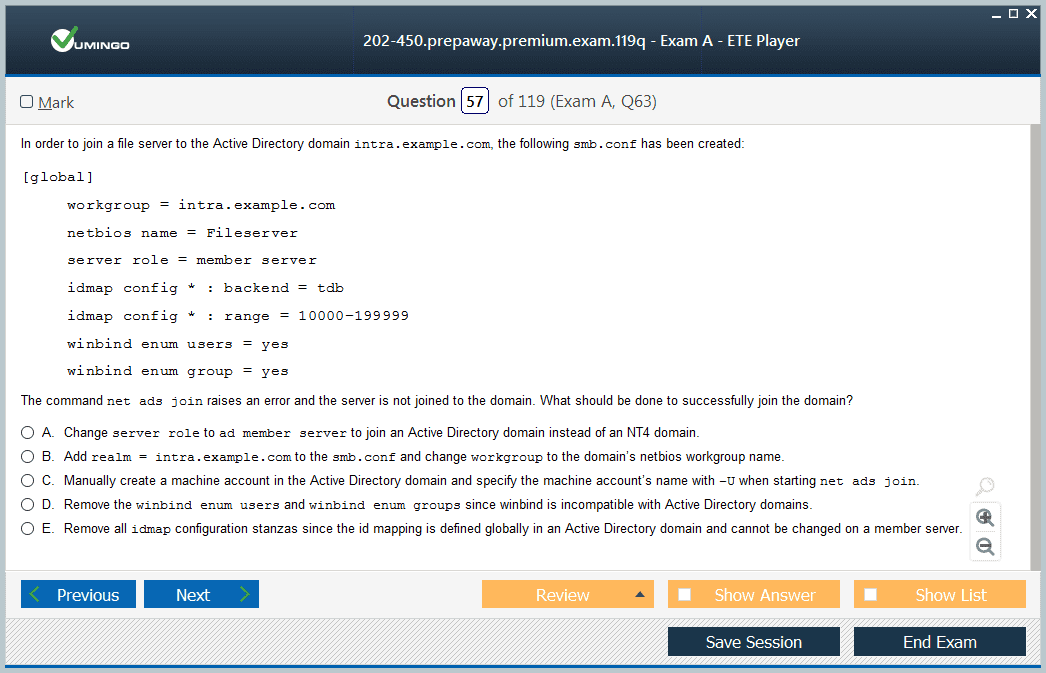

File sharing with Samba and NFS, together with authentication frameworks, forms another core study area, requiring candidates to integrate diverse systems while preserving rigorous security standards. The examination validates expertise in deploying and securing these services in complex environments with attention to reliability, scalability, and continuous availability.

Security implementations within this exam encompass firewall administration, intrusion prevention, encryption methodologies, and secure communication frameworks, reflecting real-world requirements for protecting sensitive data and network infrastructure. Candidates must demonstrate capability to implement security best practices, perform vulnerability evaluations, and deploy appropriate countermeasures to protect Linux servers against evolving cyber threats.

Core Prerequisites and Educational Requirements

Achieving LPIC-2 certification requires possession of current LPIC-1 certification as a mandatory foundation, ensuring candidates maintain solid grounding in fundamental Linux system administration. This prerequisite structure promotes systematic skill development, building upon established competencies to address more complex administrative challenges effectively.

LPIC-1 certification encompasses fundamental competencies including basic system operations, user and group administration, file system management, package administration, and introductory networking concepts. Mastery of these core topics prepares candidates for advanced system configurations, network services, and security responsibilities encountered at the LPIC-2 level.

While formal practical experience requirements aren't strictly mandated, industry recommendations strongly suggest candidates possess three to five years of hands-on Linux administration experience. Exposure to various Linux distributions, hardware platforms, and organizational environments enhances candidates' practical capabilities and improves their preparation for rigorous LPIC-2 examinations.

Advanced Administrative Capabilities and Professional Skills

LPIC-2 certified professionals must demonstrate sophisticated system administration abilities that extend beyond basic operational knowledge. These encompass kernel compilation and optimization to enhance system performance, complex storage administration involving RAID configurations, Logical Volume Manager (LVM), and file system maintenance to maximize data reliability and accessibility.

Network service deployment expertise includes configuring mail infrastructure, proxy services, and enterprise-grade directory services supporting large-scale infrastructures. Security framework implementation involves designing and maintaining firewall configurations, SELinux/AppArmor implementations, and encrypted communication protocols to maintain stringent data protection standards.

Additionally, LPIC-2 professionals should possess leadership and mentoring capabilities, guiding junior administrators, consulting management on technology strategies, and contributing to organizational policy development. This certification indicates readiness for senior positions requiring both technical expertise and strategic perspective within IT organizations.

Ongoing Education and Industry Relevance for LPIC-2 Professionals

The rapidly changing landscape of Linux technologies and cybersecurity demands continuous commitment to professional development for LPIC-2 certified individuals. Maintaining currency with emerging tools, kernel developments, network protocols, and threat intelligence remains crucial for sustaining effectiveness and relevance in dynamic IT environments.

LPIC-2 certification holders should engage in continuous education through specialized training, workshops, and participation in professional communities. This commitment ensures they remain proficient at implementing contemporary best practices, adapting to new challenges, and leveraging innovations to enhance system security and performance.

Furthermore, the LPIC-2 credential provides a foundation for pursuing higher-level certifications and specialized Linux tracks, enabling professionals to expand their expertise and career opportunities progressively.

Varied Career Growth Opportunities and Professional Recognition

LPIC-2 certification creates numerous pathways for career advancement across multiple sectors including finance, healthcare, government, and technology. The credential enjoys global recognition, facilitating international mobility and enhancing employability for roles demanding advanced Linux administration skills.

Senior system administrator positions are a common next step, involving management of complex multi-server environments, performance optimization, and orchestration of enterprise-scale deployments. These roles often include responsibility for disaster recovery planning, compliance maintenance, and operational excellence.

Linux architect positions draw on LPIC-2 expertise to design scalable, secure, and efficient infrastructure blueprints, requiring a combination of deep technical knowledge and strategic planning. Consulting opportunities in areas such as security audits, migration projects, and performance optimization are also common for certified professionals.

Additionally, DevOps and cloud engineering roles increasingly value LPIC-2 certification, recognizing the importance of comprehensive Linux knowledge in infrastructure automation, continuous integration pipelines, and container orchestration. Training and educational positions further enable certified professionals to share knowledge, fostering community growth and technical excellence.

LPIC-2 Examination Framework and Comprehensive Assessment Methods

The LPIC-2 examinations utilize sophisticated assessment techniques that comprehensively evaluate candidate theoretical understanding and practical proficiency. Question formats include multiple-choice items testing recall and conceptual knowledge, fill-in-the-blank commands assessing precise syntax familiarity, and scenario-driven challenges demanding analytical reasoning and effective solution design.

Emphasis remains on real-world applicability, with many questions simulating complex administrative situations requiring multi-step problem-solving under time constraints. This approach ensures candidates are evaluated on their capacity to integrate various Linux tools and technologies in cohesive, efficient workflows.

The examination's topic weighting aligns with current enterprise priorities, with substantial focus on network service configuration, security implementation, and system integration. Each exam objective carries a published weight that indicates its relative share of the questions, which helps candidates plan their study time.

Answers are scored on correctness, but many questions are written so that the correct option is the one reflecting security awareness, efficiency, and adherence to industry best practices: the professional judgment expected of senior Linux administrators.

Core DNS Infrastructure and Architectural Excellence

Domain Name System administration is a core part of advanced Linux system administration and has its own topic in the LPIC-2 202-450 examination. A thorough understanding of DNS infrastructure and architectural design is critical for managing scalable, resilient, and secure networks. DNS underpins internet and intranet communication by translating human-readable domain names into IP addresses, enabling seamless connectivity across diverse systems.

Thorough understanding of DNS architecture includes familiarity with authoritative name servers responsible for definitive domain information, recursive resolvers processing client queries by navigating the DNS hierarchy, and caching mechanisms storing query results temporarily to expedite future resolutions. Zone delegation, partitioning DNS namespaces into manageable segments, plays a crucial role in enabling distributed administration and enhancing reliability.

Linux administrators must master BIND (Berkeley Internet Name Domain), the most widely deployed DNS server on Linux, valued for its extensive configurability and its support for dynamic updates, security extensions, and performance tuning. Expertise in BIND's configuration syntax (named.conf), zone file management, and server operation (for example with rndc) forms the basis of efficient DNS administration in enterprise settings.

Comprehensive DNS Security Implementation Approaches

Security within DNS infrastructure remains paramount due to the system's critical role in network operations and its vulnerability to various attacks such as cache poisoning, spoofing, and denial-of-service assaults. The LPIC-2 examination emphasizes the necessity for deploying robust DNS security measures that mitigate these risks while maintaining service availability.

DNSSEC (Domain Name System Security Extensions) protects DNS integrity by enabling cryptographic validation of DNS responses. Zone data is signed with private keys, and validating resolvers check the signatures with the corresponding public keys, so forged or tampered answers for signed zones are detected and rejected; this defeats cache poisoning for those zones. Understanding the DNSSEC key lifecycle (generation, rollover, and revocation) is vital for keeping DNS data trustworthy over time.

Access control lists (ACLs) are essential for restricting which clients can query DNS servers or perform zone transfers. Proper ACL configuration ensures sensitive zone data remains confidential and prevents unauthorized zone replication, while balancing operational requirements such as legitimate client access and replication synchronization.
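In BIND these restrictions are written as `acl` blocks referenced from `allow-query` and `allow-transfer`. The Python sketch below only illustrates the matching logic such lists imply, assuming hypothetical ACL contents drawn from documentation and private address ranges; it is not how BIND evaluates ACLs internally (for example, it ignores negated entries and TSIG keys).

```python
import ipaddress
from typing import List

# Hypothetical ACL contents (documentation and private ranges only),
# standing in for BIND acl blocks used by allow-transfer / allow-query.
TRANSFER_ACL = ["192.0.2.53/32", "198.51.100.0/24"]   # secondaries only
QUERY_ACL = ["10.0.0.0/8", "192.168.0.0/16", "127.0.0.1/32"]


def matches_acl(client_ip: str, acl: List[str]) -> bool:
    """True if client_ip lies inside any network listed in the ACL."""
    addr = ipaddress.ip_address(client_ip)
    return any(addr in ipaddress.ip_network(net) for net in acl)


def may_transfer_zone(client_ip: str) -> bool:
    return matches_acl(client_ip, TRANSFER_ACL)


def may_query(client_ip: str) -> bool:
    return matches_acl(client_ip, QUERY_ACL)


if __name__ == "__main__":
    for ip in ("192.0.2.53", "10.1.2.3", "203.0.113.7"):
        print(ip, "query:", may_query(ip), "transfer:", may_transfer_zone(ip))
```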

Modern DNS security architectures also incorporate DNS filtering techniques, blocking malicious or unwanted domains to safeguard users from phishing, malware, and other threats. Rate limiting policies help protect DNS servers from resource exhaustion and distributed denial-of-service attacks by controlling the volume of requests processed over time.
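Rate limiting is often implemented with a token bucket: each client earns tokens at a fixed rate up to a burst ceiling, and each answered query spends one. The sketch below assumes a hypothetical policy of 5 queries per second with a burst of 10 per client address; it is a generic illustration, not the algorithm behind BIND's own `rate-limit` option.

```python
import time
from typing import Dict, Optional


class TokenBucket:
    """Allow `rate` events per second on average, bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: float,
                 now: Optional[float] = None) -> None:
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity
        self.last = time.monotonic() if now is None else now

    def allow(self, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        # Refill in proportion to elapsed time, capped at the burst size.
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1      # spend one token for this query
            return True
        return False              # over the limit: drop or truncate the reply


# Hypothetical policy: 5 queries/s per client address, bursts of 10.
buckets: Dict[str, TokenBucket] = {}


def should_answer(client_ip: str, now: float) -> bool:
    if client_ip not in buckets:
        buckets[client_ip] = TokenBucket(rate=5, capacity=10, now=now)
    return buckets[client_ip].allow(now)
```

Passing explicit timestamps keeps the behavior easy to test; a live server would call `allow()` without arguments and rely on `time.monotonic()`.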

Logging and monitoring provide the visibility required for proactive security management. Collecting and analyzing DNS query logs, zone-transfer events, and anomaly metrics enables administrators to identify suspicious activity quickly and respond effectively. Encrypted DNS protocols such as DNS over HTTPS (DoH) and DNS over TLS (DoT) further improve privacy by encrypting DNS traffic between clients and resolvers, preventing interception and tampering in transit.

DNS Diagnostic and Performance Enhancement Techniques

Effective DNS management demands proficient troubleshooting skills to rapidly identify and resolve resolution errors, performance bottlenecks, and misconfigurations. LPIC-2 candidates must master systematic diagnostic methodology leveraging various command-line utilities, log file analysis, and network traffic inspection tools.

Command-line utilities such as dig, nslookup, and host provide comprehensive capabilities to query DNS servers, validate zone configurations, and examine response details including time-to-live (TTL) values, authoritative answers, and error statuses. Mastery of these tools includes understanding their advanced options for recursive queries, reverse lookups, and debugging.
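Reverse lookups such as `dig -x 192.0.2.10` or `host 192.0.2.10` query a PTR record under `in-addr.arpa` (IPv4) or `ip6.arpa` (IPv6). The short sketch below uses Python's standard `ipaddress` module to show how that query name is derived; the addresses are documentation examples.

```python
import ipaddress


def ptr_name(ip: str) -> str:
    """Return the PTR query name used for a reverse lookup of ip."""
    # reverse_pointer yields the in-addr.arpa / ip6.arpa form; DNS tools
    # print it with a trailing dot because it is fully qualified.
    return ipaddress.ip_address(ip).reverse_pointer + "."


print(ptr_name("192.0.2.10"))  # 10.2.0.192.in-addr.arpa.
```

For IPv6 the name is built from all 32 hexadecimal nibbles of the fully expanded address, in reverse order.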

Analyzing DNS server logs reveals critical insights into query patterns, failure reasons, and potential security incidents. Recognizing log formats and employing automated parsing tools facilitates efficient monitoring and alerting, minimizing downtime caused by DNS faults.

Network packet analyzers like tcpdump and Wireshark enable granular inspection of DNS traffic at the packet level. These tools assist in diagnosing network connectivity issues, protocol compliance problems, and identifying potential attacks such as spoofing or replay.
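When reading a capture in tcpdump or Wireshark, the flags and counters displayed come from the fixed 12-byte DNS header defined in RFC 1035. The sketch below builds a minimal query and decodes such a header with Python's `struct` module; it handles only the header and question encoding, not name compression or full response parsing.

```python
import struct
from typing import Dict


def build_query(name: str, qtype: int = 1, ident: int = 0x1234) -> bytes:
    """Encode a minimal DNS query (QTYPE 1 = A, QCLASS 1 = IN)."""
    # Header: ID, flags (only RD set), QDCOUNT=1, ANCOUNT=NSCOUNT=ARCOUNT=0.
    header = struct.pack("!6H", ident, 0x0100, 1, 0, 0, 0)
    # QNAME: each label prefixed by its length, terminated by a zero byte.
    labels = [label for label in name.rstrip(".").split(".") if label]
    qname = b"".join(bytes([len(l)]) + l.encode("ascii") for l in labels) + b"\x00"
    return header + qname + struct.pack("!2H", qtype, 1)


def parse_header(packet: bytes) -> Dict[str, object]:
    """Decode the fixed 12-byte header that starts every DNS message."""
    if len(packet) < 12:
        raise ValueError("packet shorter than a DNS header")
    ident, flags, qd, an, ns, ar = struct.unpack("!6H", packet[:12])
    return {
        "id": ident,
        "qr": "response" if flags & 0x8000 else "query",
        "opcode": (flags >> 11) & 0xF,     # 0 = standard query
        "aa": bool(flags & 0x0400),        # authoritative answer
        "tc": bool(flags & 0x0200),        # truncated (retry over TCP)
        "rd": bool(flags & 0x0100),        # recursion desired
        "ra": bool(flags & 0x0080),        # recursion available
        "rcode": flags & 0x000F,           # 0 NOERROR, 2 SERVFAIL, 3 NXDOMAIN
        "qdcount": qd, "ancount": an, "nscount": ns, "arcount": ar,
    }
```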

Performance tuning focuses on caching behavior that balances freshness against load reduction. Adjusting TTL settings, query rate limits, and resource allocations keeps DNS servers responsive under high query volumes. Load-balancing configurations distribute queries across multiple DNS servers, improving responsiveness and fault tolerance, and high-availability designs with failover keep the service running when an individual server fails.
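The freshness-versus-load trade-off comes from TTL-based caching: a resolver reuses an answer until its TTL runs out and reports the remaining TTL to clients, which is why repeated `dig` queries against a caching resolver show the TTL counting down. The minimal sketch below models that behavior with explicit timestamps; the record data is hypothetical, and real resolvers also cache negative answers, which this ignores.

```python
from typing import Dict, Optional, Tuple


class TTLCache:
    """Resolver-style cache: each answer expires after its TTL."""

    def __init__(self) -> None:
        # (name, type) -> (value, absolute expiry time)
        self._store: Dict[Tuple[str, str], Tuple[str, float]] = {}

    def put(self, name: str, rtype: str, value: str, ttl: int, now: float) -> None:
        self._store[(name.lower(), rtype)] = (value, now + ttl)

    def get(self, name: str, rtype: str, now: float) -> Optional[str]:
        key = (name.lower(), rtype)
        entry = self._store.get(key)
        if entry is None:
            return None
        value, expires = entry
        if now >= expires:
            del self._store[key]   # expired: next lookup goes upstream again
            return None
        return value

    def remaining_ttl(self, name: str, rtype: str, now: float) -> Optional[int]:
        entry = self._store.get((name.lower(), rtype))
        if entry is None or now >= entry[1]:
            return None
        return int(entry[1] - now)
```

A longer TTL means fewer upstream queries but slower propagation of record changes; a shorter TTL reverses that balance.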

Capacity planning based on query volume trends, projected growth, and organizational needs informs infrastructure scaling decisions. Proactive resource allocation avoids performance degradation during traffic surges and supports sustainable expansion of DNS services.

Dynamic DNS and Network Service Integration

Dynamic DNS functionality empowers DNS servers to automatically update zone records in response to changing network conditions, supporting environments where IP addresses frequently change such as DHCP-managed networks and mobile clients. This capability becomes increasingly vital for modern infrastructures with dynamic, ephemeral addressing schemes.

Integrating DHCP with DNS automates hostname resolution by registering dynamically assigned IP addresses with corresponding DNS entries. This synchronization ensures devices remain accessible by name even as their IP addresses change, simplifying network management and improving operational accuracy.
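One common way to script the DHCP-to-DNS link is to feed commands to BIND's `nsupdate` utility, for example from a DHCP lease hook. The sketch below only generates the command text; the host, zone, address, TTL, and server are hypothetical, and in practice the update would be authenticated (for example with `nsupdate -k` and a TSIG key file) and the zone configured to accept it. ISC DHCP and Kea can also perform such updates themselves.

```python
import ipaddress
import re

# One DNS label: letters, digits, hyphens; no leading/trailing hyphen.
LABEL = re.compile(r"[a-z0-9]([a-z0-9-]{0,61}[a-z0-9])?", re.IGNORECASE)


def nsupdate_commands(host: str, zone: str, ip: str, ttl: int = 300,
                      server: str = "127.0.0.1") -> str:
    """Build nsupdate input that replaces host's A record with ip."""
    if not LABEL.fullmatch(host):
        raise ValueError(f"invalid host label: {host!r}")
    addr = ipaddress.IPv4Address(ip)   # raises ValueError on bad input
    zone = zone.rstrip(".") + "."
    fqdn = f"{host}.{zone}"
    return "\n".join([
        f"server {server}",
        f"zone {zone}",
        f"update delete {fqdn} A",             # drop any stale address
        f"update add {fqdn} {ttl} A {addr}",   # register the current lease
        "send",
    ]) + "\n"


print(nsupdate_commands("laptop42", "example.com", "192.0.2.77"), end="")
```

Validating the hostname and address before building the text matters because lease data comes from clients. A matching PTR update would need a second block for the reverse zone.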

DNS views and split-horizon configurations allow the same DNS server to provide different responses based on client attributes such as source IP or authentication status. This facilitates tailored network access policies, enabling internal users to access sensitive internal records while presenting limited or altered information to external clients. Such configurations bolster security and optimize resource access control.
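BIND implements split-horizon DNS with `view` blocks whose `match-clients` lists are checked in order, with the first matching view answering the query. The sketch below mimics that first-match rule under hypothetical view definitions and zone data, so an internal client sees an intranet record that an external client cannot resolve.

```python
import ipaddress
from typing import Dict, List, Optional, Tuple

# Hypothetical views in evaluation order; the first match wins.
VIEWS: List[Tuple[str, List[str]]] = [
    ("internal", ["10.0.0.0/8", "192.168.0.0/16"]),
    ("external", ["0.0.0.0/0", "::/0"]),
]

# Hypothetical per-view data for the same namespace.
ZONE_DATA: Dict[str, Dict[str, str]] = {
    "internal": {"intranet.example.com": "10.0.0.20"},
    "external": {},   # intranet name is not published externally
}


def select_view(client_ip: str) -> Optional[str]:
    addr = ipaddress.ip_address(client_ip)
    for view, networks in VIEWS:
        if any(addr in ipaddress.ip_network(n) for n in networks):
            return view
    return None


def resolve(client_ip: str, qname: str) -> Optional[str]:
    view = select_view(client_ip)
    return None if view is None else ZONE_DATA[view].get(qname)
```

Because evaluation stops at the first match, the broad "external" view must come last; placed first, it would capture internal clients too.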

Advanced DNS server roles include slave (secondary) and stealth servers. Slave servers are secondary authoritative sources that keep synchronized copies of zones transferred from master servers, providing redundancy and load distribution. A stealth server is an authoritative server that is not listed in the zone's NS records, so outside resolvers never query it directly; it typically acts as a hidden master feeding the public slaves, or serves internal clients only, which limits its exposure.

Load balancing techniques distribute client requests across multiple backend services or servers, improving response times and resilience. Various algorithms including round-robin, weighted distribution, and geographic-based balancing accommodate diverse deployment scenarios, supporting highly available and scalable DNS infrastructures.
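Weighted distribution can be done with the smooth weighted round-robin method: on every pick each server's running score grows by its weight, the highest score wins, and the winner's score drops by the total weight. This spreads a heavy server's turns out instead of sending them in a burst. The sketch below is a generic illustration with hypothetical server names and weights, not the balancing code of any particular DNS product.

```python
from typing import Dict


class SmoothWeightedRR:
    """Smooth weighted round-robin over named servers."""

    def __init__(self, weights: Dict[str, int]) -> None:
        if not weights or any(w <= 0 for w in weights.values()):
            raise ValueError("weights must be positive")
        self.weights = dict(weights)
        self.total = sum(self.weights.values())
        self.current = {name: 0 for name in self.weights}

    def pick(self) -> str:
        # Every server earns its weight; the highest running score wins
        # (ties go to the server listed first).
        for name, weight in self.weights.items():
            self.current[name] += weight
        chosen = max(self.current, key=self.current.get)
        # The winner pays back the total so the others catch up.
        self.current[chosen] -= self.total
        return chosen


if __name__ == "__main__":
    rr = SmoothWeightedRR({"ns-a": 5, "ns-b": 1, "ns-c": 1})
    print([rr.pick() for _ in range(7)])
```

With weights 5, 1, and 1, the first seven picks are `ns-a, ns-a, ns-b, ns-a, ns-c, ns-a, ns-a`, after which the cycle repeats.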

Complete Zone File Administration and Record Setup

Zone files constitute the cornerstone of DNS server configuration, defining domain name resolution behavior through a structured collection of resource records. Proficiency in crafting, maintaining, and optimizing zone files remains essential for LPIC-2 certified administrators.

Key record types include A (IPv4 address mapping), AAAA (IPv6 address mapping), CNAME (canonical name aliases), MX (mail exchange servers), PTR (reverse DNS mappings), and TXT (text annotations for verification and security purposes). Understanding each record's syntax, semantics, and usage remains crucial for accurate DNS response generation and interoperability with network services.
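A small generator can keep record syntax consistent. The sketch below renders a hypothetical `example.com` zone using relative owner names, an MX preference value, a quoted TXT string, and a CNAME, and it rejects a CNAME that shares its owner name with other records (a rule from RFC 1034). The SOA name server, mailbox, and timer values are placeholder assumptions, and the output should still be checked with `named-checkzone` before it is loaded.

```python
from typing import Iterable, List, Tuple

Record = Tuple[str, str, str]  # (owner, type, rdata)

# Hypothetical records; relative owners are completed with $ORIGIN.
RECORDS: List[Record] = [
    ("@", "NS", "ns1.example.com."),
    ("@", "MX", "10 mail.example.com."),      # preference, then mail host
    ("@", "TXT", '"v=spf1 mx -all"'),         # TXT data must be quoted
    ("ns1", "A", "192.0.2.53"),
    ("mail", "A", "192.0.2.25"),
    ("www", "A", "192.0.2.80"),
    ("www", "AAAA", "2001:db8::80"),
    ("ftp", "CNAME", "www"),                  # relative target: www.example.com.
]


def render_zone(origin: str, records: Iterable[Record], serial: int = 1,
                ttl: int = 3600) -> str:
    records = list(records)
    # A CNAME owner may not carry any other record type.
    for owner in {o for o, t, _ in records if t == "CNAME"}:
        if sum(1 for o, _, _ in records if o == owner) > 1:
            raise ValueError(f"CNAME at {owner!r} conflicts with other records")
    origin = origin.rstrip(".") + "."
    lines = [
        f"$ORIGIN {origin}",
        f"$TTL {ttl}",
        # SOA: primary NS, mailbox, serial, refresh, retry, expire, negative TTL
        f"@\tIN\tSOA\tns1.{origin} hostmaster.{origin} {serial} 7200 3600 1209600 3600",
    ]
    lines += [f"{owner}\tIN\t{rtype}\t{rdata}" for owner, rtype, rdata in records]
    return "\n".join(lines) + "\n"


print(render_zone("example.com", RECORDS), end="")
```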

Forward zones translate domain names to IP addresses, facilitating client access to services, while reverse zones perform inverse mappings, critical for logging, access controls, and security validations. Coordinating forward and reverse zones ensures consistent and trustworthy hostname resolution across the network.
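A periodic audit can check that forward and reverse data agree, a property sometimes called forward-confirmed reverse DNS. The sketch below works on hypothetical in-memory A and PTR data rather than live queries, and assumes one address per name.

```python
from typing import Dict, List


def forward_reverse_mismatches(a_records: Dict[str, str],
                               ptr_records: Dict[str, str]) -> List[str]:
    """Compare name->IPv4 (A) data with IP->name (PTR) data."""
    def norm(name: str) -> str:
        return name.rstrip(".").lower()

    problems = []
    for name, ip in sorted(a_records.items()):
        back = ptr_records.get(ip)
        if back is None:
            problems.append(f"{ip}: no PTR record (A record for {name})")
        elif norm(back) != norm(name):
            problems.append(f"{ip}: PTR says {back}, A record says {name}")
    # PTRs pointing at names that no longer exist in the forward zone.
    forward_names = {norm(n) for n in a_records}
    for ip, name in sorted(ptr_records.items()):
        if norm(name) not in forward_names:
            problems.append(f"{ip}: PTR to {name}, which has no A record")
    return problems
```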

Best practices for zone file management involve maintaining clear documentation, employing consistent naming conventions, validating syntax before deployment, and periodically auditing records to remove stale entries. Dynamic updates and incremental zone transfers help streamline administration and improve synchronization efficiency among distributed DNS servers.

Reliability, Scalability, and Continuous Availability in DNS Implementations

Enterprise-grade DNS infrastructures demand robust redundancy and scalability features to ensure uninterrupted service delivery and resilience against hardware or network failures. Implementing secondary DNS servers across diverse geographic locations prevents single points of failure and balances query loads effectively.

Zone transfers, either full (AXFR) or incremental (IXFR), synchronize data between primary and secondary servers, propagating changes quickly and reducing downtime risk. Authenticating transfers with TSIG keys and restricting them with allow-transfer prevents unauthorized replication.
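Whether a secondary actually transfers a zone depends on the SOA serial: it fetches the zone (AXFR or IXFR) only when the primary's serial is newer, compared with the wrap-around serial-number arithmetic of RFC 1982. The sketch below shows that comparison plus the common, but not mandatory, `YYYYMMDDnn` convention for bumping serials; the dates and serials are illustrative.

```python
import datetime

HALF = 2 ** 31          # half of the 32-bit serial space
MOD = 2 ** 32


def serial_newer(s1: int, s2: int) -> bool:
    """RFC 1982 comparison: True if serial s1 is newer than s2."""
    if not (0 <= s1 < MOD and 0 <= s2 < MOD):
        raise ValueError("SOA serials are unsigned 32-bit values")
    return (s1 < s2 and s2 - s1 > HALF) or (s1 > s2 and s1 - s2 < HALF)


def next_serial(current: int, today: datetime.date) -> int:
    """Bump a YYYYMMDDnn-style serial for a zone edit made today."""
    base = int(today.strftime("%Y%m%d")) * 100
    # First edit of the day starts at nn=00; later edits just increment.
    return base if current < base else (current + 1) % MOD
```

Forgetting to increment the serial is a classic reason why secondaries keep serving stale data after the primary's zone file was edited.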

Scalable DNS architectures accommodate increasing query volumes by deploying distributed server clusters, employing caching resolvers close to client networks, and leveraging anycast routing to optimize query resolution paths. These approaches enhance overall DNS responsiveness and fault tolerance.

Failover mechanisms automatically redirect traffic away from failed or degraded servers, maintaining service continuity. Integration with monitoring and alerting systems ensures prompt detection and resolution of DNS service anomalies, supporting proactive maintenance and operational excellence.

Automation and Scripted DNS Administration for Operational Effectiveness

Automation plays a pivotal role in managing complex DNS environments by reducing human error, accelerating routine tasks, and enhancing consistency. Utilizing scripting languages such as Bash, Python, or Perl allows administrators to automate zone file generation, bulk record updates, and configuration deployments.

Configuration management tools integrate with DNS systems to enforce standardized configurations across multiple servers, supporting version control, rollback capabilities, and audit trails. Automated testing frameworks validate DNS configurations before production deployment, minimizing service disruptions.

Dynamic DNS update scripts facilitate real-time synchronization with DHCP servers, ensuring DNS records reflect current network states without manual intervention. Automated monitoring scripts analyze log files, query performance, and security events, triggering alerts and remediation workflows when anomalies are detected.

Embracing automation in DNS administration streamlines operational workloads, improves accuracy, and empowers Linux administrators to focus on strategic tasks, elevating the overall reliability and security of DNS services within enterprise infrastructures.

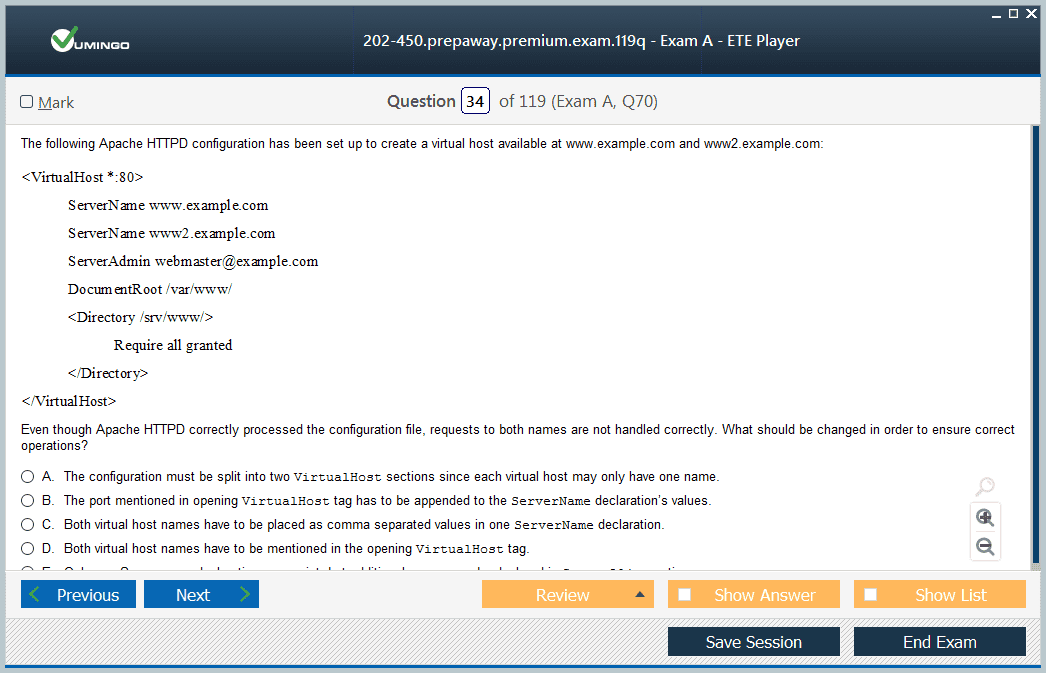

Apache HTTP Server Advanced Setup Excellence

Mastering Apache HTTP Server administration represents a vital skill for Linux professionals, particularly for the LPIC-2 202-450 examination. Apache continues as one of the most prevalent web servers globally, powering a significant portion of websites and web applications. Advanced configuration expertise encompasses setting up and managing virtual hosts, module customization, SSL/TLS encryption, performance enhancements, and rigorous security practices.

Virtual host configuration remains fundamental to hosting multiple domains on a single Apache instance. Linux administrators must skillfully configure name-based, IP-based, and port-based virtual hosts, enabling efficient server resource utilization while maintaining strict isolation between websites. Properly managing virtual hosts involves careful configuration of ServerName and ServerAlias directives, ensuring HTTP requests route correctly according to the requested domain or IP.
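For name-based virtual hosts, Apache compares the request's Host header against each candidate vhost's ServerName and ServerAlias and, when nothing matches, serves the first vhost listed for that address and port. The sketch below imitates that selection under hypothetical vhost definitions; it simplifies wildcard handling and ignores IPv6 literal hosts.

```python
from fnmatch import fnmatchcase
from typing import Dict, List

# Hypothetical vhosts sharing one IP:port, in configuration-file order.
VHOSTS: List[Dict] = [
    {"ServerName": "www.example.com", "ServerAlias": ["example.com"],
     "DocumentRoot": "/var/www/example"},
    {"ServerName": "shop.example.net", "ServerAlias": ["*.shop.example.net"],
     "DocumentRoot": "/var/www/shop"},
]


def pick_vhost(host_header: str) -> Dict:
    # Strip any port and trailing dot; host names are case-insensitive.
    host = host_header.split(":", 1)[0].lower().rstrip(".")
    for vhost in VHOSTS:
        names = [vhost["ServerName"], *vhost["ServerAlias"]]
        if any(fnmatchcase(host, pattern.lower()) for pattern in names):
            return vhost
    # No name matched: fall back to the first vhost listed.
    return VHOSTS[0]
```

The fallback is why many administrators put a deliberate catch-all vhost first, so unknown Host headers do not land on a production site by accident.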

Modules extend Apache's core capabilities. They handle functions such as authentication (mod_auth_basic, mod_authn_file), URL rewriting (mod_rewrite), compression (mod_deflate), and security hardening (the third-party ModSecurity module). Understanding the difference between loading modules dynamically with LoadModule and compiling them in statically, configuring module directives, and disabling unnecessary modules improves both server security and performance. Balancing functionality against overhead leads to robust server operation.

Securing web communication through SSL/TLS certificates remains indispensable. Apache administrators must competently generate certificate signing requests (CSRs), install certificates, and configure cipher suites to enforce strong encryption. Mastery of SSLEngine, SSLCertificateFile, and SSLProtocol directives remains crucial for achieving compatibility with a broad range of clients while maintaining the highest security standards. Additionally, configuring HTTP Strict Transport Security (HSTS) policies reinforces secure connections.

Performance tuning involves several key strategies: sizing the active MPM (Multi-Processing Module) through settings such as MaxRequestWorkers, configuring caching with mod_cache, enabling compression, and optimizing KeepAlive settings. These optimizations reduce latency, improve throughput, and help the server handle high concurrency, especially under heavy traffic. Monitoring Apache's resource usage and adjusting the configuration as load changes keeps the web service stable and responsive.

Security hardening involves implementing access control lists, IP restrictions, and authentication realms to prevent unauthorized access. Incorporating security modules that protect against common attack vectors such as cross-site scripting (XSS), SQL injection, and distributed denial-of-service (DDoS) attacks strengthens the server's resilience. Routine log monitoring and applying security patches promptly complete a comprehensive security strategy.

NGINX Deployment and Reverse Proxy Setup

NGINX has emerged as a preferred choice for modern web infrastructure due to its event-driven architecture, which excels in handling thousands of concurrent connections with minimal resource consumption. As both a web server and reverse proxy, NGINX's configuration mastery represents a critical asset for system administrators aiming to build scalable, high-performance web services.

The reverse proxy functionality allows NGINX to distribute client requests among multiple backend servers, implementing load balancing algorithms such as round-robin, least connections, and IP hash. This facilitates fault tolerance and optimizes resource utilization across server clusters. Additionally, NGINX handles SSL termination, offloading encryption and decryption workloads from backend servers, which significantly improves overall system efficiency.
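In NGINX the algorithm is chosen inside an `upstream` block, where round-robin is the default and `least_conn;` or `ip_hash;` switch to the other two. The sketch below expresses the idea behind the latter two with hypothetical backend addresses; its hash is a stand-in for illustration and is not NGINX's actual `ip_hash` calculation.

```python
import hashlib
from typing import Dict

# Hypothetical upstream servers.
BACKENDS = ["10.0.0.11:8080", "10.0.0.12:8080", "10.0.0.13:8080"]


def least_connections(active: Dict[str, int]) -> str:
    """Pick the backend with the fewest in-flight requests (ties: first listed)."""
    return min(BACKENDS, key=lambda backend: active.get(backend, 0))


def ip_hash(client_ip: str) -> str:
    """Map a client address to a stable backend (simplified hash)."""
    digest = hashlib.sha256(client_ip.encode("ascii")).digest()
    return BACKENDS[int.from_bytes(digest[:4], "big") % len(BACKENDS)]
```

Least connections adapts to uneven request costs, while hashing keeps a client on the same backend, which helps when session state lives on the server; adding or removing a backend remaps many clients with this simple modulo scheme.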

Caching in NGINX stores frequently requested content, dramatically reducing backend load and accelerating response times. Fine-tuning cache expiry, cache key configuration, and cache invalidation ensures fresh yet efficient content delivery. The integration of microcaching techniques further enhances performance for dynamic content.
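NGINX names each cache file after the MD5 hash of its cache key, and the `levels` parameter of `proxy_cache_path` turns trailing characters of that hash into subdirectories, which is handy when locating or removing one cached response by hand. The sketch below reproduces that naming assuming `levels=1:2`, with an example key built the way the default `proxy_cache_key` (`$scheme$proxy_host$request_uri`) would build it for a hypothetical upstream named `backend`.

```python
import hashlib
from typing import Sequence


def cache_file_path(cache_root: str, key: str, levels: Sequence[int] = (1, 2)) -> str:
    """Path of the cache file for `key` under proxy_cache_path levels."""
    digest = hashlib.md5(key.encode("utf-8")).hexdigest()
    parts = []
    end = len(digest)
    # Each level takes the next characters counting back from the end.
    for width in levels:
        parts.append(digest[end - width:end])
        end -= width
    return "/".join([cache_root.rstrip("/"), *parts, digest])


print(cache_file_path("/data/nginx/cache", "httpbackend/index.html"))
```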

NGINX's security configurations include implementing rate limiting to mitigate brute-force and DDoS attacks, access controls to restrict client IPs, and integration with Web Application Firewalls (WAF) to block malicious payloads. Logging and real-time monitoring allow administrators to detect anomalies promptly and respond effectively to potential threats.

Understanding the architectural distinctions between NGINX's asynchronous, non-blocking event model and traditional thread-based servers informs deployment strategies. For resource-constrained environments or high-concurrency applications, NGINX's lightweight design maximizes throughput and minimizes latency.

Squid Proxy Server Setup and Enhancement

Squid proxy servers enhance network efficiency by caching web content, filtering traffic, and enforcing access policies. In enterprise networks, Squid proves invaluable for reducing bandwidth consumption, improving user experience, and bolstering security.

Effective caching in Squid requires configuring cache storage (cache_dir, cache_mem), refresh_pattern rules, and hierarchical caching. Administrators must balance memory and disk allocations to optimize hit ratios and response times. Advanced setups define neighbor caches with cache_peer and exchange ICP (Internet Cache Protocol) queries to build multi-level caching architectures.
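Each `refresh_pattern` line supplies a minimum age, a percentage, and a maximum age (in minutes) that Squid uses when a response carries no explicit expiry. The sketch below is a simplified reading of that heuristic, assuming no Expires or Cache-Control headers and none of the override options: objects older than max are stale, objects with a Last-Modified time stay fresh for the given percentage of their age at fetch time, and otherwise min decides. The values mirror the catch-all `refresh_pattern . 0 20% 4320` line in the default squid.conf.

```python
from typing import Optional


def is_fresh(age: float, fetched_at: float, last_modified: Optional[float],
             min_s: float, percent: float, max_s: float) -> bool:
    """Simplified refresh_pattern freshness check (no Expires/Cache-Control).

    age           -- seconds the object has been cached
    fetched_at    -- when the object was fetched (epoch seconds)
    last_modified -- origin Last-Modified time, or None if absent
    min_s, max_s  -- refresh_pattern min and max, converted to seconds
    percent       -- refresh_pattern percentage, e.g. 20 for 20%
    """
    if age > max_s:
        return False                                  # older than max: stale
    if last_modified is not None and fetched_at > last_modified:
        lm_age = fetched_at - last_modified           # age of the object when fetched
        return age < lm_age * percent / 100           # LM-factor rule
    return age < min_s                                # no Last-Modified: use min
```

For example, a page last modified ten days before it was fetched stays fresh for two days under a 20% setting.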

Access control lists (ACLs) in Squid provide granular traffic management by filtering requests based on source IP addresses, destination URLs, HTTP methods, time-based restrictions, and MIME types. Crafting detailed ACLs supports organizational policies, such as restricting social media access during work hours or blocking malicious sites.
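Squid evaluates `http_access` lines from top to bottom: all ACLs named on a line must match, the first matching line decides, and if no line matches Squid applies the opposite of the last line's action. The sketch below models a hypothetical policy in the spirit of `acl work_hours time MTWHF 09:00-17:00`, a `dstdomain` list of social sites, and a LAN source network, to show why rule order matters.

```python
import ipaddress
from datetime import datetime, time
from typing import Callable, Dict, List, Tuple

Request = Dict[str, object]   # keys: "src", "host", "when"

LAN = ipaddress.ip_network("192.168.0.0/16")        # hypothetical
SOCIAL = {".facebook.com", ".tiktok.com"}           # hypothetical dstdomain list
WORK_START, WORK_END = time(9, 0), time(17, 0)      # end exclusive in this sketch


def in_lan(req: Request) -> bool:
    return ipaddress.ip_address(req["src"]) in LAN


def social(req: Request) -> bool:
    host = str(req["host"]).lower()
    # ".example.com" style entries match the domain and its subdomains.
    return any(host == d.lstrip(".") or host.endswith(d) for d in SOCIAL)


def work_hours(req: Request) -> bool:
    when = req["when"]
    return when.weekday() < 5 and WORK_START <= when.time() < WORK_END


# Equivalent of:  http_access deny social work_hours
#                 http_access allow lan
RULES: List[Tuple[str, List[Callable[[Request], bool]]]] = [
    ("deny", [social, work_hours]),
    ("allow", [in_lan]),
]


def http_access(req: Request) -> str:
    for action, acls in RULES:
        if all(acl(req) for acl in acls):
            return action
    # No rule matched: the opposite of the last rule's action.
    return "deny" if RULES[-1][0] == "allow" else "allow"
```

Swapping the two rules would let LAN clients reach social sites during work hours, because the allow line would match first.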

Squid's authentication mechanisms integrate with LDAP, Active Directory, or local user databases, enabling centralized user management and enforcing user accountability. This integration supports compliance with corporate security policies and auditing requirements.

Content filtering further protects the network by blocking malware, adult content, and other undesirable traffic categories. Combined with URL rewriting and header manipulation, Squid serves as a powerful tool for enforcing organizational web usage policies.

Performance tuning includes adjusting cache directories, optimizing memory buffers, and fine-tuning disk I/O settings to maximize proxy throughput. Monitoring Squid logs and performance metrics assists in identifying bottlenecks and optimizing configurations for evolving traffic patterns.

SSL/TLS Deployment and Certificate Administration

Implementing SSL/TLS protocols within web services secures communications by encrypting data transmitted between clients and servers. For LPIC-2 candidates, mastering SSL/TLS certificate management, protocol configuration, and troubleshooting remains essential to ensure data confidentiality, integrity, and authentication.

The certificate lifecycle encompasses requesting certificates from trusted authorities, installing certificates on web servers, renewing certificates before expiry, and securely storing private keys. Understanding how to create and manage certificate signing requests (CSRs) and handle certificate chaining fosters seamless deployment across distributed environments.
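Renewal is easier to schedule when expiry is checked automatically. On the command line, `openssl x509 -enddate -noout -in cert.pem` prints the notAfter date; once the `notAfter=` prefix is stripped, that date is in the format Python's `ssl.cert_time_to_seconds()` parses. The sketch below applies a hypothetical 30-day renewal threshold.

```python
import ssl


def days_until_expiry(not_after: str, now: float) -> float:
    """Days from `now` (epoch seconds) until a certificate's notAfter date."""
    # not_after looks like "Jan  5 09:34:43 2018 GMT".
    return (ssl.cert_time_to_seconds(not_after) - now) / 86400


def needs_renewal(not_after: str, now: float, threshold_days: float = 30) -> bool:
    return days_until_expiry(not_after, now) < threshold_days
```

In a cron job, `now` would be `time.time()` and a positive result would trigger renewal or an alert.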

Choosing SSL/TLS protocol versions and cipher suites means balancing security against compatibility. Disabling deprecated protocols such as SSLv2 and SSLv3 (and, per RFC 8996, TLS 1.0 and 1.1), enabling TLS 1.2 and TLS 1.3, and preferring strong cipher suites mitigates known vulnerabilities while locking out only very old clients.
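The same policy can be expressed in code. Using Python's standard `ssl` module, the sketch below builds a server-side context that refuses anything older than TLS 1.2 and limits TLS 1.2 to ECDHE/DHE key exchange with AEAD ciphers, roughly what `SSLProtocol -all +TLSv1.2 +TLSv1.3` plus a restrictive `SSLCipherSuite` would do in Apache. The cipher string is an assumption to adjust to local policy; TLS 1.3 suites are configured separately by OpenSSL and are unaffected by it.

```python
import ssl
from typing import Optional


def make_server_context(certfile: Optional[str] = None,
                        keyfile: Optional[str] = None) -> ssl.SSLContext:
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    # Refuse SSLv3, TLS 1.0, and TLS 1.1 handshakes.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # TLS 1.2 only: ephemeral (EC)DH key exchange with AES-GCM or ChaCha20.
    ctx.set_ciphers("ECDHE+AESGCM:ECDHE+CHACHA20:DHE+AESGCM")
    if certfile:
        ctx.load_cert_chain(certfile, keyfile)
    return ctx


if __name__ == "__main__":
    for cipher in make_server_context().get_ciphers():
        print(cipher["name"])
```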

Certificate validation mechanisms verify authenticity by checking certificate chains, domain name matches, and revocation status through OCSP (Online Certificate Status Protocol) or CRL (Certificate Revocation List). Administrators must troubleshoot common issues such as certificate mismatches, expired certificates, and incomplete chains to maintain secure communications.
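Domain name matching follows RFC 6125-style rules, in which a wildcard may stand for exactly one leftmost label. Python's `ssl` module performs this check itself when `check_hostname` is enabled, so the sketch below only makes the rule visible; it is simplified and ignores partial-label wildcards, internationalized names, and IP-address SANs.

```python
def hostname_matches(pattern: str, hostname: str) -> bool:
    """Simplified check of a certificate DNS name against a host name."""
    p_labels = pattern.lower().rstrip(".").split(".")
    h_labels = hostname.lower().rstrip(".").split(".")
    if len(p_labels) != len(h_labels):
        return False          # a wildcard covers exactly one label
    first, *rest = p_labels
    if first == "*":
        # "*.example.com" matches "www.example.com" but not "example.com"
        # or "a.b.example.com"; "*.com" is rejected as too broad.
        return len(rest) >= 2 and rest == h_labels[1:]
    return p_labels == h_labels
```

A mismatch here is the usual cause of "certificate is not valid for this name" errors, for instance when a certificate for `example.com` is served on `www.example.com` without a matching SAN entry.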

Perfect Forward Secrecy (PFS) uses ephemeral key exchange so that each session key is independent of the server's long-term private key; an attacker who later obtains that key still cannot decrypt previously recorded sessions. Configuring ephemeral key-exchange algorithms such as ECDHE or DHE enables PFS.

Automated certificate management via protocols like ACME streamlines acquisition and renewal processes, significantly reducing administrative overhead and minimizing downtime risks. Automating certificate renewal and deployment enhances security posture and operational efficiency.

Performance Enhancement Strategies for Web Infrastructure

Performance optimization represents a multifaceted discipline that significantly impacts the responsiveness and reliability of web services. Both Apache and NGINX benefit from strategic tuning of worker processes, caching layers, compression, and connection handling to achieve optimal throughput.

Choosing the appropriate Apache Multi-Processing Module (MPM), such as prefork, worker, or event, matches the concurrency model to the workload. Adjusting MaxRequestWorkers (called MaxClients before Apache 2.4), ServerLimit, and KeepAliveTimeout aligns resource usage with traffic demands. Enabling mod_deflate compression reduces bandwidth consumption and improves page load times, at the cost of some CPU time per response.
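Sizing these directives is mostly arithmetic. The limit depends on the MPM:

- **worker and event (threaded):** the maximum number of simultaneous workers, MaxRequestWorkers (MaxClients in Apache 2.2), cannot exceed ServerLimit times ThreadsPerChild.
- **prefork:** each worker is a whole process, so available memory is usually the limiting factor.

A minimal sketch. The inputs in the test are hypothetical example values you would replace with measured figures, such as the resident size of httpd processes under load.

```python
def threaded_mpm_capacity(server_limit: int, threads_per_child: int) -> int:
    """Upper bound on MaxRequestWorkers for the worker and event MPMs."""
    return server_limit * threads_per_child

def prefork_workers_for_memory(total_mb: int, reserved_mb: int,
                               per_process_mb: int) -> int:
    """Rough prefork MaxRequestWorkers estimate from available RAM.

    reserved_mb covers the OS, databases and anything else on the host."""
    if per_process_mb <= 0:
        raise ValueError("per_process_mb must be positive")
    return max(0, (total_mb - reserved_mb) // per_process_mb)
```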

NGINX's event-driven model allows tuning worker_processes and worker_connections to maximize parallel handling of client requests. Microcaching (caching dynamic responses for very short periods, often about a second) and well-tuned proxy_cache settings improve responsiveness for both dynamic and static content.
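The two NGINX directives multiply. A rough ceiling on simultaneous clients is worker_processes times worker_connections. When NGINX acts as a reverse proxy the ceiling is roughly halved, because worker_connections counts all connections, including the upstream one each proxied request opens. The process's open-file limit (worker_rlimit_nofile or `ulimit -n`) may cap this first. A minimal sketch of the arithmetic:

```python
def nginx_max_clients(worker_processes: int, worker_connections: int,
                      reverse_proxy: bool = False) -> int:
    """Theoretical concurrent-client ceiling for one NGINX instance."""
    total = worker_processes * worker_connections
    # A proxied request holds two connections: client side and upstream side.
    return total // 2 if reverse_proxy else total
```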

Caching, both at the HTTP server level and reverse proxy layer, reduces backend processing and database hits. Implementing content expiration policies and cache purging mechanisms ensures users receive fresh content without excessive server load.
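Expiration policies are usually expressed through the Cache-Control response header. The sketch below shows, in simplified form, how a shared cache such as a reverse proxy might decide whether a stored response is still fresh. It ignores Expires, heuristic freshness, and revalidation, all of which RFC 9111 covers in full.

```python
from typing import Dict, Optional

def parse_cache_control(header: str) -> Dict[str, Optional[str]]:
    """Split a Cache-Control header into {directive: value-or-None}."""
    directives: Dict[str, Optional[str]] = {}
    for part in header.split(","):
        part = part.strip()
        if not part:
            continue
        name, _, value = part.partition("=")
        directives[name.strip().lower()] = value.strip().strip('"') or None
    return directives

def is_fresh(header: str, age_seconds: int, shared_cache: bool = True) -> bool:
    """Simplified freshness test for a stored response."""
    d = parse_cache_control(header)
    if "no-store" in d or "no-cache" in d:
        return False  # must not be served without contacting the origin
    if shared_cache and "private" in d:
        return False  # only the end user's browser may reuse it
    # s-maxage overrides max-age for shared caches.
    if shared_cache and "s-maxage" in d:
        lifetime = d["s-maxage"]
    else:
        lifetime = d.get("max-age")
    if lifetime is None:
        return False  # no explicit lifetime: treated as stale in this sketch
    return age_seconds < int(lifetime)
```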

Compression techniques reduce response payload sizes, but administrators must balance CPU utilization with network savings. Enabling Gzip or Brotli compression on appropriate content types enhances user experience, particularly on bandwidth-limited networks.
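Both servers let you restrict compression by content type and size: NGINX with gzip_types, gzip_min_length and gzip_comp_level, and Apache with `AddOutputFilterByType DEFLATE ...`. A minimal sketch of the same decision plus gzip itself, using Python's standard library. The type list and the 1024-byte threshold are assumed example values.

```python
import gzip

# Assumed policy values for illustration only.
COMPRESSIBLE_PREFIXES = ("text/", "application/json", "application/javascript",
                         "application/xml", "image/svg+xml")
MIN_SIZE_BYTES = 1024

def should_compress(content_type: str, size: int) -> bool:
    """Skip tiny bodies and already-compressed formats such as JPEG or ZIP."""
    mime = content_type.split(";")[0].strip().lower()
    return size >= MIN_SIZE_BYTES and mime.startswith(COMPRESSIBLE_PREFIXES)

def compress_body(body: bytes, level: int = 6) -> bytes:
    """Gzip a response body; higher levels trade CPU time for smaller output."""
    return gzip.compress(body, compresslevel=level)

html = b"<tr><td>row</td></tr>\n" * 500
packed = compress_body(html)
print(len(html), "->", len(packed), "bytes")
```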

Connection management, including tuning TCP parameters and enabling HTTP/2 or HTTP/3 protocols, optimizes client-server communication efficiency, reducing latency and improving page rendering speed.

Security Strengthening and Threat Prevention in Web Services

Securing web infrastructure represents a perpetual challenge requiring layered defenses and proactive management. Apache, NGINX, and proxy servers like Squid must incorporate rigorous access controls, authentication schemes, and security headers to mitigate evolving threats.

Implementing Role-Based Access Control (RBAC), enforcing strong password policies, and integrating multi-factor authentication strengthens user identity management. Configuring firewalls, limiting exposed services, and deploying Web Application Firewalls (WAFs) shield servers from injection attacks, cross-site scripting, and other exploits.

Utilizing security headers such as Content Security Policy (CSP), X-Frame-Options, and HTTP Strict Transport Security (HSTS) adds layers of client-side protection. Enabling logging of access and error events facilitates forensic analysis and rapid incident response.
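These headers are typically set with mod_headers in Apache (`Header always set ...`) and with `add_header` in NGINX. A quick audit of a captured response can be sketched as follows. The header values are examples only; a real Content-Security-Policy has to match the site's own resources.

```python
from typing import Dict, List

# Example values only; tune max-age and the CSP for the actual site.
RECOMMENDED_HEADERS: Dict[str, str] = {
    "Strict-Transport-Security": "max-age=31536000; includeSubDomains",
    "X-Frame-Options": "DENY",
    "Content-Security-Policy": "default-src 'self'",
}

def missing_security_headers(response_headers: Dict[str, str]) -> List[str]:
    """Recommended headers absent from a response (names are case-insensitive)."""
    present = {name.lower() for name in response_headers}
    return [name for name in RECOMMENDED_HEADERS if name.lower() not in present]
```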

Rate limiting and IP blacklisting help mitigate brute-force and denial-of-service attacks. Monitoring tools, intrusion detection systems, and automated alerting enable swift detection and mitigation of malicious activities.
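NGINX's limit_req module is documented as using the leaky bucket method. The sketch below swaps in the closely related token bucket to illustrate the idea:

- Each client gets a budget that refills at a fixed rate.
- Requests beyond the budget are rejected.

The limits (5 requests per second, burst of 10) and the per-IP keying are illustrative assumptions.

```python
import time
from typing import Callable, Dict

class TokenBucket:
    """Allow `rate` requests per second on average, with bursts up to `burst`."""

    def __init__(self, rate: float, burst: int,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = float(burst)
        self.tokens = float(burst)   # start full so an initial burst is allowed
        self.clock = clock
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False

buckets: Dict[str, TokenBucket] = {}

def allow_request(client_ip: str) -> bool:
    """One bucket per client address (hypothetical limits)."""
    bucket = buckets.get(client_ip)
    if bucket is None:
        bucket = buckets[client_ip] = TokenBucket(rate=5, burst=10)
    return bucket.allow()
```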

Regular patching, vulnerability assessments, and compliance audits maintain a hardened security posture aligned with organizational policies and industry standards.

Logging, Monitoring, and Diagnosing Web Infrastructure

Comprehensive logging and monitoring form the backbone of effective web service management. Both Apache and NGINX provide configurable logging mechanisms that record detailed access, error, and performance data essential for troubleshooting and optimization.

Analyzing log files reveals patterns of user behavior, pinpointing frequently accessed resources, unusual activity, or recurrent errors. Tools that parse and visualize logs assist administrators in identifying root causes of outages, misconfigurations, or attacks.
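Both Apache and NGINX can write the widely used combined log format. A minimal parsing sketch using only Python's standard library. It counts status codes, busiest clients, and requests that produced server errors. The regular expression assumes exactly that format, so a customized LogFormat or log_format needs a different pattern.

```python
import re
from collections import Counter
from typing import Dict, Iterable, Optional

# combined: host ident user [time] "request" status size "referer" "agent"
COMBINED = re.compile(
    r'(?P<host>\S+) \S+ (?P<user>\S+) \[(?P<time>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) (?P<size>\S+)'
    r'(?: "(?P<referer>[^"]*)" "(?P<agent>[^"]*)")?'
)

def parse_line(line: str) -> Optional[Dict[str, Optional[str]]]:
    m = COMBINED.match(line)
    return m.groupdict() if m else None

def summarize(lines: Iterable[str]):
    """Return (status counts, per-client counts, 5xx counts per request line)."""
    statuses, clients, errors = Counter(), Counter(), Counter()
    for line in lines:
        entry = parse_line(line)
        if entry is None:
            continue  # skip malformed or differently formatted lines
        statuses[entry["status"]] += 1
        clients[entry["host"]] += 1
        if entry["status"].startswith("5"):
            errors[entry["request"]] += 1
    return statuses, clients, errors
```

In practice you would feed it a file, for example `summarize(open("/var/log/nginx/access.log"))`. The path varies by distribution.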

Real-time monitoring solutions track server health metrics such as CPU load, memory usage, connection counts, and response times. Alerting systems notify administrators of threshold breaches, enabling proactive intervention.

Troubleshooting SSL/TLS issues often involves validating certificate chains, checking protocol compatibility, and examining server logs for handshake errors. Command-line tools like openssl and curl facilitate diagnosis by simulating client-server interactions.
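Much of what `openssl s_client -connect host:443 -servername host` reports can also be scripted with Python's `ssl` module:

- the handshake itself;
- chain and hostname verification;
- the negotiated protocol and cipher.

A sketch with a placeholder host. Verification failures surface as `ssl.SSLCertVerificationError`, whose `verify_message` carries OpenSSL's reason, for example an expired certificate.

```python
import socket
import ssl
from typing import Any, Dict, Optional

def summarize_cert(cert: Dict[str, Any]) -> Dict[str, Any]:
    """Flatten the dict returned by SSLSocket.getpeercert()."""
    def first_cn(name) -> Optional[str]:
        for rdn in name:
            for key, value in rdn:
                if key == "commonName":
                    return value
        return None
    return {
        "subject_cn": first_cn(cert.get("subject", ())),
        "issuer_cn": first_cn(cert.get("issuer", ())),
        "dns_names": [v for k, v in cert.get("subjectAltName", ()) if k == "DNS"],
        "not_after": cert.get("notAfter"),
    }

def probe(host: str, port: int = 443, timeout: float = 5.0) -> Dict[str, Any]:
    """Handshake with verification, then report protocol, cipher and cert details."""
    ctx = ssl.create_default_context()  # verifies the chain and the hostname
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with ctx.wrap_socket(sock, server_hostname=host) as tls:
            return {"version": tls.version(), "cipher": tls.cipher()[0],
                    **summarize_cert(tls.getpeercert())}

# Usage (needs network access):
# try:
#     print(probe("www.example.com"))
# except ssl.SSLCertVerificationError as exc:
#     print("verification failed:", exc.verify_message)
```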

Network packet analyzers aid in detecting connectivity issues, malformed requests, or suspicious traffic patterns, complementing server-side diagnostics.

By integrating logging and monitoring into daily operations, Linux administrators ensure high availability, security, and performance of web services.

Samba Server Setup and Windows Compatibility

Samba server configuration is a cornerstone of interoperability between Linux and Windows environments, which makes it indispensable in mixed-OS enterprise networks. Samba provides file and print sharing by implementing the Windows SMB/CIFS protocols on Linux, so Windows clients can access shared resources transparently. The Samba ecosystem consists of three essential daemons:

- **smbd** handles file and printer sharing.
- **nmbd** manages the NetBIOS name services used for network discovery.
- **winbindd** bridges Samba with Windows Active Directory or NT domains, enabling domain authentication and user/group mapping.

Configuring Samba shares involves defining accessible directories in the smb.conf file, specifying each share's path and permissions, and setting per-share access options to govern read/write access. Administrators balance security and accessibility with parameters such as valid users, write list, and browseable to control resource exposure. Performance tuning can additionally include socket options and asynchronous I/O settings to improve throughput for file operations.
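The interplay of valid users, write list and read only can be modeled in a few lines. This is a simplified sketch with two assumptions:

- Python's `configparser` is an adequate reader for this small fragment. smb.conf is INI-like, but `testparm` is the authoritative parser.
- Only these three parameters matter. It ignores invalid users, read list, hosts allow, and filesystem permissions, all of which Samba also enforces.

The share and group names are hypothetical.

```python
import configparser
from typing import Set

SMB_CONF = """
[projects]
path = /srv/samba/projects
valid users = @staff alice
write list = @admins
read only = yes
browseable = yes
"""

def share_access(conf_text: str, share: str, user: str, groups: Set[str]) -> str:
    """Return 'none', 'read' or 'write' under a simplified Samba model."""
    # interpolation=None: smb.conf uses %U, %m ... macros that configparser
    # would otherwise try to expand.
    cfg = configparser.ConfigParser(interpolation=None)
    cfg.read_string(conf_text)
    s = cfg[share]

    def matches(entries: str) -> bool:
        # "@name" refers to a Unix group; anything else is a user name.
        for entry in entries.split():
            if entry.startswith("@") and entry[1:] in groups:
                return True
            if entry == user:
                return True
        return False

    valid = s.get("valid users", "")
    if valid and not matches(valid):
        return "none"
    if matches(s.get("write list", "")):
        return "write"      # write list overrides read only = yes
    return "read" if s.getboolean("read only", fallback=True) else "write"
```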

User authentication with Samba integrates local Linux accounts, LDAP directories, and Active Directory services, offering centralized management and seamless login experiences. Winbindd plays a critical role by resolving Windows user and group information into POSIX equivalents, allowing consistent permission enforcement on shared resources. Joining Samba servers as domain members in an Active Directory environment enhances authentication consistency and allows utilization of Group Policy Objects (GPOs) for policy enforcement.
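Winbind delegates SID-to-UID/GID translation to an idmap backend configured per domain in smb.conf. For example, with a hypothetical domain EXAMPLE: `idmap config EXAMPLE : backend = rid` and `idmap config EXAMPLE : range = 10000-999999`. The rid backend is deterministic, so every domain member computes the same IDs. A sketch of its documented formula, ID = RID - BASE_RID + LOW_RANGE_ID:

```python
def rid_to_unix_id(sid: str, range_low: int, range_high: int,
                   base_rid: int = 0) -> int:
    """Map a Windows SID to a Unix ID the way the idmap_rid backend does."""
    rid = int(sid.rsplit("-", 1)[1])  # the RID is the last SID component
    unix_id = rid - base_rid + range_low
    if not range_low <= unix_id <= range_high:
        raise ValueError(f"RID {rid} maps outside {range_low}-{range_high}")
    return unix_id
```

Because the mapping is pure arithmetic, the configured range must be large enough for the domain's highest RID. Otherwise some accounts simply have no Unix ID.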

Print services configuration with Samba enables Linux machines to offer printers to Windows clients using CUPS or other print subsystems. Administrators must configure printer shares, manage printer drivers, and troubleshoot spooler communication to ensure reliable cross-platform print functionality. Security practices include enabling encrypted transports and audit logging to protect print jobs and shared data against unauthorized interception.

NFS Server Setup and Unix Compatibility

Network File System (NFS) remains the standard solution for native file sharing within Unix and Linux environments, providing transparent, network-wide file access. NFS architecture involves a server exporting file systems, clients mounting these exports, and a suite of protocols such as RPC (Remote Procedure Call) to coordinate communication. NFS versions 3 and 4 offer varying features; NFSv4 introduces enhanced security with integrated Kerberos authentication and stateful operations.

Configuring NFS exports involves listing shared directories in /etc/exports, along with access permissions, security options, and network restrictions. Administrators use options such as rw/ro (read-write/read-only), sync/async, and root_squash to tailor access controls and mitigate privilege-escalation risks. root_squash maps requests from a remote root user to the anonymous user. Exporting file systems securely also requires precise firewall configuration and fixed port allocation to restrict unauthorized access.
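Each /etc/exports line names a path followed by client specifications with parenthesized options. After editing, `exportfs -ra` re-reads the file and `exportfs -v` shows the active exports. A minimal parsing sketch that also flags clients with write access and root squashing disabled. It ignores quoted paths and line continuations.

```python
from typing import Dict, List, Tuple

def parse_exports_line(line: str) -> Tuple[str, Dict[str, List[str]]]:
    """Parse one /etc/exports entry into (path, {client: [options]})."""
    fields = line.split("#", 1)[0].split()
    if not fields:
        raise ValueError("empty or comment-only line")
    path, clients = fields[0], {}
    for spec in fields[1:]:
        host, _, opts = spec.partition("(")
        # A bare "(opts)" with no host applies to every client.
        clients[host or "*"] = opts.rstrip(")").split(",") if opts else []
    return path, clients

def risky_clients(clients: Dict[str, List[str]]) -> List[str]:
    """Clients that can write and whose root user is not squashed."""
    return [host for host, opts in clients.items()
            if "rw" in opts and "no_root_squash" in opts]
```

The parser also reproduces a classic pitfall. In `/data host1 (rw)` the space detaches `(rw)` from `host1`, so host1 gets the defaults and every other client gets read-write access.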

Clients mount NFS shares with the mount command or /etc/fstab entries. Common options include:

- **noexec:** forbids executing files from the share.
- **nolock:** disables file locking.
- **hard/soft:** control behavior when the server stops responding.

The older intr option is ignored by Linux kernels since 2.6.25. Automounting via autofs mounts exports on demand and unmounts them when idle, reducing administrative overhead in dynamic network environments. Performance considerations include tuning the rsize and wsize parameters, which set the maximum amount of data carried by each read and write request.
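The effect of wsize is easy to quantify: larger requests mean fewer round trips for the same data. A minimal sketch of the arithmetic. The fstab line in the comment is a hypothetical example. Client and server negotiate the final values, so check what was actually used with `nfsstat -m` or /proc/mounts.

```python
# Hypothetical fstab entry:
# nfs.example.com:/srv/nfs  /mnt/nfs  nfs4  rw,hard,rsize=1048576,wsize=1048576  0 0

def write_rpcs(file_bytes: int, wsize: int) -> int:
    """Minimum number of NFS WRITE calls for a file, given wsize bytes per call."""
    if wsize <= 0:
        raise ValueError("wsize must be positive")
    return -(-file_bytes // wsize)  # ceiling division

GiB = 1 << 30
for wsize in (32 * 1024, 1024 * 1024):
    print(f"wsize={wsize}: {write_rpcs(GiB, wsize)} WRITE calls per GiB")
```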

Kerberos strengthens NFS security through the sec= mount and export option:

- **sec=krb5** authenticates users.
- **sec=krb5i** adds integrity checking.
- **sec=krb5p** additionally encrypts traffic.

Network segmentation and firewall rules further restrict access, while export restrictions prevent unauthorized clients from mounting shares.

Performance optimization strategies encompass caching directory and attribute data, enabling async writes cautiously to boost throughput, and employing jumbo frames on supporting networks. Additionally, deploying high availability configurations using clustered NFS servers or DRBD-based replication ensures continuous service during failures or maintenance, crucial for enterprise-grade file sharing.

Advanced User Authentication and Directory Infrastructure

Pluggable Authentication Modules (PAM) provide a flexible and extensible framework to implement authentication policies across diverse Linux services. PAM architecture separates authentication logic into modular stacks that can incorporate traditional password checks, LDAP queries, biometric verification, or multi-factor authentication, making it essential for centralized access control strategies.

Configuring PAM requires editing service-specific files within /etc/pam.d/, specifying module order and control flags to enforce password complexity, account expiration, or session management rules. Combining PAM with centralized directories enhances security and user experience by unifying credentials and access policies.
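The effect of control flags and module order is easiest to see in a small simulation. Each entry below stands for a line such as `auth sufficient pam_unix.so`. The sketch models only the classic keywords (required, requisite, sufficient, optional). It ignores the bracketed `[value=action]` syntax, include/substack, and the distinction between PAM error codes.

```python
from typing import List, Tuple

Stack = List[Tuple[str, bool]]  # (control flag, did the module succeed?)

def evaluate_stack(stack: Stack) -> bool:
    """Simplified Linux-PAM evaluation of the four classic control flags."""
    failed = False      # a required or requisite module has failed
    succeeded = False   # a non-optional module has succeeded
    optional_results: List[bool] = []
    for control, ok in stack:
        if control == "required":
            succeeded |= ok
            failed |= not ok          # remember the failure but keep going
        elif control == "requisite":
            if not ok:
                return False          # fail immediately, skip the rest
            succeeded = True
        elif control == "sufficient":
            if ok and not failed:
                return True           # success short-circuits the stack
            # a failing sufficient module is simply ignored
        elif control == "optional":
            optional_results.append(ok)
        else:
            raise ValueError(f"unknown control flag: {control}")
    if failed:
        return False
    if succeeded:
        return True
    # Optional modules decide only when nothing else did.
    return bool(optional_results) and all(optional_results)
```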

LDAP clients enable Linux systems to communicate with directory services, centralizing user and group information for streamlined authentication and authorization. OpenLDAP represents a prevalent open-source directory server supporting customizable schemas, replication, and access controls. Configuring LDAP clients involves setting up ldap.conf parameters, establishing secure TLS connections, and mapping LDAP attributes to POSIX accounts.
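Once a client is configured, NSS components such as SSSD or nslcd turn RFC 2307 posixAccount attributes into ordinary account records, which `getent passwd` then displays. A minimal sketch of that mapping, assuming the entry was already fetched and decoded into lists of strings (LDAP attributes are multi-valued). The /bin/sh fallback is an assumption.

```python
from typing import Dict, List

def posix_account_to_passwd(entry: Dict[str, List[str]]) -> str:
    """Render an RFC 2307 posixAccount entry as a passwd(5) line."""
    def one(attr: str, default: str = "") -> str:
        values = entry.get(attr)
        return values[0] if values else default

    return ":".join([
        one("uid"),
        "x",                          # the password stays in the directory
        one("uidNumber"),
        one("gidNumber"),
        one("gecos", one("cn")),      # fall back to cn when gecos is absent
        one("homeDirectory"),
        one("loginShell", "/bin/sh"),
    ])
```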

Implementing multi-factor authentication integrates additional identity verification layers such as time-based one-time passwords (TOTP), hardware tokens, or smart cards. This significantly reduces the risk of credential compromise and aligns with modern compliance requirements.
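TOTP is a small, well-specified algorithm: HOTP (RFC 4226) applied to the number of 30-second intervals since the Unix epoch (RFC 6238). PAM modules such as pam_oath or pam_google_authenticator implement it for Linux logins. A minimal sketch using HMAC-SHA1 and the standard library. The one-step tolerance window in `verify` is an assumed policy.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional

def hotp(key: bytes, counter: int, digits: int = 6) -> str:
    """RFC 4226 HOTP with HMAC-SHA1."""
    mac = hmac.new(key, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F                             # dynamic truncation
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)

def totp(key: bytes, unix_time: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """RFC 6238 TOTP: HOTP over the count of `step`-second intervals."""
    t = time.time() if unix_time is None else unix_time
    return hotp(key, int(t) // step, digits)

def verify(key: bytes, submitted: str, unix_time: Optional[float] = None,
           step: int = 30, window: int = 1) -> bool:
    """Accept codes from adjacent intervals to tolerate small clock drift."""
    t = time.time() if unix_time is None else unix_time
    return any(hmac.compare_digest(totp(key, t + i * step, step, len(submitted)),
                                   submitted)
               for i in range(-window, window + 1))
```

For the RFC 6238 SHA-1 test key `12345678901234567890` at time 59 with 8 digits, `totp` returns 94287082, matching the RFC's published vector.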

Single sign-on (SSO) streamlines user workflows by allowing authentication once per session to access multiple services, using protocols like Kerberos or SAML. Integrating SSO into enterprise environments reduces password fatigue and enhances security.

Directory replication mechanisms maintain data consistency and fault tolerance across multiple LDAP servers, supporting both master-slave and multi-master topologies. High availability configurations ensure directory services remain accessible despite server failures, which remains critical for uninterrupted authentication services.

DHCP Setup and Network Administration

Dynamic Host Configuration Protocol (DHCP) automates the allocation of IP addresses and network settings, reducing manual configuration errors and facilitating scalable network management. Configuring a DHCP server involves defining scopes (IP address pools), lease durations, and options such as default gateways, DNS servers, and domain names that propagate essential network parameters to clients.
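Lease duration also drives client behavior. RFC 2131 specifies default timers:

- **T1 (half the lease):** the client tries to renew with its original server.
- **T2 (seven-eighths of the lease):** the client broadcasts a rebind request to any server.

In ISC dhcpd the lease length itself comes from default-lease-time and max-lease-time. A minimal sketch:

```python
from typing import Tuple

def renewal_timers(lease_seconds: int) -> Tuple[int, int]:
    """Default RFC 2131 client timers: T1 = 0.5 * lease, T2 = 0.875 * lease."""
    return lease_seconds // 2, lease_seconds * 7 // 8
```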

Scope management includes optimizing address ranges to prevent conflicts and efficiently utilize limited IP space. Reservation mechanisms ensure critical devices receive fixed IP addresses while allowing most devices to obtain dynamic addresses. Exclusion ranges prevent accidental assignment of reserved addresses, maintaining network stability.
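In ISC dhcpd, a scope is a `subnet` declaration with one or more `range` statements. Reservations are `host` blocks pairing `hardware ethernet` with `fixed-address`. Exclusions are usually expressed by splitting the range around the reserved addresses. A minimal sketch with Python's `ipaddress` module that computes the addresses actually left for dynamic assignment. All addresses and the MAC are hypothetical.

```python
import ipaddress
from typing import Dict, List

def dynamic_pool(subnet: str, first: str, last: str, excluded: List[str],
                 reservations: Dict[str, str]) -> List[ipaddress.IPv4Address]:
    """Addresses in [first, last] minus exclusions and reserved (MAC -> IP) entries."""
    net = ipaddress.ip_network(subnet)
    start, end = ipaddress.ip_address(first), ipaddress.ip_address(last)
    if start not in net or end not in net or start > end:
        raise ValueError("range must lie inside the subnet")
    blocked = {ipaddress.ip_address(a) for a in excluded}
    blocked |= {ipaddress.ip_address(a) for a in reservations.values()}
    return [ipaddress.ip_address(i) for i in range(int(start), int(end) + 1)
            if ipaddress.ip_address(i) not in blocked]
```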

DHCP relay agents extend DHCP services across multiple subnets by forwarding client broadcast requests to centralized servers, simplifying network infrastructure and centralizing address management. Relay configuration includes specifying helper addresses and ensuring relay agents remain operational on routing devices.

Integration with Dynamic DNS (DDNS) allows automatic updates of DNS records corresponding to DHCP lease assignments, ensuring hostnames resolve accurately within the network. This dynamic coupling reduces administrative overhead and improves name resolution reliability.
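For each lease, DDNS typically adds a forward record (A or AAAA) and a matching PTR record in the reverse zone. ISC dhcpd sends these updates when `ddns-update-style` is enabled, usually authenticated with a TSIG key. The same updates can be made by hand with `nsupdate`. A minimal sketch of which records a lease produces. The 300-second TTL and the host and domain names are assumptions.

```python
import ipaddress
from typing import List, Tuple

Record = Tuple[str, int, str, str]  # (owner name, TTL, type, data)

def ddns_records(hostname: str, domain: str, ip: str, ttl: int = 300) -> List[Record]:
    """Forward and reverse records a DHCP server would register for one lease."""
    addr = ipaddress.ip_address(ip)
    fqdn = f"{hostname}.{domain.rstrip('.')}."
    rtype = "A" if addr.version == 4 else "AAAA"
    ptr_name = addr.reverse_pointer + "."   # e.g. 25.10.168.192.in-addr.arpa.
    return [(fqdn, ttl, rtype, ip), (ptr_name, ttl, "PTR", fqdn)]
```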

High availability DHCP configurations use failover protocols to synchronize lease databases between two servers, providing uninterrupted IP address distribution even during server outages. These implementations typically involve stateful failover with load balancing and redundancy to maximize uptime.

Print Sharing and Administration in Mixed Environments

Efficient print sharing in environments combining Linux and Windows systems is a critical aspect of modern network administration. Organizations increasingly rely on heterogeneous networks, which necessitate seamless integration between distinct operating systems. Administrators must employ robust strategies that bridge these differences, ensuring reliable, high-performance printing services for diverse user bases. In mixed environments, the convergence of Samba and the Common Unix Printing System (CUPS) offers a versatile framework for delivering print services across platforms while maintaining security, manageability, and operational efficiency.

Leveraging Samba and CUPS for Cross-Platform Printing

Samba serves as a pivotal component in Linux-based print servers, providing interoperability with Windows clients through the Server Message Block (SMB) protocol. When integrated with CUPS, Samba transforms a Linux server into a comprehensive print host capable of handling print requests from a wide range of devices. Administrators can define print queues, assign printer-specific drivers, and configure access controls to ensure that print jobs are processed smoothly and securely. By bridging protocol differences between Linux and Windows systems, Samba enables the sharing of both networked and locally attached printers without compromising performance or reliability.

CUPS, designed to provide modular and flexible printing capabilities, allows administrators to establish complex printing environments with minimal overhead. Filters, backends, and printer drivers can be customized to accommodate specialized hardware or unique workflow requirements. This combination of CUPS and Samba ensures that mixed networks can sustain diverse printing needs, from simple document outputs to intricate graphical or technical prints, while maintaining administrative oversight and security.

Configuring Print Queues and Printer Drivers

A foundational task in print administration is the configuration of print queues. Print queues act as intermediaries, managing job scheduling, prioritization, and processing. In mixed environments, administrators must carefully assign drivers compatible with both the server and client operating systems. This often involves selecting generic or manufacturer-specific drivers that ensure fidelity in document output while minimizing the risk of incompatibility errors.

In addition to driver configuration, advanced options such as duplex printing, color management, and resolution control must be addressed. These settings optimize print quality and resource usage, allowing organizations to maintain operational efficiency while reducing waste. Administrators can also implement policies that automatically route print jobs based on type, size, or department, further enhancing the usability and performance of shared print services.
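Routing rules like these often live in a submission script or print-management layer in front of CUPS. The following is a hypothetical sketch: the queue names and the 50-page threshold are invented for illustration. The result is turned into an `lp` command line, where `-d` selects the destination queue and `-o sides=two-sided-long-edge` requests duplex printing.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class PrintJob:
    path: str
    department: str
    pages: int
    color: bool

def choose_queue(job: PrintJob) -> str:
    """Pick a destination queue (hypothetical names and thresholds)."""
    if job.color:
        return "color_laser"
    if job.pages > 50:
        return "highvolume_mono"          # large jobs go to the fast printer
    return f"{job.department.lower()}_mono"

def lp_command(job: PrintJob, duplex: bool = True) -> List[str]:
    """Build, but do not run, the lp invocation for the chosen queue."""
    cmd = ["lp", "-d", choose_queue(job)]
    if duplex:
        cmd += ["-o", "sides=two-sided-long-edge"]
    return cmd + [job.path]
```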

Access Control and Security Measures

Security is paramount in print sharing, especially in enterprise environments where sensitive documents frequently traverse the network. Administrators implement multiple layers of access control to safeguard print resources. Share-level permissions determine which users or groups can access a printer, while user-level permissions define specific capabilities such as printing, job cancellation, or administrative management.

Encryption of print data streams provides an additional layer of protection, preventing interception of confidential documents during transit. Logging and auditing features record all print activity, enabling administrators to monitor usage, detect anomalies, and maintain compliance with regulatory mandates. This comprehensive approach to security ensures that mixed networks not only function efficiently but also protect organizational information against both internal and external threats.

Supporting Diverse Printer Types and Connectivity

Modern network environments require support for a wide array of printer hardware, including networked devices, USB-attached printers, and multifunctional machines capable of scanning, copying, and faxing. Mixed-environment administrators must account for the differing communication protocols and device capabilities to ensure uniform accessibility.

Samba and CUPS offer flexible configuration options to accommodate these devices, including the ability to handle raw printing for unsupported hardware or apply specific filters for optimized processing. Networked printers often require IP-based configurations and protocol adjustments, while USB-connected printers demand correct driver mapping and device recognition. By addressing these considerations, administrators create a versatile printing infrastructure capable of meeting the dynamic requirements of modern workplaces.

Troubleshooting Print Spoolers and Driver Issues

Despite careful planning, print environments are prone to technical challenges that can disrupt workflow. Print spooler errors, driver incompatibilities, and permission conflicts are common issues in mixed networks. Administrators must possess diagnostic skills to identify root causes, resolve job failures, and restore operational continuity promptly.

Spooler troubleshooting often involves analyzing log files, verifying service configurations, and clearing stalled print jobs that may block subsequent tasks. Driver-related issues require a combination of testing, updating, or replacing incompatible drivers, while permission problems may necessitate re-evaluation of user roles and access levels. Proactive maintenance, regular updates, and systematic monitoring mitigate these challenges, ensuring that print services remain robust and dependable.

Final Thoughts

Performance optimization is crucial in high-demand print environments where large volumes of documents are processed continuously. Efficient job handling reduces latency, improves throughput, and enhances user satisfaction. Administrators can implement strategies such as job prioritization, load balancing, and pre-processing filters to streamline printing workflows. CUPS filters allow administrators to convert documents into printer-compatible formats while preserving formatting, color accuracy, and resolution, ensuring consistent output quality. Raw printing options bypass unnecessary processing for compatible devices, reducing processing time and conserving system resources. Additionally, caching frequently used drivers, templates, and configurations minimizes overhead and accelerates print job submission. Continuous monitoring of queue activity enables administrators to anticipate bottlenecks, optimize resource allocation, and maintain optimal system performance even under heavy workloads.

Print administration extends beyond operational efficiency to include auditing, compliance, and long-term scalability. Detailed logging of print activity ensures traceability, facilitating audits and regulatory adherence. Administrators can generate reports to track usage patterns, identify unauthorized access, and maintain accountability for sensitive information. Future-proofing print infrastructure requires anticipating technological advances and evolving organizational needs. This includes preparing for emerging printer models, adopting new communication protocols, and integrating hybrid cloud-based printing solutions. Modular and extensible configurations using Samba and CUPS provide the flexibility needed to accommodate growth without major disruptions. By implementing forward-looking strategies, organizations ensure their print services remain reliable, secure, and adaptable to changing technological landscapes, supporting sustainable operational success.
