Complete LPIC-2 (201-450) Linux Professional Certification Study Guide

The Linux Professional Institute LPIC-2 (201-450) certification stands as a premier qualification for Linux system administrators seeking to demonstrate advanced technical proficiency in enterprise environments. This intermediate-level credential validates comprehensive skills in complex system management, network service configuration, and security implementation across diverse Linux distributions.

This certification pathway transforms basic Linux administrators into skilled professionals capable of handling sophisticated infrastructure challenges. The LPIC-2 credential emphasizes practical expertise in managing large-scale Linux deployments, implementing robust security measures, and optimizing system performance for mission-critical applications.

Organizations worldwide recognize LPIC-2 certified professionals as qualified experts who can architect scalable solutions, troubleshoot complex issues, and lead technical teams. The vendor-neutral nature of this certification ensures applicability across various Linux distributions, making certified professionals valuable assets in heterogeneous computing environments.

The certification process involves rigorous testing of both theoretical knowledge and practical skills, ensuring that successful candidates possess the competencies needed to excel in demanding Linux administration roles. This comprehensive evaluation covers everything from kernel management to network service deployment, creating well-rounded professionals ready for advanced responsibilities.

LPIC-2 Certification Prerequisites and Eligibility Overview

Pursuing the LPIC-2 certification requires a foundational understanding of Linux system administration, formally established by holding an active LPIC-1 credential. This prerequisite ensures candidates have a solid grounding in essential Linux operational concepts before advancing to the more complex infrastructure management topics covered in LPIC-2. The LPIC-1 certification serves as a stepping stone, verifying proficiency in basic command-line usage, file system hierarchy navigation, software package installation, elementary network configuration, and basic security practices.

While the LPIC-1 credential is mandatory, there is no formal work-experience requirement. However, the demanding LPIC-2 examination objectives make practical Linux administration experience highly advantageous, and candidates with three to five years of hands-on work across varied Linux environments are commonly considered well prepared. Such experience reinforces theoretical knowledge and builds the problem-solving skills needed for real-world system administration, which in turn improves the odds of passing.

Foundational Knowledge Established by LPIC-1 Certification

The LPIC-1 certification delineates core Linux skills critical for any aspiring advanced administrator. This foundation encompasses mastery of shell commands and scripting techniques necessary for efficient system interaction. Candidates become adept at navigating the Linux file system hierarchy, understanding permissions, symbolic links, and essential file attributes.

Additionally, LPIC-1 covers fundamental package management using tools such as apt, yum, or zypper, depending on the distribution, enabling candidates to install, update, and remove software reliably. The certification also introduces basic network configuration, including IP addressing, DNS client settings, and basic host security, establishing a preliminary security framework that is vital before progressing to LPIC-2's more intricate network services and security protocols.

Grasping these essentials allows candidates to confidently approach LPIC-2 topics, as the advanced certification builds upon and extends this foundational knowledge toward managing complex services, optimizing system performance, and implementing enterprise-level security strategies.

Importance of Practical Experience in Linux Administration

Although theoretical knowledge forms the backbone of LPIC-2 certification readiness, the value of immersive, real-world experience cannot be overstated. Candidates with extensive hands-on exposure to Linux environments—spanning server deployment, user and group management, shell scripting, networking, and troubleshooting—are better equipped to comprehend nuanced exam scenarios and apply best practices effectively.

Engagement in diverse environments, including cloud infrastructures, virtualization platforms, container orchestration, and traditional data centers, enriches practical understanding and sharpens adaptability. This experience fosters proficiency in managing system services, configuring advanced networking, securing servers, and optimizing resource utilization, all pivotal skills assessed by LPIC-2 objectives.

Moreover, practical experience deepens familiarity with configuration files, log analysis, and command-line utilities, enabling candidates to perform under exam conditions and in professional roles with confidence and precision.

Structured Study Plans and Flexible Learning Modalities

The pathway to LPIC-2 certification accommodates a variety of learning preferences and professional commitments through flexible study frameworks. Candidates may choose self-paced learning using comprehensive documentation, video tutorials, and practice labs, enabling deep dives into specific topics according to individual schedules and learning rhythms.

Alternatively, instructor-led training courses offer guided instruction, interactive discussions, and hands-on labs, fostering a collaborative environment that enhances understanding and retention. Hybrid approaches, combining self-study with formal training sessions, provide balanced exposure, reinforcing concepts through multiple formats.

Incorporating simulated exams and scenario-based practice questions within study plans helps candidates evaluate their readiness and identify areas for improvement. Time management strategies, regular review cycles, and participation in online forums or study groups further augment preparation efficacy, catering to the demands of working professionals seeking certification advancement.

Comprehensive Coverage of Advanced Linux Topics in LPIC-2

LPIC-2 certification encompasses a broad spectrum of advanced Linux administration topics that expand upon the LPIC-1 foundation. Candidates delve into system architecture concepts, including kernel modules, boot loaders, and hardware configuration. File system management extends to advanced storage technologies such as Logical Volume Management (LVM) and RAID, and to file-sharing services such as NFS and Samba.

Networking topics extend to IPv6, advanced routing, firewall configuration, and troubleshooting complex network issues. Security subjects cover secure remote access with SSH and OpenVPN, PAM and LDAP-based authentication, and routine host security tasks, reinforcing an administrator’s ability to safeguard Linux environments against emerging threats.

Additionally, LPIC-2 requires competence in system maintenance tasks like performance monitoring, kernel upgrades, and backup strategies, ensuring comprehensive preparedness for enterprise-level Linux administration.

Exam Preparation Strategies and Resource Utilization

Effective LPIC-2 exam preparation involves strategic use of a diverse range of learning materials and resources tailored to the certification’s objectives. Official documentation, technical manuals, and trusted Linux community resources provide authoritative content for concept mastery.

Practice exams and timed quizzes simulate the pressure and format of the actual examination, facilitating time management skills and exam temperament. Lab environments—either virtual machines or physical systems—offer invaluable opportunities to practice configuration tasks, troubleshoot real-world scenarios, and validate command proficiency.

Engaging with forums and study groups fosters knowledge exchange, clarifies doubts, and exposes candidates to varied problem-solving approaches. Keeping abreast of the latest Linux distributions and tools ensures familiarity with current technologies and industry trends relevant to the LPIC-2 syllabus.

Certification Benefits and Career Advancement Opportunities

Achieving the LPIC-2 certification validates advanced Linux administration expertise, significantly enhancing professional credibility and marketability. Certified professionals gain recognition for their ability to manage complex systems, implement robust security measures, and optimize performance across heterogeneous environments.

This certification opens doors to higher-level positions such as senior system administrator, Linux engineer, DevOps specialist, and infrastructure architect. Organizations increasingly seek certified professionals to ensure their Linux environments are secure, reliable, and efficient, reflecting the growing reliance on open-source technologies in enterprise IT landscapes.

Furthermore, LPIC-2 serves as a foundation for pursuing further specialized certifications and roles, positioning candidates for continuous career growth in the dynamic field of Linux system administration.

Overview of LPIC-2 Certification Examination Structure

The LPIC-2 certification assessment framework validates advanced Linux system administration competencies through two distinct but complementary examinations: 201-450 and 202-450. Exam 201-450 covers capacity planning, the Linux kernel, system startup, filesystems and devices, advanced storage device administration, network configuration, and system maintenance; exam 202-450 covers DNS, HTTP services, file sharing, network client management, e-mail services, and system security. Together they require candidates to demonstrate expertise in system architecture, network management, security implementation, and service configuration, reflecting real-world administrative responsibilities.

The dual-exam format encourages candidates to prepare thoroughly across varied topics, emphasizing a holistic understanding rather than narrow specialization. By structuring the certification into two separate yet interrelated tests, the evaluation process ensures that professionals certified at the LPIC-2 level possess the multifaceted skill set necessary to manage complex Linux environments effectively.

Detailed Exam Composition and Question Types

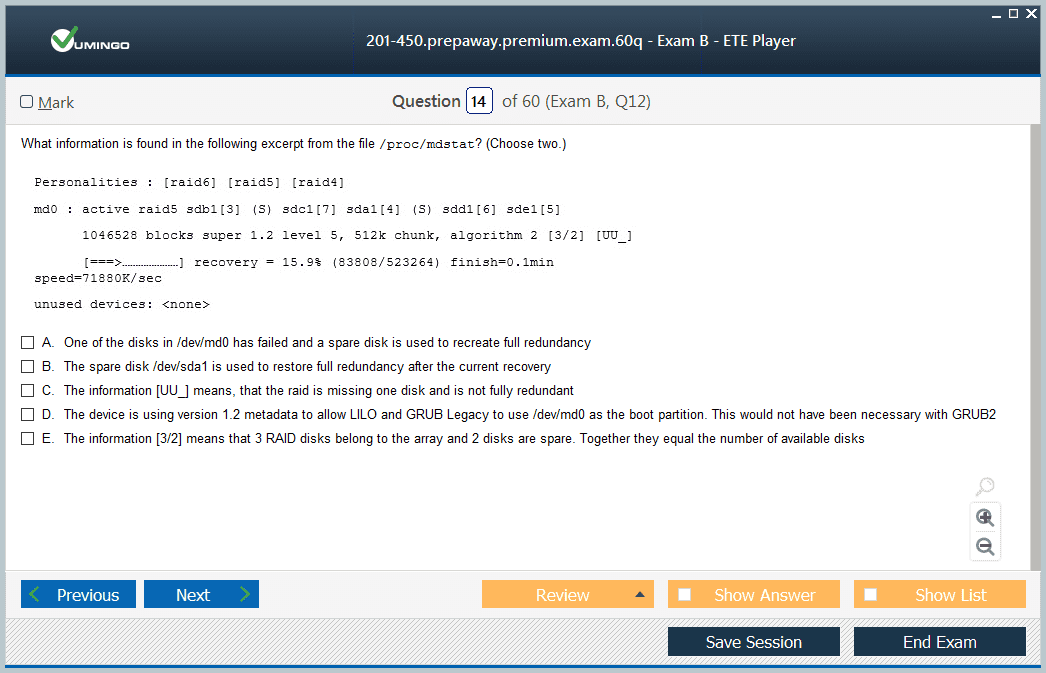

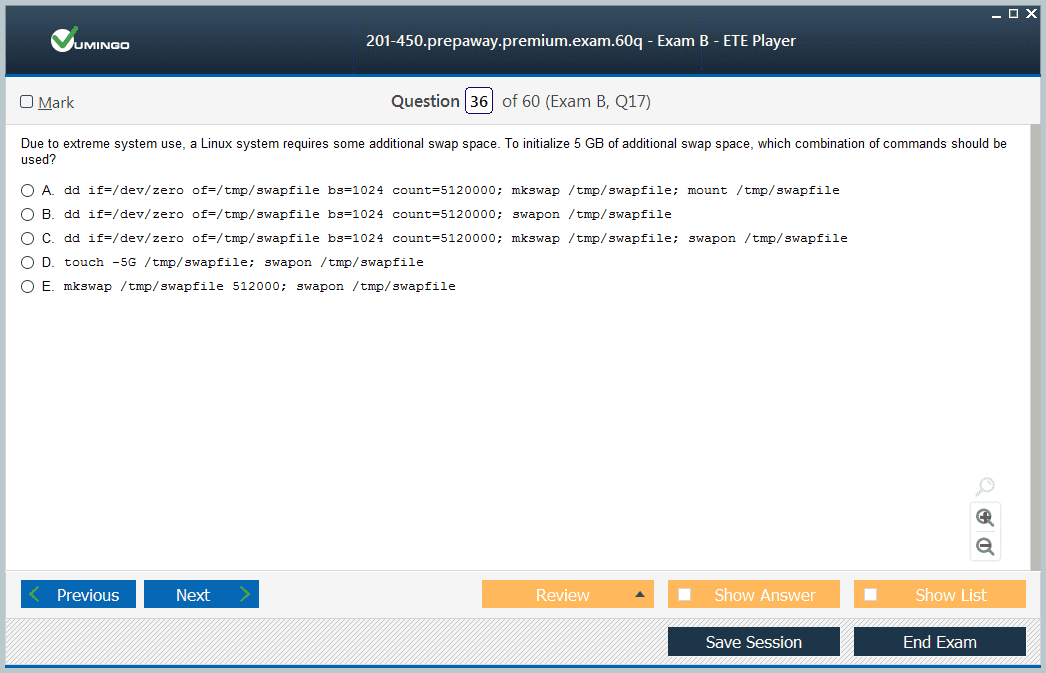

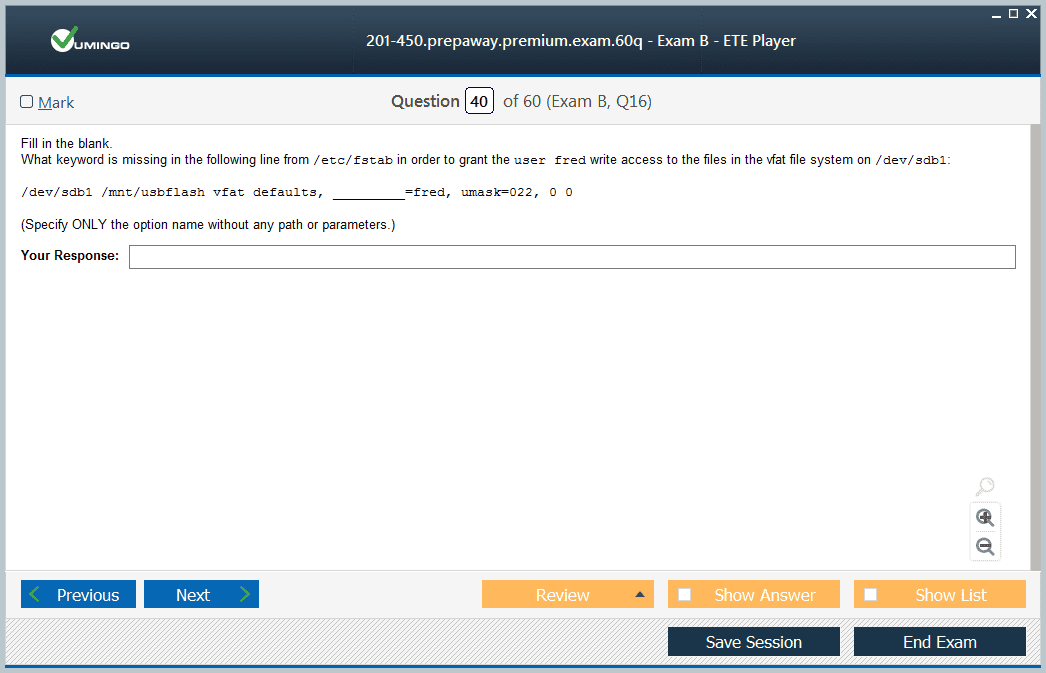

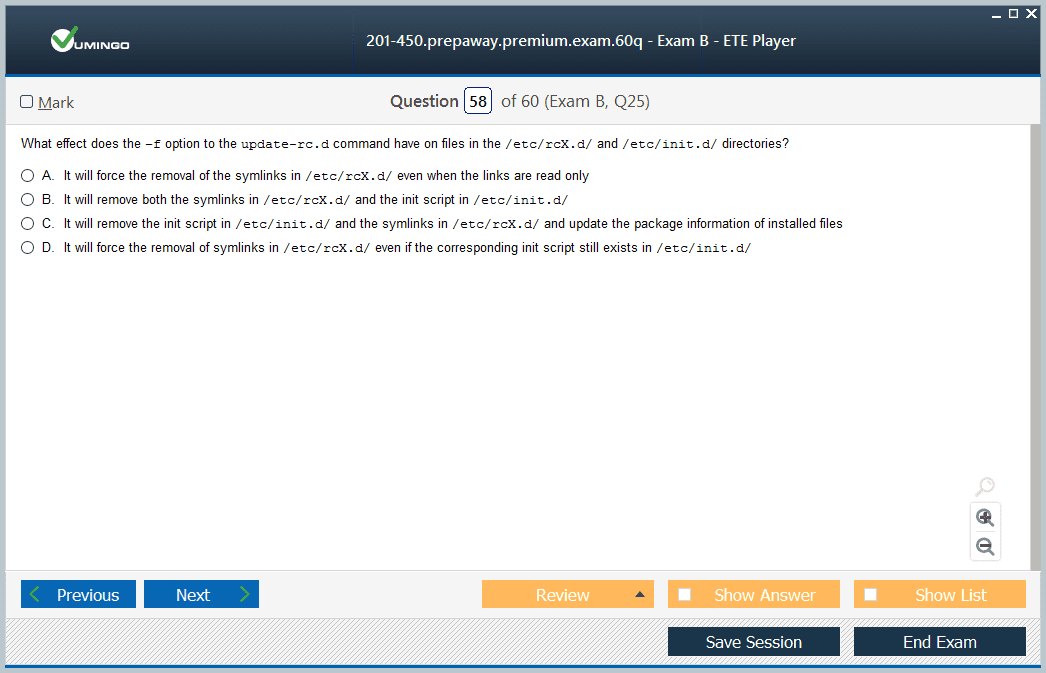

Each LPIC-2 exam comprises 60 questions presented in a combination of multiple-choice and fill-in-the-blank formats. This mixture enhances the assessment’s capacity to evaluate not only theoretical knowledge but also practical problem-solving aptitude. Multiple-choice questions test candidates’ ability to recognize correct answers amid plausible alternatives, assessing recall and comprehension. Fill-in-the-blank items, meanwhile, require precise command syntax, configuration parameters, or technical terminology, ensuring familiarity with the granular aspects of Linux administration.

The question design intentionally favors scenario-based inquiries that mirror authentic challenges Linux administrators face daily. Candidates must interpret system outputs, diagnose faults, propose configuration adjustments, and select appropriate commands or procedures under timed conditions. This approach effectively differentiates those who have internalized practical skills from those relying on rote memorization, thereby elevating the professional standard of certified individuals.

Time Allocation and Examination Environment

Candidates are allotted 90 minutes per examination to complete the set of 60 questions, an average of 90 seconds per question. This time frame balances the need for thoughtful analysis with the realities of exam pressure, encouraging efficient problem-solving without undue haste while still leaving room to work through complex multi-step scenarios and validate answers.

The testing environment is standardized globally to ensure fairness and consistency. Exams are conducted under secure, proctored conditions, either at authorized testing centers or through monitored remote platforms. This controlled setting upholds exam integrity and fosters equitable assessment standards across international candidate populations. Multilingual exam options enhance accessibility, accommodating non-native English speakers and promoting broader certification adoption worldwide.

Emphasis on Practical Application and Real-World Scenarios

A distinguishing feature of the LPIC-2 evaluation process is its strong focus on real-world applicability. Unlike assessments that prioritize theoretical recall, LPIC-2 examinations immerse candidates in problem-solving situations representative of actual Linux system administration duties. Questions simulate scenarios such as configuring network services, troubleshooting hardware compatibility, managing security policies, and optimizing system performance.

This pragmatic evaluation philosophy ensures that certified professionals are equipped not only with knowledge but also with the ability to execute solutions effectively under operational constraints. Consequently, employers can trust that LPIC-2 certified administrators possess actionable competencies critical to maintaining robust, secure, and efficient Linux infrastructures.

Standardized Scoring and Performance Feedback

The LPIC-2 certification employs a standardized scoring methodology to ensure consistent evaluation across testing locations and candidate cohorts. Results are reported as a scaled score from 200 to 800, and a score of 500 is required to pass each exam, a threshold intended to reflect proficient mastery of the exam objectives.

Candidates receive performance feedback after the examination, which is especially useful for those who do not pass: it highlights strengths and pinpoints areas needing improvement, providing valuable guidance for future study and retakes. Such reporting encourages continuous learning and professional growth, aligning with the certification’s ethos of fostering enduring expertise rather than one-time achievement.

Global Accessibility and Multilingual Support

To accommodate the global Linux professional community, LPIC-2 examinations are offered in multiple languages, breaking down linguistic barriers and enhancing inclusivity. This multilingual support enables candidates from diverse geographical and cultural backgrounds to undertake the certification process with confidence and clarity, promoting equitable access to credentialing opportunities.

The availability of exams in various languages also underscores the certification’s recognition and respect for international standards, reinforcing its value in the global IT job market. By removing language constraints, LPIC-2 certification facilitates wider participation, contributing to the proliferation of skilled Linux administrators worldwide.

Continuous Improvement and Alignment with Industry Trends

The LPIC-2 assessment structure is subject to periodic review and updates, ensuring alignment with the evolving Linux ecosystem and contemporary industry requirements. As Linux distributions advance and new technologies emerge, exam content is refreshed to reflect current best practices, tools, and methodologies relevant to professional system administration.

This commitment to continual enhancement ensures that LPIC-2 certified professionals remain at the forefront of Linux expertise, capable of navigating emerging challenges and leveraging innovative solutions. The dynamic nature of the evaluation process guarantees that the certification remains a meaningful benchmark of advanced Linux proficiency, respected by employers and peers alike.

Expanded Career Opportunities with LPIC-2 Certification

Achieving LPIC-2 certification significantly broadens professional horizons, opening the gateway to advanced roles within the Linux system administration domain. Professionals who earn this credential often transition into positions such as senior system administrators, infrastructure architects, Linux solutions engineers, and technical team leads. These roles demand a sophisticated understanding of complex Linux environments, encompassing system optimization, security enforcement, network integration, and troubleshooting expertise. The certification serves as a definitive testament to the candidate’s capacity to manage enterprise-level Linux infrastructure, which is invaluable to organizations reliant on robust open-source technologies.

The advanced knowledge validated by LPIC-2 certification empowers professionals to oversee critical infrastructure projects, lead system migrations, and implement automation solutions that improve operational efficiency. This elevated career trajectory often involves collaboration with cross-functional teams including DevOps, cybersecurity, and cloud computing specialists, fostering interdisciplinary skills that enhance employability and job satisfaction.

Enhanced Salary Prospects and Marketability

Linux professionals equipped with LPIC-2 certification are frequently positioned for premium compensation packages reflective of their specialized skill set. Employers recognize that certified candidates bring not only technical proficiency but also the ability to proactively prevent system failures, mitigate security vulnerabilities, and ensure continuous system availability. Consequently, organizations are willing to invest in these professionals to safeguard mission-critical environments and maintain competitive technological advantages.

The certification bolsters marketability in a saturated IT job market, distinguishing candidates from peers who lack formal validation of their expertise. This recognition often translates into more lucrative job offers, negotiation leverage for salary increments, and increased potential for bonuses tied to project success or operational excellence. Furthermore, certified professionals may gain preferential consideration for high-impact projects and leadership roles within their organizations.

Pathways to Specialized LPIC-3 Certification and Advanced Expertise

LPIC-2 certification is not only a milestone but also a stepping stone toward more specialized certifications such as LPIC-3. The LPIC-3 track offers specialty credentials in mixed environments, security, virtualization and containerization, and high availability and storage clusters. Pursuing LPIC-3 certifications enables professionals to deepen their expertise in the technologies that drive modern IT infrastructures.

This certification progression aligns with industry trends emphasizing cloud computing, microservices architectures, and resilient system design. By advancing to LPIC-3, Linux administrators can position themselves as subject matter experts, consultants, or architects capable of designing scalable, secure, and fault-tolerant environments. The pathway fosters lifelong learning and specialization, ensuring sustained career relevance and intellectual growth.

Access to Exclusive Professional Development Resources

Beyond the exam itself, LPIC-2 certified professionals can draw on a wide range of professional development avenues to refine skills and expand knowledge. Advanced training programs, both online and instructor-led, keep professionals abreast of the latest Linux advancements, emerging security threats, and best practices in system administration. Industry conferences and workshops provide platforms for networking with peers, thought leaders, and vendors, facilitating the exchange of ideas and solutions.

Participation in expert communities and forums nurtures continuous learning through collaborative problem-solving and mentorship opportunities. Such interactions encourage sharing of unique insights on real-world challenges, fostering professional camaraderie and support systems that enhance job performance and career satisfaction over time.

Global Recognition and Its Impact on Networking

The LPIC-2 certification enjoys widespread international recognition, enhancing professionals’ visibility and credibility in the global Linux ecosystem. This global acceptance facilitates seamless networking with Linux experts and organizations worldwide, opening doors to remote consulting, freelancing, and multinational employment opportunities. The certification’s universal standard serves as a common language among professionals, simplifying collaboration across borders and time zones.

By joining a global community of certified Linux administrators, professionals can leverage collective knowledge to solve complex problems, explore new technologies, and stay informed about evolving industry standards. Such networking enriches career trajectories through partnerships, referrals, and joint ventures that may not be accessible otherwise.

Long-Term Professional Growth and Career Sustainability

LPIC-2 certification supports enduring professional growth by equipping administrators with a versatile, up-to-date skill set adaptable to various IT environments. The continuous evolution of Linux and open-source tools demands lifelong learning, and the certification fosters a mindset geared toward ongoing education and technological agility. Because LPI certifications remain active for five years, professionals who renew theirs stay current and prepared for shifting market demands.

The ability to adapt to emerging trends, such as container orchestration, cloud-native applications, and DevOps integration, ensures career sustainability. Certified administrators are better positioned to transition into related roles like cloud engineers, security analysts, or systems architects, broadening their career options and resilience against technological disruptions.

Improved Job Security and Professional Confidence

In a rapidly changing technological landscape, job security is often tied to demonstrable skills and certifications that validate an individual’s contribution to organizational success. LPIC-2 certification enhances job security by certifying expertise in critical areas such as system reliability, network configuration, and security hardening, which are indispensable to business continuity.

Possessing this advanced credential instills professional confidence, empowering administrators to tackle challenging tasks, lead initiatives, and mentor junior staff. This self-assurance, combined with recognized competence, fosters leadership qualities and a reputation as a dependable Linux authority within the workplace.

Core System Architecture and Kernel Operations

Understanding the core system architecture and Linux kernel operations is paramount for any advanced system administrator aiming to optimize and maintain robust Linux infrastructures. The Linux kernel functions as the central component bridging hardware and software, orchestrating resource allocation, process scheduling, memory management, and device communications. Its modular architecture allows dynamic loading and unloading of kernel modules, enabling tailored customization and efficient hardware compatibility management.

Mastery of kernel operations entails deep familiarity with system calls, inter-process communication, interrupt handling, and device driver interactions. Kernel modules act as loadable extensions, permitting administrators to enable or disable hardware and software features without recompiling the entire kernel. This modularity contributes to system flexibility and minimizes downtime during configuration changes.

Kernel tuning and optimization involve adjusting parameters that govern process scheduling, memory swapping behavior (for example vm.swappiness), network stack behavior, and I/O. Many of these can be changed at runtime with sysctl, which reads and writes the tunables exposed under /proc/sys; others, such as the I/O scheduler of a block device, are set through sysfs (for example /sys/block/sda/queue/scheduler). Moreover, understanding kernel panic scenarios and their debugging techniques is essential for minimizing unplanned outages and facilitating swift recovery.

Kernel compilation from source code remains a critical skill for scenarios requiring custom features, security patches, or optimized performance. Navigating kernel configuration menus demands careful consideration of hardware specifics, software dependencies, and security implications. Additionally, cross-compilation for embedded or alternative architectures presents unique challenges that require comprehensive knowledge of build systems and toolchains.

Strategic Infrastructure Planning and Resource Optimization

Effective Linux infrastructure management demands meticulous strategic planning and resource optimization to sustain system performance amid evolving workloads. Capacity planning integrates continuous monitoring of processor load, memory consumption, disk I/O rates, and network throughput, providing real-time visibility into system health and potential bottlenecks.
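To make the memory side of capacity monitoring concrete, here is a small Python sketch that parses text in the /proc/meminfo format and reports the share of RAM not available to new workloads, computed from MemTotal and MemAvailable. The sample values are invented for illustration, the function names are hypothetical, and MemAvailable is only present on reasonably modern kernels (3.14 and later).

```python
def parse_meminfo(text: str) -> dict[str, int]:
    """Parse /proc/meminfo-style text into {field: number}; most values are in kB."""
    fields: dict[str, int] = {}
    for line in text.splitlines():
        key, sep, rest = line.partition(":")
        parts = rest.split()
        if sep and parts:
            fields[key.strip()] = int(parts[0])  # drop the trailing "kB" unit
    return fields


def memory_used_percent(fields: dict[str, int]) -> float:
    """Percentage of RAM not available for new workloads without swapping."""
    total, available = fields["MemTotal"], fields["MemAvailable"]
    return 100.0 * (total - available) / total


# Invented sample values; on a live system use open("/proc/meminfo").read().
SAMPLE = """\
MemTotal:       16000000 kB
MemFree:         2000000 kB
MemAvailable:    4000000 kB
HugePages_Total:       0
"""

if __name__ == "__main__":
    print(f"{memory_used_percent(parse_meminfo(SAMPLE)):.1f}% in use")
```

Using MemAvailable rather than MemFree avoids counting reclaimable page cache as "used", which would otherwise make nearly every busy server look memory-starved.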

Utilizing advanced monitoring tools such as Nagios, Zabbix, or Prometheus allows administrators to gather granular metrics and generate actionable insights. This telemetry forms the foundation for predictive analysis, employing historical data and trend extrapolation to forecast future resource demands aligned with business growth or seasonal spikes.

Resource optimization is not limited to hardware scaling but also encompasses workload balancing and virtualization strategies. Implementing container orchestration with platforms like Kubernetes or deploying lightweight hypervisors enhances resource utilization by isolating applications and enabling dynamic allocation based on priority.

When constraints arise, troubleshooting methodologies combine automated diagnostics with manual inspection of system logs, kernel messages, and process states. Root cause analysis identifies underperforming services, memory leaks, or network congestion, guiding targeted remediation to restore optimal operation without compromising availability.

Incorporating energy-efficient practices and hardware lifecycle management further optimizes operational costs, promoting sustainable infrastructure evolution aligned with organizational goals.

Kernel Management and System Optimization

Kernel management lies at the heart of high-performance Linux systems, requiring continuous oversight and fine-tuning to achieve stability and efficiency. Administrators must comprehend the intricacies of kernel space versus user space operations, ensuring that critical kernel components operate seamlessly while maintaining system security boundaries.

Dynamic kernel module management facilitates on-the-fly adaptability, allowing insertion or removal of drivers to support new hardware or disable malfunctioning components without rebooting. Tools such as modprobe and lsmod assist in managing modules and diagnosing compatibility issues.

Advanced kernel parameters control aspects like network stack behavior, file system caching, and security modules such as SELinux or AppArmor. Tailoring these parameters optimizes system responsiveness and hardens security postures against emerging threats.

System optimization extends to boot time reduction through parallel service initialization and dependency resolution, leveraging modern init systems to streamline startup sequences. Administrators must also maintain comprehensive knowledge of kernel logging subsystems and tracing frameworks, utilizing utilities like dmesg and perf to monitor kernel events and diagnose performance anomalies.

System Startup and Recovery Procedures

The Linux boot process orchestrates a sequence of critical operations that transition a system from a powered-off state to a fully operational environment. A sophisticated understanding of bootloaders such as GRUB or systemd-boot is vital for managing complex scenarios involving encrypted filesystems, multi-boot setups, and kernel parameter customization.

Customization of initialization sequences via systemd unit files allows precise control over service dependencies, enabling administrators to tailor startup behavior for security and efficiency. Proper configuration ensures essential services activate in the correct order while minimizing boot delays and reducing attack surfaces during early system phases.
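Start ordering of this kind is essentially a topological sort over the units' After= relationships. The Python sketch below uses the standard library `graphlib` module (Python 3.9+) to compute one valid start order for a small, hypothetical dependency graph and to detect cycles. It is a simplification: systemd distinguishes requirement (Wants=, Requires=) from ordering (After=, Before=) and starts independent units in parallel rather than in a single sequence.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical graph: each unit maps to the units it is ordered After=.
UNITS = {
    "var-www.mount": set(),
    "network.target": set(),
    "sshd.service": {"network.target"},
    "nginx.service": {"network.target", "var-www.mount"},
    "app-worker.service": {"nginx.service"},
}


def start_order(units: dict[str, set[str]]) -> list[str]:
    """Return one start sequence in which every unit follows its After= units."""
    return list(TopologicalSorter(units).static_order())


def respects_ordering(order: list[str], units: dict[str, set[str]]) -> bool:
    """Check that every dependency appears before the unit that needs it."""
    pos = {unit: i for i, unit in enumerate(order)}
    return all(pos[dep] < pos[unit] for unit, deps in units.items() for dep in deps)


if __name__ == "__main__":
    print(start_order(UNITS))
    try:
        start_order({"a.service": {"b.service"}, "b.service": {"a.service"}})
    except CycleError as err:
        print("ordering cycle:", err.args[1])
```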

Recovery procedures form a critical safety net for maintaining system availability during catastrophic failures. Techniques include booting into rescue or single-user modes to perform diagnostic checks, repairing corrupted filesystems with tools like fsck, and restoring data from verified backups. Mastery of these procedures is essential for minimizing downtime and ensuring business continuity.
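fsck reports its outcome as a bitmask exit status, so a recovery script has to test individual bits rather than compare against a single value. A short Python sketch using the exit codes documented in the fsck(8) manual page is shown below. The helper names are hypothetical, and obtaining the status (for example from `subprocess.run(...).returncode`) is left to the caller.

```python
# Exit-status bits documented in fsck(8); one status can combine several bits.
FSCK_BITS = {
    1: "filesystem errors corrected",
    2: "system should be rebooted",
    4: "filesystem errors left uncorrected",
    8: "operational error",
    16: "usage or syntax error",
    32: "checking canceled by user request",
    128: "shared-library error",
}


def decode_fsck_status(status: int) -> list[str]:
    """Translate an fsck exit status into the conditions it reports."""
    if status == 0:
        return ["no errors"]
    return [meaning for bit, meaning in FSCK_BITS.items() if status & bit]


def needs_manual_repair(status: int) -> bool:
    """True when fsck left errors uncorrected (bit 4)."""
    return bool(status & 4)


if __name__ == "__main__":
    for status in (0, 1, 3, 4):
        print(status, decode_fsck_status(status))
```

Status 3, for example, decodes to both "filesystem errors corrected" and "system should be rebooted".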

Proficiency in managing emergency shell environments and deploying advanced recovery tools such as live CDs or network boot environments equips administrators to swiftly respond to incidents ranging from hardware malfunctions to security breaches.

Advanced Storage and Filesystem Management

Enterprise-grade storage solutions demand comprehensive expertise in advanced storage architectures and filesystem technologies to ensure data integrity, availability, and performance. RAID configurations combine multiple physical disks into logical arrays, balancing redundancy and speed. Familiarity with RAID levels, from RAID 0 striping to RAID 6 double parity, empowers administrators to tailor solutions based on application requirements and risk tolerance.
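The capacity side of that trade-off is simple arithmetic for arrays of identical disks. The Python sketch below returns usable capacity for common levels under stated assumptions: equal-sized disks, no metadata or hot-spare overhead, conventional minimum disk counts, and RAID 10 laid out as classic striped mirrors. Linux md can also build RAID 10 on an odd number of disks, which this sketch does not model.

```python
MIN_DISKS = {"0": 2, "1": 2, "5": 3, "6": 4, "10": 4}


def raid_usable_tb(level: str, disks: int, disk_tb: float) -> float:
    """Usable capacity of an array of identical disks, ignoring metadata overhead."""
    if level not in MIN_DISKS:
        raise ValueError(f"unsupported RAID level {level!r}")
    if disks < MIN_DISKS[level]:
        raise ValueError(f"RAID {level} needs at least {MIN_DISKS[level]} disks")
    if level == "0":
        return disks * disk_tb  # striping only, no redundancy
    if level == "1":
        return disk_tb  # every disk holds a full copy
    if level == "5":
        return (disks - 1) * disk_tb  # one disk's worth of parity
    if level == "6":
        return (disks - 2) * disk_tb  # two disks' worth of parity
    if disks % 2:
        raise ValueError("this sketch models RAID 10 as mirrored pairs (even disk count)")
    return (disks // 2) * disk_tb  # striped across mirrored pairs


if __name__ == "__main__":
    for level in ("0", "5", "6", "10"):
        print(f"RAID {level}: {raid_usable_tb(level, 6, 4.0)} TB usable from 6 x 4 TB")
```

For six 4 TB disks, RAID 6 yields 16 TB usable while tolerating any two disk failures, whereas RAID 5 yields 20 TB but tolerates only one.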

Logical Volume Management (LVM) introduces a layer of abstraction over physical storage, enabling dynamic volume resizing, online migration of data between physical volumes, and snapshot creation without interrupting services. LVM snapshots provide point-in-time copies essential for consistent backups and disaster recovery scenarios.
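Because LVM allocates space in whole physical extents, requested sizes are rounded up to a multiple of the extent size. The Python sketch below performs that arithmetic, assuming the 4 MiB default extent size used by vgcreate unless told otherwise. The helper names and the free-extent figures in the example are hypothetical.

```python
MIB = 1024 ** 2
GIB = 1024 ** 3
DEFAULT_PE_SIZE = 4 * MIB  # vgcreate's default physical extent size


def extents_needed(size_bytes: int, pe_size: int = DEFAULT_PE_SIZE) -> int:
    """Physical extents required for size_bytes, rounded up to a whole extent."""
    return -(-size_bytes // pe_size)  # integer ceiling division


def allocated_bytes(size_bytes: int, pe_size: int = DEFAULT_PE_SIZE) -> int:
    """Space actually allocated once the request is rounded up to whole extents."""
    return extents_needed(size_bytes, pe_size) * pe_size


def can_extend(add_bytes: int, free_extents: int, pe_size: int = DEFAULT_PE_SIZE) -> bool:
    """Whether the volume group has enough free extents to grow an LV by add_bytes."""
    return extents_needed(add_bytes, pe_size) <= free_extents


if __name__ == "__main__":
    print(extents_needed(10 * GIB))  # 2560 extents for a 10 GiB LV
    print(allocated_bytes(10 * GIB + 1) - 10 * GIB)  # rounding overhead: one 4 MiB extent
```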

Storage encryption technologies like LUKS safeguard sensitive data by encrypting entire block devices. Administrators must expertly manage encryption keys, understand performance trade-offs, and integrate encrypted volumes seamlessly with system boot processes.

Advanced storage features such as thin provisioning optimize disk space allocation, while caching mechanisms enhance I/O performance by keeping frequently accessed data on faster storage. Compression, offered by filesystems such as Btrfs, reduces the storage footprint, which benefits environments constrained by physical capacity or budget.

Integration of storage solutions with virtualization platforms demands specialized skills to optimize shared storage access and maintain performance in multi-tenant environments, reinforcing the need for ongoing storage architecture education.

Performance Monitoring and Predictive Analytics

Continuous performance monitoring is foundational for maintaining Linux systems that meet stringent service level agreements and user expectations. Employing robust monitoring stacks that collect metrics on CPU utilization, memory usage, disk latency, and network packet loss enables administrators to proactively address potential issues.

Advanced visualization tools and dashboards transform raw data into intuitive insights, facilitating rapid identification of anomalies and trends. Predictive analytics leverages machine learning models and statistical algorithms to anticipate resource exhaustion or performance degradation before they impact users.

This foresight informs capacity expansion, workload redistribution, and infrastructure upgrades, fostering a culture of proactive maintenance rather than reactive troubleshooting. Integration of alerting systems ensures timely notification of critical conditions, enabling swift intervention to mitigate risks.

The synergy between automated monitoring, predictive analytics, and manual expertise enhances operational resilience and optimizes resource expenditure.

Disaster Recovery Planning and High Availability

Ensuring continuous system availability and rapid recovery from failures demands rigorous disaster recovery planning and high availability strategies. Redundancy at multiple levels, including network interfaces, storage arrays, and compute nodes, forms the backbone of resilient Linux infrastructures.

High availability clusters use failover mechanisms that automatically detect node failures and move workloads to standby systems, minimizing service interruptions. In the common Linux stack, Corosync provides cluster membership and messaging. Pacemaker acts as the resource manager: it decides where services run and orchestrates failover.

Backup strategies employing incremental, differential, and full backups complement high availability by preserving data integrity and enabling restoration in the event of data corruption or catastrophic loss. Regular testing of backup and recovery processes is essential to validate readiness.
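The practical difference between incremental and differential backups shows up at restore time. The sketch below works out which backup sets are needed to restore to a given day. It assumes a hypothetical catalog of (day, kind) tuples sorted by day. An incremental captures changes since the previous backup of any kind; a differential captures everything since the last full backup.

```python
from typing import List, Tuple

Backup = Tuple[int, str]  # (day, kind) where kind is "full", "differential", or "incremental"


def restore_chain(backups: List[Backup], target_day: int) -> List[Backup]:
    """Return the backups to restore, in order, to recover the state as of target_day."""
    usable = [b for b in backups if b[0] <= target_day]
    full_positions = [i for i, (_, kind) in enumerate(usable) if kind == "full"]
    if not full_positions:
        raise ValueError("no full backup at or before the target day")

    start = full_positions[-1]            # the most recent full backup is the base
    chain = [usable[start]]

    # A differential holds every change since that full backup, so only the latest one matters.
    diffs = [i for i in range(start + 1, len(usable)) if usable[i][1] == "differential"]
    if diffs:
        start = diffs[-1]
        chain.append(usable[start])

    # Every incremental taken after the chosen base must be replayed in order.
    chain.extend(b for b in usable[start + 1:] if b[1] == "incremental")
    return chain


if __name__ == "__main__":
    incremental_week = [(0, "full"), (1, "incremental"), (2, "incremental"), (3, "incremental")]
    differential_week = [(0, "full"), (1, "differential"), (2, "differential"), (3, "differential")]
    print(restore_chain(incremental_week, 3))    # full backup plus three incrementals
    print(restore_chain(differential_week, 3))   # full backup plus the latest differential
```

Incremental schemes keep each backup small but lengthen the restore chain. Differential schemes accept larger backups in exchange for a two-step restore.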

Comprehensive disaster recovery plans encompass risk assessment, resource allocation, communication protocols, and post-incident analysis to refine strategies continually. This holistic approach safeguards organizational operations against diverse threats, ranging from hardware failures to cyberattacks.

Advanced Network Architecture and Protocol Implementation

In modern enterprise environments, advanced network architecture is crucial for ensuring robust, scalable, and efficient data communication. Mastery of dynamic routing protocols such as OSPF (Open Shortest Path First) and BGP (Border Gateway Protocol) forms the backbone of sophisticated network infrastructures. These protocols enable routers to dynamically adapt to topology changes, rerouting traffic along optimal paths to maintain high availability and minimize latency.

OSPF operates within an autonomous system using link-state algorithms to calculate the shortest path tree, optimizing internal traffic flow. BGP, conversely, governs routing between autonomous systems, supporting internet-scale routing with policies to control traffic exchange. Understanding the intricate behaviors of these protocols, including route aggregation, path attributes, and route filtering, is essential for network engineers tasked with managing expansive and interconnected networks.
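OSPF builds its shortest path tree by running Dijkstra's algorithm over the link-state database. The sketch below runs Dijkstra on a small hypothetical topology with interface costs. It deliberately leaves out OSPF specifics such as areas, LSA flooding, and equal-cost multipath, so treat it as an illustration of the algorithm rather than of an OSPF implementation.

```python
import heapq
from typing import Dict, Optional, Tuple

Graph = Dict[str, Dict[str, int]]  # router -> {neighbor: link cost}


def shortest_path_tree(graph: Graph, root: str) -> Tuple[Dict[str, int], Dict[str, Optional[str]]]:
    """Dijkstra from `root`; returns (distance to each router, parent in the tree)."""
    dist: Dict[str, int] = {root: 0}
    parent: Dict[str, Optional[str]] = {root: None}
    heap = [(0, root)]
    done = set()
    while heap:
        d, node = heapq.heappop(heap)
        if node in done:
            continue                       # stale heap entry; a shorter path was already settled
        done.add(node)
        for neighbor, cost in graph.get(node, {}).items():
            candidate = d + cost
            if neighbor not in dist or candidate < dist[neighbor]:
                dist[neighbor] = candidate
                parent[neighbor] = node
                heapq.heappush(heap, (candidate, neighbor))
    return dist, parent


if __name__ == "__main__":
    # Hypothetical topology: the direct R1-R2 link is expensive, so traffic goes via R3.
    topology: Graph = {
        "R1": {"R2": 10, "R3": 1},
        "R2": {"R1": 10, "R3": 1, "R4": 1},
        "R3": {"R1": 1, "R2": 1},
        "R4": {"R2": 1},
    }
    dist, parent = shortest_path_tree(topology, "R1")
    for router in sorted(dist):
        print(router, "cost", dist[router], "via", parent[router])
```

If the R1-R3 link failed and was withdrawn from the database, rerunning the same computation would shift traffic back onto the direct R1-R2 link. This is the kind of automatic rerouting the paragraph above describes.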

Network segmentation techniques such as VLANs and subnetting enhance security and performance by isolating traffic into logical groups. This containment reduces broadcast domains and limits the attack surface, improving overall network hygiene. Implementing QoS (Quality of Service) mechanisms prioritizes critical traffic, guaranteeing bandwidth and low latency for mission-critical applications such as VoIP, video conferencing, and enterprise resource planning systems.
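Subnetting for VLAN segmentation is easy to prototype with Python's standard ipaddress module. The sketch below splits a parent block into equal subnets and assigns one to each VLAN. The address block, the VLAN IDs, and the convention of using the first host address as the gateway are all hypothetical.

```python
import ipaddress
from typing import Dict, List


def vlan_subnet_plan(parent_cidr: str, vlan_ids: List[int], new_prefix: int) -> Dict[int, ipaddress.IPv4Network]:
    """Split a parent block into equal subnets and assign one per VLAN ID, in order."""
    parent = ipaddress.ip_network(parent_cidr)
    subnets = list(parent.subnets(new_prefix=new_prefix))
    if len(vlan_ids) > len(subnets):
        raise ValueError(f"{parent} only yields {len(subnets)} /{new_prefix} subnets")
    return dict(zip(vlan_ids, subnets))


if __name__ == "__main__":
    plan = vlan_subnet_plan("10.20.0.0/22", [10, 20, 30, 40], 24)
    for vlan, net in plan.items():
        gateway = net.network_address + 1        # hypothetical convention: first host is the gateway
        usable = net.num_addresses - 2           # minus the network and broadcast addresses
        print(f"VLAN {vlan}: {net} gateway {gateway}, {usable} usable hosts")
```

Each /24 keeps its broadcast traffic inside one VLAN. Firewall or router rules between the subnets then decide which groups may talk to each other.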

Proficiency in protocol analysis using packet sniffing and deep packet inspection tools is vital for diagnosing anomalies, detecting misconfigurations, and uncovering potential security breaches. Network administrators leverage these insights to fine-tune configurations and maintain optimal operational conditions.

Enterprise Network Service Configuration

Effective management of foundational network services underpins enterprise communication and operational stability. DNS (Domain Name System) administration is pivotal in translating human-readable domain names to IP addresses, facilitating seamless access to resources. Beyond basic zone and resource record management, advanced DNS configuration involves implementing DNSSEC to prevent cache poisoning and man-in-the-middle attacks, ensuring data integrity and trustworthiness.

Dynamic DNS updates and failover configurations augment DNS resilience, allowing automatic updates in response to network changes and maintaining service availability during outages. Optimizing DNS caching strategies reduces lookup latency and lessens recursive query load, improving user experience.

DHCP (Dynamic Host Configuration Protocol) servers automate IP address allocation, simplifying network management in environments with thousands of client devices. Advanced DHCP configurations include IP address reservations for critical assets, integration with directory services for centralized policy enforcement, and deployment of vendor-specific options to tailor device configurations.

Network time synchronization is another critical service, with NTP (Network Time Protocol) ensuring consistent system clocks across distributed infrastructure. Accurate timekeeping is vital for security protocols, log correlation, and scheduled tasks. Hierarchical NTP architectures employing stratum servers maintain synchronization precision and reliability.
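NTP estimates clock offset and round-trip delay from four timestamps per exchange, using the on-wire formulas in RFC 5905:

- T1: client transmit
- T2: server receive
- T3: server transmit
- T4: client receive

The sketch below applies those formulas to made-up timestamps. A real client such as chronyd or ntpd also filters many samples and adjusts the clock gradually rather than trusting a single exchange.

```python
from typing import Tuple


def ntp_offset_delay(t1: float, t2: float, t3: float, t4: float) -> Tuple[float, float]:
    """Return (offset, delay) in seconds; a positive offset means the server clock is ahead."""
    offset = ((t2 - t1) + (t3 - t4)) / 2      # average of the two one-way clock differences
    delay = (t4 - t1) - (t3 - t2)             # round trip minus server processing time
    return offset, delay


if __name__ == "__main__":
    # Hypothetical exchange: server 40 ms ahead, 10 ms each way, 1 ms processing at the server.
    offset, delay = ntp_offset_delay(100.000, 100.050, 100.051, 100.021)
    print(f"offset={offset:.3f} s delay={delay:.3f} s")   # offset=0.040 s delay=0.020 s
```

The offset formula assumes the outbound and return paths take equal time. Asymmetric network paths therefore bias the estimate, which is one reason hierarchical designs place stratum servers close to their clients.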

VPN technologies such as IPsec and SSL/TLS-based solutions like OpenVPN provide secure tunnels for remote access and site-to-site connectivity. Choosing a VPN implementation means balancing cryptographic strength, performance overhead, and ease of deployment, so that data in transit is protected without impeding user productivity.

Performance Monitoring and System Analytics

Comprehensive performance monitoring is integral to maintaining the health and efficiency of network and system infrastructures. Utilizing platforms such as Nagios, Zabbix, and Prometheus enables real-time tracking of critical metrics including CPU load, memory utilization, disk I/O, and network throughput. These systems support threshold-based alerting, ensuring rapid response to emerging issues.

Performance analytics extends beyond monitoring to include historical data evaluation, anomaly detection, and capacity forecasting. Statistical techniques such as time series analysis and trend extrapolation provide predictive insights, empowering administrators to plan resource upgrades proactively and avoid service degradation.
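In its simplest form, trend extrapolation fits a straight line to recent usage samples and projects when that line crosses capacity. The sketch below does this with an ordinary least-squares fit on hypothetical daily disk usage figures. Real growth is often non-linear or seasonal, so treat the result as a first approximation rather than a forecasting model.

```python
from typing import List, Optional, Tuple


def least_squares(xs: List[float], ys: List[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need at least two paired samples")
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def days_until_full(days: List[float], used_gb: List[float], capacity_gb: float) -> Optional[float]:
    """Days after the last sample until the fitted trend reaches capacity (None if not growing)."""
    slope, intercept = least_squares(days, used_gb)
    if slope <= 0:
        return None                      # flat or shrinking usage: no exhaustion date
    return (capacity_gb - intercept) / slope - days[-1]


if __name__ == "__main__":
    days = [0, 1, 2, 3, 4]
    used = [100, 110, 120, 130, 140]     # hypothetical samples in GB
    print("projected days until full:", days_until_full(days, used, capacity_gb=200))
```

A projection like this works best as a trigger for human review, for example raising a ticket when the projected date falls inside the hardware procurement lead time.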

Log management frameworks aggregate data from diverse sources—servers, applications, security devices—creating a centralized repository for correlation and forensic analysis. Tools like the ELK Stack (Elasticsearch, Logstash, Kibana) or similar systems facilitate deep dives into event sequences, aiding in troubleshooting and compliance reporting.

Integration of machine learning models into analytics workflows enhances the detection of subtle performance deviations and security threats, reducing false positives and enabling proactive remediation. This holistic approach to monitoring and analytics forms the foundation of resilient, high-performing network environments.

Network Security Implementation and Management

Network security remains a paramount concern, necessitating a multi-layered defense strategy. Firewalls serve as the primary perimeter control, regulating inbound and outbound traffic according to meticulously crafted rulesets based on IP addresses, ports, and protocols. Crafting these rules demands balancing stringent security policies with operational requirements to prevent disruptions while blocking unauthorized access.

Intrusion Detection Systems (IDS) monitor network traffic patterns to identify malicious activities such as distributed denial-of-service (DDoS) attacks, port scanning, or exploitation attempts. Fine-tuning IDS involves calibrating detection thresholds and signatures to minimize false alarms while maintaining sensitivity to emerging threats.

Network Access Control (NAC) frameworks enforce authentication and authorization policies, ensuring that only verified users and compliant devices gain network entry. Integration with centralized identity management systems and directory services enables seamless enforcement of access policies and audit trails.

Security auditing processes validate that implemented controls comply with organizational standards and regulatory frameworks. Regular vulnerability assessments and penetration testing uncover weaknesses, facilitating continuous security improvements and strengthening the overall security posture.

Quality of Service Configuration and Traffic Management

Quality of Service (QoS) plays a vital role in prioritizing network traffic to guarantee performance for latency-sensitive and critical applications. Configuring traffic classification and shaping policies enables granular control over bandwidth allocation, mitigating congestion during peak usage periods.

Techniques such as traffic policing and queuing mechanisms ensure fair resource distribution while protecting high-priority flows from degradation. Implementing Differentiated Services Code Point (DSCP) marking standardizes traffic prioritization across diverse network segments.
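Policing and shaping are both commonly built on a token bucket. Tokens accumulate at the committed rate up to a burst size, and each packet spends tokens equal to its length. The sketch below is a minimal policer that drops non-conforming packets; a shaper would queue them instead. Timestamps are passed in explicitly so the behavior is deterministic, and the rate and burst values are hypothetical. On Linux, the tc tool exposes the same idea through the tbf qdisc for shaping and the police action for policing.

```python
class TokenBucketPolicer:
    """Admit packets while tokens remain; tokens refill at a fixed rate up to a burst cap."""

    def __init__(self, rate_bps: float, burst_bytes: float):
        self.rate_bytes_per_s = rate_bps / 8   # convert bits/s to bytes/s
        self.capacity = burst_bytes
        self.tokens = burst_bytes              # start with a full bucket
        self.last_time = 0.0

    def allow(self, packet_bytes: int, now: float) -> bool:
        """Return True to forward the packet, False to drop it (policing, not shaping)."""
        elapsed = max(0.0, now - self.last_time)
        self.last_time = now
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate_bytes_per_s)
        if packet_bytes <= self.tokens:
            self.tokens -= packet_bytes
            return True
        return False


if __name__ == "__main__":
    policer = TokenBucketPolicer(rate_bps=8_000, burst_bytes=1_500)  # 1,000 bytes/s, 1,500-byte burst
    arrivals = [(0.0, 1500), (0.5, 1500), (2.0, 1500)]
    print([policer.allow(size, t) for t, size in arrivals])          # [True, False, True]
```

The burst size sets how much back-to-back traffic a flow may send above the average rate. Setting it too small penalizes normal TCP bursts, while setting it too large weakens the protection the policy is meant to provide.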

Effective QoS deployment demands thorough understanding of application requirements, user behavior, and underlying network capacity. Continuous monitoring and adjustment of QoS policies ensure alignment with evolving business needs and technology landscapes.

Virtual Private Network Deployment and Management

Deploying secure Virtual Private Networks (VPNs) is essential for safeguarding remote connections and inter-site communications. IPsec provides robust encryption and authentication frameworks suited to site-to-site tunnels. SSL/TLS-based VPNs serve client-to-site scenarios and offer flexible client compatibility.

OpenVPN combines ease of use with strong cryptographic support, making it a popular choice for secure remote access. Administrators must manage certificate authorities, keys, and revocation lists to maintain trust and prevent unauthorized access.

Balancing security with performance involves optimizing encryption algorithms, compression options, and connection parameters. Monitoring VPN health and usage patterns supports capacity planning and threat detection.

Network Protocol Analysis and Troubleshooting

Advanced network troubleshooting requires proficiency in protocol analysis to diagnose performance bottlenecks and security issues. Packet capture tools dissect traffic flows, revealing protocol anomalies, retransmissions, or malformed packets that impair connectivity.
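As a small example of what protocol analysis involves, the sketch below decodes the fixed 20-byte IPv4 header with Python's struct module. It also verifies the RFC 1071 header checksum, one of the checks capture tools perform when flagging malformed packets. It assumes a header without options and uses a hand-built sample header.

```python
import ipaddress
import struct


def ipv4_checksum(header: bytes) -> int:
    """RFC 1071 one's-complement checksum over 16-bit words."""
    if len(header) % 2:
        header += b"\x00"
    total = sum(word for (word,) in struct.iter_unpack("!H", header))
    while total >> 16:                       # fold carries back into the low 16 bits
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def parse_ipv4(header: bytes) -> dict:
    """Decode the fixed part of an IPv4 header; options are ignored."""
    (ver_ihl, _tos, total_len, _ident, _flags_frag,
     ttl, proto, _checksum, src, dst) = struct.unpack("!BBHHHBBH4s4s", header[:20])
    header_len = (ver_ihl & 0x0F) * 4
    return {
        "version": ver_ihl >> 4,
        "header_len": header_len,
        "total_len": total_len,
        "ttl": ttl,
        "protocol": proto,                   # 6 = TCP, 17 = UDP
        # Summing an intact header including its checksum field yields 0 after complement.
        "checksum_ok": ipv4_checksum(header[:header_len]) == 0,
        "src": str(ipaddress.IPv4Address(src)),
        "dst": str(ipaddress.IPv4Address(dst)),
    }


if __name__ == "__main__":
    sample = bytes.fromhex("45000073000040004011b861c0a80001c0a800c7")
    print(parse_ipv4(sample))
    corrupted = sample[:8] + b"\x3f" + sample[9:]      # TTL altered without fixing the checksum
    print("corrupted checksum ok:", parse_ipv4(corrupted)["checksum_ok"])
```

In practice, checksum errors on locally captured outgoing packets are often an artifact of NIC checksum offload rather than real corruption. Check for offload before concluding that a sender is faulty.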

Analyzing protocols at various OSI layers, from Ethernet frames to application-layer exchanges, enables pinpointing of issues such as ARP conflicts, routing loops, or DNS misconfigurations. Correlating packet data with system logs and monitoring alerts accelerates root cause identification.

Regular protocol auditing and baseline establishment assist in detecting deviations indicative of configuration errors or malicious activity. Integrating automated analysis tools with manual expertise fosters a proactive approach to network health management.

Enterprise Web Server Deployment and Optimization

Deploying enterprise-grade web servers demands a profound understanding of server software intricacies, module orchestration, virtual hosting capabilities, and performance tuning methodologies. These competencies empower system administrators and DevOps engineers to deliver resilient, scalable web services that can adeptly handle fluctuating and high-volume traffic scenarios without compromising reliability.

The Apache HTTP Server remains a cornerstone technology in web hosting, prized for its modular architecture and extensive configurability. Mastery of Apache involves enabling and managing various modules, such as mod_rewrite for URL manipulation, mod_ssl for secure connections, and mod_proxy for reverse proxy functions. Fine-tuning multi-processing modules (MPMs) like prefork, worker, and event directly impacts concurrent connection handling and resource consumption, requiring a delicate balance between throughput and server stability.

Optimizing connection parameters—such as KeepAlive settings, timeout durations, and maximum client connections—minimizes latency and prevents resource exhaustion under peak loads. Complementing these settings with thorough log analysis guides performance refinements and preemptive scaling decisions.

Implementing HTTPS requires expertise in SSL/TLS standards, including certificate lifecycle management and cryptographic parameter tuning. Three practices protect communication channels while limiting computational overhead: selecting robust cipher suites, enforcing protocol version constraints (for example, disabling SSLv3, TLS 1.0, and TLS 1.1 in favor of TLS 1.2 and TLS 1.3), and configuring session resumption.

Virtual hosting strategies enable hosting multiple websites or applications on a single physical or virtual server instance. Through domain-based or IP-based virtual hosts, organizations can maximize infrastructure utilization, isolate environments, and streamline maintenance. Proper security hardening within virtual host contexts ensures that cross-site vulnerabilities are mitigated and data integrity is maintained.

Load Balancing and Caching Strategies

Load balancing constitutes a vital technique in distributing inbound network traffic efficiently across multiple backend servers to enhance availability, scalability, and fault tolerance. Reverse proxy servers like Nginx and Apache's mod_proxy act as intermediaries, intelligently routing client requests based on algorithms such as round-robin, least connections, or IP hash.
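The three selection algorithms named above can be modeled in a few lines each. The sketch below uses hypothetical backend names, tracks connection counts in memory, and does not reproduce the exact hashing used by Nginx, HAProxy, or mod_proxy_balancer.

```python
import hashlib
import itertools
from typing import Dict, List, Optional


class RoundRobin:
    """Hand requests to backends in a fixed rotating order."""

    def __init__(self, backends: List[str]):
        self._cycle = itertools.cycle(backends)

    def pick(self, client_ip: Optional[str] = None) -> str:
        return next(self._cycle)


class LeastConnections:
    """Send each request to the backend with the fewest active connections."""

    def __init__(self, backends: List[str]):
        self.active: Dict[str, int] = {b: 0 for b in backends}

    def pick(self, client_ip: Optional[str] = None) -> str:
        backend = min(self.active, key=self.active.get)   # ties go to the first listed backend
        self.active[backend] += 1
        return backend

    def release(self, backend: str) -> None:
        self.active[backend] -= 1                          # call when a connection closes


class IPHash:
    """Map each client IP to a stable backend so its sessions stay on one server."""

    def __init__(self, backends: List[str]):
        self.backends = list(backends)

    def pick(self, client_ip: str) -> str:
        digest = hashlib.sha256(client_ip.encode()).digest()
        return self.backends[int.from_bytes(digest[:4], "big") % len(self.backends)]


if __name__ == "__main__":
    pool = ["app1", "app2", "app3"]
    rr = RoundRobin(pool)
    print([rr.pick() for _ in range(4)])                   # app1, app2, app3, app1
    lc = LeastConnections(pool)
    first, second = lc.pick(), lc.pick()
    lc.release(first)
    print(lc.pick())                                       # app1 again: it has no active connections
    print(IPHash(pool).pick("203.0.113.7"))                # same client IP always maps to the same backend
```

Note that plain modulo hashing remaps most clients whenever a backend is added or removed. Consistent hashing schemes are used when session stickiness must survive pool changes.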

Load balancers improve availability because no single backend server is a point of failure, and they fail over seamlessly when a server goes down. They typically run health checks against each backend and stop routing traffic to servers that fail those checks.

Caching layers complement load balancing by intercepting repeated requests and serving pre-processed content, which significantly reduces backend server load and accelerates response times. Caching techniques include FastCGI caching for dynamic content, proxy caching for HTTP responses, and dedicated reverse proxy caches such as Varnish.

Effective cache management involves setting appropriate expiration headers, validating content freshness, and enabling purge mechanisms to maintain content accuracy. TLS termination at the reverse proxy offloads cryptographic processing from backend servers, optimizing CPU utilization while centralizing security policies. Integrating Web Application Firewalls (WAFs) within this layer provides advanced protection against SQL injection, cross-site scripting, and other web-based attacks, thereby fortifying the service perimeter.

Database Service Management and Replication

Robust database management is foundational to enterprise applications, requiring deep technical knowledge of installation, configuration, and performance tuning of relational database management systems such as MySQL, MariaDB, and PostgreSQL. Administrators must allocate resources prudently, optimizing memory buffers, disk I/O, and processor usage to support demanding workloads.

Fine-grained tuning of database internals aims to minimize latency and maximize throughput. It involves adjusting buffer pool sizes and connection pooling settings. On engines that still provide a query cache, it also involves tuning query cache parameters; MySQL 8.0 removed its query cache. Query optimization through indexing strategies, execution plan analysis, and stored procedure design further improves responsiveness.

Implementing automated backup and recovery solutions is critical for safeguarding business continuity. Techniques include full and incremental backups, point-in-time recovery enabled by binary logs or write-ahead logs, and comprehensive disaster recovery plans encompassing offsite storage and failover procedures. Regular backup validation and simulated restores ensure readiness for unforeseen data loss incidents.

Database replication strengthens high availability through primary-replica (master-slave) or multi-primary (master-master) topologies that distribute read load and provide redundancy. In multi-primary setups, conflict detection and resolution mechanisms maintain data consistency. In every topology, monitoring replication lag informs operational adjustments.

Security considerations encompass encrypting data at rest and in transit, enforcing granular access controls through role-based permissions, and maintaining detailed audit logs for compliance and forensic analysis.

File Sharing and Directory Services

Enabling seamless interoperability in heterogeneous network environments requires proficient configuration of file sharing protocols and directory services. Samba, implementing SMB/CIFS protocols, facilitates transparent file and printer sharing between Linux and Windows systems. Integrating Samba with centralized authentication services enables single sign-on experiences, enhancing user convenience and administrative control.

Network File System (NFS) version 4 offers high-performance distributed filesystem access with improved security features compared to earlier iterations. Proper deployment involves fine-tuning mount options, caching policies, and locking mechanisms to balance consistency with efficiency. Implementing Kerberos-based authentication fortifies access controls and mitigates unauthorized usage risks.

Centralized identity and access management through Lightweight Directory Access Protocol (LDAP) streamlines authentication, authorization, and user management across disparate services. Effective LDAP schema design and access control list configurations ensure scalable and secure directory operations, facilitating enterprise-wide governance of user credentials and permissions.

Mail Server Implementation and Management

Managing enterprise mail infrastructure demands comprehensive expertise in Mail Transfer Agents (MTAs) like Postfix, Exim, or Sendmail. Configuration encompasses queue management, routing policies, and spam mitigation techniques to maintain reliable and timely message delivery.

Advanced content filtering integrates antispam and antivirus engines, leveraging heuristic analysis, reputation systems, and signature databases to detect and quarantine malicious or unsolicited emails. Tuning filter aggressiveness minimizes false positives while maintaining protection levels.

Securing mail communication involves deploying authentication standards such as SPF (Sender Policy Framework), DKIM (DomainKeys Identified Mail), and DMARC (Domain-based Message Authentication, Reporting & Conformance). These protocols validate sender authenticity and reduce phishing risks. Encrypting message transport using TLS protects confidentiality and integrity between mail servers and clients.
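SPF, DKIM, and DMARC policies are all published as DNS TXT records. The sketch below parses a DMARC record into its tag-value pairs and applies two checks from RFC 7489: the record must begin with v=DMARC1, and the p (policy) tag is required. The record and domain are hypothetical, and a production validator would check far more tags and syntax rules.

```python
from typing import Dict


def parse_dmarc(record: str) -> Dict[str, str]:
    """Split a DMARC TXT record into tags, enforcing v=DMARC1 first and a valid p tag."""
    pairs = []
    for part in record.split(";"):
        part = part.strip()
        if not part:
            continue                          # tolerate a trailing ';'
        name, sep, value = part.partition("=")
        if not sep:
            raise ValueError(f"malformed tag: {part!r}")
        pairs.append((name.strip().lower(), value.strip()))
    if not pairs or pairs[0] != ("v", "DMARC1"):
        raise ValueError("record must start with v=DMARC1")
    tags = dict(pairs)
    if tags.get("p") not in {"none", "quarantine", "reject"}:
        raise ValueError("missing or invalid p= policy tag")
    return tags


if __name__ == "__main__":
    record = "v=DMARC1; p=quarantine; pct=50; rua=mailto:dmarc-reports@example.com"
    tags = parse_dmarc(record)
    print(f"policy={tags['p']} applied to {tags.get('pct', '100')}% of failing mail")
```

A staged rollout commonly starts with p=none while aggregate reports (rua) are reviewed. The policy then moves to quarantine and reject once legitimate senders are confirmed to pass SPF or DKIM alignment.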

Web Application Firewall Integration and Security Enhancements

Incorporating Web Application Firewalls (WAFs) into web service architectures strengthens defenses against application-layer attacks. WAFs analyze incoming HTTP/HTTPS traffic, blocking injection attempts, cross-site scripting (XSS), and other exploitation vectors. Custom rule sets tailored to specific application behaviors improve detection accuracy.

WAF deployment at the reverse proxy level synergizes with load balancing and TLS termination, creating a consolidated security and performance management point. Continuous tuning and updating of WAF signatures and policies are essential to adapt to evolving threat landscapes.

Additional security hardening techniques include HTTP security headers (Content-Security-Policy, X-Frame-Options, HSTS), rate limiting to prevent abuse, and comprehensive logging to support incident response.

Advanced Backup Strategies and Disaster Recovery Planning

Ensuring data resilience and service continuity demands well-architected backup strategies aligned with Recovery Time Objectives (RTO) and Recovery Point Objectives (RPO). Enterprise environments employ multi-tiered backups involving full, incremental, and differential snapshots combined with offsite replication.

Automation of backup processes using scripting and scheduling tools reduces human error and operational overhead. Regular testing of recovery procedures validates the integrity of backup media and the effectiveness of restoration workflows.

Disaster recovery planning encompasses failover infrastructure, geographic redundancy, and coordinated response protocols. Incorporating cloud-based backup solutions and hybrid architectures enhances flexibility and scalability, enabling rapid recovery from catastrophic failures.

Security Architecture and Defense Mechanisms

Advanced Linux security requires orchestration of multiple defense mechanisms creating comprehensive protection against diverse threats. This approach addresses vulnerabilities across all system layers from kernel to application level.

Access control implementation combines Discretionary Access Control (DAC), Mandatory Access Control (MAC) with SELinux or AppArmor, and Role-Based Access Control (RBAC) to create fine-tuned permission schemas. These mechanisms ensure minimal privilege principles while maintaining operational functionality.
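Classic Unix DAC checks apply exactly one permission class:

- the owner bits, if the caller owns the file;
- otherwise the group bits, if the caller belongs to the file's group;
- otherwise the "other" bits.

The sketch below models that rule for a non-root user. It ignores root's override, POSIX ACLs, and capabilities. MAC policies such as SELinux or AppArmor are evaluated in addition to this check, not instead of it.

```python
import stat
from typing import Iterable

# (owner, group, other) permission bits for each access type
PERM_BITS = {
    "r": (stat.S_IRUSR, stat.S_IRGRP, stat.S_IROTH),
    "w": (stat.S_IWUSR, stat.S_IWGRP, stat.S_IWOTH),
    "x": (stat.S_IXUSR, stat.S_IXGRP, stat.S_IXOTH),
}


def dac_allows(mode: int, file_uid: int, file_gid: int,
               uid: int, gids: Iterable[int], access: str) -> bool:
    """Evaluate classic owner/group/other permission bits for a non-root caller."""
    owner_bit, group_bit, other_bit = PERM_BITS[access]
    if uid == file_uid:
        return bool(mode & owner_bit)         # owner class only, even if group/other would allow
    if file_gid in set(gids):
        return bool(mode & group_bit)
    return bool(mode & other_bit)


if __name__ == "__main__":
    mode = 0o640
    print(stat.filemode(stat.S_IFREG | mode))                    # -rw-r-----
    print(dac_allows(mode, 1000, 100, 1001, [100], "r"))         # group member may read
    print(dac_allows(mode, 1000, 100, 1001, [100], "w"))         # but may not write
    print(dac_allows(0o070, 1000, 100, 1000, [100], "r"))        # owner is denied despite group rwx
```

The last case is a frequent source of confusion: because only one class applies, an owner can have fewer rights than the group the owner belongs to.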

Encryption deployment spans filesystem encryption, secure communications, and data protection. Key management systems provide secure storage, rotation policies, and lifecycle management to prevent compromise and ensure continued protection.

Security monitoring systems using auditd, OSSEC, or custom log aggregation provide real-time surveillance and anomaly detection. Behavioral baseline establishment and heuristic analysis enable identification of potential threats and security incidents.

Network Security and Access Control

Router configuration combines efficient routing with embedded security mechanisms, supporting both performance and defensive network topologies. Advanced routing protocols must be configured to accommodate complex network architectures while maintaining security.

Network Address Translation (NAT) conserves public address space and hides internal addressing from external networks. Proper NAT configuration maintains application compatibility. The obscurity NAT provides complements firewall filtering but does not replace it.

Quality of Service (QoS) implementation ensures critical application performance through traffic classification, prioritization, and bandwidth allocation. DSCP marking and traffic shaping guarantee mission-critical packet delivery even during network congestion.
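DSCP values occupy the upper six bits of the IPv4 DS field (formerly the ToS byte); the lower two bits carry ECN. The sketch below computes the standard code points and the resulting byte value. It also shows how an application on Linux could ask the kernel to mark its own traffic through the IP_TOS socket option. The helper names are hypothetical, and network devices may re-mark or ignore the value depending on the trust policy at each hop.

```python
import socket

# Class Selector and Expedited Forwarding code points (RFC 2474 / RFC 3246)
CS0, CS6, EF = 0, 48, 46


def af(klass: int, drop_precedence: int) -> int:
    """Assured Forwarding code point AFxy = 8x + 2y (RFC 2597)."""
    if not (1 <= klass <= 4 and 1 <= drop_precedence <= 3):
        raise ValueError("AF class must be 1-4 and drop precedence 1-3")
    return 8 * klass + 2 * drop_precedence


def tos_byte(dscp: int, ecn: int = 0) -> int:
    """Place DSCP in the upper six bits of the DS field; ECN uses the lower two."""
    if not 0 <= dscp <= 63 or not 0 <= ecn <= 3:
        raise ValueError("DSCP must be 0-63 and ECN 0-3")
    return (dscp << 2) | ecn


def mark_socket(sock: socket.socket, dscp: int) -> None:
    """Request that outgoing IPv4 packets from this socket carry the given DSCP."""
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_TOS, tos_byte(dscp))


if __name__ == "__main__":
    for name, dscp in [("EF (voice)", EF), ("AF41 (video)", af(4, 1)), ("CS0 (best effort)", CS0)]:
        print(f"{name}: DSCP {dscp} -> ToS byte {tos_byte(dscp):#04x}")
```

Packet captures usually display the full ToS byte. Seeing 0xb8 there, for example, corresponds to DSCP 46 (EF), which helps when verifying that markings survive each network segment.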

Firewall configuration using stateful inspection, deep packet inspection, and intrusion prevention provides comprehensive perimeter protection. Rate limiting and attack mitigation prevent various network-based threats while maintaining legitimate traffic flow.

Secure Remote Access and Authentication

SSH hardening removes the risks of password-based authentication by requiring key-based authentication. A hardened sshd configuration also restricts root login and permits only SSH protocol 2, the only version current OpenSSH releases support. It manages idle sessions through timeout settings such as ClientAliveInterval and ClientAliveCountMax.
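A simple way to keep SSH hardening consistent is to audit configuration text against a baseline. The sketch below reads global sshd_config keywords and keeps the first value seen for each one, since sshd uses the first obtained value for a keyword. It stops at the first Match block and does not follow Include directives. The baseline shown is a hypothetical policy, not a universal recommendation.

```python
import re
from typing import Dict, List

# Hypothetical hardening baseline; adjust to your organization's policy.
BASELINE: Dict[str, str] = {
    "passwordauthentication": "no",
    "permitrootlogin": "no",
    "pubkeyauthentication": "yes",
    "x11forwarding": "no",
}


def effective_global_settings(config_text: str) -> Dict[str, str]:
    """Collect keyword values before the first Match block, keeping the first value seen."""
    settings: Dict[str, str] = {}
    for raw in config_text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        # Keywords and arguments are separated by whitespace or an optional '='.
        parts = re.split(r"\s*=\s*|\s+", line, maxsplit=1)
        if len(parts) != 2:
            continue
        keyword, value = parts[0].lower(), parts[1].strip().lower()
        if keyword == "match":
            break                              # conditional blocks are out of scope here
        settings.setdefault(keyword, value)    # later duplicates do not override the first value
    return settings


def audit_sshd_config(config_text: str) -> List[str]:
    """Return one finding per baseline keyword that is missing or set differently."""
    settings = effective_global_settings(config_text)
    findings = []
    for keyword, expected in BASELINE.items():
        actual = settings.get(keyword)
        if actual != expected:
            findings.append(f"{keyword}: expected {expected!r}, found {actual!r}")
    return findings


if __name__ == "__main__":
    sample = "PermitRootLogin no\nPasswordAuthentication yes\nPasswordAuthentication no\n"
    for finding in audit_sshd_config(sample):
        print(finding)
```

Missing keywords are reported rather than assumed safe, because OpenSSH defaults vary between versions. On a live host, `sshd -T` prints the effective configuration after defaults and Include files are applied, which makes a more reliable input for this kind of check.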

SSH key management requires secure key generation, distribution, and revocation procedures following enterprise standards. Certificate authority implementation can streamline multi-user environments while providing time-bound access controls.

SSH tunneling capabilities enable secure encapsulation of arbitrary traffic through local, remote, and dynamic port forwarding. Proper tunnel configuration and monitoring prevent misuse while enabling secure access to internal resources.

Access logging integration with centralized logging systems provides comprehensive audit trails including source identification, command execution, and session duration tracking. Real-time alerting enables rapid response to unauthorized access attempts.

System Automation and Configuration Management

Shell scripting using Bash combined with text processing tools enables creation of custom automation routines for system management tasks. Automated scripts handle software installation, patching, auditing, and monitoring with scheduled execution.

Configuration management systems like Ansible, Puppet, or SaltStack provide declarative infrastructure control through manifests or playbooks. These systems ensure consistent system states across large-scale deployments.

Monitoring automation enhances operational responsiveness through intelligent metric collection and threshold-based alerting. Event correlation engines determine root causes and trigger automated remediation procedures.

Backup automation ensures reliable data protection through scripted backup creation, verification, and rotation. Snapshot-based backup systems provide rapid recovery capabilities with minimal downtime.

Final Thoughts

Performance optimization requires comprehensive analysis of system telemetry to identify bottlenecks and implement strategic adjustments. This process involves real-time and historical analysis using specialized monitoring tools.

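System tuning involves adjusting kernel parameters with sysctl. These parameters include system-wide limits such as fs.file-max and kernel.pid_max, networking behavior, and memory behavior such as vm.swappiness. Per-process limits are set separately with ulimit or /etc/security/limits.conf. Choosing a scheduler policy suited to the workload further optimizes performance.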
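Each sysctl name corresponds to a file under /proc/sys, with dots replaced by slashes. The sketch below uses that mapping to compare desired values, written in sysctl.conf syntax, against the running kernel. The desired values are hypothetical. The naive dot-to-slash mapping also breaks for names that contain dotted interface names such as VLAN interfaces, which the sysctl command handles by accepting slash-separated names.

```python
from pathlib import Path
from typing import Dict


def sysctl_path(name: str) -> Path:
    """Map a dotted sysctl name to its /proc/sys file (vm.swappiness -> /proc/sys/vm/swappiness)."""
    return Path("/proc/sys") / name.replace(".", "/")


def read_sysctl(name: str) -> str:
    """Read the current value of a kernel parameter (Linux only)."""
    return sysctl_path(name).read_text().strip()


def parse_sysctl_conf(text: str) -> Dict[str, str]:
    """Parse sysctl.conf-style 'key = value' lines; lines starting with '#' or ';' are comments."""
    settings: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line[0] in "#;":
            continue
        key, sep, value = line.partition("=")
        if sep:
            settings[key.strip()] = value.strip()   # later lines override earlier ones
    return settings


if __name__ == "__main__":
    desired = parse_sysctl_conf("# tuning\nvm.swappiness = 10\nfs.file-max = 2097152\n")
    for name, value in desired.items():
        path = sysctl_path(name)
        current = read_sysctl(name) if path.exists() else "n/a"
        print(f"{name}: desired={value} current={current}")
```

Reporting drift first and applying changes separately (for example through a file in /etc/sysctl.d) keeps tuning changes reviewable and persistent across reboots.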

Capacity forecasting integrates trend analysis with demand projection to predict when resource limits may be exceeded. This proactive approach enables informed hardware acquisition and scaling decisions.

Dynamic resource allocation using control groups and namespaces provides isolation and resource limits for applications and containers. This approach enables workload prioritization and resource distribution optimization.
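With cgroup v2, a CPU limit is written to a group's cpu.max file as "<quota> <period>" in microseconds, or "max <period>" for no limit. The default period is 100000 µs. The sketch below converts between CPU counts and that format. The limit_group helper and any group name passed to it are hypothetical; writing the file requires root and an existing group under a cgroup v2 mount at /sys/fs/cgroup.

```python
from pathlib import Path
from typing import Optional

DEFAULT_PERIOD_US = 100_000  # cgroup v2 default cpu.max period (100 ms)


def cpu_max_value(cpus: Optional[float], period_us: int = DEFAULT_PERIOD_US) -> str:
    """Build a cpu.max line: '<quota> <period>' in microseconds, or 'max <period>' for no limit."""
    if cpus is None:
        return f"max {period_us}"
    if cpus <= 0:
        raise ValueError("CPU limit must be positive")
    return f"{round(cpus * period_us)} {period_us}"


def cpus_from_cpu_max(value: str) -> Optional[float]:
    """Convert a cpu.max line back to a CPU count (None means unlimited)."""
    quota, period = value.split()
    return None if quota == "max" else int(quota) / int(period)


def limit_group(group: str, cpus: float) -> None:
    """Hypothetical helper: write the limit for an existing cgroup (needs root)."""
    Path("/sys/fs/cgroup", group, "cpu.max").write_text(cpu_max_value(cpus))


if __name__ == "__main__":
    for cpus in (0.5, 1.5, None):
        print(cpus, "->", cpu_max_value(cpus))
```

A quota larger than the period, such as 150000 per 100000, grants more than one CPU's worth of time across cores. This is how containers are typically given fractional or multi-CPU limits.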

Scalability planning requires architectural foresight to determine when vertical scaling reaches diminishing returns and horizontal scaling becomes necessary. Container orchestration and distributed systems enable effective load distribution.

Multi-node cluster orchestration requires understanding of load balancers, service discovery, and distributed system coordination. These technologies enable high availability and performance scaling across multiple systems.

Performance monitoring across distributed systems requires comprehensive visibility into all system components and their interactions. Centralized monitoring and alerting ensure coordinated response to performance issues.

Resource optimization strategies balance performance requirements with cost considerations through efficient resource utilization and dynamic allocation based on demand patterns.

This guide provides a foundation for pursuing the LPIC-2 certification and for advanced Linux system administration roles. LPIC-2 requires passing two exams, 201-450 and 202-450. Several domains covered here, such as DNS, web services, file sharing, mail, network client management, and system security, belong to the 202-450 exam rather than 201-450.
