- Home

- VMware Certifications

- 2V0-21.20 Professional VMware vSphere 7.x Dumps

Pass VMware 2V0-21.20 Exam in First Attempt Guaranteed!

2V0-21.20 Premium File

- Premium File 109 Questions & Answers. Last Update: Jan 24, 2026

Whats Included:

- Latest Questions

- 100% Accurate Answers

- Fast Exam Updates

Last Week Results!

All VMware 2V0-21.20 certification exam dumps, study guide, training courses are Prepared by industry experts. PrepAway's ETE files povide the 2V0-21.20 Professional VMware vSphere 7.x practice test questions and answers & exam dumps, study guide and training courses help you study and pass hassle-free!

Essential VCP-DCV 2V0-21.20 Exam Study Guide and Tips

The 2V0-21.20 certification focuses on validating the skills and knowledge required to manage and operate VMware vSphere environments efficiently. This credential emphasizes hands-on proficiency, practical understanding of virtualization concepts, and the ability to implement, maintain, and troubleshoot vSphere infrastructures. Candidates are expected to demonstrate competence in deploying virtual machines, configuring and optimizing resources, managing storage and networking, and maintaining system stability. Achieving this certification signifies that a professional is capable of handling real-world operational challenges in a data center environment.

Understanding vSphere Infrastructure

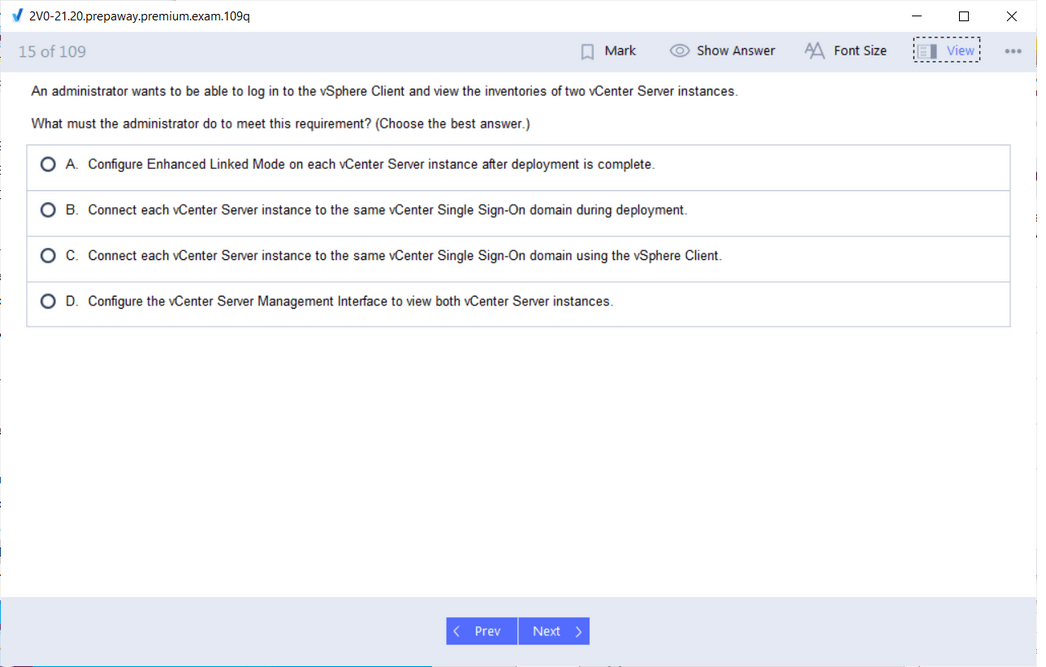

A core component of the 2V0-21.20 exam is understanding the architecture and functionality of VMware vSphere. This includes familiarity with ESXi hosts, vCenter Server, clusters, datastores, and resource pools. Candidates should understand how these components interact to provide a scalable and resilient virtual environment. Knowledge of host configuration, cluster setup, and the role of resource allocation policies ensures that virtual machines operate efficiently without causing performance bottlenecks. Understanding these interactions is crucial for effective management and troubleshooting.

Managing Virtual Machines

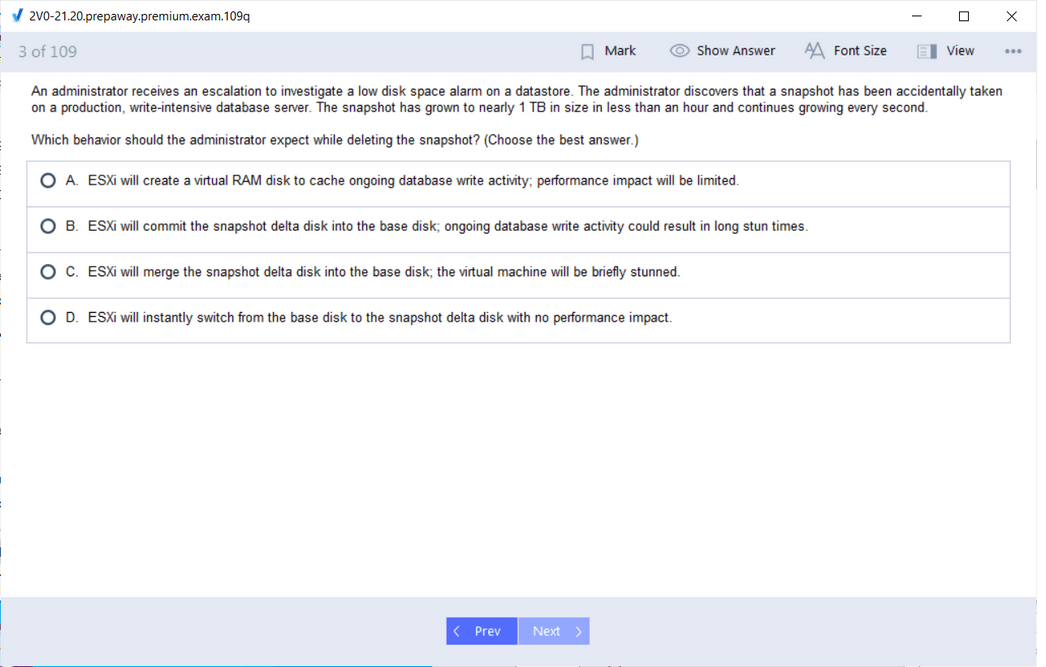

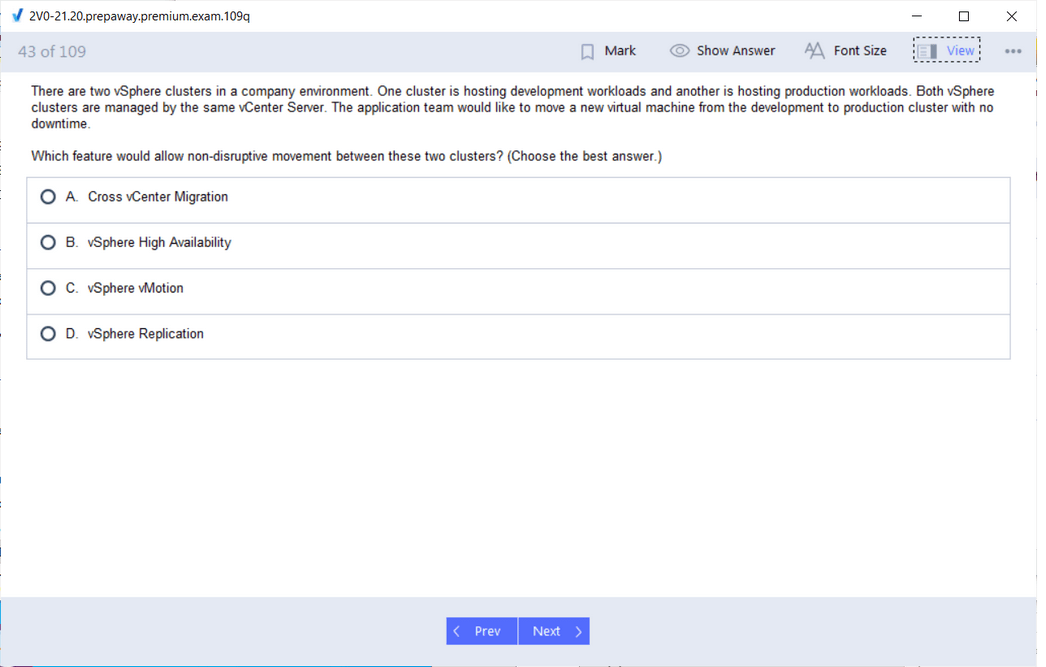

Candidates must demonstrate the ability to manage the full lifecycle of virtual machines. This includes deploying virtual machines, cloning, migrating, and managing templates. Knowledge of snapshots, resource allocation, and operational best practices is essential to maintain performance and flexibility. Candidates should be able to adjust CPU, memory, and storage allocations to optimize workloads while maintaining stability. Monitoring virtual machines for performance and resource utilization allows professionals to identify potential issues and apply corrective measures proactively.

Networking Configuration

Networking is a critical aspect of managing a vSphere environment. Candidates should understand virtual switches, port groups, VMkernel adapters, and network segmentation. Configuring redundancy, load balancing, and traffic management ensures that network resources are reliable and efficient. Professionals must be able to troubleshoot connectivity issues, monitor network performance, and implement best practices to maintain stability. Awareness of how network configurations affect virtual machine performance and overall resource distribution is essential for operational success.

Storage Management

Effective storage management is another key focus area. Candidates are expected to create, configure, and manage datastores, allocate storage resources to virtual machines, and implement storage policies that enhance performance and reliability. Understanding different storage types, such as SAN, NAS, and local storage, and their impact on virtualized workloads, allows for efficient resource utilization. Professionals should also be capable of implementing best practices for storage provisioning, monitoring, and troubleshooting to maintain a robust infrastructure.

Performance Monitoring

Monitoring system performance is a vital skill for the 2V0-21.20 exam. Candidates should be familiar with performance metrics for CPU, memory, network, and storage utilization. Analyzing these metrics allows professionals to detect performance issues, identify bottlenecks, and take corrective actions before problems escalate. Understanding performance trends and capacity planning ensures that virtual environments remain responsive and reliable. Continuous monitoring and proactive adjustments contribute to operational efficiency and reduce downtime.

Troubleshooting and Operational Skills

Troubleshooting is a fundamental component of the exam. Candidates must demonstrate the ability to identify, analyze, and resolve operational issues within a vSphere environment. This includes interpreting logs, responding to alerts, diagnosing failures, and applying corrective measures. Practical troubleshooting skills ensure that professionals can maintain service availability and operational stability. Candidates should also understand the implications of configuration changes and be able to implement solutions that maintain system integrity.

Security and Access Control

Security is integral to managing virtual environments. Candidates must understand user roles, permissions, and authentication mechanisms to protect virtual machines and associated resources. Implementing access controls and monitoring security configurations ensures the environment remains secure from unauthorized changes. Knowledge of best practices for backups, disaster recovery, and redundancy planning contributes to overall resilience and data protection. Candidates should also be aware of how to apply security policies to maintain operational compliance and system integrity.

Hands-On Experience

Hands-on practice is essential for mastering the objectives of the 2V0-21.20 exam. Candidates should engage in lab exercises to deploy virtual machines, configure networking, manage storage, and troubleshoot issues. Simulating real-world scenarios reinforces theoretical knowledge and develops practical skills. Working with vSphere Client and vCenter Server interfaces allows candidates to perform tasks accurately and efficiently. Scenario-based practice helps candidates understand the operational impact of their actions and strengthens decision-making abilities.

Exam Preparation Strategies

A systematic approach to preparation improves the likelihood of success. Candidates should begin by studying the core concepts of vSphere architecture, virtual machine management, networking, storage, and performance monitoring. Creating a structured study plan that combines theoretical learning with practical exercises ensures comprehensive coverage of exam objectives. Reviewing scenario-based exercises and practicing troubleshooting tasks helps build confidence and operational proficiency. Time management during preparation allows candidates to progress steadily without becoming overwhelmed.

Integrating Knowledge

Success in the 2V0-21.20 exam requires integration of knowledge across multiple domains. Candidates must understand the relationships between virtual machines, clusters, storage, and networks. This holistic view enables professionals to make informed decisions that optimize performance, maintain stability, and ensure efficient resource utilization. Integrating theoretical knowledge with hands-on skills prepares candidates to handle complex operational scenarios and apply best practices effectively.

Maintaining Skills

Achieving the 2V0-21.20 certification is only the beginning. Maintaining proficiency requires continuous practice and engagement with virtualized environments. Candidates should regularly perform administrative tasks, monitor system performance, and troubleshoot issues to reinforce skills. Staying current with operational best practices and evolving technologies ensures ongoing competence and readiness to manage modern data center environments effectively.

Operational Competence

Certified professionals are equipped to manage virtual infrastructures, optimize performance, and maintain system stability. The 2V0-21.20 certification demonstrates the ability to deploy, configure, and maintain virtual machines, networks, and storage resources. Professionals are also prepared to implement preventive measures, anticipate potential issues, and respond proactively to operational challenges. This operational competence supports business objectives, enhances infrastructure reliability, and ensures efficient resource utilization.

Career Implications

Holding the 2V0-21.20 certification validates technical proficiency and operational readiness. It demonstrates that professionals possess the skills to manage vSphere environments effectively and implement solutions that enhance performance and reliability. Certified individuals are positioned for career growth, advancement, and opportunities to take on more specialized roles within data center operations. The credential provides recognition of expertise and practical competence in managing modern virtualization infrastructures.

Applying Best Practices

Candidates are expected to apply industry best practices in operational tasks. This includes configuring virtual machines efficiently, allocating resources strategically, and implementing monitoring procedures to maintain system performance. Understanding the impact of operational decisions on workloads and overall infrastructure ensures that virtual environments remain stable and scalable. Certified professionals can optimize processes, reduce downtime, and maintain high availability for critical business applications.

Practical Problem-Solving

Developing practical problem-solving skills is essential for the exam. Candidates should be able to assess situations, identify potential issues, and implement appropriate solutions within the virtualized environment. Practicing troubleshooting scenarios and analyzing system behavior enhances operational judgment and decision-making capabilities. These skills are crucial not only for the exam but also for daily management of virtual infrastructures.

Time Management During Preparation

Efficient time management is critical to successful preparation. Allocating consistent study periods and hands-on practice sessions allows candidates to cover all exam objectives thoroughly. Breaking down preparation into manageable tasks prevents overload and ensures steady progress. Adequate preparation time also allows candidates to review complex topics, practice scenario-based exercises, and refine troubleshooting skills, resulting in increased confidence and readiness for the exam.

Enhancing Operational Understanding

The 2V0-21.20 exam emphasizes understanding operational processes and their impact on the virtual environment. Candidates must comprehend how different configurations, resource allocations, and monitoring practices affect system performance. This understanding enables professionals to implement solutions that maintain stability, optimize resource usage, and ensure smooth operation of virtual infrastructures. Operational insight allows certified individuals to anticipate potential challenges and respond effectively.

Exam Confidence

Confidence comes from preparation and practical experience. Candidates who combine theoretical study with hands-on practice are better equipped to handle the variety of scenarios presented in the exam. Familiarity with vSphere tools, interfaces, and workflows allows candidates to navigate tasks efficiently, apply best practices, and demonstrate operational competence. This confidence reduces stress, improves focus, and contributes to successful exam performance.

Achieving Certification

Earning the 2V0-21.20 certification validates the ability to manage and optimize VMware vSphere environments. It reflects proficiency in deploying virtual machines, configuring networks and storage, monitoring performance, and troubleshooting operational issues. The certification also demonstrates a commitment to professional growth, operational excellence, and readiness to manage modern virtualized infrastructures effectively.

Continuous Improvement

Maintaining skills after certification is crucial for long-term success. Engaging regularly with virtual environments, monitoring performance, implementing optimizations, and troubleshooting issues ensures that certified professionals remain proficient. Continuous practice reinforces knowledge, enhances operational efficiency, and supports career advancement in virtualization and data center management.

The 2V0-21.20 certification serves as a benchmark for operational competence in VMware vSphere environments. Through comprehensive study, hands-on practice, and structured preparation, candidates can develop the skills needed to manage virtual infrastructures effectively. Certified professionals are equipped to deploy, monitor, and optimize virtual machines, configure networks and storage, troubleshoot issues, and apply best practices for operational efficiency and stability. This certification establishes a strong foundation for career growth and demonstrates readiness to meet the challenges of managing modern virtualized data centers.

Comprehensive Understanding of 2V0-21.20 Exam Objectives

The 2V0-21.20 exam evaluates a candidate’s proficiency in managing and operating VMware vSphere environments. It focuses on the ability to implement, configure, monitor, and troubleshoot virtual infrastructures, ensuring operational efficiency and reliability. Candidates are expected to demonstrate practical knowledge in deploying virtual machines, managing resources, configuring networking and storage, and applying best practices for performance and security. Mastery of these core concepts enables professionals to maintain stable, scalable, and highly available virtual environments.

Core Components of vSphere Environments

Understanding the architecture of VMware vSphere is fundamental for this exam. This includes familiarity with ESXi hosts, vCenter Server, clusters, datastores, resource pools, and virtual networks. Candidates must comprehend how these elements interact to deliver reliable, scalable services. Knowledge of host configuration, cluster management, and resource allocation ensures that workloads run efficiently without impacting performance. Recognizing dependencies among infrastructure components helps candidates troubleshoot effectively and make informed operational decisions.

Virtual Machine Lifecycle Management

Candidates should be adept at managing the lifecycle of virtual machines. This encompasses deploying, cloning, migrating, and configuring virtual machines to meet operational requirements. Understanding snapshots, templates, and resource allocation is crucial for optimizing performance and maintaining system flexibility. Monitoring resource consumption, adjusting CPU, memory, and storage allocations, and implementing policies to control performance are critical skills that demonstrate readiness to handle real-world challenges in virtualized environments.

Networking Fundamentals and Configuration

Networking is a critical area in the 2V0-21.20 exam. Candidates must understand virtual switches, port groups, VMkernel adapters, and network segmentation. Configuring redundancy, load balancing, and traffic management ensures efficient and reliable network connectivity. Troubleshooting network issues, monitoring traffic patterns, and optimizing configurations are essential skills. Candidates must also recognize how network setups affect virtual machine performance and overall resource distribution, which is key for maintaining operational stability.

Storage Management and Optimization

Storage configuration and management are vital to sustaining a healthy virtual infrastructure. Candidates should know how to create and manage datastores, allocate storage to virtual machines, and implement policies that improve performance and reliability. Understanding different storage types, such as SAN, NAS, and local storage, is essential for making informed provisioning and optimization decisions. Candidates should be able to monitor storage usage, resolve performance bottlenecks, and ensure that resources are allocated efficiently to support workloads.

Monitoring and Performance Analysis

Performance monitoring is integral to both exam preparation and practical application. Candidates must interpret metrics related to CPU, memory, network, and storage to identify potential issues. Detecting performance trends, analyzing anomalies, and implementing corrective actions are critical to maintaining a responsive and stable environment. Knowledge of monitoring tools and performance dashboards enables professionals to proactively manage infrastructure, ensuring that applications run efficiently and resources are optimally utilized.

Troubleshooting and Problem Resolution

Troubleshooting skills are essential for success in the 2V0-21.20 exam. Candidates should demonstrate the ability to analyze logs, interpret alerts, diagnose failures, and apply appropriate corrective measures. Understanding common error scenarios and being able to resolve them efficiently ensures that operations remain uninterrupted. Developing structured problem-solving approaches, identifying root causes, and implementing preventive measures contribute to long-term operational reliability and demonstrate practical competence in managing vSphere environments.

Security and Access Management

Security management is a critical component of virtual infrastructure operations. Candidates must understand role-based access controls, permissions, authentication mechanisms, and policy enforcement. Implementing these measures ensures that virtual machines and resources are protected against unauthorized access and misconfigurations. Knowledge of backup strategies, redundancy planning, and disaster recovery procedures enhances overall resilience. Professionals must also consider security implications when configuring networks, storage, and clusters to maintain a secure and compliant environment.

Hands-On Experience and Lab Exercises

Practical experience is indispensable for mastering the objectives of the 2V0-21.20 exam. Candidates should engage in lab exercises that replicate real-world scenarios, such as deploying virtual machines, configuring clusters, implementing resource management policies, and troubleshooting operational issues. Hands-on practice allows candidates to explore interfaces, tools, and workflows, reinforcing theoretical understanding and building operational confidence. Scenario-based exercises develop problem-solving abilities and help candidates understand the real-life implications of their configuration choices.

Structured Study and Time Management

A methodical approach to preparation is vital for success. Candidates should create a structured study plan that balances theoretical learning with practical exercises. Reviewing core concepts, performing hands-on tasks, and simulating real-world scenarios ensures comprehensive coverage of exam objectives. Time management is crucial; dedicating consistent study sessions helps maintain focus, prevents last-minute cramming, and allows for thorough understanding of each topic. Prioritizing complex subjects and repeatedly practicing key tasks reinforces knowledge and builds readiness for the exam.

Integration of Knowledge Across Domains

The 2V0-21.20 exam requires candidates to integrate knowledge across multiple domains. Understanding how virtual machines, clusters, storage, and networking interact allows professionals to make informed decisions that optimize performance, ensure stability, and prevent resource conflicts. This integrated understanding is essential for troubleshooting complex issues, planning capacity, and implementing operational best practices. Candidates must be able to apply these skills in scenario-based questions that test both knowledge and practical application.

Operational Proficiency and Best Practices

Candidates are expected to demonstrate operational proficiency by applying best practices in resource management, performance monitoring, and system optimization. This includes implementing policies to ensure consistent performance, maintaining high availability, and preventing potential issues. Professionals must be able to evaluate the impact of configuration changes, anticipate operational challenges, and implement solutions that maintain system integrity. Applying best practices enhances the reliability, scalability, and efficiency of the virtual environment.

Scenario-Based Problem Solving

Scenario-based problem solving is a central component of the exam. Candidates must analyze situations, identify potential issues, and implement effective solutions. Practicing troubleshooting scenarios and understanding the operational consequences of configuration decisions strengthens problem-solving skills. Candidates must be able to address unexpected challenges, apply corrective measures, and maintain optimal performance across all infrastructure components. Developing these abilities ensures preparedness for both the exam and real-world operational tasks.

Reinforcing Practical Skills

Continuous engagement with practical tasks reinforces theoretical knowledge and builds confidence. Candidates should regularly perform configuration changes, monitor system performance, and troubleshoot issues to solidify their understanding. Simulating operational scenarios enables professionals to refine workflows, optimize resource allocation, and develop strategies to handle complex situations. Reinforced practical skills translate to improved exam performance and operational readiness.

Analytical Thinking and Decision Making

The 2V0-21.20 exam evaluates a candidate’s ability to apply analytical thinking and make informed decisions in a virtual environment. Candidates must assess system performance, anticipate resource constraints, and implement strategies to resolve issues efficiently. Developing strong decision-making skills allows professionals to maintain stability, optimize performance, and respond proactively to operational challenges. Analytical thinking is essential for navigating scenario-based questions and managing real-world virtual infrastructures.

Exam Readiness and Confidence

Confidence during the exam is built through preparation, hands-on practice, and understanding of exam objectives. Familiarity with VMware tools, interfaces, and operational tasks enables candidates to approach the exam methodically and efficiently. Confidence reduces stress, enhances focus, and improves the ability to handle complex scenarios. By combining theoretical knowledge with practical experience, candidates position themselves for success.

Certification Impact

Achieving the 2V0-21.20 certification demonstrates operational competence in managing vSphere environments. Certified professionals are recognized for their ability to deploy, configure, and optimize virtual machines, networks, and storage resources. The certification reflects mastery of troubleshooting techniques, performance monitoring, and application of best practices. It validates a professional’s capability to maintain a reliable, scalable, and efficient virtual infrastructure, supporting career growth and advancement in virtualization and data center management.

Continuous Skill Development

Maintaining proficiency after certification is essential for long-term success. Engaging with virtual environments, monitoring performance, implementing optimizations, and troubleshooting operational issues ensures ongoing competence. Continuous practice reinforces knowledge, enhances operational efficiency, and prepares professionals for evolving challenges in virtual infrastructure management. Regular skill development strengthens confidence, supports advanced roles, and solidifies the foundation for future certifications and professional growth.

Operational Excellence

Certified professionals possess the knowledge and skills to ensure operational excellence in virtualized environments. This includes optimizing resource allocation, maintaining high availability, implementing security measures, and applying best practices in performance monitoring. Operational excellence requires continuous analysis, proactive maintenance, and informed decision-making. Professionals equipped with these capabilities contribute to efficient, reliable, and resilient virtual infrastructures that support organizational objectives.

Preparation for the 2V0-21.20 exam involves a combination of theoretical study, practical exercises, and scenario-based problem solving. Candidates must develop a deep understanding of vSphere components, operational workflows, resource management, and troubleshooting strategies. Structured preparation, hands-on experience, and continuous skill development ensure readiness to succeed. Achieving the certification validates technical expertise, demonstrates operational competence, and positions professionals for continued growth and success in managing VMware virtual environments.

Comprehensive Overview of 2V0-21.20 Exam Preparation

The 2V0-21.20 exam is designed to assess a professional’s ability to operate and manage VMware vSphere environments effectively. This exam measures not only theoretical knowledge but also the practical skills needed to maintain, configure, and troubleshoot virtual infrastructures. Candidates are expected to demonstrate a clear understanding of virtual machine management, resource allocation, networking, storage, and overall system monitoring. Successfully completing this exam proves a candidate’s capability to manage a stable, scalable, and efficient virtualized data center environment.

Deep Understanding of vSphere Architecture

A strong grasp of vSphere architecture is essential for the 2V0-21.20 exam. Candidates should understand the role and interaction of ESXi hosts, vCenter Server, clusters, datastores, and resource pools. Knowing how these components interact is crucial to maintaining operational stability. Candidates must comprehend cluster configuration, host management, and the allocation of resources to virtual machines to avoid bottlenecks and ensure efficient workload distribution. Recognizing dependencies and relationships within the infrastructure enables effective troubleshooting and decision-making.

Virtual Machine Management and Optimization

Managing virtual machines involves more than deployment; it includes lifecycle management such as cloning, migration, template utilization, and resource adjustments. Candidates must be able to optimize virtual machines by allocating CPU, memory, and storage resources effectively. Knowledge of snapshots, performance monitoring, and operational best practices ensures that virtual machines run efficiently and reliably. Monitoring resource utilization and making timely adjustments prevents performance degradation and supports a balanced, optimized environment.

Networking Configuration and Best Practices

Networking is a core component of operational success in vSphere environments. Candidates should be able to configure virtual switches, port groups, VMkernel adapters, and implement network segmentation strategies. Ensuring redundancy, implementing load balancing, and monitoring network traffic are critical for maintaining reliable connectivity. Professionals must also be capable of troubleshooting network issues and understanding how network design affects the performance and stability of virtual machines. Knowledge of networking best practices ensures seamless operation within complex environments.

Storage Management and Policy Implementation

Effective storage management is vital for system stability and performance. Candidates are expected to create, configure, and manage datastores while allocating storage resources to virtual machines efficiently. Understanding different storage technologies and their implications on virtual workloads allows professionals to make informed provisioning decisions. Monitoring storage usage, implementing policies, and resolving performance issues are essential skills. Proper storage management ensures high availability, reliability, and optimized performance across the virtual infrastructure.

Performance Monitoring and Analysis

Candidates must demonstrate the ability to monitor system performance comprehensively. Analyzing metrics for CPU, memory, network, and storage utilization allows professionals to detect potential issues and apply corrective measures. Understanding performance trends and capacity planning helps maintain optimal system responsiveness. Knowledge of monitoring tools and techniques enables candidates to proactively manage workloads, identify bottlenecks, and ensure resources are efficiently utilized to support business operations.

Troubleshooting Methodologies

Troubleshooting is a significant focus of the 2V0-21.20 exam. Candidates should be adept at identifying, analyzing, and resolving issues within the vSphere environment. Skills include interpreting logs, responding to alerts, diagnosing failures, and applying corrective actions. Candidates must approach problem resolution systematically, applying best practices to prevent recurrence. Developing these skills ensures operational continuity and demonstrates practical competence in maintaining virtual infrastructures.

Security and Access Control

Security management is integral to maintaining a stable virtual environment. Candidates should understand role-based access control, user permissions, authentication methods, and policy enforcement. Implementing these security measures ensures that virtual machines and data remain protected. Professionals should also understand backup strategies, redundancy, and disaster recovery planning. Applying security best practices reduces risk and ensures compliance with organizational standards, safeguarding both resources and operational integrity.

Hands-On Experience and Practical Application

Practical experience is crucial for mastering the 2V0-21.20 exam objectives. Engaging in lab exercises allows candidates to deploy virtual machines, configure clusters, optimize resources, and troubleshoot operational issues. Hands-on practice reinforces theoretical knowledge and builds confidence in managing complex environments. Working through real-world scenarios enhances problem-solving abilities and prepares candidates to apply their knowledge effectively during the exam and in professional settings.

Structured Study Approach

A systematic approach to preparation increases the chances of success. Candidates should combine theoretical learning with practical exercises in a structured study plan. Reviewing concepts such as cluster configuration, resource management, network setup, and storage optimization ensures coverage of all exam domains. Allocating dedicated study time and focusing on scenario-based exercises helps candidates retain information and develop operational competence. Consistent study habits prevent last-minute cramming and support effective learning.

Integration of Skills Across Domains

The 2V0-21.20 exam tests a candidate’s ability to integrate knowledge across multiple domains. Understanding how virtual machines, clusters, storage, and networking interact allows professionals to optimize performance and maintain system stability. Candidates must apply integrated knowledge to troubleshoot complex issues, plan capacity, and implement best practices. This holistic approach ensures that operational decisions support overall infrastructure efficiency and reliability.

Operational Excellence and Best Practices

Candidates are expected to demonstrate operational excellence through the application of best practices in configuration, monitoring, and performance optimization. Proper resource allocation, implementation of redundancy, and proactive monitoring ensure the stability of virtual environments. Candidates must be able to anticipate potential challenges and apply solutions that maintain high availability and consistent performance. Following best practices is essential for efficient management and long-term operational success.

Scenario-Based Problem Solving

Scenario-based problem-solving is a key skill assessed in the exam. Candidates must analyze situations, identify potential issues, and implement appropriate solutions. Practicing troubleshooting exercises and evaluating operational consequences of configuration decisions strengthens problem-solving abilities. Effective scenario-based responses demonstrate practical readiness and the ability to handle real-world challenges in virtualized environments.

Reinforcing Practical Skills

Continuous hands-on practice reinforces theoretical knowledge and enhances operational confidence. Performing regular administrative tasks, monitoring system performance, and resolving simulated issues help solidify understanding. Practical exercises provide insight into the real-world effects of configuration choices and resource allocation, enabling candidates to make informed operational decisions and refine workflow management.

Analytical Thinking and Decision-Making

Analytical thinking and informed decision-making are critical for success. Candidates must assess performance data, anticipate resource limitations, and implement solutions that optimize system functionality. Effective decision-making ensures stability, efficiency, and reliability within virtual infrastructures. This capability is essential for both the exam and professional roles, where managing complex scenarios requires a combination of technical knowledge and operational judgment.

Exam Readiness and Confidence

Confidence during the exam comes from thorough preparation and practical experience. Familiarity with VMware tools, interfaces, and operational procedures allows candidates to navigate tasks efficiently. Confidence reduces stress, enhances focus, and improves the ability to tackle scenario-based questions. Combining theoretical knowledge with practical application ensures candidates can demonstrate operational competence under exam conditions.

Certification Benefits

The 2V0-21.20 certification validates a professional’s ability to manage VMware vSphere environments effectively. It confirms proficiency in deploying virtual machines, configuring networks and storage, monitoring performance, and troubleshooting operational issues. Achieving this credential signifies operational readiness, enhances career prospects, and demonstrates the capability to maintain efficient and resilient virtual infrastructures.

Continuous Skill Development

Maintaining proficiency after certification is essential. Professionals should continue to engage with virtual environments, monitor performance, implement optimizations, and resolve operational challenges. Continuous practice reinforces skills, ensures operational efficiency, and prepares individuals for evolving responsibilities in virtual infrastructure management. Regular skill development also supports career growth and readiness for advanced roles.

Operational Competence and Efficiency

Certified professionals are equipped to ensure operational competence by implementing resource management strategies, maintaining high availability, and applying security measures. They optimize performance, troubleshoot issues effectively, and adhere to best practices. Operational efficiency ensures that virtual environments remain reliable, scalable, and capable of supporting critical workloads without disruption.

Preparing for Long-Term Success

Preparation for the 2V0-21.20 exam extends beyond passing the test. Developing a deep understanding of vSphere components, operational workflows, and troubleshooting methodologies ensures readiness for professional responsibilities. Consistent practice, scenario-based learning, and integration of knowledge across domains contribute to long-term success. Achieving certification reflects mastery of virtualization concepts, operational skills, and the ability to manage modern data center environments effectively.

Strategic Operational Planning

Strategic planning is a vital component of certification readiness. Candidates must be able to evaluate infrastructure needs, forecast resource requirements, and implement scalable solutions. Effective planning minimizes downtime, optimizes resource utilization, and ensures that virtual environments can handle evolving workloads. Integrating planning skills with operational knowledge allows certified professionals to make informed decisions that enhance system reliability and performance.

Real-World Application and Problem Solving

Success in the 2V0-21.20 exam relies on applying knowledge to realistic operational challenges. Candidates should practice implementing solutions to simulated scenarios, configuring resources efficiently, and troubleshooting issues under controlled conditions. This hands-on experience ensures preparedness for the practical demands of managing virtualized environments and reinforces decision-making skills that are critical for long-term professional competence.

Commitment to Operational Excellence

Achieving the 2V0-21.20 certification demonstrates a commitment to operational excellence. Professionals are recognized for their ability to manage, optimize, and maintain virtual infrastructures effectively. Applying best practices, monitoring performance, and troubleshooting issues consistently contributes to high-performing environments. Certification validates a professional’s skill set, enhances credibility, and positions individuals for ongoing success in virtualization and data center management.

Preparing for Continuous Growth

Certification is a milestone, but continuous learning is essential. Engaging with evolving technologies, experimenting with advanced configurations, and keeping up with best practices ensures that skills remain current. Certified professionals who commit to continuous improvement are better equipped to handle complex virtual environments, address emerging challenges, and advance their careers in virtualization and infrastructure management.

The 2V0-21.20 certification establishes a strong foundation in VMware vSphere operations. Candidates develop practical skills, operational insight, and the ability to troubleshoot complex issues effectively. By integrating theoretical knowledge with hands-on experience, professionals are prepared to manage scalable, reliable, and high-performing virtual infrastructures. Continuous practice, strategic planning, and commitment to operational excellence ensure that certification holders maintain their competence and continue to advance in the field of virtualization.

Understanding the Scope of 2V0-21.20 Exam

The 2V0-21.20 exam evaluates the ability of professionals to manage, operate, and optimize VMware vSphere environments effectively. Candidates are tested on their understanding of virtual machine lifecycle management, cluster configuration, resource allocation, storage and network setup, and system performance monitoring. Mastery of these areas ensures that certified individuals can maintain a scalable, reliable, and high-performing virtual infrastructure. Exam preparation requires not only theoretical knowledge but also practical experience to handle operational challenges efficiently.

Virtual Machine Deployment and Management

A central aspect of the exam is managing virtual machines within vSphere environments. Candidates must demonstrate competence in deploying new virtual machines, configuring templates, cloning, migrating, and optimizing workloads. Resource allocation including CPU, memory, and storage is critical to ensure performance and efficiency. Understanding snapshots, resource reservations, and limits is necessary to prevent bottlenecks and maintain system stability. Effective management of virtual machines under various operational scenarios ensures that candidates can handle real-world infrastructure challenges.

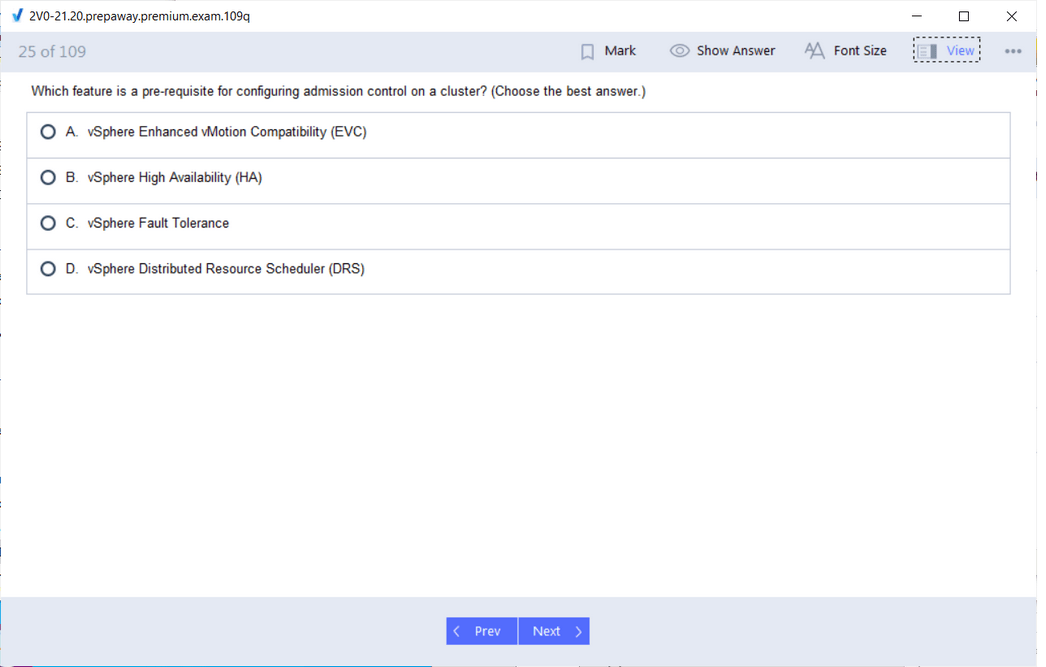

Cluster Design and Resource Management

Cluster configuration and management form a key component of the 2V0-21.20 exam. Candidates must understand the principles of high availability, distributed resource scheduling, and fault tolerance. Proper cluster setup ensures balanced workloads, efficient resource utilization, and redundancy in case of host failures. Knowledge of vSphere cluster features allows professionals to plan capacity, allocate resources dynamically, and maintain operational continuity. Applying best practices in cluster management is essential for achieving optimal performance and reliability in virtualized environments.

Networking Setup and Optimization

Networking knowledge is essential for managing vSphere environments. Candidates must be able to configure virtual switches, port groups, VMkernel adapters, and implement traffic management and redundancy. Proper network configuration ensures connectivity, performance, and fault tolerance for virtual machines. Understanding how network design impacts performance and security is critical for troubleshooting issues and planning network expansion. Candidates should also be familiar with monitoring network utilization, diagnosing traffic bottlenecks, and applying optimizations to support operational requirements.

Storage Configuration and Maintenance

Storage management is a vital part of the exam. Candidates must demonstrate skills in creating and managing datastores, provisioning storage to virtual machines, and optimizing storage performance. Understanding different storage types, implementing policies, and maintaining redundancy ensures data availability and system stability. Efficient storage allocation, monitoring usage, and resolving performance issues are critical for maintaining a responsive and reliable environment. Knowledge of storage best practices helps prevent disruptions and supports the efficient operation of virtual infrastructures.

Performance Monitoring and Troubleshooting

Monitoring and analyzing performance metrics is a core skill tested in the exam. Candidates must be able to interpret data for CPU, memory, storage, and network utilization to identify issues before they impact operations. Troubleshooting techniques include analyzing logs, diagnosing failures, and applying corrective measures. Scenario-based problem solving is emphasized to ensure candidates can address real-world operational challenges. Understanding how to balance performance across resources while maintaining system reliability demonstrates the ability to manage complex vSphere environments effectively.

Security and Access Control Management

Security and access control are essential for operational integrity. Candidates must understand role-based access controls, user permissions, authentication methods, and policy implementation. Effective security practices ensure that virtual machines and data remain protected from unauthorized access. Backup and disaster recovery strategies also play a role in maintaining operational continuity. Knowledge of security best practices helps prevent misconfigurations and ensures that infrastructure remains compliant and resilient.

Practical Application and Lab Experience

Hands-on practice is critical to mastering the 2V0-21.20 exam objectives. Candidates should engage in lab exercises that replicate operational scenarios, such as configuring clusters, managing virtual machines, allocating resources, and troubleshooting performance issues. Practical experience reinforces theoretical knowledge and helps candidates build confidence in handling real-world challenges. Regular practice with configuration changes, monitoring tools, and troubleshooting exercises strengthens operational skills and ensures readiness for both the exam and professional responsibilities.

Scenario-Based Operational Problem Solving

The exam emphasizes the ability to analyze scenarios, identify issues, and implement appropriate solutions. Candidates must practice solving problems that involve resource conflicts, performance bottlenecks, and configuration errors. Scenario-based exercises help develop decision-making skills and ensure that candidates can respond effectively under operational pressures. Understanding the impact of decisions across multiple domains, such as networking, storage, and compute resources, enhances a candidate’s ability to maintain a stable and efficient environment.

Analytical Thinking and Decision-Making Skills

Analytical thinking is essential for assessing performance, anticipating issues, and making informed operational decisions. Candidates must evaluate data from monitoring tools, interpret alerts, and plan corrective actions. Effective decision-making ensures that workloads are balanced, resources are utilized efficiently, and system reliability is maintained. The ability to apply analytical reasoning in practical situations demonstrates readiness to manage complex virtual infrastructures and meet operational objectives.

Exam Preparation Strategies

A structured approach to preparation is vital. Candidates should combine theoretical study with hands-on exercises to cover all exam domains comprehensively. Reviewing core concepts, performing practical tasks, and practicing scenario-based problem solving ensures familiarity with the types of questions and operational scenarios presented in the exam. Consistent study habits, time management, and focused practice build confidence and reinforce knowledge, ensuring candidates are well-prepared for exam challenges.

Integration of Operational Knowledge

Success in the 2V0-21.20 exam requires integrating knowledge across multiple operational domains. Candidates must understand how virtual machines, clusters, storage, and networking interact to support performance and stability. Integrated knowledge allows for informed troubleshooting, resource planning, and optimization. Professionals must be able to evaluate the overall environment and make decisions that maintain efficiency and reliability while minimizing risk.

Continuous Learning and Skill Reinforcement

Continuous engagement with virtual environments enhances proficiency. Candidates should regularly perform administrative tasks, monitor system performance, and resolve operational issues. Practicing with real-world scenarios helps reinforce theoretical knowledge and develop problem-solving capabilities. Ongoing skill reinforcement ensures that candidates remain prepared for evolving challenges and maintain competence in managing complex virtual infrastructures.

Operational Best Practices

Applying best practices in configuration, resource management, and monitoring is critical for achieving operational excellence. Candidates must understand how to implement redundancy, optimize performance, and ensure high availability. Proper application of best practices prevents operational failures and maintains system reliability. Certified professionals who adhere to these standards demonstrate the ability to manage virtual infrastructures efficiently and effectively.

Certification Benefits and Career Impact

Achieving the 2V0-21.20 certification validates a professional’s operational competence in managing vSphere environments. It demonstrates skills in deployment, configuration, performance monitoring, and troubleshooting. Certification enhances credibility, career prospects, and the ability to take on advanced responsibilities. Professionals with this certification are recognized for their ability to maintain stable, scalable, and high-performing virtual infrastructures.

Practical Readiness for Real-World Environments

Preparation for the exam ensures readiness for real-world operational challenges. Candidates who have practiced deploying virtual machines, configuring clusters, managing resources, and troubleshooting issues are better equipped to handle operational demands. Understanding the implications of configuration choices and being able to apply corrective measures in complex scenarios ensures that certified professionals can maintain a reliable and efficient virtual environment.

Strategic Infrastructure Planning

Strategic planning skills are tested through scenario-based questions and operational problem solving. Candidates must assess infrastructure requirements, forecast resource needs, and implement scalable solutions. Effective planning minimizes downtime, optimizes resource utilization, and ensures that virtual environments can handle evolving workloads. Integrating planning and operational knowledge allows professionals to maintain efficiency and resilience across the infrastructure.

Reinforcing Exam Concepts Through Practice

Regular hands-on practice strengthens understanding of exam objectives. Simulating operational scenarios, performing configuration tasks, and resolving performance issues provide insight into practical challenges. This practice develops problem-solving skills, reinforces theoretical concepts, and builds confidence in applying knowledge during the exam. Continuous engagement with practical exercises is essential for mastering the material and achieving certification.

Analytical Approach to Operational Challenges

Candidates must apply analytical thinking to evaluate infrastructure performance, identify bottlenecks, and plan corrective actions. The ability to assess multiple factors simultaneously and determine the best course of action demonstrates operational maturity. Analytical skills are essential for responding effectively to complex scenarios, both in the exam and in professional practice.

Ensuring Long-Term Operational Competence

Certification is a foundation for ongoing skill development. Professionals should continue to engage with virtual environments, monitor performance, and implement optimizations to maintain competence. Continuous learning ensures readiness for advanced responsibilities, evolving technologies, and emerging operational challenges. Maintaining operational competence supports career growth and establishes long-term expertise in virtualization management.

Comprehensive Scenario Analysis

The 2V0-21.20 exam requires candidates to integrate knowledge across domains to solve complex scenarios. Candidates must evaluate how configuration changes, resource adjustments, and network modifications affect overall system performance. Scenario analysis ensures that certified professionals can make informed decisions that maintain stability, performance, and efficiency. Practicing scenario analysis strengthens problem-solving skills and operational readiness.

Operational Efficiency and Reliability

Candidates must demonstrate the ability to maintain operational efficiency while ensuring reliability. Proper resource allocation, monitoring, and troubleshooting contribute to optimized performance. Certified professionals understand the importance of proactive maintenance, system redundancy, and performance tuning to prevent disruptions. Operational efficiency is a key indicator of readiness to manage production-level virtual environments.

Continuous Improvement and Skill Development

Even after certification, continuous improvement is essential. Professionals should regularly update their knowledge, practice new techniques, and explore advanced configurations. This commitment ensures that skills remain relevant, operational efficiency is maintained, and emerging challenges are addressed effectively. Continuous improvement supports long-term career development and strengthens expertise in virtual infrastructure management.

The 2V0-21.20 exam validates a professional’s ability to manage VMware vSphere environments effectively. It emphasizes practical skills, operational understanding, and scenario-based problem solving. By combining theoretical knowledge with hands-on practice, candidates can ensure readiness for the exam and professional success. Continuous skill development, strategic planning, and application of best practices enable certified professionals to maintain efficient, reliable, and scalable virtual infrastructures while advancing their careers in virtualization management.

Mastering Resource Management

Understanding how to manage and allocate resources is critical for maintaining a performant and resilient vSphere environment. Candidates preparing for the 2V0-21.20 exam must demonstrate proficiency in balancing CPU, memory, and storage resources across multiple virtual machines and hosts. Knowledge of resource pools, reservations, limits, and shares allows professionals to prioritize workloads effectively. Practical application involves monitoring resource usage, adjusting allocations dynamically, and identifying potential bottlenecks before they affect operations. Mastery of these concepts ensures that infrastructure remains scalable and efficient.

Advanced Virtual Machine Operations

Candidates should be able to handle complex virtual machine operations beyond basic deployment. This includes cloning, template creation, snapshot management, and virtual machine migration across hosts and clusters. Understanding the implications of each operation on performance, storage consumption, and network traffic is essential. Proper execution ensures minimal disruption to production workloads. Candidates are expected to apply operational best practices to maintain system stability while performing tasks that involve multiple layers of infrastructure components.

Cluster and High Availability Configuration

A significant portion of the 2V0-21.20 exam focuses on cluster design and configuration. Candidates need to understand features such as High Availability, Distributed Resource Scheduler, and Fault Tolerance. These features are critical for maintaining uptime, balancing workloads, and ensuring system resilience. Configuring clusters involves understanding host compatibility, workload distribution, and failover scenarios. Professionals must also plan for capacity expansion and maintenance without impacting availability, demonstrating their ability to manage large-scale virtual infrastructures efficiently.

Storage Provisioning and Management

Effective storage management is integral to virtual infrastructure operations. Exam candidates must be skilled in configuring datastores, assigning storage to virtual machines, and implementing storage policies that align with business requirements. Knowledge of different storage types, including shared and local storage, allows for optimized allocation and redundancy planning. Monitoring storage performance, identifying potential bottlenecks, and applying corrective actions are essential skills for ensuring data availability and supporting high-performance workloads.

Networking Fundamentals and Optimization

Networking plays a crucial role in virtualized environments. Candidates must understand virtual switch configuration, VMkernel setup, and network traffic segmentation. Proper network design ensures connectivity, redundancy, and efficient data flow across virtual machines and hosts. Candidates are expected to apply network monitoring tools, troubleshoot connectivity issues, and optimize traffic management. Knowledge of distributed switches and VLAN configuration supports complex scenarios involving multiple clusters or data centers, ensuring operational efficiency and system reliability.

Performance Monitoring and Tuning

Candidates should be proficient in using performance monitoring tools to assess CPU, memory, storage, and network utilization. Recognizing trends, identifying anomalies, and applying tuning measures are essential skills. The exam tests the ability to interpret metrics accurately, diagnose performance issues, and implement solutions that maintain system efficiency. Continuous monitoring and proactive adjustments ensure that workloads operate smoothly and that resources are used optimally.

Troubleshooting Complex Scenarios

Troubleshooting is a critical competency for the 2V0-21.20 exam. Candidates must demonstrate systematic approaches to identifying and resolving issues in virtual environments. This includes analyzing logs, identifying configuration errors, resolving performance bottlenecks, and addressing network or storage problems. Scenario-based questions require professionals to apply operational knowledge to real-world problems. Effective troubleshooting ensures minimal downtime and maintains the integrity of the virtual infrastructure.

Security and Access Controls

Candidates are expected to understand role-based access controls, user permissions, and authentication mechanisms within vSphere. Properly configuring security settings ensures that virtual machines and data are protected from unauthorized access. Knowledge of encryption, secure communication, and policy enforcement helps maintain operational integrity. Professionals must also implement backup and disaster recovery strategies to safeguard critical workloads and maintain business continuity.

Practical Lab Experience

Hands-on experience is essential for mastering the 2V0-21.20 exam objectives. Candidates should engage in lab exercises that simulate real-world scenarios, such as deploying clusters, configuring resource pools, migrating workloads, and troubleshooting issues. This practical exposure reinforces theoretical knowledge and builds confidence in performing operational tasks. Regular practice allows candidates to explore complex configurations, test system behavior, and refine their problem-solving skills.

Scenario-Based Problem Solving

The exam emphasizes the ability to analyze scenarios, determine root causes, and implement solutions. Candidates must practice addressing challenges that involve multiple interdependent components, such as resource contention, network latency, or storage constraints. Developing the ability to evaluate the impact of decisions across the environment ensures readiness for operational responsibilities. Scenario-based practice enhances critical thinking and prepares candidates for both the exam and professional practice.

Analytical Skills for Operational Efficiency

Analytical thinking is key for interpreting performance metrics, identifying potential issues, and planning corrective measures. Candidates must be able to assess multiple factors simultaneously and prioritize actions that optimize system performance. Applying analytical skills allows professionals to maintain balanced workloads, ensure redundancy, and prevent performance degradation. Proficiency in data analysis contributes to efficient resource management and informed decision-making.

Integration of Core Concepts

Success in the 2V0-21.20 exam requires integrating knowledge across compute, storage, network, and security domains. Candidates must understand how changes in one area impact others and make informed decisions to maintain system balance. This integrated approach ensures that infrastructure operates efficiently, scales effectively, and remains resilient against failures. Professionals who can combine theoretical knowledge with practical application demonstrate readiness to manage complex virtual environments.

Strategic Planning and Capacity Management

Exam candidates should be able to plan infrastructure capacity to meet current and future workload demands. Understanding resource forecasting, expansion planning, and high-availability considerations is essential. Strategic planning includes evaluating storage growth, network scalability, and cluster capacity to prevent resource shortages. Professionals must also account for maintenance windows and unexpected failures to ensure uninterrupted service delivery.

Continual Skill Development

Certification preparation extends beyond passing the exam; ongoing skill development ensures long-term operational competence. Candidates should maintain familiarity with evolving technologies, practice new configurations, and refine troubleshooting techniques. Continuous learning strengthens proficiency in managing virtual infrastructures, preparing professionals for emerging challenges and advanced operational responsibilities.

Operational Best Practices

Adhering to best practices in deployment, resource management, and monitoring ensures consistent performance and reliability. Candidates must be able to implement redundancy, optimize resource allocation, and maintain high availability. Applying standardized procedures reduces errors, minimizes downtime, and improves overall efficiency. Professionals who follow operational best practices can confidently manage enterprise-level virtual environments.

Benefits of Certification

Achieving the 2V0-21.20 certification validates a professional’s ability to operate, manage, and optimize VMware vSphere environments. Certification enhances credibility, demonstrates practical expertise, and opens opportunities for career advancement. Certified individuals are recognized for their ability to maintain high-performing, reliable, and scalable infrastructures, making them valuable assets in any IT organization.

Readiness for Real-World Operations

Exam preparation ensures that candidates can manage virtual infrastructures effectively in real-world scenarios. Hands-on practice, scenario analysis, and troubleshooting exercises develop the skills necessary to respond to operational challenges. Certified professionals are equipped to implement best practices, maintain performance, and ensure system reliability under various conditions.

Efficient Resource Utilization

Proper resource allocation and utilization is central to maintaining performance and cost efficiency. Candidates must understand how to balance workloads across hosts, optimize storage usage, and configure networks for maximum throughput. Efficient resource management prevents over-provisioning and ensures that critical applications have the necessary capacity to operate reliably.

Performance Optimization Techniques

Candidates should be familiar with techniques for optimizing CPU, memory, storage, and network performance. This includes load balancing, tuning resource allocation, and applying performance policies. Understanding the interactions between different components allows professionals to make informed adjustments that enhance system responsiveness and stability.

Continuous Monitoring and Improvement

Ongoing monitoring and evaluation are necessary to maintain optimal performance. Professionals must regularly review performance metrics, identify trends, and implement adjustments to prevent issues. Continuous improvement ensures that infrastructure remains scalable, resilient, and capable of supporting evolving workloads.

Preparing for Operational Challenges

The 2V0-21.20 exam emphasizes readiness for complex operational challenges. Candidates must be able to troubleshoot issues, optimize performance, and apply best practices in real-time scenarios. This preparation translates directly to professional competency, ensuring that certified individuals can maintain stable and efficient virtual environments.

Practical Decision-Making Skills

Effective decision-making is crucial when managing virtual environments. Candidates must evaluate the impact of configuration changes, resource adjustments, and troubleshooting actions on overall system performance. Developing practical decision-making skills ensures that professionals can respond to issues proactively and maintain operational continuity.

Reinforcement Through Hands-On Practice

Consistent hands-on practice reinforces knowledge and strengthens confidence in performing operational tasks. Candidates should simulate real-world scenarios, configure infrastructure components, and resolve performance challenges. This approach ensures comprehensive understanding and readiness for both the exam and professional responsibilities.

Maintaining Long-Term Competence

Certification is a foundation for continued expertise. Professionals must engage in ongoing practice, monitor performance, and apply optimizations to sustain operational competence. Continuous engagement with virtual environments prepares candidates for advanced responsibilities and emerging challenges, supporting long-term career growth.

Comprehensive Scenario Integration

The exam requires candidates to integrate knowledge across compute, storage, network, and security domains. Understanding how different components interact allows professionals to solve complex scenarios efficiently. Scenario integration ensures that certified individuals can make informed decisions that maintain system stability and performance.

Operational Resilience and Reliability

Maintaining operational resilience involves implementing redundancy, monitoring resource usage, and proactively addressing performance issues. Candidates must demonstrate the ability to maintain reliable infrastructure under varying workloads and conditions. Certified professionals are capable of ensuring high availability, minimizing downtime, and supporting business-critical applications effectively.

The 2V0-21.20 exam validates practical skills, operational understanding, and problem-solving capabilities in managing VMware vSphere environments. Through hands-on practice, scenario analysis, and comprehensive preparation, candidates can demonstrate readiness to operate complex virtual infrastructures. Certification confirms the ability to maintain high-performing, resilient, and efficient environments, supporting professional growth and long-term success in virtualization management.

Planning and Designing vSphere Infrastructure

A deep understanding of designing and planning vSphere environments is critical for the 2V0-21.20 exam. Candidates must be able to architect solutions that meet performance, scalability, and availability requirements. This includes designing clusters, resource pools, and storage configurations that align with business needs. Knowledge of host placement, VM distribution, and load balancing ensures that resources are optimized while minimizing potential conflicts. Professionals should also anticipate future growth and plan infrastructure accordingly, incorporating redundancy and failover strategies to maintain continuous operations.

Managing and Configuring Virtual Networks

Networking within a vSphere environment is a core competency. Candidates must understand the configuration of standard and distributed switches, VLANs, and VMkernel interfaces. Proper network design ensures efficient traffic flow, high availability, and minimal latency. Candidates should be familiar with troubleshooting connectivity issues, configuring NIC teaming, and implementing network policies that protect data integrity. Knowledge of network performance monitoring and optimization is essential to maintain a responsive virtual environment and support critical workloads effectively.

Advanced Storage Management

Storage management is a key area of expertise for the 2V0-21.20 exam. Candidates should be able to configure datastores, manage storage policies, and allocate storage efficiently across virtual machines. Understanding different storage types, including SAN, NAS, and local storage, allows professionals to select the right storage for different workloads. Skills in monitoring storage performance, implementing redundancy, and applying best practices in provisioning ensure that storage systems support high-performance operations and data availability.

Implementing High Availability and Fault Tolerance

High Availability and Fault Tolerance are essential for maintaining continuous service delivery. Candidates should be capable of configuring clusters to support failover, monitoring health status, and ensuring that workloads continue without interruption in the event of host failures. Understanding resource allocation within clusters, applying HA admission control, and managing VM restart priorities are critical for ensuring operational stability. Fault Tolerance configuration for critical virtual machines provides an additional layer of protection against downtime and data loss.

Resource Allocation and Optimization

Effective resource management is crucial for sustaining optimal performance. Candidates must demonstrate skills in allocating CPU, memory, and storage resources through resource pools, reservations, limits, and shares. Monitoring resource consumption and adjusting allocations proactively ensures that virtual machines operate efficiently. Techniques for identifying contention, rebalancing workloads, and optimizing performance are fundamental to maintaining a stable and scalable environment.

Backup, Recovery, and Data Protection

Ensuring data integrity through backup and recovery is a critical aspect of vSphere management. Candidates must understand snapshot management, backup solutions, and disaster recovery planning. Properly implemented backup strategies minimize data loss and reduce recovery time in case of failures. Candidates should be able to restore VMs, test recovery procedures, and validate that systems can recover from various failure scenarios. Knowledge of data protection policies and best practices is essential to maintain operational resilience.

Monitoring and Performance Analysis

Proficiency in monitoring tools and performance metrics is essential for identifying issues before they affect operations. Candidates must analyze CPU, memory, storage, and network usage, recognizing trends that may indicate potential problems. Applying corrective actions, tuning configurations, and optimizing resource allocation based on performance data ensures that workloads run efficiently. Skills in interpreting performance reports, identifying anomalies, and implementing improvements are crucial for maintaining a healthy virtual environment.

Troubleshooting Complex Scenarios

Troubleshooting requires a methodical approach to diagnose and resolve issues in a vSphere environment. Candidates must be able to analyze system logs, identify configuration errors, and address performance bottlenecks. This includes resolving network connectivity problems, storage access issues, and VM operational failures. Scenario-based problem solving tests the ability to apply knowledge in real-world situations, ensuring minimal disruption to services and maintaining system reliability.

Security Configuration and Compliance

Security management is an integral part of virtualization operations. Candidates should understand role-based access controls, user permissions, and authentication mechanisms within vSphere. Configuring secure communication channels, applying encryption, and enforcing policies protects workloads and data from unauthorized access. Knowledge of compliance requirements and security best practices ensures that virtual infrastructures adhere to organizational standards and maintain operational integrity.

Automation and Scripting

Automation reduces manual intervention and increases operational efficiency. Candidates should be familiar with automation tools, scripting, and task scheduling to manage repetitive operations. Using scripts to deploy VMs, configure settings, and monitor systems streamlines workflows and reduces the risk of human error. Proficiency in automation enables professionals to maintain consistency across environments, scale operations, and respond quickly to changing demands.

Scenario-Based Operational Management

The 2V0-21.20 exam emphasizes the application of knowledge in scenario-based scenarios. Candidates must analyze situations, make informed decisions, and implement solutions that address complex challenges. This includes balancing workloads, optimizing resource usage, and resolving conflicts across multiple infrastructure components. Scenario practice develops critical thinking and prepares professionals for real-world operational responsibilities.

Integration of Core Concepts

Successful candidates integrate knowledge across compute, storage, network, and security domains to ensure cohesive operation. Understanding the interdependencies between components allows professionals to anticipate the impact of changes and maintain system stability. Integrated management ensures that virtual environments are efficient, resilient, and capable of supporting diverse workloads without degradation of service.

Capacity Planning and Growth Forecasting

Effective capacity planning ensures that infrastructure can accommodate current and future demands. Candidates should assess resource consumption, project growth, and implement scalable solutions. This includes evaluating host capacity, storage availability, and network throughput to prevent performance issues. Planning for growth ensures that the environment remains flexible, resilient, and able to support business requirements efficiently.

Hands-On Experience and Lab Exercises

Practical experience reinforces theoretical knowledge. Candidates should engage in lab exercises to configure clusters, deploy virtual machines, manage resources, and troubleshoot issues. Hands-on practice allows professionals to explore complex scenarios, test configurations, and develop confidence in operational tasks. Lab work is essential for developing the skills necessary to perform effectively in a professional vSphere environment.

Performance Optimization Strategies

Candidates must be skilled in tuning virtual environments for optimal performance. This includes adjusting CPU and memory allocations, optimizing storage access, and managing network traffic efficiently. Applying performance policies, monitoring system behavior, and making data-driven adjustments ensures that workloads operate smoothly and efficiently. Mastery of optimization strategies contributes to system reliability and user satisfaction.

Continuous Monitoring and Proactive Maintenance

Maintaining optimal performance requires continuous monitoring and proactive maintenance. Candidates must track resource usage, identify trends, and implement preventive measures to avoid issues. Proactive actions include updating software, validating backups, and addressing potential failures before they impact operations. Continuous monitoring ensures that the environment remains stable, responsive, and capable of supporting critical workloads.

Advanced Troubleshooting Techniques

Candidates must demonstrate advanced troubleshooting skills to address complex operational challenges. This includes diagnosing performance degradation, resolving storage or network issues, and handling unexpected system behavior. Advanced troubleshooting involves methodical analysis, root cause identification, and application of corrective measures to maintain service continuity and system integrity.

Operational Readiness and Scenario Testing

The exam requires candidates to demonstrate readiness for real-world operations through scenario-based testing. Practicing these scenarios ensures that candidates can handle unexpected issues, optimize resources, and implement best practices under pressure. Scenario testing develops critical thinking, reinforces hands-on skills, and prepares professionals for managing enterprise-level virtual environments.

Decision-Making and Risk Management

Effective decision-making is essential for maintaining operational stability. Candidates must evaluate the potential impact of configuration changes, resource allocation adjustments, and troubleshooting actions. Understanding risk and implementing mitigation strategies ensures that the environment remains resilient and reliable. Decision-making skills are critical for responding to complex challenges and maintaining high availability.

Implementing Operational Best Practices

Candidates must apply best practices across deployment, configuration, monitoring, and troubleshooting activities. Standardized procedures improve efficiency, reduce errors, and enhance reliability. Professionals should implement redundancy, optimize resource usage, and ensure compliance with organizational policies. Following best practices ensures consistent performance and long-term stability of virtual infrastructures.

Long-Term Skill Development and Knowledge Retention

Certification preparation is the first step toward long-term competence. Candidates should engage in continuous learning, practice new configurations, and refine troubleshooting abilities. Maintaining up-to-date knowledge ensures that professionals remain effective in managing evolving environments, supporting emerging technologies, and addressing future operational challenges.

Conclusion

Preparing for the 2V0-21.20 exam requires comprehensive knowledge of vSphere infrastructure, resource management, networking, storage, and security. Candidates must integrate theoretical understanding with hands-on practice to develop operational competence. Mastery of scenario-based problem solving, performance optimization, and best practices ensures that certified professionals can manage complex virtual environments efficiently. Successful completion of the exam validates practical skills and readiness to maintain resilient, high-performing infrastructures, supporting career advancement and professional growth.

VMware 2V0-21.20 practice test questions and answers, training course, study guide are uploaded in ETE Files format by real users. Study and Pass 2V0-21.20 Professional VMware vSphere 7.x certification exam dumps & practice test questions and answers are to help students.

- 2V0-17.25 - VMware Cloud Foundation 9.0 Administrator

- 2V0-13.25 - VMware Cloud Foundation 9.0 Architect

- 2V0-21.23 - VMware vSphere 8.x Professional

- 2V0-16.25 - VMware vSphere Foundation 9.0 Administrator

- 2V0-72.22 - Professional Develop VMware Spring

- 2V0-11.25 - VMware Cloud Foundation 5.2 Administrator

- 2V0-41.24 - VMware NSX 4.X Professional V2

- 3V0-21.23 - VMware vSphere 8.x Advanced Design

- 5V0-22.23 - VMware vSAN Specialist v2

- 2V0-62.23 - VMware Workspace ONE 22.X Professional

- 2V0-31.24 - VMware Aria Automation 8.10 Professional V2

- 1V0-21.20 - Associate VMware Data Center Virtualization

- 3V0-42.20 - Advanced Design VMware NSX-T Data Center

- 2V0-33.22 - VMware Cloud Professional

- 5V0-62.22 - VMware Workspace ONE 21.X UEM Troubleshooting Specialist

- 2V0-51.23 - VMware Horizon 8.x Professional

- 2V0-11.24 - VMware Cloud Foundation 5.2 Administrator

Why customers love us?

What do our customers say?