CCA-500 Cloudera Certified Administrator for Apache Hadoop (CCAH) Dumps

Pass Cloudera CCA-500 Exam in First Attempt Guaranteed!

Get 100% Latest Exam Questions, Accurate & Verified Answers to Pass the Actual Exam!

30 Days Free Updates, Instant Download!

CCA-500 Premium File

- Premium File 60 Questions & Answers. Last Update: Jan 31, 2026

What's Included:

- Latest Questions

- 100% Accurate Answers

- Fast Exam Updates

All Cloudera CCA-500 certification exam dumps, study guides, and training courses are prepared by industry experts. PrepAway's ETE files provide the CCA-500 Cloudera Certified Administrator for Apache Hadoop (CCAH) practice test questions and answers, exam dumps, study guide, and training courses to help you study and pass hassle-free!

Key Insights into the Cloudera CCA-500 Exam for Aspiring Data Professionals

The Cloudera Certified Administrator for Apache Hadoop, also known as the CCA-500 exam, is a benchmark certification for professionals managing and administering Hadoop clusters. This credential is recognized globally as a measure of technical competence and practical expertise in Hadoop operations. Unlike theoretical certifications, the CCA-500 emphasizes hands-on skills, testing candidates on their ability to perform administrative tasks, troubleshoot issues, and optimize cluster performance in real-world scenarios. Achieving this certification validates a professional’s capacity to deploy, monitor, and manage Hadoop infrastructure effectively, making them valuable assets in data-driven organizations. The exam focuses on core areas such as HDFS, YARN, cluster planning, installation, resource management, monitoring, and logging, providing a comprehensive assessment of an administrator's capabilities.

Hadoop administration requires a deep understanding of distributed computing principles, data storage strategies, and operational processes. The CCA-500 exam evaluates a candidate's proficiency in configuring Hadoop clusters to ensure high availability, data integrity, and efficient resource utilization. Candidates are expected to demonstrate knowledge of fault tolerance mechanisms, security protocols, and performance optimization strategies. Mastery of these areas enables administrators to maintain operational continuity, scale resources efficiently, and implement solutions that align with organizational goals. The certification is particularly valuable for professionals working in enterprises where large-scale data processing, analytics, and storage reliability are critical for business operations.

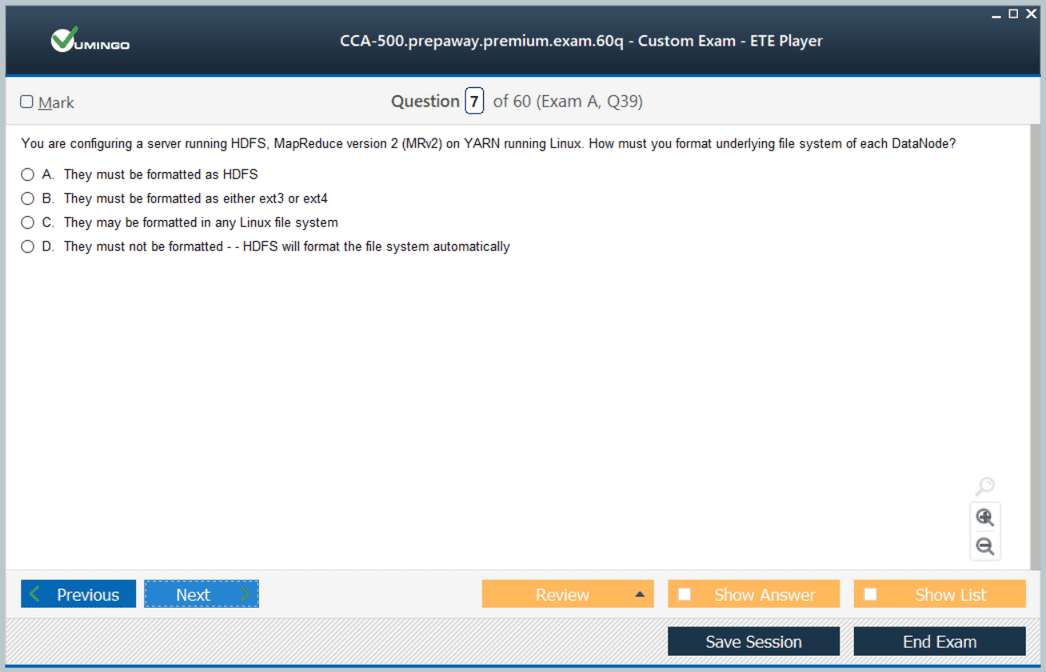

The exam format of CCA-500 includes multiple-choice and scenario-based questions designed to reflect practical challenges administrators encounter. Candidates must complete 60 questions within 90 minutes and achieve a passing score of 70 percent. The exam evaluates problem-solving skills, decision-making abilities, and technical knowledge, ensuring that certified professionals are capable of handling complex cluster management tasks. Preparing for the exam requires a combination of theoretical understanding and hands-on experience with Hadoop components, including HDFS, YARN, and the broader Hadoop ecosystem. By simulating real-world scenarios, candidates are assessed on their ability to apply knowledge effectively rather than simply recalling facts.

HDFS Administration and Operational Knowledge

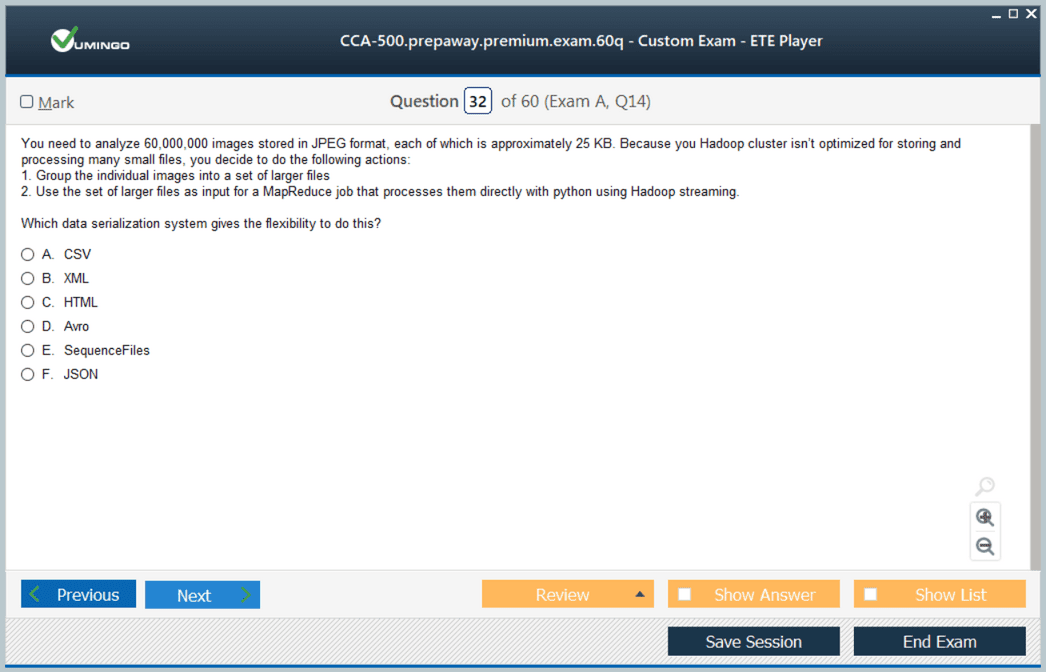

A critical component of the CCA-500 exam focuses on Hadoop Distributed File System administration. HDFS is the foundation of data storage in Hadoop clusters, and administrators must understand its architecture, data storage principles, and operational behavior. Candidates are expected to know the roles of HDFS daemons, file read and write paths, and how to manage high-availability clusters with multiple NameNodes. Understanding security features, including Kerberos authentication, is essential for maintaining data privacy and controlling access to sensitive information. Administrators must also be able to determine the best data serialization formats for different use cases and manage HDFS through command-line utilities.

Effective HDFS administration includes recognizing scenarios that require HDFS federation or HA-Quorum configurations. Candidates must assess cluster design based on operational requirements and identify strategies to optimize performance and resource utilization. Administrators also need to be adept at troubleshooting issues related to data replication, disk failures, and network latency. By mastering these areas, professionals ensure that clusters maintain high availability, data consistency, and resilience under various operational conditions. This knowledge forms the backbone of practical Hadoop administration and is a significant aspect of the CCA-500 certification.
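
As a rough illustration of why HA-Quorum deployments usually run an odd number of JournalNodes, the sketch below computes the majority needed to commit an edit and how many JournalNode failures an ensemble can absorb. The function name is made up for this example; the majority rule itself is how the Quorum Journal Manager decides whether a write succeeds.

```python
def journalnode_fault_tolerance(journal_nodes: int) -> dict:
    """Return the write quorum and tolerated failures for a JournalNode ensemble.

    Hypothetical helper for illustration only; it models the majority rule,
    not any Hadoop API.
    """
    if journal_nodes < 1:
        raise ValueError("need at least one JournalNode")
    quorum = journal_nodes // 2 + 1      # strict majority must acknowledge each edit
    tolerated = journal_nodes - quorum   # same value as (N - 1) // 2
    return {"quorum": quorum, "tolerated_failures": tolerated}


if __name__ == "__main__":
    for n in (3, 4, 5):
        print(n, journalnode_fault_tolerance(n))
```

Running it for 3, 4, and 5 nodes shows that a fourth JournalNode adds no extra failure tolerance over three, which is the usual argument for odd-sized ensembles.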

HDFS administration also requires understanding the interaction between storage components and the broader ecosystem. Candidates must be able to deploy supporting services and configure them to work seamlessly with HDFS. Managing large datasets involves knowledge of file manipulation commands, data ingestion, and replication strategies. Administrators must ensure efficient storage utilization while maintaining operational reliability. A deep understanding of these components enables certified professionals to design clusters that meet performance requirements and support enterprise-level analytics workloads efficiently.

YARN Resource Management and Job Scheduling

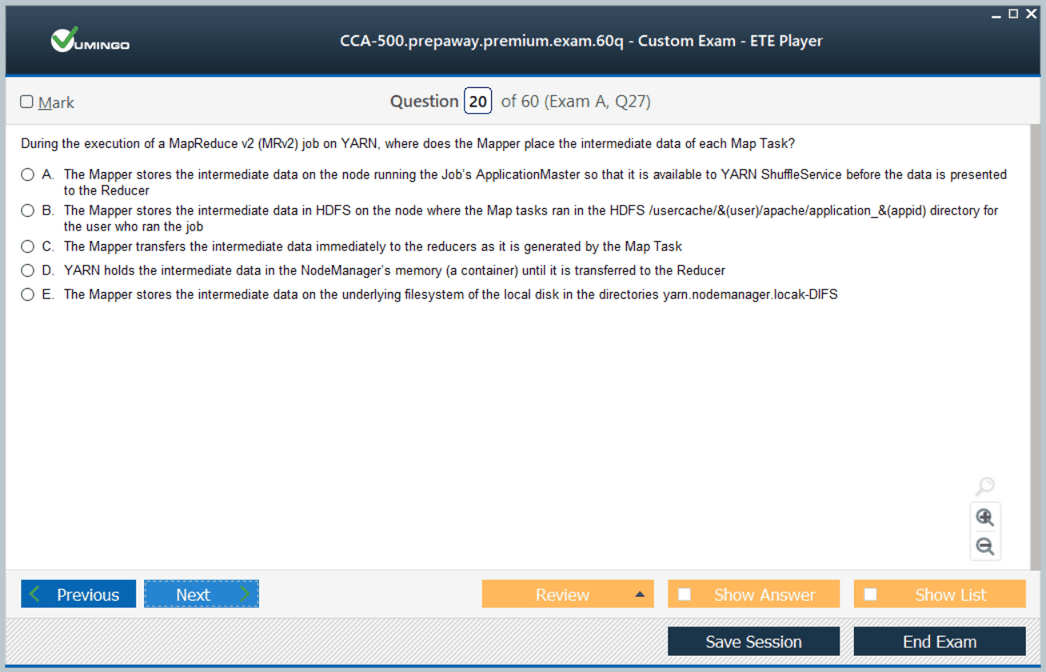

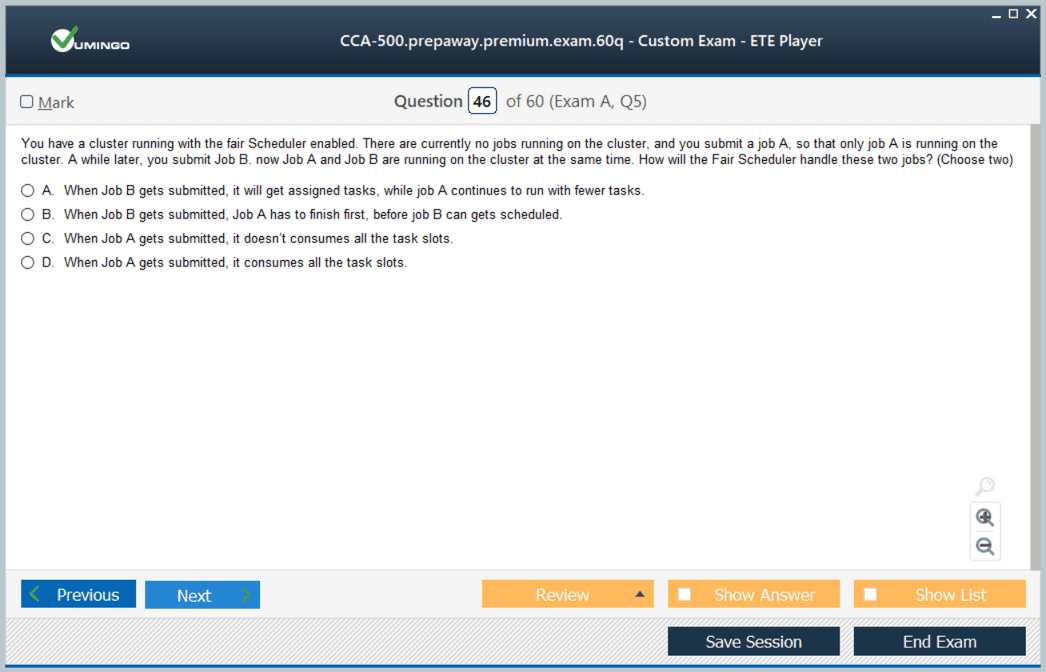

Another core focus of the CCA-500 exam is the administration of YARN, Hadoop’s resource management and job scheduling framework. YARN separates resource management and job scheduling from the data processing layer, allowing for greater scalability and more efficient resource utilization. Candidates are expected to deploy YARN daemons, understand job workflows, and manage resource allocation through schedulers such as the FIFO, Fair, and Capacity Schedulers. Knowledge of the MapReduce migration from MRv1 to MRv2 is also assessed, including the file and configuration changes needed to support this transition.

YARN administration emphasizes resource monitoring and optimization, ensuring that jobs are executed efficiently across the cluster. Administrators must understand how YARN allocates memory and CPU resources to applications and how to prioritize jobs under different workloads. Effective resource management reduces contention, minimizes latency, and enhances overall cluster performance. Candidates are also tested on their ability to integrate ecosystem components such as Spark, Hive, and Impala with YARN, ensuring smooth operation and resource sharing across diverse processing engines.
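
To make the memory and CPU allocation concrete, here is a minimal sketch of the arithmetic an administrator might do by hand. It assumes a simplified model in which a memory request is rounded up to a multiple of `yarn.scheduler.minimum-allocation-mb` and capped at `yarn.scheduler.maximum-allocation-mb`; real schedulers differ in detail (the Fair Scheduler, for instance, uses a separate increment setting). The function names and all numbers are hypothetical.

```python
import math


def normalize_request_mb(requested_mb: int, min_alloc_mb: int, max_alloc_mb: int) -> int:
    """Round a memory request up to a multiple of the minimum allocation, capped at the maximum.

    Simplified model for illustration, not the scheduler's actual code.
    """
    rounded = math.ceil(requested_mb / min_alloc_mb) * min_alloc_mb
    return min(max(rounded, min_alloc_mb), max_alloc_mb)


def containers_per_node(node_memory_mb: int, node_vcores: int,
                        container_mb: int, container_vcores: int) -> int:
    """How many identical containers fit on one NodeManager; the scarcer resource wins."""
    return min(node_memory_mb // container_mb, node_vcores // container_vcores)


if __name__ == "__main__":
    # Hypothetical node: 48 GiB and 16 vcores offered to YARN.
    size = normalize_request_mb(1500, min_alloc_mb=1024, max_alloc_mb=8192)
    print("container size (MB):", size)
    print("containers per node:", containers_per_node(49152, 16, size, 1))
```

In this made-up case memory would allow 24 containers but vcores allow only 16, so CPU is the limiting resource.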

Understanding YARN also involves assessing operational strategies for handling high-throughput environments. Candidates must design cluster configurations that balance resource allocation and task scheduling, optimizing performance while preventing bottlenecks. They must also be able to troubleshoot failed or delayed jobs by analyzing resource usage, container logs, and node health metrics. Mastery of these operational tasks ensures that certified administrators can maintain efficient, reliable, and scalable Hadoop clusters.

Cluster Planning and Hardware Considerations

Cluster planning is a critical aspect of the CCA-500 certification. Effective Hadoop administration requires selecting appropriate hardware, operating systems, and network configurations to support the intended workloads. Candidates must evaluate CPU, memory, storage, and network requirements, considering factors such as disk configurations (JBOD vs. RAID), virtualization, and SAN usage. Cluster sizing and workload analysis are essential to ensure that resources are allocated efficiently and that SLAs are met for data processing tasks.
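
A back-of-the-envelope sizing calculation can be sketched as follows. The replication factor of 3 matches the HDFS default; the 25 percent reserve for non-HDFS use (temporary and intermediate data, OS, logs) and the 48 TB of disk per node are assumptions chosen purely for illustration, and the function name is hypothetical.

```python
import math


def datanodes_needed(logical_tb: float, replication: int = 3,
                     non_dfs_reserve: float = 0.25,
                     usable_tb_per_node: float = 48.0) -> int:
    """Estimate the DataNode count needed to hold `logical_tb` of user data.

    Rough planning sketch: ignores compression, growth, and headroom targets.
    """
    raw_tb = logical_tb * replication                        # every block is stored `replication` times
    effective_per_node = usable_tb_per_node * (1 - non_dfs_reserve)
    return math.ceil(raw_tb / effective_per_node)


if __name__ == "__main__":
    print(datanodes_needed(500))  # 500 TB of logical data under the assumptions above
```

A real plan would also factor in expected growth and compression ratios, which this sketch deliberately leaves out.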

Administrators also need to assess operating system choices and configure kernel parameters for optimal performance. Disk I/O, memory management, and network throughput must be tuned to handle high-volume data processing and storage demands. Candidates are expected to design topologies that account for data locality, fault tolerance, and network efficiency, ensuring that large-scale clusters operate smoothly and reliably under various workloads. Hardware and system planning directly impact the cluster's scalability, reliability, and overall operational success, making this a significant component of the CCA-500 exam.

Proper cluster planning also involves anticipating future growth and designing clusters that can scale without major reconfigurations. Administrators must evaluate the types of workloads the cluster will support and adjust resource allocations accordingly. They must also consider redundancy, failover mechanisms, and high-availability configurations to minimize downtime. By demonstrating expertise in cluster planning, certified professionals prove their ability to design resilient, high-performing Hadoop environments suitable for enterprise needs.

Installation, Configuration, and Ecosystem Integration

The installation and configuration of Hadoop and its ecosystem components is another major domain of the CCA-500 exam. Candidates must demonstrate the ability to deploy core services such as HDFS, YARN, MapReduce, Spark, Hive, Impala, Pig, and Flume. Proper installation requires configuring daemon nodes, service parameters, and cluster-wide settings to ensure optimal operation. Administrators must also integrate these services to support unified workflows and data processing pipelines.

Configuration knowledge includes setting up monitoring tools, logging mechanisms, and security frameworks. Administrators need to know how to handle failures, implement backup strategies, and maintain cluster health during updates or changes. Integration of ecosystem components involves understanding interdependencies, data flow patterns, and resource sharing strategies. Effective configuration ensures seamless operation, enabling the cluster to support complex workloads while maintaining reliability and performance.

Monitoring, Logging, and Operational Maintenance

Monitoring and logging are essential for maintaining Hadoop clusters, and candidates must demonstrate competence in these areas for the CCA-500 exam. Administrators are expected to track metrics, monitor CPU and memory usage, analyze log files, and evaluate cluster health. Tools for monitoring the NameNode, the JobTracker (or the ResourceManager on YARN clusters), and other daemon processes provide visibility into operational performance and potential bottlenecks.

Effective monitoring enables proactive issue detection, helping administrators resolve problems before they impact cluster operations. Logging provides detailed insights into job execution, resource usage, and system events, which is crucial for troubleshooting and auditing. Administrators must also understand how to manage log retention, rotate logs, and interpret anomalies. Continuous monitoring and operational maintenance are critical for sustaining reliable, high-performance Hadoop environments.
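
As one small example of log analysis, the sketch below tallies log levels in daemon log lines. It assumes the common log4j layout of timestamp, level, logger name, then message; the sample lines are invented for this example, and a real cluster may use a different pattern.

```python
import re
from collections import Counter

# Assumed layout: "YYYY-MM-DD HH:MM:SS,mmm LEVEL logger.name: message"
LINE_RE = re.compile(
    r"^\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2},\d{3} (?P<level>[A-Z]+) (?P<logger>\S+): "
)

# Made-up sample lines, including a stack-trace continuation that should be skipped.
SAMPLE_LINES = [
    "2015-03-01 12:00:00,123 INFO org.apache.hadoop.hdfs.server.namenode.FSNamesystem: example message",
    "2015-03-01 12:00:01,456 WARN org.apache.hadoop.hdfs.server.datanode.DataNode: example warning",
    "2015-03-01 12:00:02,789 ERROR org.apache.hadoop.hdfs.server.datanode.DataNode: example error",
    "\tat org.example.Hypothetical.method(Hypothetical.java:42)",
    "2015-03-01 12:00:03,000 WARN org.apache.hadoop.hdfs.server.datanode.DataNode: another warning",
]


def count_levels(lines):
    """Count log levels, ignoring lines that do not start a new log record."""
    counts = Counter()
    for line in lines:
        match = LINE_RE.match(line)
        if match:
            counts[match.group("level")] += 1
    return counts


if __name__ == "__main__":
    print(count_levels(SAMPLE_LINES))
```

The same pattern can be pointed at a real log file by passing an open file object instead of the sample list.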

The Cloudera CCA-500 certification is a comprehensive assessment of Hadoop administration skills. Candidates are evaluated on HDFS, YARN, cluster planning, installation, resource management, monitoring, and ecosystem integration. Mastery of these domains ensures that certified professionals can design, deploy, and maintain Hadoop clusters efficiently. The certification validates practical, hands-on expertise, positioning administrators for leadership roles in enterprise data management, operational optimization, and big data analytics. Achieving the CCA-500 credential reflects a commitment to technical excellence and demonstrates the capability to manage complex Hadoop environments effectively, supporting organizational data objectives and operational resilience.

Advanced Hadoop Cluster Troubleshooting

For the CCA-500 exam, advanced troubleshooting is a critical skill that differentiates competent administrators from highly skilled ones. Administrators must be able to quickly diagnose cluster issues that arise due to hardware failures, network bottlenecks, or misconfigurations. Understanding log file structures, daemon interactions, and error patterns is essential for identifying root causes efficiently. Candidates must also be familiar with diagnostic tools that provide insights into cluster performance and health, enabling proactive issue resolution. Effective troubleshooting minimizes downtime, ensures data integrity, and maintains the reliability of distributed computing environments.

Troubleshooting also involves evaluating the behavior of HDFS under load, monitoring replication mechanisms, and ensuring that data consistency is preserved across all nodes. Administrators must be able to simulate failure scenarios, such as node crashes or disk corruption, and verify the cluster’s ability to recover without data loss. These exercises help candidates prepare for real-world challenges and demonstrate their practical proficiency in managing large-scale Hadoop deployments.

Performance Tuning and Optimization

Optimization of Hadoop clusters is a core competency for CCA-500 certification. Administrators must evaluate cluster performance metrics, identify bottlenecks, and implement tuning strategies for improved efficiency. Performance tuning encompasses resource allocation, job scheduling, and data storage optimization. Administrators analyze job execution patterns and determine appropriate memory and CPU assignments for YARN containers. They also adjust HDFS block sizes, replication factors, and storage configurations to enhance throughput and reduce latency.
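
To see how block size and replication factor interact, the following sketch estimates how many blocks and block replicas a single file produces. It uses the 128 MiB block size and replication factor of 3 that are the Hadoop 2 defaults; the function name is hypothetical, and the model ignores metadata and checksum overhead.

```python
import math

MIB = 1024 * 1024


def block_footprint(file_bytes: int, block_size_bytes: int = 128 * MIB,
                    replication: int = 3) -> dict:
    """Estimate blocks, replicas, and raw bytes for one file (illustrative model only)."""
    blocks = math.ceil(file_bytes / block_size_bytes)
    return {
        "blocks": blocks,                        # objects the NameNode must track
        "block_replicas": blocks * replication,  # replicas spread across DataNodes
        "raw_bytes": file_bytes * replication,   # a partial final block only uses its real size
    }


if __name__ == "__main__":
    one_gib = 1024 * MIB
    print(block_footprint(one_gib))
    print(block_footprint(one_gib, block_size_bytes=256 * MIB))
```

Doubling the block size halves the number of blocks the NameNode tracks for the same file, while the raw storage consumed stays the same.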

Optimization strategies extend to ecosystem components such as Spark, Hive, and Impala, ensuring that data processing jobs run efficiently without overutilizing cluster resources. Administrators must balance workload distribution across nodes, mitigate straggler tasks, and manage concurrent job executions to maintain consistent performance. Mastery of these techniques is critical for supporting high-demand enterprise workloads and ensures the cluster operates at peak efficiency.

Security and Access Management

Security is a crucial aspect of Hadoop administration and a significant portion of the CCA-500 exam. Administrators are expected to implement authentication, authorization, and encryption mechanisms to safeguard cluster data. Kerberos integration, secure communication channels, and role-based access controls are standard practices that protect against unauthorized access and potential breaches.

In addition to configuration, administrators must continuously monitor security policies, audit logs, and user activities to identify potential vulnerabilities. Proper security management ensures compliance with organizational policies and regulatory standards while protecting sensitive enterprise data. Candidates who demonstrate a comprehensive understanding of Hadoop security are equipped to maintain trustworthy and resilient cluster environments.

Ecosystem Component Integration

A Hadoop administrator must also manage the integration of ecosystem components with the core Hadoop framework. This includes configuring and maintaining tools such as Hive for data warehousing, Impala for interactive SQL queries, Pig for data processing, and Flume or Sqoop for data ingestion. Understanding dependencies, configuration nuances, and optimal deployment strategies is essential for ensuring seamless interaction between components.

Integration planning involves evaluating cluster resources, data access patterns, and workload types. Administrators must design solutions that maintain high availability, prevent resource contention, and support scalability. Proper ecosystem management enables efficient data workflows, reduces operational overhead, and enhances the reliability of enterprise data processing pipelines.

Cluster Scaling and High Availability

Enterprise clusters often need to scale to accommodate growing data volumes and increased processing demands. Administrators must plan for horizontal scaling by adding nodes or vertical scaling through hardware upgrades. High availability configurations, including HDFS NameNode failover and YARN ResourceManager redundancy, ensure continuous operation even during node failures or maintenance activities.

Scaling strategies also consider network topologies, disk configurations, and resource allocation policies. Administrators must anticipate workload growth and implement strategies to maintain performance while avoiding disruptions. Certification candidates are evaluated on their ability to design scalable, fault-tolerant clusters capable of meeting enterprise SLAs and supporting evolving data processing requirements.

Backup, Recovery, and Disaster Planning

Backup and recovery procedures are essential for minimizing data loss and ensuring business continuity. Administrators must implement snapshot strategies, replication policies, and disaster recovery protocols that align with organizational requirements. Regular testing of recovery procedures is critical to validate the effectiveness of backup strategies.

Disaster planning includes identifying critical cluster components, establishing recovery priorities, and defining procedures for different failure scenarios. Certified professionals must understand how to restore services quickly while preserving data integrity and operational continuity. Expertise in backup and recovery is a key differentiator for administrators managing large-scale enterprise Hadoop environments.

Monitoring, Alerting, and Proactive Maintenance

Proactive monitoring is vital for sustained cluster performance and reliability. Administrators utilize monitoring tools to track resource utilization, job performance, and node health. Alerts for abnormal behaviors, high resource consumption, or failed jobs enable rapid response before issues escalate.

Maintenance practices include routine checks, log analysis, performance assessments, and configuration audits. By maintaining visibility into cluster operations, administrators ensure that potential problems are addressed promptly, minimizing downtime and preserving service quality. Certification candidates demonstrate their ability to implement comprehensive monitoring and maintenance plans as part of their practical expertise.

Data Governance and Compliance

Data governance ensures that information stored and processed in Hadoop clusters meets organizational and regulatory standards. Administrators must implement access controls, auditing, and lineage tracking to maintain transparency and accountability. Proper governance practices facilitate compliance with legal requirements, industry standards, and internal policies.

Certified administrators also evaluate data lifecycle management strategies, including retention, archival, and deletion policies. Governance planning ensures that sensitive data is protected, data quality is maintained, and regulatory compliance is achieved without compromising operational efficiency. Knowledge of governance and compliance is essential for enterprise environments where data integrity and privacy are paramount.

Practical Exam Preparation and Real-World Application

Success in the CCA-500 exam relies on hands-on experience and practical application of concepts. Candidates should gain familiarity with deploying, configuring, and managing Hadoop clusters in lab environments. Practice includes troubleshooting scenarios, optimizing resource allocation, managing high availability, and integrating ecosystem components effectively.

Real-world application involves understanding enterprise data workflows, identifying performance bottlenecks, and implementing solutions that align with operational requirements. Candidates who can translate theoretical knowledge into practical administration skills are well-prepared to succeed in the exam and in professional Hadoop administration roles.

Career Implications and Professional Development

Achieving CCA-500 certification signals a high level of competency in Hadoop administration and positions professionals for advanced roles in enterprise data management. Certified administrators are equipped to manage large-scale clusters, optimize performance, and ensure operational resilience. Career opportunities include Hadoop system administrator, data operations manager, big data engineer, and technology consultant.

Professional development involves continuous learning to keep pace with evolving Hadoop versions, ecosystem tools, and emerging best practices. Administrators must stay updated on new features, optimization strategies, and security enhancements to maintain expertise. The certification demonstrates both technical proficiency and commitment to professional growth, providing long-term career benefits and recognition in the data management field.

Advanced HDFS Management Techniques

In the context of CCA-500 certification, mastery of Hadoop Distributed File System (HDFS) management is crucial. Administrators must have in-depth knowledge of HDFS daemons, including NameNode, DataNode, Secondary NameNode, and JournalNode, and understand their interactions within the cluster. Effective HDFS management involves monitoring storage utilization, ensuring replication factors are maintained, and balancing data across nodes to prevent hotspots. Administrators also need to understand HDFS Federation to scale large clusters efficiently and design storage strategies that align with enterprise data requirements.
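
The balancing idea can be illustrated with a short sketch: a DataNode counts as out of balance when its utilization differs from the cluster-wide utilization by more than a threshold, which is how the HDFS balancer's threshold option (10 percent by default) is defined. The node names and figures below are invented, and the function is a hypothetical helper rather than part of any Hadoop tool.

```python
def unbalanced_nodes(nodes: dict, threshold_pct: float = 10.0) -> dict:
    """Return nodes whose utilization deviates from the cluster average by more than the threshold.

    `nodes` maps a node name to a (used, capacity) pair in any consistent unit.
    """
    total_used = sum(used for used, _ in nodes.values())
    total_capacity = sum(capacity for _, capacity in nodes.values())
    cluster_pct = 100.0 * total_used / total_capacity

    deviations = {}
    for name, (used, capacity) in nodes.items():
        node_pct = 100.0 * used / capacity
        if abs(node_pct - cluster_pct) > threshold_pct:
            deviations[name] = round(node_pct - cluster_pct, 1)
    return deviations


if __name__ == "__main__":
    # Hypothetical three-node cluster, values in TB.
    print(unbalanced_nodes({"dn1": (90, 100), "dn2": (50, 100), "dn3": (40, 100)}))
```

Here the cluster sits at 60 percent overall, so the first node is over-utilized and the third under-utilized, while the second falls exactly on the threshold and is treated as balanced.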

File system operations, such as creating, reading, writing, and deleting files, must be executed with precision, ensuring minimal disruption to ongoing data processing tasks. Understanding the intricacies of HDFS file paths, permissions, and ownership is necessary to maintain secure and efficient data access. Administrators also assess the suitability of serialization formats for specific workloads, optimizing storage efficiency and access performance.
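
HDFS uses a POSIX-like permission model in which exactly one class (owner, group, or other) applies to a given user. The sketch below shows that evaluation order for read access. It is a simplified model that ignores the superuser and ACLs, and the user and group names are hypothetical.

```python
def mode_to_string(mode: int) -> str:
    """Render a numeric mode such as 0o750 as 'rwxr-x---'."""
    flags = "rwxrwxrwx"
    return "".join(flag if mode & (1 << (8 - i)) else "-" for i, flag in enumerate(flags))


def can_read(mode: int, owner: str, group: str, user: str, user_groups: set) -> bool:
    """Check read access using the owner, then group, then other class.

    Simplified: no superuser bypass and no ACL entries.
    """
    if user == owner:
        return bool(mode & 0o400)   # owner class applies, even if it grants less than group
    if group in user_groups:
        return bool(mode & 0o040)
    return bool(mode & 0o004)


if __name__ == "__main__":
    print(mode_to_string(0o750))
    print(can_read(0o750, "hdfs", "analysts", "alice", {"analysts"}))
    print(can_read(0o750, "hdfs", "analysts", "bob", {"ops"}))
```

Note that an owner is judged only by the owner bits, so a mode like 0o070 denies the owner read access even when the owner belongs to the file's group.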

YARN Resource Optimization

YARN is the backbone of Hadoop’s resource management, and understanding its architecture and operational dynamics is essential for the CCA-500 exam. Administrators must comprehend how the ResourceManager and NodeManagers coordinate to allocate cluster resources for running applications. Knowledge of scheduler types, such as FIFO, Capacity, and Fair Scheduler, allows administrators to optimize workload distribution based on priority and resource availability.
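
The intuition behind fair scheduling can be shown with a textbook max-min fairness calculation for a single resource. This is only a conceptual sketch: it is not the YARN Fair Scheduler's implementation, it ignores weights, minimum shares, and preemption, and the queue names and demands are invented.

```python
def max_min_fair_shares(capacity: float, demands: dict) -> dict:
    """Split `capacity` across queues so that no queue gets more than it asks for
    and leftover capacity is shared equally among still-unsatisfied queues."""
    shares = {queue: 0.0 for queue in demands}
    remaining = float(capacity)
    unsatisfied = {queue for queue, demand in demands.items() if demand > 0}

    while unsatisfied and remaining > 1e-9:
        equal = remaining / len(unsatisfied)
        # Queues whose outstanding demand fits inside an equal split are fully served.
        done = {q for q in unsatisfied if demands[q] - shares[q] <= equal}
        if not done:
            for q in unsatisfied:
                shares[q] += equal
            remaining = 0.0
        else:
            for q in done:
                remaining -= demands[q] - shares[q]
                shares[q] = float(demands[q])
            unsatisfied -= done
    return shares


if __name__ == "__main__":
    # Hypothetical cluster with 100 units of memory and three queues.
    print(max_min_fair_shares(100, {"etl": 20, "adhoc": 50, "ml": 70}))
```

The small queue receives everything it asks for, and the two larger queues split what is left evenly. A FIFO policy would instead serve queues strictly in arrival order.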

In practical scenarios, administrators analyze job execution workflows, identify bottlenecks, and adjust configurations to improve throughput. Migration from MapReduce version 1 to MapReduce version 2 requires careful planning to ensure compatibility with YARN and the seamless execution of legacy applications. Evaluating resource utilization patterns and tuning memory and CPU allocations is necessary to maintain cluster stability and efficiency.

Cluster Planning and Sizing Considerations

Effective Hadoop administration extends beyond day-to-day operations to strategic cluster planning. For the CCA-500 exam, administrators must evaluate hardware requirements, including CPU, memory, storage, and network infrastructure. Factors such as virtualization, disk configurations, and network topology significantly influence cluster performance and reliability.

Administrators must also plan for cluster growth by analyzing workload patterns, anticipated data volumes, and execution frequencies. Choosing between JBOD and RAID configurations, SANs, or local disks requires a thorough understanding of data access patterns and fault tolerance requirements. Additionally, kernel tuning and system-level optimizations play a significant role in achieving optimal cluster performance under diverse operational conditions.

Installation, Configuration, and Ecosystem Integration

Installing a fully functional Hadoop cluster and integrating ecosystem components is a critical area for CCA-500 certification. Administrators are expected to deploy and configure components such as Hive, Impala, Spark, Oozie, Flume, Sqoop, and Hue efficiently. Proper integration ensures seamless data flow, job execution, and operational stability across all cluster nodes.

Configuration tasks include setting up access control, defining storage paths, tuning performance parameters, and ensuring component interoperability. Administrators must validate that each ecosystem component aligns with the overall architecture, maintaining high availability and fault tolerance. Understanding deployment procedures, rollback strategies, and testing protocols ensures the cluster operates reliably from initial installation through ongoing operations.

Security Management and Compliance

Security management is a vital responsibility for Hadoop administrators. CCA-500 candidates must implement authentication and authorization mechanisms to protect sensitive enterprise data. Kerberos-based authentication, role-based access controls, and encrypted communication channels are standard practices for maintaining cluster security.

Monitoring and auditing are also crucial to detect unauthorized access, configuration changes, or anomalous behaviors. Administrators must establish logging and alerting mechanisms that comply with enterprise security policies and industry regulations. Integrating security considerations into daily cluster management ensures data integrity and protects the organization against potential cyber threats.

Performance Monitoring and Troubleshooting

Proactive performance monitoring is critical to sustaining high-performing Hadoop clusters. Administrators use tools and dashboards to track job execution times, node health, memory usage, disk utilization, and network latency. Continuous observation allows identification of potential bottlenecks and resource constraints before they affect cluster operations.

Troubleshooting involves analyzing log files, diagnosing daemon failures, resolving data node inconsistencies, and correcting misconfigurations. Administrators must also evaluate the impact of external factors, such as hardware failures, network disruptions, or software updates, on cluster performance. Being able to quickly restore services and minimize downtime demonstrates advanced operational competency expected of CCA-500 certified professionals.

High Availability and Disaster Recovery Planning

Ensuring continuous operation in large-scale Hadoop clusters requires planning for high availability and disaster recovery. Administrators configure NameNode failover, ResourceManager redundancy, and replication policies to protect against node failures and data loss. Disaster recovery strategies include regular snapshots, offsite backups, and procedures for rapid restoration of services.

Risk assessment is integral to these planning activities. Administrators must anticipate hardware failures, software bugs, and human errors, implementing contingencies that maintain operational continuity. This planning ensures that enterprise workloads are resilient to disruptions and meet organizational service level agreements.

Resource Management Strategies

Efficient resource management is essential to maximizing cluster performance. Administrators must understand the allocation and prioritization of CPU, memory, and disk I/O for running applications. Scheduling policies within YARN dictate how resources are distributed across jobs, and fine-tuning these policies improves efficiency and fairness in multi-tenant environments.

In complex enterprise setups, administrators often face competing workloads, requiring careful analysis of resource utilization and proactive adjustments. Optimizing resource allocation minimizes job delays, prevents system overloading, and maintains balanced cluster performance, which is a key component of professional competency in CCA-500 certification.

Ecosystem Component Optimization

Beyond core Hadoop administration, optimizing ecosystem components ensures efficient data processing pipelines. Spark and MapReduce jobs must be tuned for memory and executor configurations, while Hive queries benefit from partitioning and indexing strategies. Administrators also manage Impala configurations to support real-time queries and analytics efficiently.
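
When tuning Spark executors on YARN, it helps to remember that the container requested is larger than the executor heap. The sketch below assumes the overhead rule documented for recent Spark releases, the larger of 384 MiB or 10 percent of executor memory; older releases used different defaults, so treat the constants as assumptions. The function name is hypothetical.

```python
def executor_container_mb(executor_memory_mb: int,
                          overhead_factor: float = 0.10,
                          min_overhead_mb: int = 384) -> int:
    """Approximate the YARN container size requested for one Spark executor.

    Assumes overhead = max(min_overhead_mb, overhead_factor * executor memory).
    """
    overhead_mb = max(min_overhead_mb, int(executor_memory_mb * overhead_factor))
    return executor_memory_mb + overhead_mb


if __name__ == "__main__":
    for heap in (2048, 4096, 8192):
        print(heap, "->", executor_container_mb(heap))
```

If the resulting figure exceeds the scheduler's maximum container allocation, the executor request cannot be satisfied, which is a common cause of jobs that never start.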

Monitoring the interactions between ecosystem components and the core cluster infrastructure is essential for identifying inefficiencies. Adjustments may involve modifying job parameters, reconfiguring component settings, or balancing data distribution. Skilled administrators enhance cluster efficiency while reducing operational costs and maintaining service reliability.

Career and Skill Implications

Earning CCA-500 certification demonstrates advanced proficiency in Hadoop administration and positions professionals for critical roles in enterprise data management. Certified administrators are equipped to lead cluster design, deployment, optimization, and troubleshooting initiatives. Career opportunities include Hadoop system administrator, big data engineer, and data operations manager, among others.

Continuous professional development is necessary to remain current with Hadoop ecosystem updates, new components, and evolving best practices. CCA-500 certification signals technical expertise, strategic understanding, and the ability to maintain robust enterprise data environments, enhancing career prospects and industry recognition.

Advanced Security Configurations and Data Protection

Security in enterprise Hadoop clusters is not limited to basic authentication and access control. CCA-500 administrators must implement layered security mechanisms to protect data both at rest and in transit. Techniques such as HDFS transparent encryption (encryption zones), TLS/SSL for inter-node communication, and integration with enterprise identity providers enhance cluster security. Administrators configure audit logging to monitor user activities, detect anomalies, and maintain compliance with industry standards and internal policies. Implementing fine-grained authorization using tools like Apache Ranger or Apache Sentry ensures that users access only authorized datasets, reducing the risk of accidental or malicious data exposure.

Data protection extends to replication strategies and backup procedures. Administrators evaluate replication factors for critical datasets, ensuring high availability even during node failures. Snapshots and offsite backups provide a recovery mechanism in the event of catastrophic failures or data corruption. By incorporating automated monitoring of security events and proactive alerts, administrators can respond to threats swiftly, maintaining operational continuity and organizational trust.

Multi-Cluster Management and Scalability

Managing multiple Hadoop clusters requires careful orchestration of resources, consistent configuration management, and monitoring. CCA-500 certified professionals must be adept at planning cluster expansion, scaling out resources, and ensuring interoperability between clusters. Techniques for managing cross-cluster replication, data synchronization, and job scheduling across clusters are critical for large enterprises with distributed operations.

Administrators evaluate scalability requirements based on projected data growth, workload intensity, and operational goals. They also design strategies for horizontal scaling (adding nodes to clusters without causing downtime) and vertical scaling (upgrading the resources of existing nodes for increased capacity). Effective multi-cluster management ensures that data processing remains consistent, efficient, and reliable across distributed environments.

Disaster Recovery and Fault Tolerance Strategies

Enterprise Hadoop clusters must remain resilient in the face of hardware failures, software errors, and human-induced issues. Certified administrators develop disaster recovery plans that include automated failover, high-availability NameNode configurations, and replication across geographically distributed clusters. Understanding the limitations of the cluster architecture and anticipating potential points of failure allows administrators to implement robust fault tolerance measures.

Monitoring systems play a key role in disaster preparedness. Administrators configure real-time alerts for node failures, job errors, and abnormal system behavior. They also design fallback strategies for critical operations, ensuring that essential workflows continue with minimal disruption. A comprehensive approach to fault tolerance enhances the reliability and reputation of enterprise data operations.

Performance Tuning and Resource Optimization

Optimizing the performance of Hadoop clusters is a multifaceted challenge. CCA-500 administrators analyze CPU, memory, disk I/O, and network utilization to identify bottlenecks and implement performance improvements. Techniques such as workload balancing, JVM tuning for YARN and MapReduce tasks, and optimization of HDFS block sizes improve data processing efficiency.
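To illustrate why HDFS block size matters, the sketch below counts how many blocks a file occupies at different block sizes. It is a simplified illustration: the function name is hypothetical, and the 128 MiB default mirrors the common Hadoop 2 default for `dfs.blocksize`.

```python
MIB = 1024 * 1024


def block_count(file_size_bytes: int, block_size_bytes: int = 128 * MIB) -> int:
    """Number of HDFS blocks needed for a file (the last block may be partial)."""
    if file_size_bytes < 0 or block_size_bytes <= 0:
        raise ValueError("sizes must be non-negative and block size positive")
    # Integer ceiling division avoids floating-point rounding on large files.
    return -(-file_size_bytes // block_size_bytes)


if __name__ == "__main__":
    ten_gib = 10 * 1024 * MIB
    print(block_count(ten_gib))             # default 128 MiB blocks
    print(block_count(ten_gib, 256 * MIB))  # larger blocks, fewer of them
```

Fewer, larger blocks reduce NameNode metadata and, for splittable inputs under default settings, typically reduce the number of map tasks; many small blocks do the opposite.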

Administrators also focus on job-level optimization, tuning parameters for MapReduce, Spark, and Hive jobs to reduce execution time and resource consumption. Efficient scheduling policies in YARN, combined with proactive monitoring of cluster utilization, allow administrators to allocate resources dynamically and maintain optimal throughput. These performance strategies are critical for handling large-scale data operations without compromising cluster stability.

Ecosystem Component Coordination

The Hadoop ecosystem encompasses diverse components such as Hive, Impala, Pig, Spark, Oozie, and Flume. Certified administrators must ensure that these components are integrated seamlessly with the cluster, supporting complex workflows and enterprise data pipelines. Coordination involves configuring communication between components, tuning resource allocations, and ensuring compatibility across different versions.

Administrators monitor the performance of each component, address latency issues, and resolve conflicts that arise from shared resources. By understanding interdependencies, they can prevent job failures, optimize throughput, and maintain consistent data processing. This expertise ensures that enterprise workflows are reliable, scalable, and aligned with organizational objectives.

Monitoring and Operational Analytics

Continuous monitoring is essential for maintaining cluster health. CCA-500 certified administrators utilize dashboards, metrics, and logs to track the performance of HDFS, YARN, and ecosystem components. They monitor CPU, memory, disk usage, network activity, and job execution metrics to identify trends and anticipate potential issues.
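As a rough illustration of metric-based health checks, the sketch below inspects a JMX-style JSON payload of the kind a NameNode exposes. The sample payload, the threshold, and the function name are assumptions for illustration; the bean and attribute names are given as commonly seen in the FSNamesystem metrics and should be verified against the Hadoop version in use.

```python
# Bean name as commonly exposed by the NameNode (assumption: verify per version).
FS_BEAN = "Hadoop:service=NameNode,name=FSNamesystem"

# Hypothetical sample data, not output captured from a real cluster.
SAMPLE = {
    "beans": [
        {
            "name": FS_BEAN,
            "CapacityTotal": 1000,
            "CapacityUsed": 850,
            "MissingBlocks": 0,
            "UnderReplicatedBlocks": 12,
        }
    ]
}


def hdfs_warnings(jmx_payload: dict, max_used_pct: float = 80.0) -> list:
    """Return human-readable warnings derived from FSNamesystem metrics."""
    warnings = []
    for bean in jmx_payload.get("beans", []):
        if bean.get("name") != FS_BEAN:
            continue
        total = bean.get("CapacityTotal", 0)
        used = bean.get("CapacityUsed", 0)
        if total > 0:
            used_pct = 100.0 * used / total
            if used_pct > max_used_pct:
                warnings.append(f"HDFS capacity {used_pct:.1f}% used")
        missing = bean.get("MissingBlocks", 0)
        if missing > 0:
            warnings.append(f"{missing} missing blocks")
        under = bean.get("UnderReplicatedBlocks", 0)
        if under > 0:
            warnings.append(f"{under} under-replicated blocks")
    return warnings


if __name__ == "__main__":
    for warning in hdfs_warnings(SAMPLE):
        print(warning)
```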

Operational analytics involves interpreting collected data to optimize cluster configurations, forecast resource needs, and support capacity planning. Administrators also analyze historical patterns to refine scheduling policies, improve job prioritization, and enhance overall system responsiveness. Effective monitoring practices minimize downtime and ensure that clusters operate at peak efficiency.

Incident Response and Problem Resolution

Efficient incident response is a key competency for CCA-500 administrators. They must quickly diagnose failures, whether due to hardware, software, or configuration issues, and implement corrective actions. Tools for log analysis, cluster diagnostics, and job tracking enable administrators to pinpoint root causes and restore services promptly.
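Log analysis often starts with a simple tally of which daemons are reporting errors. The sketch below assumes the common log4j-style layout of date, time, level, and logger name followed by a colon; the sample lines are invented for illustration and the helper name is hypothetical.

```python
import re
from collections import Counter

# Assumed layout: "<date> <time> <LEVEL> <logger.class>: <message>"
LOG_LINE = re.compile(r"^\S+ \S+ (?P<level>[A-Z]+) (?P<source>[\w.$]+): ")


def count_problems(lines, levels=("ERROR", "FATAL")) -> Counter:
    """Count log lines per (level, logger) for the given severity levels."""
    counts = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match and match.group("level") in levels:
            counts[(match.group("level"), match.group("source"))] += 1
    return counts


# Hypothetical sample lines, not taken from a real cluster.
SAMPLE_LINES = [
    "2016-03-01 10:15:02,113 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: Receiving block",
    "2016-03-01 10:15:04,870 ERROR org.apache.hadoop.hdfs.server.datanode.DataNode: DataXceiver error",
    "2016-03-01 10:15:09,002 ERROR org.apache.hadoop.hdfs.server.datanode.DataNode: DataXceiver error",
    "2016-03-01 10:16:00,400 WARN org.apache.hadoop.yarn.server.nodemanager.NodeManager: Slow heartbeat",
]

if __name__ == "__main__":
    for (level, source), n in count_problems(SAMPLE_LINES).most_common():
        print(level, source, n)
```

Grouping by logger name points quickly at the daemon that needs attention, after which the individual messages and stack traces can be read in context.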

Problem resolution involves documenting issues, implementing preventive measures, and updating operational procedures. Administrators also collaborate with development teams to ensure that applications are optimized for cluster performance and reliability. Proactive incident management reduces operational risk and supports consistent service delivery.

Strategic Planning and Enterprise Alignment

CCA-500 certification emphasizes not only technical proficiency but also strategic alignment with organizational goals. Administrators evaluate cluster design, resource allocation, and operational processes to ensure that Hadoop deployments support business objectives efficiently. This involves aligning data storage strategies, processing workflows, and security policies with enterprise priorities.

Long-term planning includes capacity forecasting, budget considerations, and evaluating emerging technologies to enhance cluster capabilities. Administrators provide recommendations for upgrades, infrastructure expansion, and workflow optimization to maintain competitiveness and operational excellence. Strategic planning ensures that Hadoop clusters deliver measurable value to the organization.

Professional Development and Certification Implications

Achieving CCA-500 certification demonstrates comprehensive expertise in Hadoop administration, encompassing installation, configuration, management, security, performance optimization, and strategic oversight. Certified professionals are positioned to lead enterprise data initiatives, advise on cluster design, and implement best practices in large-scale data environments.

Continuous learning is vital due to the evolving nature of the Hadoop ecosystem. Administrators must stay updated on new components, configuration enhancements, and emerging trends in big data management. Certification serves as a benchmark of skill, providing recognition for advanced knowledge and operational competence. It also enhances career opportunities in system administration, big data engineering, and enterprise architecture, establishing professionals as trusted experts in Hadoop administration.

Operational Excellence and Industry Standards

Maintaining operational excellence requires adherence to industry standards and best practices. Certified administrators implement standardized procedures for deployment, monitoring, maintenance, and security management. They also benchmark cluster performance, audit configurations, and validate compliance with organizational policies and external regulations.

By institutionalizing these practices, administrators minimize risks, improve reliability, and ensure consistent service delivery across enterprise environments. The emphasis on standardization and best practices distinguishes certified professionals and reinforces the value of the CCA-500 certification in demonstrating technical mastery and professional credibility.

Advanced Troubleshooting and Problem Resolution

In the context of CCA-500 certification, advanced troubleshooting involves identifying root causes of complex failures within a Hadoop cluster and implementing effective corrective measures. Certified administrators develop a systematic approach to diagnose issues related to HDFS, YARN, and ecosystem components. By analyzing system logs, monitoring metrics, and reviewing job execution details, administrators can pinpoint problems ranging from hardware malfunctions to configuration errors or software bugs. This process includes isolating failing nodes, identifying bottlenecks, and validating network connectivity to ensure end-to-end cluster functionality.

Problem resolution is not limited to reactive measures; administrators also implement preventive strategies. They create automated monitoring alerts, establish failover mechanisms, and conduct routine audits to minimize the likelihood of recurring issues. By maintaining a comprehensive knowledge of cluster architecture and operational dependencies, CCA-500 certified professionals can ensure high availability, minimize downtime, and sustain optimal performance across large-scale Hadoop deployments.

Multi-Tenant Cluster Management

Enterprise environments often require multi-tenant clusters where multiple teams or departments share the same Hadoop infrastructure. Effective management of such clusters involves setting resource quotas, defining user roles, and isolating workloads to prevent resource contention. Certified administrators evaluate workload priorities, monitor resource consumption, and configure YARN schedulers to allocate memory and CPU (virtual cores) efficiently.
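One way to picture per-tenant resource shares is through Capacity Scheduler queues, whose capacities under a parent queue are expected to add up to 100 percent. The sketch below validates a set of shares and produces the corresponding property names; the tenant names and the helper function are hypothetical, and it only builds a dictionary rather than writing any configuration file.

```python
def capacity_properties(tenant_shares: dict) -> dict:
    """Map tenant -> percentage shares onto Capacity Scheduler property names."""
    total = sum(tenant_shares.values())
    if abs(total - 100.0) > 1e-6:
        raise ValueError(f"queue capacities sum to {total}, expected 100")
    # Child queues of root are listed in a single comma-separated property.
    props = {"yarn.scheduler.capacity.root.queues": ",".join(tenant_shares)}
    for queue, share in tenant_shares.items():
        props[f"yarn.scheduler.capacity.root.{queue}.capacity"] = str(share)
    return props


if __name__ == "__main__":
    # Hypothetical tenants sharing one cluster.
    shares = {"etl": 50, "analytics": 30, "adhoc": 20}
    for key, value in capacity_properties(shares).items():
        print(f"{key}={value}")
```

Validating the shares before deployment catches a common misconfiguration early, since a queue layout whose capacities do not sum correctly is rejected by the scheduler.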

Multi-tenancy also introduces security and privacy challenges. Administrators implement access control policies, enforce data isolation, and monitor activity to prevent unauthorized access. Auditing mechanisms track resource utilization and data access across tenants, ensuring compliance with organizational standards. Properly managing multi-tenant environments maximizes resource utilization, maintains operational fairness, and enhances the overall stability of enterprise Hadoop clusters.

Real-World Application Scenarios

CCA-500 certified administrators must understand how Hadoop clusters are applied in real-world enterprise scenarios. In financial services, administrators configure clusters to handle high-volume transaction data, ensuring low-latency processing, secure storage, and audit-ready reporting. In healthcare, Hadoop clusters manage patient records, enabling secure data sharing and analytics while complying with privacy regulations. Retail and e-commerce enterprises leverage Hadoop for large-scale customer analytics, inventory tracking, and supply chain optimization.

Administrators analyze business requirements, design data pipelines, and ensure that cluster configurations align with operational goals. By integrating ecosystem components such as Hive for structured data analysis, Spark for in-memory and near-real-time processing, and Oozie for workflow scheduling, they deliver end-to-end solutions tailored to specific enterprise needs. Understanding these real-world applications ensures that administrators can translate technical expertise into measurable business value.

Automation and Workflow Optimization

Automation plays a crucial role in managing complex Hadoop clusters efficiently. CCA-500 professionals implement automated workflows for job scheduling, resource allocation, and system maintenance. Tools like Oozie orchestrate data processing pipelines, while scripts and configuration templates standardize administrative tasks such as adding nodes, configuring replication, or updating software components.

Workflow optimization includes analyzing job execution patterns, identifying repetitive tasks, and streamlining processes to reduce manual intervention. Automated monitoring and reporting systems alert administrators to anomalies in real time, enabling proactive responses to potential disruptions. By leveraging automation, certified administrators enhance operational efficiency, reduce human error, and ensure consistent cluster performance.

Continuous Improvement and Capacity Planning

Enterprise Hadoop clusters experience evolving workloads and growing data volumes. Certified administrators conduct ongoing capacity planning, forecasting resource needs based on historical data usage and anticipated growth. They evaluate storage requirements, compute capacity, and network bandwidth, adjusting cluster configurations proactively to maintain performance.
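A capacity forecast of this kind can be sketched with compound growth and a target disk utilization. Everything in the example is an assumption chosen for illustration: the growth rate, node size, utilization target, and function names are hypothetical, and real planning would also account for temporary and intermediate data.

```python
import math


def projected_logical_tb(current_tb: float, monthly_growth_rate: float, months: int) -> float:
    """Logical data volume after compounding monthly growth."""
    return current_tb * (1 + monthly_growth_rate) ** months


def datanodes_needed(logical_tb: float, replication_factor: int,
                     usable_tb_per_node: float, target_utilization: float = 0.7) -> int:
    """DataNodes required so raw data stays under the target disk utilization."""
    raw_tb = logical_tb * replication_factor
    return math.ceil(raw_tb / (usable_tb_per_node * target_utilization))


if __name__ == "__main__":
    # Hypothetical inputs: 200 TB today, 5% monthly growth, 12-month horizon.
    future_tb = projected_logical_tb(200, 0.05, 12)
    print(round(future_tb, 2))
    print(datanodes_needed(future_tb, replication_factor=3, usable_tb_per_node=48))
```

Keeping a utilization target well below 100 percent leaves headroom for rebalancing, re-replication after node failures, and short-term ingest spikes.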

Continuous improvement also involves reviewing system metrics, analyzing bottlenecks, and implementing best practices for cluster optimization. Administrators explore advancements in Hadoop ecosystem components, new scheduling algorithms, and performance tuning techniques to enhance throughput and reduce latency. By maintaining a cycle of assessment, adjustment, and optimization, CCA-500 certified professionals ensure that clusters remain resilient, efficient, and aligned with enterprise objectives.

Collaboration and Knowledge Sharing

Effective cluster management requires collaboration with development teams, data engineers, and business analysts. Certified administrators serve as technical advisors, guiding the integration of data pipelines, optimizing job configurations, and ensuring compliance with operational standards. They document procedures, share troubleshooting knowledge, and contribute to organizational training programs to elevate the collective competency of teams working with Hadoop clusters.

Knowledge sharing also involves staying updated on emerging technologies, configuration best practices, and industry trends. Administrators participate in professional networks, workshops, and forums to exchange insights and strategies, enhancing the enterprise’s ability to leverage Hadoop for strategic advantage.

Strategic Oversight and Governance

Beyond technical management, CCA-500 certified administrators provide strategic oversight for enterprise Hadoop deployments. They evaluate operational workflows, security policies, and compliance measures to ensure alignment with organizational objectives. Governance practices include establishing standardized protocols for cluster provisioning, job scheduling, data retention, and audit readiness.

Administrators assess risk factors, implement disaster recovery plans, and establish escalation procedures for critical incidents. They also define success metrics for cluster performance, ensuring that Hadoop deployments deliver tangible value in terms of efficiency, reliability, and business outcomes. This strategic perspective elevates the role of administrators from technical operators to enterprise architects capable of guiding data-driven initiatives.

Emerging Trends and Technological Adaptation

Hadoop technology continuously evolves, introducing new components, integration options, and processing frameworks. CCA-500 certified professionals stay informed about these developments, evaluating how emerging tools can enhance existing clusters. Advances such as containerized Hadoop deployments, cloud-native integration, and real-time streaming analytics provide opportunities to improve flexibility, scalability, and operational efficiency.

Administrators adapt architectures to incorporate these technologies while maintaining stability, security, and performance standards. They also develop strategies for gradual adoption, testing new solutions in controlled environments, and ensuring that upgrades do not disrupt ongoing operations. This adaptability ensures that enterprises remain competitive in managing large-scale data operations effectively.

Leadership and Career Growth

Achieving CCA-500 certification positions professionals for leadership roles in data management and enterprise technology. Certified administrators not only manage Hadoop clusters but also guide organizational strategies for big data initiatives. Their expertise supports decision-making on infrastructure investments, workflow optimization, and technology adoption.

Career growth opportunities include roles such as senior Hadoop administrator, data platform architect, or enterprise data operations manager. Certified professionals are recognized for their ability to deliver high-value solutions, optimize complex environments, and lead technical teams. The credential reinforces professional credibility, providing a foundation for continued advancement in the dynamic field of big data administration.

The Cloudera Certified Administrator for Apache Hadoop (CCA-500) certification embodies a comprehensive understanding of Hadoop administration, covering installation, configuration, management, security, performance, and strategic oversight. Certified administrators develop the expertise to deploy enterprise-grade clusters, ensure operational reliability, optimize performance, and manage complex workflows. They are equipped to address security challenges, ensure compliance, and maintain high availability in multi-tenant, large-scale environments.

Through advanced troubleshooting, automation, capacity planning, and strategic governance, CCA-500 professionals enhance organizational efficiency and derive measurable value from Hadoop deployments. They remain adaptive to emerging technologies, continuously improving clusters and workflows to meet evolving business needs. By bridging technical mastery with strategic insight, certified administrators contribute to innovation, operational excellence, and the successful execution of enterprise big data initiatives.

Data Security and Compliance in Hadoop Clusters

In CCA-500 exam contexts, understanding data security and regulatory compliance is crucial for effective Hadoop administration. Certified administrators implement robust access control mechanisms to protect sensitive data from unauthorized access. This includes configuring Kerberos authentication, managing user roles, and defining permission hierarchies to ensure that only authorized personnel can interact with specific datasets. Encryption at rest and in transit is a standard practice, safeguarding data integrity across nodes and during network transfers.
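The permission hierarchy mentioned above follows a POSIX-like owner, group, and other model in HDFS. The sketch below is a simplified illustration of that evaluation order only: it ignores ACLs, the superuser, and Kerberos principal-to-user mapping, and the function name and the users in the example are hypothetical.

```python
def can_access(mode: int, owner: str, group: str,
               user: str, user_groups: set, want: str) -> bool:
    """Check a single permission ('r', 'w' or 'x') against an octal mode."""
    bit = {"r": 4, "w": 2, "x": 1}[want]
    if user == owner:
        triad = (mode >> 6) & 7   # owner bits apply
    elif group in user_groups:
        triad = (mode >> 3) & 7   # group bits apply
    else:
        triad = mode & 7          # everyone else
    return bool(triad & bit)


if __name__ == "__main__":
    # A directory with mode 750 owned by alice:finance (hypothetical).
    print(can_access(0o750, "alice", "finance", "bob", {"finance"}, "r"))
    print(can_access(0o750, "alice", "finance", "bob", {"finance"}, "w"))
    print(can_access(0o750, "alice", "finance", "carol", {"marketing"}, "r"))
```

Note that only one class of bits is consulted: an owner is judged by the owner bits even if the group bits would be more permissive.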

Regulatory compliance is equally important, especially for industries like finance, healthcare, and government. Administrators establish audit trails, monitor data access, and generate logs for reporting purposes. These measures support compliance with internal policies and external regulations, ensuring that enterprise data handling meets legal and ethical standards.

Cluster Performance Monitoring and Optimization

Certified administrators are responsible for continuous performance monitoring of Hadoop clusters. Using built-in metrics and monitoring tools, they track resource usage across CPU, memory, disk I/O, and network bandwidth. By analyzing these metrics, administrators can identify bottlenecks, optimize job scheduling, and adjust resource allocation dynamically to maintain cluster efficiency.

Optimization strategies include configuring YARN schedulers to balance workloads, tuning HDFS parameters to improve data throughput, and implementing caching mechanisms to reduce latency. Performance monitoring also involves proactive detection of anomalies, enabling timely intervention to prevent potential service degradation or downtime.

High Availability and Disaster Recovery Planning

Enterprise Hadoop deployments require high availability to ensure uninterrupted service. CCA-500 certified professionals design clusters with failover mechanisms, redundant nodes, and replication strategies to maintain data availability even during hardware or software failures. NameNode HA configurations with a standby NameNode and quorum-based journaling of the edit log are key components of high availability strategies.
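Quorum-based designs rely on majority arithmetic: with N JournalNodes, an edit must reach a majority, so the ensemble tolerates the loss of at most (N - 1) / 2 of them, rounded down. A minimal sketch of that calculation, with hypothetical function names:

```python
def quorum_size(nodes: int) -> int:
    """Smallest majority of an ensemble with the given number of nodes."""
    if nodes < 1:
        raise ValueError("an ensemble needs at least one node")
    return nodes // 2 + 1


def failures_tolerated(nodes: int) -> int:
    """Nodes that can fail while a majority remains available."""
    return (nodes - 1) // 2


if __name__ == "__main__":
    for n in (3, 4, 5):
        print(n, quorum_size(n), failures_tolerated(n))
```

The arithmetic shows why odd ensemble sizes are preferred: going from three to four nodes raises the quorum size without tolerating any additional failure.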

Disaster recovery planning involves creating backup procedures, defining recovery point objectives, and establishing step-by-step protocols for restoring cluster operations after major disruptions. Administrators conduct periodic drills and test failover scenarios to validate recovery strategies, ensuring that enterprise operations remain resilient in the face of unforeseen events.

Ecosystem Component Management

Hadoop ecosystems consist of multiple interdependent components such as Hive, Impala, Spark, Sqoop, Oozie, Flume, and Hue. Certified administrators are responsible for deploying, configuring, and maintaining these components to support diverse data processing and analytics tasks. They ensure compatibility between versions, manage dependencies, and monitor the health of each service to prevent operational issues.

Integration of ecosystem components requires careful planning to achieve efficient workflows. Administrators configure data ingestion pipelines, optimize query execution, and maintain job scheduling for large-scale batch and real-time processing. Proper management of these components enables enterprises to leverage the full capabilities of Hadoop for data-driven decision-making.

Cluster Scaling and Resource Management

Enterprise environments experience dynamic workloads, requiring scalable and adaptable cluster architectures. Certified administrators analyze historical usage patterns and predict future demand to guide cluster expansion. Techniques such as horizontal scaling (adding compute nodes) and vertical scaling (upgrading hardware resources) ensure that clusters can accommodate increasing data volumes and processing requirements.

Resource management is critical to prevent contention between competing workloads. Administrators configure YARN and HDFS to allocate CPU, memory, and storage efficiently across jobs, ensuring that high-priority tasks receive adequate resources without degrading overall cluster performance. Scalable and well-managed clusters provide organizations with the agility to handle growing and evolving data needs effectively.

Backup Strategies and Data Recovery

Data protection in Hadoop clusters involves implementing reliable backup strategies. Certified administrators schedule regular snapshots of HDFS data, replicate critical datasets across geographically dispersed clusters, and validate backup integrity. These measures safeguard against accidental data loss, corruption, or hardware failures.
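Scheduled snapshots are usually driven by a small script. The sketch below only assembles the command lines for enabling and taking a snapshot with the `hdfs dfsadmin -allowSnapshot` and `hdfs dfs -createSnapshot` commands; it does not run them. The helper name, the timestamp-based naming scheme, and the example path are assumptions for illustration.

```python
from datetime import datetime, timezone


def snapshot_commands(path: str, when: datetime = None) -> list:
    """Build argument lists to enable snapshots on a directory and take one."""
    when = when or datetime.now(timezone.utc)
    # Hypothetical naming convention: "s" + UTC timestamp.
    name = "s" + when.strftime("%Y%m%d-%H%M%S")
    return [
        ["hdfs", "dfsadmin", "-allowSnapshot", path],
        ["hdfs", "dfs", "-createSnapshot", path, name],
    ]


if __name__ == "__main__":
    # Hypothetical directory; print the commands instead of executing them.
    for command in snapshot_commands("/data/critical"):
        print(" ".join(command))
```

Returning argument lists rather than shell strings makes it straightforward to hand each command to `subprocess.run` later without quoting problems.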

Recovery strategies include restoring datasets from snapshots, rebuilding failed nodes, and rebalancing clusters to ensure data redundancy. Administrators also maintain documentation of recovery procedures and ensure that team members are trained to execute them efficiently. Effective backup and recovery practices maintain business continuity and minimize operational disruptions.

Job Scheduling and Workflow Orchestration

Managing job scheduling in a Hadoop environment is essential for operational efficiency. Certified administrators design and configure workflow orchestration tools to automate data processing tasks. They define dependencies, sequence job execution, and monitor task completion to ensure timely and accurate results.

Schedulers like Oozie enable administrators to automate complex workflows, integrating multiple ecosystem components into cohesive pipelines. Optimizing job scheduling reduces idle time, improves resource utilization, and minimizes the risk of job failures due to resource constraints. Effective workflow orchestration enhances productivity and supports the scalability of enterprise data operations.
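The core idea behind dependency-driven orchestration is a topological ordering of jobs. The sketch below uses Python's standard `graphlib` module to illustrate the concept; it is not Oozie's API or workflow format, and the job names are hypothetical.

```python
from graphlib import CycleError, TopologicalSorter


def execution_order(dependencies: dict) -> list:
    """Return jobs in an order where every job follows its prerequisites.

    `dependencies` maps each job to the set of jobs it depends on.
    Raises graphlib.CycleError if the dependencies are circular.
    """
    return list(TopologicalSorter(dependencies).static_order())


if __name__ == "__main__":
    # Hypothetical pipeline: ingest -> clean -> aggregate -> report.
    pipeline = {
        "ingest": set(),
        "clean": {"ingest"},
        "aggregate": {"clean"},
        "report": {"aggregate"},
    }
    print(execution_order(pipeline))
    try:
        execution_order({"a": {"b"}, "b": {"a"}})
    except CycleError:
        print("circular dependency detected")
```

Detecting cycles up front matters in practice, because a circular dependency would otherwise leave jobs waiting on each other indefinitely.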

Performance Tuning and Configuration Management

Performance tuning is an ongoing responsibility for certified administrators. This involves adjusting HDFS block sizes, replication factors, and network configurations to optimize data throughput. Administrators also fine-tune YARN settings, including container sizes and queue allocations, to balance resource utilization across different workloads.
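Container sizing can be reasoned about with simple arithmetic. The sketch below assumes a scheduler that rounds memory requests up to a multiple of `yarn.scheduler.minimum-allocation-mb` (behaviour differs between schedulers and versions, so treat this as an approximation) and estimates how many containers fit on one NodeManager. The function names and the node dimensions are hypothetical.

```python
def normalize_request_mb(requested_mb: int, min_allocation_mb: int = 1024) -> int:
    """Round a memory request up to the next multiple of the minimum allocation."""
    if requested_mb <= 0 or min_allocation_mb <= 0:
        raise ValueError("memory values must be positive")
    return -(-requested_mb // min_allocation_mb) * min_allocation_mb


def containers_per_node(node_memory_mb: int, node_vcores: int,
                        container_mb: int, container_vcores: int = 1) -> int:
    """Containers that fit on a node, limited by the scarcer of memory and vcores."""
    return min(node_memory_mb // container_mb, node_vcores // container_vcores)


if __name__ == "__main__":
    size = normalize_request_mb(1500)  # a 1500 MB request with a 1024 MB minimum
    print(size)
    # Hypothetical NodeManager offering 64 GB and 16 vcores to YARN.
    print(containers_per_node(65536, 16, size))
```

In this example the node is CPU-bound: memory would allow more containers than the vcores do, which suggests either larger containers or a different memory-to-core ratio.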

Configuration management extends to ecosystem components, ensuring that Spark, Hive, Impala, and other tools are properly tuned for the specific characteristics of the data and processing requirements. Continuous assessment and adjustment maintain cluster efficiency, reduce latency, and enhance the reliability of large-scale Hadoop environments.

Collaboration and Cross-Functional Integration

Hadoop administrators collaborate with data engineers, analysts, and business stakeholders to ensure that clusters meet enterprise objectives. They provide guidance on data ingestion strategies, storage management, and workflow design. By understanding business requirements and aligning technical solutions accordingly, administrators help organizations derive actionable insights from their data.

Cross-functional integration also involves coordinating with security, compliance, and IT infrastructure teams to maintain a secure and stable environment. Effective collaboration ensures that Hadoop clusters not only function efficiently but also support organizational goals across departments.

Emerging Technologies and Continuous Learning

The Hadoop ecosystem continuously evolves, with new tools, frameworks, and best practices emerging regularly. Certified administrators stay updated on advancements such as cloud-based Hadoop deployments, containerization, real-time streaming solutions, and machine learning integrations.

Continuous learning enables administrators to implement innovative solutions while maintaining stability and security. They assess emerging technologies for potential integration, testing new approaches in controlled environments before adoption. Staying current ensures that enterprise Hadoop clusters remain competitive and capable of meeting evolving business requirements.

Strategic Planning and Enterprise Value

CCA-500 certified professionals provide strategic oversight for enterprise Hadoop deployments. They evaluate system architecture, data workflows, and operational processes to ensure alignment with business objectives. By optimizing cluster performance, enhancing security, and streamlining workflows, administrators contribute to measurable organizational value.

Strategic planning includes identifying opportunities for cost savings, performance improvements, and scalability enhancements. Administrators balance technical decisions with business priorities, ensuring that Hadoop investments deliver long-term benefits. Their expertise positions them as key contributors to data-driven enterprise strategies, enhancing both operational efficiency and competitive advantage.

Career Advancement and Professional Recognition

Achieving CCA-500 certification demonstrates a high level of technical skill and strategic understanding in Hadoop administration. Certified professionals are recognized for their ability to manage complex clusters, optimize enterprise data workflows, and support large-scale data initiatives.

Career opportunities include senior Hadoop administrator, data platform architect, enterprise data operations manager, and technology strategist roles. Certification validates expertise, strengthens professional credibility, and provides a foundation for continued advancement in data management and big data technologies.

Conclusion

The CCA-500 certification represents comprehensive knowledge and mastery in Hadoop cluster administration. Certified administrators are equipped to design, implement, and manage large-scale enterprise clusters while ensuring security, performance, and compliance. They oversee ecosystem integration, workflow orchestration, resource management, and disaster recovery planning to support efficient and resilient operations.

Advanced skills in troubleshooting, monitoring, and optimization enable administrators to maintain high availability and adaptability in dynamic environments. Their strategic oversight and collaboration with cross-functional teams align technical capabilities with business objectives, maximizing enterprise value from Hadoop deployments. Continuous learning and technological adaptability ensure that certified professionals remain at the forefront of big data administration.
