
Expert Tips for Cracking the SPLK-1001 Splunk Core Certified User Exam

Splunk is a versatile platform designed to collect, index, and analyze machine-generated data from diverse sources in real time. It provides a centralized interface for managing data, allowing users to search, visualize, and monitor complex datasets efficiently. This capability makes it possible to quickly identify patterns, detect anomalies, and generate actionable insights. The platform supports both operational intelligence and data analytics, enabling professionals to convert raw data into meaningful visualizations, dashboards, alerts, and reports that facilitate informed decision-making. Understanding the platform’s capabilities is crucial for anyone aiming to validate their skills through the SPLK-1001 certification, as the exam tests not only theoretical knowledge but also practical application of these functions.

Introduction to the SPLK-1001 Certification

The SPLK-1001 Splunk Core Certified User certification is an entry-level credential that demonstrates foundational proficiency with the Splunk platform. It is aimed at people starting out in data analysis, IT monitoring, or operational intelligence. The certification assesses the ability to navigate the platform, run searches, use fields, create basic statistical reports and dashboards, set up alerts, and use lookups. It applies to both Splunk Enterprise and Splunk Cloud Platform deployments, so the skills it validates carry over to either environment.

Exam Structure and Objectives

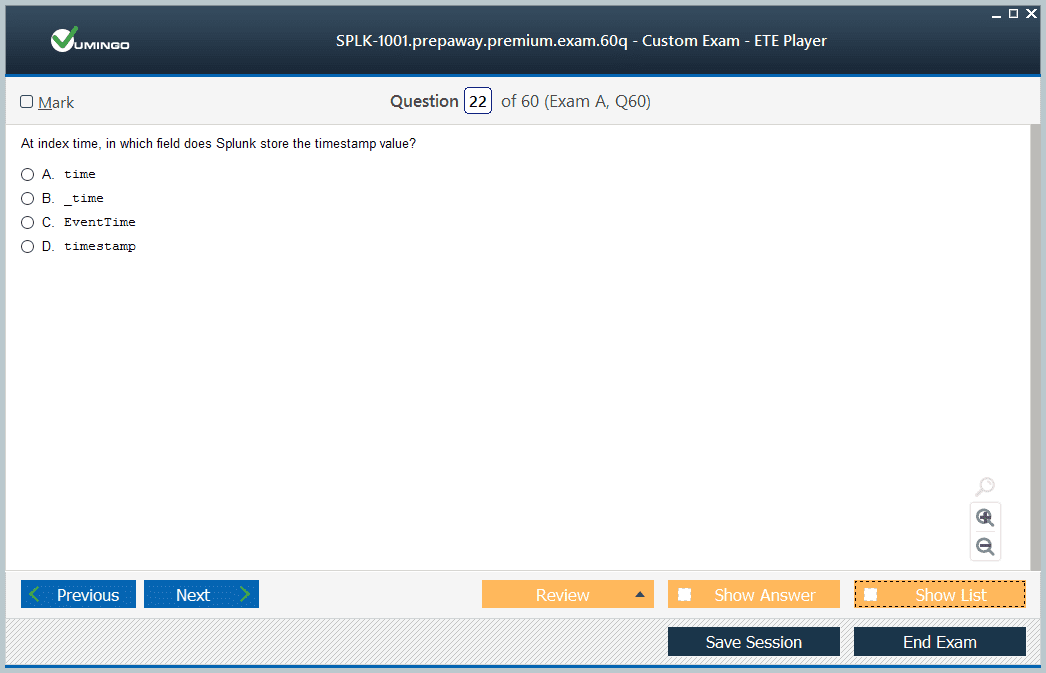

The SPLK-1001 exam evaluates a candidate’s understanding of core Splunk functionality and practical skill in handling data. It consists of multiple-choice questions covering a range of domains, including basic navigation, searching, using fields in searches, search language fundamentals, transforming commands, report creation, dashboard design, lookups, and scheduled reports and alerts. The exam emphasizes practical application: how efficiently candidates can manipulate data, derive insights, and present findings through visualizations. Familiarity with the exam structure helps candidates manage their time and approach each question strategically.

Fundamental Splunk Concepts

Candidates preparing for SPLK-1001 need a clear understanding of fundamental Splunk concepts: how Splunk collects and indexes data, the basic architecture of the platform, and how its components interact. In particular, know the roles of forwarders (which send data), indexers (which process and store it), and search heads (which run searches), because every search and dashboard depends on data having been indexed first.
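To make "indexing" concrete: Splunk stores each piece of incoming data as an event and attaches default fields such as host, source, sourcetype, and _time, alongside the original text in _raw. The Python sketch below only models that idea. The `Event` class, the `index_line` helper, and the assumption that every line starts with an ISO-8601 timestamp are hypothetical; real indexing (line breaking, timestamp recognition, forwarding) is far more involved.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Event:
    """Toy model of an indexed event; not Splunk's internal format."""
    _time: datetime   # timestamp extracted from the raw text
    host: str         # default field: device that produced the data
    source: str       # default field: file, stream, or input path
    sourcetype: str   # default field: format of the data
    _raw: str         # the original, unmodified text


def index_line(raw: str, host: str, source: str, sourcetype: str) -> Event:
    """Turn one log line into an Event.

    Hypothetical assumption: every line begins with an ISO-8601 timestamp
    such as 2024-05-01T12:00:00Z followed by a space.
    """
    ts_text = raw.split(" ", 1)[0]
    # Older Python versions' fromisoformat() rejects "Z", so normalize it.
    ts = datetime.fromisoformat(ts_text.replace("Z", "+00:00"))
    return Event(_time=ts, host=host, source=source, sourcetype=sourcetype, _raw=raw)


event = index_line("2024-05-01T12:00:00Z GET /index.html 200",
                   host="web01", source="/var/log/httpd/access.log",
                   sourcetype="access_combined")
print(event.host, event.sourcetype, event._time.isoformat())
```

Searching for `host=web01 sourcetype=access_combined` works precisely because these fields are attached to every event at index time.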

Basic concepts also cover the Splunk interface: navigating menus, opening apps, and managing datasets. Awareness of the platform’s search capabilities and of how fields are used forms the backbone of efficient data exploration. Mastering these fundamentals lets candidates work with data accurately and derive insights effectively, both during the exam and in professional contexts.

Search and Query Techniques

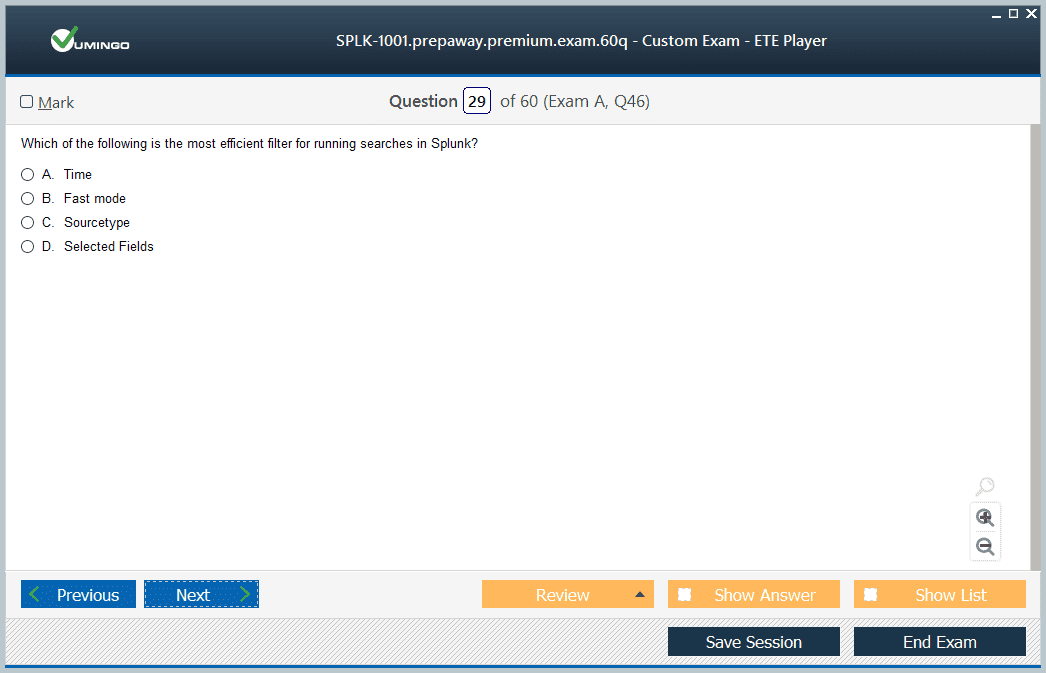

A significant portion of the SPLK-1001 exam focuses on search techniques and query formulation. Candidates need to demonstrate proficiency in creating and refining searches to retrieve relevant information. This includes combining search keywords with Boolean operators (AND, OR, and NOT must be written in uppercase, and AND is implied between terms) and using time ranges to filter datasets efficiently. Understanding how to use fields is essential for isolating data points and performing accurate analysis.
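The following Python sketch approximates how a search such as `failed host=web01 NOT status=200` filters events: every term must match (implied AND), field names are matched exactly while field values are compared case-insensitively, and NOT removes matching events. Events are modeled as plain dicts, and splitting `_raw` on whitespace is a simplification of Splunk's real keyword segmentation; the function names are hypothetical.

```python
def field_equals(event: dict, name: str, value: str) -> bool:
    # Field names are case-sensitive (exact dict key); values compare case-insensitively.
    return name in event and str(event[name]).lower() == value.lower()


def search(events, keywords, include, exclude):
    """Rough model of: failed host=web01 NOT status=200

    keywords: terms that must all appear in _raw (AND is implied)
    include:  field=value pairs that must all match
    exclude:  field=value pairs negated with NOT
    """
    results = []
    for event in events:
        # Simplification: whitespace tokens instead of Splunk's segmentation.
        tokens = event["_raw"].lower().split()
        if not all(k.lower() in tokens for k in keywords):
            continue
        if not all(field_equals(event, n, v) for n, v in include.items()):
            continue
        # NOT field=value keeps events that lack the field entirely.
        if any(field_equals(event, n, v) for n, v in exclude.items()):
            continue
        results.append(event)
    return results


events = [
    {"_raw": "Failed login for admin", "host": "WEB01", "status": "401"},
    {"_raw": "failed login for bob", "host": "web01", "status": "200"},
    {"_raw": "failed disk check", "host": "web01"},
]
print(search(events, ["FAILED"], {"host": "web01"}, {"status": "200"}))
```

The third event survives because it has no status field at all. A search written as `status!=200` would drop it, since `!=` only matches events where the field exists; this difference between NOT and `!=` is worth knowing for the exam.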

Search language fundamentals form a core component of this section. Candidates must understand the search pipeline, in which the results of each command are passed through the pipe character (|) to the next command, and be familiar with commands for filtering, sorting, and formatting results, such as fields, table, rename, dedup, and sort, as well as statistical functions that generate insights. Proficiency in search techniques ensures that candidates can analyze data effectively and answer questions accurately in both the exam and real-world applications.
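The pipeline idea can be illustrated by modeling each command as a function that takes the previous command's rows and returns new rows. This Python sketch mimics `index=web | dedup host | sort -bytes | rename host AS server | table server bytes` on in-memory dicts; the helper names are hypothetical, and it assumes every row carries a numeric `bytes` value.

```python
def dedup(rows, field):
    """Keep the first row for each value of `field`; rows lacking it are dropped."""
    seen, out = set(), []
    for row in rows:
        if field in row and row[field] not in seen:
            seen.add(row[field])
            out.append(row)
    return out


def sort_desc(rows, field):
    """Numeric descending sort, like `sort -field` (assumes the field is present)."""
    return sorted(rows, key=lambda r: float(r[field]), reverse=True)


def rename(rows, old, new):
    """Rename one field in every row, like `rename old AS new`."""
    return [{(new if k == old else k): v for k, v in row.items()} for row in rows]


def table(rows, *columns):
    """Keep only the listed columns, in order, like `table col1 col2`."""
    return [{c: row.get(c) for c in columns} for row in rows]


def pipeline(rows):
    # index=web | dedup host | sort -bytes | rename host AS server | table server bytes
    rows = dedup(rows, "host")
    rows = sort_desc(rows, "bytes")
    rows = rename(rows, "host", "server")
    return table(rows, "server", "bytes")


rows = [{"host": "a", "bytes": 100}, {"host": "b", "bytes": 300},
        {"host": "a", "bytes": 500}, {"host": "c", "bytes": 200}]
print(pipeline(rows))
```

Because each stage only sees the previous stage's output, order matters: running `sort -bytes` before `dedup host` on the same rows would keep host a's 500-byte event instead of its first (100-byte) one.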

Transforming Commands and Data Analysis

Transforming commands are critical for converting raw data into meaningful insights: they order search results into a data table suitable for statistics and charts. Candidates should understand the basic transforming commands, top, rare, and stats, which count, aggregate, and group results to highlight trends and patterns within complex datasets.
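As a rough illustration of what a transforming command does, this Python sketch collapses individual events into one summary row per group, similar in spirit to `index=web | stats count avg(response_ms) by host`. The field names `response_ms` and `host` are hypothetical examples, and the output is sorted by host here for readability.

```python
from collections import defaultdict


def stats_count_avg_by(events, value_field, by_field):
    """Approximate `stats count avg(<value_field>) by <by_field>`."""
    groups = defaultdict(list)
    for e in events:
        if by_field in e:  # events without the by-field are not grouped
            groups[e[by_field]].append(e)
    out = []
    for key in sorted(groups):
        vals = [float(e[value_field]) for e in groups[key] if value_field in e]
        out.append({
            by_field: key,
            "count": len(groups[key]),
            # stats names the column after the function call by default
            f"avg({value_field})": sum(vals) / len(vals) if vals else None,
        })
    return out


events = [{"host": "web01", "response_ms": "100"},
          {"host": "web02", "response_ms": "300"},
          {"host": "web01", "response_ms": "200"},
          {"response_ms": "50"}]
for row in stats_count_avg_by(events, "response_ms", "host"):
    print(row)
```

Four events go in and two table rows come out; that reduction from events to a statistical table is what distinguishes transforming commands from filtering commands.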

Understanding the application of transforming commands in the context of reports and dashboards is essential. Candidates must be able to create visualizations that convey insights clearly, using charts, graphs, and tables. The ability to translate search results into actionable visual formats is a key skill assessed in the SPLK-1001 exam and is widely applicable in professional scenarios where data-driven decision-making is required.
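Time-based charts are usually built with `timechart`, for example `index=web | timechart span=1h count`. The Python sketch below shows the underlying bucketing arithmetic: each timestamp is rounded down to the start of its span, and empty buckets are filled with 0 so the time axis stays continuous. This is an assumption modeled on timechart's default behavior; the sketch uses UTC epoch seconds, whereas Splunk aligns buckets in the user's time zone.

```python
def timechart_count(timestamps, span_seconds=3600):
    """Count events per time bucket, like `timechart span=1h count`.

    timestamps: epoch seconds (UTC in this sketch).
    Returns a list of (bucket_start, count) tuples with no gaps.
    """
    if not timestamps:
        return []
    buckets = {}
    for t in timestamps:
        start = t - (t % span_seconds)  # round down to the bucket boundary
        buckets[start] = buckets.get(start, 0) + 1
    first, last = min(buckets), max(buckets)
    # Emit every bucket between the first and last, filling gaps with 0.
    return [(b, buckets.get(b, 0)) for b in range(first, last + 1, span_seconds)]


print(timechart_count([0, 10, 3599, 7200, 7300]))
```

A line or column chart plots exactly this table: bucket start times on the x-axis, counts on the y-axis.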

Creating Reports and Dashboards

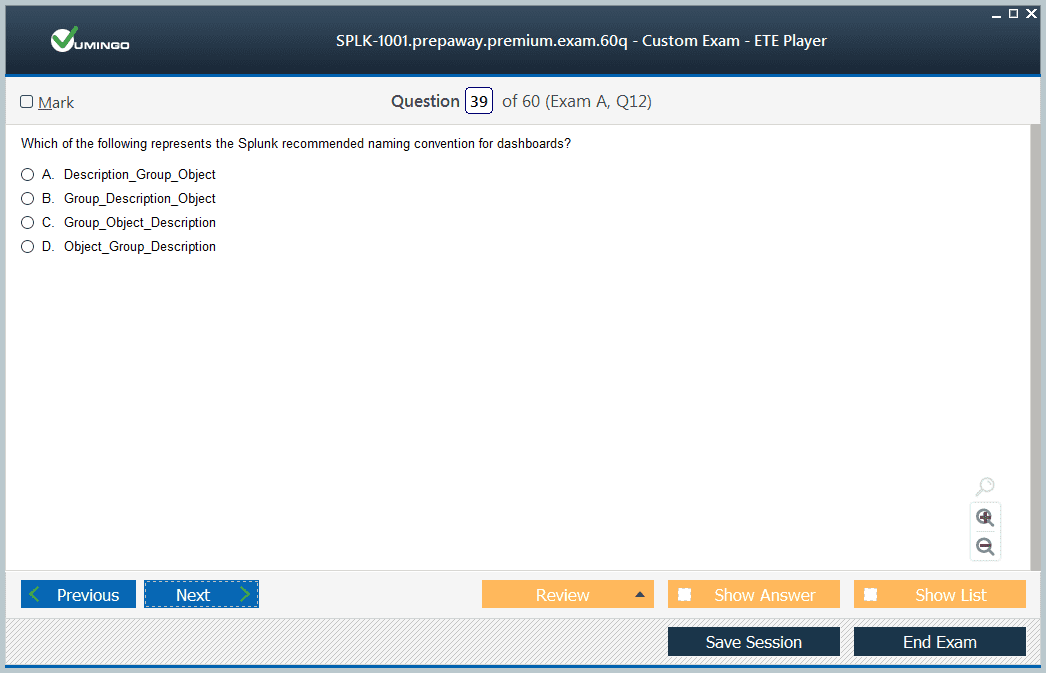

Report and dashboard creation is a central skill for SPLK-1001 candidates. This involves designing visual representations that communicate insights effectively to stakeholders. Candidates should understand how to create basic reports, schedule them for regular updates, and use dashboards to provide a consolidated view of key metrics.

Effective dashboard design requires understanding how to select appropriate visualization types, arrange panels logically, and apply filters to enable dynamic interaction with data. Candidates should also be familiar with best practices for making dashboards intuitive, accessible, and informative. Mastery of these skills ensures that certified professionals can present complex data in a clear and actionable manner.
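Dashboard filters typically work through tokens: an input (such as a dropdown) sets a token, and panel searches reference it with dollar signs, as in `index=web host=$host_tok$ | timechart count`. The Python sketch below models only the substitution step; the function name and the token name `host_tok` are hypothetical, and raising an error for an unset token stands in for a real panel waiting until its input has a value.

```python
import re


def render_panel_search(template, tokens):
    """Replace $name$ placeholders in a panel search with token values."""
    def substitute(match):
        name = match.group(1)
        if name not in tokens:
            # A real dashboard would hold the panel's search until the token is set.
            raise KeyError(f"token {name!r} has no value yet")
        return tokens[name]

    # Token names may contain dots (e.g. form.host), so allow them.
    return re.sub(r"\$([\w.]+)\$", substitute, template)


query = render_panel_search("index=web host=$host_tok$ | timechart count",
                            {"host_tok": "web01"})
print(query)
```

Changing the dropdown simply changes the token value, and every panel that references the token reruns with the new search string.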

Utilizing Lookups

Lookups are a powerful feature in Splunk that allow users to enrich event data by referencing external datasets. Candidates should understand how to create, manage, and apply lookups to enhance data analysis. This includes performing field mappings, importing external data, and using lookups to correlate information across datasets.
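Conceptually, a lookup is a join between events and a table keyed on a shared field. This Python sketch mirrors `| lookup product_lookup product_id OUTPUT product_name price`, where `product_lookup` and its columns are hypothetical. It assumes the match values are unique in the table; real lookups can return several matches per event.

```python
def lookup(events, table_rows, match_field, output_fields):
    """Add output_fields from the table row whose match_field equals the event's value."""
    index = {row[match_field]: row for row in table_rows}  # assumes unique keys
    enriched = []
    for event in events:
        new_event = dict(event)  # leave the original event untouched
        row = index.get(event.get(match_field))
        if row is not None:
            for f in output_fields:
                new_event[f] = row[f]
        enriched.append(new_event)
    return enriched


products = [{"product_id": "P1", "product_name": "Widget", "price": "9.99"},
            {"product_id": "P2", "product_name": "Gadget", "price": "19.99"}]
sales = [{"product_id": "P2", "qty": "3"}, {"product_id": "P9", "qty": "1"}]
print(lookup(sales, products, "product_id", ["product_name", "price"]))
```

The second sale has no matching row, so it passes through without the new fields; the event data itself is never changed, only the search results.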

Proficiency in lookups is essential for solving complex data analysis problems. It enables candidates to integrate additional context, improve search accuracy, and generate more meaningful insights. Understanding lookups also supports the creation of comprehensive reports and dashboards that reflect enriched datasets.

Scheduled Reports and Alerts

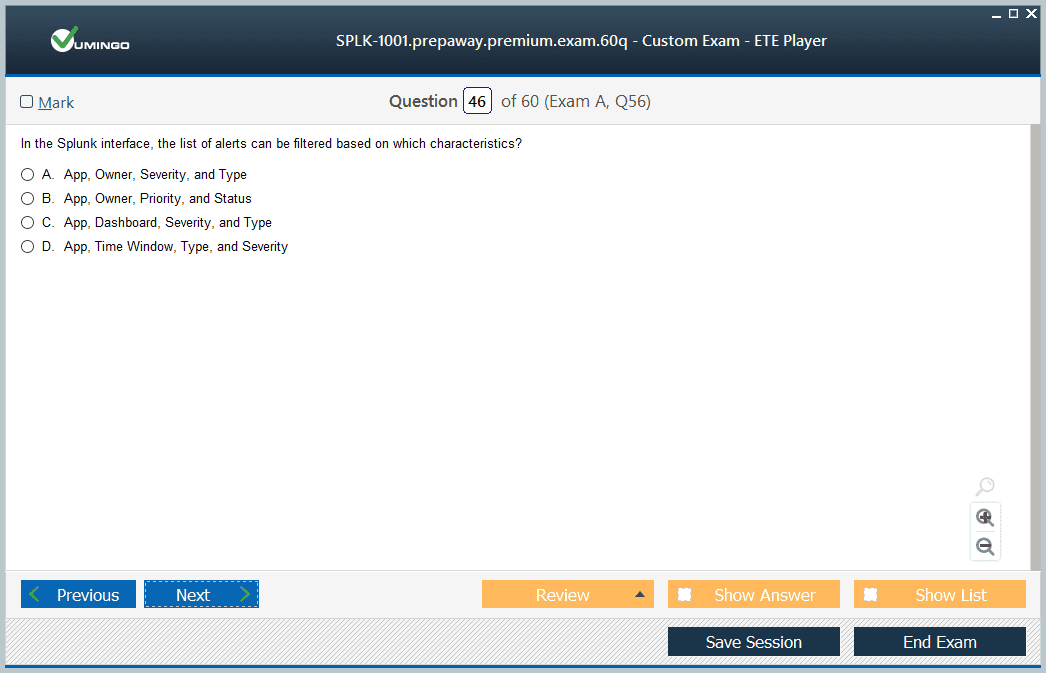

Scheduled reports and alerts automate monitoring and reporting. Candidates must understand how to configure schedules, define alert conditions, and manage notifications. This includes setting up scheduled and real-time alerts, defining trigger conditions (for example, the number of results exceeding a threshold), and choosing alert actions such as sending an email.
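A scheduled alert runs its search on a schedule, checks a trigger condition such as "Number of Results is greater than N", and then fires either once or once per result. The Python sketch below models that decision for a single run; the function name, the message format, and the `trigger` values `"once"` and `"each"` are hypothetical stand-ins for the options in the alert dialog.

```python
def evaluate_alert(results, threshold, trigger="once"):
    """Decide which notifications one scheduled run would send.

    Models the condition "Number of Results is greater than <threshold>".
    trigger="once" sends a single notification; "each" sends one per result.
    """
    if len(results) <= threshold:
        return []  # condition not met: the alert does not fire
    if trigger == "once":
        return [f"{len(results)} results (threshold {threshold})"]
    if trigger == "each":
        return [f"result: {r}" for r in results]
    raise ValueError(f"unknown trigger mode: {trigger!r}")


print(evaluate_alert(["a", "b", "c"], threshold=5))
print(evaluate_alert(list(range(6)), threshold=5))
```

Note the strict "greater than": with a threshold of 5, exactly five results do not trigger the alert.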

Mastery of scheduling and alert configuration demonstrates a candidate’s ability to maintain ongoing oversight of critical metrics. It reflects practical knowledge of monitoring operational systems, ensuring timely responses to anomalies, and supporting proactive decision-making. These skills are directly assessed in the SPLK-1001 exam.

Hands-On Practice and Scenario Simulation

Practical experience is crucial for SPLK-1001 preparation. Candidates should dedicate time to hands-on exercises, working with sample datasets to simulate real-world scenarios. This practice helps solidify understanding of search techniques, transforming commands, lookups, report generation, and dashboard creation.

Scenario simulation allows candidates to apply theoretical knowledge in a controlled environment, reinforcing their ability to analyze data, generate insights, and respond to dynamic situations. Consistent practice with realistic scenarios improves confidence and prepares candidates for both the practical and conceptual challenges of the exam.

Time Management During Preparation

Effective time management is a critical component of preparation. Candidates should plan study sessions to cover all exam domains systematically, allocating more time to areas that require additional focus. Breaking down preparation into focused modules ensures comprehensive coverage without overwhelming the learner.

During practice exams, candidates should simulate real testing conditions to build pacing skills. Allocating specific time blocks for different types of questions allows candidates to practice completing the exam within the allotted timeframe while maintaining accuracy. Time management strategies directly influence exam performance and overall readiness.

Leveraging Peer Support and Collaboration

Engaging with peers and professional communities can enhance preparation. Study groups, discussion forums, and collaborative learning opportunities provide access to diverse perspectives and problem-solving approaches. Candidates can exchange tips, clarify doubts, and gain insights from individuals with prior experience in using Splunk.

Collaboration encourages deeper understanding of challenging concepts, reinforces learning through discussion, and provides accountability throughout the preparation process. Peer support can also help in identifying areas that need additional focus and in developing strategies for effectively tackling exam questions.

Understanding Practical Application

The SPLK-1001 exam emphasizes practical application rather than memorization alone. Candidates should focus on using Splunk features to solve real-world problems, such as monitoring system performance, analyzing log data, and creating meaningful visualizations. Understanding how to translate search results into actionable insights is critical for success.

Applying learned skills professionally helps reinforce knowledge. Working on actual datasets, simulating monitoring scenarios, and creating dashboards strengthens the ability to perform tasks efficiently. This practical approach ensures that certified professionals can utilize Splunk effectively in operational environments and maintain relevance beyond the exam.

Continuous Review and Skill Refinement

Continuous review is essential to retain knowledge and improve proficiency. Candidates should revisit challenging concepts, practice frequently used commands, and explore additional functionality within the platform. Repeated exposure strengthens memory retention, increases familiarity with search patterns, and enhances the ability to troubleshoot issues efficiently.

Skill refinement involves analyzing mistakes in practice exercises, understanding the underlying reasons, and applying corrections. This iterative process builds competence, confidence, and the ability to handle unexpected scenarios during the exam.

Building Confidence and Exam Readiness

Confidence plays a significant role in performance. Familiarity with the platform, hands-on experience, and thorough understanding of exam domains contribute to a candidate’s sense of preparedness. Maintaining a positive mindset and trusting in one’s preparation are crucial for approaching the exam calmly and methodically.

Preparation strategies that include practice tests, scenario-based exercises, and time management simulations build mental readiness. Confidence allows candidates to interpret questions accurately, apply learned skills effectively, and maximize performance under exam conditions.

Integration of Knowledge Across Domains

Success in SPLK-1001 requires integrating knowledge across multiple domains. Candidates must combine search techniques, transforming commands, report creation, lookups, and alert scheduling to solve comprehensive data challenges. Understanding the interconnections between different functionalities ensures a holistic approach to data analysis.

Integration skills enable candidates to handle complex datasets, respond to dynamic scenarios, and create meaningful insights that reflect both technical proficiency and analytical thinking. These capabilities are essential for demonstrating competence on the exam and for practical use in professional settings.

Developing Analytical Thinking

Analytical thinking is cultivated through consistent practice with searches, commands, and visualization tools. Candidates learn to dissect datasets, identify patterns, and interpret results critically. This approach enhances problem-solving abilities and supports evidence-based decision-making in professional contexts.

Developing analytical thinking skills also prepares candidates for scenarios where multiple data points must be correlated, anomalies must be detected, and actionable conclusions must be drawn. Proficiency in analysis directly impacts performance in both the exam and real-world Splunk usage.

Practical Tips for Efficient Learning

Organizing study sessions, focusing on high-priority exam domains, and practicing regularly are essential for effective preparation. Candidates should create a structured plan, track progress, and adjust strategies based on performance in practice exercises. Hands-on experience combined with theoretical understanding reinforces learning outcomes and ensures readiness for the exam.

Balancing preparation with practical application helps solidify knowledge, build confidence, and enhance problem-solving abilities. Efficient learning practices ensure comprehensive coverage of all topics and facilitate successful navigation of the SPLK-1001 exam.

Mentorship and Guidance

Seeking mentorship from experienced Splunk users can provide insights into efficient study techniques, common pitfalls, and best practices. Guidance from professionals who have successfully passed the certification can help candidates focus their preparation and approach the exam strategically.

Mentorship also provides exposure to practical challenges, real-world applications, and professional expectations. Learning from experienced practitioners bridges the gap between theoretical knowledge and practical expertise, reinforcing exam readiness and professional competence.

Exam Day Preparation

On the day of the exam, maintaining focus and composure is essential. Candidates should review key concepts, ensure familiarity with the testing environment, and approach questions methodically. Proper rest, preparation, and confidence contribute to optimal performance.

Reading questions carefully, allocating time effectively, and applying problem-solving skills systematically enhance the likelihood of success. Exam day strategies complement preparation efforts and ensure candidates demonstrate their proficiency fully.

Professional Application of Skills

The skills gained while preparing for SPLK-1001 extend beyond the exam. Candidates learn to navigate Splunk efficiently, perform searches, analyze datasets, create dashboards, and generate reports. These capabilities are directly applicable in professional environments, supporting operational intelligence, monitoring, and data-driven decision-making.

Hands-on experience ensures that candidates can apply theoretical knowledge practically. Mastery of core functionalities allows certified professionals to contribute effectively to organizational objectives, optimize workflows, and provide actionable insights that support business and IT operations.

Continuous Improvement and Learning

SPLK-1001 preparation fosters a mindset of continuous improvement. Candidates are encouraged to revisit concepts, explore additional functionalities, and engage with new data challenges. Continuous learning ensures that skills remain relevant and that candidates are prepared to adapt to evolving scenarios.

Ongoing practice, exploration, and review reinforce competence, enhance confidence, and maintain readiness for professional applications. Developing a habit of continuous improvement ensures long-term proficiency and supports growth in data analysis and monitoring roles.

Applying Skills in Data Monitoring

Certified professionals leverage their knowledge to monitor systems, detect anomalies, and generate actionable insights. They use searches, fields, transforming commands, dashboards, and alerts to maintain oversight over operational data and ensure timely responses to issues.

Practical application reinforces learning, improves decision-making, and demonstrates the value of certification in real-world contexts. The ability to interpret data, respond to alerts, and communicate insights effectively is central to the role of a SPLK-1001 certified user.

Enhancing Problem-Solving Abilities

SPLK-1001 preparation strengthens problem-solving skills by exposing candidates to varied datasets, challenges, and scenarios. Candidates learn to identify issues, apply appropriate commands, and generate solutions efficiently.

Problem-solving skills are critical for both the exam and professional use. They enable candidates to analyze complex information, prioritize tasks, and make informed decisions that optimize outcomes in operational and analytical contexts.

Building Confidence Through Practice

Confidence is reinforced through repeated practice and familiarity with exam concepts. Candidates develop trust in their ability to perform searches, manipulate data, and create reports and dashboards accurately.

Confidence enhances focus, reduces exam-related stress, and ensures a methodical approach to problem-solving. Preparedness and self-assurance contribute to success on the SPLK-1001 exam and in professional applications.

Strategic Use of Resources

Efficient preparation involves strategic use of available learning resources. Candidates should focus on documentation, tutorials, hands-on exercises, and peer interactions. Prioritizing high-impact topics and practicing regularly ensures readiness across all exam domains.

Strategic resource utilization supports knowledge retention, skill development, and practical application. Candidates are better equipped to handle both theoretical questions and real-world scenarios, reflecting comprehensive understanding and competence in Splunk.

Integrating Exam Knowledge with Professional Practice

SPLK-1001 certified users apply exam knowledge in professional settings to monitor systems, analyze data, and generate insights. This integration ensures that skills gained during preparation are practically relevant and enhance workplace performance.

Practical integration involves creating dashboards, scheduling alerts, performing searches, and analyzing trends. Certified professionals contribute to operational efficiency, decision-making, and data-driven strategy implementation, demonstrating the value of certification in real-world contexts.

Reinforcing Learning Through Iteration

Repetition and iterative practice reinforce learning. Candidates benefit from revisiting searches, experimenting with commands, and simulating scenarios to strengthen understanding. Iterative practice ensures that knowledge is retained and can be applied effectively during the exam.

Reinforced learning builds confidence, improves accuracy, and enhances problem-solving abilities. Candidates are better prepared to handle complex exam questions and professional challenges after consistent iterative practice.

Finalizing Exam Readiness

As candidates approach the SPLK-1001 exam, consolidating knowledge across all domains is essential. Review search techniques, transforming commands, the use of fields, and visualization practices to ensure a comprehensive understanding. Practice combining multiple functionalities in a single workflow, such as enriching a search with a lookup, saving it as a report, adding that report to a dashboard, and scheduling an alert on the same search. This integration mirrors the complexity of real-world scenarios and reinforces the ability to apply multiple skills together.

Hands-On Practice with Realistic Datasets

To strengthen proficiency, candidates should work extensively with realistic datasets. Engage in exercises that simulate operational and business environments, analyzing logs, metrics, and event data. Practice creating visualizations that summarize insights effectively and highlight critical information. This hands-on experience enhances the ability to work with diverse data types, manage large datasets, and extract meaningful patterns.

Refining Search Language Skills

The SPLK-1001 exam evaluates command syntax and efficient use of the search language. Candidates should focus on mastering search commands, operators, and modifiers to construct precise queries. Understanding how to filter data, apply time constraints, and use relevant fields enables efficient analysis. Practicing efficient search habits, such as specifying the index and the narrowest useful time range at the start of a search, and chaining multiple commands reinforces command fluency and reduces errors during the exam.
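Time constraints are often written as relative time modifiers such as `earliest=-24h@h latest=@h`, where the number and unit shift the time and `@` snaps it down to the start of a unit. The Python sketch below resolves a small subset of that syntax (seconds, minutes, hours, and days, plus `now`); the function name is hypothetical, it applies the offset before the snap, and it ignores weeks, months, and time zones.

```python
import re
from datetime import datetime, timedelta

_UNITS = {"s": "seconds", "m": "minutes", "h": "hours", "d": "days"}
# Fields zeroed when snapping to each unit (snapping always rounds down).
_SNAP = {
    "s": dict(microsecond=0),
    "m": dict(second=0, microsecond=0),
    "h": dict(minute=0, second=0, microsecond=0),
    "d": dict(hour=0, minute=0, second=0, microsecond=0),
}


def resolve_relative(modifier, now):
    """Resolve modifiers like '-24h', '-7d@d', '@h', or 'now' against `now`."""
    if modifier == "now":
        return now
    m = re.fullmatch(r"(?:([+-]\d+)([smhd]))?(?:@([smhd]))?", modifier)
    if m is None or modifier == "":
        raise ValueError(f"unsupported modifier: {modifier!r}")
    amount, unit, snap = m.groups()
    t = now
    if amount is not None:
        t += timedelta(**{_UNITS[unit]: int(amount)})  # apply the offset
    if snap is not None:
        t = t.replace(**_SNAP[snap])  # round down to the start of the unit
    return t


now = datetime(2024, 5, 1, 14, 37, 12)
print(resolve_relative("-24h@h", now), "to", resolve_relative("@h", now))
```

With these values the window runs from 14:00 on April 30 to 14:00 on May 1: snapping both ends to the hour gives a clean, repeatable 24-hour range, which is useful for scheduled reports.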

Mastering Transforming Commands

Transforming commands are fundamental for analyzing and manipulating data. Candidates should practice using commands for aggregation, statistical analysis, and grouping results. Exercises should include combining transforming commands with filtering and lookup operations to simulate realistic analytical workflows. Mastery of these commands is critical for creating actionable insights and demonstrates the candidate’s capability to handle complex data tasks.
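A common combination is filtering after a transforming command, as in `index=web | stats count by clientip | where count > 2 | sort -count`. The key point is that `where` then operates on the summary table, not on the raw events. The Python sketch below models that sequence; the function name and the `clientip` field are illustrative assumptions.

```python
from collections import Counter


def busy_clients(events, min_count):
    """Model: stats count by clientip | where count > min_count | sort -count."""
    # stats count by clientip: one row per client IP
    counts = Counter(e["clientip"] for e in events if "clientip" in e)
    rows = [{"clientip": ip, "count": c} for ip, c in counts.items()]
    # where count > min_count: filters the transformed rows, not raw events
    kept = [r for r in rows if r["count"] > min_count]
    # sort -count: highest counts first
    return sorted(kept, key=lambda r: r["count"], reverse=True)


events = [{"clientip": ip} for ip in ["a", "a", "b", "c", "c", "a", "c", "c"]]
print(busy_clients(events, 2))
```

The `count` field only exists after `stats` runs, which is why this filter must come after the transforming command in the pipeline.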

Effective Use of Lookups

Lookups enhance data by associating external information with event records. Candidates should understand how to upload lookup table files, define lookups, configure automatic lookups, and apply lookups within searches. Practicing these operations reinforces understanding of data enrichment and correlation, which is essential for producing accurate reports and dashboards. Effective lookup usage ensures candidates can manage data complexity efficiently.
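A CSV lookup table is an ordinary CSV file whose header row names the lookup fields; `| inputlookup products.csv` displays its contents. The lookup command also accepts OUTPUTNEW instead of OUTPUT, which avoids overwriting values that events already have. The Python sketch below parses such a file and approximates OUTPUTNEW by never replacing an existing field; the file contents and function names are hypothetical, and the per-field handling is a simplification.

```python
import csv
import io


def load_lookup_csv(text):
    """Parse CSV lookup content; the first row is the header naming the fields."""
    return list(csv.DictReader(io.StringIO(text)))


def lookup_outputnew(events, table_rows, match_field, output_fields):
    """Approximate `lookup ... OUTPUTNEW`: fill only fields the event lacks."""
    index = {row[match_field]: row for row in table_rows}  # assumes unique keys
    result = []
    for event in events:
        new_event = dict(event)
        row = index.get(event.get(match_field))
        if row is not None:
            for f in output_fields:
                if f not in new_event:  # never overwrite an existing value
                    new_event[f] = row[f]
        result.append(new_event)
    return result


products_csv = "product_id,product_name\nP1,Widget\nP2,Gadget\n"
rows = load_lookup_csv(products_csv)
events = [{"product_id": "P1"}, {"product_id": "P2", "product_name": "Custom"}]
print(lookup_outputnew(events, rows, "product_id", ["product_name"]))
```

With OUTPUT instead, the second event's "Custom" value would be replaced by "Gadget" from the table.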

Creating Meaningful Reports and Dashboards

Developing the ability to generate informative reports and interactive dashboards is central to exam success. Candidates should practice selecting appropriate visualization types, arranging components logically, and applying filters to enable dynamic data exploration. Focus on clarity, accuracy, and actionable insights in visual presentations. By simulating real reporting scenarios, candidates can develop skills that reflect both technical proficiency and analytical judgment.

Scheduling and Managing Alerts

Alerting and scheduling are critical for monitoring ongoing data streams. Candidates should practice setting up scheduled searches, defining alert conditions, and configuring notifications. Exercises should include real-time monitoring scenarios where alerts trigger based on thresholds or anomalies. Mastery of scheduling ensures candidates can automate monitoring tasks, respond promptly to events, and maintain consistent oversight over critical data.
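When an alert runs frequently, throttling prevents a flood of duplicate notifications by suppressing further triggers for a set period after the alert fires. The Python sketch below models that rule on a list of trigger times in seconds; the function name is hypothetical, and treating a trigger exactly at the end of the suppression window as allowed is an assumption of this sketch.

```python
def throttle(trigger_times, suppress_seconds):
    """Return the trigger times that actually send a notification."""
    fired, last_fired = [], None
    for t in sorted(trigger_times):
        # Fire if nothing has fired yet or the suppression window has elapsed.
        if last_fired is None or t - last_fired >= suppress_seconds:
            fired.append(t)
            last_fired = t
    return fired


# Condition met at these times (seconds); suppress for 10 minutes after firing.
print(throttle([0, 60, 300, 600, 650, 1300], suppress_seconds=600))
```

Six trigger events produce only three notifications, which keeps a persistent problem visible without overwhelming whoever receives the alerts.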

Simulating Exam Conditions

Familiarity with the exam structure is important for time management and accuracy. Candidates should simulate full-length exams, practicing under timed conditions to build pacing skills. Focus on completing questions efficiently while maintaining precision. This approach helps candidates adapt to the pressure of timed testing, identify areas requiring additional focus, and develop strategies for handling complex multi-step problems.

Integrating Knowledge Across Functions

The SPLK-1001 exam tests the ability to integrate multiple platform functions to solve analytical challenges. Candidates should practice combining search commands, transforming commands, lookups, reporting, dashboards, and alerts into cohesive workflows. Understanding how different functionalities interact allows candidates to approach complex tasks methodically and demonstrates readiness for practical data analysis scenarios.

Building Analytical Thinking

Analytical thinking is reinforced through practice with searches, commands, and data visualizations. Candidates learn to interpret results, identify patterns, and derive insights from raw data. Exercises that include anomaly detection, trend analysis, and data correlation develop the ability to make informed, evidence-based decisions. Analytical skills are critical both for the exam and for professional application, ensuring that certified users can handle diverse data challenges effectively.
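A simple anomaly-detection exercise flags hosts whose event count is far above the average, for example `| stats count by host | eventstats avg(count) AS avg_count stdev(count) AS sd_count | where count > avg_count + 2*sd_count`. The Python sketch below models those steps: the eventstats-style step attaches the same aggregates to every row instead of collapsing the table. The two-standard-deviation cutoff and the function name are illustrative choices, and at least two hosts are required to compute a standard deviation.

```python
from statistics import mean, stdev


def flag_outliers(counts, k=2.0):
    """counts: host -> event count, i.e. the output of `stats count by host`."""
    rows = [{"host": h, "count": c} for h, c in counts.items()]
    values = [r["count"] for r in rows]
    # eventstats-style step: add the aggregates to every row.
    # statistics.stdev is the sample standard deviation, like SPL's stdev()
    # (SPL's stdevp() is the population version).
    avg_count, sd_count = mean(values), stdev(values)
    for r in rows:
        r["avg_count"], r["sd_count"] = avg_count, sd_count
    # where count > avg_count + k*sd_count
    return [r["host"] for r in rows if r["count"] > r["avg_count"] + k * r["sd_count"]]


counts = {"a": 10, "b": 11, "c": 9, "d": 10, "e": 12,
          "f": 10, "g": 11, "h": 9, "i": 10, "j": 100}
print(flag_outliers(counts))
```

Here the mean is 19.2 and the cutoff is roughly 76, so only host j is flagged; a fixed threshold would need manual tuning, whereas this one adapts to the data.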

Leveraging Peer Feedback

Engaging with peers during preparation provides opportunities for discussion, clarification, and collaborative problem solving. Study groups allow candidates to explore alternative approaches to common challenges, review difficult concepts, and gain insight into practical applications. Peer feedback strengthens understanding, encourages critical thinking, and builds confidence in applying skills in both exam and professional contexts.

Applying Knowledge Practically

Beyond theoretical understanding, SPLK-1001 preparation emphasizes practical application. Candidates should practice applying commands to diverse datasets, generating dashboards, and configuring alerts. This practical exposure ensures that exam candidates are comfortable performing real-world tasks and can demonstrate their skills effectively under test conditions. Practical application also enhances understanding of the relationships between different platform functionalities.

Continuous Review and Reinforcement

Regular review of key concepts, commands, and workflows is essential for retaining knowledge. Candidates should revisit searches, transforming commands, lookups, and report creation repeatedly to reinforce learning. Reinforcement ensures familiarity with the platform, reduces errors, and increases speed and accuracy during the exam. Continuous practice builds confidence and competence across all exam domains.

Time Management Strategies

Efficient time allocation is critical for preparation and exam performance. Candidates should develop study schedules that balance hands-on practice, review of theoretical concepts, and practice tests. During practice exercises, allocate time proportionally to focus on more complex or heavily weighted topics. Proper time management ensures comprehensive coverage and reduces last-minute stress.

Addressing Challenging Areas

Certain exam topics, such as complex transforming commands, data enrichment through lookups, and multi-step dashboard creation, may require focused attention. Candidates should identify weak areas early, dedicate additional practice, and seek guidance or explanations for difficult concepts. Targeted practice ensures competency across all exam domains and improves overall performance.

Confidence Building

Confidence is developed through hands-on practice, mastery of commands, and repeated scenario simulations. Familiarity with the exam structure, combined with a clear understanding of functionalities, fosters a sense of preparedness. Confidence allows candidates to approach questions methodically, make informed decisions, and manage time effectively during the exam.

Professional Skill Integration

Skills gained during SPLK-1001 preparation are directly applicable to professional environments. Candidates learn to perform searches, analyze logs, generate insights, create dashboards, and manage alerts effectively. These capabilities support operational monitoring, problem-solving, and decision-making in real-world contexts.

Problem-Solving and Critical Thinking

The certification preparation process strengthens problem-solving and critical thinking skills. Candidates learn to approach complex datasets, identify anomalies, apply multiple commands, and generate actionable insights. Developing these skills ensures that certified professionals can respond to dynamic situations and make informed decisions efficiently.

Continuous Learning Beyond Certification

Preparation for SPLK-1001 fosters a mindset of continuous learning. Candidates are encouraged to explore new features, revisit concepts, and practice with diverse datasets. Continuous engagement ensures skills remain relevant and prepares candidates for ongoing challenges in data analysis and system monitoring.

Integration of Exam Knowledge into Daily Practice

Certified users apply exam knowledge to real-world tasks, including monitoring system performance, analyzing operational data, generating reports, and creating dashboards. Integrating learned skills into daily practice reinforces retention and demonstrates practical competence.

Iterative Practice for Mastery

Iterative practice involves repeated exercises to strengthen command usage, search techniques, and data visualization skills. Candidates should focus on performing tasks multiple times under different scenarios to build proficiency. Iterative learning ensures readiness for complex exam questions and professional tasks.

Scenario-Based Problem Solving

Practice with scenario-based exercises enhances the ability to handle real-world challenges. Candidates should simulate incidents, troubleshoot datasets, and generate insights using multiple functionalities. Scenario-based practice develops critical thinking, decision-making, and adaptability, all of which are directly relevant to SPLK-1001.

Strategic Review and Consolidation

In the final stages of preparation, candidates should consolidate learning by reviewing key commands, workflows, and exam domains. Focused review ensures comprehension, reinforces weak areas, and increases confidence. Structured consolidation supports both efficiency and effectiveness during the exam.

Exam Execution Strategies

On exam day, candidates should approach questions methodically, read carefully, and allocate time according to complexity. Maintaining focus, managing stress, and trusting in preparation ensures optimal performance. Strategic execution complements preparation efforts, maximizing the ability to demonstrate skills accurately.

Applying Skills Professionally

SPLK-1001 certified users leverage their skills in monitoring, analysis, and visualization within operational contexts. Mastery of search techniques, transforming commands, lookups, reporting, and dashboards enables effective oversight of complex data environments. Professional application reinforces knowledge, supports decision-making, and contributes to operational efficiency.

Continuous Skill Enhancement

Even after certification, continuous engagement with Splunk functionalities ensures proficiency. Candidates should explore new features, practice with varied datasets, and refine workflows. Continuous enhancement strengthens skills, maintains relevance, and prepares professionals for evolving challenges.

Preparing for Advanced Concepts

Although SPLK-1001 focuses on foundational skills, preparation develops a base for exploring advanced functionalities. Mastery of core commands, searches, dashboards, and alerts equips candidates for future growth, enabling them to tackle more complex analytical and monitoring tasks.

Collaboration and Knowledge Sharing

Engaging in knowledge sharing with peers enhances learning and provides exposure to alternative approaches. Candidates should participate in discussions, share insights, and review case studies collaboratively. Collaboration reinforces understanding, encourages innovative thinking, and builds practical skills.

Maintaining Focus and Discipline

Effective preparation requires consistent focus and disciplined study habits. Candidates should dedicate regular time for practice, review, and hands-on exercises. Maintaining focus ensures thorough coverage of all domains, reinforces learning, and builds confidence for the exam.

Developing Efficiency in Workflows

Practice with SPLK-1001 domains develops efficiency in data workflows. Candidates learn to structure searches, organize dashboards, and automate alerts effectively. Efficient workflows support professional competence and prepare candidates for practical applications.

Strengthening Technical Proficiency

Preparation enhances technical proficiency across search commands, transforming operations, visualizations, lookups, and scheduling. Technical mastery ensures candidates can execute tasks accurately and efficiently, both for the exam and in professional contexts.

Analytical Application in Real Scenarios

Candidates should practice applying analytical skills to real datasets, interpreting results, and making data-driven decisions. This approach reinforces practical understanding and demonstrates readiness to handle complex operational challenges.

Simulating Complex Workflows

Simulating complex workflows during preparation strengthens problem-solving and integration skills. Candidates learn to connect searches, transforming commands, dashboards, lookups, and alerts in cohesive processes. This practice ensures readiness for multi-step exam scenarios and professional tasks.

Exam Confidence Through Repetition

Repeated practice builds confidence, reduces errors, and improves pacing. Familiarity with commands, workflows, and problem types ensures candidates can navigate the exam effectively. Confidence translates into accurate execution and calm decision-making.

Strategic Use of Study Materials

Candidates should utilize study materials strategically, prioritizing high-weighted domains and areas of difficulty. Structured study ensures comprehensive coverage while reinforcing strengths and addressing weaknesses. Strategic preparation maximizes efficiency and exam readiness.

Connecting Exam Knowledge to Job Functions

Certified users apply SPLK-1001 knowledge in professional settings, performing searches, generating reports, and monitoring data. Applying learned skills bridges the gap between exam preparation and real-world competence, demonstrating value in operational contexts.

Reinforcing Knowledge With Iterative Exercises

Iterative exercises solidify command usage, search techniques, and visualization skills. Repeated practice ensures accuracy, retention, and readiness for dynamic exam scenarios. Iterative learning also strengthens analytical and problem-solving capabilities.

Preparing Mentally for Exam Challenges

Mental preparation complements technical readiness. Candidates should practice stress management, maintain focus, and approach the exam methodically. Mental readiness enhances decision-making, time management, and overall exam performance.

Applying Workflow Integration in Practice

Understanding how to integrate multiple functions into cohesive workflows prepares candidates for the practical aspects of the exam. Combining searches, transforming commands, dashboards, lookups, and alerts develops the ability to manage complex scenarios efficiently.

Advanced Data Handling Techniques

To excel in the SPLK-1001 exam, candidates must understand advanced data handling techniques that extend beyond basic searches. This includes managing structured and unstructured data, applying field extractions effectively, and utilizing lookup tables to enrich event data. Candidates should practice transforming raw logs into usable datasets, understanding the relationships between different fields, and creating structured outputs for further analysis. Proficiency in these techniques ensures the ability to navigate complex datasets, perform comprehensive analysis, and derive actionable insights, reflecting the core skills assessed in the exam.

Efficient Use of Time Modifiers

Mastering time modifiers is essential for precise data retrieval. Candidates should practice filtering events with the time range picker, relative time modifiers such as earliest=-24h@h latest=now, and specific timestamps to isolate relevant information efficiently. In a modifier like -24h@h, the -24h offset moves back 24 hours and the @h part then snaps the result down to the start of that hour. Understanding how the time range affects search results, report generation, and alert conditions is crucial. Effective use of time modifiers lets candidates focus on critical events, monitor trends over time, and produce timely insights for decision-making and exam problem-solving scenarios.
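To make the offset-then-snap behavior concrete, here is a small Python sketch that resolves a few relative time modifiers against a fixed reference time. It is not Splunk code: the function name resolve_relative is hypothetical, and only the units s, m, h, and d are handled, whereas Splunk also supports weeks, months, and other units.

```python
import re
from datetime import datetime, timedelta

# Hypothetical helper: resolves a small subset of Splunk-style relative time
# modifiers ("now", "-24h", "-7d@d", "@h") against a fixed reference time.
# Only the units s, m, h and d are handled in this sketch.
_UNITS = {"s": "seconds", "m": "minutes", "h": "hours", "d": "days"}
_PATTERN = re.compile(r"^(?:([+-])(\d+)([smhd]))?(?:@([smhd]))?$")

# Fields to zero when snapping down to the start of each unit.
_SNAP = {
    "s": {"microsecond": 0},
    "m": {"second": 0, "microsecond": 0},
    "h": {"minute": 0, "second": 0, "microsecond": 0},
    "d": {"hour": 0, "minute": 0, "second": 0, "microsecond": 0},
}


def resolve_relative(modifier: str, now: datetime) -> datetime:
    if modifier == "now":
        return now
    match = _PATTERN.match(modifier)
    if not modifier or match is None:
        raise ValueError(f"unsupported modifier: {modifier!r}")
    sign, amount, unit, snap = match.groups()
    result = now
    if unit:
        # Step 1: apply the offset, e.g. -24h moves back 24 hours.
        delta = timedelta(**{_UNITS[unit]: int(amount)})
        result = result - delta if sign == "-" else result + delta
    if snap:
        # Step 2: snap down to the start of the unit, e.g. @h -> minute 0.
        result = result.replace(**_SNAP[snap])
    return result


now = datetime(2024, 5, 10, 14, 37, 12)
print(resolve_relative("-24h@h", now))  # 2024-05-09 14:00:00
print(resolve_relative("-7d@d", now))   # 2024-05-03 00:00:00
```

Note that the snap is applied after the offset, which is why -24h@h lands on a whole hour one day earlier rather than exactly 24 hours before the reference time.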

Field Extraction and Usage

Field extraction is a key competency for the SPLK-1001 exam. Candidates should practice identifying and extracting meaningful fields from raw event data, whether through regular expressions in search commands such as rex, the interactive Field Extractor, or automatic field discovery. Properly extracted fields make searches more precise, support report creation, and enable more detailed analysis. Skill in this area is critical for performing accurate searches, producing insightful visualizations, and demonstrating proficiency on the exam.
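The following Python sketch imitates what a search-time extraction such as rex field=_raw "user=(?<user>\w+)\s+action=(?<action>\w+)" does: it applies a regular expression with named capture groups to each raw event and adds the captured values as fields. The function name extract_fields and the sample events are hypothetical, and Python's re module writes named groups as (?P<name>...) rather than the (?<name>...) form used in SPL.

```python
import re

# Named groups become field names, similar to rex in SPL.
# Python's re module spells a named group (?P<name>...).
PATTERN = re.compile(r"user=(?P<user>\w+)\s+action=(?P<action>\w+)")


def extract_fields(raw_events, pattern=PATTERN):
    """Return one dict per event: the raw text plus any extracted fields."""
    results = []
    for raw in raw_events:
        event = {"_raw": raw}
        match = pattern.search(raw)
        if match:
            # Only groups that captured something become fields.
            event.update({k: v for k, v in match.groupdict().items() if v is not None})
        results.append(event)
    return results


events = [
    "2024-05-10 14:00:01 user=alice action=login status=200",
    "2024-05-10 14:00:05 user=bob action=logout status=200",
    "2024-05-10 14:00:09 heartbeat ok",
]
for event in extract_fields(events):
    print(event.get("user"), event.get("action"))
# alice login
# bob logout
# None None
```

For key=value text like this, Splunk's automatic field discovery would normally extract user and action without rex; an explicit regular expression matters most when the data is not in key=value form.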

Optimizing Search Performance

Efficient search execution is a significant aspect of professional Splunk use and of exam readiness. Candidates should learn strategies to optimize search performance, such as specifying an index and sourcetype, narrowing the time range, limiting unnecessary data retrieval, and applying filters as early in the search as possible. Optimized searches run faster, return more focused results, and let candidates handle larger datasets effectively. Because exam questions may ask which of several searches is correct or most efficient, optimization skills are important for success.
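Why filtering early pays off can be shown with a plain-Python analogy (not a model of Splunk internals): both pipelines below count ERROR events per host, but the second filters before the per-event processing step, so that step touches fewer events. In SPL the equivalent advice is to put index, sourcetype, and field=value terms in the base search rather than in a later | search or | where. All function names here are hypothetical.

```python
from collections import Counter


def enrich(event):
    # Stand-in for costly per-event work (field extraction, eval, and so on).
    return dict(event, host_upper=event["host"].upper())


def filter_late(events):
    processed = [enrich(e) for e in events]  # every event is processed
    errors = [e for e in processed if e["level"] == "ERROR"]
    return Counter(e["host_upper"] for e in errors), len(processed)


def filter_early(events):
    errors = [e for e in events if e["level"] == "ERROR"]  # filter first
    processed = [enrich(e) for e in errors]  # only matching events are processed
    return Counter(e["host_upper"] for e in processed), len(processed)


events = [
    {"host": "web01", "level": "INFO"},
    {"host": "web01", "level": "ERROR"},
    {"host": "web02", "level": "INFO"},
    {"host": "web02", "level": "ERROR"},
    {"host": "web02", "level": "INFO"},
]
late_counts, late_work = filter_late(events)
early_counts, early_work = filter_early(events)
print(late_counts == early_counts, late_work, early_work)  # True 5 2
```

The results are identical; only the amount of work differs, which is the whole point of filtering early.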

Understanding Statistical Commands

Statistical commands enable deeper analysis and are a core component of the SPLK-1001 exam. Candidates should practice commands such as stats, chart, timechart, top, and rare to calculate counts, averages, sums, percentages, and other metrics. Understanding how these transforming commands aggregate and summarize events allows candidates to reduce complex datasets to concise tables. Mastery of these commands equips candidates to build informative reports, highlight trends, and provide actionable insights during the exam.
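As an illustration of what a search ending in | stats count, sum(bytes), avg(bytes) by host computes, here is a Python sketch under simplifying assumptions: events are dictionaries, events without the by field are dropped, and events missing the value field still contribute to count but are ignored by sum and avg. The function stats_by is hypothetical.

```python
from collections import defaultdict


def stats_by(events, by_field, value_field):
    """Approximate `stats count, sum(X), avg(X) by Y` over a list of dicts."""
    groups = defaultdict(list)
    for event in events:
        if by_field in event:  # events without the by field are dropped
            groups[event[by_field]].append(event.get(value_field))
    rows = []
    for key in sorted(groups):  # one output row per distinct by-field value
        values = [v for v in groups[key] if v is not None]
        total = sum(values) if values else None
        rows.append({
            by_field: key,
            "count": len(groups[key]),  # counts every event in the group
            f"sum({value_field})": total,
            f"avg({value_field})": total / len(values) if values else None,
        })
    return rows


events = [
    {"host": "web01", "bytes": 100},
    {"host": "web01", "bytes": 300},
    {"host": "web02", "bytes": 50},
    {"host": "web02"},   # no bytes field
    {"bytes": 999},      # no host field
]
for row in stats_by(events, "host", "bytes"):
    print(row)
```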

Visualization Best Practices

Creating effective visualizations is essential for conveying insights clearly. Candidates should practice selecting chart types appropriate for the data, applying color and formatting for clarity, and arranging dashboard panels to support interpretation. Visualization best practices involve balancing detail with readability so that key trends and anomalies are easy to identify. Exam questions on reports and dashboards test this knowledge, which makes proficiency in visualization a critical skill.

Lookup Management and Correlation

Lookups are not only for enrichment but also for correlating disparate datasets. Candidates should practice integrating external tables with event data to enhance analysis. This involves mapping fields correctly, updating lookup tables as needed, and combining multiple lookups to generate comprehensive insights. Strong lookup management skills enable candidates to handle complex relationships between datasets, which is often tested in practical exam questions.
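The sketch below mimics a search-time lookup such as | lookup http_status status OUTPUT status_description, where http_status stands for a hypothetical CSV lookup definition. Each event whose status matches a row in the table receives the output field; events with no match are left unchanged. The helper names load_lookup and apply_lookup are assumptions for illustration.

```python
import csv
import io

# Contents of a hypothetical lookup file, http_status.csv.
LOOKUP_CSV = """status,status_description
200,OK
404,Not Found
500,Internal Server Error
"""


def load_lookup(text, key_field):
    """Index the lookup rows by the input (key) field."""
    return {row[key_field]: row for row in csv.DictReader(io.StringIO(text))}


def apply_lookup(events, table, key_field, output_fields):
    """Copy output fields from the matching lookup row into each event."""
    enriched = []
    for event in events:
        new_event = dict(event)  # leave the original event untouched
        row = table.get(event.get(key_field))
        if row is not None:
            for field in output_fields:
                new_event[field] = row[field]
        enriched.append(new_event)
    return enriched


table = load_lookup(LOOKUP_CSV, "status")
events = [
    {"uri": "/home", "status": "200"},
    {"uri": "/old", "status": "404"},
    {"uri": "/teapot", "status": "418"},  # no matching row
]
for event in apply_lookup(events, table, "status", ["status_description"]):
    print(event)
```

Field values are kept as strings here because the lookup key must match the event value exactly; a status stored as the integer 404 would not match the string "404" in this sketch.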

Alert Configuration and Automation

Candidates must be adept at configuring alerts to monitor events and automate notifications. Practice should include setting thresholds, creating conditional alerts, and scheduling automated actions based on search results. Effective alert management ensures that important events are detected and communicated promptly. Mastery of this functionality demonstrates the ability to maintain operational oversight and respond to incidents, which aligns with SPLK-1001 exam objectives.
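The following Python sketch models one common alert setup: a scheduled search triggers when it returns more than a threshold number of results, and throttling suppresses repeat triggers for a set period. In Splunk Web these correspond roughly to an alert's trigger condition and throttle settings; the class ThresholdAlert and its parameters are hypothetical.

```python
from datetime import datetime, timedelta


class ThresholdAlert:
    """Fire when result_count > threshold, at most once per throttle window."""

    def __init__(self, threshold, throttle):
        self.threshold = threshold
        self.throttle = throttle
        self.last_fired = None

    def evaluate(self, result_count, run_time):
        if result_count <= self.threshold:
            return False  # trigger condition not met
        if self.last_fired is not None and run_time - self.last_fired < self.throttle:
            return False  # condition met, but still inside the throttle window
        self.last_fired = run_time
        return True


alert = ThresholdAlert(threshold=5, throttle=timedelta(minutes=30))
start = datetime(2024, 5, 10, 14, 0)
for i, count in enumerate([2, 8, 9, 7, 12]):  # one scheduled run every 10 minutes
    run_time = start + timedelta(minutes=10 * i)
    print(run_time.strftime("%H:%M"), count, alert.evaluate(count, run_time))
```

With these sample counts the alert fires at 14:10, stays quiet at 14:20 and 14:30 because of the 30-minute throttle, and fires again at 14:40.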

Combining Multiple Functionalities

The SPLK-1001 exam evaluates the ability to integrate multiple Splunk functionalities in cohesive workflows. Candidates should practice combining searches, statistical commands, field extractions, lookups, dashboards, and alerts to solve complex scenarios. Understanding how these elements interact allows candidates to approach multi-step tasks methodically, produce accurate results, and demonstrate applied problem-solving skills during the exam.

Simulating Real-World Scenarios

Preparation should include simulations of real-world monitoring and analysis challenges. Candidates should work with datasets representing operational environments, perform searches to identify anomalies, enrich data using lookups, generate dashboards, and configure alerts. Scenario-based practice reinforces the practical application of knowledge, improves decision-making under pressure, and builds familiarity with tasks similar to those presented in the exam.

Reviewing Weak Areas

Identifying and reviewing weak areas is crucial for exam readiness. Candidates should analyze performance in practice exercises and focus on concepts, commands, or workflows that pose challenges. Targeted practice strengthens understanding, reinforces skills, and ensures comprehensive coverage of all exam domains. Addressing weaknesses methodically contributes to higher accuracy and confidence during the actual exam.

Integrating Reporting and Visualization

Candidates should focus on integrating reporting and visualization skills to present data effectively. This includes creating reports from search results, summarizing key metrics, and designing dashboards that allow interactive exploration of data. Integration ensures that analytical outputs are not only accurate but also actionable and easy to interpret. This competency is central to the SPLK-1001 exam topics that cover creating reports and dashboards from search results.

Practicing Exam Simulations

Full-length practice simulations help candidates adapt to the exam environment. Simulating timed conditions, reviewing exam-like questions, and performing tasks under realistic constraints build pacing, reduce stress, and improve accuracy. Exam simulations also allow candidates to test their understanding of complex workflows, integrate multiple skills, and develop strategies for time management and prioritization.

Developing Critical Thinking Skills

Critical thinking is essential for interpreting data and solving analytical problems. Candidates should practice evaluating search results, identifying patterns, detecting anomalies, and drawing conclusions based on evidence. This approach supports informed decision-making and is directly relevant to practical exam scenarios where analytical judgment is required.

Continuous Hands-On Practice

Consistent hands-on practice solidifies command usage, search skills, and visualization abilities. Candidates should dedicate time to working with varied datasets, experimenting with commands, and creating reports and dashboards repeatedly. Continuous engagement reinforces understanding, improves speed, and develops confidence in performing tasks under exam conditions.

Applying Knowledge to Troubleshooting

Troubleshooting is a key professional skill that SPLK-1001 assesses only indirectly. Candidates should practice identifying errors in searches, correcting command syntax, resolving field extraction issues, and verifying report accuracy. Developing troubleshooting skills ensures candidates can handle unexpected problems efficiently, a competency that reflects real-world use of Splunk and exam scenarios.

Time Management Strategies

Efficient time management is crucial during both preparation and exam execution. Candidates should develop structured study schedules, allocate sufficient time to challenging areas, and practice under timed conditions. During the exam, distributing time according to question complexity and prioritizing tasks ensures completion without sacrificing accuracy. Time management skills are essential for demonstrating proficiency and maximizing performance.

Peer Collaboration and Knowledge Sharing

Engaging with peers provides opportunities for collaborative learning, discussion, and feedback. Candidates should participate in study groups, share problem-solving approaches, and review workflows collaboratively. Peer interaction enhances understanding, provides diverse perspectives, and fosters practical insights that reinforce preparation for the SPLK-1001 exam.

Professional Application of Skills

SPLK-1001 certified users apply their skills in professional settings to analyze operational data, monitor systems, generate reports, and create dashboards. Applying learned skills in real contexts reinforces exam knowledge, develops practical competence, and prepares candidates for professional responsibilities that require data-driven decision-making.

Integrating Exam Concepts in Daily Practice

Candidates should practice integrating exam concepts into daily workflows to reinforce understanding. This includes performing searches, managing datasets, creating dashboards, configuring alerts, and applying lookups consistently. Integration ensures skills remain active, develops efficiency, and demonstrates the relevance of certification knowledge to professional tasks.

Reinforcing Learning Through Repetition

Repeated practice with searches, commands, reports, and dashboards strengthens proficiency. Candidates should engage in iterative exercises to consolidate knowledge, improve accuracy, and build confidence. Repetition ensures familiarity with exam scenarios and enhances problem-solving skills in both testing and professional environments.

Scenario-Based Learning

Scenario-based exercises help candidates prepare for complex, multi-step tasks. Simulating operational challenges, integrating multiple functionalities, and analyzing results develops analytical thinking, workflow integration, and applied problem-solving skills. This method mirrors exam requirements and reinforces practical readiness.

Maintaining Focus and Consistency

Sustained focus and consistent study habits are essential for mastering SPLK-1001 domains. Candidates should dedicate regular time for review, practice, and hands-on exercises, ensuring that each exam area receives adequate attention. Consistency builds depth of knowledge, reduces gaps, and strengthens overall preparation.

Developing Workflow Efficiency

Practice in creating efficient workflows ensures candidates can combine searches, transforming commands, lookups, dashboards, and alerts effectively. Efficiency in executing workflows reflects both technical proficiency and the ability to manage tasks quickly, a skill relevant for exam scenarios and professional data analysis.

Enhancing Analytical Skills

Analytical skills are developed through repeated exercises in interpreting data, identifying trends, and generating insights. Candidates should focus on connecting search results with visualizations and reports, improving their ability to draw meaningful conclusions from data. Analytical competence is crucial for both SPLK-1001 success and practical application in professional environments.

Integrating Alerts and Reporting

Integrating alerts with reporting enhances the ability to monitor systems and respond to events efficiently. Candidates should practice configuring alerts based on search results, correlating them with reports and dashboards, and creating comprehensive monitoring solutions. This integration reflects real-world applications and reinforces competencies assessed in the exam.

Iterative Review and Skill Enhancement

Iterative review of commands, searches, dashboards, and alerts strengthens retention, improves accuracy, and builds confidence. Candidates should cycle through practice exercises multiple times, refining workflows and addressing mistakes. Iterative learning ensures mastery of SPLK-1001 domains and readiness for both exam and professional tasks.

Exam-Day Strategies

On exam day, candidates should approach questions methodically, manage time effectively, and rely on practiced skills. Reading questions carefully, applying problem-solving strategies, and using knowledge of integrated workflows ensures accurate and efficient completion. Confidence, focus, and familiarity with exam tasks maximize performance.

Connecting Exam Knowledge to Real-World Tasks

SPLK-1001 skills translate directly to professional responsibilities, such as operational monitoring, data analysis, and dashboard creation. Applying knowledge in practice solidifies understanding, enhances efficiency, and demonstrates competence. Certified users leverage these skills to optimize workflows, generate actionable insights, and contribute effectively to operational decision-making.

Continuous Practice Beyond Certification

Ongoing practice maintains proficiency and prepares candidates for future challenges. Regularly working with searches, commands, lookups, reports, dashboards, and alerts ensures that skills remain sharp and relevant. Continuous engagement supports long-term growth and effectiveness in professional environments.

Strategic Focus on Challenging Areas

Focusing on complex areas, such as advanced transformations, multi-step dashboards, and lookup correlations, ensures comprehensive readiness. Candidates should identify and dedicate extra practice to these domains, reinforcing understanding and boosting confidence. Targeted preparation improves accuracy and reduces exam-day uncertainty.

Professional Integration of Knowledge

Certified users integrate SPLK-1001 skills into daily tasks, creating dashboards, performing searches, configuring alerts, and analyzing datasets. Professional integration ensures that certification knowledge has practical value, supporting operational oversight, reporting, and decision-making.

Building Confidence Through Mastery

Confidence is reinforced through repeated practice, scenario simulations, and applied problem-solving. Mastery of commands, workflows, and integrated functions ensures candidates are prepared to tackle exam questions effectively and perform competently in professional contexts.

Preparing for Multi-Step Problem Solving

Candidates should practice combining multiple functionalities to address complex tasks. This includes integrating searches, transforming commands, lookups, dashboards, and alerts into cohesive workflows. Multi-step practice develops analytical thinking, workflow integration, and practical problem-solving skills.

Continuous Skill Reinforcement

Regular practice, review, and applied usage reinforce SPLK-1001 knowledge. Candidates maintain competence across domains, ensuring readiness for both exam scenarios and real-world data analysis responsibilities.

Workflow Optimization and Automation

Candidates should focus on optimizing workflows and automating repetitive tasks through searches, alerts, and scheduled reports. Workflow optimization enhances efficiency, reduces errors, and reflects the practical application of SPLK-1001 skills.

Analytical Decision-Making

Developing the ability to make informed decisions based on data analysis is central to SPLK-1001 preparation. Candidates should practice interpreting search results, evaluating trends, and drawing actionable conclusions. Analytical decision-making skills are essential for both exam success and professional effectiveness.

Leveraging Integrated Skills

Integrated use of search commands, transforming operations, dashboards, lookups, and alerts ensures candidates can address complex scenarios. Mastering these combined skills prepares candidates for exam questions that assess applied knowledge and workflow proficiency.

Scenario-Based Dashboard Development

Candidates should practice creating dashboards that reflect operational scenarios, integrating multiple datasets and visualizations. Scenario-based dashboard exercises develop practical skills, analytical thinking, and visualization proficiency, all of which are tested in the exam.

Exam Simulation and Review

Full exam simulations under timed conditions allow candidates to practice pacing, workflow integration, and problem-solving strategies. Reviewing performance post-simulation highlights areas for improvement, ensuring readiness for the SPLK-1001 assessment.

Confidence in Practical Application

Confidence develops through consistent practice, mastery of commands, and successful scenario simulations. Candidates learn to approach tasks methodically, interpret results accurately, and manage time effectively. Confidence enhances performance during both the exam and real-world data analysis activities.

Professional Relevance of Skills

The skills honed during SPLK-1001 preparation apply directly to operational monitoring, reporting, and analysis in professional environments. Certified users leverage their knowledge to improve data management, generate insights, and support informed decision-making in daily workflows.

Maintaining Competence Through Practice

Continuous engagement with searches, dashboards, alerts, and reports ensures that SPLK-1001 skills remain current. Regular practice, scenario simulations, and iterative review reinforce mastery and sustain readiness for professional applications.

Iterative Improvement and Mastery

Iterative practice strengthens familiarity with complex searches, transforming commands, lookups, dashboards, and alerts. Candidates improve speed, accuracy, and confidence, preparing effectively for exam challenges and professional scenarios.

Applying SPLK-1001 Skills in Complex Scenarios

Mastery of the SPLK-1001 exam domains requires the ability to apply learned skills in complex, multi-layered scenarios. Candidates should practice integrating searches, transforming commands, lookups, dashboards, and alerts into workflows that simulate operational challenges. Exercises should include analyzing event sequences, correlating multiple datasets, and generating actionable insights. This approach helps develop problem-solving skills, reinforces understanding of platform capabilities, and prepares candidates to handle the type of scenario-based questions that are typical in the exam.

Data Correlation and Event Analysis

A critical aspect of SPLK-1001 preparation involves analyzing relationships between different types of events. Candidates should practice identifying correlations between logs, metrics, and performance data to detect anomalies and trends. Techniques such as combining multiple searches, applying statistical transformations, and enriching datasets with lookups are essential. Mastery in correlating data ensures accurate analysis, facilitates comprehensive monitoring, and demonstrates proficiency expected in both the exam and professional use cases.
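As a sketch of cross-source correlation, the Python code below groups events by a shared session identifier and flags sessions that appear in one source but not the other. It plays roughly the role of a search such as (sourcetype=access OR sourcetype=app) | stats values(sourcetype) AS sourcetypes, count BY session_id | where mvcount(sourcetypes) < 2; the sourcetype names, the session_id field, and the helper functions are all hypothetical.

```python
from collections import defaultdict


def correlate(events, key):
    """Group events by a shared key, recording which sourcetypes each key appeared in."""
    groups = defaultdict(lambda: {"sourcetypes": set(), "count": 0})
    for event in events:
        if key in event:
            group = groups[event[key]]
            group["sourcetypes"].add(event["sourcetype"])
            group["count"] += 1
    return dict(groups)


def incomplete_sessions(groups, required):
    """Keys that did not produce events in every required sourcetype."""
    return sorted(k for k, g in groups.items() if not required <= g["sourcetypes"])


events = [
    {"sourcetype": "access", "session_id": "s1"},
    {"sourcetype": "app", "session_id": "s1"},
    {"sourcetype": "access", "session_id": "s2"},  # request with no app log
    {"sourcetype": "access", "session_id": "s3"},
    {"sourcetype": "app", "session_id": "s3"},
]
groups = correlate(events, "session_id")
print(incomplete_sessions(groups, {"access", "app"}))  # ['s2']
```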

Advanced Field Extraction Techniques

Field extraction is a foundational skill that becomes more advanced when dealing with complex or nested data. Candidates should practice extracting fields from structured, semi-structured, and unstructured data, applying regular expressions and search commands effectively. Correct field extraction improves search precision, enables accurate reporting, and supports dashboard visualizations. Developing these skills ensures candidates can manage varied data formats and respond to the demands of intricate SPLK-1001 exam tasks.

Efficient Use of Search Commands

Efficient searches are central to performance and accuracy in the SPLK-1001 exam. Candidates should practice constructing concise, optimized queries that retrieve relevant data quickly. This includes combining search modifiers, using Boolean operators, applying time filters, and limiting results when appropriate. Efficient use of commands reduces search latency, allows deeper analysis, and ensures timely generation of results, which is critical for both exam performance and professional data monitoring tasks.
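One detail that often trips people up is how Boolean operators combine in the base search: terms separated by spaces are implicitly ANDed, NOT applies to the term that follows it, and Splunk evaluates OR before AND (the reverse of many programming languages). The simplified Python sketch below encodes those rules for flat queries only; it ignores parentheses, quoted phrases, wildcards, and field=value terms, and its function names are hypothetical.

```python
import re


def parse(query):
    """Turn a flat query into AND-ed groups of OR-ed (negated, term) literals."""
    groups = []
    negate_next = False
    join_next = False
    for token in query.split():
        if token == "OR":        # operators are recognized only in uppercase
            join_next = True
        elif token == "AND":     # explicit AND is the same as the implicit one
            continue
        elif token == "NOT":
            negate_next = True
        else:
            literal = (negate_next, token.lower())  # terms match case-insensitively
            if join_next and groups:
                groups[-1].append(literal)  # OR binds to the previous term
            else:
                groups.append([literal])
            negate_next = join_next = False
    return groups


def matches(raw, groups):
    """True if every group has at least one satisfied literal."""
    words = set(re.findall(r"\w+", raw.lower()))
    return all(
        any((term in words) != negated for negated, term in group)
        for group in groups
    )


query = parse("error OR failed NOT debug")  # (error OR failed) AND NOT debug
print(matches("login failed for bob", query))  # True
print(matches("debug: login failed", query))   # False
```

Because lowercase operators are not recognized, parse("error or failed") produces three AND-ed terms, including the literal word "or", and would fail to match "login failed for bob".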

Transforming Data for Insight

Transforming commands convert raw event data into summarized and actionable information. Candidates should practice commands that calculate statistics, group events, and manipulate results for deeper understanding. Exercises should include combining transforming operations with filtering and aggregation to highlight patterns and anomalies. Competence in transforming data ensures that candidates can generate accurate insights and develop reports that clearly communicate findings, a skill directly evaluated in the SPLK-1001 exam.
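A transforming command such as | top limit=3 uri can be approximated in Python as follows. This sketch counts each value of a field, keeps the most frequent ones, and reports a percentage; it assumes the percentage is taken over events that have the field, and the function name top is just a label for the sketch. The default limit of 10 mirrors the default of Splunk's top command.

```python
from collections import Counter


def top(events, field, limit=10):
    """Approximate `top limit=N field`: most frequent values with count and percent."""
    values = [e[field] for e in events if field in e]
    total = len(values)
    return [
        {field: value, "count": n, "percent": round(100 * n / total, 2)}
        for value, n in Counter(values).most_common(limit)
    ]


uris = ["/home"] * 4 + ["/cart"] * 3 + ["/login"] * 2 + ["/about"]
events = [{"uri": u} for u in uris] + [{"status": "200"}]  # last event has no uri
for row in top(events, "uri", limit=3):
    print(row)
```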

Comprehensive Dashboard Design

Designing effective dashboards involves more than visual appeal; it requires organizing panels to communicate insights efficiently. Candidates should practice selecting chart types, applying appropriate visualizations, arranging panels logically, and integrating filters for dynamic interaction. Well-designed dashboards allow users to explore data, monitor performance, and identify trends quickly. Mastery of dashboard design is crucial for demonstrating applied knowledge in the exam and for practical operational analysis.

Integrating Lookups for Context

Lookups provide context by enriching event data with external information. Candidates should practice creating and managing lookup tables, configuring automatic lookups, and applying multiple lookups to correlate data. Integrating lookups into searches and dashboards enhances analysis accuracy, enables complex event correlation, and supports reporting. Proficiency in lookups ensures candidates can handle advanced exam scenarios requiring enriched datasets.

Scheduled Reports and Automation

Automation is a key competency in SPLK-1001. Candidates should practice scheduling reports and configuring automated alerts to ensure continuous monitoring. This includes defining thresholds, setting conditions for alerts, and verifying the accuracy of scheduled outputs. Automating repetitive tasks increases efficiency, supports timely decision-making, and reflects practical skills assessed in the exam.

Scenario-Based Problem Solving

Scenario-based exercises prepare candidates for complex exam questions. Candidates should simulate operational challenges, integrate multiple functionalities, and create cohesive workflows that address specific monitoring or analytical requirements. Practicing scenario-based problem solving strengthens critical thinking, workflow integration, and decision-making capabilities, ensuring readiness for both the exam and professional applications.

Optimizing Workflow Efficiency

Efficient workflow design enhances the speed and accuracy of data analysis. Candidates should practice structuring searches, combining transforming commands, and integrating lookups and dashboards for streamlined results. Optimized workflows reduce redundancy, facilitate faster insights, and reflect the skill level expected in SPLK-1001 tasks.

Iterative Practice and Review

Consistent, iterative practice is essential for mastering SPLK-1001 domains. Candidates should repeatedly perform searches, create reports, design dashboards, and configure alerts to reinforce knowledge. Iterative review identifies mistakes, consolidates learning, and improves problem-solving accuracy, ensuring preparedness for multi-step exam scenarios.

Time Management for Exam Success

Time management is a critical factor in exam performance. Candidates should allocate study time to cover all domains thoroughly, prioritize challenging areas, and practice completing exercises under time constraints. During the exam, managing time efficiently ensures candidates can address all questions accurately and methodically. Time management skills are vital for maintaining focus and avoiding errors under pressure.

Analytical Thinking and Decision-Making

Developing analytical thinking skills enables candidates to interpret data, detect anomalies, and draw actionable conclusions. Candidates should practice evaluating search results, identifying trends, and correlating multiple datasets. Strong analytical thinking supports evidence-based decision-making and is central to both the exam and professional data analysis.

Advanced Visualization Techniques

Beyond basic charts, advanced visualization techniques allow candidates to highlight key patterns and trends effectively. Practice should include combining multiple visualization types, applying dynamic filters, and creating interactive panels. Mastering advanced visualizations improves communication of insights and prepares candidates for the visualization-related components of the SPLK-1001 exam.

Peer Collaboration and Knowledge Sharing

Collaboration with peers enhances understanding and exposes candidates to diverse problem-solving approaches. Study groups and discussion forums allow knowledge exchange, clarification of complex concepts, and collaborative scenario-based exercises. Peer interaction reinforces learning, provides new perspectives, and builds confidence in handling challenging tasks.

Applying Skills to Operational Monitoring

SPLK-1001 skills are directly applicable to operational monitoring tasks. Candidates should practice using searches, dashboards, lookups, and alerts to monitor system performance, detect anomalies, and respond to incidents. Applying skills in simulated operational contexts reinforces practical competence and demonstrates readiness for professional responsibilities.

Troubleshooting and Problem Resolution

Troubleshooting is a vital skill for SPLK-1001 candidates. Practice should include identifying errors in search syntax, field extractions, dashboards, and alerts. Candidates should develop strategies to diagnose problems efficiently, correct mistakes, and verify accurate results. Mastery of troubleshooting ensures that candidates can handle unexpected challenges both in the exam and in real-world environments.

Continuous Review and Skill Reinforcement

Regular review and practice reinforce proficiency across all exam domains. Candidates should revisit key concepts, repeat critical workflows, and analyze previous mistakes to improve understanding. Continuous reinforcement keeps skills sharp, reduces errors, and builds confidence for complex, integrated tasks.

Workflow Integration for Multi-Step Tasks

Multi-step workflow exercises simulate the integrated nature of real-world data analysis. Candidates should practice combining searches, transforming commands, dashboards, lookups, and alerts to produce cohesive outputs. Workflow integration strengthens analytical skills, enhances problem-solving, and ensures readiness for practical exam scenarios.

Professional Application of Exam Knowledge

SPLK-1001 knowledge is applicable in professional environments to analyze logs, monitor systems, generate reports, and create dashboards. Candidates should practice translating exam skills into operational tasks, reinforcing retention and demonstrating practical value. Professional application ensures that certification knowledge supports decision-making and operational efficiency.

Developing Efficiency and Accuracy

Efficiency and accuracy are crucial in both the exam and professional work. Candidates should practice performing searches, creating dashboards, and configuring alerts quickly while maintaining correctness. Efficient execution reflects competence in handling complex data tasks under time constraints.

Enhancing Problem-Solving Skills

Problem-solving exercises improve the ability to address complex scenarios, integrate multiple functionalities, and interpret results effectively. Candidates should simulate operational challenges, identify solutions, and implement workflows that resolve issues. Strengthened problem-solving skills ensure readiness for multi-step exam questions.

Mastering Command Syntax

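Proficiency in command syntax ensures precise search execution. Candidates should practice applying search operators, modifiers, and functions correctly; for example, the Boolean operators AND, OR, and NOT must be written in uppercase, because lowercase and, or, and not are treated as ordinary search terms. Mastery of syntax reduces errors, improves result accuracy, and supports advanced analysis during the exam.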

Data Enrichment Techniques

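Data enrichment through lookups, calculated fields, and event tagging adds context that raw events lack. Lookups add fields from an external table, such as a CSV file, by matching a field in the events; calculated fields derive new values from existing fields with an eval expression; tags attach meaningful labels to field values. Candidates should practice integrating these techniques into searches and dashboards to improve accuracy and generate actionable insights. Enrichment skills are critical for the exam and for practical monitoring tasks.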
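A minimal Python sketch of two enrichment techniques, assuming events are plain dictionaries: a lookup that adds fields from a table keyed on a shared field (similar in spirit to a CSV lookup matched on `host`), and a calculated field derived from an existing value. The table contents and the functions `lookup` and `add_calculated_field` are hypothetical; in Splunk the equivalents are the lookup command (or an automatic lookup) and an eval expression saved as a calculated field, not Python code.

```python
# Hypothetical lookup table keyed on "host", standing in for a CSV lookup file.
HOST_OWNERS = {
    "web01": {"owner": "payments", "datacenter": "east"},
    "web02": {"owner": "search", "datacenter": "west"},
}

def lookup(events, table, match_field):
    """Copy each event and add the table's fields when its match_field value has a row.

    Events without a matching row are returned unchanged (no output fields added).
    """
    enriched = []
    for event in events:
        extra = table.get(event.get(match_field), {})
        enriched.append({**event, **extra})
    return enriched

def add_calculated_field(events):
    """Derive a new field from an existing one, similar to an eval-based calculated field."""
    return [{**e, "is_error": e.get("status", 0) >= 500} for e in events]

events = [
    {"host": "web01", "status": 500},
    {"host": "web09", "status": 200},  # no row for web09 in the lookup table
]
enriched = add_calculated_field(lookup(events, HOST_OWNERS, "host"))
print(enriched[0])  # {'host': 'web01', 'status': 500, 'owner': 'payments', 'datacenter': 'east', 'is_error': True}
print(enriched[1])  # {'host': 'web09', 'status': 200, 'is_error': False}
```

The unmatched event keeps its original fields, which is why it is worth checking lookup coverage before relying on enriched fields in a dashboard.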

Optimizing Dashboards for Insight

Candidates should focus on designing dashboards that highlight key metrics and trends effectively. Optimized dashboards improve interpretability, allow dynamic exploration, and support actionable insights. Mastery of dashboard optimization reflects both exam competence and professional analytical skills.

Integrated Alerting and Reporting

Integrating alerting with reporting ensures comprehensive monitoring and timely response. Candidates should practice creating alerts based on search results, linking them with dashboards, and generating reports that reflect event trends. This integration demonstrates the ability to manage operational oversight efficiently.
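The alerting logic described above can be sketched in Python as a hypothetical model, not Splunk's implementation: a saved search runs, and the alert triggers when the number of results exceeds a threshold, which is one of the common trigger conditions for Splunk alerts. The `Alert` class, its fields, and the sample data are assumptions for illustration; a real alert runs on a schedule or in real time and fires an action such as sending an email.

```python
from dataclasses import dataclass, field
from typing import Callable, List

@dataclass
class Alert:
    """Hypothetical alert model: trigger when a search returns more than `threshold` results."""
    name: str
    run_search: Callable[[], List[dict]]  # stands in for the saved search
    threshold: int
    fired: List[str] = field(default_factory=list)  # record of triggered actions

    def evaluate(self) -> bool:
        results = self.run_search()
        if len(results) > self.threshold:
            # A real alert would run an action here (for example, send an email);
            # this sketch only records a message.
            self.fired.append(f"{self.name}: {len(results)} results")
            return True
        return False

failed_logins = [{"user": "alice"}, {"user": "bob"}, {"user": "alice"}]
alert = Alert("Excessive failed logins", lambda: failed_logins, threshold=2)
print(alert.evaluate())  # True
print(alert.fired)       # ['Excessive failed logins: 3 results']
```

Keeping the search separate from the trigger condition reflects the idea that the same search logic can drive a report, a dashboard panel, or an alert.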

Reviewing and Refining Exam Skills

Reviewing and refining skills through iterative exercises strengthens retention and accuracy. Candidates should revisit searches, dashboards, alerts, and lookups to ensure comprehensive mastery. Iterative refinement prepares candidates for the variety of tasks presented in the SPLK-1001 exam.

Conclusion

Preparing for the SPLK-1001 certification requires a comprehensive understanding of the Splunk platform, including searching, field extraction, transforming commands, reporting, dashboards, lookups, and alerting. Mastery of these core functionalities enables candidates to analyze complex datasets, detect anomalies, and generate actionable insights efficiently. Hands-on practice with realistic datasets and scenario-based exercises reinforces practical application, ensuring that candidates can integrate multiple functionalities into cohesive workflows.

Time management, iterative review, and continuous skill refinement are essential for exam readiness. By simulating exam conditions, focusing on weak areas, and practicing multi-step workflows, candidates develop confidence, accuracy, and efficiency. Analytical thinking, problem-solving, and workflow optimization are critical not only for the exam but also for professional applications, where certified users leverage Splunk to monitor systems, generate insights, and support operational decision-making.

The SPLK-1001 certification validates foundational proficiency in Splunk, demonstrating the ability to handle real-world data challenges and apply knowledge effectively. Continuous learning, scenario simulation, and consistent practice ensure long-term competence, preparing candidates to excel both in the certification exam and in professional environments that require operational intelligence and data-driven decision-making.



