Your Guide to Successfully Clearing MuleSoft MCPA Level 1 Exam

The MuleSoft Certified Platform Architect Level 1 exam evaluates the knowledge and skills required to design, implement, and manage integration solutions using the Anypoint Platform. It is a multiple-choice exam of 60 questions, and candidates must score 70 percent or higher to pass. The exam serves as a benchmark for professionals who want to validate their ability to create efficient, scalable integration strategies and to work confidently with enterprise applications and complex system architectures. The exam fee reflects the extensive preparation required and the value of certification in demonstrating professional competence.
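
As a quick check on what the pass mark means in practice, the arithmetic can be sketched in Python. This assumes every question carries equal weight and that there is no partial credit, which this guide does not state; the function name is hypothetical.

```python
def min_correct_answers(total_questions: int, pass_percent: int) -> int:
    """Smallest number of correct answers that reaches the pass mark.

    Assumes equal weighting per question (an assumption, not a stated rule).
    Integer ceiling division avoids floating-point rounding issues.
    """
    return (total_questions * pass_percent + 99) // 100


required = min_correct_answers(60, 70)
print(required)       # 42 correct answers needed
print(60 - required)  # 18 questions can be missed
```

Under those assumptions, a candidate can miss at most 18 of the 60 questions.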

Importance of Structured Exam Preparation

Success in the MCPA Level 1 exam depends heavily on adopting a structured approach to preparation. This involves combining theoretical knowledge with practical experience, and organizing study resources in a way that allows for systematic coverage of all syllabus topics. A clear preparation strategy ensures that candidates understand platform architecture principles, runtime environments, integration patterns, and API-led connectivity methods. Structured preparation also helps to manage study time efficiently and prevents gaps in critical knowledge areas.

Familiarization with Official Resources

Understanding the scope of the exam starts with consulting official resources. Official documentation provides clarity on the exam objectives, essential topics, and best practices for platform architecture. Reviewing these materials at the beginning of the preparation process allows candidates to identify which areas require deeper study and ensures alignment with the evaluation criteria. Candidates should focus on building a strong foundation in system design principles, API management, and integration strategies using Anypoint Platform tools.

Breaking Down the Syllabus

The MCPA Level 1 syllabus covers a wide range of topics including integration strategy, system architecture, API design, application lifecycle management, and best practices for building reusable integration assets. Candidates should break down the syllabus into manageable segments and allocate sufficient time to each topic. Studying incrementally allows for better retention and comprehension. A deep understanding of integration paradigms, runtime plane design, and platform governance is critical, as these are core areas evaluated in the exam.

Creating an Effective Study Routine

A disciplined study schedule is crucial to maintaining consistent progress. Candidates should identify the hours during which they are most productive and dedicate these periods to focused study sessions. Establishing a daily or weekly routine ensures that all syllabus topics are addressed while balancing review sessions and practical exercises. Regularly revisiting topics reinforces understanding and prepares candidates to handle complex questions that require analytical thinking and problem-solving skills.

Emphasizing Hands-On Practice

Practical experience is essential for mastering the concepts required in the MCPA Level 1 exam. Working with the Anypoint Platform through exercises in integration design, solution deployment, and runtime monitoring allows candidates to apply theoretical knowledge in real-world scenarios. Hands-on practice helps to solidify understanding of API-led connectivity, messaging patterns, error handling, and performance optimization. Developing sample integration solutions provides insight into how architectural decisions impact overall system performance and scalability.

Using Study Notes and Documentation

Maintaining detailed notes and documentation during preparation helps in quick revision and reinforces comprehension. Writing down key points, diagrams, and workflow structures enhances memory retention and provides a reference for reviewing difficult concepts. Organizing study notes logically according to syllabus sections allows for efficient revision before the exam and serves as a resource for practical application exercises.

Incorporating Practice Tests

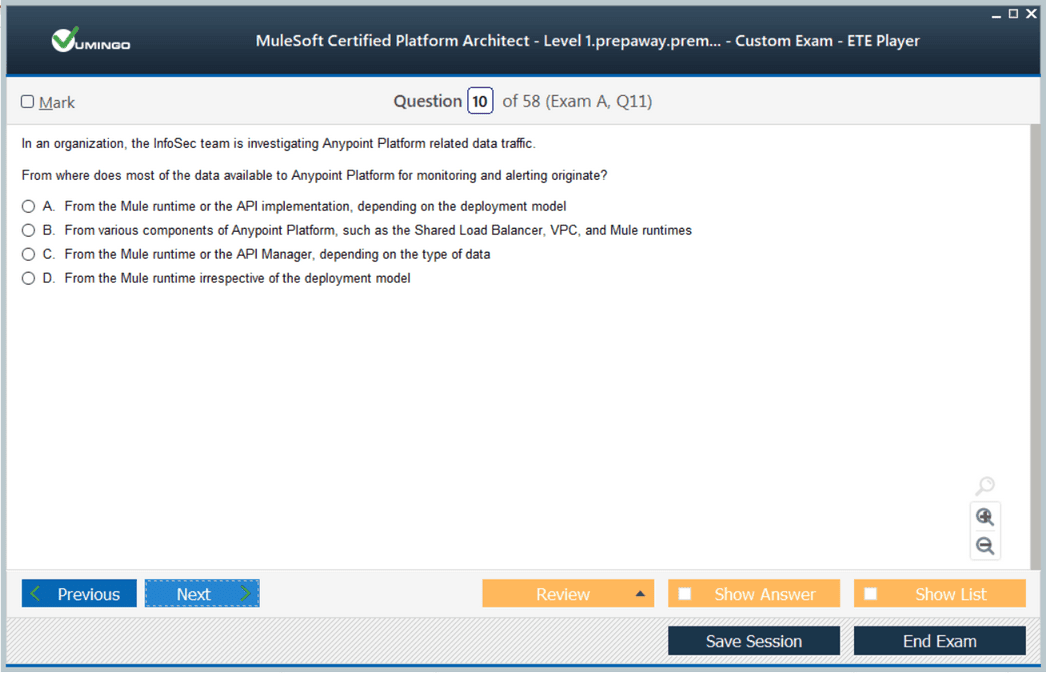

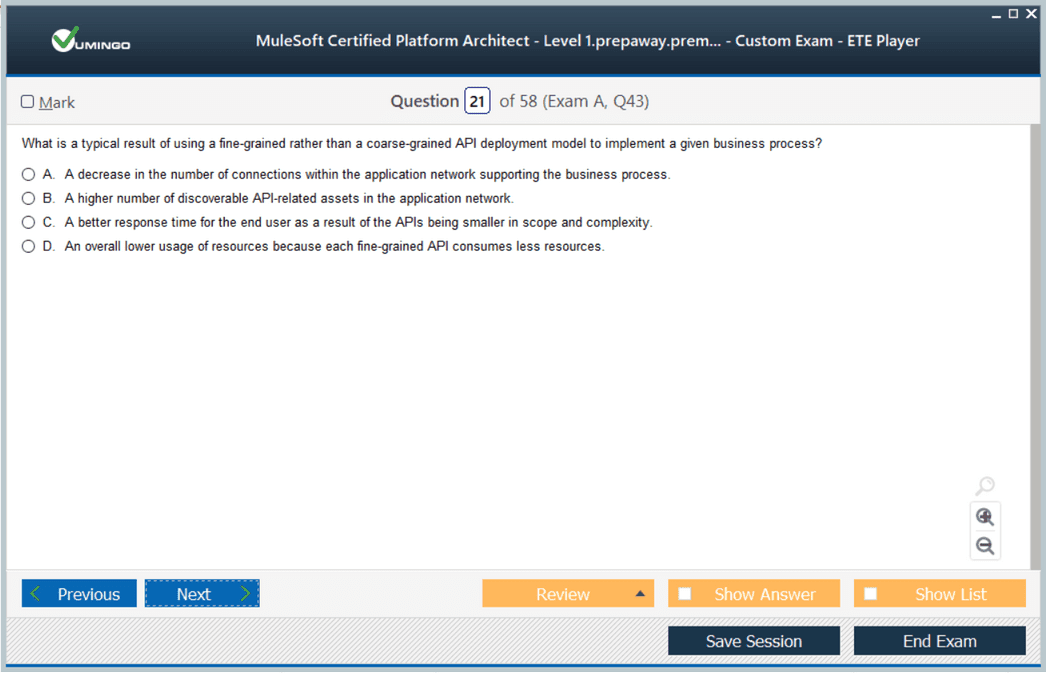

Practice tests play a critical role in exam readiness. They provide an opportunity to simulate the exam environment, practice time management, and assess the application of knowledge under test conditions. Candidates should use practice tests after completing the core syllabus to identify weak areas, refine problem-solving techniques, and build confidence. Regular engagement with mock exams helps in becoming familiar with question formats, understanding the level of detail required, and gauging readiness for the actual assessment.

Timing Your Practice and Revision

Effective preparation requires balancing study, practice, and revision. Allocating dedicated periods for reviewing previously covered topics and attempting timed exercises improves retention and builds exam stamina. Gradually increasing the complexity of practice scenarios ensures that candidates are prepared for diverse question types. Iterative learning through repeated cycles of study and assessment reinforces understanding of architectural concepts and integration strategies.

Leveraging Real-World Scenarios

Applying knowledge in real-world contexts enhances the depth of learning. Candidates should work on simulated integration projects, addressing challenges such as data mapping, error handling, system scaling, and security management. Engaging with practical scenarios helps in understanding the nuances of platform architecture, runtime configuration, and API governance, which are crucial components of the MCPA Level 1 exam.

Collaborative Learning and Peer Interaction

Collaboration with peers and participation in discussion groups can provide additional insights and learning techniques. Exchanging ideas about integration solutions, architectural decisions, and platform management strategies enhances understanding and exposes candidates to multiple problem-solving approaches. Collaborative learning fosters communication skills that are valuable in professional roles and can aid in tackling scenario-based questions in the exam.

Avoiding Overreliance on Memorization

Relying solely on memorized answers can be detrimental to genuine understanding. The MCPA Level 1 exam assesses the ability to apply architectural knowledge to practical scenarios. Candidates should focus on comprehending the principles of platform design, API lifecycle management, and integration patterns rather than memorizing questions and answers. Deep learning enables flexible application of concepts, which is essential for addressing complex or unexpected questions.

Understanding Time Management During the Exam

Managing time effectively during preparation and on exam day is essential for success. Candidates should complete practice tests within the designated timeframe to develop pacing strategies. Efficient time management allows for thoughtful analysis of each question, reducing errors and improving accuracy. Practicing under timed conditions also helps candidates become comfortable with the pressure of the exam environment.
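
A pacing strategy can be worked out with simple arithmetic. The sketch below is illustrative only: this guide does not state the exam duration, so the 120 minutes and the 15-minute review buffer are assumed values, and the function names are hypothetical. Check the official exam listing for the real time limit.

```python
def seconds_per_question(total_minutes: int, total_questions: int,
                         review_minutes: int) -> float:
    """Time budget per question after reserving a final review buffer."""
    answering_minutes = total_minutes - review_minutes
    if answering_minutes <= 0:
        raise ValueError("review buffer leaves no time to answer questions")
    return answering_minutes * 60 / total_questions


def checkpoints(total_questions: int, per_question_seconds: float, every: int):
    """(question number, elapsed minutes) targets to glance at during a test."""
    return [(q, q * per_question_seconds / 60)
            for q in range(every, total_questions + 1, every)]


# Assumed values: 120-minute exam, 60 questions, 15 minutes kept for review.
budget = seconds_per_question(120, 60, 15)
print(budget)                       # 105.0 seconds per question
print(checkpoints(60, budget, 15))  # [(15, 26.25), (30, 52.5), (45, 78.75), (60, 105.0)]
```

Using the same checkpoints in every timed practice test makes it easier to notice when a single question is consuming too much time.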

Continuous Improvement Through Feedback

Using feedback from practice tests to guide study efforts ensures continuous improvement. Candidates should focus on areas where performance is weak, revisiting relevant topics and refining understanding. This iterative approach strengthens knowledge retention, improves problem-solving skills, and enhances confidence. Regular evaluation of progress provides a clear indication of readiness for the actual exam.
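
One way to turn practice-test feedback into a study list is to tally accuracy per topic and flag anything below a chosen threshold. The sketch below is a minimal example; the topic names, the sample results, and the 0.7 threshold (chosen to mirror the pass mark) are illustrative assumptions.

```python
from collections import defaultdict


def accuracy_by_topic(results):
    """results: iterable of (topic, is_correct) pairs from a practice test."""
    totals = defaultdict(lambda: [0, 0])  # topic -> [correct, attempted]
    for topic, is_correct in results:
        totals[topic][1] += 1
        if is_correct:
            totals[topic][0] += 1
    return {topic: correct / attempted
            for topic, (correct, attempted) in totals.items()}


def weak_topics(results, threshold=0.7):
    """Topics scoring below the threshold, weakest first."""
    scores = accuracy_by_topic(results)
    below = [topic for topic, score in scores.items() if score < threshold]
    return sorted(below, key=lambda topic: scores[topic])


# Hypothetical results from one mock exam.
sample = [
    ("API design", True), ("API design", False),
    ("Governance", True), ("Governance", True),
    ("Runtime plane", False), ("Runtime plane", False), ("Runtime plane", True),
]
print(weak_topics(sample))  # ['Runtime plane', 'API design']
```

Rerunning the tally after each mock exam shows whether the revisited topics are actually improving.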

Integration of Theory and Practice

Combining theoretical understanding with hands-on practice is key to mastering the MCPA Level 1 exam. Candidates should not only study architectural concepts but also implement them in practical scenarios using the Anypoint Platform. This dual approach helps in understanding the real-world implications of design decisions, workflow management, system performance, and scalability, aligning preparation with professional responsibilities.

Long-Term Career Benefits of MCPA Level 1 Certification

Obtaining the MuleSoft Certified Platform Architect Level 1 certification validates a candidate’s proficiency in designing and managing enterprise integration solutions. Certified individuals demonstrate expertise in platform architecture, API governance, and integration strategy, which can enhance credibility in professional settings. The certification opens doors to advanced roles in integration and architecture, provides opportunities for career growth, and can lead to improved job prospects and compensation.

Professional Recognition and Advancement

The MCPA Level 1 certification distinguishes professionals in a competitive field. Employers recognize the certification as evidence of technical competence and strategic understanding in integration architecture. Certified architects are often sought after for critical roles in system design, project leadership, and enterprise integration, enabling them to contribute effectively to organizational objectives.

Maintaining Skills and Knowledge

Achieving certification is the beginning of continuous professional development. Certified individuals are encouraged to stay updated with evolving trends in enterprise integration, emerging technologies, and platform updates. Ongoing learning ensures that professionals maintain relevance, continue to deliver value, and remain equipped to handle increasingly complex integration challenges.

The MuleSoft Certified Platform Architect Level 1 certification is an essential milestone for professionals seeking to establish credibility and expertise in integration architecture. Successful preparation requires a combination of structured study, hands-on practice, iterative revision, and exposure to real-world scenarios. Mastery of the Anypoint Platform, integration patterns, and architectural best practices is critical for exam success. Achieving this certification not only validates technical skills but also provides significant advantages in career advancement, professional recognition, and long-term growth in the field of enterprise integration.

Deep Dive into MCPA Level 1 Exam Preparation

The MuleSoft Certified Platform Architect Level 1 exam is designed to evaluate a candidate’s ability to understand and manage the Anypoint Platform’s architecture, implement integration solutions, and optimize system performance. Preparation for this exam requires not only theoretical knowledge but also practical experience with integration frameworks, API-led design, and enterprise architecture concepts. Understanding the exam’s structure and requirements forms the foundation for developing a successful study plan.

Comprehending Exam Objectives

To start preparation effectively, candidates must identify the core objectives of the MCPA Level 1 exam. These objectives encompass understanding how to design integration solutions, apply architectural patterns, manage APIs, and address performance, reliability, and security challenges within an enterprise ecosystem. Familiarity with these objectives ensures that candidates can prioritize topics based on their significance and complexity, creating a targeted approach to study.

Organizing Study Materials

A comprehensive preparation plan involves gathering relevant study materials, including official platform documentation, architecture guides, and conceptual overviews. Organizing these resources allows candidates to create a structured approach, covering every topic thoroughly. Segmentation of materials by architectural principles, runtime design, integration patterns, and operational strategies ensures a balanced preparation process that reinforces both theory and practical application.

Strategic Study Plan

Developing a strategic study plan is crucial for maintaining consistent progress. Candidates should allocate specific time blocks for reviewing key concepts, practicing implementation tasks, and revising critical architectural patterns. By breaking the syllabus into focused segments, aspirants can study each area systematically, ensuring no topic is overlooked. This method also enables better retention of information and builds a strong understanding of complex integration scenarios.

Emphasis on Hands-On Experience

Hands-on work is what turns the exam's concepts into usable skills. Working on the Anypoint Platform through exercises that simulate real-world integration challenges helps candidates develop confidence in designing solutions, deploying applications, and managing system performance. Hands-on practice enhances comprehension of runtime management, API orchestration, error handling, and security implementations, which are central to exam success.

Building Conceptual Understanding

A deep conceptual understanding is necessary for applying architectural knowledge effectively. Candidates should focus on API-led connectivity, integration patterns, system scalability, and governance frameworks. Understanding the rationale behind architectural decisions allows candidates to adapt solutions to complex scenarios and answer scenario-based questions accurately during the exam.

Creating Notes and Summaries

Maintaining detailed notes and summaries during preparation aids in rapid revision and strengthens retention. Documenting key architectural patterns, integration techniques, and platform-specific practices provides a reference for review and practical implementation. Organized notes also serve as a quick guide to recall essential concepts before the exam, improving confidence and reducing the risk of overlooking important details.

Time Management and Practice

Efficient time management during preparation and examination is critical for success. Candidates should practice completing questions under timed conditions, developing strategies to allocate appropriate time for each topic. Practicing with mock exams simulates the real exam environment, helping candidates manage pacing, understand question complexity, and maintain composure during the actual assessment.

Iterative Learning Approach

An iterative learning approach involves repeated cycles of studying, practicing, and revising. Candidates should revisit topics multiple times, gradually increasing the depth of understanding with each iteration. This approach ensures mastery over complex architectural concepts, runtime behaviors, and integration strategies, making candidates better equipped to tackle difficult questions and practical scenarios.

Leveraging Real-World Integration Scenarios

Applying theoretical knowledge to real-world scenarios enhances comprehension and practical skills. Candidates should attempt to design integration solutions that address performance bottlenecks, scalability challenges, error handling, and security requirements. This practical exposure aligns with the exam’s emphasis on solution design and implementation, reinforcing learning outcomes and providing confidence in applying concepts to enterprise environments.

Study Groups and Peer Discussions

Participating in study groups or peer discussions can provide diverse perspectives on integration solutions and architectural decisions. Candidates can exchange ideas, clarify doubts, and learn alternative approaches to solving complex problems. Collaborative learning encourages critical thinking and exposes candidates to varied problem-solving techniques, which can be valuable for scenario-based exam questions.

Avoiding Memorization Pitfalls

Success in the MCPA Level 1 exam relies on understanding and application rather than rote memorization. Candidates should focus on grasping architectural principles, runtime configurations, and integration patterns, enabling them to adapt their knowledge to different scenarios. Deep understanding ensures the ability to answer questions that test analytical thinking and practical application, which are essential components of the exam.

Practice Tests and Self-Assessment

Regular practice tests are vital for gauging readiness and improving performance. They provide insights into knowledge gaps, time management skills, and question-solving strategies. Candidates should take multiple practice tests after completing syllabus coverage, analyzing results to identify weak areas and revisiting corresponding topics for improvement. This process builds confidence and reinforces exam preparedness.

Enhancing Exam Confidence

Repeated exposure to practice questions, simulated exam conditions, and real-world problem-solving enhances candidates’ confidence. Understanding the exam format, time constraints, and question types reduces anxiety and improves accuracy during the actual assessment. Confidence gained through continuous practice allows candidates to approach the exam with a calm and focused mindset, increasing the likelihood of success.

Integrating Theory with Practice

A balanced integration of theory and practice strengthens exam readiness. Candidates should study architectural principles alongside practical exercises, designing, deploying, and monitoring integration solutions on the Anypoint Platform. This approach ensures candidates are prepared to answer both conceptual and scenario-based questions, demonstrating comprehensive mastery of the platform and integration methodologies.

Professional Advantages of Certification

Achieving the MCPA Level 1 certification validates expertise in integration architecture and platform management. Certified professionals gain recognition for their ability to design scalable, reliable, and secure integration solutions. Certification enhances career opportunities, demonstrating proficiency to employers and peers, and may provide a competitive edge in job roles that require advanced integration skills.

Long-Term Career Impact

MCPA Level 1 certification supports long-term career growth by reinforcing knowledge in integration architecture, API management, and platform governance. Certified professionals are better positioned for leadership roles in integration projects, strategic planning, and system architecture. Continuous application of certified skills ensures ongoing relevance and professional development, enabling growth within enterprise IT environments.

Continuous Knowledge Development

Post-certification, maintaining expertise requires staying updated with emerging trends, new features, and evolving integration technologies. Professionals should engage in ongoing learning and practical application to ensure sustained competence. Remaining informed about best practices in integration design, API governance, and system performance helps certified individuals maintain their advantage in professional roles.

Building Strategic Thinking

The MCPA Level 1 exam emphasizes strategic thinking in platform architecture and integration solution design. Candidates should focus on understanding system dependencies, scalability concerns, error-handling strategies, and security implications. Developing the ability to plan and execute integration solutions strategically ensures readiness for scenario-based questions and prepares candidates for practical challenges in enterprise environments.

The MuleSoft Certified Platform Architect Level 1 exam is a comprehensive assessment of a professional’s capability to design and manage integration solutions using the Anypoint Platform. Effective preparation involves understanding exam objectives, studying systematically, applying practical exercises, practicing under timed conditions, and integrating knowledge with real-world scenarios. Achieving this certification validates both technical and strategic skills, opening pathways for career advancement, enhanced professional credibility, and long-term growth in integration architecture.

Understanding the Core Concepts for MCPA Level 1 Exam

The MuleSoft Certified Platform Architect Level 1 exam evaluates a candidate’s ability to design and manage integrations using the Anypoint Platform. A foundational understanding of platform architecture, API-led connectivity, and integration patterns is essential. Candidates should focus on how different components of the Anypoint Platform interact, including Design Center, Runtime Manager, and Anypoint Monitoring. Mastery of these concepts ensures candidates can effectively plan and implement integration strategies while maintaining operational efficiency.

Effective Study Planning

Preparing for the MCPA Level 1 exam requires a structured approach. Candidates should begin by analyzing the syllabus and categorizing topics into core areas such as architecture design, API management, solution deployment, and operational monitoring. Establishing a schedule that allocates dedicated time for each area ensures comprehensive coverage and prevents gaps in knowledge. Regularly reviewing completed topics reinforces understanding and enables candidates to connect theoretical concepts with practical implementation.

Exploring Platform Architecture

A strong grasp of Anypoint Platform architecture is critical. Candidates must understand how API-led connectivity organizes services into three layers: experience, process, and system. Each layer has distinct responsibilities, and knowing how to structure integrations across these layers is vital. Familiarity with runtime environments, deployment options, and management tools helps candidates assess trade-offs when designing solutions, so that scalability, security, and performance are balanced appropriately.
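
The division of responsibilities between the layers can be illustrated with a small sketch. In a real deployment each layer would be a separately deployed API called over HTTP; here plain Python functions stand in for them, and every name and data value is hypothetical.

```python
# System layer: each function wraps exactly one backend system and hides
# its storage details. The dictionaries stand in for real systems of record.
_CUSTOMER_DB = {"c-1": {"id": "c-1", "first": "Ada", "last": "Lovelace"}}
_ORDER_DB = {"c-1": [{"order_id": "o-9", "total_cents": 4200}]}


def system_get_customer(customer_id):
    return _CUSTOMER_DB[customer_id]


def system_get_orders(customer_id):
    return _ORDER_DB.get(customer_id, [])


# Process layer: combines system APIs into a business-level view,
# independent of any particular consumer or channel.
def process_customer_summary(customer_id):
    customer = system_get_customer(customer_id)
    orders = system_get_orders(customer_id)
    return {
        "customer_id": customer["id"],
        "name": f"{customer['first']} {customer['last']}",
        "order_count": len(orders),
        "lifetime_total_cents": sum(o["total_cents"] for o in orders),
    }


# Experience layer: reshapes process data for one consumer, here a
# mobile screen that only needs a name and an order count.
def experience_mobile_view(customer_id):
    summary = process_customer_summary(customer_id)
    return {"name": summary["name"], "orders": summary["order_count"]}


print(experience_mobile_view("c-1"))  # {'name': 'Ada Lovelace', 'orders': 1}
```

The point of the layering is that a second consumer, such as a web portal, could add its own experience function without touching the process or system functions.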

Designing Integration Solutions

Designing effective integration solutions requires knowledge of architectural patterns and best practices. Candidates should learn how to use APIs to connect applications, implement error handling, ensure reliability, and optimize performance. Practical experience in designing flows, mapping data transformations, and configuring connectors enhances understanding. Understanding how to balance system requirements, security considerations, and maintainability is essential for creating solutions that align with enterprise standards.
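
Error handling for reliability often comes down to deciding which failures are worth retrying and how long to wait between attempts. The sketch below shows a generic retry with exponential backoff in plain Python. It is not Mule's own implementation, and the exception class, function names, and delay values are illustrative assumptions.

```python
import time


class TransientError(Exception):
    """Hypothetical marker for failures worth retrying, such as a timeout."""


def call_with_retry(operation, max_attempts=3, base_delay=0.5, sleep=time.sleep):
    """Run operation, retrying transient failures with doubling delays."""
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except TransientError:
            if attempt == max_attempts:
                raise  # retries exhausted: surface the error to the caller
            sleep(base_delay * 2 ** (attempt - 1))


def make_flaky(failures):
    """Build an operation that fails a fixed number of times, then succeeds."""
    remaining = {"n": failures}

    def operation():
        if remaining["n"] > 0:
            remaining["n"] -= 1
            raise TransientError("backend timed out")
        return "ok"

    return operation


delays = []
# Record the delays instead of sleeping so the example runs instantly.
result = call_with_retry(make_flaky(2), sleep=delays.append)
print(result, delays)  # ok [0.5, 1.0]
```

Only errors marked as transient are retried; any other exception propagates immediately, which keeps permanent failures such as invalid input from being retried pointlessly.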

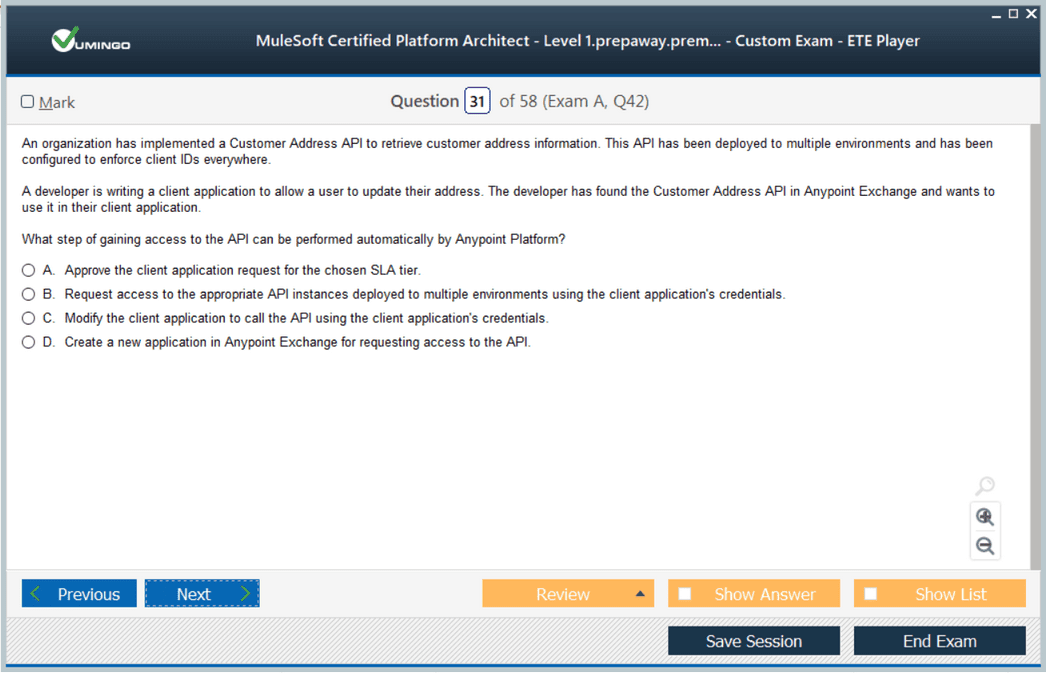

Application of API Management Principles

API management forms a key part of the MCPA Level 1 exam. Candidates should focus on API design, versioning, security protocols, and access management. Knowing how to create reusable APIs, apply governance rules, and monitor API usage ensures candidates can manage enterprise-scale integrations effectively. Awareness of policies for throttling, rate limiting, and authentication helps in designing secure and reliable API solutions.
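
To make the idea of a rate limiting policy concrete, the sketch below implements a token bucket, one common way to enforce a request quota. It is a generic illustration and not how any Anypoint policy is implemented; the class name and parameters are hypothetical, and the caller passes in the current time so the behavior is deterministic.

```python
class TokenBucket:
    """Allow bursts up to `capacity`, refilled at a steady rate."""

    def __init__(self, capacity, refill_per_second, now=0.0):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.tokens = float(capacity)  # start with a full bucket
        self.last = now

    def allow(self, now):
        """Return True if a request arriving at time `now` is within quota."""
        elapsed = now - self.last
        # Add tokens earned since the last call, never exceeding capacity.
        self.tokens = min(self.capacity,
                          self.tokens + elapsed * self.refill_per_second)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


bucket = TokenBucket(capacity=2, refill_per_second=1.0)
print([bucket.allow(0.0) for _ in range(3)])  # [True, True, False]
print(bucket.allow(1.0))                      # True, one token refilled
```

Here a request over quota is simply rejected. A throttling approach would typically delay or queue the request instead of refusing it, which is the trade-off to weigh when choosing between the two.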

Hands-On Platform Experience

Practical experience is essential for translating theoretical knowledge into actionable skills. Candidates should engage in building and deploying integration projects on the Anypoint Platform. Working with flows, data transformation, error handling, and connectors simulates real-world challenges. Hands-on experience reinforces concepts such as runtime management, logging, and monitoring, preparing candidates for scenario-based questions in the exam.

Developing Analytical Skills

The MCPA Level 1 exam emphasizes analytical thinking. Candidates must evaluate complex integration scenarios, identify potential bottlenecks, and propose scalable solutions. Analytical skills enable the candidate to consider trade-offs, assess risks, and make informed design decisions. This skill set is critical not only for passing the exam but also for applying knowledge effectively in professional environments.

Incorporating Best Practices

Knowledge of best practices ensures that solutions are maintainable, scalable, and reliable. Candidates should understand naming conventions, error handling strategies, reusable asset creation, and documentation standards. Applying these practices consistently allows candidates to demonstrate their understanding of professional-grade integration design and ensures alignment with organizational requirements.

Exam Simulation and Practice

Simulating exam conditions through timed practice tests helps candidates manage stress and improve time management. Practicing multiple-choice and scenario-based questions allows candidates to become familiar with question types, identify weak areas, and refine their approach to problem-solving. Continuous assessment through practice tests ensures that candidates develop confidence in their knowledge and readiness for the real exam.

Integrating Theory with Scenario-Based Learning

Linking theoretical concepts with real-life scenarios strengthens comprehension. Candidates should focus on case studies and sample integration problems that mirror enterprise challenges. By designing and evaluating solutions for these scenarios, candidates can see how architectural principles, API management, and integration patterns are applied. This approach enhances problem-solving skills and ensures candidates are prepared for scenario-oriented questions in the exam.

Maintaining Consistency in Preparation

Consistency is a key factor in exam success. Candidates should follow a disciplined schedule, revisiting topics regularly and reinforcing practical exercises. Daily practice, combined with systematic review sessions, ensures steady progress. Maintaining a consistent study rhythm helps retain complex concepts, reduces last-minute pressure, and builds overall confidence.

Leveraging Peer Discussions

Collaborating with peers or joining study groups can provide new perspectives on integration challenges and solutions. Discussing architectural decisions, integration patterns, and problem-solving strategies enables candidates to refine their understanding. Peer learning encourages critical thinking, exposes candidates to diverse approaches, and strengthens preparation for analytical and scenario-based questions.

Developing Problem-Solving Techniques

Effective problem-solving is crucial for the MCPA Level 1 exam. Candidates should practice evaluating requirements, identifying potential issues, and proposing integration solutions. Developing systematic approaches to troubleshoot problems, optimize processes, and improve system reliability ensures candidates can apply knowledge in both exam scenarios and professional projects.

Tracking Progress and Identifying Gaps

Monitoring progress throughout preparation helps candidates stay on track. Regular self-assessment and evaluation of practice test results identify knowledge gaps that require additional focus. By addressing weaknesses promptly, candidates can ensure comprehensive coverage of all exam topics and enhance overall readiness.

Understanding Operational Management

A complete understanding of operational management is essential for the MCPA Level 1 exam. Candidates must know how to deploy solutions, manage runtime environments, monitor performance, and implement DevOps practices. Knowledge of logging, alerts, and troubleshooting enhances the ability to maintain stable and efficient integration systems, reflecting real-world responsibilities of a platform architect.

Building Confidence Through Repetition

Repeated exposure to study materials, practical exercises, and practice exams builds confidence. Candidates gradually improve their knowledge retention, problem-solving ability, and familiarity with the exam format. Confidence gained through structured preparation reduces exam-day anxiety and enables candidates to perform effectively under timed conditions.

Applying Knowledge to Enterprise Solutions

The MCPA Level 1 exam emphasizes practical application. Candidates should focus on designing solutions that meet enterprise requirements, including scalability, reliability, security, and maintainability. Applying knowledge to realistic integration projects ensures readiness to address complex challenges and demonstrates proficiency in platform architecture and integration management.

Strategic Revision Techniques

Strategic revision involves revisiting high-priority topics, reinforcing key concepts, and practicing scenario-based questions. Candidates should focus on areas with higher weight in the exam, ensuring a deep understanding of critical topics. Combining review with hands-on exercises reinforces knowledge and prepares candidates for both conceptual and practical questions.

Long-Term Skill Development

Beyond exam success, MCPA Level 1 preparation contributes to long-term skill development. Candidates acquire expertise in integration architecture, API management, platform governance, and enterprise-level solution design. These skills enhance career prospects, enable effective participation in complex projects, and establish a strong foundation for advanced certifications and professional growth.

Integrating Time Management Practices

Time management is crucial during both preparation and the exam itself. Candidates should develop the ability to allocate time efficiently for studying, practicing, and reviewing topics. Simulating timed exams helps develop pacing strategies, ensuring that all questions are answered accurately within the allotted time. Effective time management also reduces stress and improves performance.

Emphasizing Security and Governance

Security and governance are integral to enterprise integration solutions. Candidates should focus on applying authentication, authorization, and encryption practices within API and integration design. Governance involves maintaining standards, monitoring usage, and ensuring compliance. A thorough understanding of these principles ensures candidates can design secure, compliant, and maintainable systems.
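
The difference between authentication (who is calling) and authorization (what the caller may do) can be shown with a short sketch using Python's standard `hmac` module. The token format, secret, client ID, and scope names are all hypothetical, and encryption is out of scope here. Production APIs would normally rely on an established standard such as OAuth 2.0 rather than a hand-rolled token.

```python
import hashlib
import hmac

SECRET = b"demo-secret"  # hypothetical shared secret, never hard-code a real one


def sign(client_id, scopes):
    """Issue a token of the form client_id|scope1,scope2|signature."""
    payload = f"{client_id}|{','.join(sorted(scopes))}"
    signature = hmac.new(SECRET, payload.encode(), hashlib.sha256).hexdigest()
    return f"{payload}|{signature}"


def authenticate(token):
    """Return (client_id, scopes) if the signature is valid, else None."""
    payload, _, signature = token.rpartition("|")
    expected = hmac.new(SECRET, payload.encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(signature, expected):  # constant-time compare
        return None
    client_id, _, scope_str = payload.partition("|")
    return client_id, set(filter(None, scope_str.split(",")))


def authorize(scopes, required_scope):
    """Authorization: does the authenticated caller hold the needed scope?"""
    return required_scope in scopes


token = sign("mobile-app", {"orders:read"})
client_id, scopes = authenticate(token)
print(client_id, authorize(scopes, "orders:read"))   # mobile-app True
print(authorize(scopes, "orders:write"))             # False
print(authenticate(token.replace("read", "write")))  # None, tampered token
```

Keeping the two checks separate mirrors how policies are usually layered: one step establishes identity, and a later step decides whether that identity may perform the requested operation.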

Combining Practical and Theoretical Knowledge

The best preparation strategy combines theoretical understanding with hands-on practice. Candidates should continuously apply learned concepts in practical exercises while reviewing underlying principles. This dual approach reinforces knowledge, enhances problem-solving skills, and ensures readiness for both conceptual and scenario-based questions.

Developing Analytical Mindset for Exam Scenarios

An analytical mindset is essential for interpreting complex scenarios presented in the MCPA Level 1 exam. Candidates must evaluate integration requirements, anticipate potential issues, and propose effective solutions. Practicing scenario-based exercises strengthens analytical thinking and enables candidates to approach questions logically and systematically.

Enhancing Understanding Through Documentation

Reading platform documentation and architecture guides supports a deeper understanding of integration practices and design patterns. Candidates should study official materials, focusing on runtime environments, API management, deployment strategies, and operational monitoring. Documentation provides authoritative guidance and clarifies concepts that may not be fully understood through practical exercises alone.

Focusing on High-Impact Areas

Some topics carry more weight than others, both in the exam and in practical integration work. Candidates should emphasize areas such as API-led connectivity, architecture design patterns, runtime management, monitoring, and DevOps practices. Prioritizing these high-impact areas makes preparation more efficient and improves the chances of success in the exam.

Continuous Feedback and Improvement

Feedback from practice tests, peer discussions, and self-assessment guides improvement. Candidates should use feedback to identify weaknesses, refine strategies, and reinforce understanding. Iterative improvement strengthens knowledge retention, problem-solving skills, and confidence, ultimately enhancing exam performance.

Preparing for Scenario-Based Questions

Scenario-based questions assess practical application of knowledge in real-world situations. Candidates should practice interpreting requirements, designing solutions, and evaluating outcomes. Exposure to varied scenarios develops the ability to apply theoretical knowledge, assess trade-offs, and propose optimal solutions, ensuring readiness for the most challenging aspects of the exam.

Reinforcing Conceptual Connections

Candidates should focus on connecting different concepts, such as API design with operational monitoring, error handling with reliability strategies, and architecture patterns with scalability. Understanding these interconnections enables holistic solution design and enhances the ability to answer questions that require comprehensive reasoning.

Building Exam Day Readiness

Exam day readiness combines knowledge, confidence, and strategic planning. Candidates should ensure they are familiar with the exam format, question types, and timing. Reviewing notes, revisiting key concepts, and practicing under timed conditions helps reduce anxiety and promotes efficient performance during the assessment.

Integrating Best Practices in Preparation

Applying best practices throughout preparation, such as organized note-taking, systematic review, hands-on exercises, and continuous practice tests, ensures thorough readiness. Best practices also include maintaining focus, avoiding distractions, and adhering to a consistent study schedule. These habits strengthen preparation and increase the likelihood of success in the MCPA Level 1 exam.

Preparing for Enterprise Integration Challenges

The MCPA Level 1 exam mirrors the challenges faced in real enterprise integration projects. Candidates should focus on designing solutions that are scalable, reliable, secure, and maintainable. Preparing for these challenges equips candidates with practical skills applicable beyond the exam and ensures they can deliver value in professional roles managing integration initiatives.

Preparation for the MuleSoft Certified Platform Architect Level 1 exam requires a strategic, structured, and practice-oriented approach. Candidates must develop conceptual understanding, hands-on expertise, analytical thinking, and problem-solving abilities. By combining theoretical knowledge with practical application, practicing under timed conditions, and focusing on enterprise integration challenges, candidates can achieve certification and demonstrate comprehensive skills in designing, managing, and optimizing integrations on the Anypoint Platform.

Deepening Knowledge of API-Led Connectivity

A crucial aspect of preparing for the MCPA Level 1 exam is mastering API-led connectivity. Candidates should understand how APIs facilitate communication between different layers of the integration architecture, including experience, process, and system layers. Comprehending how to design reusable APIs that adhere to enterprise standards is essential. This involves ensuring secure data flow, managing dependencies, and creating scalable solutions that support evolving business needs.

Managing Data Transformation and Mapping

Data transformation is a vital skill for platform architects. Candidates must be able to map data between different formats such as JSON, XML, and CSV, ensuring data integrity and compatibility across systems. Understanding transformation tools and strategies within the Anypoint Platform allows architects to design integrations that are both efficient and reliable. Proper handling of data mapping reduces errors and improves the quality of integration solutions.
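
The mapping between formats described above can be illustrated with a small sketch. On the Anypoint Platform this kind of work is normally written in DataWeave; the Python below is only a stand-in that shows the idea of mapping one JSON payload to CSV and XML without losing fields. The record layout (`id`, `name`) and the tag names `customers` and `customer` are assumptions made up for the illustration.

```python
import csv
import io
import json
import xml.etree.ElementTree as ET


def json_to_csv(json_text, fields):
    """Map a JSON array of flat objects to CSV with a fixed column order."""
    records = json.loads(json_text)
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fields, lineterminator="\n")
    writer.writeheader()
    for record in records:
        # Missing fields become empty cells instead of raising an error.
        writer.writerow({name: record.get(name, "") for name in fields})
    return buffer.getvalue()


def json_to_xml(json_text, root_tag="customers", item_tag="customer"):
    """Map the same JSON array to a simple XML document."""
    records = json.loads(json_text)
    root = ET.Element(root_tag)
    for record in records:
        item = ET.SubElement(root, item_tag)
        for key, value in record.items():
            ET.SubElement(item, key).text = str(value)
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    payload = '[{"id": 1, "name": "Ada"}, {"id": 2, "name": "Lin"}]'
    print(json_to_csv(payload, ["id", "name"]))
    print(json_to_xml(payload))
```

Fixing the column order and handling missing fields explicitly are the two places where data integrity is most often lost in a mapping like this.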

Integrating Security Practices

Security considerations are integral to the MCPA Level 1 exam. Candidates should focus on implementing authentication, authorization, and encryption within integration flows. Knowledge of API security policies, such as OAuth 2.0 token enforcement, client ID enforcement, and IP allowlisting, ensures that solutions meet enterprise security standards. Incorporating security best practices throughout the architecture is critical to protecting sensitive information and maintaining regulatory compliance.
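
To make the policy ideas concrete, here is a minimal sketch of the logic behind client ID enforcement combined with an IP-based restriction. It is not how the platform implements its policies, which are configured rather than hand-coded; the network ranges, the client ID, and the function name `is_request_allowed` are all hypothetical.

```python
import ipaddress

# Hypothetical allowlist: an internal range and a documentation range.
ALLOWED_NETWORKS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("203.0.113.0/24"),
]

# Hypothetical set of registered client applications.
KNOWN_CLIENT_IDS = {"orders-mobile-app"}


def is_request_allowed(client_ip, client_id):
    """Accept a request only if the caller's IP and client ID both pass."""
    address = ipaddress.ip_address(client_ip)
    ip_ok = any(address in network for network in ALLOWED_NETWORKS)
    return ip_ok and client_id in KNOWN_CLIENT_IDS


if __name__ == "__main__":
    print(is_request_allowed("10.1.2.3", "orders-mobile-app"))
    print(is_request_allowed("198.51.100.7", "orders-mobile-app"))
```

Both checks must pass, which reflects the layered approach: a valid client ID from an unexpected network is still rejected.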

Monitoring and Performance Optimization

Candidates must understand how to monitor integrations effectively and optimize performance. This includes configuring logging, alerts, and metrics to track system health and performance. Understanding how to identify bottlenecks, optimize data flows, and enhance throughput ensures that integration solutions remain robust under varying loads. Knowledge of monitoring tools and dashboards within the Anypoint Platform equips candidates to maintain high operational efficiency.
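
As a rough illustration of how metrics turn into alerts, the sketch below evaluates two common indicators, 95th-percentile latency and error rate, against thresholds. The threshold values and the function name `evaluate_alerts` are assumptions chosen for the example, not platform defaults.

```python
def evaluate_alerts(latencies_ms, error_count, max_p95_ms=500, max_error_rate=0.05):
    """Return the names of the alerts triggered by one window of request data."""
    total = len(latencies_ms)
    if total == 0:
        return []

    # Nearest-rank 95th percentile, computed with integer arithmetic.
    ordered = sorted(latencies_ms)
    rank = (95 * total + 99) // 100
    p95 = ordered[rank - 1]

    alerts = []
    if p95 > max_p95_ms:
        alerts.append("p95_latency")
    if error_count / total > max_error_rate:
        alerts.append("error_rate")
    return alerts


if __name__ == "__main__":
    window = [100] * 18 + [900, 950]
    print(evaluate_alerts(window, error_count=0))
```

A percentile is used rather than an average because a small number of very slow requests, which is often the first sign of a bottleneck, barely moves the mean.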

Handling Error Management and Reliability

Error handling is a significant topic for platform architects. Candidates should learn how to design integrations that detect, log, and recover from errors gracefully. Implementing retry strategies, fallback mechanisms, and exception handling ensures that integrations remain reliable and resilient. Building reliability into designs not only improves user experience but also demonstrates proficiency in creating enterprise-grade solutions.
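
The retry and fallback strategies mentioned above can be sketched in a few lines. This is a generic illustration, assuming the failing call raises `ConnectionError`; the helper name `call_with_retry` and its parameters are hypothetical and do not correspond to any platform API.

```python
import logging
import time


def call_with_retry(operation, fallback, max_attempts=3, base_delay=0.01, sleep=time.sleep):
    """Try an operation several times with exponential backoff, then fall back."""
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except ConnectionError as error:
            # Log every failure so the problem stays visible even if a retry succeeds.
            logging.warning("attempt %d failed: %s", attempt, error)
            if attempt < max_attempts:
                # Wait base_delay, then 2x, then 4x, and so on.
                sleep(base_delay * 2 ** (attempt - 1))
    # All attempts failed: degrade gracefully instead of propagating the error.
    return fallback()
```

Retrying only on a specific, transient error type matters: retrying a validation failure would repeat the same mistake, while a timeout may well succeed on the next attempt.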

Designing Scalable Integration Architectures

Scalability is a core principle for enterprise integration. Candidates should understand how to design architectures that accommodate growth in users, data, and transactions. This involves selecting appropriate deployment strategies, utilizing clusters, and implementing load balancing. Scalable designs ensure that solutions can adapt to business expansion without compromising performance or reliability.

Applying DevOps Principles

DevOps practices are increasingly relevant for platform architects. Candidates should be familiar with continuous integration and deployment processes, automated testing, and version control. Knowledge of deployment pipelines, environment promotion, and rollback strategies enables architects to manage integrations efficiently and reduce operational risks. Integrating DevOps into preparation ensures that candidates can align with modern enterprise practices.

Evaluating Integration Patterns

Understanding common integration patterns is vital for the MCPA Level 1 exam. Candidates should analyze synchronous and asynchronous messaging, batch processing, event-driven architectures, and orchestration patterns. Evaluating the trade-offs between different patterns enables architects to select the most appropriate solutions for specific business requirements. Knowledge of patterns ensures that integrations are both effective and maintainable.
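
The difference between synchronous and asynchronous messaging is easier to see side by side. In the sketch below, an in-process queue and a worker thread stand in for a message broker; `process_order` and `run_async` are hypothetical names used only for the illustration.

```python
import queue
import threading


def process_order(order_id):
    """Synchronous style: the caller waits for the result of each call."""
    return f"processed {order_id}"


def run_async(order_ids):
    """Asynchronous style: the producer enqueues work and a consumer handles it later."""
    work = queue.Queue()
    results = []

    def worker():
        while True:
            order_id = work.get()
            if order_id is None:  # Sentinel value: no more messages.
                work.task_done()
                break
            results.append(process_order(order_id))
            work.task_done()

    consumer = threading.Thread(target=worker)
    consumer.start()
    for order_id in order_ids:
        work.put(order_id)  # Returns immediately; the producer is not blocked.
    work.put(None)
    work.join()
    consumer.join()
    return results


if __name__ == "__main__":
    print(process_order("A1"))
    print(run_async(["A1", "B2"]))
```

The trade-off is visible in the shape of the code: the synchronous call is simpler and gives an immediate answer, while the queued version decouples producer and consumer at the cost of extra moving parts.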

Strengthening Practical Skills

Hands-on practice is essential for mastering the Anypoint Platform. Candidates should engage in building real-world integration projects, testing flows, and troubleshooting issues. Practical exercises reinforce theoretical knowledge and improve problem-solving capabilities. Working with actual scenarios allows candidates to experience the challenges of enterprise integration and develop confidence in applying solutions.

Understanding API Governance and Lifecycle

API governance ensures consistency, security, and compliance in integration projects. Candidates must understand API lifecycle management, including design, versioning, deployment, monitoring, and deprecation. Applying governance principles helps maintain the quality and maintainability of APIs, which is critical in enterprise environments. Knowledge of lifecycle management enables candidates to create solutions that are robust and sustainable over time.

Developing Analytical and Strategic Thinking

Analytical skills are crucial for evaluating integration requirements and designing effective solutions. Candidates should practice analyzing complex scenarios, identifying potential issues, and proposing strategic solutions. Strategic thinking involves considering long-term implications, resource constraints, and alignment with enterprise objectives. Developing these skills prepares candidates for scenario-based questions in the exam and real-world integration challenges.

Reviewing Documentation and Guidelines

A thorough review of platform documentation and integration guidelines enhances understanding of best practices. Candidates should study architectural references, API design guidelines, and operational manuals. Documentation provides insights into recommended practices, common pitfalls, and advanced features of the Anypoint Platform. Familiarity with these resources ensures comprehensive knowledge and readiness for the exam.

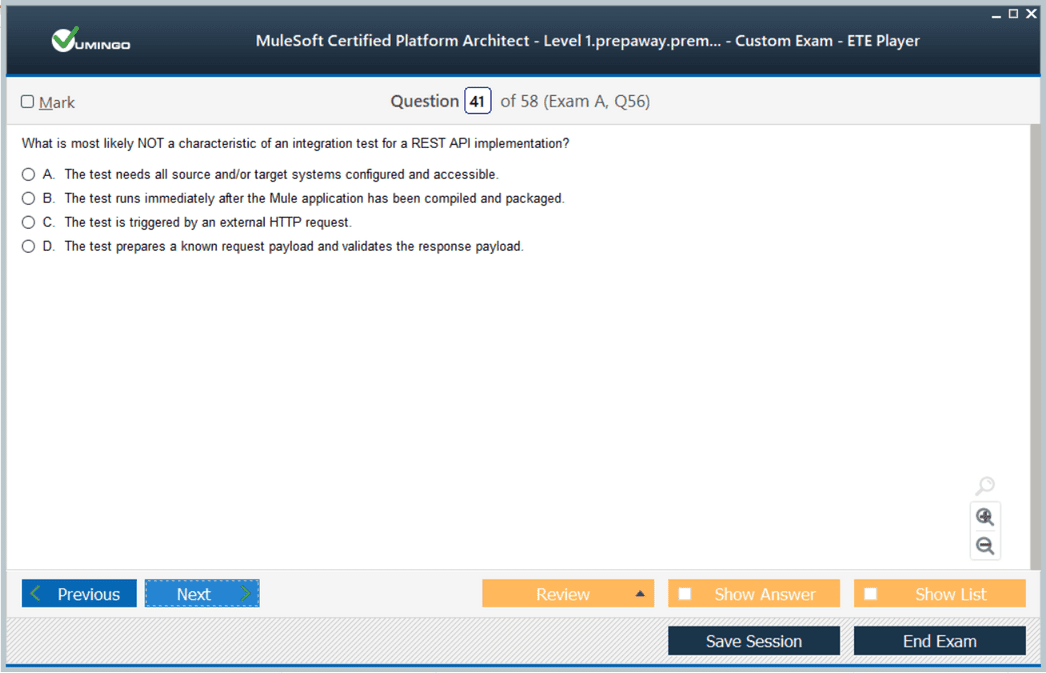

Implementing Testing and Validation Techniques

Testing is a critical component of successful integration. Candidates should understand how to create automated tests, validate data flows, and perform regression testing. Implementing robust testing strategies ensures that integrations function correctly and meet business requirements. Knowledge of testing techniques demonstrates the ability to maintain high-quality integration solutions.

Strengthening Time Management Skills

Time management is vital during exam preparation and the assessment itself. Candidates should practice pacing themselves while working through practice tests, ensuring they can answer all questions within the allotted time. Efficient time management reduces stress and increases accuracy, allowing candidates to perform effectively under pressure.

Integrating Knowledge Across Topics

Candidates must learn to integrate knowledge from multiple domains, such as architecture design, API management, security, and monitoring. Understanding the interconnections between these areas enables comprehensive solution design and enhances problem-solving capabilities. Integrated knowledge ensures that candidates can approach questions holistically and develop well-rounded solutions.

Focusing on Reliability and Maintenance

Designing solutions that are reliable and maintainable is essential. Candidates should incorporate error handling, monitoring, documentation, and reusable components into their designs. A focus on maintainability ensures that integrations can evolve with business needs and remain efficient over time. Preparing with this perspective enhances both exam performance and practical expertise.

Engaging in Scenario-Based Learning

Scenario-based exercises prepare candidates for real-world challenges and exam-style questions. Candidates should practice interpreting requirements, designing solutions, and evaluating outcomes. Scenario-based learning develops critical thinking, adaptability, and the ability to make informed decisions under constraints. This approach ensures readiness for complex questions and practical application of knowledge.

Utilizing Review and Reflection

Regular review and reflection solidify understanding of key concepts. Candidates should revisit challenging topics, summarize key points, and reflect on practical exercises. Reflection helps identify gaps, reinforce learning, and build confidence in knowledge. Consistent review enhances retention and ensures preparedness for all aspects of the exam.

Preparing for Enterprise-Level Responsibilities

The MCPA Level 1 exam mirrors the responsibilities of a platform architect in enterprise settings. Candidates should focus on designing solutions that meet scalability, security, reliability, and maintainability requirements. Understanding the expectations of enterprise integration ensures that candidates are prepared for both the exam and professional roles managing complex integration projects.

Balancing Theory and Practice

Effective preparation balances theoretical understanding with hands-on practice. Candidates should continuously apply learned concepts in practical exercises while reviewing underlying principles. This approach ensures that candidates are proficient in both conceptual and applied aspects of platform architecture and integration management.

Strengthening Confidence and Readiness

Confidence is built through consistent preparation, practice, and application of knowledge. Candidates should engage in timed practice tests, scenario-based exercises, and hands-on projects. Continuous exposure to different question types and integration challenges builds familiarity, reduces anxiety, and enhances readiness for the exam.

Enhancing Problem-Solving Capabilities

Problem-solving is a central skill for platform architects. Candidates should practice identifying integration challenges, analyzing requirements, and proposing optimal solutions. Developing systematic approaches to troubleshooting, performance optimization, and error handling strengthens practical expertise and ensures candidates can manage real-world integration scenarios effectively.

Consolidating Knowledge Through Iteration

Iterative learning reinforces understanding and prepares candidates for complex exam questions. Revisiting topics, practicing scenarios, and applying feedback enables candidates to refine skills continuously. Iterative consolidation ensures mastery of the Anypoint Platform and prepares candidates for all dimensions of the MCPA Level 1 exam.

Preparing for Exam Day

Exam readiness involves familiarity with the format, timing, and question types. Candidates should simulate exam conditions, review notes, and engage in final practice sessions. Preparedness reduces anxiety, improves focus, and ensures candidates can apply knowledge efficiently during the assessment.

Building a Strong Professional Foundation

Beyond passing the exam, MCPA Level 1 preparation equips candidates with practical skills for enterprise integration. Candidates develop expertise in architecture design, API management, monitoring, and operational governance. This foundation enhances career prospects and prepares individuals for complex integration projects in professional environments.

Continuous Learning and Improvement

Preparation for the MCPA Level 1 exam is an ongoing process. Candidates should maintain curiosity, practice regularly, and seek new integration challenges. Continuous learning ensures that knowledge remains current, skills improve over time, and candidates are prepared for both the exam and evolving industry demands.

Achieving the MuleSoft Certified Platform Architect Level 1 credential requires a structured approach combining theoretical knowledge, practical application, scenario-based learning, and consistent practice. Candidates should focus on core architecture principles, API-led connectivity, security, scalability, monitoring, and reliability. By integrating these elements, practicing under realistic conditions, and maintaining disciplined preparation, candidates can confidently approach the MCPA Level 1 exam and demonstrate mastery in designing and managing enterprise-level integration solutions on the Anypoint Platform.

Advanced Integration Strategies for the MCPA Level 1 Exam

To excel in the MCPA Level 1 exam, candidates must develop a strong understanding of advanced integration strategies. These include designing hybrid integration solutions that connect cloud-based and on-premises systems, managing event-driven and streaming architectures, and optimizing API performance under different workloads. An architect should understand when to apply synchronous versus asynchronous messaging and how to leverage message queues or event brokers effectively.

API Lifecycle Management

A comprehensive grasp of the API lifecycle is essential for platform architects. Candidates should know how to design, version, deploy, monitor, and retire APIs efficiently. Proper lifecycle management ensures that APIs remain reliable, secure, and maintainable over time. Knowledge of deprecation strategies, backward compatibility, and version control allows architects to maintain enterprise-grade API ecosystems.
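
Backward compatibility becomes easier to reason about once a versioning convention is fixed. The sketch below assumes semantic versioning (`major.minor.patch`), where a change of major version signals a breaking change; that convention, and the helper names, are assumptions for the example rather than something the exam material prescribes.

```python
def parse_version(text):
    """Split a 'major.minor.patch' string into a tuple of integers."""
    major, minor, patch = (int(part) for part in text.split("."))
    return major, minor, patch


def is_backward_compatible(client_built_against, deployed):
    """Check whether a client built against one version can call the deployed one.

    Under semantic versioning the major versions must match, and the deployed
    minor and patch levels must be at least what the client expects.
    """
    client = parse_version(client_built_against)
    server = parse_version(deployed)
    return server[0] == client[0] and server[1:] >= client[1:]


if __name__ == "__main__":
    print(is_backward_compatible("1.2.0", "1.4.1"))
    print(is_backward_compatible("1.2.0", "2.0.0"))
```

A check like this is what makes a deprecation strategy workable: consumers of version 1 keep working through minor releases, and only a new major version requires them to migrate.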

Governance and Compliance Considerations

Governance plays a critical role in maintaining quality and consistency across integration solutions. Candidates must be familiar with setting and enforcing standards for API design, security policies, data handling, and error management. Compliance with internal and external regulations is also a crucial responsibility. Understanding these principles helps ensure that integration solutions are robust, auditable, and aligned with organizational policies.

Performance Optimization Techniques

Optimizing performance is vital for large-scale enterprise integration. Candidates should learn how to identify bottlenecks, enhance throughput, and reduce latency in integration flows. This involves proper selection of connectors, efficient data transformation methods, and minimizing unnecessary processing steps. Performance tuning is also about monitoring resources, balancing loads, and designing integrations that scale with growing demands.

Designing for Reliability and Fault Tolerance

Architects must design integration solutions that are fault-tolerant and resilient. This includes implementing retry mechanisms, error logging, fallback strategies, and ensuring continuity in case of system failures. Understanding how to create idempotent operations and maintain transactional consistency across multiple systems is key to building reliable integrations.
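
Idempotency is what makes retries safe: repeating a request must not repeat its side effect. A minimal sketch, assuming the caller sends a unique key with each logical request; the class `PaymentService` and its in-memory store are hypothetical and stand in for a real system with durable storage.

```python
class PaymentService:
    """Toy service that charges at most once per idempotency key."""

    def __init__(self):
        self._results = {}  # idempotency key -> stored receipt
        self.charges_made = 0

    def charge(self, idempotency_key, amount_cents):
        # A repeated key returns the stored result without charging again.
        if idempotency_key in self._results:
            return self._results[idempotency_key]
        self.charges_made += 1
        receipt = f"receipt-{self.charges_made}:{amount_cents}"
        self._results[idempotency_key] = receipt
        return receipt


if __name__ == "__main__":
    service = PaymentService()
    first = service.charge("order-42", 1999)
    retry = service.charge("order-42", 1999)  # e.g. resent after a timeout
    print(first == retry, service.charges_made)
```

Without the key lookup, a retry triggered by a lost response would charge the customer twice, which is exactly the failure a retry mechanism is supposed to prevent.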

Monitoring and Observability

Candidates should master the use of monitoring and observability tools within the Anypoint Platform. Tracking metrics, setting up alerts, analyzing logs, and visualizing performance trends help maintain healthy integrations. Understanding the indicators of system stress or failure enables proactive management, ensuring seamless operations and minimal downtime.

DevOps Integration for Platform Architects

Integrating DevOps principles into platform architecture preparation is essential. Candidates should understand continuous integration and deployment, automated testing, version control, and rollback strategies. Familiarity with pipelines, environment promotion, and deployment strategies ensures smooth operational workflows and reduces the risk of errors in production environments.

Real-World Scenario Practice

Hands-on experience with real-world scenarios enhances exam readiness. Candidates should simulate business requirements, design integration flows, troubleshoot errors, and validate results. Practicing with varied scenarios strengthens problem-solving skills, reinforces theoretical concepts, and prepares candidates for scenario-based questions that require critical thinking and practical application.

Security Implementation in Integration Solutions

Securing integration flows is a top priority for enterprise architects. Candidates must learn to implement authentication, authorization, encryption, and access control within API and integration designs. This includes understanding OAuth, token management, IP restrictions, and secure handling of sensitive data. Effective security design ensures compliance and protects enterprise information assets.

Strategic Use of Integration Patterns

Architects should be proficient in choosing and applying the correct integration patterns for different requirements. This includes orchestration, messaging, event-driven, and batch processing patterns. Understanding trade-offs and appropriate use cases allows architects to build flexible, maintainable, and efficient solutions tailored to business needs.

Enhancing Analytical and Decision-Making Skills

MCPA Level 1 preparation requires strong analytical and decision-making abilities. Candidates should practice evaluating integration requirements, analyzing risks, and proposing optimal architectural solutions. Developing structured approaches to troubleshooting, design decisions, and performance evaluation ensures readiness for complex exam questions and practical enterprise challenges.

Documentation and Knowledge Management

Proper documentation is crucial for maintainable and scalable integration solutions. Candidates should practice creating clear, comprehensive, and accessible design documents, architecture diagrams, and process workflows. Effective documentation aids in knowledge transfer, team collaboration, and ongoing system maintenance, which reflects best practices expected from a platform architect.

Time Management During Exam Preparation

Efficient time management is key to comprehensive exam preparation. Candidates should allocate specific hours for reading, practical exercises, review sessions, and practice tests. Consistent study routines and planned breaks help maintain focus and reduce fatigue. Simulating exam conditions helps develop pacing strategies to manage time effectively during the actual assessment.

Integrating Knowledge Across Multiple Domains

A platform architect must combine knowledge from multiple domains, including API design, security, monitoring, DevOps, and enterprise architecture principles. Candidates should practice connecting these concepts to design holistic integration solutions. Understanding interdependencies enhances problem-solving capabilities and ensures comprehensive preparation for complex questions.

Stress Management and Exam Readiness

Managing stress during preparation and the actual exam is essential. Candidates should develop strategies for maintaining focus, handling difficult questions, and remaining calm under pressure. Regular practice, scenario-based learning, and review of challenging topics contribute to increased confidence and better performance.

Iterative Learning and Continuous Improvement

Iterative learning reinforces understanding and ensures retention. Candidates should revisit topics, apply feedback from practice exercises, and continuously refine skills. This iterative approach deepens comprehension, strengthens problem-solving abilities, and ensures readiness for both theoretical and practical components of the exam.

Hands-On Project Exposure

Exposure to real integration projects enhances understanding of platform capabilities. Candidates should work on projects that involve API design, data transformation, monitoring, and security implementation. Practical experience solidifies learning, reinforces theoretical knowledge, and prepares candidates for enterprise-level responsibilities reflected in the exam.

Collaboration and Knowledge Sharing

Engaging with peers or study groups can enhance learning. Sharing insights, discussing challenges, and analyzing integration scenarios help consolidate knowledge. Collaboration fosters deeper understanding of concepts, exposes candidates to diverse approaches, and encourages critical thinking.

Preparing for Enterprise Responsibilities

The MCPA Level 1 exam reflects the responsibilities of a platform architect in enterprise environments. Candidates should understand how to design solutions that meet business objectives, comply with governance policies, ensure security, and optimize performance. Preparing with enterprise-level considerations develops practical skills and aligns learning with professional expectations.

Combining Theory and Application

Balanced preparation integrates theoretical knowledge with applied practice. Candidates should study architectural principles, API-led connectivity, and integration strategies while simultaneously implementing solutions in practical exercises. This combination ensures proficiency in both conceptual understanding and practical application.

Building Confidence Through Practice

Confidence is built through consistent practice and mastery of key concepts. Candidates should engage in hands-on exercises, timed practice tests, and scenario-based simulations. Familiarity with various question types and real-world integration challenges develops confidence, reduces anxiety, and ensures effective exam performance.

Evaluation and Self-Assessment

Regular evaluation and self-assessment help identify strengths and areas needing improvement. Candidates should track performance across topics, practice scenarios, and mock tests. This allows for targeted preparation, ensuring all exam objectives are addressed and gaps are resolved before the assessment.

Advanced Error Handling Techniques

Architects must design integrations that can handle complex error scenarios. Candidates should learn how to implement logging, retries, compensating transactions, and exception handling mechanisms. Advanced error handling ensures resilience, improves reliability, and demonstrates expertise in managing enterprise-grade integrations.
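
Compensating transactions are worth seeing in code, because they replace a rollback that is not available across separate systems. The sketch below runs a sequence of steps and, on failure, undoes the completed ones in reverse order. The function name `run_saga` and the step layout are assumptions made for the illustration.

```python
import logging


def run_saga(steps):
    """Run (name, action, compensate) steps; undo completed steps on failure.

    Returns True if every step succeeded, False if compensation was triggered.
    """
    completed = []
    for name, action, compensate in steps:
        try:
            action()
        except Exception as error:
            logging.error("step %r failed: %s", name, error)
            # Undo in reverse order, newest side effect first.
            for done_name, undo in reversed(completed):
                logging.info("compensating %r", done_name)
                undo()
            return False
        completed.append((name, compensate))
    return True
```

For an order flow, the steps might be reserving stock, charging a card, and booking shipment; if shipment fails, the charge is refunded and the stock released, leaving each system consistent without a distributed transaction.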

Preparing for Long-Term Professional Growth

Preparing for MCPA Level 1 not only equips candidates for the exam but also for professional growth. The skills acquired in API-led design, architecture planning, security, monitoring, and DevOps practices position candidates for advanced roles in integration architecture and enterprise system design.

Continuous Review and Reinforcement

Maintaining consistent review sessions ensures knowledge retention. Candidates should revisit difficult topics, summarize learnings, and apply knowledge in practice exercises. Continuous reinforcement solidifies understanding, prepares candidates for diverse exam questions, and enhances practical application skills.

Simulating Exam Conditions

Simulating exam conditions is an effective strategy for readiness. Candidates should practice with timed sessions, answer scenario-based questions, and mimic the pressure of the actual assessment. This helps manage time efficiently, reduces stress, and builds familiarity with the structure and pacing of the exam.

Mastery of Platform Architecture Concepts

Candidates should develop mastery over platform architecture concepts, including system design, API-led connectivity, governance, scalability, and monitoring. Comprehensive understanding ensures readiness to design and manage complex integration solutions effectively, reflecting the professional capabilities expected from a certified platform architect.

Conclusion

The MCPA Level 1 exam requires extensive preparation across multiple facets of platform architecture. Candidates should focus on advanced integration strategies, API lifecycle management, governance, security, performance optimization, and practical application. By combining theoretical study, hands-on experience, scenario-based exercises, and continuous practice, candidates can develop a thorough understanding of enterprise integration principles and confidently demonstrate their capabilities in the exam. Success in MCPA Level 1 reflects both technical proficiency and the ability to design, manage, and maintain robust integration solutions in professional environments.

