Why AWS Certified Machine Learning - Specialty Certification Matters Today

The AWS Certified Machine Learning – Specialty certification is a distinguished credential designed to validate a professional’s expertise in machine learning using the AWS cloud platform. This certification targets individuals who engage in complex machine learning projects that involve designing, developing, and deploying scalable machine learning solutions.

What sets this certification apart is its comprehensive focus on the entire machine learning lifecycle, from data collection and preparation to feature engineering, model training, tuning, and deployment. Candidates are expected to demonstrate proficiency in applying machine learning algorithms effectively in real-world scenarios using AWS services.

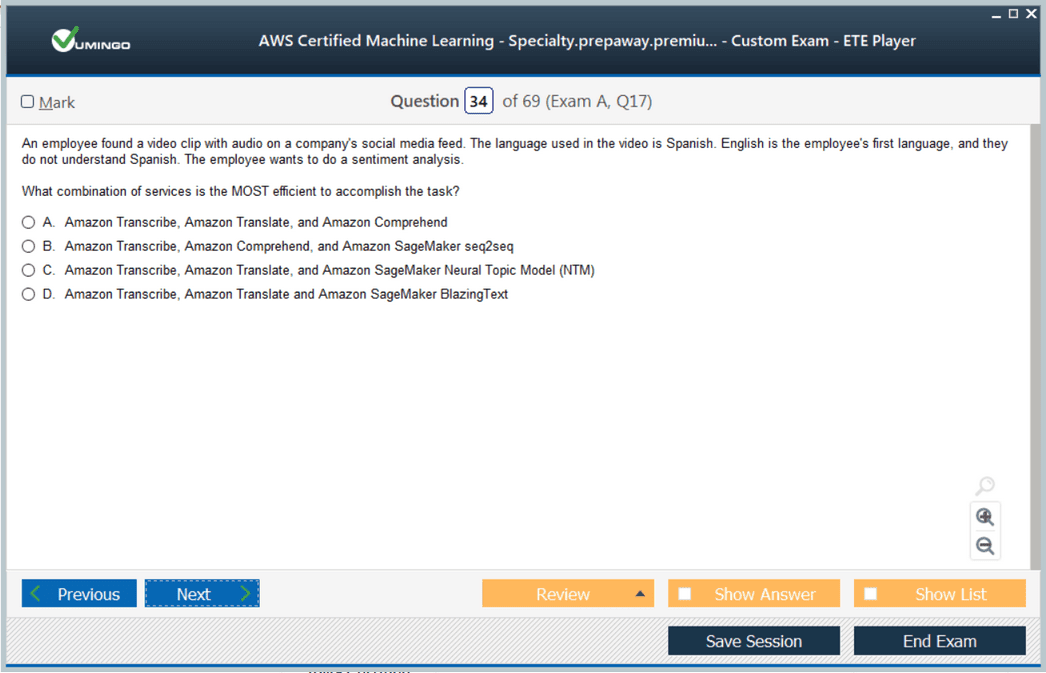

Machine learning, as a field, has grown rapidly, with cloud platforms playing an essential role in democratizing access to powerful computing resources. AWS, as a leader in cloud services, provides an extensive ecosystem of tools and services that facilitate the entire ML workflow. Earning this certification signals to employers that an individual has not only theoretical knowledge but also hands-on experience working with AWS’s ML services such as SageMaker, Rekognition, Comprehend, and others.

The Growing Demand For Machine Learning Expertise

The global shift towards automation and data-driven decision-making has accelerated the demand for professionals skilled in machine learning. Organizations across industries are leveraging AI and ML to optimize operations, enhance customer experiences, and innovate products.

The AWS Machine Learning – Specialty certification provides a strategic advantage for professionals seeking to meet this growing demand. The certification represents a benchmark of skill that is recognized by employers worldwide. It demonstrates the ability to build solutions that can process large volumes of data, extract meaningful patterns, and deliver actionable insights, all within the AWS environment.

The future job landscape indicates that machine learning expertise will be increasingly integrated into numerous roles, including data analysts, engineers, consultants, and project managers. Those holding this certification have the flexibility to transition across multiple roles or specialize further in AI-driven initiatives.

The Core Competencies Validated By The Certification

This certification is not merely about understanding machine learning theory but also about applying best practices and AWS technologies to solve complex problems. It covers four critical domains:

Data Engineering – Candidates must show they can create, maintain, and transform datasets. This involves skills in data ingestion, storage, cleaning, and preparation using AWS services such as AWS Glue, Kinesis, and S3. Data engineering forms the foundation for successful ML projects, as quality and well-prepared data are crucial.

Exploratory Data Analysis – This domain focuses on analyzing and interpreting data using visualization and statistical tools. Understanding how to analyze structured and unstructured data helps in selecting the right features and models. AWS tools like Athena and QuickSight support these activities.

Modeling – This is the heart of the certification, emphasizing the ability to select appropriate machine learning algorithms and train models. Candidates are expected to demonstrate knowledge of supervised, unsupervised, and reinforcement learning techniques. They should also be proficient in hyperparameter tuning and model evaluation metrics.

Machine Learning Implementation and Operations – This domain tests the deployment, monitoring, and optimization of ML models in production environments. It includes knowledge of automation, scalability, and continuous integration/continuous delivery (CI/CD) pipelines for ML. Services like SageMaker, CloudWatch, and Lambda play important roles here.

Why This Certification Matters For Career Growth

Obtaining the AWS Certified Machine Learning – Specialty credential can significantly impact a professional’s career trajectory. It signals expertise in a cutting-edge domain that is pivotal to the future of technology. With more organizations embracing AI-driven solutions, certified specialists often command higher salaries, more challenging projects, and leadership opportunities.

Furthermore, machine learning skills are transferable across industries—from healthcare and finance to retail and manufacturing. This versatility allows certified individuals to explore diverse career paths and adapt to evolving business needs.

Holding this certification also builds confidence in handling cloud-based machine learning tools, ensuring that professionals can innovate rapidly while maintaining best practices around security, cost optimization, and scalability.

Preparing For The AWS Certified Machine Learning - Specialty Exam

The AWS Certified Machine Learning - Specialty exam is a challenging test designed to validate advanced skills in machine learning (ML) within the AWS ecosystem. Success requires not only understanding theoretical ML concepts but also practical proficiency in deploying and managing ML solutions on AWS. Preparing effectively involves mastering core domains of the exam, practicing hands-on exercises, and adopting a strategic study plan.

Understanding The Exam Domains In Depth

The exam consists of four main domains: data engineering, exploratory data analysis, modeling, and ML implementation and operations. Each domain demands a deep understanding of both ML principles and AWS services.

Data Engineering covers 20% of the exam and focuses on how to prepare data for machine learning tasks. This includes data collection, transformation, and storage strategies. Candidates should be comfortable working with AWS tools such as AWS Glue for ETL, Amazon S3 for scalable storage, and Amazon Kinesis for real-time data streaming. Proper data preprocessing is critical to ensure that the input to ML models is clean, balanced, and relevant.

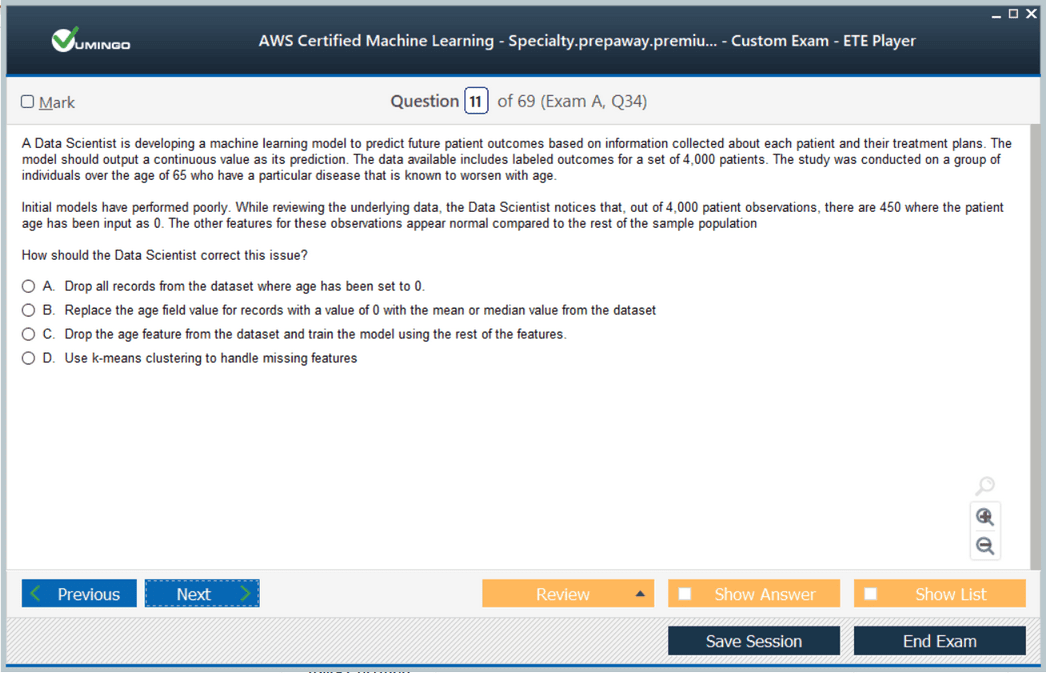

In this domain, knowledge of data formats, schema design, and data partitioning also plays an important role. Handling missing or noisy data and designing pipelines for continuous data ingestion are common tasks. Mastery of these skills reduces errors and enhances model accuracy.
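Here is a minimal sketch of one common way to handle missing values: median imputation in plain Python. Two choices are assumptions made for illustration. Missing entries are represented as `None`, and the median is used because it is less sensitive to outliers than the mean. The function name `impute_median` is hypothetical. In an AWS pipeline, this logic would more likely run inside an ETL or processing script than as a standalone function.

```python
from statistics import median
from typing import List, Optional


def impute_median(values: List[Optional[float]]) -> List[float]:
    """Replace missing entries (None) with the median of the observed values."""
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("cannot impute a column that has no observed values")
    fill = median(observed)
    return [fill if v is None else v for v in values]


print(impute_median([3.0, None, 1.0, 10.0, None]))  # [3.0, 3.0, 1.0, 10.0, 3.0]
```

The median of the observed values here is 3.0, so the outlier 10.0 does not pull the fill value upward as a mean would.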

Exploratory Data Analysis (EDA), which makes up 24% of the exam, requires proficiency in analyzing and interpreting data to extract meaningful insights. Visualization tools and statistical techniques help identify trends, outliers, and correlations. Candidates should be skilled in using AWS services like Amazon Athena for querying large datasets and Amazon QuickSight for visualization.

EDA involves selecting appropriate features for model training, reducing dimensionality, and performing feature scaling or normalization. These steps influence model performance and training efficiency. Understanding the difference between labeled and unlabeled data, recognizing biases, and applying statistical tests are also vital.
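To make the scaling step concrete, here is a small sketch of two common techniques: min-max normalization into [0, 1] and z-score standardization. The helper names are hypothetical. Mapping a constant column to zeros is one possible convention, not the only one.

```python
from statistics import mean, pstdev
from typing import List


def min_max_scale(xs: List[float]) -> List[float]:
    """Linearly rescale values into the range [0, 1]."""
    lo, hi = min(xs), max(xs)
    if hi == lo:
        return [0.0 for _ in xs]  # constant feature: nothing to rescale
    return [(x - lo) / (hi - lo) for x in xs]


def standardize(xs: List[float]) -> List[float]:
    """Z-score: subtract the mean, then divide by the population standard deviation."""
    mu, sigma = mean(xs), pstdev(xs)
    if sigma == 0:
        return [0.0 for _ in xs]
    return [(x - mu) / sigma for x in xs]


print(min_max_scale([10, 20, 30]))            # [0.0, 0.5, 1.0]
print(standardize([2, 4, 4, 4, 5, 5, 7, 9]))  # [-1.5, -0.5, -0.5, -0.5, 0.0, 0.0, 1.0, 2.0]
```

Min-max scaling keeps values in a fixed range but is sensitive to extreme values. Standardization centers each feature at zero with unit spread, which many gradient-based models tend to prefer.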

Modeling constitutes the largest portion of the exam at 36%. It tests knowledge about selecting the right machine learning algorithms and training models. Candidates must understand the differences between regression, classification, clustering, and reinforcement learning. Familiarity with Amazon SageMaker for model building and tuning is essential.

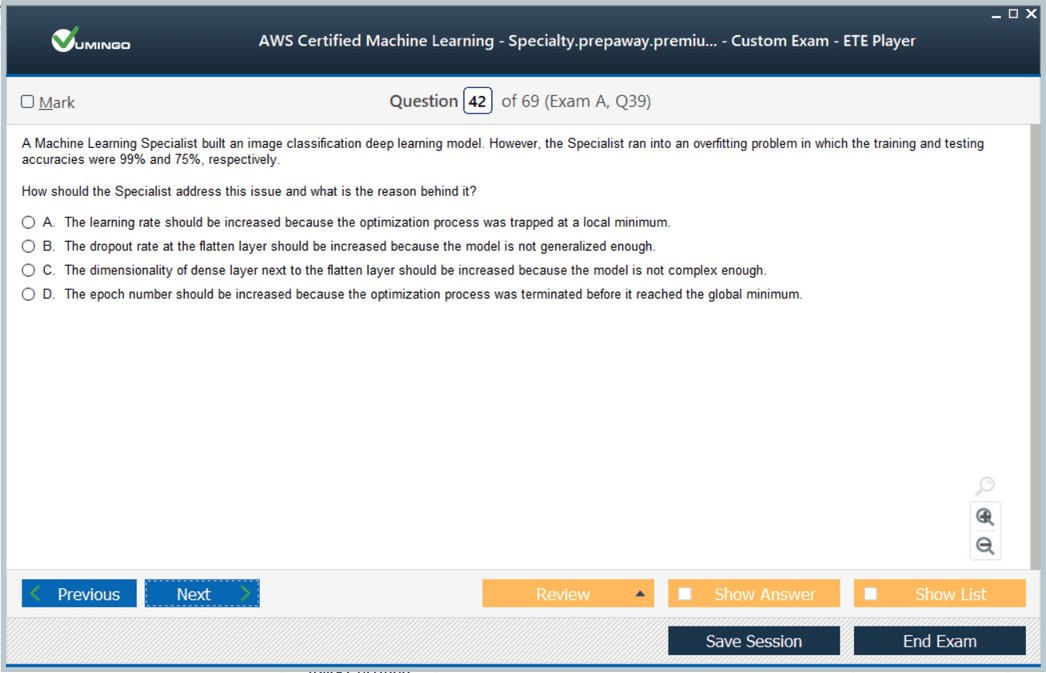

In this domain, it is important to grasp hyperparameter optimization and model evaluation metrics such as accuracy, precision, recall, F1 score, and ROC-AUC. Candidates should also understand overfitting, underfitting, the bias-variance tradeoff, and how to handle imbalanced datasets. Knowledge of deep learning frameworks like TensorFlow, MXNet, and PyTorch is beneficial.
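The classification metrics named above can be computed directly from confusion-matrix counts. The sketch below assumes binary labels encoded as 0 and 1, with 1 as the positive class. `classification_metrics` is a hypothetical helper, not a SageMaker API. The example shows why accuracy alone can mislead on imbalanced data.

```python
from typing import Dict, List


def classification_metrics(y_true: List[int], y_pred: List[int]) -> Dict[str, float]:
    """Accuracy, precision, recall, and F1 for binary labels where 1 is the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0  # of predicted positives, how many are real
    recall = tp / (tp + fn) if tp + fn else 0.0     # of real positives, how many were found
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": (tp + tn) / len(pairs), "precision": precision, "recall": recall, "f1": f1}


# Imbalanced example: 8 negatives, 2 positives, and a model that always predicts 0.
print(classification_metrics([0] * 8 + [1, 1], [0] * 10))
# {'accuracy': 0.8, 'precision': 0.0, 'recall': 0.0, 'f1': 0.0}
```

A model that never finds a positive case still scores 80% accuracy here. Recall and F1 expose the problem immediately.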

Machine Learning Implementation and Operations makes up 20% of the exam and covers deploying models into production environments, monitoring their performance, and ensuring scalability. Skills in automating workflows, version control, CI/CD pipelines, and managing the ML lifecycle are necessary.

Candidates should be familiar with deploying models to SageMaker endpoints, scaling those endpoints, and setting up monitoring with Amazon CloudWatch. Understanding security best practices, including encryption, access control, and compliance within ML pipelines, is critical. Concepts like model drift, retraining strategies, and cost optimization techniques are also tested.

Developing A Comprehensive Study Plan

Preparing for the AWS Certified Machine Learning - Specialty exam requires a disciplined and structured study approach. It is important to allocate time for learning theory, gaining hands-on experience, and reviewing exam-specific topics.

Start with a thorough review of the AWS exam guide, which outlines the skills and knowledge areas covered. Map out a study schedule that covers each domain progressively. Give the most time to modeling (36%) and exploratory data analysis (24%), the two most heavily weighted domains.
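As a worked example of weighting study time, the sketch below splits a study budget across the four domains using the percentages stated in this guide (20/24/36/20). The 60-hour budget and the `allocate_hours` name are hypothetical choices for illustration.

```python
from typing import Dict

# Exam domain weights as stated in this guide.
DOMAIN_WEIGHTS = {
    "Data Engineering": 0.20,
    "Exploratory Data Analysis": 0.24,
    "Modeling": 0.36,
    "ML Implementation and Operations": 0.20,
}


def allocate_hours(total_hours: float, weights: Dict[str, float] = DOMAIN_WEIGHTS) -> Dict[str, float]:
    """Split a study budget across domains in proportion to their exam weight."""
    total_weight = sum(weights.values())
    return {domain: round(total_hours * w / total_weight, 1) for domain, w in weights.items()}


for domain, hours in allocate_hours(60).items():
    print(f"{domain}: {hours} h")
```

With 60 hours, this works out to roughly 12, 14.4, 21.6, and 12 hours, respectively. In practice you would shift some of that time toward whichever domains practice tests show to be weakest.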

Reading whitepapers and AWS documentation related to ML services deepens conceptual understanding. Practical labs or sandbox environments allow candidates to implement AWS ML services, build pipelines, and deploy models. Real-world practice is invaluable to grasp how services interact and what challenges arise.

Using sample exam questions and timed practice tests helps familiarize candidates with the exam format and question types. It also aids in identifying weak areas to revisit.

Joining study groups or online forums can provide peer support and expose learners to diverse problem-solving approaches. However, it is essential to validate the accuracy of shared information.

Mastering Machine Learning Algorithms And Frameworks

Deep knowledge of machine learning algorithms is essential. Candidates should be comfortable explaining how popular algorithms work, their strengths and limitations, and scenarios where each is most applicable.

Supervised learning algorithms such as linear regression, logistic regression, decision trees, random forests, and support vector machines are fundamental. Understanding ensemble methods like boosting and bagging further enhances modeling skill.

Unsupervised learning techniques like k-means clustering, hierarchical clustering, and principal component analysis are important for exploratory tasks.
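The core k-means idea (Lloyd's algorithm) fits in a few lines of plain Python. This toy version assumes 2-D points and caller-supplied starting centroids. SageMaker also provides a built-in k-means algorithm, but this sketch is not that implementation. It only illustrates the alternating assign-and-update loop.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def squared_distance(a: Point, b: Point) -> float:
    return (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2


def kmeans(points: List[Point], centroids: List[Point], max_iter: int = 100) -> Tuple[List[Point], List[int]]:
    """Lloyd's algorithm: alternate nearest-centroid assignment and centroid updates."""
    labels: List[int] = []
    for _ in range(max_iter):
        # Assignment step: each point joins the cluster of its nearest centroid.
        labels = [min(range(len(centroids)), key=lambda k: squared_distance(p, centroids[k])) for p in points]
        # Update step: move each centroid to the mean of the points assigned to it.
        updated: List[Point] = []
        for k, old in enumerate(centroids):
            members = [p for p, label in zip(points, labels) if label == k]
            if members:
                updated.append((sum(x for x, _ in members) / len(members),
                                sum(y for _, y in members) / len(members)))
            else:
                updated.append(old)  # an empty cluster keeps its previous centroid
        if updated == centroids:  # converged: assignments can no longer change
            break
        centroids = updated
    return centroids, labels


pts = [(1, 1), (1.5, 2), (1, 0.5), (8, 8), (9, 8.5), (8.5, 9)]
print(kmeans(pts, [(0, 0), (10, 10)]))
```

Because results depend on the starting centroids, practical implementations usually try several initializations or use a seeding scheme such as k-means++.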

Reinforcement learning concepts, though less emphasized, may appear, especially related to sequential decision-making problems.

Understanding neural networks, including feedforward networks, convolutional neural networks (CNNs), and recurrent neural networks (RNNs), is beneficial due to their prevalence in modern ML applications.

Hands-on familiarity with Amazon SageMaker’s built-in algorithms and the ability to bring your own models using popular frameworks is necessary. Knowing how to use SageMaker hyperparameter tuning jobs to optimize models also improves exam readiness.

Gaining Hands-On Experience With AWS Machine Learning Tools

Theory alone is insufficient for the exam; hands-on experience is crucial. Working directly within the AWS ecosystem provides familiarity with service limitations, configurations, and integration capabilities.

Building end-to-end ML pipelines, from data ingestion using AWS Glue or Kinesis to model training in SageMaker, deployment, and monitoring, simulates real-world workflows.

Practice setting up SageMaker notebooks, training jobs, endpoints, and batch transform jobs. Experiment with tuning hyperparameters, model debugging, and logging.

Implementing security controls such as IAM roles, encryption, and VPC configurations for ML resources highlights the importance of secure deployments.

Continuous experimentation with AWS CLI and SDKs for automating ML workflows helps deepen understanding and prepares candidates for scenario-based exam questions.

Strategies For Tackling The Exam Questions

The exam consists of multiple-choice and multiple-response questions, many of which are scenario-based. Effective strategies include carefully reading each question to identify key details and constraints.

Because questions may include distractors, eliminating clearly incorrect options first can improve accuracy. For multiple-response questions, selecting all correct answers is necessary to earn points.

Time management is critical. Candidates should pace themselves to ensure they answer all questions within the allotted three hours.

Reviewing flagged questions at the end can help catch misread or overlooked items.

Understanding the AWS context is crucial; the best answers align with AWS best practices around scalability, cost-effectiveness, security, and operational excellence.

Maintaining Skills After Certification

Earning the certification is just the beginning. The field of machine learning evolves rapidly, and continuous learning is necessary to remain proficient.

Keeping abreast of new AWS ML services and features, following emerging research in AI, and practicing with new datasets sharpen skills.

Participation in ML competitions, open-source projects, and community events can provide practical exposure.

Building a portfolio of projects that demonstrate advanced ML applications on AWS further solidifies expertise.

Career Opportunities With AWS Certified Machine Learning - Specialty Certification

Earning the AWS Certified Machine Learning - Specialty certification opens doors to a broad spectrum of career opportunities in the rapidly expanding field of artificial intelligence and machine learning. This credential validates a professional's ability to design, build, deploy, and maintain machine learning solutions on AWS, positioning certified individuals as valuable assets to organizations across industries.

The certification is recognized globally and highly regarded by employers who seek candidates capable of leveraging AWS services to solve complex ML problems. Job roles typically associated with this certification include machine learning engineer, data scientist, AI specialist, solutions architect focusing on ML, and data analyst with a focus on predictive modeling.

Emerging Roles In Machine Learning

With AI becoming increasingly integrated into business processes, specialized roles have emerged that require deep knowledge of ML workflows and cloud implementation. Certified professionals often transition into positions such as ML engineer, where they develop algorithms and models, tune hyperparameters, and deploy models in production environments.

Data scientists with this certification can better manage the end-to-end ML pipeline, including data wrangling, feature engineering, and interpreting model results to provide actionable insights. These roles demand a blend of statistical expertise and cloud proficiency, which this certification helps demonstrate.

Solutions architects focusing on machine learning design scalable, cost-effective architectures that incorporate data lakes, ML pipelines, and monitoring systems. They play a crucial role in bridging the gap between data science teams and cloud infrastructure teams.

In addition, roles such as ML operations (MLOps) engineers are gaining prominence. These specialists focus on maintaining and optimizing ML models after deployment, handling model versioning, monitoring model drift, and ensuring models comply with governance and security standards.

Salary Expectations For AWS Machine Learning Specialists

The financial incentives for pursuing the AWS Certified Machine Learning - Specialty certification are significant. Salaries in machine learning roles are competitive and tend to increase with certification and experience.

Certified professionals often command salaries well above average IT roles due to the high demand for ML expertise combined with cloud knowledge. Compensation varies by location, industry, and organizational size, but in many tech hubs, salaries can reach six figures and beyond.

The certification enhances earning potential not just through higher base salaries but also by enabling access to roles that include bonuses, stock options, and other benefits tied to high-impact projects.

Industries such as finance, healthcare, retail, and manufacturing increasingly rely on ML solutions for automation, risk management, customer insights, and predictive maintenance, fueling demand for certified specialists.

Industry Applications Of AWS Machine Learning

AWS Machine Learning services are applied across a wide range of industries to solve business challenges and create value through automation and intelligence.

In healthcare, ML models assist in medical imaging analysis, patient data management, and predictive analytics for disease outbreaks. AWS enables secure and scalable environments to handle sensitive health data while meeting compliance requirements.

Finance uses ML for fraud detection, credit risk assessment, algorithmic trading, and customer segmentation. The ability to process large datasets quickly and securely is a major advantage of AWS cloud-based ML solutions.

Retailers deploy ML for personalized recommendations, inventory forecasting, and customer sentiment analysis. Machine learning models integrated with AWS services allow retailers to adapt dynamically to consumer behavior changes.

Manufacturing benefits from predictive maintenance models that analyze sensor data to forecast equipment failures and optimize production schedules. AWS’s IoT and ML integration capabilities support real-time monitoring and decision-making.

In addition, AWS Machine Learning enables innovations in autonomous vehicles, natural language processing applications like chatbots and virtual assistants, and image and speech recognition across multiple domains.

The Role Of AWS Machine Learning In Digital Transformation

Digital transformation efforts increasingly depend on artificial intelligence and machine learning to automate processes, enhance customer experiences, and drive innovation.

Organizations leverage AWS ML services to accelerate their digital transformation journey by utilizing scalable, flexible, and cost-effective machine learning infrastructure. Certified professionals guide these initiatives by architecting end-to-end solutions that meet business objectives.

With the AWS Certified Machine Learning - Specialty certification, professionals are equipped to translate business problems into ML solutions. They can lead initiatives involving data ingestion, model building, and deployment while ensuring security and compliance.

The ability to operationalize AI models on AWS helps companies remain competitive and responsive in fast-changing markets.

Preparing For The Future Of Machine Learning Careers

The future of machine learning careers looks promising, with continuous advancements in AI technologies and expanding cloud adoption.

To stay relevant, certified professionals should focus on continuous learning. This includes mastering new AWS ML features, deepening understanding of emerging algorithms, and gaining expertise in related fields like edge computing, AI ethics, and explainability.

Building a diverse portfolio of projects, contributing to open-source ML tools, and engaging with the AI community helps sharpen skills and establish thought leadership.

Cross-disciplinary knowledge, including business acumen and communication skills, is becoming increasingly important for ML professionals to effectively collaborate with stakeholders and drive impactful outcomes.

Challenges And Opportunities In Machine Learning On AWS

Working with machine learning on AWS presents unique challenges and opportunities.

Data privacy and security are paramount. Certified professionals must ensure models and data pipelines comply with regulatory requirements while maintaining performance and availability.

Managing cost in cloud-based ML solutions requires careful planning. Selecting the right instance types, optimizing data storage, and automating resource scaling are key areas of focus.

Interpreting complex ML models and explaining them to non-technical stakeholders remains a challenge but is critical for trust and adoption.

The opportunity lies in the ever-expanding AWS ecosystem, which continuously introduces new ML services and enhancements. Staying current enables professionals to leverage the latest innovations, such as automated ML pipelines and AI-driven analytics.

Building Expertise Through Practical Experience

Hands-on experience is essential to mastering machine learning on AWS. Working on real-world projects enables professionals to understand nuances that theoretical study cannot cover.

Implementing data pipelines, training models, deploying endpoints, and monitoring performance in live environments build confidence and problem-solving skills.

Experimentation with various datasets and problem types broadens understanding of algorithm applicability and limitations.

Active participation in ML projects also provides insight into collaboration dynamics, DevOps practices for ML, and operational challenges.

The Importance Of Soft Skills In Machine Learning Roles

While technical skills are fundamental, soft skills significantly impact success in machine learning roles.

Effective communication helps explain complex models and results to diverse audiences, facilitating informed decision-making.

Problem-solving and critical thinking are necessary to navigate ambiguous data scenarios and evolving business requirements.

Project management skills enable managing timelines, resources, and cross-functional teams in ML initiatives.

Adaptability and continuous learning mindset prepare professionals for the fast-evolving AI landscape.

A Domain-By-Domain Preparation Guide

The AWS Certified Machine Learning - Specialty certification demands thorough preparation, combining theoretical knowledge with practical skills. This certification assesses the ability to build, train, tune, and deploy machine learning models on the AWS platform. Preparation requires understanding core AWS services related to machine learning and mastering data engineering, exploratory data analysis, modeling, and machine learning operations.

Candidates should begin by studying the exam guide to identify the key knowledge domains and topics. The domains are weighted differently (from 20% to 36% of the exam), so allocating study time proportionally will help. The exam is not only about recalling facts but also about applying concepts to solve real-world problems. Therefore, hands-on experience with AWS services like SageMaker, Glue, Kinesis, and Athena is essential.

Mastering Data Engineering On AWS

Data engineering is foundational to machine learning workflows. It involves collecting, cleaning, and preparing data to feed ML models effectively. In the AWS ecosystem, this often means using services like AWS Glue for extract, transform, and load (ETL) processes, Amazon S3 for scalable storage, and Amazon Kinesis for real-time data ingestion.

Understanding how to design efficient data pipelines that handle both batch and streaming data is crucial. Candidates should be able to implement automated workflows that preprocess and transform data, handle missing values, and balance datasets for training. Choices about data partitioning, compression, and file format (for example, columnar Parquet versus row-based CSV) affect pipeline performance and cost.
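Partitioning is often done with Hive-style `key=value` prefixes in S3 object keys. Athena and the AWS Glue Data Catalog can treat these prefixes as partition columns, so queries that filter on them scan less data. The sketch below only builds such a key. The prefix and file names are hypothetical. Partitioning by date is also an assumption, since the right partition key depends on how the data is queried.

```python
from datetime import datetime


def partitioned_key(prefix: str, event_time: datetime, filename: str) -> str:
    """Build an S3 object key using Hive-style date partitions (key=value path segments)."""
    return (
        f"{prefix.rstrip('/')}/year={event_time.year:04d}/"
        f"month={event_time.month:02d}/day={event_time.day:02d}/{filename}"
    )


print(partitioned_key("raw/clickstream/", datetime(2024, 3, 7, 12, 30), "part-0000.parquet"))
# raw/clickstream/year=2024/month=03/day=07/part-0000.parquet
```

Zero-padding the month and day keeps keys lexically sortable. Very fine-grained partitions (for example, per minute) can create many small files, which tends to hurt query performance.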

Data security and compliance also fall under data engineering responsibilities. Candidates need to understand how to encrypt data at rest and in transit and apply appropriate IAM policies to restrict access.
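To illustrate restricting access, the sketch below builds a least-privilege IAM policy document that only allows reading objects under a single S3 prefix. The bucket and prefix names are hypothetical. A real training role would typically need more permissions than this. For example, listing objects requires `s3:ListBucket` on the bucket ARN, and saving model artifacts requires write access. Those permissions are deliberately omitted here.

```python
import json


def read_only_policy(bucket: str, prefix: str) -> dict:
    """Least-privilege IAM policy: allow reading objects under one prefix of one bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": f"arn:aws:s3:::{bucket}/{prefix.strip('/')}/*",
            }
        ],
    }


print(json.dumps(read_only_policy("example-ml-data", "training/"), indent=2))
```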

Developing Expertise In Exploratory Data Analysis

Exploratory data analysis (EDA) is the process of inspecting data sets to summarize their main characteristics, often using visual methods. It helps identify patterns, anomalies, and relationships that inform feature engineering and model selection.

Candidates should be comfortable working with both structured and unstructured data. Statistical analysis skills, including measures of central tendency, variance, correlation, and hypothesis testing, support sound EDA practices. Visualization techniques like histograms, scatter plots, and box plots reveal data distributions and outliers.
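Box plots flag outliers with the interquartile-range (IQR) rule, which is easy to apply programmatically. This sketch computes quartiles by linear interpolation between sorted values. Other quartile conventions exist and can shift the fences slightly. The 1.5 multiplier is the conventional default, not a fixed rule.

```python
from typing import List


def percentile(sorted_xs: List[float], q: float) -> float:
    """Linear-interpolation percentile (q in [0, 1]) of an already-sorted list."""
    pos = (len(sorted_xs) - 1) * q
    lo = int(pos)
    hi = min(lo + 1, len(sorted_xs) - 1)
    return sorted_xs[lo] + (sorted_xs[hi] - sorted_xs[lo]) * (pos - lo)


def iqr_outliers(xs: List[float], k: float = 1.5) -> List[float]:
    """Values more than k * IQR below Q1 or above Q3 (the usual box-plot whisker rule)."""
    s = sorted(xs)
    q1, q3 = percentile(s, 0.25), percentile(s, 0.75)
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return [x for x in xs if x < lower or x > upper]


print(iqr_outliers([10, 12, 11, 13, 12, 11, 95]))  # [95]
```

Flagged values still deserve a look before they are dropped: an outlier can be a data-entry error or a genuine rare event that the model needs to learn.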

AWS tools such as Amazon Athena allow querying large data lakes with standard SQL, while Amazon QuickSight facilitates interactive visualization. Effective use of these tools enables candidates to explore data efficiently and generate actionable insights.

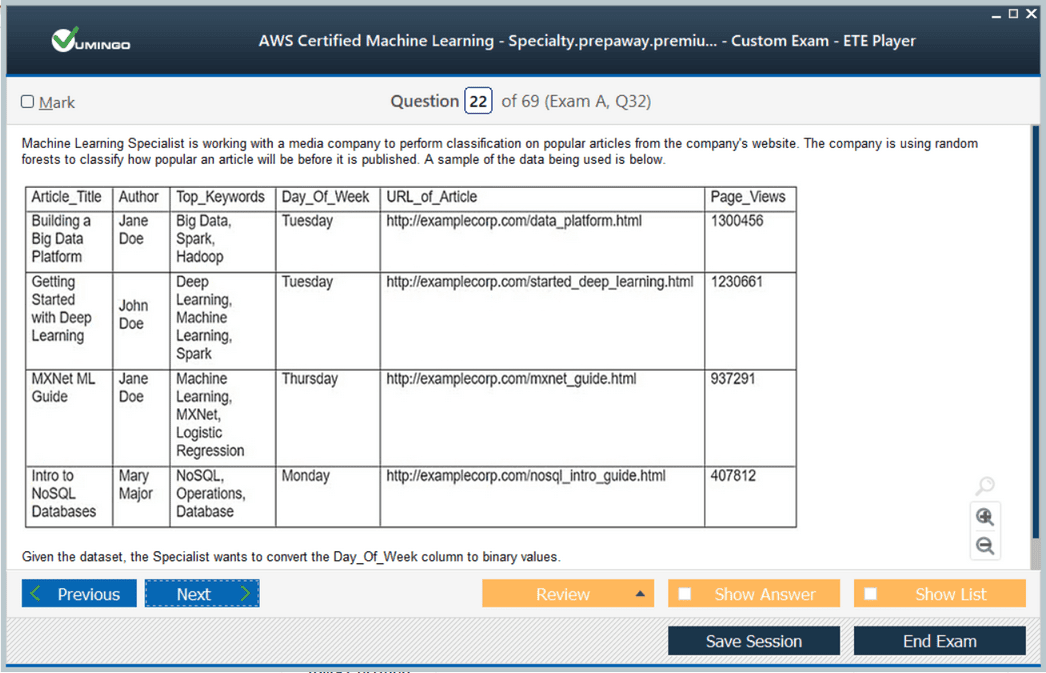

Feature engineering, which is closely tied to EDA, involves selecting, transforming, or creating new features that improve model accuracy. Scaling, normalization, encoding categorical variables, and dimensionality reduction methods like principal component analysis are commonly used.
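Encoding categorical variables is one of the transformations mentioned above. The sketch below shows one-hot encoding in plain Python, and the helper name is hypothetical. It learns the categories from the input it is given. As a result, a category never seen during training would raise a `KeyError` at inference time, so it would need an explicit policy (for example, an "unknown" column).

```python
from typing import Dict, List, Tuple


def one_hot(values: List[str]) -> Tuple[List[str], List[List[int]]]:
    """Encode categorical strings as 0/1 indicator columns, one column per distinct category."""
    categories = sorted(set(values))  # sorted for a deterministic column order
    index: Dict[str, int] = {c: i for i, c in enumerate(categories)}
    rows: List[List[int]] = []
    for v in values:
        row = [0] * len(categories)
        row[index[v]] = 1
        rows.append(row)
    return categories, rows


columns, encoded = one_hot(["red", "green", "red", "blue"])
print(columns)  # ['blue', 'green', 'red']
print(encoded)  # [[0, 0, 1], [0, 1, 0], [0, 0, 1], [1, 0, 0]]
```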

In-Depth Understanding Of Machine Learning Modeling

Modeling forms the core of the certification and accounts for the largest share of the exam. Candidates need a solid grasp of various machine learning algorithms and when to apply them.

Supervised learning techniques such as linear regression, logistic regression, support vector machines, decision trees, and ensemble methods like random forests and gradient boosting are fundamental. Understanding the assumptions behind each algorithm and their sensitivity to data characteristics is essential.

Unsupervised learning methods like clustering and anomaly detection also play a role, especially for exploratory analysis and preprocessing tasks.

Deep learning techniques including convolutional neural networks (CNNs) for image data and recurrent neural networks (RNNs) for sequence data are increasingly important. Familiarity with neural network architectures and training processes gives candidates an edge.

Proficiency in hyperparameter tuning is necessary. Hyperparameters are settings chosen before training, such as the learning rate or the maximum depth of a tree, as opposed to the parameters a model learns from data. Amazon SageMaker offers automated hyperparameter tuning jobs, and candidates should know how to configure them and interpret their results.
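Automated tuning jobs search the hyperparameter space on your behalf. The sketch below shows plain random search, one simple search strategy, against a made-up objective that stands in for "train a model and return its validation loss." The hyperparameter names, their ranges, and `fake_validation_loss` are all hypothetical. This is not how SageMaker implements its tuning jobs.

```python
import random
from typing import Callable, Dict, Tuple

Space = Dict[str, Tuple[float, float]]


def random_search(objective: Callable[[Dict[str, float]], float], space: Space,
                  n_trials: int, seed: int = 0) -> Tuple[Dict[str, float], float]:
    """Sample each hyperparameter uniformly from its range; keep the lowest-loss trial."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    best_params: Dict[str, float] = {}
    best_loss = float("inf")
    for _ in range(n_trials):
        params = {name: rng.uniform(lo, hi) for name, (lo, hi) in space.items()}
        loss = objective(params)
        if loss < best_loss:
            best_params, best_loss = params, loss
    return best_params, best_loss


def fake_validation_loss(p: Dict[str, float]) -> float:
    """Hypothetical stand-in for training a model; lowest at learning_rate=0.1, dropout=0.2."""
    return (p["learning_rate"] - 0.1) ** 2 + (p["dropout"] - 0.2) ** 2


space = {"learning_rate": (0.001, 0.5), "dropout": (0.0, 0.5)}
best, loss = random_search(fake_validation_loss, space, n_trials=200)
print(best, loss)
```

Random search is a reasonable baseline. Strategies such as Bayesian optimization use the results of earlier trials to choose the next candidates, which usually needs fewer expensive training runs.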

Evaluating models using metrics like accuracy, precision, recall, F1 score, and ROC-AUC helps select the best-performing models. Candidates must also recognize issues such as overfitting and underfitting and apply techniques like cross-validation and regularization to mitigate them.
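Cross-validation estimates how well a model generalizes by rotating which slice of the data is held out. The sketch below generates k-fold train/validation index splits in plain Python. It does not shuffle, so it assumes the rows are already in random order. For imbalanced labels, a stratified variant would be preferable.

```python
from typing import Iterator, List, Tuple


def k_fold_indices(n: int, k: int) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train, validation) index lists; every sample lands in exactly one validation fold."""
    if not 2 <= k <= n:
        raise ValueError("k must satisfy 2 <= k <= n")
    # Spread the remainder so fold sizes differ by at most one.
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        validation = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n))
        yield train, validation
        start += size


for train, val in k_fold_indices(10, 3):
    print(len(train), val)
```

Averaging a metric across the k validation folds gives a more stable estimate than a single train/test split. A large gap between training and validation scores is a typical sign of overfitting.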

Machine Learning Implementation And Operations On AWS

Deploying machine learning models into production and managing them over time are key skills tested by the certification. Candidates should understand how to deploy models as SageMaker endpoints for real-time inference or batch transform jobs for large-scale predictions.

Operational tasks include monitoring model performance and usage, detecting model drift, and automating retraining workflows. Amazon CloudWatch and Amazon SageMaker Model Monitor are tools that facilitate these functions.
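One way to put a number on drift is to compare the distribution of a feature at training time with what the endpoint sees in production. The sketch below uses the Population Stability Index (PSI), a widely used drift score. PSI is not what SageMaker Model Monitor computes; Model Monitor works from its own baseline statistics and constraints. The bin edges, the sample values, and the common rule of thumb that PSI above roughly 0.25 signals a significant shift are all assumptions for illustration.

```python
import math
from typing import List


def bin_proportions(values: List[float], edges: List[float]) -> List[float]:
    """Share of values per bin [edges[i], edges[i+1]); the last bin also includes its right edge."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        for i in range(len(edges) - 1):
            is_last = i == len(edges) - 2
            if edges[i] <= v < edges[i + 1] or (is_last and v == edges[-1]):
                counts[i] += 1
                break
    return [c / len(values) for c in counts]  # values outside the edges are not counted


def psi(expected: List[float], actual: List[float], eps: float = 1e-6) -> float:
    """Population Stability Index between two lists of bin proportions."""
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # guard against log(0) for empty bins
        total += (a - e) * math.log(a / e)
    return total


edges = [0, 25, 50, 75, 100]
baseline = bin_proportions([10, 30, 40, 60, 70, 80, 90, 20], edges)
live = bin_proportions([15, 35, 55, 60, 70, 80, 85, 95], edges)
print(round(psi(baseline, live), 3))
```

In this toy data, the live values shift toward the upper bins and the score lands just above 0.25. Under the assumed rule of thumb, that would be a reasonable trigger to investigate or retrain.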

Security is paramount when deploying ML models. Candidates must implement access controls using IAM roles, encrypt data and models, and ensure compliance with industry standards.

Scalability and cost optimization are additional considerations. Using auto-scaling endpoints, selecting appropriate instance types, and running interruptible training jobs with SageMaker managed Spot Training can reduce costs without compromising performance.

Understanding the full machine learning lifecycle from data ingestion to monitoring and retraining prepares candidates for real-world challenges.

Effective Study Methods And Resources

The breadth of knowledge required means candidates should adopt a multi-faceted study approach. Combining theory, practical labs, and practice exams is ideal.

Starting with AWS’s official exam guide and documentation sets a strong foundation. Whitepapers on machine learning best practices deepen conceptual understanding.

Hands-on practice using the AWS Free Tier or sandbox accounts is vital. Candidates should build end-to-end ML pipelines, experiment with different algorithms, and deploy models to test scenarios.

Practice exams help familiarize candidates with question formats and time constraints. Reviewing incorrect answers clarifies misunderstandings and reinforces learning.

Collaborating with study groups or mentors can provide additional insights and motivation. However, verifying information is important to avoid learning inaccuracies.

Time Management And Exam Strategies

During the exam, managing time efficiently can influence success. Candidates have three hours to complete 65 questions, of which only 50 are scored. Questions include multiple-choice and multiple-response formats.
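The pacing arithmetic is simple but worth doing before exam day. The exam does not indicate which questions are unscored, so the sketch spreads the 180 minutes over all 65 questions. The 20-minute review reserve in the example is a hypothetical choice, not an AWS recommendation.

```python
def minutes_per_question(total_minutes: float, questions: int, review_reserve: float = 0.0) -> float:
    """Average minutes available per question after holding back time for a final review."""
    if not 0 <= review_reserve < total_minutes:
        raise ValueError("review_reserve must be between 0 and total_minutes")
    return (total_minutes - review_reserve) / questions


print(f"{minutes_per_question(180, 65):.2f} min/question with no review buffer")
print(f"{minutes_per_question(180, 65, review_reserve=20):.2f} min/question with a 20-minute buffer")
```

That works out to roughly 2.8 minutes per question, or about 2.5 minutes if 20 minutes are held back for revisiting flagged items.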

Reading questions carefully to understand context and constraints is critical. Eliminating clearly wrong answers first improves odds when guessing.

For multiple-response questions, identifying all correct answers is required to earn credit. Marking difficult questions for review and returning later prevents getting stuck.

Candidates should avoid rushing but also keep a steady pace. Leaving time to revisit flagged questions increases confidence.

A calm and focused mindset supports better comprehension and decision-making.

Continuing Professional Development Post-Certification

The certification represents an important milestone but ongoing learning is essential. Machine learning and AWS evolve rapidly, so professionals must stay current.

Exploring new AWS ML services, such as Amazon SageMaker Autopilot for automated machine learning and Amazon Augmented AI (A2I) for human review of predictions, helps maintain a technical edge.

Engaging with the machine learning community, attending conferences, and contributing to projects deepen expertise.

Experimenting with diverse datasets and problem domains broadens skills.

Soft skills like communication, problem-solving, and project management enhance effectiveness and career growth.

The AWS Certified Machine Learning - Specialty certification opens the door to a rewarding, dynamic career. Commitment to lifelong learning ensures sustained success in this exciting field.

Final Words

Earning the AWS Certified Machine Learning - Specialty certification is more than just achieving a credential; it marks a significant milestone in a professional’s journey within the rapidly evolving field of machine learning on the cloud. This certification demonstrates a deep understanding of machine learning concepts combined with the practical ability to design, build, and deploy ML solutions using AWS services. As organizations increasingly adopt AI and machine learning to gain competitive advantages, certified professionals become essential drivers of innovation and efficiency.

The exam’s focus on data engineering, exploratory data analysis, modeling, and operationalizing machine learning reflects the real-world challenges faced by practitioners. Successfully navigating these areas requires not only technical skills but also a strategic mindset and attention to security, scalability, and cost management. Preparing for this exam encourages a comprehensive skill set that balances theory with hands-on experience, which ultimately leads to better decision-making and more effective ML deployments.

Furthermore, this certification opens career opportunities across industries such as healthcare, finance, retail, and manufacturing, where machine learning applications are transforming how businesses operate. The ability to use AWS's broad ecosystem to solve complex problems makes certified professionals sought after, and the credential can support competitive salaries and career growth.

However, earning the certification is just the beginning. The field of machine learning and cloud technology is constantly advancing. Continuous learning, staying updated on new tools and practices, and engaging with the AI community will keep certified individuals at the forefront of innovation. Developing soft skills such as communication and collaboration also plays a critical role in translating technical achievements into impactful business solutions.

In conclusion, the AWS Certified Machine Learning - Specialty certification is a powerful investment in one’s career. It equips professionals with the knowledge and skills to harness the full potential of machine learning on AWS, helping them become leaders in this dynamic and impactful domain.
