Google Cloud Professional Cloud Architect: Top Labs for Real-World Prep

The Google Cloud Professional Cloud Architect certification is one of the most recognized credentials in cloud computing. It is designed for professionals who want to demonstrate their ability to design, develop, and manage secure, scalable, and reliable cloud solutions on Google Cloud. The certification is valued across industries because it validates expertise in applying cloud architecture best practices to solve business challenges. Organizations adopting cloud technologies are constantly looking for skilled professionals who can optimize operations, reduce costs, and deliver innovation through Google Cloud.

Achieving this certification requires both theoretical understanding and practical experience. Theoretical learning builds the foundation, but it is through hands-on practice that candidates develop the ability to tackle real-world problems. Hands-on labs offer a controlled environment for simulating complex scenarios, so candidates can practice designing, deploying, and managing Google Cloud solutions.

Why Hands-On Labs Are Essential for Cloud Architects

The Professional Cloud Architect exam does not simply test rote memorization of facts. Instead, it assesses the candidate’s ability to apply concepts to business cases and technical challenges. This is where hands-on labs prove to be indispensable. By working directly in simulated Google Cloud environments, candidates learn how to configure services, troubleshoot issues, and implement best practices in architecture.

Hands-on labs provide a bridge between theory and application. For instance, reading about virtual machines is not enough; configuring them, scaling them, and integrating them with other services builds true expertise. Candidates learn how different services interact, how permissions and policies influence operations, and how to optimize architectures for performance, reliability, and cost efficiency.

Beyond technical skills, labs also build confidence. Working through tasks such as setting up load balancers, creating virtual networks, or deploying cloud functions prepares candidates to face the unpredictable challenges of the real exam. Since the exam includes case studies and scenario-based questions, practical experience is key to analyzing requirements, evaluating alternatives, and selecting the best solutions.

The Structure of the Google Cloud Professional Cloud Architect Exam

To appreciate the value of labs, it is important to understand the structure of the certification exam. The exam evaluates six key areas of expertise:

Planning and designing a cloud solution architecture

Managing and provisioning cloud solution infrastructure

Designing for security and compliance

Analyzing and optimizing technical and business processes

Managing the implementation of cloud architecture

Ensuring solution and operations reliability

These domains highlight that a candidate must not only know individual Google Cloud services but also understand how to bring them together in an integrated architecture. For example, designing for security may involve setting up Identity and Access Management policies, while ensuring reliability may require configuring autoscaling or backup strategies.

Hands-on labs provide real exposure to these areas by allowing candidates to perform tasks such as creating virtual private clouds, configuring firewall rules, enabling monitoring and logging, and deploying applications using different compute services. This structured practice strengthens the ability to connect theory with action, which is critical for success.

The Role of Labs in Real-World Readiness

While the certification itself is valuable, the larger goal of becoming a professional cloud architect is to handle real-world challenges. Enterprises expect certified professionals to not only pass exams but also deliver tangible results. Hands-on labs prepare candidates for this by exposing them to scenarios that closely mirror industry use cases.

For example, one lab might focus on building a load-balanced architecture to handle increasing web traffic. Another may require deploying applications with automated scaling and recovery features. Others may dive into data storage, database management, or using infrastructure as code to automate deployments. Each of these labs enhances practical skills that are directly applicable to the workplace.

Furthermore, the iterative practice in labs develops problem-solving skills. Candidates learn how to identify bottlenecks, troubleshoot errors, and optimize systems for better performance. These skills not only help in passing the certification exam but also make professionals more effective in their job roles.

Using Cloud Scheduler with Cloud Functions

Understanding event-driven architecture and task automation is a fundamental skill for a cloud architect. Cloud Scheduler allows you to schedule recurring tasks, while Cloud Functions enables the execution of lightweight, serverless functions in response to events. In this lab, candidates learn how to integrate Cloud Scheduler with Cloud Functions to automate processes efficiently.

The lab begins with creating a virtual machine instance as part of the lab environment. Once the instance is operational, learners deploy a Cloud Function triggered by Pub/Sub messages. Configuring Pub/Sub topics and subscriptions helps candidates understand asynchronous communication patterns in cloud architectures. Next, learners create Cloud Scheduler jobs that publish to the topic on a defined schedule, which in turn triggers the Cloud Function. Testing the scheduled jobs allows candidates to validate the end-to-end workflow and troubleshoot errors.

Through this lab, candidates gain a practical understanding of scheduling recurring tasks, managing event-driven architecture, and integrating multiple cloud services. These are critical skills for designing scalable, maintainable, and efficient cloud solutions.
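The handler side of this workflow can be sketched in a few lines. This is a minimal sketch, assuming a Python function that receives a Pub/Sub-style event whose `data` field is base64-encoded. The names `handle_scheduled_message` and `make_event`, and the `{"task": ...}` payload layout, are hypothetical and chosen only for illustration. The code runs locally and uses no Google Cloud libraries.

```python
import base64
import json


def handle_scheduled_message(event, context=None):
    """Decode a Pub/Sub-style event and report the task it describes.

    The message body arrives base64-encoded in event["data"].
    The payload layout ({"task": ...}) is an assumption for this sketch.
    """
    raw = base64.b64decode(event.get("data", "")).decode("utf-8")
    payload = json.loads(raw) if raw else {}
    task = payload.get("task", "noop")
    return f"running task: {task}"


def make_event(payload):
    """Hypothetical helper: build an event like one a scheduled message would produce."""
    data = base64.b64encode(json.dumps(payload).encode("utf-8")).decode("ascii")
    return {"data": data}


if __name__ == "__main__":
    print(handle_scheduled_message(make_event({"task": "cleanup"})))
```

Building the event locally with `make_event` is a convenient way to test the decoding logic before wiring up a real topic and scheduler job.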

Introduction to Cloud Monitoring

Monitoring and observability are crucial for maintaining the performance and reliability of cloud applications. This lab introduces candidates to Cloud Monitoring, demonstrating how to set up logging agents, create alerting policies, and build dashboards.

Learners start by deploying a virtual machine and installing the logging and monitoring agents (on current images, the Ops Agent covers both roles). They then configure uptime checks to monitor system availability and set up alerting policies to notify administrators when predefined thresholds are breached. Finally, learners create dashboards that display system metrics, which helps them understand performance trends.

By completing this lab, candidates gain hands-on experience with monitoring tools and learn how to design systems that are observable and maintainable. This knowledge directly maps to the exam’s focus on reliability and operational efficiency.
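The idea behind a threshold-based alerting policy can be illustrated without any cloud tooling. The sketch below is a simplified model, not the Cloud Monitoring API: it assumes one metric sample per evaluation interval and fires only when the threshold is breached for a sustained number of intervals. The function name `should_alert` and the sample values are illustrative.

```python
def should_alert(samples, threshold, duration):
    """Return True if the last `duration` samples all exceed `threshold`.

    `samples` is a list of metric values, oldest first, one per evaluation
    interval. This mimics a "stays above threshold for N intervals" condition.
    """
    if duration <= 0 or len(samples) < duration:
        return False
    return all(value > threshold for value in samples[-duration:])


if __name__ == "__main__":
    cpu = [0.42, 0.85, 0.91, 0.88]
    print(should_alert(cpu, threshold=0.8, duration=3))  # last three samples breach
    print(should_alert(cpu, threshold=0.8, duration=4))  # oldest sample is below
```

Requiring a sustained breach rather than a single sample is what keeps short spikes from paging an administrator.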

Using Ansible on Google Compute Engine

Automation is a key competency for a cloud architect, and Ansible is a widely used configuration management tool. This lab demonstrates how to use Ansible to automate the deployment and management of Google Compute Engine instances.

Learners begin by opening the Cloud Console and starting a Cloud Shell session. They then install Ansible within the shell and create a playbook file to define the configuration of virtual machines. Running the playbook automatically provisions the VM instances, demonstrating how automation reduces manual effort and minimizes human error.

This lab equips candidates with skills to implement infrastructure as code, a practice that improves consistency, scalability, and maintainability of cloud solutions. Understanding how to automate deployment is essential for large-scale cloud environments.

Cloud Shell and Google Cloud SDK

Cloud Shell and the Google Cloud SDK are indispensable tools for managing cloud resources programmatically. This lab helps candidates practice creating and deleting resources using both the Cloud Shell interface and the SDK command-line tools.

The lab begins with creating a virtual machine instance and a cloud storage bucket using Cloud Shell. Learners then perform deletion tasks, which help them understand resource lifecycle management. Next, candidates use the SDK to perform the same operations programmatically. This practice emphasizes the importance of automation, repeatability, and efficient cloud resource management.

By completing this lab, candidates develop the confidence to manage cloud environments using command-line tools, which is critical for professional cloud architects who need to handle complex and dynamic infrastructures.

Startup and Shutdown Scripts in Compute Engine

Managing virtual machines effectively requires understanding lifecycle scripts. This lab demonstrates how to use startup and shutdown scripts to automate tasks during VM initialization and termination.

Learners open the console and configure scripts to run at VM startup and shutdown. They observe how scripts can automate tasks such as software installation, configuration, or cleanup processes. Analyzing script behavior provides insight into how cloud resources can be customized for specific operational needs.

This skill is directly relevant for designing cloud architectures that are resilient, cost-efficient, and self-managing. Automating routine tasks ensures that resources are used optimally and reduces the risk of human error.

Introduction to Compute Engine

Compute Engine forms the backbone of Google Cloud’s Infrastructure as a Service offerings. In this lab, candidates create a virtual machine instance and explore both command-line and GUI access.

The lab emphasizes selecting operating systems, configuring instance parameters, and establishing remote access. Candidates learn how to manage virtual machines efficiently and understand their role in scalable cloud architectures. By using GUI mode and SSH connections, learners gain flexibility in managing resources, which is essential for operational efficiency.

Autoscaling in Compute Engine

Autoscaling ensures that cloud applications can handle varying workloads without manual intervention. This lab guides candidates through creating instance templates, defining autoscaling policies, and testing scaling behavior based on CPU utilization.

Through this exercise, candidates understand how to design applications that dynamically adjust resources to meet demand. They gain insights into cost optimization, performance management, and system resilience. These skills are essential for ensuring that cloud solutions remain reliable and efficient under different traffic patterns.
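The arithmetic behind a CPU-utilization scaling policy can be sketched as follows. This is a simplified model under stated assumptions, not the actual autoscaler algorithm, which also accounts for factors such as stabilization and initialization periods. The function name `recommended_size` is hypothetical, and utilization is expressed as integer percentages to keep the arithmetic exact.

```python
def recommended_size(current_size, observed_cpu_pct, target_cpu_pct, min_size, max_size):
    """Estimate the group size that brings average CPU back to the target.

    Simplified model: total load = current_size * observed_cpu_pct, and the
    new size is the smallest instance count that keeps load per instance at
    or below target_cpu_pct, clamped to [min_size, max_size].
    """
    if target_cpu_pct <= 0:
        raise ValueError("target_cpu_pct must be positive")
    total_load = current_size * observed_cpu_pct
    needed = -(-total_load // target_cpu_pct)  # integer ceiling division
    return max(min_size, min(max_size, needed))


if __name__ == "__main__":
    # Four instances at 90% CPU with a 60% target.
    print(recommended_size(4, 90, 60, min_size=2, max_size=10))
```

The clamp to `min_size` and `max_size` is the part that ties scaling to cost: the maximum caps spend during a spike, and the minimum preserves a baseline of capacity when traffic is low.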

Cloud Load Balancing

Load balancing is a fundamental component of highly available and scalable architectures. This lab introduces candidates to TCP and HTTP load balancers, firewall rules, target pools, and IP reservations.

Learners create firewall rules to control access, reserve external IP addresses for public access, and configure target pools to distribute traffic across multiple instances. Setting forwarding rules and testing the load balancer demonstrates how traffic management ensures reliability and performance.

Understanding load balancing is crucial for designing systems that maintain high availability, improve user experience, and optimize resource utilization. This lab emphasizes practical knowledge that candidates can directly apply in enterprise environments.

Cloud Storage Bucket

Cloud storage is central to managing data in Google Cloud. This lab teaches candidates how to create buckets, upload objects, and manage permissions.

Learners explore different storage classes, understand access controls, and practice making objects publicly accessible when required. Handling storage in a secure, efficient, and scalable way is a critical skill for cloud architects, particularly when designing data-heavy solutions.

Google Cloud SQL

Databases are integral to cloud applications, and this lab introduces Google Cloud SQL for relational database management. Candidates learn to create database instances, define schemas, and insert data into tables.

This hands-on experience teaches database administration, schema design, and data integrity. It also demonstrates how to integrate databases with other cloud services, which is essential for developing complete application architectures.

HTTP(S) Load Balancing

HTTP(S) load balancers distribute traffic at the application layer and are crucial for delivering web applications reliably. This lab guides candidates through creating instance templates, instance groups, firewall rules, and backend services.

Learners configure routing rules, assign public IP addresses, and configure health checks. This practice helps candidates understand how to ensure high availability, optimize latency, and maintain secure application delivery.
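Health checks typically decide a backend's state from consecutive probe results rather than from a single probe. The sketch below is a minimal illustration of that idea, assuming a healthy threshold and an unhealthy threshold counted in consecutive probes. The function name `track_health` and the default values are assumptions for this example, not settings taken from the lab.

```python
def track_health(probe_results, healthy_threshold=2, unhealthy_threshold=2, start_healthy=True):
    """Replay probe results (True = success) and return the state after each probe.

    A backend flips to unhealthy after `unhealthy_threshold` consecutive
    failures and back to healthy after `healthy_threshold` consecutive successes.
    """
    healthy = start_healthy
    streak = 0  # consecutive probes that disagree with the current state
    states = []
    for ok in probe_results:
        if ok == healthy:
            streak = 0
        else:
            streak += 1
            limit = healthy_threshold if ok else unhealthy_threshold
            if streak >= limit:
                healthy = ok
                streak = 0
        states.append(healthy)
    return states


if __name__ == "__main__":
    probes = [True, False, True, False, False, True, True]
    print(track_health(probes))
```

In this model a single failed probe does not remove a backend from rotation, which avoids flapping when a probe is lost to a transient network issue.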

Terraform for Infrastructure Automation

Infrastructure as code is a critical skill for cloud architects, and Terraform is one of the leading tools. This lab demonstrates how to deploy networks, virtual machines, and other resources using declarative configuration files.

Candidates start by defining a virtual private cloud with custom subnets, deploy resources programmatically, and finally tear down the infrastructure. This exercise teaches repeatable, scalable, and automated infrastructure management, which is essential for enterprise-grade cloud solutions.

Persistent Disk Backups

Data protection and recovery are fundamental to operational reliability. This lab introduces persistent disk backups, snapshots, and scheduled snapshot management.

Learners create disks, generate manual snapshots, schedule recurring snapshots, and understand restoration processes. These skills are critical for designing architectures that minimize data loss and ensure business continuity.

Cloud Deployment Manager

Deployment Manager allows candidates to automate cloud resource management through templates. This lab teaches how to define deployment configurations, create templates, and deploy resources.

Candidates learn how to manage multiple resources simultaneously, which reduces complexity and ensures consistency across cloud environments. Understanding deployment automation is essential for professional cloud architects who design scalable and maintainable systems.

Hosting Static Websites and CDN Optimization

This lab demonstrates hosting static websites on cloud storage and optimizing delivery with a content delivery network. Candidates upload HTML files, configure bucket permissions, and attach backend buckets to HTTP(S) load balancers with CDN enabled.

Through this lab, candidates gain insight into website performance optimization, global content delivery, and secure resource access. This knowledge is directly applicable when designing cloud-native applications for global user bases.

Firewalls and Security Prioritization

Security is an essential pillar of cloud architecture. This lab focuses on firewall rules, priority configuration, and monitoring traffic flow between instances.

Candidates design ingress firewall rules, test connectivity, and adjust priorities to control network traffic effectively. Understanding these principles ensures the design of secure, compliant, and reliable cloud systems.
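Priority evaluation is easier to reason about with a small model. The sketch below assumes the usual VPC firewall semantics: a lower priority number wins, a deny rule beats an allow rule at the same priority, and ingress traffic that matches no rule is denied. It deliberately matches on destination port only and ignores source ranges, tags, and protocols. The `Rule` class, the rule names, and `evaluate_ingress` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    name: str
    priority: int  # 0 to 65535; a lower number wins
    action: str    # "allow" or "deny"
    port: int


def evaluate_ingress(rules, port):
    """Return (action, rule_name) for ingress traffic to `port`.

    Rules are compared by priority; at equal priority a deny rule is
    preferred over an allow rule. Unmatched ingress traffic is denied.
    """
    matching = [r for r in rules if r.port == port]
    if not matching:
        return ("deny", "implied-deny-ingress")
    best = min(matching, key=lambda r: (r.priority, r.action != "deny"))
    return (best.action, best.name)


if __name__ == "__main__":
    rules = [
        Rule("allow-ssh", 1000, "allow", 22),
        Rule("deny-ssh", 900, "deny", 22),
        Rule("allow-http", 1000, "allow", 80),
    ]
    for port in (22, 80, 443):
        print(port, evaluate_ingress(rules, port))
```

Changing the priority of `deny-ssh` to a number above 1000 flips the outcome for port 22, which is the kind of experiment the lab encourages.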

Sticky Sessions with HTTP Load Balancers

Session affinity, or sticky sessions, is critical for applications that require session persistence. This lab guides candidates in creating instance groups, enabling sticky sessions, and validating health checks.

Candidates learn how traffic routing policies impact user experience, reliability, and scalability. Designing architectures that handle session management efficiently is an essential skill for cloud architects, particularly for web applications and services.
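One way to picture client-IP session affinity is as a deterministic mapping from the client address to a backend. The sketch below is an illustrative stand-in, not the load balancer's actual hashing scheme: it hashes the IP with SHA-256 and takes the result modulo the number of backends. The function name `pick_backend` and the backend names are hypothetical.

```python
import hashlib


def pick_backend(client_ip, backends):
    """Map a client IP to one backend deterministically (client-IP affinity)."""
    if not backends:
        raise ValueError("no healthy backends available")
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(backends)
    return backends[index]


if __name__ == "__main__":
    backends = ["vm-a", "vm-b", "vm-c"]
    # Repeated requests from one client land on the same backend.
    print(pick_backend("203.0.113.7", backends))
    print(pick_backend("203.0.113.7", backends))
```

Note that in this model the mapping can change when a backend is added or removed, which is why applications should not treat session affinity as a guarantee of session persistence.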

Cloud Trace and Observability

Observability is crucial for monitoring performance and diagnosing issues. This lab introduces Cloud Trace, demonstrating how to collect and analyze traces from applications deployed on Cloud Run.

Learners understand how to instrument applications, visualize request flows, and analyze latency. Observability ensures cloud systems are transparent, diagnosable, and maintainable.

Network Load Balancers

Network load balancing distributes traffic at the transport layer and is essential for high-performance, low-latency applications. This lab teaches candidates how to configure TCP load balancers, set up unmanaged instance groups, and deploy firewall rules.

Practical experience with network load balancing enables candidates to design architectures that handle large-scale traffic efficiently and maintain performance under heavy loads.

HTTP(S) Load Balancer Routing Rules

Advanced routing rules in load balancers allow candidates to control traffic distribution based on host and path. This lab guides learners in configuring backend services, instance groups, and DNS records to manage routing.

Candidates learn how to implement multi-tier architectures, manage traffic for multiple services, and optimize application availability. This experience is critical for designing sophisticated cloud applications.
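Host- and path-based routing can be modeled as a two-step lookup. The sketch below is a simplification of how a URL map behaves: it matches the host exactly, then picks the longest matching path prefix, and falls back to a default backend otherwise. The hostnames, backend names, and the function `route` are assumptions made for illustration.

```python
def route(url_map, host, path, default_backend="default-backend"):
    """Pick a backend by exact host match, then longest matching path prefix."""
    path_rules = url_map.get(host)
    if not path_rules:
        return default_backend
    matches = [prefix for prefix in path_rules if path.startswith(prefix)]
    if not matches:
        return default_backend
    return path_rules[max(matches, key=len)]


if __name__ == "__main__":
    url_map = {
        "shop.example.com": {
            "/": "web-backend",
            "/api/": "api-backend",
            "/static/": "static-bucket",
        }
    }
    print(route(url_map, "shop.example.com", "/api/v1/orders"))
    print(route(url_map, "shop.example.com", "/cart"))
    print(route(url_map, "other.example.com", "/"))
```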

Dataflow and Dataproc

Understanding data processing services is essential for architects handling big data workflows. These labs teach candidates to build pipelines with Dataflow and run jobs on Dataproc clusters.

Learners explore batch and stream processing, workflow automation, and performance optimization. This knowledge equips architects to design scalable, reliable, and cost-efficient data pipelines for enterprise applications.

Using Cloud Shell for CLI Commands

Proficiency in the command line is vital for efficient cloud management. This lab focuses on using Cloud Shell to execute CLI commands, create virtual machines, and configure VPC networks.

Candidates practice scripting, automating tasks, and managing resources programmatically. Mastery of Cloud Shell ensures architects can operate efficiently in complex and dynamic cloud environments.

Designing Secure Network Architectures

Network architecture is a core component of cloud solution design. In this lab, candidates learn to design Virtual Private Clouds (VPCs) with subnets, route tables, firewall rules, and peering connections.

The lab begins with creating multiple VPCs to simulate different application environments, such as development, testing, and production. Candidates then configure subnets to segment traffic based on application requirements and security policies. Firewall rules are established to allow or restrict access, and routing tables are defined to direct traffic efficiently between networks. Peering connections between VPCs demonstrate how different environments communicate securely.

Through this lab, candidates develop skills in designing secure, scalable, and maintainable network architectures. These skills are critical for ensuring application performance, reliability, and compliance in enterprise deployments.

Multi-Region Load Balancing and High Availability

High availability is a requirement for mission-critical applications. This lab teaches candidates to configure multi-region load balancing to ensure traffic distribution across different geographical locations.

Learners deploy instances in multiple regions and configure an HTTP(S) load balancer to route traffic based on proximity and availability. Health checks are established to monitor instance performance and remove failing instances automatically from the load balancing pool. Candidates also learn to configure SSL certificates, optimize session handling, and implement global DNS routing.

By completing this lab, candidates understand how to design architectures that maintain high uptime, optimize latency, and ensure a seamless user experience for global applications.

Advanced Cloud Storage Solutions

Managing large-scale data efficiently is essential for cloud architects. This lab focuses on configuring storage buckets with multiple classes, lifecycle management policies, and access controls.

Candidates create buckets in different regions to optimize redundancy and latency. They configure lifecycle rules to automate data transitions between storage classes and set retention policies to comply with regulatory requirements. Access control policies and IAM roles are applied to secure data while maintaining necessary access for applications.

This exercise equips candidates with the ability to design storage solutions that balance cost, performance, and security while meeting enterprise compliance requirements.
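The effect of age-based lifecycle rules can be previewed with a small model. The sketch below assumes three illustrative rules that move objects to colder storage classes as they age. The age thresholds are made up for this example, and `storage_class_for_age` is a hypothetical helper, not part of any Cloud Storage client library.

```python
LIFECYCLE_RULES = [
    # (minimum age in days, storage class to move to); values are illustrative
    (30, "NEARLINE"),
    (90, "COLDLINE"),
    (365, "ARCHIVE"),
]


def storage_class_for_age(age_days, rules=LIFECYCLE_RULES, initial="STANDARD"):
    """Return the class an object would hold after all applicable age rules fire."""
    current = initial
    for min_age, target in sorted(rules):
        if age_days >= min_age:
            current = target
    return current


if __name__ == "__main__":
    for age in (10, 30, 120, 400):
        print(age, storage_class_for_age(age))
```

Running a table like this against expected access patterns is a quick sanity check that the rules balance storage cost against how often older data is actually read.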

Cloud SQL and High-Availability Databases

Relational database management is central to many cloud applications. This lab focuses on deploying Cloud SQL instances with high availability, failover, and read replicas.

Learners create primary Cloud SQL instances and configure failover replicas to ensure uninterrupted service. They also configure read replicas for load distribution and performance optimization. Backup and recovery processes are implemented, and monitoring is enabled to track database health and performance.

Through this lab, candidates gain experience designing database solutions that are resilient, scalable, and maintainable, addressing both performance and business continuity requirements.

Implementing Cloud Bigtable and NoSQL Databases

For large-scale, high-throughput applications, NoSQL databases such as Cloud Bigtable are essential. This lab teaches candidates how to deploy Bigtable instances, create tables, and manage throughput and storage optimizations.

Learners practice inserting, updating, and querying data while monitoring read and write latency. They also configure IAM permissions for secure access and explore integration with analytics tools for real-time insights.

This lab emphasizes the ability to select and implement the appropriate database solution based on application needs, which is critical for efficient cloud architecture design.

Building Data Pipelines with Dataflow

Data processing pipelines enable real-time and batch data transformation. This lab demonstrates creating Dataflow pipelines for ingestion, transformation, and loading into storage or analytical systems.

Candidates construct pipelines to read data from Cloud Storage or Pub/Sub, apply transformations such as filtering and aggregation, and write results to BigQuery or Cloud SQL. They monitor pipeline execution and optimize performance by adjusting parallelism and worker configurations.

Through this lab, candidates learn how to automate data workflows, design scalable pipelines, and integrate data processing into larger cloud architectures. These skills are critical for designing data-driven solutions in enterprises.
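The read, filter, aggregate, and write stages of such a pipeline can be sketched in plain Python. This is not Apache Beam or Dataflow code. It is a minimal sketch of the same stage structure, and the record fields (`region`, `amount`) and the function `run_pipeline` are assumptions for illustration.

```python
from collections import defaultdict


def run_pipeline(records, min_amount=0):
    """Filter records, then aggregate amounts per region."""
    # Filter step: drop records at or below the minimum amount.
    valid = (r for r in records if r["amount"] > min_amount)
    # Aggregate step: sum amounts per region.
    totals = defaultdict(int)
    for record in valid:
        totals[record["region"]] += record["amount"]
    # Output step: return a plain dict ordered by region name.
    return dict(sorted(totals.items()))


if __name__ == "__main__":
    records = [
        {"region": "us", "amount": 40},
        {"region": "eu", "amount": 25},
        {"region": "us", "amount": 0},
        {"region": "eu", "amount": 10},
    ]
    print(run_pipeline(records))
```

In a managed pipeline each of these stages would run in parallel across workers, which is where the parallelism and worker settings mentioned above come into play.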

Implementing Machine Learning Models

Cloud architects increasingly need to design solutions that incorporate AI and machine learning. This lab teaches candidates to deploy machine learning models using Vertex AI (or its predecessor, AI Platform) and integrate predictions into applications.

Learners start with training a model using sample datasets and then deploy it as an endpoint for online predictions. They explore batch predictions, monitor model performance, and manage versions to maintain accuracy over time. Security practices are applied to restrict access to authorized users or applications.

By completing this lab, candidates understand how to integrate AI services into cloud solutions and design architectures that leverage predictive insights for business optimization.

Using Kubernetes Engine for Containerized Applications

Containers and orchestration are critical components of modern cloud architecture. This lab demonstrates deploying containerized applications on Google Kubernetes Engine (GKE).

Candidates create clusters, configure node pools, and deploy applications using deployment and service manifests. They practice scaling applications, updating deployments, and monitoring cluster health. Advanced concepts such as load balancing, persistent storage integration, and network policies are also covered.

This lab equips candidates with the skills to design scalable, resilient, and maintainable containerized architectures, which is increasingly important for enterprise applications.

Monitoring, Logging, and Observability

Observability ensures that cloud applications are transparent and maintainable. This lab focuses on integrating Cloud Monitoring, Cloud Logging, and Cloud Trace into a unified observability solution.

Candidates deploy applications, generate traffic, and observe system metrics, logs, and traces. They configure alerts for thresholds, create dashboards for real-time monitoring, and analyze trace data to identify performance bottlenecks.

Through this lab, candidates develop skills to ensure that cloud solutions are observable, maintainable, and optimized for performance and reliability.

Implementing Security and Compliance Controls

Security is a fundamental aspect of cloud architecture. This lab teaches candidates to design secure architectures using IAM policies, service accounts, encryption, and audit logging.

Learners configure role-based access control, apply encryption at rest and in transit, and monitor logs for unauthorized activity. Compliance requirements such as data residency and access auditing are implemented to ensure enterprise-grade security.

By completing this lab, candidates understand how to build cloud solutions that are secure, compliant, and aligned with organizational policies.

Automating Infrastructure with Deployment Manager and Terraform

Infrastructure automation reduces operational complexity and ensures consistency. This lab demonstrates using Deployment Manager and Terraform to deploy complex architectures, including networks, virtual machines, storage, and databases.

Candidates create declarative configuration files, deploy multiple resources simultaneously, and test rollbacks. Advanced techniques include parameterization, modular design, and integration with CI/CD pipelines for continuous delivery.

Through this lab, candidates gain experience in automating cloud architectures, ensuring repeatability, scalability, and maintainability of enterprise solutions.

Disaster Recovery and Backup Strategies

Designing resilient systems requires planning for failure. This lab focuses on implementing disaster recovery strategies using multi-region deployments, backups, snapshots, and failover mechanisms.

Candidates configure automated snapshots for compute and storage resources, test backup restorations, and simulate regional failures to validate recovery procedures. Monitoring and alerting are used to ensure that recovery objectives are met.

This lab emphasizes designing architectures that are resilient to failures and ensure business continuity, which is a key requirement for professional cloud architects.
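Recovery objectives are usually expressed as a recovery point objective (RPO, how much data loss is tolerable) and a recovery time objective (RTO, how long recovery may take). The sketch below checks a backup plan against both under a simple assumption: a failure just before the next snapshot loses up to one full snapshot interval of data. The function name `meets_objectives` and the figures are illustrative.

```python
def meets_objectives(snapshot_interval_hours, restore_hours, rpo_hours, rto_hours):
    """Compare a snapshot schedule and a measured restore time with RPO and RTO.

    Worst-case data loss is taken to be one full snapshot interval.
    """
    return {
        "rpo_met": snapshot_interval_hours <= rpo_hours,
        "rto_met": restore_hours <= rto_hours,
    }


if __name__ == "__main__":
    # Daily snapshots and a 2-hour restore, against a 4-hour RPO and RTO.
    print(meets_objectives(24, 2, rpo_hours=4, rto_hours=4))
    # Hourly snapshots with the same restore time.
    print(meets_objectives(1, 2, rpo_hours=4, rto_hours=4))
```

The restore time fed into a check like this should come from an actual restoration test, not an estimate.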

Hybrid and Multi-Cloud Integration

Many enterprises adopt hybrid and multi-cloud strategies. This lab teaches candidates to integrate Google Cloud with on-premises systems and other cloud providers.

Learners set up VPN or Interconnect connections, configure routing, and test connectivity between environments. They explore data replication, application migration, and workload balancing across clouds.

By completing this lab, candidates understand the challenges and best practices for hybrid and multi-cloud architectures, preparing them to design flexible and interoperable solutions.

Optimizing Cost and Performance

Cloud architects must balance cost efficiency with performance. This lab focuses on resource monitoring, rightsizing compute instances, optimizing storage usage, and implementing autoscaling policies.

Candidates analyze billing reports, identify underutilized resources, and adjust instance configurations. They also implement caching, CDN, and load balancing to improve performance while reducing operational costs.

Through this lab, candidates develop skills in designing architectures that are not only technically robust but also economically efficient.

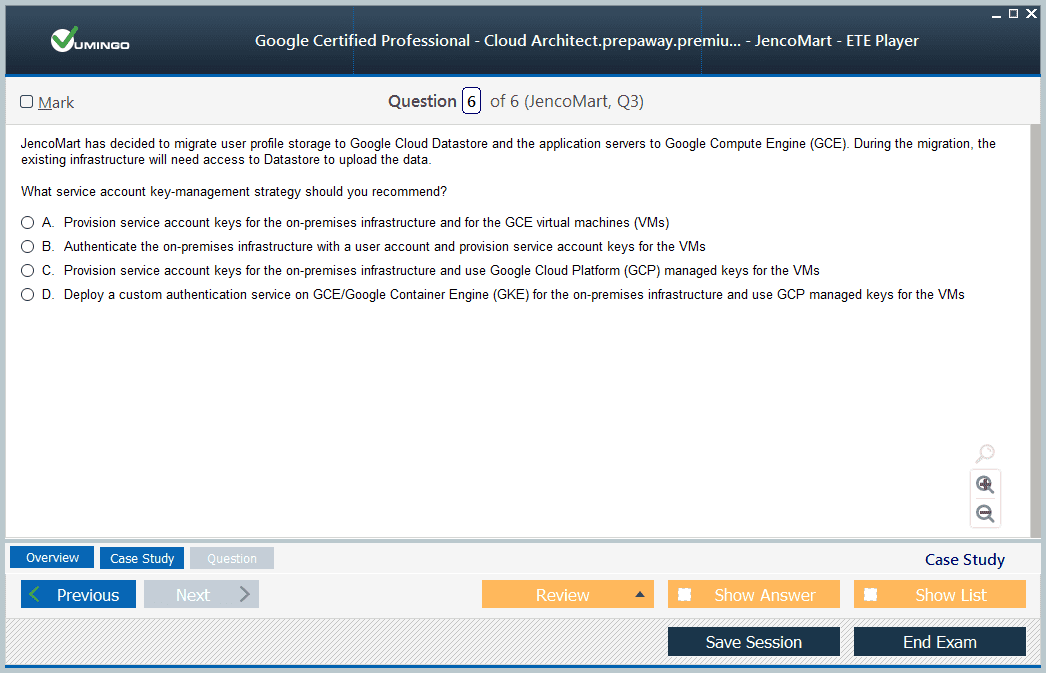

Advanced Identity and Access Management

Managing identities and access across complex cloud environments is critical for security. This lab demonstrates implementing hierarchical IAM policies, service accounts, and custom roles.

Candidates practice granting permissions at project, folder, and organization levels, configuring service account keys, and enforcing security best practices. This ensures that only authorized users or services have access to sensitive resources.

Understanding IAM principles is vital for building secure, compliant, and maintainable cloud architectures.
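Policy inheritance across the resource hierarchy can be modeled as a union of role grants. The sketch below assumes that roles granted at the organization or folder level are inherited by every resource beneath them, so the effective access on a project is the union of grants along its ancestry. The node names, member addresses, and the function `effective_roles` are hypothetical.

```python
def effective_roles(member, resource_path, policies):
    """Union of roles granted to `member` on a resource and all its ancestors.

    `resource_path` lists nodes from the organization down to the project.
    `policies` maps node -> {member: set_of_roles}.
    """
    roles = set()
    for node in resource_path:
        roles |= policies.get(node, {}).get(member, set())
    return roles


if __name__ == "__main__":
    policies = {
        "org": {"alice@example.com": {"roles/viewer"}},
        "folder-prod": {"alice@example.com": {"roles/compute.admin"}},
        "project-web": {"bob@example.com": {"roles/storage.objectViewer"}},
    }
    path = ["org", "folder-prod", "project-web"]
    print(sorted(effective_roles("alice@example.com", path, policies)))
    print(sorted(effective_roles("bob@example.com", path, policies)))
```

Because grants accumulate downward in this model, a broad role given at the organization level cannot be narrowed by a project-level grant, which is a strong argument for granting roles at the lowest level that works.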

Logging, Alerting, and Incident Management

This lab integrates logging, alerting, and incident response workflows. Candidates configure logs to capture application and system events, create alerting policies for anomalies, and simulate incident response procedures.

Learners explore using monitoring dashboards, alert notifications, and automated remediation to handle incidents efficiently. This prepares candidates to maintain operational excellence in enterprise cloud environments.

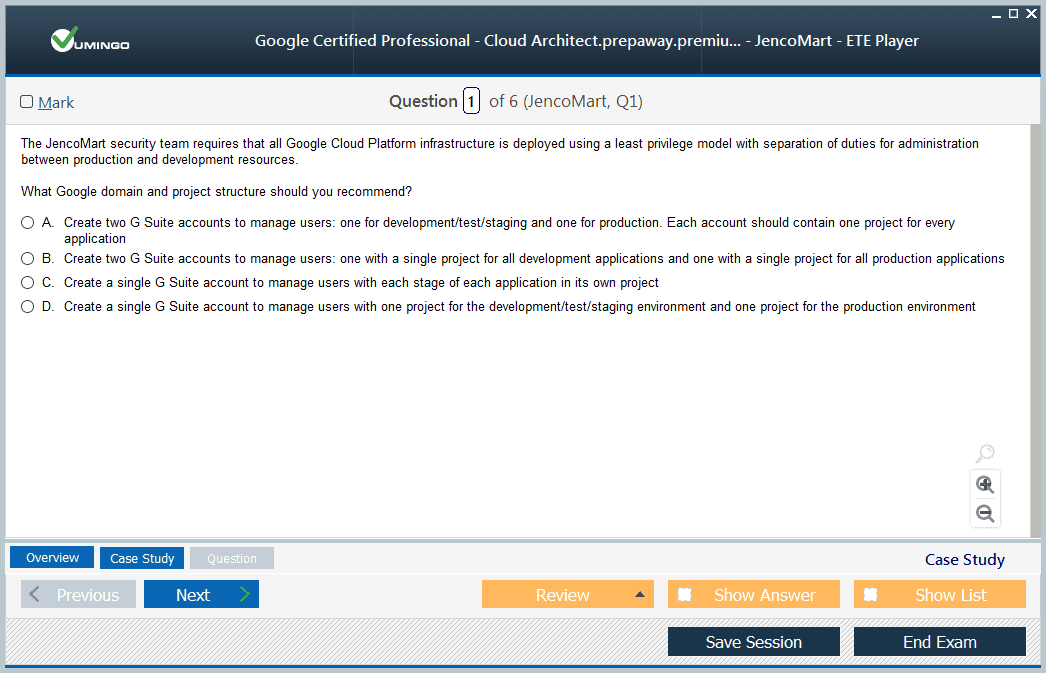

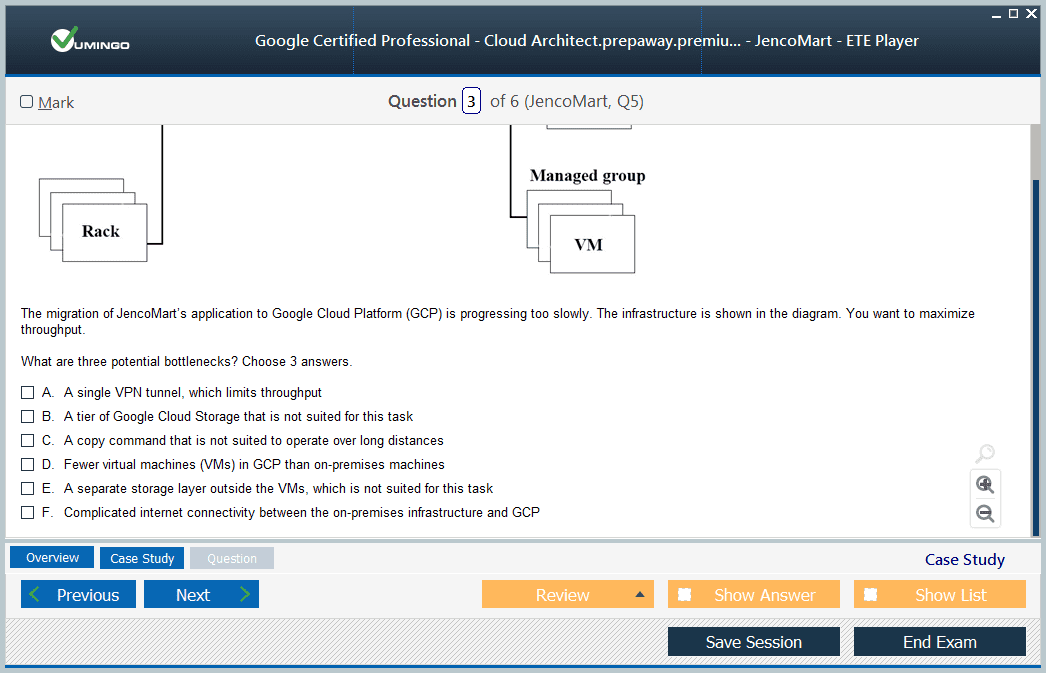

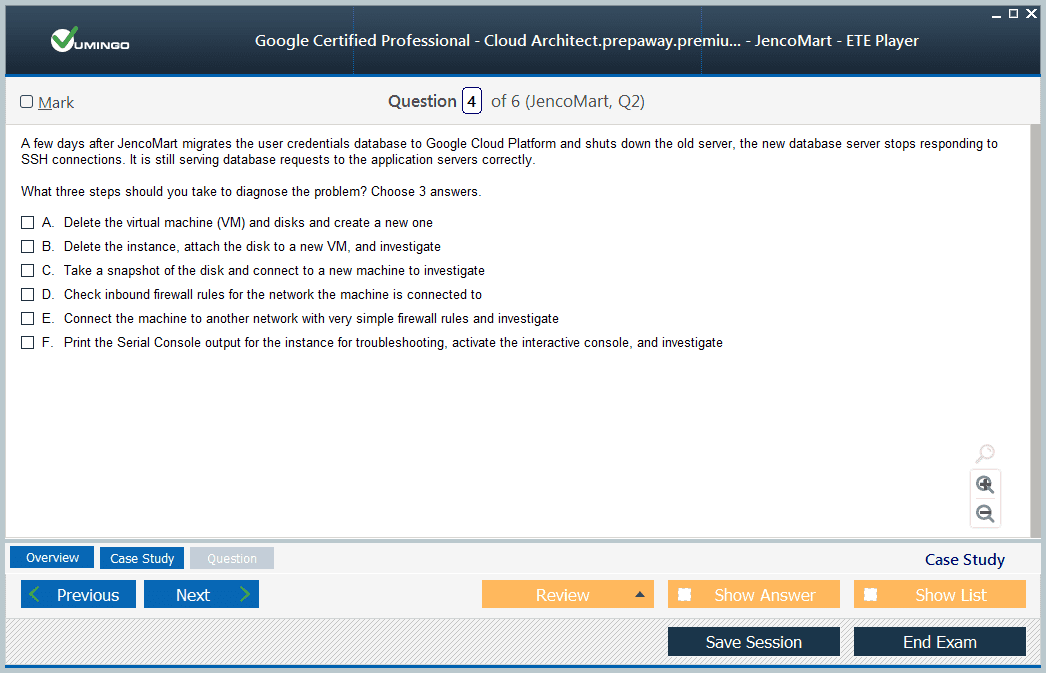

Understanding the Exam Format and Objectives

The exam tests candidates across multiple domains, including solution design, implementation, security, compliance, optimization, and operational reliability. Questions are primarily scenario-based, requiring candidates to analyze business requirements and design appropriate cloud solutions.

Exam simulation is crucial because it helps candidates become familiar with the types of problems presented, the level of detail required in solutions, and the decision-making process expected. Candidates must practice evaluating trade-offs, selecting services, configuring resources, and planning for scalability, reliability, and cost optimization.

Understanding exam objectives helps candidates focus their hands-on practice. For example, knowledge of network architecture, load balancing, autoscaling, monitoring, and disaster recovery is tested not as isolated skills but as integrated solutions.

Integrating Labs into a Study Plan

The hands-on labs covered in previous sections serve as the backbone of a comprehensive study plan. Candidates should organize labs according to exam domains, ensuring that each objective is covered practically.

A recommended approach begins with foundational labs to establish basic familiarity with compute, storage, networking, and database services. Once these are mastered, candidates progress to advanced labs involving automation, monitoring, security, and multi-region architectures. Each lab should be revisited multiple times to reinforce knowledge, test variations in configurations, and practice troubleshooting.

Hands-on labs should not be completed passively. Candidates should actively experiment by modifying parameters, introducing failures, and testing alternative approaches. This deepens understanding and prepares candidates for the dynamic and scenario-based nature of the exam.

Scenario-Based Learning

Scenario-based learning is an effective method to simulate real-world challenges. In this approach, candidates are presented with business cases that require designing end-to-end solutions using Google Cloud services.

For example, a scenario may involve deploying a global web application that requires high availability, low latency, and cost optimization. Candidates must choose appropriate compute services, configure load balancers, implement autoscaling, secure data with IAM policies, and monitor performance using logging and monitoring tools.

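Another scenario could involve designing a data processing pipeline to handle streaming and batch data. Candidates must choose between Dataflow, a managed service for Apache Beam pipelines that handles both streaming and batch, and Dataproc, a managed service for Spark and Hadoop clusters, then optimize workflows and integrate storage and database services.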

Practicing such scenarios ensures candidates can apply their knowledge holistically, analyze trade-offs, and make decisions aligned with both technical and business objectives.

Case Study: Designing a Scalable Web Application

A common case study in the exam involves deploying a scalable web application. Candidates must consider multiple aspects: compute resources, networking, security, monitoring, and disaster recovery.

The lab-based preparation allows candidates to experiment with different strategies. They may start by deploying instances on Compute Engine in a managed instance group, then configure autoscaling policies to handle variable traffic, and place an HTTP(S) load balancer in front, enabling session affinity where the application needs it. Cloud Storage can be used for static assets, with Cloud SQL for relational data or Bigtable for high-throughput key-value workloads.
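To reason about what an autoscaling policy will do under load, a simplified proportional rule is often enough. The sketch below is an assumption-laden approximation, not the actual Compute Engine autoscaler, which also applies stabilization and initialization periods. The function name `recommended_size` and its parameters are hypothetical.

```python
import math


def recommended_size(current_size: int, avg_cpu_pct: int, target_cpu_pct: int,
                     min_size: int, max_size: int) -> int:
    """Estimate how many instances keep average CPU near the target.

    Simplified proportional rule: scale the group by the ratio of observed
    utilization to target utilization, then clamp to the configured limits.
    """
    if not 0 < target_cpu_pct <= 100:
        raise ValueError("target_cpu_pct must be in (0, 100]")
    desired = math.ceil(current_size * avg_cpu_pct / target_cpu_pct)
    return max(min_size, min(max_size, desired))


# Four instances running at 90% CPU with a 60% target: scale out.
print(recommended_size(4, avg_cpu_pct=90, target_cpu_pct=60, min_size=2, max_size=10))
# Four instances running at 30% CPU: scale in, but never below min_size.
print(recommended_size(4, avg_cpu_pct=30, target_cpu_pct=60, min_size=3, max_size=10))
```

Comparing these estimates with what the autoscaler actually does in a lab is a useful way to see the effect of minimum and maximum limits.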

Monitoring dashboards and alerting policies are configured to track performance metrics, detect anomalies, and ensure uptime. Candidates also test disaster recovery by simulating failures and verifying that recovery processes work correctly.

This case study reinforces the importance of integrating multiple services, optimizing performance, and designing resilient architectures. By practicing such scenarios, candidates gain confidence in addressing similar questions in the exam.

Case Study: Data Pipeline Architecture

Another common scenario involves designing a data pipeline for analytics and reporting. Candidates must choose between batch processing and real-time streaming, select appropriate storage and database services, and implement monitoring and error-handling mechanisms.

In hands-on labs, candidates practice creating Dataflow pipelines that transform data and load it into BigQuery for analysis. They may also deploy Dataproc clusters for batch processing jobs. IAM policies restrict who can read and write the data, and Cloud Logging and Cloud Monitoring track pipeline performance.
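The difference between batch and streaming aggregation is easier to see with a small example. The sketch below uses plain Python rather than the Apache Beam SDK, so it only illustrates the concept of fixed (tumbling) windows; the function name `fixed_window_totals` and the event data are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def fixed_window_totals(events: Iterable[Tuple[int, int]],
                        size_s: int) -> Dict[int, int]:
    """Sum event values per fixed window of `size_s` seconds.

    Each event is (event_time_seconds, value). The window is chosen from the
    event time, so events that arrive out of order still land in the right one.
    """
    totals: Dict[int, int] = defaultdict(int)
    for ts, value in events:
        window_start = (ts // size_s) * size_s
        totals[window_start] += value
    return dict(sorted(totals.items()))


# Hypothetical events; the one at t=59 arrives after the one at t=61.
events = [(3, 10), (61, 5), (59, 1), (125, 7)]

# Batch view: one total over the whole bounded data set.
print(sum(value for _, value in events))
# Streaming view: one total per 60-second window.
print(fixed_window_totals(events, size_s=60))
```

A batch job can wait for all the data before producing its single result, while a streaming job has to decide when each window is complete. That decision is what watermarks and triggers address in a real pipeline.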

Through repeated practice, candidates develop the ability to optimize data processing pipelines, handle large-scale workloads, and ensure reliability. This lab-based experience is directly transferable to exam case studies and real-world projects.

Case Study: Hybrid Cloud Integration

Some scenarios require candidates to integrate Google Cloud with on-premises infrastructure or other cloud providers. Hands-on labs prepare candidates to configure Cloud VPN tunnels, Cloud Interconnect, routing rules, and multi-cloud resource management.

Candidates practice deploying workloads across multiple environments, configuring data replication, and implementing access control policies. They also monitor latency, bandwidth utilization, and operational performance.

This case study teaches candidates to handle hybrid architectures, understand networking complexities, and design solutions that balance flexibility, performance, and security. Such experience is valuable for the exam and enterprise roles.

Optimizing Cost and Performance

Cost management is an important component of cloud architecture. Hands-on labs allow candidates to experiment with resource utilization, autoscaling policies, and storage classes to optimize cost efficiency.

Candidates analyze billing data, identify underutilized resources, and test the impact of scaling decisions on both performance and cost. They practice implementing caching mechanisms, CDNs, and load balancing to enhance application responsiveness while controlling expenses.
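The storage-class trade-off can be explored with a small cost model. The prices below are made-up placeholders, not Google Cloud list prices, and the class names `hot`, `cool`, and `cold` are hypothetical stand-ins for the idea that colder classes charge less to store data but more to read it.

```python
from typing import Dict, Tuple

# (storage price per GB-month, retrieval price per GB read).
# PLACEHOLDER numbers for illustration only; check the current pricing page.
HYPOTHETICAL_CLASSES: Dict[str, Tuple[float, float]] = {
    "hot": (0.020, 0.00),
    "cool": (0.010, 0.01),
    "cold": (0.004, 0.05),
}


def monthly_costs(gb_stored: float, gb_read: float) -> Dict[str, float]:
    """Return the estimated monthly cost of each class for one workload."""
    return {
        name: gb_stored * storage_price + gb_read * retrieval_price
        for name, (storage_price, retrieval_price) in HYPOTHETICAL_CLASSES.items()
    }


def cheapest_class(gb_stored: float, gb_read: float) -> str:
    """Return the name of the class with the lowest estimated monthly cost."""
    costs = monthly_costs(gb_stored, gb_read)
    return min(costs, key=costs.get)


# The same 1000 GB is cheapest in a different class depending on read volume.
for gb_read in (0, 500, 2000):
    print(gb_read, cheapest_class(gb_stored=1000, gb_read=gb_read))
```

The point of the exercise is that the cheapest class depends on the access pattern, not only on the amount of data stored.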

This practical experience ensures candidates understand how to design architectures that are both high-performing and cost-effective, which is a key competency tested in the certification exam.

Security and Compliance Best Practices

Security is a recurring theme in the exam. Hands-on labs provide candidates with practical experience in implementing IAM policies, service accounts, encryption, audit logging, and firewall configurations.

Candidates practice creating hierarchical access controls, assigning roles at the organization, folder, and project levels, and enforcing encryption for data in transit and at rest. Audit logging and monitoring are configured to detect unauthorized access and support compliance.
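Allow policies in the resource hierarchy are inherited, so the access that applies to a project is the union of the bindings on the project and on every ancestor. The sketch below models that with plain dictionaries. The resource names, principals, and the `effective_bindings` function are hypothetical, and the model deliberately ignores deny policies and IAM Conditions.

```python
from typing import Dict, Optional, Set, Tuple

Binding = Tuple[str, str]  # (principal, role)

# Hypothetical hierarchy: project -> folder -> organization.
PARENT: Dict[str, Optional[str]] = {
    "project-web": "folder-prod",
    "folder-prod": "org-example",
    "org-example": None,
}

# Hypothetical role bindings set directly on each resource.
BINDINGS: Dict[str, Set[Binding]] = {
    "org-example": {("group:auditors@example.com", "roles/logging.viewer")},
    "folder-prod": {("group:sre@example.com", "roles/compute.admin")},
    "project-web": {("user:dev@example.com", "roles/storage.objectViewer")},
}


def effective_bindings(resource: str) -> Set[Binding]:
    """Union of the bindings on `resource` and on all of its ancestors."""
    result: Set[Binding] = set()
    node: Optional[str] = resource
    while node is not None:
        result |= BINDINGS.get(node, set())
        node = PARENT.get(node)
    return result


for principal, role in sorted(effective_bindings("project-web")):
    print(principal, role)
```

Because inheritance only adds access in this model, a role granted at the organization level cannot be taken away by a lower-level allow policy. This is one reason broad roles are usually granted as low in the hierarchy as practical.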

By integrating security considerations into every lab scenario, candidates learn to design architectures that are secure, compliant, and aligned with enterprise policies. This holistic approach prepares candidates for security-focused exam questions and real-world responsibilities.

Observability and Monitoring

Observability ensures that cloud solutions are maintainable, performant, and resilient. Hands-on labs provide practice with Cloud Monitoring, Cloud Logging, Cloud Trace, and custom dashboards.

Candidates simulate application traffic, monitor resource utilization, and create alerts for performance anomalies. They analyze trace data to identify bottlenecks and optimize resource configurations.
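One way to read trace data is to compute each span's self time, meaning its duration minus the time spent in its child spans, and look for the largest value. The sketch below works on a hypothetical list of spans rather than on data exported from Cloud Trace, and it assumes child spans run sequentially without overlapping.

```python
from collections import defaultdict
from typing import Dict, List

# Hypothetical spans for one request; durations are in milliseconds.
spans: List[dict] = [
    {"id": "a", "parent": None, "name": "GET /checkout", "ms": 480},
    {"id": "b", "parent": "a", "name": "auth", "ms": 40},
    {"id": "c", "parent": "a", "name": "db query", "ms": 350},
    {"id": "d", "parent": "c", "name": "lock wait", "ms": 30},
]


def self_times(spans: List[dict]) -> Dict[str, int]:
    """Return each span's duration minus the total duration of its children."""
    child_total: Dict[str, int] = defaultdict(int)
    for span in spans:
        if span["parent"] is not None:
            child_total[span["parent"]] += span["ms"]
    return {span["name"]: span["ms"] - child_total[span["id"]] for span in spans}


times = self_times(spans)
bottleneck = max(times, key=times.get)
print(times)
print(bottleneck)
```

Here the root span is the longest overall, but most of its time is spent in its children, so the span with the largest self time is the better place to start optimizing.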

Developing these skills allows candidates to design systems that are transparent, diagnosable, and efficient, which is essential for operational excellence in enterprise cloud environments.

Disaster Recovery and Business Continuity

Designing resilient systems requires planning for disaster recovery. Labs provide candidates with experience in multi-region deployment, backup strategies, snapshots, failover configurations, and recovery testing.

Candidates simulate failures, validate automated recovery, and confirm business continuity under various scenarios. This experience prepares candidates to design architectures that meet an organization's recovery time objective (RTO) and recovery point objective (RPO).
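Recovery testing is easier to interpret against explicit targets. The sketch below checks a plan against a recovery point objective (RPO, the maximum tolerable data loss) and a recovery time objective (RTO, the maximum tolerable downtime). The `RecoveryPlan` type, the `evaluate` function, and all numbers are hypothetical. It assumes the worst case, in which a failure happens just before the next backup would have run.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class RecoveryPlan:
    backup_interval_min: int  # time between backups or snapshots
    detection_min: int        # time to notice the failure
    restore_min: int          # time to restore service after detection


def evaluate(plan: RecoveryPlan, rpo_min: int, rto_min: int) -> Dict[str, object]:
    """Compare worst-case data loss and downtime with the stated objectives."""
    worst_case_loss = plan.backup_interval_min
    recovery_time = plan.detection_min + plan.restore_min
    return {
        "worst_case_loss_min": worst_case_loss,
        "recovery_time_min": recovery_time,
        "meets_rpo": worst_case_loss <= rpo_min,
        "meets_rto": recovery_time <= rto_min,
    }


# Hourly snapshots cannot meet a 15-minute RPO, even if recovery is fast.
hourly = RecoveryPlan(backup_interval_min=60, detection_min=5, restore_min=20)
print(evaluate(hourly, rpo_min=15, rto_min=30))
```

Measuring `detection_min` and `restore_min` during a simulated failure, rather than estimating them, is what turns a figure like this into evidence that the design meets its objectives.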

Integrating Automation into Cloud Solutions

Automation reduces operational complexity and enhances consistency. Hands-on labs allow candidates to implement infrastructure as code using Deployment Manager and Terraform.

Candidates define declarative configurations, deploy resources, and manage updates or rollbacks efficiently. Automation is applied to compute, storage, networking, and database resources, ensuring repeatable and maintainable cloud architectures.
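Declarative tools work by comparing the desired configuration with what currently exists and deriving the changes needed. The sketch below shows that comparison in plain Python. It is a conceptual illustration, not how Terraform or Deployment Manager is implemented, since real tools also track dependencies between resources. The `plan` function and the resource names are hypothetical.

```python
from typing import Dict, List


def plan(desired: Dict[str, dict], actual: Dict[str, dict]) -> Dict[str, List[str]]:
    """Work out which resources to create, update, or delete."""
    create = sorted(desired.keys() - actual.keys())
    delete = sorted(actual.keys() - desired.keys())
    update = sorted(
        name for name in desired.keys() & actual.keys()
        if desired[name] != actual[name]
    )
    return {"create": create, "update": update, "delete": delete}


# Hypothetical desired configuration and currently deployed state.
desired = {
    "vm-web": {"machine_type": "e2-medium"},
    "bucket-assets": {"location": "US"},
}
actual = {
    "vm-web": {"machine_type": "e2-small"},
    "vm-old": {"machine_type": "e2-small"},
}

print(plan(desired, actual))
```

Running the same plan a second time after the changes are applied yields no further actions, which is the property that makes declarative deployments repeatable.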

This practical expertise ensures candidates can design automated, scalable, and consistent cloud solutions, which is a critical skill for professional cloud architects.

Exam Simulation and Practice Strategy

Preparing for the certification exam requires both knowledge and strategy. Hands-on labs provide the technical foundation, but candidates should also practice scenario-based exam questions to develop decision-making skills.

Simulated exams help candidates manage time, understand question patterns, and practice evaluating trade-offs between competing solutions. Candidates should focus on understanding the reasoning behind correct answers, reviewing lab exercises to reinforce concepts, and identifying areas that require additional practice.

Regular practice ensures candidates build confidence, reduce exam anxiety, and strengthen problem-solving abilities. By integrating labs and exam simulations, candidates develop a well-rounded preparation strategy.

Continuous Learning and Skill Reinforcement

Cloud technologies evolve rapidly, and continuous learning is essential. Hands-on labs reinforce skills learned through theory, but candidates should also explore new services, features, and best practices.

Revisiting labs, experimenting with alternative configurations, and exploring advanced use cases ensure that knowledge remains current. Continuous learning also prepares candidates for practical challenges beyond the certification exam, ensuring long-term career growth as a cloud architect.

Consolidating Knowledge from All Labs

The final stage of preparation involves consolidating knowledge from foundational, advanced, and scenario-based labs. Candidates should review key concepts, cross-reference labs with exam objectives, and integrate lessons learned into a cohesive understanding of Google Cloud architecture.

This consolidation process involves mapping services to business requirements, understanding interdependencies, and practicing integrated solutions that cover compute, storage, networking, security, monitoring, and automation.

By synthesizing knowledge from all labs, candidates develop a holistic understanding of cloud architecture, which is essential for both the exam and real-world implementation.

Real-World Case Studies and Applications

To further reinforce learning, candidates should study real-world cloud deployment scenarios. Examples include designing an e-commerce platform with high availability, secure payments, and global reach, or building a data analytics platform with real-time insights and automated reporting.

These case studies illustrate the application of hands-on skills in complex environments. Candidates can simulate these scenarios in labs, testing configurations, monitoring performance, and implementing best practices. This practical exposure ensures candidates are ready to handle enterprise-level cloud architecture challenges.

Conclusion

Preparing for the Google Cloud Professional Cloud Architect certification requires more than theoretical knowledge. Hands-on labs, combined with scenario-based learning and strategic exam preparation, form the foundation of a successful approach. Foundational labs build core skills in compute, storage, networking, and databases, while advanced labs teach automation, monitoring, security, multi-region deployment, and data processing.

Scenario-based exercises and case studies allow candidates to integrate multiple services, optimize architectures, and address complex enterprise challenges. Practicing cost optimization, disaster recovery, security, and observability ensures that solutions are resilient, efficient, and compliant. Automation with tools like Deployment Manager and Terraform reinforces repeatable and maintainable cloud architectures.

By consolidating knowledge across labs, practicing exam simulations, and continuously exploring new features and services, candidates develop the confidence and expertise necessary to excel in both the certification exam and professional cloud architect roles. Hands-on labs are not just a preparation tool; they are a bridge to mastering real-world cloud architecture challenges and achieving operational excellence on Google Cloud.

