Pass Microsoft DP-201 Exam in First Attempt Guaranteed!

DP-201 Premium File

- Premium File 209 Questions & Answers. Last Update: Jan 31, 2026

What's Included:

- Latest Questions

- 100% Accurate Answers

- Fast Exam Updates

All Microsoft DP-201 certification exam dumps, study guides, and training courses are prepared by industry experts. PrepAway's ETE files provide the DP-201 Designing an Azure Data Solution practice test questions and answers, exam dumps, study guide, and training courses to help you study and pass hassle-free!

How to Prepare for DP-201: A Practical Guide to Azure Data Solution Design

The foundation of the DP-201 exam lies in understanding the responsibilities of an Azure data engineer. This professional is responsible for designing and implementing data solutions that can store, process, and analyze data efficiently within the Azure ecosystem. The work of a data engineer ensures that data pipelines run smoothly, data is stored securely, and information is readily available for analytical purposes. The DP-201 exam is designed to evaluate how well a candidate can translate business requirements into reliable data solutions that align with performance, scalability, and compliance needs. Candidates pursuing this path must have a strong understanding of data architecture, data modeling, and the ability to use various Azure tools and services to meet organizational goals.

The exam focuses on the candidate’s ability to design solutions rather than implement them. This means one must demonstrate skills in planning and structuring data systems that support business intelligence and advanced analytics. Azure offers several services that make up the ecosystem of a data solution, such as Azure SQL Database, Data Lake, Synapse Analytics, and Stream Analytics. A candidate must know when and how to apply each service in different scenarios. The core of the DP-201 exam revolves around making design choices that ensure high availability, disaster recovery, and performance efficiency in large-scale data systems.

Key Focus Areas in DP-201 Exam

The DP-201 exam primarily measures a candidate’s skills across three major domains that define a complete Azure data solution: designing data storage, designing data processing, and designing for security and compliance. Each domain carries its own share of the overall exam, and together they form the core of a data engineer’s responsibilities.

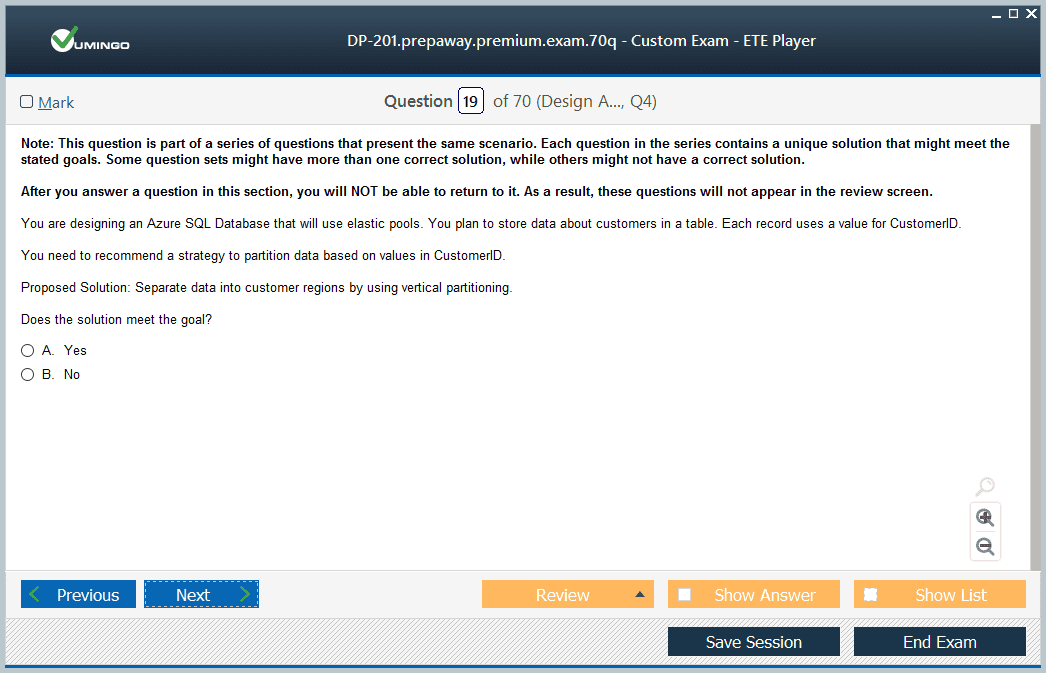

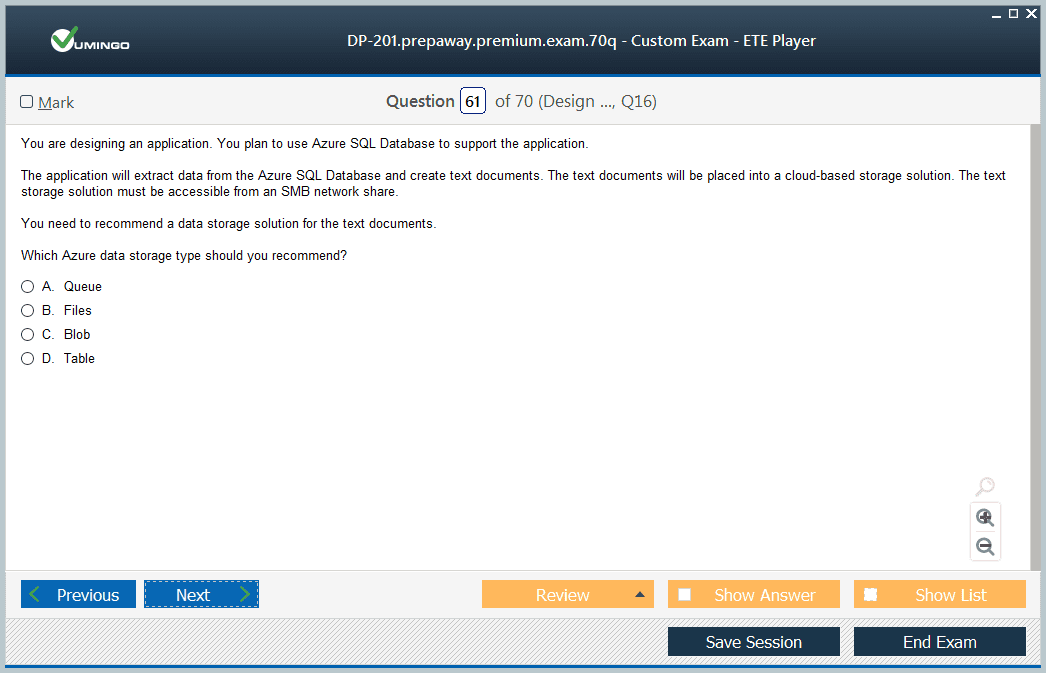

Designing data storage solutions is one of the most important areas. It involves choosing appropriate storage systems based on factors like data volume, access frequency, and processing requirements. Candidates need to understand the differences between structured and unstructured data, how relational and non-relational databases operate, and when to use services such as Azure SQL, Cosmos DB, or Data Lake. This domain also covers planning for data partitioning and indexing, and optimizing performance while keeping storage costs manageable.

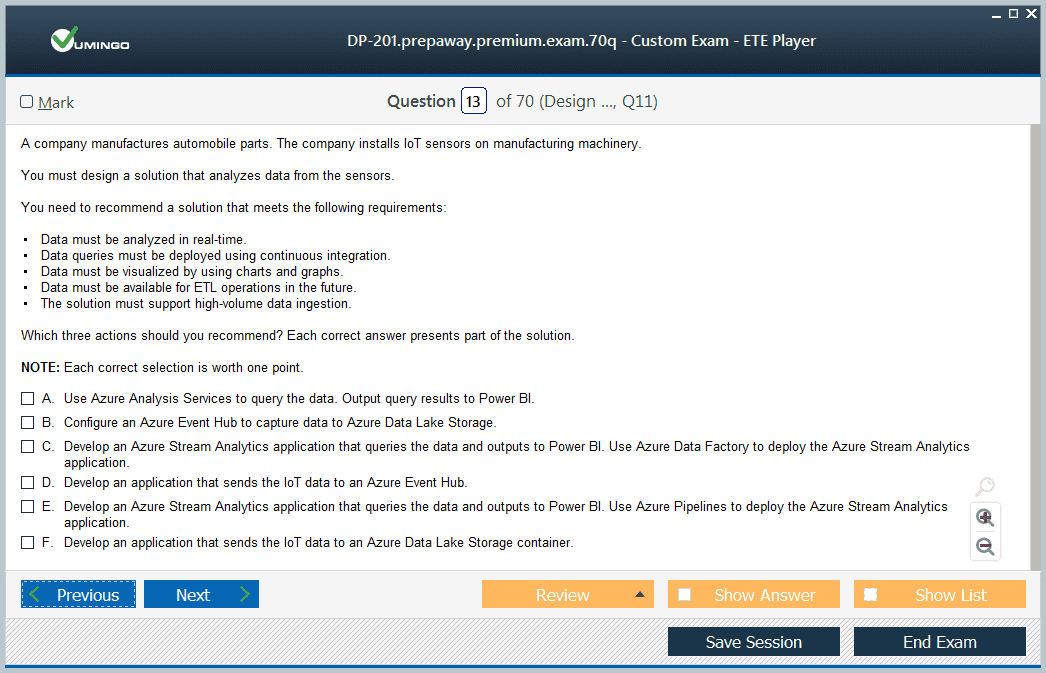

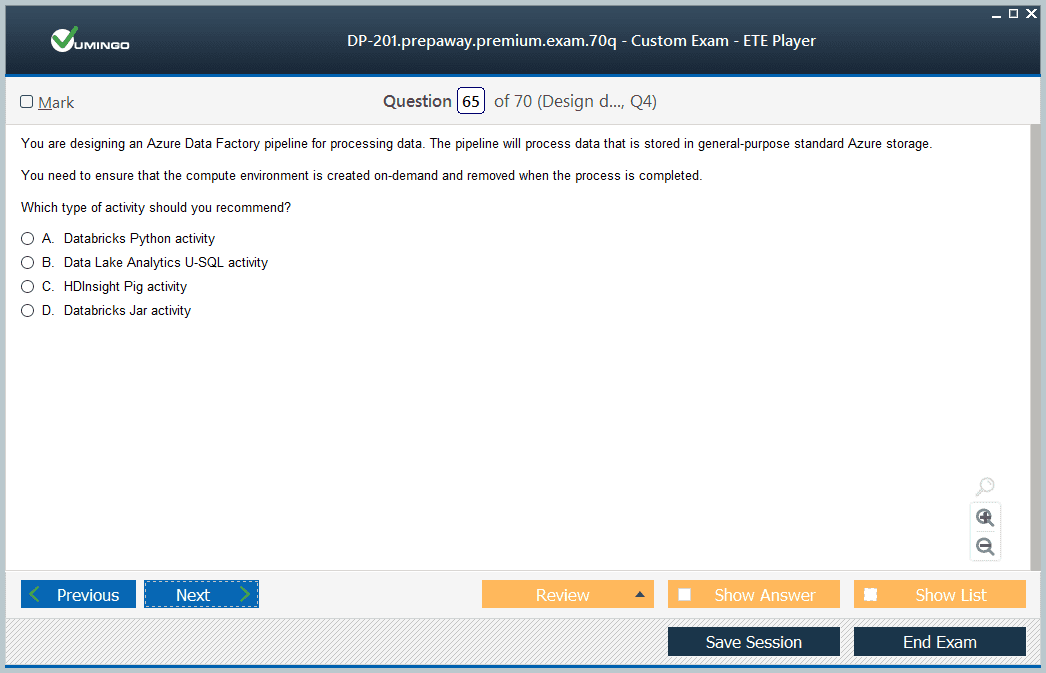

The second area, designing data processing solutions, evaluates how a candidate can create systems that transform raw data into usable insights. This involves batch and real-time processing designs using Azure services like Data Factory, Databricks, and Stream Analytics. Understanding how to orchestrate data workflows, manage dependencies, and ensure fault tolerance in data pipelines is crucial. Candidates should also be familiar with designing systems that can scale dynamically to handle large data volumes efficiently.

The third area centers on designing for data security and compliance. Every data engineer must ensure that sensitive information is protected and that data solutions comply with governance standards. This requires knowledge of Azure’s identity and access management, encryption methods, and policies for protecting data at rest and in transit. Designing access control mechanisms, managing data policies, and ensuring compliance with privacy regulations are all part of this domain.

Importance of the DP-201 Exam

The DP-201 exam holds great value for anyone looking to specialize in Azure-based data engineering. It validates an individual’s ability to design systems that can handle complex data challenges in modern cloud environments. With the increasing shift of enterprises toward cloud platforms, professionals with proven expertise in designing Azure data solutions are in high demand. Passing this exam demonstrates that a candidate can take theoretical business requirements and turn them into technical blueprints for data-driven operations.

In today’s data-driven landscape, organizations depend heavily on the seamless flow of information between systems. A certified professional in this domain helps businesses establish a robust foundation for their data strategy. The exam is not only about understanding Azure tools but also about mastering architectural principles such as scalability, resiliency, and cost optimization. These concepts are essential for creating systems that can evolve with changing business demands.

Another significant advantage of pursuing this exam is the recognition it brings to one’s professional capabilities. It represents proficiency in cloud data architecture and design, both of which are essential for advanced career paths in data management. The preparation process itself helps candidates develop a deeper understanding of how data ecosystems function within Azure and how various services interconnect to form complete solutions.

Skills and Knowledge Areas Required

To excel in the DP-201 exam, candidates need to possess a blend of technical knowledge and analytical thinking. Understanding the structure of data storage systems is fundamental. One must be able to choose between relational and non-relational databases depending on the type of data and the access patterns required by business operations. Familiarity with data integration concepts such as extract, transform, and load (ETL) processes is essential for designing systems that maintain data consistency across different sources.

Another key skill area involves designing scalable data solutions. As data continues to grow exponentially, engineers must ensure that their systems can scale seamlessly without performance degradation. This requires knowledge of distributed architectures, caching mechanisms, and workload balancing strategies. Candidates should also be familiar with designing for disaster recovery, backup strategies, and ensuring business continuity through multi-region deployments.

Candidates must also demonstrate a strong command of data security and governance. Understanding Azure’s encryption mechanisms, key management systems, and access controls is essential. Additionally, one must be able to design data solutions that align with privacy standards and ensure that sensitive information remains protected at all times.

Analytical and problem-solving skills also play a critical role in this certification. The ability to analyze a business problem and translate it into a well-structured technical design is what sets apart a proficient data engineer. This means understanding not only how data is stored or processed but also how it contributes to decision-making and organizational goals.

Effective Preparation Strategy

Preparing for the DP-201 exam requires a structured and focused approach. The first step is to understand the official exam objectives. These objectives outline what topics and skills the exam will assess. Reviewing them helps candidates identify knowledge gaps and allocate study time efficiently. Building a study plan around these domains ensures that no critical topic is overlooked.

Practical experience plays an equally important role. Candidates should spend time working with Azure services to gain hands-on exposure to how data systems function in real scenarios. Setting up sample projects, creating data pipelines, and experimenting with various storage and processing solutions will solidify theoretical knowledge. Real-world practice not only boosts confidence but also enhances problem-solving ability during the exam.

Reading technical documentation and reference materials related to Azure data services is another essential step. These materials help candidates understand how to use each service effectively, along with its limitations and best practices. Building a solid understanding of Azure’s architecture and data integration capabilities will make it easier to design comprehensive solutions.

Mock tests and practice exercises are also beneficial in preparation. They help candidates familiarize themselves with the exam format and the style of questions that may appear. Practice exams can also help identify weak areas that need additional focus. A consistent routine of studying, revising, and practicing will gradually improve speed and accuracy in answering questions.

Real-World Application of DP-201 Knowledge

The concepts covered in the DP-201 exam are not just theoretical. They have direct applications in real-world data projects. Professionals who have mastered these concepts can design end-to-end data systems that handle everything from data ingestion to analytics. These skills are valuable for roles that involve building data lakes, integrating streaming data, or creating reporting systems for business intelligence.

An engineer with expertise in Azure data design can help organizations make informed decisions based on accurate and timely data. This includes building architectures that combine structured and unstructured data, enabling organizations to leverage insights from multiple sources. By ensuring that data solutions are scalable and cost-efficient, engineers contribute to both operational efficiency and strategic growth.

Security and governance are equally important in real-world applications. Engineers must ensure that data systems comply with regulations and organizational policies. The knowledge gained through DP-201 preparation enables professionals to design systems with strong access control, encryption, and auditing mechanisms. These practices safeguard sensitive information and ensure that the data environment remains compliant and resilient.

Building a Career after DP-201

Achieving success in the DP-201 exam can open doors to advanced roles in data engineering and cloud architecture. The certification validates that a candidate has the technical depth required to handle complex data challenges within the Azure ecosystem. Professionals with this qualification are often involved in designing enterprise-level data platforms that support advanced analytics, artificial intelligence, and business intelligence initiatives.

Many organizations rely on certified professionals to lead digital transformation initiatives that center on cloud data management. The role of a data engineer extends beyond technical implementation; it involves aligning technology with business goals and ensuring that data serves as a strategic asset. Having this certification provides a competitive advantage in the job market and sets the foundation for continuous career growth.

A certified data engineer often collaborates with data scientists, analysts, and architects to develop cohesive data strategies. This collaboration ensures that data solutions are optimized for both performance and analytical insights. The DP-201 certification builds the foundation for such collaborative and cross-functional roles, enabling professionals to become key contributors to an organization’s data-driven culture.

The DP-201 exam represents a significant milestone for professionals seeking to establish expertise in designing data solutions on Azure. It focuses on assessing practical skills that are critical for modern data environments, from designing storage and processing systems to ensuring security and compliance. The exam encourages candidates to think strategically about data architecture, emphasizing scalability, reliability, and performance optimization.

Preparing for this exam requires a disciplined approach that combines theoretical understanding with practical experience. Through dedicated study and real-world practice, candidates can develop a comprehensive understanding of Azure’s data ecosystem and how to design effective solutions. Success in this exam not only validates technical knowledge but also demonstrates the ability to apply that knowledge in designing systems that support business goals.

The DP-201 exam serves as a gateway to advanced roles in cloud data engineering and offers professionals the opportunity to make a meaningful impact through data-driven innovation. By mastering its concepts and developing a strong foundation in Azure data design, individuals can contribute significantly to the evolution of intelligent and efficient data systems in the digital age.

Deep Dive into the DP-201 Exam Objectives

The DP-201 exam centers around evaluating how well candidates can design and plan Azure data solutions that meet the needs of modern organizations. This assessment focuses on a candidate’s ability to translate business requirements into scalable, secure, and efficient data architectures using Azure services. Each area of the exam corresponds to the essential stages of data design: storage, processing, and protection. Understanding these areas in depth allows candidates to visualize how each component contributes to a complete data solution.

Designing data storage solutions is often considered the foundation of the exam. It involves more than just knowing which storage service to select; it requires an understanding of data modeling, data access patterns, and system performance. Candidates are expected to choose storage systems that balance cost, scalability, and latency requirements. For example, selecting between Azure SQL Database for transactional workloads and Azure Cosmos DB for distributed, non-relational data involves careful consideration of data structure, query patterns, and response time expectations.
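The reasoning described above can be sketched as a small rule set. This is only an illustration, not official selection guidance: the `Workload` fields, the `suggest_store` function, and the order of the rules are all assumptions made for this example, and a real design would also weigh cost, latency targets, and consistency requirements.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    """Hypothetical description of a workload; field names are illustrative."""
    structured: bool            # data fits a fixed relational schema
    needs_transactions: bool    # multi-row transactional guarantees required
    globally_distributed: bool  # readers and writers span several regions
    raw_files: bool             # data is kept as files in its native format


def suggest_store(w: Workload) -> str:
    """Return a candidate storage service for the workload (simplified heuristic)."""
    if w.raw_files:
        # Raw, native-format data that is processed later points to a data lake.
        return "Azure Data Lake Storage"
    if w.structured and w.needs_transactions:
        # Structured, transactional workloads point to a relational store.
        return "Azure SQL Database"
    if w.globally_distributed or not w.structured:
        # Distributed or non-relational data points to Cosmos DB.
        return "Azure Cosmos DB"
    # Structured data with no other strong signal defaults to relational.
    return "Azure SQL Database"


orders = Workload(structured=True, needs_transactions=True,
                  globally_distributed=False, raw_files=False)
print(suggest_store(orders))
```

The value of writing the rules down is less the code itself than the habit it encodes: each design choice is traced back to a stated property of the workload.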

Designing data processing solutions forms the next key objective. This section assesses how well a candidate can design workflows for transforming, integrating, and analyzing data. The challenge lies in creating solutions that can handle both batch and real-time data efficiently. Candidates must demonstrate the ability to design pipelines that are reliable, fault-tolerant, and capable of scaling automatically under varying loads. Understanding the use of data orchestration tools and integration services is vital, as they connect diverse systems and enable data movement across multiple environments.

The third area focuses on data security and compliance. In a world where data breaches and regulatory constraints are common, designing secure systems is a crucial responsibility. Candidates must ensure that every data layer, from storage to access, complies with governance and privacy standards. Designing for security involves implementing data encryption, managing user permissions, and setting up role-based access controls. The exam tests how well one can ensure that sensitive information remains protected without compromising accessibility or performance.

Understanding the Architecture Behind Azure Data Solutions

The Azure platform provides a comprehensive suite of services for building and managing data systems. Candidates preparing for the DP-201 exam must understand how these services interact and how to use them in the context of architectural design. Azure data architecture typically revolves around ingestion, storage, processing, and visualization. Each stage requires careful selection of services to meet specific organizational requirements.

In the data ingestion phase, engineers must decide how data will enter the system. This could involve streaming data from IoT devices, importing data from on-premises sources, or integrating APIs. Choosing between real-time streaming and batch ingestion depends on the nature of the business use case. Once data is ingested, it moves to the storage layer, where it must be stored in a format suitable for analysis. Understanding structured versus unstructured data and how to manage them effectively on Azure is an essential part of the design process.

The processing layer transforms raw data into structured, usable formats. Engineers must design solutions that process data in an efficient, scalable, and reliable way. Whether it’s cleaning, aggregating, or enriching data, processing plays a major role in determining the accuracy and speed of insights. This layer also determines how well a solution can handle large datasets and maintain performance under heavy workloads.

The final stage involves delivering insights to business users. Though visualization tools are not a major focus of the DP-201 exam, understanding how processed data is consumed helps engineers design better systems. They must ensure that data is organized, accessible, and structured in ways that enable efficient querying and reporting.

Best Practices for Designing Azure Data Solutions

When designing Azure data solutions, following best practices is essential for achieving reliable, cost-effective, and scalable systems. One key practice is to separate storage and compute resources. This approach improves flexibility and allows for independent scaling of storage and processing power, which optimizes cost and performance.

Another important practice is designing for fault tolerance and disaster recovery. Data engineers must anticipate potential failures and build redundancy into their systems. Techniques such as data replication, geo-redundant storage, and automated backup strategies ensure continuity in case of outages or data corruption. This level of design foresight demonstrates an engineer’s ability to create resilient systems that support uninterrupted business operations.

Monitoring and optimization are also central to best design practices. Engineers should design systems that include performance monitoring tools and automated alerts. This helps detect bottlenecks, identify inefficiencies, and maintain consistent service quality. Optimizing system performance requires balancing factors like query efficiency, data partitioning, and network latency to deliver smooth and predictable results.

Security and compliance must be built into the architecture from the start. Instead of treating them as afterthoughts, engineers should consider them at every design stage. Implementing least-privilege access, securing endpoints, and encrypting data both in transit and at rest are standard practices that strengthen data protection. Governance policies should also be enforced through automation to maintain compliance consistently.

The Importance of Hands-On Practice

While theoretical knowledge forms the base for DP-201 exam preparation, practical experience brings real understanding. Working directly with Azure services enables candidates to visualize how concepts apply in real-world scenarios. Setting up test environments and experimenting with different architectures helps solidify core principles like scalability, data partitioning, and processing design.

Hands-on practice also builds problem-solving skills that are essential for success in both the exam and real projects. When faced with a design challenge, the ability to think critically and test multiple approaches becomes invaluable. Experimenting with storage services like Data Lake, SQL Database, or Cosmos DB reveals how performance and cost vary under different conditions. Similarly, working with integration tools helps candidates understand how to orchestrate and monitor complex data pipelines.

Testing security configurations in practice environments is equally important. Engineers should test different access controls, encryption methods, and auditing options to learn how to maintain compliance without restricting functionality. This experience helps in building intuition for balancing usability and protection, a skill that the exam and real projects both demand.

Strategic Study Approach

Preparing for the DP-201 exam effectively requires planning and persistence. The process begins with understanding the structure and objectives of the exam, followed by creating a realistic study schedule that covers all core domains. Each topic should be approached through a combination of conceptual reading and practical experimentation.

Breaking study sessions into smaller, focused segments can help maintain concentration. For example, dedicating specific sessions to data storage design, followed by sessions on processing design, allows for deeper exploration of each topic. Regularly revisiting previously studied material reinforces retention and ensures that no topic is overlooked.

Creating notes and diagrams can also be beneficial. Visualizing system architectures, data flows, and dependencies enhances understanding of how different components interact. It also helps candidates recall details quickly during the exam. Concept mapping of Azure services and their roles within the ecosystem further strengthens comprehension.

Self-assessment is another crucial aspect of preparation. After studying each domain, candidates should test their understanding by solving scenario-based problems. This helps in identifying weak areas that require more attention. Time management practice is also essential, as the exam requires answering a variety of questions within a limited time frame.

Common Challenges and How to Overcome Them

One of the biggest challenges candidates face while preparing for the DP-201 exam is understanding the interconnection between Azure services. The vast range of options can sometimes create confusion about which service fits best in a given scenario. Overcoming this challenge involves continuous practice and revisiting documentation to understand each service’s capabilities, limitations, and use cases.

Another challenge is managing time effectively during the exam. The variety of question types, including case studies and scenario-based items, can consume time if not approached strategically. The key is to read questions carefully, eliminate incorrect options, and rely on architectural reasoning rather than memorization.

Some candidates struggle with retaining technical details, especially configuration parameters and service limitations. To address this, consistent revision and practical implementation are essential. Working with the actual platform embeds knowledge more effectively than simply reading about it.

Finally, maintaining motivation over an extended study period can be difficult. Setting clear goals, tracking progress, and rewarding small achievements can help sustain momentum. Joining communities or study groups can also provide support, exchange of ideas, and exposure to different problem-solving approaches.

Real-World Relevance of the DP-201 Certification

The DP-201 certification is not just a theoretical achievement but a practical validation of an engineer’s ability to design robust data architectures. In professional environments, these skills are applied in diverse ways. For instance, organizations rely on data engineers to design architectures that integrate data from multiple sources, manage storage effectively, and support analytics that drive decision-making.

The certification proves that a professional understands how to create data ecosystems that balance performance, cost, and compliance. These capabilities are critical for managing data in hybrid and cloud-native environments. The ability to design flexible systems that adapt to evolving business needs gives certified professionals a strategic edge.

Professionals who have mastered these skills contribute significantly to digital transformation projects. They enable businesses to move from traditional, fragmented data systems to unified platforms that support automation, machine learning, and predictive analytics. Such transformations not only improve efficiency but also create opportunities for innovation.

Continuous Learning Beyond the Exam

The DP-201 exam serves as a gateway to deeper expertise in data architecture and engineering. However, the field of data technology evolves rapidly, and staying relevant requires continuous learning. Once certified, professionals should continue exploring new features in Azure data services, experiment with emerging design patterns, and stay updated on best practices for cloud data management.

Developing advanced skills in areas like big data processing, real-time analytics, and data governance can further strengthen a professional’s profile. Participating in projects that challenge one’s existing knowledge base helps reinforce learning and fosters innovation. Documenting and sharing these experiences also contributes to professional growth and community learning.

A commitment to lifelong learning ensures that professionals remain adaptable as technologies evolve. It also prepares them to take on leadership roles where they can design, implement, and oversee enterprise-level data solutions. The DP-201 certification marks the beginning of this journey, setting a strong foundation for continuous advancement in data engineering.

The DP-201 exam is a vital benchmark for professionals aiming to master the design of Azure data solutions. It encapsulates the essential aspects of modern data engineering—storage, processing, and security—and evaluates a candidate’s ability to bring these together into a cohesive architecture. Success in this exam requires not only theoretical understanding but also practical experience, critical thinking, and attention to detail.

Through disciplined preparation, consistent practice, and a focus on real-world application, candidates can develop the expertise needed to design data solutions that drive business innovation. The knowledge and experience gained from preparing for this exam extend far beyond certification, forming the foundation for a successful and rewarding career in data engineering.

Professionals who achieve proficiency in designing Azure data solutions through this exam become integral to their organizations’ ability to harness data for strategic advantage. Their work ensures that data systems are efficient, reliable, and ready to support the analytical and operational demands of the future. The DP-201 certification thus serves as both a milestone and a stepping stone toward excellence in the evolving world of cloud data engineering.

Comprehensive Understanding of DP-201 Exam and Its Core Focus

The DP-201 exam evaluates how effectively a candidate can design and structure data solutions that operate seamlessly within the Azure ecosystem. This exam focuses on the technical and architectural aspects of building data systems that support analytics, reporting, and operational efficiency. It is designed for individuals who already have an understanding of data management principles and wish to apply them within the Azure environment. The exam bridges the gap between theoretical data concepts and practical application by testing one’s ability to create optimized, secure, and scalable data architectures.

The purpose of the exam is to ensure that professionals can make intelligent design choices that balance performance, cost, and security. Data engineers are expected to interpret business needs and translate them into data solutions that deliver measurable results. This requires not only familiarity with Azure data services but also an ability to understand system interdependencies, design patterns, and implementation strategies. Candidates preparing for this exam should be able to envision the entire data lifecycle, from ingestion to analysis, while maintaining governance and compliance throughout.

Understanding how different Azure components fit together is key to success in this exam. Each question in the DP-201 exam challenges the candidate to think critically about data system design, weighing trade-offs between technologies. Candidates are expected to demonstrate how to architect systems that can scale with business growth while remaining resilient and efficient.

Designing Data Storage and Management Strategies

A significant part of the DP-201 exam focuses on designing efficient storage solutions. Data engineers must understand the various storage options Azure provides and how to select the right one based on workload requirements. These include relational databases, non-relational databases, and data lakes, each serving distinct use cases. A well-designed storage architecture should support both high availability and disaster recovery while optimizing for performance and cost.

Relational databases on Azure, such as SQL-based solutions, are often used for structured data and transactional workloads. Candidates need to understand when to use partitioning, indexing, and replication to enhance query performance. Non-relational storage, like document or key-value stores, is better suited for unstructured or semi-structured data. These systems are often used in scenarios involving large-scale web applications or real-time data ingestion.

Data lakes provide flexible storage for vast amounts of raw data, enabling organizations to store data in its native format until it is needed for processing. Designing data lakes involves considering factors like access control, schema evolution, and data lifecycle management. The DP-201 exam tests understanding of how to combine these storage types into a cohesive architecture where data can move efficiently across systems.

An equally critical aspect of storage design is managing performance and scalability. Engineers must consider how data volume, access frequency, and query complexity affect performance. The ability to design systems that can automatically adjust resources based on demand is a valuable skill. Efficient data partitioning, caching, and indexing strategies can significantly improve performance and reduce operational costs.
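To make the idea of partitioning concrete, here is a minimal sketch of hash-based partitioning in plain Python. It does not reproduce how any particular Azure service assigns partitions; the function names `partition_for` and `distribute`, the key format, and the partition count are assumptions chosen for illustration.

```python
import hashlib
from collections import defaultdict


def partition_for(key: str, partition_count: int) -> int:
    """Map a key to a partition index using a stable hash of the key."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    # Use the first 8 bytes as an integer, then wrap it into the partition range.
    return int.from_bytes(digest[:8], "big") % partition_count


def distribute(keys, partition_count):
    """Group keys by the partition they hash to."""
    buckets = defaultdict(list)
    for key in keys:
        buckets[partition_for(key, partition_count)].append(key)
    return dict(buckets)


# Hypothetical customer IDs used as partition keys.
customer_ids = [f"customer-{n}" for n in range(1000)]
layout = distribute(customer_ids, partition_count=8)
for index in sorted(layout):
    print(index, len(layout[index]))
```

A key with many distinct values, such as a customer ID, tends to spread rows evenly across partitions, while a key with only a few distinct values would concentrate load on a few partitions.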

Building Data Processing Architectures

Data processing is the next key area tested in the DP-201 exam. Designing efficient data pipelines involves more than just moving data from one point to another. It requires planning how data will be cleaned, transformed, and enriched for analytics or reporting. Candidates must be able to design batch and real-time processing systems that meet the organization’s operational and analytical needs.

Batch processing is commonly used for large datasets that are processed at scheduled intervals. This method is ideal for workloads where real-time processing is unnecessary but accuracy and completeness are critical. Real-time processing, on the other hand, allows data to be ingested and analyzed as it arrives, enabling immediate decision-making. Engineers must know how to design these pipelines in a way that ensures reliability, fault tolerance, and maintainability.
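The difference between the two styles can be shown with a small sketch. The batch function computes one result over the complete dataset, while the windowed function groups events into fixed, non-overlapping time windows as it reads them. The event layout (timestamp in seconds, value) and both function names are assumptions for illustration, and the sketch ignores late or out-of-order events, which a real streaming design has to handle.

```python
from collections import Counter


def batch_total(events):
    """Batch style: one result computed over the complete dataset."""
    return sum(value for _, value in events)


def tumbling_window_totals(events, window_seconds):
    """Streaming style: totals per fixed, non-overlapping time window."""
    totals = Counter()
    for timestamp, value in events:
        # Round the timestamp down to the start of its window.
        window_start = (timestamp // window_seconds) * window_seconds
        totals[window_start] += value
    return dict(totals)


# (timestamp in seconds, value) pairs; sample data invented for the example.
events = [(0, 5), (3, 1), (10, 2), (14, 4), (21, 7)]
print(batch_total(events))                 # 19
print(tumbling_window_totals(events, 10))  # {0: 6, 10: 6, 20: 7}
```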

The exam also measures the candidate’s ability to choose appropriate processing frameworks. Engineers must understand how to orchestrate workflows, manage dependencies, and ensure consistent data quality across pipelines. Designing processing systems that minimize latency, maximize throughput, and recover from faults is an essential part of this domain.
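One common way to design for fault recovery is to retry a failed pipeline step with an increasing delay between attempts. The sketch below assumes a hypothetical `run_with_retry` helper and a step passed in as a plain callable; orchestration services offer their own retry settings, which this code does not represent.

```python
import time


def run_with_retry(step, max_attempts=3, base_delay=0.1, sleep=time.sleep):
    """Run a pipeline step, retrying with exponential backoff on failure."""
    for attempt in range(1, max_attempts + 1):
        try:
            return step()
        except Exception:
            if attempt == max_attempts:
                # Out of attempts: surface the failure to the caller.
                raise
            # Wait base_delay, then 2x, then 4x, and so on between attempts.
            sleep(base_delay * 2 ** (attempt - 1))


calls = {"count": 0}


def flaky_copy():
    """Hypothetical step that fails twice before succeeding."""
    calls["count"] += 1
    if calls["count"] < 3:
        raise RuntimeError("transient failure")
    return "copied"


print(run_with_retry(flaky_copy))
```

Retries only help with transient faults, so a step that is retried should be safe to run more than once without duplicating data.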

Another consideration in processing design is integration with existing systems. Data rarely resides in a single source, so data engineers must design pipelines capable of extracting and transforming data from multiple origins while maintaining consistency. Integration patterns, data flow optimization, and schema mapping are important design factors tested in the exam.

Ensuring Data Security and Compliance

Security design is integral to the DP-201 exam and forms the foundation of a reliable data solution. Engineers must ensure that data remains protected throughout its lifecycle—from ingestion and storage to processing and access. Designing secure systems involves more than implementing encryption; it requires a comprehensive approach to identity management, access control, and data governance.

Access control mechanisms define who can view or modify data within the system. Candidates must understand how to design role-based access controls and implement identity management solutions that align with organizational policies. Effective security design ensures that users only access the data they are authorized to view while maintaining transparency for auditing purposes.

Data encryption is another critical topic. Engineers must design encryption strategies that protect data both in transit and at rest. Implementing these measures protects data confidentiality and reduces the risk of unauthorized access. The DP-201 exam assesses how well candidates can design systems that maintain security without sacrificing performance or usability.

Compliance with regulations is also an essential part of data security. Engineers need to design solutions that meet governance and privacy standards. This involves defining data retention policies, maintaining audit logs, and ensuring that data is processed and stored according to compliance requirements. A well-designed system incorporates these considerations from the start, rather than treating them as afterthoughts.

Strategic Preparation for the DP-201 Exam

Effective preparation for the DP-201 exam requires a clear strategy that combines theoretical understanding with practical application. Candidates should start by thoroughly reviewing the exam objectives and identifying the key areas where they need to strengthen their knowledge. Creating a structured study plan helps ensure that all domains are covered systematically.

One effective approach is to begin with conceptual learning. This involves understanding the principles of data design, the functions of Azure services, and the relationship between them. Once the conceptual foundation is established, candidates should move on to practical application by building sample projects and experimenting with various Azure services. Hands-on experience helps reinforce learning and enhances problem-solving abilities.

Time management during preparation is crucial. Candidates should allocate time for revising previously learned topics, practicing design scenarios, and evaluating their progress. Using visual aids such as diagrams and architecture blueprints can help in understanding complex systems and recalling them during the exam.

It is also beneficial to approach preparation as a problem-solving exercise. Instead of memorizing service features, candidates should focus on understanding how to apply them in real-world situations. This mindset aligns with the design-oriented nature of the exam and enhances critical thinking skills.

Understanding the Role of a Data Engineer

To excel in the DP-201 exam, candidates must understand the broader role of a data engineer. The role extends beyond implementing systems; it involves designing solutions that align technology with business objectives. A data engineer ensures that the organization’s data ecosystem is efficient, scalable, and secure.

A data engineer’s work begins with analyzing business requirements and translating them into technical designs. This requires an understanding of both data architecture and business strategy. Engineers must choose technologies that meet performance goals while staying within budget and operational constraints.

Another responsibility is maintaining data quality. Poor data quality can lead to inaccurate insights and flawed decision-making. Therefore, engineers design systems that validate, clean, and standardize data automatically. They also ensure that data lineage is traceable, which supports transparency and auditing.
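
As a minimal sketch of automated validation and standardization, the function below rejects incomplete rows and normalizes the rest. The record layout (`customer_id`, `email`, `amount`) and the rules are assumptions made for illustration, not anything prescribed by the exam.

```python
from typing import Optional


def clean_record(record: dict) -> Optional[dict]:
    """Return a standardized copy of the record, or None if it fails validation."""
    customer_id = str(record.get("customer_id", "")).strip()
    email = str(record.get("email", "")).strip().lower()
    if not customer_id or "@" not in email:
        return None  # reject rows missing a key or a plausible email
    try:
        amount = round(float(record.get("amount", "")), 2)
    except (TypeError, ValueError):
        return None  # reject non-numeric amounts
    return {"customer_id": customer_id, "email": email, "amount": amount}


# Hypothetical input rows: one valid, one missing an id, one with a bad amount.
raw_rows = [
    {"customer_id": " 42 ", "email": "Ana@Example.com ", "amount": "19.999"},
    {"customer_id": "", "email": "no-id@example.com", "amount": "5"},
    {"customer_id": "7", "email": "bob@example.com", "amount": "n/a"},
]
clean_rows = [row for row in (clean_record(r) for r in raw_rows) if row is not None]
```

Only the first row survives, with its whitespace trimmed, email lowercased, and amount rounded. In a real pipeline the rejected rows would usually be routed to a quarantine area rather than silently dropped.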

In addition to building systems, data engineers collaborate closely with data analysts, architects, and developers. They create frameworks that allow seamless integration between data sources and analytical tools. This collaboration ensures that data flows smoothly across departments and is readily available for decision-making.

Challenges in Designing Azure Data Solutions

Designing data solutions in Azure presents unique challenges. One of the most common challenges is choosing the right combination of services for a specific scenario. Azure offers multiple options for storage, processing, and analytics, and selecting the most suitable one requires a deep understanding of their strengths and limitations.

Scalability and performance optimization are other significant challenges. As data volumes grow, maintaining fast query performance and low latency becomes complex. Engineers must design systems that automatically scale resources based on workload while minimizing cost. This requires understanding resource allocation, data partitioning, and caching mechanisms.

Security challenges are also prominent. Balancing accessibility and protection requires careful planning. Engineers must design systems that allow users to access the data they need while preventing unauthorized access. This often involves implementing fine-grained access controls, encryption, and auditing mechanisms.

Another challenge lies in maintaining system reliability. Designing for fault tolerance ensures that systems can continue operating even when individual components fail. This requires planning for redundancy, replication, and automated recovery. Engineers must test and validate these mechanisms to ensure they work under real-world conditions.
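
One common building block for automated recovery is retrying a transient failure with exponential backoff. The sketch below assumes the failure surfaces as a `ConnectionError`. The `flaky_read` function is a hypothetical stand-in for a real data source.

```python
import time


def run_with_retry(operation, max_attempts=3, base_delay=0.01):
    """Call operation(); on failure wait base_delay * 2**attempt and try again."""
    for attempt in range(max_attempts):
        try:
            return operation()
        except ConnectionError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the failure to the caller
            time.sleep(base_delay * 2 ** attempt)


calls = {"count": 0}


def flaky_read():
    """Hypothetical operation that fails twice before succeeding."""
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("transient failure")
    return "ok"


result = run_with_retry(flaky_read)
```

Testing the mechanism with a deliberately failing operation, as done here, is a small-scale version of validating recovery under realistic conditions.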

Real-World Application of the DP-201 Exam Knowledge

The skills and concepts tested in the DP-201 exam directly translate to practical scenarios in professional environments. Data engineers use these skills to design end-to-end data systems that enable business intelligence and analytics. From structuring databases to setting up pipelines and ensuring compliance, the principles learned during preparation serve as a foundation for real-world success.

In real projects, engineers apply these concepts to design architectures that can handle diverse data sources and dynamic workloads. They ensure that data is always available for analysis, regardless of its source or format. These designs support decision-making by enabling real-time access to accurate and reliable data.

Professionals who master these concepts also play a vital role in an organization’s digital transformation. They help businesses leverage cloud capabilities to improve data agility, enhance analytics, and support innovation. Their designs enable predictive analytics, machine learning, and advanced reporting capabilities, empowering organizations to make informed decisions.

The DP-201 exam represents a comprehensive evaluation of an individual’s ability to design Azure data solutions. Success in this exam requires not only technical expertise but also strategic thinking and practical problem-solving skills. Candidates who prepare thoroughly gain a deep understanding of how to design storage, processing, and security frameworks that align with business goals.

The certification associated with this exam validates a professional’s ability to create data architectures that are secure, scalable, and efficient. It prepares individuals to take on complex data engineering challenges and contribute meaningfully to their organizations. By mastering the principles and practices evaluated in the DP-201 exam, candidates position themselves for continued growth in the evolving field of cloud data engineering.

Achieving proficiency in designing Azure data solutions signifies a strong grasp of modern data management practices. It demonstrates the ability to turn data into a strategic asset and lays the groundwork for future expertise in advanced data technologies. The DP-201 exam thus serves as both a milestone of achievement and a gateway to greater professional development in the realm of data engineering.

In-Depth Approach to Designing Azure Data Solutions

The DP-201 exam centers on the candidate’s ability to design efficient, scalable, and secure Azure data solutions. This assessment focuses on transforming business requirements into a structured data design that supports analytical and operational workloads. A successful candidate must demonstrate proficiency in identifying the most appropriate architecture patterns, data processing systems, and storage solutions that align with performance, security, and cost considerations. Understanding how different Azure services integrate and complement each other is fundamental to creating effective data solutions.

Designing Azure data solutions requires a holistic understanding of data flow within an enterprise environment. It begins with identifying data sources and continues through ingestion, transformation, storage, and presentation. Every decision within this design process affects scalability, reliability, and maintainability. Candidates must understand how to balance cost efficiency with high performance, ensuring that data remains secure and available when needed. The DP-201 exam measures the ability to design architectures that not only work efficiently in theory but also perform effectively under practical conditions.

Designing for Scalable and Resilient Data Architectures

Scalability is one of the most important principles in Azure data solution design. As data volumes grow, the system must handle increasing workloads without performance degradation. Candidates must understand how to design systems that scale both vertically and horizontally depending on the nature of the workload. Vertical scaling increases the capacity of a single resource, while horizontal scaling distributes workloads across multiple nodes. In Azure, many services provide automatic scaling options, which must be properly configured to ensure consistent performance during peak loads.
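
To make the idea of configured automatic scaling concrete, here is a simplified target-tracking rule for horizontal scaling. It is a sketch only: the 60 percent CPU target and the instance limits are assumed values, and no Azure service is claimed to use exactly this formula.

```python
import math


def desired_instances(current, cpu_pct, target_pct=60, min_n=1, max_n=10):
    """Scale the node count so average CPU moves toward target_pct."""
    raw = math.ceil(current * cpu_pct / target_pct)
    # Clamp to configured limits so a spike cannot scale without bound.
    return max(min_n, min(max_n, raw))
```

With four nodes at 90 percent CPU the rule asks for six nodes, and at 30 percent it asks for two. The upper and lower limits are what keep peak-load behavior and cost predictable.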

Resilience is another critical component of architecture design. Data systems must be able to recover from failures without data loss or service interruption. Designing for resilience involves using redundancy, replication, and failover mechanisms. Azure provides multiple options for ensuring high availability, such as geo-replication and load balancing. Candidates must know when and how to implement these features to achieve optimal reliability. The exam expects engineers to anticipate potential points of failure and design systems that minimize downtime.

Monitoring and observability are equally important when designing scalable architectures. Engineers must implement logging, metrics, and alerts to track system health. These monitoring mechanisms allow teams to identify issues before they affect performance. Proper monitoring design also helps in cost management, as it enables the identification of underutilized resources that can be adjusted or decommissioned.

Designing Data Integration and Movement Solutions

Data integration is central to the DP-201 exam, as modern organizations often store data across multiple systems and formats. Designing integration solutions requires understanding how to extract, transform, and load data from various sources into centralized storage or analytics systems. Azure provides multiple tools for this purpose, and the ability to choose the right one based on use case and performance requirements is essential.

Batch data movement is suitable for scenarios where large volumes of data are processed periodically. It emphasizes efficiency and reliability over immediacy. On the other hand, real-time data integration supports use cases where immediate insights are required. Designing these systems requires balancing latency, throughput, and consistency. Engineers must ensure that data arrives in the correct format and order, maintaining integrity across the pipeline.

Data transformation is also a vital aspect of integration design. Engineers must decide where transformations should occur: during ingestion, in transit, or after storage. The choice depends on the complexity of transformations, the need for historical accuracy, and the performance implications. Designing efficient data flows minimizes unnecessary processing and ensures that downstream systems receive clean, consistent data.

Another important consideration is data lineage and traceability. Modern data systems require the ability to track the origin and transformation history of data elements. This capability is crucial for auditing, troubleshooting, and maintaining trust in analytics outputs. Designing pipelines with built-in lineage tracking helps ensure that every piece of data can be traced through its lifecycle.
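
A rough illustration of built-in lineage tracking is to carry the transformation history alongside the value itself. The `TrackedValue` class and the `orders.csv` source name below are hypothetical and exist only for this sketch.

```python
from dataclasses import dataclass, field


@dataclass
class TrackedValue:
    """A value plus the ordered list of steps that produced it."""
    value: object
    lineage: list = field(default_factory=list)

    def apply(self, step_name, func):
        # Return a new TrackedValue so earlier history is never mutated.
        return TrackedValue(func(self.value), self.lineage + [step_name])


source = TrackedValue(" 1,250 ", ["source:orders.csv"])
parsed = (
    source
    .apply("strip", str.strip)
    .apply("to_int", lambda s: int(s.replace(",", "")))
)
```

After the two steps, `parsed.lineage` lists the source followed by each transformation in order. That ordered history is the information an auditor or a troubleshooting engineer needs.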

Designing for Performance Optimization

Performance optimization plays a key role in Azure data solution design. A well-designed system ensures that queries and operations execute efficiently, even under heavy workloads. The DP-201 exam assesses understanding of various strategies to improve performance without unnecessary cost increases. Candidates must know how to optimize queries, design effective indexing strategies, and structure data for quick retrieval.

Storage performance can be enhanced through proper data partitioning. Partitioning involves dividing large datasets into smaller, manageable segments, enabling parallel processing and faster query execution. Choosing the right partition key is critical, as poor selection can lead to performance bottlenecks or uneven data distribution.
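
The effect of partition key choice can be sketched with simple hash partitioning. The data is invented for illustration: 1,000 hypothetical events are keyed once by a low-cardinality field (country) and once by a high-cardinality field (order id).

```python
import hashlib
from collections import Counter


def partition_for(key: str, partition_count: int) -> int:
    """Map a key to a partition using a stable hash (not Python's salted hash())."""
    digest = hashlib.sha256(key.encode("utf-8")).hexdigest()
    return int(digest, 16) % partition_count


def distribution(keys, partition_count):
    """Count how many rows land in each partition."""
    counts = Counter(partition_for(k, partition_count) for k in keys)
    return [counts.get(p, 0) for p in range(partition_count)]


by_country = ["US"] * 900 + ["DE"] * 100            # low-cardinality key
by_order_id = [f"order-{i}" for i in range(1000)]   # high-cardinality key

skewed = distribution(by_country, 4)
balanced = distribution(by_order_id, 4)
```

The country key sends at least 900 rows to a single partition, because every row with the same key hashes to the same place. The order id key spreads rows much more evenly. This is the kind of hot partition the paragraph above warns about.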

Caching is another effective optimization technique. By storing frequently accessed data in memory, caching reduces the need to repeatedly query slower storage layers. Engineers must design caching strategies that balance speed and freshness of data. Improper caching configurations can lead to stale or inconsistent data, so understanding the trade-offs is important.
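
The speed-versus-freshness trade-off can be sketched as a small time-to-live cache. This is an illustrative in-process class, not the API of any Azure caching service. The 60-second TTL and the injected fake clock are assumptions chosen to make the behavior easy to follow.

```python
import time


class TTLCache:
    """Cache that serves an entry only until its time-to-live expires."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self.store = {}  # key -> (value, expiry time)

    def get(self, key, loader):
        entry = self.store.get(key)
        now = self.clock()
        if entry is not None and entry[1] > now:
            return entry[0]  # fresh hit: skip the slow lookup
        value = loader(key)  # miss or stale entry: reload from the source
        self.store[key] = (value, now + self.ttl)
        return value


fake_now = [0.0]
loads = []


def slow_lookup(key):
    """Hypothetical slow storage read; records each call."""
    loads.append(key)
    return f"value-for-{key}"


cache = TTLCache(ttl_seconds=60, clock=lambda: fake_now[0])
cache.get("a", slow_lookup)   # miss: loads from the source
cache.get("a", slow_lookup)   # hit: served from memory
fake_now[0] = 61.0
cache.get("a", slow_lookup)   # expired: reloads
```

A longer TTL means fewer reloads but a wider window in which stale data can be served. A shorter TTL reverses that balance.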

Network performance also influences data architecture efficiency. Data engineers must minimize latency by designing systems that process data close to its source whenever possible. This concept, known as data locality, reduces data transfer costs and improves responsiveness. Optimizing data transfer and synchronization between distributed systems ensures seamless performance in global architectures.

Designing Security and Compliance for Data Solutions

Security design is a core part of the DP-201 exam. Every data solution must ensure that data is protected at all stages—at rest, in transit, and during processing. Designing a secure architecture involves implementing multiple layers of protection, identity management, and access control mechanisms. Azure offers a wide range of tools for securing data, and candidates must demonstrate the ability to choose the appropriate security measures for each scenario.

Access control begins with identity management. Engineers must design systems that restrict access based on roles and responsibilities. Role-based access control ensures that only authorized users or applications can view or manipulate data. Proper access control design helps prevent unauthorized activities and maintains compliance with organizational security policies.
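
In its simplest form, role-based access control reduces to a mapping from roles to permitted actions plus a deny-by-default check. The role names and permission sets below are illustrative assumptions and do not reproduce Azure's built-in role definitions.

```python
# Hypothetical role-to-permission mapping for illustration.
ROLE_PERMISSIONS = {
    "reader": {"read"},
    "contributor": {"read", "write"},
    "owner": {"read", "write", "delete", "grant"},
}


def is_allowed(user_roles, action):
    """Allow the action if any of the user's roles grants it; deny by default."""
    return any(action in ROLE_PERMISSIONS.get(role, set()) for role in user_roles)
```

A user holding only `reader` can read but not write, and an unrecognized role grants nothing. Assigning permissions to roles rather than to individuals is what keeps the design auditable.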

Encryption is another essential security mechanism. Engineers must design encryption strategies for data at rest and in transit, ensuring that sensitive information remains protected even if intercepted or accessed without permission. Choosing the correct encryption algorithms and managing encryption keys securely are important aspects of this design area.

Data governance and compliance are integral to maintaining trust and accountability in data systems. Engineers must ensure that data is collected, stored, and processed in alignment with applicable regulations and internal policies. Designing systems with built-in auditing, data classification, and retention policies helps maintain compliance and simplifies future audits.

Designing Analytical and Business Intelligence Solutions

The DP-201 exam also evaluates the candidate’s ability to design solutions that support analytics and reporting. Analytical systems transform raw data into insights that drive business decisions. Designing such systems requires understanding data modeling, visualization, and the flow of data from ingestion to presentation.

Data modeling involves structuring data in a way that supports efficient querying and reporting. Engineers must choose among star, snowflake, and fully normalized schemas based on analytical requirements. The goal is to ensure that data can be accessed quickly and easily by business intelligence tools.
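
A star schema can be sketched with an in-memory SQLite database: one fact table surrounded by dimension tables, queried with one join per dimension. The table names, columns, and rows are invented for illustration. SQLite stands in for whichever analytical store a real design would use.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE dim_product (product_id INTEGER PRIMARY KEY, category TEXT);
CREATE TABLE dim_date (date_id INTEGER PRIMARY KEY, year INTEGER);
CREATE TABLE fact_sales (
    product_id INTEGER REFERENCES dim_product(product_id),
    date_id INTEGER REFERENCES dim_date(date_id),
    amount REAL
);
INSERT INTO dim_product VALUES (1, 'Bikes'), (2, 'Helmets');
INSERT INTO dim_date VALUES (20240101, 2024), (20250101, 2025);
INSERT INTO fact_sales VALUES
    (1, 20240101, 500.0), (2, 20240101, 40.0), (1, 20250101, 650.0);
""")

# A typical reporting query: one join per dimension, then aggregate the facts.
rows = conn.execute("""
    SELECT p.category, d.year, SUM(f.amount)
    FROM fact_sales f
    JOIN dim_product p ON p.product_id = f.product_id
    JOIN dim_date d ON d.date_id = f.date_id
    GROUP BY p.category, d.year
    ORDER BY p.category, d.year
""").fetchall()
```

A snowflake variant would split `dim_product` further, for example into a separate category table. That reduces redundancy but adds a join to the same query.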

Data visualization is another important consideration. Engineers must design systems that deliver clean, structured data to visualization platforms. Properly designed data models ensure that analysts and decision-makers can derive insights without additional transformations.

Performance optimization in analytical systems focuses on pre-aggregation, caching, and query tuning. Engineers must identify common queries and precompute results where possible to reduce processing time. Designing a well-structured data warehouse or analytical model minimizes redundancy and accelerates reporting capabilities.
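
Pre-aggregation can be illustrated by computing a summary once at load time so that reports read the small summary instead of rescanning detail rows. The sales rows here are hypothetical.

```python
from collections import defaultdict

# Hypothetical detail rows: (region, day, revenue).
sales = [
    ("east", "2025-01-01", 120.0),
    ("east", "2025-01-02", 80.0),
    ("west", "2025-01-01", 200.0),
]


def build_summary(rows):
    """Precompute revenue per region once, at load time."""
    totals = defaultdict(float)
    for region, _day, revenue in rows:
        totals[region] += revenue
    return dict(totals)


# Refreshed by the load job rather than recomputed per query.
revenue_by_region = build_summary(sales)
```

The trade-off is freshness: the summary is only as current as the last load, so the refresh schedule becomes part of the design.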

Designing Data Lifecycle and Governance Strategies

Managing data throughout its lifecycle is another area covered by the DP-201 exam. Data lifecycle design ensures that data remains valuable, relevant, and compliant from the moment it is created until it is retired. Engineers must design systems that automatically manage data aging, archival, and deletion according to business and compliance requirements.

Data classification is a foundational step in lifecycle management. By categorizing data based on sensitivity and importance, organizations can apply appropriate security and retention policies. Engineers must design architectures that support automated classification to reduce manual oversight.
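
A very simplified picture of automated classification is pattern matching over column values. The two regular expressions and the labels `confidential` and `general` are assumptions for illustration. Production classifiers rely on far richer rules.

```python
import re

# Illustrative patterns only.
PATTERNS = {
    "email": re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+"),
    "card_number": re.compile(r"\b\d{4}[- ]?\d{4}[- ]?\d{4}[- ]?\d{4}\b"),
}


def classify_column(values):
    """Label a column 'confidential' if any value matches a sensitive pattern."""
    for value in values:
        if any(p.search(str(value)) for p in PATTERNS.values()):
            return "confidential"
    return "general"
```

Once a column carries a label, security and retention policies can be attached to the label instead of being decided case by case.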

Archiving strategies ensure that historical data remains accessible when needed but does not consume expensive active storage. Designing cost-effective archival systems requires balancing retrieval speed and storage costs. Engineers must also plan for data deletion processes that ensure permanent removal of obsolete or redundant data.

Effective governance requires visibility into data ownership, access, and lineage. Engineers must design systems that provide clear accountability for data handling. This visibility supports auditability, security enforcement, and regulatory compliance.

Designing for Cost Optimization and Resource Efficiency

Designing cost-efficient solutions is a key responsibility for Azure data engineers. The DP-201 exam expects candidates to understand how to balance performance, scalability, and cost. Every design decision—from storage type to data movement method—affects the overall cost structure of a solution.

Choosing the appropriate storage tier is one of the most direct ways to manage cost. Frequently accessed data may require premium storage for high performance, while infrequently used data can be stored in lower-cost tiers. Engineers must analyze access patterns and design policies that automatically move data between tiers to optimize costs.
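
A tiering policy of this kind can be reduced to a rule on access recency. The tier names follow the hot, cool, and archive access tiers of Azure Blob Storage. The 30-day and 180-day thresholds are assumed values chosen only for the example.

```python
def choose_tier(days_since_last_access, cool_after=30, archive_after=180):
    """Pick a storage tier from access recency; thresholds are illustrative."""
    if days_since_last_access >= archive_after:
        return "archive"
    if days_since_last_access >= cool_after:
        return "cool"
    return "hot"
```

In practice the thresholds would come from analyzing real access patterns, since moving data to a colder tier lowers storage cost but raises retrieval cost and latency.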

Processing costs can also be controlled through automation and scheduling. Batch jobs can be designed to run during low-demand periods to reduce resource consumption. Engineers should design data pipelines that automatically scale down when idle, reducing unnecessary expenses.

Monitoring and cost analysis tools help track spending and identify inefficiencies. Designing systems with clear visibility into cost metrics ensures that optimization opportunities are identified early. Engineers who understand the cost implications of each design choice can deliver solutions that are both technically strong and financially sustainable.

Strategies for Exam Preparation and Success

Preparing for the DP-201 exam requires structured study and practical experience. Candidates should begin by reviewing all the domains covered in the exam blueprint and focusing on areas that test architectural design and decision-making. Understanding the theoretical aspects of Azure services is important, but hands-on practice solidifies knowledge and improves problem-solving skills.

Practical experience with Azure tools and services is essential. Building sample projects that involve real-world scenarios helps reinforce understanding. Candidates should design sample data pipelines, storage solutions, and analytical architectures to gain experience in identifying trade-offs between services.

Visual learning aids such as architectural diagrams and flowcharts can enhance comprehension. These visuals help candidates conceptualize data movement, dependencies, and integrations across services. Studying official documentation, whitepapers, and design patterns also contributes to deeper understanding.

Time management is important during preparation and the exam itself. Candidates must practice answering scenario-based questions efficiently while ensuring accuracy. Understanding the reasoning behind each design decision is key to success, as the exam evaluates conceptual clarity rather than memorization.

The DP-201 exam is a comprehensive test of one’s ability to design data solutions that are secure, scalable, and efficient in the Azure environment. It measures not only technical proficiency but also the capacity to think strategically about architecture and long-term system sustainability. Candidates who prepare effectively develop the skills to design data systems that deliver value across analytics, operations, and governance domains.

A well-prepared candidate understands how to balance performance, cost, and security while ensuring system reliability. Achieving success in this exam demonstrates readiness to take on advanced data engineering challenges and contribute meaningfully to enterprise data strategy. By mastering the principles of Azure data design, professionals position themselves for continued growth in the evolving field of cloud data engineering, where efficient and secure data solutions are key to driving innovation and informed decision-making.

Understanding the Core Focus of the DP-201 Exam

The DP-201 exam emphasizes a candidate’s ability to design end-to-end data solutions using Azure technologies. It assesses not only technical knowledge but also architectural thinking, ensuring that the professional can translate complex business requirements into functional and scalable Azure data systems. The exam focuses on the design phase of a data engineering project, where the candidate must choose appropriate data storage, processing, integration, and security mechanisms while balancing performance and cost. Understanding how Azure services interact and complement each other is central to this exam.

At its core, the DP-201 exam expects the candidate to demonstrate an understanding of designing data solutions that align with business goals. It involves knowledge of various architectural patterns, data management principles, and the practical implications of choosing one Azure service over another. Success in this exam requires the ability to assess trade-offs, design for reliability, and implement data governance policies that support compliance and operational efficiency.

Designing Data Storage Solutions for Azure

One of the most critical parts of the DP-201 exam is understanding how to design data storage solutions that meet organizational needs. Every organization deals with diverse data types such as structured, semi-structured, and unstructured data. The design must ensure that storage solutions support performance requirements, enable scalability, and remain cost-efficient. Engineers must decide between options such as relational databases, NoSQL systems, and object storage, depending on workload characteristics and data access patterns.

Designing an efficient storage solution also involves defining data partitioning strategies. Partitioning helps manage large datasets and improves performance by dividing them into manageable sections. Selecting the correct partition key and distribution model ensures even data distribution and minimizes processing overhead. Proper indexing and schema design further enhance performance by enabling faster query execution and reducing resource consumption.

Another aspect of storage design involves data durability and availability. Redundancy and replication strategies must be implemented to protect against data loss. Azure provides multiple replication models, such as locally redundant, zone-redundant, and geo-redundant storage, that keep data accessible even in the event of hardware or regional failures. The candidate must understand when to use these models and how to design for cost-effective resilience without compromising data integrity.

Data lifecycle management is also important in storage design. Engineers must plan for data retention, archival, and deletion based on business and compliance requirements. Designing storage solutions with built-in lifecycle policies ensures that data remains current and that obsolete or redundant information does not consume valuable resources.

Designing Data Processing Solutions

Designing data processing systems is another major focus of the DP-201 exam. A well-designed processing architecture transforms raw data into meaningful insights while maintaining efficiency and scalability. Candidates must demonstrate the ability to design batch and real-time processing systems depending on the business scenario.

Batch processing solutions handle large volumes of data at scheduled intervals. These systems are efficient for use cases where immediacy is not required but accuracy and reliability are critical. Engineers must design workflows that manage dependencies, handle errors gracefully, and optimize throughput.

Real-time processing systems, on the other hand, are designed for scenarios that require immediate insights. These systems process continuous streams of data and provide near-instantaneous responses. Designing real-time systems involves considerations around data ingestion rates, latency reduction, and fault tolerance. Candidates must understand how to design architectures that maintain data accuracy while handling high-velocity data streams.
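
A core pattern in stream processing is the tumbling window, which groups a continuous stream into fixed, non-overlapping time buckets. The sketch below uses plain Python and a made-up event list. It shows the concept rather than the syntax of any particular streaming engine.

```python
from collections import defaultdict


def tumbling_window_counts(events, window_seconds):
    """Count events per fixed, non-overlapping window keyed by window start time."""
    counts = defaultdict(int)
    for timestamp, _payload in events:
        window_start = (timestamp // window_seconds) * window_seconds
        counts[window_start] += 1
    return dict(sorted(counts.items()))


# Hypothetical stream: (epoch seconds, payload).
events = [(0, "a"), (4, "b"), (9, "c"), (10, "d"), (25, "e")]
windows = tumbling_window_counts(events, window_seconds=10)
```

Three events fall in the window starting at 0, and one each in the windows starting at 10 and 20. A real design must also decide how to treat late or out-of-order events, which this sketch ignores.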

Hybrid processing systems often combine batch and real-time methods, offering flexibility for modern analytics workloads. The exam expects candidates to design architectures capable of integrating both models efficiently. This includes designing message queues, event hubs, and scalable compute resources that work together seamlessly.

Designing for Security and Compliance

Security and compliance form a foundational element of every Azure data solution. The DP-201 exam evaluates how well a candidate can design systems that safeguard data at every stage—from ingestion to storage and processing. Candidates must understand the importance of encryption, identity management, and access control in securing enterprise data.

Encryption ensures that data remains protected both at rest and in transit. Designing encryption strategies involves selecting appropriate algorithms, managing keys securely, and applying encryption consistently across services. Access control mechanisms, such as role-based access management, restrict data access to authorized users and applications. Proper role design prevents unauthorized access and maintains accountability.

Compliance involves adhering to data privacy laws and organizational policies. Engineers must design systems that store and process data according to regulatory standards, ensuring traceability and audit readiness. Implementing data classification, retention policies, and logging mechanisms helps maintain compliance without affecting performance.

Designing for security also requires attention to network configuration. Engineers should minimize exposure by placing sensitive components within private networks and restricting public access wherever possible. Secure network design ensures that data flows through controlled pathways, reducing the risk of interception or misuse.

Designing for Performance and Optimization

Performance design is integral to creating efficient Azure data solutions. The DP-201 exam tests a candidate’s ability to build architectures that meet performance requirements while maintaining scalability and cost efficiency. Engineers must understand the factors affecting query performance, data retrieval speed, and overall system responsiveness.

Optimization begins with efficient data modeling. Designing schemas that align with query patterns ensures faster data access and minimizes unnecessary joins or computations. For analytical workloads, dimensional models help: a star schema keeps dimensions denormalized so that queries need fewer joins, while a snowflake schema normalizes the dimensions, trading some extra joins for less redundancy.

Partitioning and indexing play a key role in optimizing performance. Properly partitioned datasets allow for parallel processing, while indexing reduces the time required to locate data. Engineers must balance indexing strategy with write performance to avoid excessive overhead.

Caching is another effective way to enhance performance. Frequently accessed data can be stored temporarily in memory to reduce retrieval time. Designing caching strategies involves identifying data that benefits most from caching and setting appropriate refresh intervals to maintain data accuracy.

Network optimization is equally important. Designing systems that minimize data transfer between regions or services reduces latency and improves performance. Engineers should ensure that data is processed as close to its source as possible, a concept known as data locality.

Designing Data Integration and Orchestration

Integration and orchestration are critical elements of the DP-201 exam. Modern data architectures involve multiple systems that must communicate effectively. Designing integration solutions ensures that data moves seamlessly between systems without loss or corruption.

Batch data integration focuses on transferring large datasets at defined intervals, ensuring that data remains consistent across systems. Real-time integration designs, however, rely on streaming technologies to deliver data continuously. Engineers must decide which method fits the business requirement and ensure that the integration process maintains data fidelity.

Orchestration involves managing the execution of various data workflows. Engineers must design systems that control dependencies, handle errors, and maintain data consistency throughout the pipeline. Proper orchestration design ensures that each process executes in the correct sequence and that failures are automatically detected and mitigated.
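
Dependency-aware execution can be sketched with the standard library's `graphlib.TopologicalSorter` (Python 3.9 or later). The four task names and the stop-at-first-failure policy are assumptions for illustration. A production orchestrator would add retries, parallelism, and alerting.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
dependencies = {
    "extract_orders": set(),
    "extract_customers": set(),
    "join_datasets": {"extract_orders", "extract_customers"},
    "load_warehouse": {"join_datasets"},
}


def run_pipeline(deps, tasks):
    """Run tasks in dependency order; stop at the first failure."""
    completed = []
    for name in TopologicalSorter(deps).static_order():
        try:
            tasks[name]()
        except Exception as exc:
            return completed, f"{name} failed: {exc}"
        completed.append(name)
    return completed, None


# Placeholder callables stand in for real extract, join, and load steps.
tasks = {name: (lambda: None) for name in dependencies}
order, error = run_pipeline(dependencies, tasks)
```

Because the order is derived from declared dependencies rather than hard-coded, adding a task only requires stating what it depends on.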

Monitoring and logging are vital to integration design. Engineers must ensure that every data movement is traceable and auditable. Designing with observability in mind enables faster troubleshooting and helps maintain operational stability.

Designing for Analytics and Insights

Analytical capabilities are the ultimate goal of most data solutions, and the DP-201 exam emphasizes this aspect heavily. Engineers must design architectures that support business intelligence and analytics systems, ensuring that data can be transformed into actionable insights efficiently.

Designing analytical systems requires an understanding of data modeling for analytics. Data warehouses and analytical stores must be structured to support complex queries and aggregations without significant performance degradation. Engineers must determine the right data modeling approach based on query patterns and reporting needs.

Data preprocessing and transformation are also critical. Clean and standardized data improves the accuracy of analytical results. Engineers must design data pipelines that prepare data effectively for analysis while minimizing redundancy and latency.

Scalability is important for analytical solutions, as the volume of data used for analysis grows over time. Designing scalable analytical architectures ensures that the system can handle increasing workloads without compromising performance or cost efficiency.

Visualization and reporting depend on well-designed data models. Engineers must ensure that data is readily available to reporting tools in a format that supports self-service analytics. This allows decision-makers to access insights quickly and independently.

Designing for Monitoring and Maintenance

An often-overlooked aspect of data solution design is monitoring and maintenance. The DP-201 exam evaluates how well a candidate can design systems that are maintainable, observable, and self-healing. Proper monitoring ensures that potential issues are detected early and resolved before they affect performance or availability.

Designing monitoring systems involves setting up metrics, alerts, and dashboards that provide visibility into system health. Engineers must choose the right parameters to monitor, such as latency, throughput, error rates, and resource utilization. Designing alerting mechanisms that distinguish between critical and non-critical events helps prevent alert fatigue.
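To make the distinction between critical and non-critical events concrete, here is a small sketch of threshold-based classification. The metric names and threshold values are hypothetical. In a real design they would come from service-level objectives and would typically be configured in a monitoring service, not hard-coded.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threshold:
    warning: float
    critical: float


# Hypothetical thresholds, chosen only for illustration.
THRESHOLDS = {
    "latency_ms": Threshold(warning=500, critical=2000),
    "error_rate": Threshold(warning=0.01, critical=0.05),
    "cpu_utilization": Threshold(warning=0.75, critical=0.95),
}


def classify(metric, value):
    """Return "ok", "warning", or "critical" for a single metric sample."""
    threshold = THRESHOLDS[metric]
    if value >= threshold.critical:
        return "critical"
    if value >= threshold.warning:
        return "warning"
    return "ok"


def alerts_to_page(samples):
    """Return only the metrics severe enough to notify someone immediately."""
    return [metric for metric, value in samples.items()
            if classify(metric, value) == "critical"]
```

Routing only critical events to on-call staff, while warnings go to a dashboard or a daily summary, is one simple way to reduce alert fatigue.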

Automation plays an important role in system maintenance. Engineers should design processes that automatically adjust resources, restart failed components, and perform regular updates without manual intervention. Automation not only reduces downtime but also ensures consistent performance.

Maintenance design also includes planning for scalability and future upgrades. Engineers must ensure that the architecture can evolve with business needs without requiring major redesigns. Designing modular components and loosely coupled systems facilitates easier updates and integration of new technologies.

Designing for Cost Efficiency and Resource Management

Cost efficiency is a fundamental principle of cloud architecture design. The DP-201 exam assesses how effectively a candidate can design solutions that optimize costs while maintaining performance and reliability. Engineers must understand how resource utilization, data storage, and compute configurations influence cost structures.

Designing for cost optimization involves selecting the right service tiers and configurations. Engineers must analyze workload patterns to determine whether to use reserved, on-demand, or serverless resources: steady, predictable workloads tend to favor reserved capacity, while intermittent or spiky workloads tend to favor on-demand or serverless options. Autoscaling can adjust resources to match demand so that the system does not pay for idle capacity.
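The choice between reserved and on-demand capacity often comes down to simple break-even arithmetic. The sketch below assumes a simplified pricing model (a flat hourly on-demand rate and a fixed monthly reserved fee); the rates used in any call are hypothetical, not actual Azure prices.

```python
def break_even_hours(on_demand_hourly, reserved_monthly):
    """Hours per month at which on-demand and reserved cost the same."""
    return reserved_monthly / on_demand_hourly


def cheapest_option(hours_per_month, on_demand_hourly, reserved_monthly):
    """Return (option, monthly_cost) for the cheaper of the two pricing models."""
    on_demand_cost = hours_per_month * on_demand_hourly
    if on_demand_cost <= reserved_monthly:
        return "on-demand", on_demand_cost
    return "reserved", reserved_monthly
```

With a hypothetical rate of 0.50 per hour and a reserved fee of 200 per month, the break-even point is 400 hours. A workload running 100 hours a month is cheaper on demand, while one running 600 hours is cheaper reserved.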

Data storage costs can be optimized through tiered storage strategies. Frequently accessed data may reside in high-performance storage, while infrequently accessed data is moved to lower-cost archival tiers. Designing automated data tiering policies reduces manual effort and ensures continuous cost savings.
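An automated tiering policy can be as simple as a rule based on how long ago data was last accessed. The sketch below uses the tier names hot, cool, and archive, which match Azure Blob Storage access tiers. The day thresholds are assumptions for illustration, and a real deployment would normally express such a rule as a storage lifecycle policy, not as application code.

```python
from datetime import datetime, timedelta, timezone


def choose_tier(last_accessed, now, cool_after_days=30, archive_after_days=180):
    """Pick a storage tier from the time since the data was last accessed.

    The default thresholds (30 and 180 days) are hypothetical.
    """
    age = now - last_accessed
    if age >= timedelta(days=archive_after_days):
        return "archive"
    if age >= timedelta(days=cool_after_days):
        return "cool"
    return "hot"


# Example: data last read 45 days ago falls into the cool tier.
now = datetime(2024, 1, 1, tzinfo=timezone.utc)
example_tier = choose_tier(now - timedelta(days=45), now)
```

Lower-cost tiers usually trade cheaper storage for higher access cost and slower retrieval, so thresholds should reflect how often older data is actually read.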

Network costs can also accumulate in large-scale data solutions. Designing architectures that minimize data transfer between services and regions helps control these expenses. Engineers should prioritize co-locating services within the same region when possible to reduce latency and cost.

Monitoring resource consumption is essential for ongoing cost control. Engineers must design systems that track usage metrics, enabling continuous optimization. Visibility into cost patterns helps organizations identify inefficiencies and adjust configurations proactively.

Conclusion

The DP-201 exam is a comprehensive evaluation of an individual’s ability to design robust, secure, and efficient Azure data solutions. It measures the candidate’s understanding of data architecture principles, system scalability, and performance optimization within the Azure ecosystem. The exam challenges candidates to think beyond technical execution and consider how design decisions influence reliability, cost, and long-term sustainability.

Achieving success in this exam requires a balance of theoretical understanding and practical application. Candidates must be comfortable designing solutions that integrate multiple Azure services, ensuring that they work together to deliver value across data ingestion, processing, storage, and analytics. Mastery of these principles not only prepares candidates for the exam but also builds the foundation for a strong career in data engineering.

In essence, the DP-201 exam encourages professionals to approach data design as a strategic discipline. By mastering architectural decision-making, candidates develop the ability to design systems that empower organizations to harness data effectively, driving insight, innovation, and efficiency across all levels of operation.
