
DP-200 Explained: What You Gain and Whether It’s Worth Pursuing

The DP-200 exam, also known as Implementing an Azure Data Solution, is an essential part of the journey toward becoming a skilled Azure data professional. It is designed to measure a candidate’s ability to work with data solutions in the Azure environment. The exam focuses on practical skills and knowledge required to implement various data storage, processing, and security solutions using Azure services. It is aimed at individuals who want to gain deeper expertise in managing data within the cloud and learn how to build, manage, and optimize data platforms effectively.

This exam forms one of the foundational components for those pursuing data engineering roles within the Azure ecosystem. It helps professionals demonstrate their ability to use Azure technologies for data storage, integration, and monitoring. Rather than being an introductory-level assessment, it assumes a certain level of familiarity with Azure and focuses on translating that understanding into practical implementations. Candidates are expected to know how to use Azure resources to handle real-world data tasks efficiently.

Core Focus of the DP-200 Exam

The DP-200 exam evaluates candidates on several domains that are crucial for implementing data solutions in Azure: implementing data storage solutions, managing and developing data processing, and monitoring and optimizing data solutions. Each domain tests practical application rather than theoretical understanding, emphasizing the hands-on capabilities required in modern data engineering environments.

Implementing data storage solutions is a major portion of the exam. Candidates must know how to configure both relational and non-relational storage systems. This includes knowledge of Azure services such as Azure Blob Storage, Data Lake Storage, and Cosmos DB. They must understand how to handle partitioning, replication, consistency models, and data distribution. Managing security within storage systems, including encryption and data masking, is another important part of this domain. The ability to ensure high availability and implement disaster recovery strategies is also assessed, as data continuity is critical in enterprise operations.
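Partitioning is easier to reason about with a small model. The sketch below is a simplified illustration under stated assumptions, not how Cosmos DB or any other Azure service hashes keys internally: it maps each partition key value to one of a fixed number of buckets using a stable hash, then counts how items spread. The function names and the 10-bucket count are hypothetical.

```python
import hashlib
from collections import Counter


def partition_for(key: str, partition_count: int) -> int:
    """Map a partition key value to one of `partition_count` buckets.

    Uses a stable MD5 hash so the result is identical across runs
    (Python's built-in hash() is randomized for strings). This is an
    illustrative stand-in, not the hash any Azure service uses.
    """
    digest = hashlib.md5(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % partition_count


def distribution(keys, partition_count):
    """Count how many items land in each bucket."""
    return Counter(partition_for(k, partition_count) for k in keys)


# A high-cardinality key (customer id) versus a low-cardinality key (country).
customers = [f"customer-{i}" for i in range(1000)]
countries = ["US", "DE"] * 500

print(distribution(customers, 10))
print(distribution(countries, 10))
```

A key with many distinct values spreads items across every bucket, while a key with only two values can never use more than two buckets, however many exist. That is the "hot partition" problem a good partition key choice is meant to avoid.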

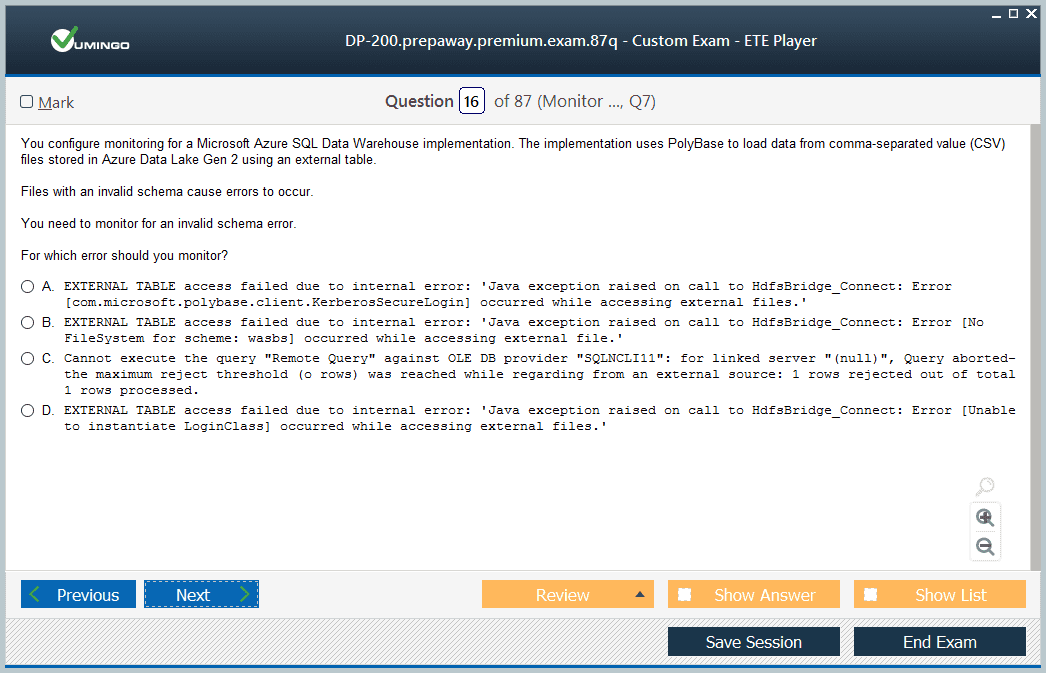

Another significant section involves managing and developing data processing solutions. Candidates are tested on their ability to create and manage pipelines for batch and streaming data using Azure tools such as Data Factory and Databricks. Understanding how to set up triggers, pipelines, linked services, and data transformation workflows is vital. They must also demonstrate knowledge of creating and optimizing data flows for both real-time and scheduled processes, ensuring data is efficiently moved, cleaned, and made ready for analytics or reporting.
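The core idea behind pipeline orchestration is that each activity starts only after the activities it depends on have finished. Data Factory lets an activity declare dependencies on other activities; the sketch below is a hypothetical, in-process model of the success path only (it does not model failure or skip conditions, triggers, or linked services). The activity names are made up for illustration.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


def run_pipeline(activities, depends_on):
    """Run activities so each one starts only after its upstream activities succeed.

    activities: mapping of activity name -> zero-argument callable
    depends_on: mapping of activity name -> set of upstream activity names
    Returns the order in which activities ran. If an activity raises,
    the loop stops, so nothing downstream of it runs.
    """
    # Include activities that have no dependencies at all.
    graph = {name: depends_on.get(name, set()) for name in activities}
    order = []
    for name in TopologicalSorter(graph).static_order():
        activities[name]()
        order.append(name)
    return order


# Hypothetical pipeline: two copies feed one transformation, which feeds a load.
log = []
activities = {
    "CopyRaw": lambda: log.append("raw copied"),
    "CopyReference": lambda: log.append("reference copied"),
    "Transform": lambda: log.append("transformed"),
    "LoadWarehouse": lambda: log.append("loaded"),
}
depends_on = {
    "Transform": {"CopyRaw", "CopyReference"},
    "LoadWarehouse": {"Transform"},
}

order = run_pipeline(activities, depends_on)
print(order)
```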

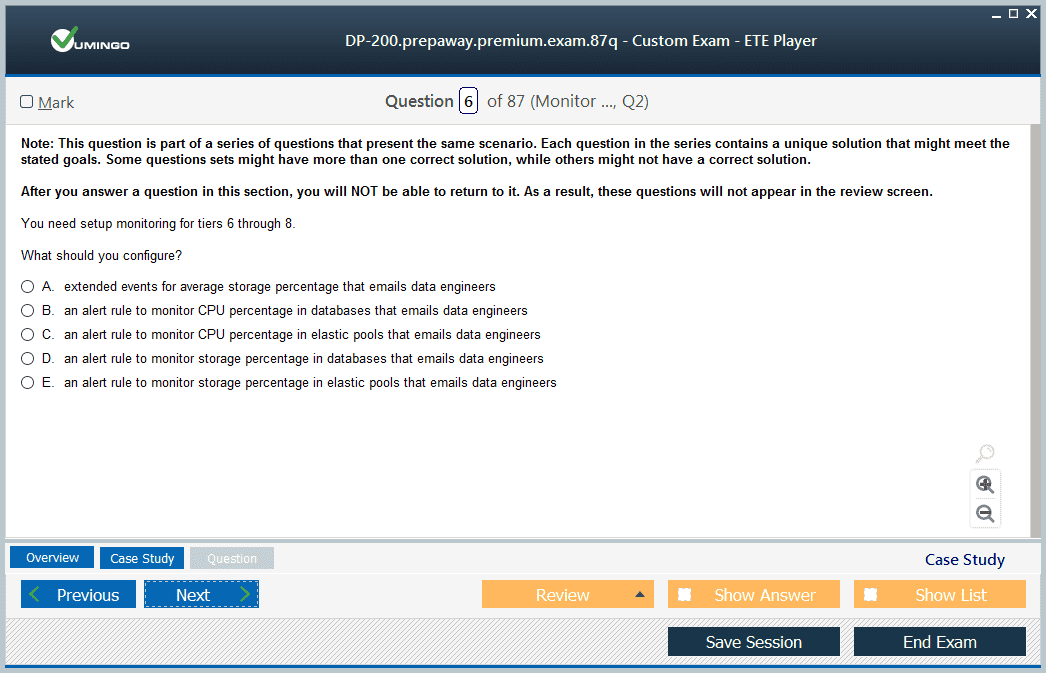

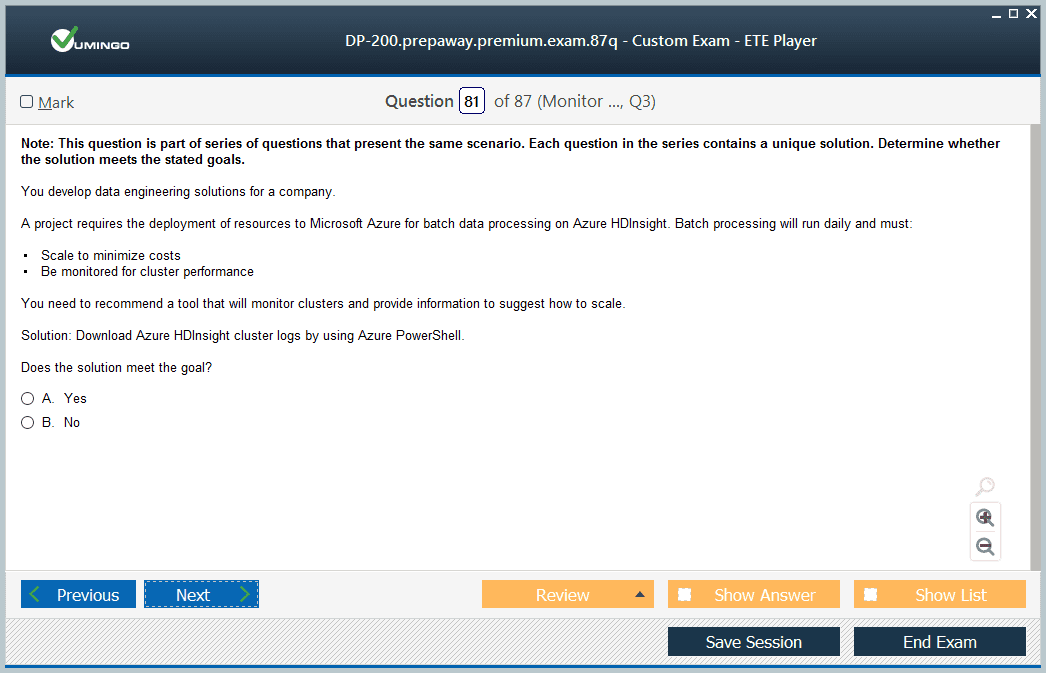

The final section focuses on monitoring and optimizing data solutions. This part of the exam ensures that candidates can track performance, identify bottlenecks, and apply corrective measures. Candidates are expected to use Azure Monitor, Log Analytics, and diagnostic tools to evaluate system health and troubleshoot issues. They also need to know optimization techniques related to data partitioning, indexing, caching, and resource allocation to ensure efficient operation across data services.

Skills Measured in the DP-200 Exam

To pass the DP-200 exam, a candidate must show proficiency in implementing data solutions using Azure services. The required skills span across several areas of Azure data management, from creating secure and scalable storage systems to managing large-scale data processing pipelines. The exam assesses the candidate’s ability to translate organizational requirements into practical implementations that can handle structured and unstructured data efficiently.

A key skill tested is the ability to deploy relational databases in Azure. This involves understanding the use of Azure SQL Database, Synapse Analytics, and other relational solutions. The candidate should know how to manage distributed databases, configure high-availability systems, and secure data during both storage and transmission.

For non-relational data, candidates must know how to work with solutions like Azure Cosmos DB and Data Lake Storage. They are expected to understand data modeling, access control, and partitioning strategies that ensure scalability and performance. The exam also includes topics like implementing global distribution, tuning read and write performance, and configuring consistency levels that meet business needs.
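Cosmos DB offers five consistency levels, from strongest to weakest: Strong, Bounded Staleness, Session, Consistent Prefix, and Eventual. The sketch below only models the ordering and the idea behind bounded staleness, where reads may trail writes by at most a set number of versions and a set time interval. The string labels and the helper functions are hypothetical, not SDK values, and the check is a conceptual model rather than how the service enforces the guarantee.

```python
# The five Cosmos DB consistency levels, ordered from strongest to weakest.
CONSISTENCY_LEVELS = ["Strong", "BoundedStaleness", "Session", "ConsistentPrefix", "Eventual"]


def is_at_least_as_strong(level: str, required: str) -> bool:
    """True if `level` gives guarantees at least as strong as `required`."""
    return CONSISTENCY_LEVELS.index(level) <= CONSISTENCY_LEVELS.index(required)


def within_bounded_staleness(version_lag: int, seconds_lag: float,
                             max_versions: int, max_seconds: float) -> bool:
    """Simplified check: a read is acceptable under bounded staleness if it trails
    the latest write by no more than `max_versions` updates and no more than
    `max_seconds` seconds."""
    return version_lag <= max_versions and seconds_lag <= max_seconds


print(is_at_least_as_strong("Session", "ConsistentPrefix"))  # True
print(within_bounded_staleness(3, 2.0, max_versions=100, max_seconds=5.0))  # True
print(within_bounded_staleness(150, 1.0, max_versions=100, max_seconds=5.0))  # False
```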

Developing and managing data pipelines is another crucial skill area. This includes understanding how to design workflows in Data Factory, integrate with multiple data sources, and apply transformations to prepare data for analysis. Candidates should also be familiar with using Databricks for big data processing and implementing streaming data solutions that process information in near real-time. The ability to create and manage these data flows with precision demonstrates a candidate’s readiness to work in dynamic data-driven environments.

Importance of Monitoring and Optimization

Monitoring and optimization are vital parts of data management, and the DP-200 exam places strong emphasis on these skills. Data engineers must ensure that their data systems operate efficiently and securely at all times. This includes monitoring pipelines, checking for failed jobs, and analyzing logs to detect potential issues before they affect production systems. The ability to configure alerts and use dashboards for visual monitoring is also tested.

Optimization requires understanding how to improve performance without compromising reliability. Candidates must know how to identify bottlenecks in data movement, query execution, or processing workloads. Techniques such as partitioning data, optimizing query plans, and managing resource consumption are part of this skill set. Candidates who master these areas can build systems that meet their performance targets without over-provisioning resources.
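One common partitioning benefit is partition pruning: when data is partitioned by a column such as date, a query that filters on that column only needs to read the matching partitions. The sketch below is a hypothetical in-memory model of that idea, with one list of rows per day; real engines prune using file or partition metadata rather than a Python dictionary.

```python
from datetime import date

# Hypothetical layout: one partition (a list of rows) per day of January 2020.
partitions = {
    date(2020, 1, d): [{"amount": d}, {"amount": 1}]
    for d in range(1, 31)
}


def query_total(partitions, start, end):
    """Sum `amount` for days in [start, end].

    Checks the partition key first and only reads rows from partitions
    that can match the filter. Returns (total, partitions_scanned).
    """
    total = 0
    scanned = 0
    for day, rows in partitions.items():
        if start <= day <= end:  # prune on the partition key before touching rows
            scanned += 1
            total += sum(row["amount"] for row in rows)
    return total, scanned


total, scanned = query_total(partitions, date(2020, 1, 10), date(2020, 1, 12))
print(total, scanned)  # 36 3
```

Only 3 of the 30 partitions are read, which is where the latency and cost savings come from.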

Required Experience and Knowledge

The DP-200 exam is not designed for complete beginners. While formal prerequisites are not mandatory, it is expected that candidates have some familiarity with Azure services and basic data concepts. Experience with data management, database administration, or software development can make preparation easier. Candidates should understand data storage types, relational and non-relational data models, and basic networking and security principles within the Azure ecosystem.

A background in working with data-centric technologies such as SQL, ETL processes, and data transformation tools is beneficial. Understanding how to structure and optimize data solutions to meet organizational needs helps in applying concepts during the exam. Even though hands-on experience is not explicitly required, candidates who have worked on real or simulated Azure projects will find the exam more approachable and easier to relate to.

Career Value of the DP-200 Exam

The DP-200 exam plays an important role for professionals aiming to work as Azure data engineers. It equips them with the technical understanding needed to handle data in cloud-based systems. Organizations value professionals who can design and implement solutions that manage data securely and efficiently using Azure technologies. Successfully completing this exam demonstrates that the individual is capable of managing the entire data lifecycle, from ingestion and transformation to storage and visualization.

For individuals already in data-related roles, earning this certification can help expand their expertise into Azure-specific implementations. It validates their ability to work with modern data architectures and cloud-based analytics solutions. It also helps professionals position themselves for higher responsibility roles where they oversee complex data systems or design cloud data infrastructures.

For newcomers to the field, the DP-200 serves as a stepping stone into the world of cloud data engineering. It introduces them to the concepts of building scalable data systems in the cloud and helps them understand how to use Azure resources effectively. While it may require some prior exposure to data handling, it provides a structured path to mastering Azure data technologies.

Practical Benefits of Learning Through the DP-200

Preparing for the DP-200 exam offers more than just certification. The process teaches important practical skills that can be applied to real projects. Candidates learn how to build data pipelines, integrate services, and automate workflows that process data efficiently. They gain experience in securing data, implementing compliance controls, and maintaining data integrity.

Another benefit is that it encourages the development of problem-solving abilities. Data engineers frequently face challenges such as managing large volumes of data, ensuring low-latency access, or dealing with multiple data sources. The knowledge gained from studying for this exam helps them analyze and address such problems with confidence.

Additionally, the DP-200 fosters a deeper understanding of Azure’s ecosystem and how different services interact with each other. Learning how to choose the right tools for specific use cases improves decision-making and technical planning skills. These capabilities are essential for professionals who want to deliver robust and optimized data solutions.

Why the DP-200 Is Considered Valuable

The DP-200 is widely regarded as valuable because it focuses on practical applications rather than just theory. It prepares professionals to handle data workloads in real-world scenarios. By covering topics like data storage, processing, security, and optimization, it ensures that candidates understand the complete data lifecycle within Azure.

For organizations that rely on Azure, having employees certified through the DP-200 exam can bring concrete advantages. It helps ensure that their data infrastructure is managed by professionals who understand Azure's tools and services in depth. This can lead to more reliable data systems, fewer operational issues, and better performance across analytics and business intelligence applications.

On an individual level, the exam helps professionals validate their technical skills and gain recognition in the industry. It demonstrates commitment to continuous learning and expertise in modern data practices. As data continues to be at the heart of business operations, professionals with proven Azure data capabilities are in high demand.

Using the DP-200 to Advance Skills

Beyond certification, the DP-200 exam serves as a platform for continuous skill development. The topics it covers introduce professionals to a wide range of Azure services, providing them with the foundation needed to explore more advanced data concepts later. The knowledge of implementing and managing data solutions can be expanded upon through further practical experience.

Studying for the exam also enhances understanding of end-to-end data workflows. From data ingestion to visualization, candidates gain a holistic perspective on how data travels through different systems. This helps them design more efficient and reliable solutions that support analytics and business decision-making processes.

In a rapidly evolving technology landscape, learning to use Azure for data management provides a strong competitive advantage. Cloud-based data systems are now central to many organizations, and professionals who can effectively manage them will remain valuable. The DP-200 serves as a bridge between foundational knowledge and advanced technical expertise, helping data professionals progress in their careers.

The DP-200 exam is a significant certification for individuals aiming to specialize in Azure data solutions. It provides a comprehensive understanding of implementing, managing, and optimizing data systems within the Azure environment. Through its focus on real-world applications, it helps candidates build practical skills that directly translate into workplace value.

Whether a professional is seeking to strengthen their cloud knowledge or transition into a data engineering role, this exam provides a structured and meaningful learning path. It combines technical depth with practical relevance, making it one of the most useful certifications for data professionals working with Azure technologies.

Deep Understanding of the DP-200 Exam Structure

The DP-200 exam focuses on the implementation of Azure data solutions, making it a vital part of the Azure data engineering certification path. It measures the technical capabilities required to create, manage, and optimize data solutions using Azure technologies. This exam is not about memorizing facts but about understanding real-world applications of data engineering concepts. The structure of the exam is designed to test both conceptual understanding and hands-on skills. Each section corresponds to a major responsibility area of a data engineer in the Azure ecosystem.

The exam content is divided into three broad areas: implementing data storage solutions, managing and developing data processing, and monitoring and optimizing data solutions. Each of these categories evaluates how well a candidate can design, deploy, and maintain systems that can store and process data effectively in the cloud. Candidates must demonstrate proficiency in using Azure services for data storage, integration, security, and performance tuning.

Implementing data storage solutions tests the ability to choose the right type of storage for specific workloads. It also assesses the understanding of concepts like replication, sharding, indexing, and scalability. This section requires knowledge of both relational and non-relational data stores. Candidates must be familiar with data consistency, redundancy management, and disaster recovery configurations. The goal is to ensure that the candidate can design storage solutions that are secure, reliable, and aligned with business continuity needs.

Data Storage Implementation in Azure

Data storage forms the foundation of any data solution. The DP-200 exam requires understanding how to implement various storage systems in Azure that handle diverse data formats and workloads. Candidates are tested on how to design solutions that store structured, semi-structured, and unstructured data efficiently. This involves understanding services such as Azure Blob Storage, Azure Data Lake Storage, and Azure SQL Database.

In relational storage, candidates must know how to implement and manage distributed databases and how to design schemas that can handle large data volumes while maintaining performance. Knowledge of indexing strategies, data partitioning, and query optimization is crucial. Azure Synapse Analytics also plays an important role here, as it allows for large-scale data warehousing. Understanding how to create dedicated SQL pools, manage compute resources, and integrate with data ingestion tools is part of this area.
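A dedicated SQL pool spreads each table across 60 distributions, and a hash-distributed table assigns each row to a distribution based on its distribution column. The sketch below uses MD5 as a stand-in (Synapse uses its own internal hash function) to show why the choice of distribution column matters: a column with few distinct values concentrates rows in a handful of distributions, which is called data skew. The helper names are hypothetical.

```python
import hashlib
from collections import Counter

DISTRIBUTIONS = 60  # a dedicated SQL pool spreads each table over 60 distributions


def distribution_id(value) -> int:
    """Assign a value to a distribution. MD5 is a stand-in for Synapse's internal hash."""
    digest = hashlib.md5(str(value).encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % DISTRIBUTIONS


def skew_ratio(column_values) -> float:
    """Rows in the fullest distribution divided by the average rows per distribution.

    1.0 means perfectly even; large values mean one distribution does most of the work.
    """
    counts = Counter(distribution_id(v) for v in column_values)
    average = len(column_values) / DISTRIBUTIONS
    return max(counts.values()) / average


order_ids = list(range(6000))                       # unique values: good candidate
statuses = ["open", "closed", "pending"] * 2000     # 3 values: poor candidate

print(round(skew_ratio(order_ids), 2))
print(round(skew_ratio(statuses), 2))
```

Because every row with the same status hashes to the same distribution, at most 3 of the 60 distributions hold data, so queries on that table would run on a small fraction of the pool's compute.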

Non-relational storage involves services like Azure Cosmos DB, which support flexible data models and global distribution. The exam tests understanding of how to configure consistency levels, manage throughput, and secure access to these databases. It also assesses the candidate’s ability to handle large data ingestion scenarios and implement data access policies that align with organizational security requirements.
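Cosmos DB throughput is measured in Request Units per second (RU/s), and requests beyond the provisioned rate are throttled with HTTP 429 responses. A point read of a 1 KB item costs about 1 RU. The back-of-the-envelope estimate below assumes a write cost of 5 RU per operation, a 20 percent headroom for bursts, and a floor of 400 RU/s for manually provisioned container throughput; all three are assumptions to replace with measured values, since real costs depend on item size, indexing policy, and query shape.

```python
import math

MIN_CONTAINER_RUS = 400  # assumed floor for manually provisioned container throughput


def required_rus(reads_per_sec, writes_per_sec,
                 ru_per_read=1, ru_per_write=5, headroom_pct=20):
    """Estimate provisioned RU/s for a steady workload.

    ru_per_read:  roughly 1 RU for a 1 KB point read.
    ru_per_write: ASSUMED average cost; measure it for your own items and indexing.
    headroom_pct: extra capacity to absorb bursts before requests are throttled.
    """
    base = reads_per_sec * ru_per_read + writes_per_sec * ru_per_write
    with_headroom = math.ceil(base * (100 + headroom_pct) / 100)
    return max(MIN_CONTAINER_RUS, with_headroom)


print(required_rus(500, 100))  # 1200
print(required_rus(10, 0))     # 400
```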

Candidates should also be aware of backup and recovery methods, encryption of data at rest and in transit, and implementing compliance policies. The ability to design solutions that maintain data integrity while allowing for high availability is an essential skill tested in this part of the exam.

Developing and Managing Data Processing Systems

A large portion of the DP-200 exam focuses on developing and managing data processing workflows. Azure provides multiple tools that support batch and streaming data processing, and candidates must know when and how to use each. The goal of this section is to ensure that the candidate can design efficient data pipelines that handle ingestion, transformation, and loading processes effectively.

Azure Data Factory is one of the central tools covered. Candidates are tested on their understanding of how to create and manage pipelines, linked services, and triggers. Knowing how to orchestrate data movement between multiple data sources and destinations is key. Candidates should understand how to manage data transformations within these pipelines to prepare data for analytics or reporting systems.

Another area of focus is Azure Databricks, which is used for large-scale data processing and analytics. The exam evaluates the ability to create and manage Databricks clusters, run notebooks, schedule jobs, and handle data ingestion from various sources. Understanding how to optimize Databricks workloads and ensure efficient use of resources is important for success.

Streaming data processing is another critical area. Candidates should know how to configure Azure Stream Analytics for real-time data ingestion and processing. This involves creating input and output configurations, applying functions to transform data streams, and setting up alerts or actions based on event data. The ability to handle continuous data flow efficiently is an important skill that separates capable data engineers from beginners.

Monitoring and Optimizing Data Solutions

Monitoring and optimization ensure that data solutions operate smoothly and deliver reliable results. The DP-200 exam tests the candidate’s ability to maintain operational efficiency through proactive monitoring, troubleshooting, and fine-tuning of systems. This includes tracking data flows, measuring system performance, and applying corrections when bottlenecks or inefficiencies are detected.

Azure provides several tools for monitoring and diagnostics, such as Azure Monitor and Log Analytics. Candidates must know how to configure these tools to capture relevant performance metrics, logs, and alerts. Understanding how to interpret diagnostic data helps in identifying performance issues before they impact the system.

Optimization involves both resource sizing and workload design. Candidates need to demonstrate knowledge of partitioning data to reduce latency, optimizing queries for better performance, and adjusting compute resources dynamically. It is also important to understand caching mechanisms, parallel processing, and the balance between performance and cost efficiency.
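Caching trades memory for fewer round trips to a slower store. Azure services provide their own caching layers; the sketch below only illustrates the general idea in-process with Python's `functools.lru_cache`. The `get_product` lookup and its call counter are hypothetical stand-ins for an expensive backend query.

```python
from functools import lru_cache

backend_calls = 0  # counts how often the (hypothetical) slow store is actually hit


@lru_cache(maxsize=128)
def get_product(product_id: int) -> dict:
    """Hypothetical lookup against a slow store; results are cached in memory.

    Note: the cached dict is shared between callers, so it should not be mutated.
    """
    global backend_calls
    backend_calls += 1
    return {"id": product_id, "name": f"product-{product_id}"}


for pid in [1, 2, 1, 1, 3, 2]:
    get_product(pid)

print(backend_calls)  # 3
print(get_product.cache_info())
```

Six lookups reach the backend only three times, once per distinct id. The `maxsize` bound keeps memory use predictable; choosing it, and deciding how stale cached data may become, is the performance-versus-freshness balance mentioned above.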

Another aspect of optimization is ensuring that data lifecycles are managed efficiently. Candidates should know how to apply data retention policies, automate archival processes, and ensure compliance with organizational data governance standards. Effective lifecycle management not only improves performance but also reduces storage costs.
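Azure Blob Storage lifecycle management expresses retention and archival as JSON policy rules, for example moving blobs to a cooler tier or deleting them once `daysAfterModificationGreaterThan` a given number of days. The sketch below models only the decision logic for a single blob; the function and its thresholds are hypothetical examples, not Azure defaults.

```python
def lifecycle_action(days_since_modified: int,
                     cool_after: int = 30,
                     archive_after: int = 90,
                     delete_after: int = 365) -> str:
    """Return the action an age-based lifecycle rule would take for one blob.

    Thresholds are hypothetical. Each condition means "modified more than N days
    ago", and the most aggressive matching action wins.
    """
    if days_since_modified > delete_after:
        return "delete"
    if days_since_modified > archive_after:
        return "archive"
    if days_since_modified > cool_after:
        return "cool"
    return "keep"


for age in [10, 45, 200, 400]:
    print(age, lifecycle_action(age))
```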

Practical Knowledge Required for the DP-200 Exam

To perform well in the DP-200 exam, candidates should have hands-on experience with Azure’s data services. Practical familiarity with creating databases, building pipelines, managing clusters, and monitoring workloads is essential. The exam emphasizes problem-solving within real-world scenarios, meaning candidates should be comfortable navigating through Azure’s portal, using command-line tools, and applying scripting when necessary.

Understanding the relationships between different Azure services is also critical. For instance, a candidate should know how to integrate Azure Data Factory with Synapse Analytics or Databricks to create an end-to-end data solution. Knowing how to manage permissions, control access, and secure data across multiple services demonstrates comprehensive knowledge of Azure data management.

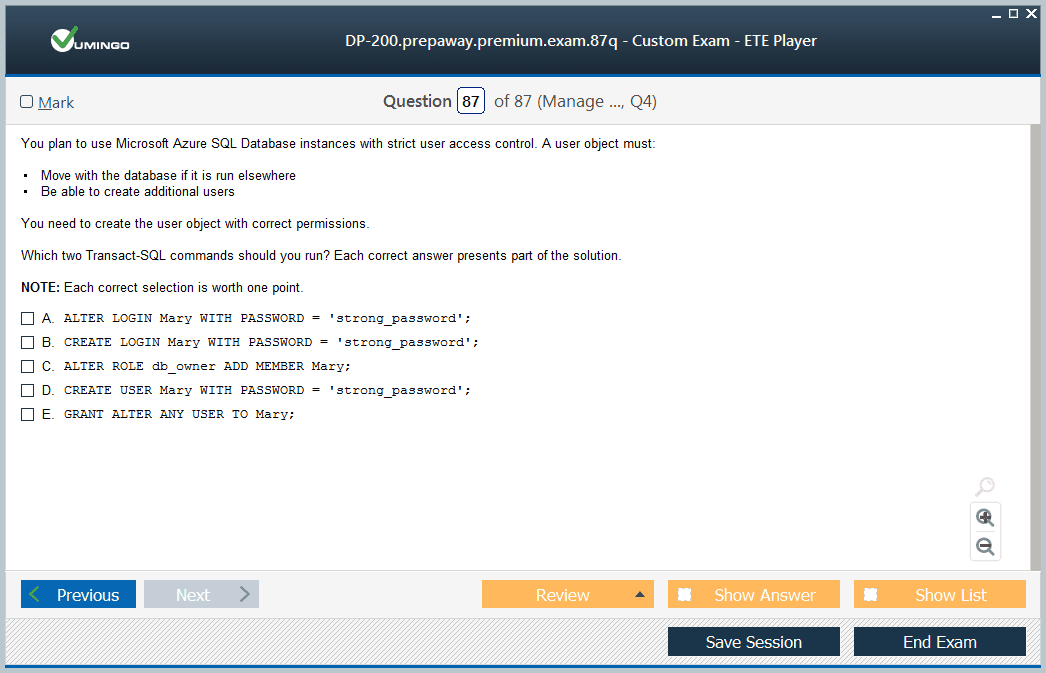

Another important area is data security and compliance. The exam tests understanding of implementing encryption, role-based access controls, and data masking. Candidates should also be aware of how to configure auditing and logging to maintain accountability and transparency in data operations.
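Dynamic data masking in Azure SQL Database hides sensitive values from non-privileged users at query time, without changing the stored data. The sketch below imitates only the string transformations of two masking functions: the email mask, which is documented to produce the form `aXX@XXXX.com`, and a partial mask that keeps a prefix and suffix around custom padding. These Python helpers are hypothetical, and the short-value handling is a simplification rather than the database's exact behavior.

```python
def email_mask(value: str) -> str:
    """Expose only the first character, followed by a constant masked suffix."""
    return value[:1] + "XX@XXXX.com"


def partial_mask(value: str, prefix: int, padding: str, suffix: int) -> str:
    """Keep `prefix` leading and `suffix` trailing characters; replace the middle.

    Simplification: if the value is too short to expose both ends,
    return only the padding so nothing is revealed.
    """
    if len(value) <= prefix + suffix:
        return padding
    # len(value) - suffix (not -suffix) so that suffix=0 yields an empty tail.
    return value[:prefix] + padding + value[len(value) - suffix:]


print(email_mask("alice@contoso.com"))                 # aXX@XXXX.com
print(partial_mask("555-123-4567", 0, "XXX-XXX-", 4))  # XXX-XXX-4567
```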

Preparing for the DP-200 Exam

Preparation for the DP-200 exam requires both theoretical understanding and practical experience. It is beneficial for candidates to gain experience through real projects or hands-on practice using Azure’s tools. Building and deploying sample data solutions can help in understanding the interactions between various Azure services.

Candidates should focus on understanding key Azure services used in data engineering, such as Data Factory, Databricks, Synapse Analytics, and Cosmos DB. Learning how these services work together in a data pipeline will help in mastering the exam content. Additionally, studying topics like data security, monitoring, and optimization will ensure a balanced understanding of all tested areas.

Hands-on experience with data ingestion, transformation, and analysis processes will help in retaining the concepts. Familiarity with scripting languages such as SQL or Python can be an advantage, as these are commonly used in Azure data workflows. Reviewing Microsoft documentation and using sandbox environments to practice deployments are effective preparation strategies.

Benefits of the DP-200 for Data Professionals

Earning the DP-200 certification demonstrates a professional’s ability to implement and manage Azure-based data solutions. It is a testament to one’s capability to handle the complete data lifecycle in a cloud environment. For data engineers, this certification strengthens credibility and enhances professional growth opportunities.

Data engineers who pass this exam show that they can design secure, scalable, and high-performance data systems. This expertise is crucial for organizations that depend on data-driven decision-making. The ability to build reliable data pipelines and manage analytics platforms efficiently is valuable in industries that rely heavily on insights derived from data.

Additionally, this certification helps professionals transition into more specialized or leadership roles in data management. It validates their ability to work with complex systems and handle responsibilities such as data governance, performance optimization, and system integration. The knowledge gained through preparation also enables professionals to contribute to strategic decisions related to data infrastructure and technology adoption.

Relevance of the DP-200 in Modern Data Environments

As organizations increasingly adopt cloud-based infrastructures, the demand for professionals skilled in managing data on Azure continues to grow. The DP-200 exam aligns closely with the needs of modern data environments, emphasizing scalability, automation, and integration. It reflects the practical skills required to manage hybrid and multi-cloud data systems effectively.

The focus on automation within the DP-200 exam is particularly significant. Modern data systems often require automated workflows for ingestion, transformation, and monitoring. By mastering these areas, candidates can design solutions that minimize manual effort while maintaining high reliability.

Another key aspect is adaptability. The technologies and tools within Azure continue to evolve, and the principles covered in the DP-200 exam prepare candidates to adapt quickly to these changes. This flexibility makes certified professionals more capable of handling evolving business requirements and integrating new services seamlessly into existing data architectures.

Long-Term Impact of the DP-200

Beyond immediate career benefits, the DP-200 provides long-term advantages by establishing a strong foundation in data engineering principles. The exam helps professionals understand how to align technical solutions with business goals, ensuring that data systems contribute effectively to organizational success.

By learning to implement optimized data workflows, certified professionals can reduce operational costs and improve data accessibility. This leads to better analytics capabilities and faster decision-making within organizations. The knowledge gained also supports continuous professional development, as it builds the groundwork for learning more advanced data technologies in the future.

For individuals aiming to specialize further, the DP-200 serves as a stepping stone toward deeper expertise in areas such as big data analytics, artificial intelligence, or cloud architecture. The problem-solving skills developed through preparation for this exam enhance one’s ability to work on complex projects and innovate within the field of data engineering.

The DP-200 exam stands out as a comprehensive assessment for anyone aspiring to master the implementation of Azure data solutions. It not only validates technical expertise but also cultivates a strong understanding of real-world data challenges. Through its focus on data storage, processing, and optimization, it prepares professionals to manage modern data systems with confidence.

For data engineers, the DP-200 represents both a professional milestone and a practical learning experience. It deepens technical capabilities while enhancing problem-solving skills in the context of cloud-based data management. With its relevance to current and emerging data technologies, this exam remains a valuable credential for anyone committed to excelling in the field of data engineering within the Azure ecosystem.

Detailed Overview of the DP-200 Exam Objectives

The DP-200 exam is designed to evaluate the technical ability to implement Azure data solutions and manage data-related operations effectively. This exam measures how well professionals can use Azure services to create data systems that are reliable, secure, and high performing. It goes beyond theoretical concepts, requiring an understanding of how to solve real-world challenges using Microsoft’s data tools and infrastructure. The focus is on three key aspects of data management—storage, processing, and monitoring—each reflecting the daily responsibilities of a data engineer in the cloud environment.

Understanding these objectives helps candidates prepare strategically for what the exam emphasizes. Rather than memorizing facts or commands, success in this exam comes from knowing how different Azure components work together to form complete data solutions. It ensures that a data engineer is capable of handling everything from designing scalable storage solutions to maintaining performance optimization and data governance.

Implementing Effective Data Storage Solutions

One of the most heavily weighted parts of the DP-200 exam focuses on implementing data storage solutions. This involves designing and configuring both relational and non-relational storage options based on workload requirements. Candidates must demonstrate an understanding of how to select appropriate storage technologies, ensuring reliability, scalability, and security for different business use cases.

Relational storage includes services such as Azure SQL Database and Synapse Analytics, which are suited for structured data scenarios. Candidates must understand how to provision databases, configure data distribution models, and manage performance through indexing and partitioning strategies. This includes designing systems capable of handling large-scale analytical queries without performance degradation. Understanding high availability, disaster recovery, and data replication strategies is also key, as these ensure that stored data remains consistent and accessible even in cases of failure.

For non-relational storage, the focus shifts to solutions like Azure Cosmos DB and Data Lake Storage. These systems handle unstructured or semi-structured data, offering flexibility in data modeling. Candidates must know how to implement global distribution, set up replication, and choose the right consistency model for the business scenario. The exam evaluates the ability to configure these data stores for optimal performance while maintaining data security and access control.

Encryption and access management play a major role here. Candidates need to know how to protect data both at rest and in transit, as well as how to enforce identity-based access through Azure Active Directory. This part of the exam ensures that professionals can design and maintain storage solutions that balance efficiency, reliability, and compliance with data governance requirements.

Managing and Developing Data Processing Workflows

Another significant component of the DP-200 exam focuses on the ability to manage and develop data processing systems. This includes both batch and streaming data scenarios. Data processing is at the heart of analytics because it transforms raw data into structured, usable information.

Azure Data Factory is a primary service for building these workflows. Candidates are expected to understand how to create linked services that connect different data sources and sinks, build pipelines to orchestrate data movement, and define triggers to automate execution. This involves knowledge of pipeline design patterns, data flow transformations, and integration runtime configurations. Understanding how to manage dependencies and handle errors during pipeline execution is also tested.
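Handling transient errors is a common part of pipeline design. Data Factory activities can be configured with a retry count and a retry interval; the sketch below models that behavior in plain Python with a fixed wait between attempts. The `run_with_retry` helper and the flaky copy activity are hypothetical illustrations, not Data Factory code.

```python
import time


def run_with_retry(activity, retry=3, retry_interval_seconds=30.0, sleep=time.sleep):
    """Call `activity` up to 1 + `retry` times, waiting a fixed interval between attempts.

    Returns the activity's result on the first success, or re-raises the last
    exception once all attempts are used up.
    """
    attempts = 0
    while True:
        attempts += 1
        try:
            return activity()
        except Exception:
            if attempts > retry:
                raise  # out of retries: surface the failure to the caller
            sleep(retry_interval_seconds)


# Usage: a hypothetical copy activity that fails twice with a transient error.
calls = []


def flaky_copy():
    calls.append(1)
    if len(calls) < 3:
        raise ConnectionError("transient failure")
    return "copied"


result = run_with_retry(flaky_copy, retry=3, retry_interval_seconds=0)
print(result, len(calls))  # copied 3
```

Passing `sleep` as a parameter keeps the helper easy to test without real waiting; a production pipeline would rely on the service's own retry settings instead.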

Azure Databricks is another essential service covered in this area. Candidates should be familiar with cluster management, job scheduling, and notebook development. This service is often used for large-scale data transformations and machine learning model preparation. The exam tests whether candidates can manage Databricks clusters effectively, control resource allocation, and optimize performance for both batch and interactive workloads.

Streaming data processing using Azure Stream Analytics is equally important. Candidates need to know how to configure data inputs, define query logic for real-time processing, and set up outputs to deliver processed data to destinations such as Power BI or storage accounts. Understanding how to manage event data, apply windowing functions, and optimize query performance ensures that real-time analytics solutions run efficiently.
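Windowing functions group an unbounded stream into finite time slices so aggregates can be computed. A tumbling window is the simplest case: fixed-size, non-overlapping, contiguous windows. The sketch below counts events per key in each tumbling window, labelling windows by their start time; it is a plain-Python model of the concept (with simplified boundary handling), not Stream Analytics query syntax.

```python
from collections import defaultdict


def tumbling_window_counts(events, window_seconds):
    """Count events per key in fixed-size, non-overlapping windows.

    events: iterable of (timestamp_seconds, key) pairs, timestamps as integers.
    Returns {(window_start, key): count}.
    """
    counts = defaultdict(int)
    for ts, key in events:
        window_start = (ts // window_seconds) * window_seconds
        counts[(window_start, key)] += 1
    return dict(counts)


events = [(1, "sensorA"), (4, "sensorA"), (9, "sensorB"), (12, "sensorA"), (19, "sensorA")]
print(tumbling_window_counts(events, 10))
# {(0, 'sensorA'): 2, (0, 'sensorB'): 1, (10, 'sensorA'): 2}
```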

This section of the exam reflects the practical challenges data engineers face daily—ensuring data is processed correctly, transformed accurately, and delivered promptly to support decision-making processes. It requires strong attention to detail and an ability to work across multiple services that interact dynamically within Azure’s ecosystem.

Monitoring and Optimizing Azure Data Solutions

Monitoring and optimization form the third major component of the DP-200 exam. This section ensures that data engineers can maintain high-performing and stable systems by actively tracking metrics, diagnosing issues, and fine-tuning configurations. It tests the candidate’s understanding of how to use Azure’s built-in monitoring tools to maintain operational efficiency.

Azure Monitor and Log Analytics are central to this process. Candidates should know how to collect and analyze telemetry data, set up performance alerts, and create dashboards that visualize key system metrics. This includes tracking resource utilization, query performance, and data flow success rates across services.
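A metric alert typically fires when an aggregated value over a time window crosses a threshold, for example average CPU above 80 percent. The sketch below models that rule over a list of evenly spaced samples using a sliding average; it is a simplified, hypothetical illustration and does not reproduce Azure Monitor's separate aggregation granularity and evaluation frequency settings.

```python
from statistics import mean


def evaluate_alert(samples, threshold, window_size):
    """Return the sample indices at which the average of the last `window_size`
    samples is strictly greater than `threshold` (i.e. where the alert would fire)."""
    fired = []
    for i in range(window_size - 1, len(samples)):
        window = samples[i - window_size + 1 : i + 1]
        if mean(window) > threshold:
            fired.append(i)
    return fired


cpu_percent = [40, 50, 60, 90, 95, 92, 55, 45]
print(evaluate_alert(cpu_percent, threshold=80, window_size=3))  # [4, 5, 6]
```

Averaging over a window keeps a single short spike (the 90 at index 3) from triggering the alert on its own, which reduces noisy notifications.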

Performance optimization involves analyzing workloads to identify bottlenecks and inefficiencies. Candidates must know how to optimize storage access patterns, tune database queries, and adjust compute resources dynamically based on workload demand. In distributed systems, partitioning and caching are crucial techniques for improving data throughput and reducing latency. The exam expects familiarity with these optimization methods.
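
Hash partitioning spreads rows across partitions by hashing a key, and a skewed key concentrates load on a few partitions. Hash-distributed tables in Azure Synapse Analytics and partition keys in Cosmos DB rely on the same principle, though with their own hash functions. The Python sketch below is illustrative only. The function names and the `customer-N` keys are hypothetical, and SHA-256 is an arbitrary choice of stable hash.

```python
import hashlib
from collections import Counter


def partition_for(key: str, num_partitions: int) -> int:
    """Map a key to a partition using a stable hash.

    Python's built-in hash() is salted per process for strings, so a digest
    is used instead to keep assignments identical across runs and machines.
    """
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_partitions


def partition_sizes(keys: list[str], num_partitions: int) -> Counter:
    """Count how many keys land in each partition, a quick check for skew."""
    return Counter(partition_for(k, num_partitions) for k in keys)


if __name__ == "__main__":
    customer_ids = [f"customer-{i}" for i in range(10_000)]
    sizes = partition_sizes(customer_ids, num_partitions=8)
    print(sorted(sizes.items()))
    # Largest partition relative to the ideal even share; values near 1.0 mean little skew
    print(max(sizes.values()) / (len(customer_ids) / 8))
```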

Another important part of monitoring is maintaining system security and compliance. Candidates are evaluated on how they can configure auditing, apply data retention policies, and ensure that the system complies with data governance standards. The ability to monitor for unusual activity or access patterns helps in maintaining data integrity and preventing breaches.

This area of the exam ensures that candidates not only build effective systems but also maintain them proactively to achieve long-term stability and performance. It also highlights the importance of continuous improvement, where data engineers must refine their configurations and adapt to changing workloads over time.

Skills and Experience Needed for Success

To succeed in the DP-200 exam, a candidate needs a mix of technical expertise and practical experience. A strong understanding of Azure architecture and its data services is essential. Candidates should be comfortable with concepts such as virtual networks, storage accounts, and role-based access control. This foundational knowledge supports the more advanced data-specific topics covered in the exam.

Hands-on experience is vital. Candidates who have worked on real data engineering projects using Azure will find it easier to understand the exam’s practical scenarios. Experience with data modeling, database management, and ETL (extract, transform, load) processes helps in connecting theoretical knowledge with real-world applications.

Familiarity with scripting and query languages like SQL and Python is beneficial. Many Azure services allow automation through scripts, and data transformation often involves writing queries or code snippets. Understanding how to debug pipeline errors or performance issues through scripting is a valuable skill tested indirectly through scenario-based questions.

Soft skills, such as problem-solving and critical thinking, are equally important. The exam includes case studies that require candidates to analyze situations, identify the right services, and design efficient solutions. This means that knowing the syntax or interface alone is not enough; one must also understand how to apply knowledge strategically to meet business goals.

Value of the DP-200 Certification in the Data Industry

The DP-200 certification holds strong relevance in the data engineering landscape because it represents a verified understanding of how to implement data systems in a cloud-based environment. It bridges the gap between traditional database management and modern cloud-driven data architecture.

Organizations today rely on data more than ever, and cloud platforms like Azure are central to managing that data efficiently. Professionals who hold this certification demonstrate their ability to handle the complexities of large-scale data systems, including integration, security, and optimization. This makes them valuable contributors to teams working on data-driven projects.

Holding this certification also indicates readiness to work with evolving technologies. Cloud environments are dynamic, and new tools or features are introduced regularly. By preparing for and passing this exam, professionals prove their adaptability and their commitment to continuous learning within the field of cloud data engineering.

The DP-200 also adds credibility for professionals transitioning into more specialized roles. It validates practical experience and helps employers assess technical proficiency objectively. This certification can enhance opportunities for advancement into senior positions that require oversight of entire data infrastructures or analytical solutions.

Practical Applications of Knowledge Gained

The knowledge acquired during preparation for the DP-200 exam extends well beyond passing the test. It equips professionals with skills that can be directly applied to their work. For example, understanding how to implement Azure Data Factory pipelines enables smoother integration between on-premises and cloud systems. Similarly, familiarity with Databricks allows teams to handle complex data transformation tasks more efficiently.

In real-world applications, data engineers use these skills to design systems that collect data from multiple sources, process it efficiently, and deliver it to analytics platforms or storage systems for further use. They are also responsible for ensuring that data remains secure, traceable, and compliant with organizational policies. The ability to optimize these workflows for cost and performance gives businesses a significant competitive edge.

Another key application is in automation. Many data processes can be automated using Azure tools, reducing manual intervention and minimizing human error. This not only increases reliability but also allows teams to focus on strategic data initiatives instead of routine maintenance.

Long-Term Relevance of DP-200 Knowledge

Even as technology evolves, the foundational principles covered in the DP-200 exam remain highly relevant. Data architecture, pipeline management, and performance optimization are core skills that apply across cloud platforms, not just Azure. The exam helps build a mindset of designing scalable, efficient systems, an approach that is valuable in any data engineering environment.

Moreover, the problem-solving framework learned through DP-200 preparation enhances adaptability. Professionals who have mastered these principles can quickly learn new tools or services because they understand the underlying concepts of data handling and processing. This flexibility ensures career longevity in a rapidly changing industry.

The DP-200 also reinforces the importance of data governance, an increasingly critical aspect of data management. As organizations collect more data, maintaining compliance and security becomes a top priority. Professionals trained in implementing data retention and access control policies can help their organizations manage these challenges effectively.

The DP-200 exam represents a comprehensive assessment of the skills required to implement and maintain data solutions in Azure. It goes beyond testing theoretical understanding to evaluate real-world problem-solving abilities across data storage, processing, and monitoring. Professionals who prepare for and pass this exam develop a strong grasp of how to create secure, efficient, and scalable data systems that support modern analytics needs.

By focusing on Azure’s integrated tools and services, the DP-200 equips candidates with the expertise needed to manage complete data ecosystems. It ensures they can design reliable architectures, handle complex workflows, and maintain performance in dynamic environments. For anyone pursuing a career in cloud data engineering, this exam remains a key step toward mastering the implementation of advanced data solutions and contributing effectively to data-driven initiatives.

In-Depth Understanding of Data Solution Implementation

The DP-200 exam measures a candidate’s ability to translate business data requirements into working solutions within the Azure ecosystem. The core focus lies in practical implementation—how data systems are built, optimized, and maintained across their lifecycle. A major part of this process involves connecting different components of Azure to create an integrated and efficient data environment that supports modern analytical workloads. Implementing an Azure data solution is not only about using a single service effectively but also about understanding how multiple services interact to create seamless data flows.

Implementing data storage begins with identifying the right type of storage for each data workload. Azure provides various services that cater to structured, semi-structured, and unstructured data. A key challenge lies in choosing between these options while maintaining scalability and cost-effectiveness. For instance, transactional systems might rely on Azure SQL Database for relational data, while analytical workloads often benefit from distributed systems like Azure Synapse Analytics. Non-relational stores fill other roles: Cosmos DB suits globally distributed, low-latency workloads, and Data Lake Storage suits large volumes of raw or semi-structured data. Each of these technologies serves a unique function within the broader ecosystem, and the exam assesses whether candidates can make the correct selection based on data volume, velocity, and complexity.

Data engineers must also be able to ensure high availability and disaster recovery. These are not optional features but essential design principles. The exam evaluates understanding of concepts such as replication, geo-redundancy, and failover strategies. Professionals are expected to know how to plan and configure systems so that data remains accessible even when failures occur. Backup and restore strategies complement these measures, allowing data to be recovered after corruption, accidental deletion, or a regional outage.
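
Client-side failover can be illustrated with a read that falls back to a secondary replica. Azure Storage offers read-access geo-redundant storage (RA-GRS), which exposes a read-only secondary endpoint, but replication to the secondary is asynchronous, so it may lag behind the primary. The sketch below is generic Python, not an Azure SDK call. The reader functions are hypothetical stand-ins, and the region names are illustrative.

```python
from typing import Callable, Sequence


def read_with_failover(replicas: Sequence[tuple[str, Callable[[str], bytes]]],
                       key: str) -> tuple[str, bytes]:
    """Try each replica in priority order; return (region, data) from the first that answers."""
    errors: list[str] = []
    for region, read in replicas:
        try:
            return region, read(key)
        except (ConnectionError, TimeoutError) as exc:
            # Record the transient failure and fall through to the next replica
            errors.append(f"{region}: {exc}")
    raise RuntimeError("all replicas failed: " + "; ".join(errors))


if __name__ == "__main__":
    def primary(key: str) -> bytes:
        raise ConnectionError("primary region unavailable")

    def secondary(key: str) -> bytes:
        return f"value-of-{key}".encode()

    print(read_with_failover([("westeurope", primary), ("northeurope", secondary)], "orders/42"))
```

Only connection and timeout errors trigger a fallback here. Other errors, such as a permissions failure, propagate immediately, because retrying them against another region would not help.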

Another critical area covered in the exam involves managing data access and security. Data professionals must understand how to apply identity management through Azure Active Directory, assign permissions using role-based access control, and configure encryption methods. The ability to design systems that adhere to data privacy and governance standards demonstrates a professional’s ability to handle sensitive information securely.

Building and Managing Data Processing Solutions

The DP-200 exam evaluates how well professionals can create and manage data pipelines that handle data ingestion, transformation, and delivery. This process involves moving data from various sources, transforming it according to business rules, and storing it in the right destination for analytics or reporting. The complexity of data pipelines can vary, ranging from simple extract-transform-load tasks to continuous streaming processes that require real-time analysis.

Azure Data Factory plays a central role in this area. Candidates must understand how to create pipelines that automate data movement and transformation. This includes setting up linked services that connect to data sources such as databases, APIs, or storage accounts. Pipelines can be triggered on schedules or in response to specific events, ensuring that data flows continuously and efficiently. Candidates must also be able to integrate on-premises systems with the cloud, which typically requires a self-hosted integration runtime running inside the private network.
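
A schedule trigger is defined by a start time and a recurrence, such as every six hours. The Python sketch below computes upcoming run times for a fixed recurrence anchored at a start time. It is a hypothetical helper, not part of Data Factory. It ignores time zones, daylight saving changes, and advanced schedules such as specific hours or weekdays.

```python
from datetime import datetime, timedelta


def next_runs(start: datetime, interval: timedelta, after: datetime, count: int) -> list[datetime]:
    """Return the next `count` run times strictly after `after` on a recurrence anchored at `start`."""
    if after < start:
        first = start
    else:
        # Whole intervals already elapsed since the anchor, then step to the next boundary
        elapsed = (after - start) // interval
        first = start + (elapsed + 1) * interval
    return [first + i * interval for i in range(count)]


if __name__ == "__main__":
    anchor = datetime(2024, 1, 1, 0, 0)
    for run in next_runs(anchor, timedelta(hours=6), after=datetime(2024, 1, 1, 7, 30), count=3):
        print(run.isoformat())
```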

The exam also includes topics related to Azure Databricks, which is designed for advanced data processing and analytics. It allows professionals to work with large datasets using distributed computing frameworks. Candidates are expected to know how to create and manage Databricks clusters, optimize job performance, and develop data transformation workflows using notebooks. Databricks is often used for complex data preparation tasks that feed into downstream analytics or machine learning systems.

Streaming data processing is another focus area. Modern data environments often require real-time data insights, which are made possible through services like Azure Stream Analytics. Candidates must know how to define input streams, configure query logic, and send processed data to multiple outputs such as dashboards, databases, or storage systems. These scenarios test the ability to build event-driven architectures that process large volumes of data with low latency.

Optimizing these processing workflows is another major part of the exam. Professionals must demonstrate the ability to identify performance bottlenecks and implement improvements. This might involve adjusting parallelism in pipelines, optimizing query logic, or scaling compute resources dynamically. Knowing how to balance performance with cost efficiency is an important skill, as real-world systems must deliver results without unnecessary resource consumption.

Monitoring, Troubleshooting, and Performance Management

Effective monitoring is what ensures that Azure data solutions operate smoothly and efficiently over time. The DP-200 exam evaluates a candidate’s ability to monitor, diagnose, and troubleshoot issues across various Azure data services. This requires understanding how to use built-in tools to collect logs, visualize performance metrics, and respond proactively to alerts.

Azure Monitor and Log Analytics are core tools for this process. They allow engineers to collect telemetry data from multiple services, analyze trends, and create alerts for unusual activity or performance degradation. The exam tests whether candidates can set up monitoring rules, configure dashboards, and interpret data accurately. The ability to use these insights to make informed decisions is essential for maintaining the reliability of data systems.

Troubleshooting involves identifying and resolving system failures, performance issues, or configuration errors. Candidates must know how to interpret error messages, analyze logs, and trace data movement across interconnected services. For example, if a data pipeline fails to execute, understanding whether the issue lies in network connectivity, authentication, or data transformation logic is key to resolving the problem quickly.

Performance optimization also falls under this category. Candidates need to demonstrate understanding of how to tune queries, partition data efficiently, and manage resource allocation. This may include strategies such as caching frequently accessed data, minimizing data shuffling in distributed systems, and adjusting provisioned throughput or performance tiers in storage accounts and databases. The exam aims to ensure that professionals can maintain optimal performance even under changing workloads and data growth.

Monitoring extends beyond performance; it also includes ensuring compliance and data governance. Engineers must know how to implement auditing and track access to sensitive information. Maintaining detailed audit logs helps identify unauthorized activities and ensures transparency in how data is handled across the organization. These practices reinforce trust and align data systems with regulatory requirements.

Practical Relevance of the DP-200 Certification

The DP-200 certification holds great value because it validates practical expertise in implementing Azure data solutions. It represents more than academic knowledge—it signifies that a professional can design, deploy, and manage complex data systems that meet organizational needs. This certification builds confidence in handling real-world scenarios, such as building data lakes, optimizing pipelines, and maintaining data quality.

Data engineers with this certification understand how to work effectively within the Azure ecosystem. They can leverage various services together to solve problems such as integrating data from multiple systems or improving analytics performance. This ability to design end-to-end solutions is a key differentiator in the modern data industry, where organizations rely on efficient data management to make informed decisions.

The certification also demonstrates adaptability. Cloud technologies evolve rapidly, and professionals who understand the foundational architecture of Azure can easily adapt to new tools or updates. The exam encourages candidates to think critically about system design rather than memorize isolated features. This mindset fosters continuous improvement and innovation within professional practice.

From an organizational perspective, certified data engineers contribute to better system reliability and scalability. Their ability to design optimized architectures results in more efficient use of resources, lower operational costs, and faster insights from data. This makes them valuable assets in data-driven teams, whether working on analytics, data science, or application development projects.

Learning Outcomes and Skill Development

Preparing for the DP-200 exam helps professionals develop a wide range of technical and analytical skills. They learn how to apply engineering principles to data architecture, combining theoretical understanding with hands-on practice. This preparation strengthens their ability to handle the full data lifecycle—from ingestion to analysis.

Key learning outcomes include the ability to design resilient storage solutions, implement complex ETL workflows, and monitor system performance proactively. Professionals also gain a deeper understanding of cloud-based security principles, learning how to safeguard data assets without compromising accessibility. These skills are universally relevant across industries and can be applied to different roles within data engineering and analytics.

The preparation process also improves problem-solving and critical-thinking abilities. The exam presents scenarios that require analyzing data requirements, identifying optimal services, and implementing scalable solutions. Candidates learn to approach challenges methodically, balancing technical constraints with business objectives. This skill set is valuable not just for exam success but also for everyday work in data management and engineering environments.

Hands-on practice with Azure services is a major part of the preparation process. Working through case studies or simulations helps candidates gain familiarity with real configurations and workflows. This experiential learning approach ensures that once certified, professionals are ready to apply their knowledge in production environments.

Long-Term Significance of the DP-200 Exam Knowledge

The knowledge gained from mastering the DP-200 content has lasting relevance in the evolving field of data engineering. The principles of building scalable, secure, and optimized data systems extend beyond any specific tool or platform. By focusing on these core concepts, professionals can easily transition into more advanced roles or adapt to emerging technologies.

As organizations increasingly rely on cloud infrastructure for data management, the demand for skilled data engineers continues to grow. The DP-200 exam equips professionals with a comprehensive understanding of how to design and implement systems that handle large volumes of data efficiently. This makes them valuable contributors to teams focused on analytics, business intelligence, and digital transformation.

Moreover, the exam promotes a mindset of continuous learning. Data engineers who master Azure’s services develop a deeper curiosity about how to further optimize performance or integrate new technologies. This adaptability ensures long-term career growth and relevance in an industry that changes rapidly.

The emphasis on monitoring and optimization also instills a proactive approach to system maintenance. Rather than waiting for failures to occur, professionals learn how to anticipate issues and apply preventive measures. This approach not only improves system reliability but also enhances organizational productivity by minimizing downtime and ensuring data accuracy.

The DP-200 exam serves as a benchmark for validating the technical competence required to implement Azure data solutions effectively. It focuses on practical abilities—how to design, build, monitor, and optimize data systems that align with business goals. By mastering these skills, professionals gain the expertise to manage complex data environments that support analytics, decision-making, and innovation.

This certification not only enhances technical proficiency but also develops strategic thinking about data architecture. It prepares professionals to create scalable, secure, and efficient systems that can evolve with organizational needs. Through hands-on learning and real-world application, candidates who complete this certification demonstrate their readiness to handle the demands of modern data engineering, making the DP-200 an essential step for those pursuing excellence in the Azure data domain.

Comprehensive Understanding of the DP-200 Exam

The DP-200 exam is centered on implementing Azure data solutions and assessing the technical ability to transform business needs into reliable, scalable, and secure data systems. It evaluates whether candidates can effectively integrate multiple Azure services to manage data through its complete lifecycle, from ingestion and storage to transformation, analysis, and visualization. This exam serves as a significant milestone for individuals pursuing expertise in cloud-based data engineering, focusing on practical implementation rather than theoretical understanding.

To succeed in the DP-200 exam, candidates must develop proficiency in managing both structured and unstructured data. Azure’s ecosystem includes services such as Azure SQL Database, Azure Synapse Analytics, Cosmos DB, and Data Lake Storage. Each service is suited to different workloads and performance requirements, and the exam tests one’s ability to determine which technology best supports a specific business objective. The understanding of when and how to use these services forms a core part of the certification’s practical emphasis.

A professional preparing for this exam must also understand how to create a balanced data architecture that supports both real-time and batch data processing. This requires familiarity with distributed systems, storage architectures, and integration mechanisms. The DP-200 focuses heavily on practical decision-making, such as determining appropriate partitioning strategies, implementing data retention policies, and optimizing throughput for high-performance scenarios.

Security plays a major role in implementing Azure data solutions. The exam requires knowledge of data encryption, access control, and auditing mechanisms. These elements ensure that data remains secure throughout its lifecycle while maintaining compliance with governance policies. A certified professional should be able to configure security at multiple levels, including databases, networks, and applications.

Practical Applications and Skill Relevance

The DP-200 exam provides a foundation for professionals to apply Azure data engineering skills in real-world scenarios. Its value lies in teaching practical methods to deploy data-driven solutions that can adapt to different organizational requirements. The exam encourages professionals not only to focus on system performance but also to design architectures that are cost-effective and easy to maintain.

A key skill developed through DP-200 preparation is the ability to design pipelines that move data efficiently between systems. Using tools such as Azure Data Factory, engineers can automate the movement and transformation of data across environments. This involves designing linked services, configuring datasets, and managing triggers that control when data processes occur. Through this, professionals learn how to manage both periodic and continuous data flows, which is essential for handling dynamic data workloads.

The use of Azure Databricks and Stream Analytics further enhances data processing capabilities. Azure Databricks allows engineers to perform large-scale data transformations and analytics by combining distributed computing with scripting flexibility. Meanwhile, Azure Stream Analytics is used to manage real-time data streams, enabling immediate insights from continuous data sources. The DP-200 exam measures one’s ability to use these services effectively, configure them for different use cases, and integrate them into a broader data solution.

Monitoring and optimization are also emphasized throughout the certification. Engineers are expected to understand how to set up monitoring dashboards, configure alerts, and interpret performance data. This ensures that systems not only run efficiently but also remain reliable over time. Knowledge of troubleshooting is equally critical, as engineers must be able to identify and resolve system issues quickly to prevent disruption.

The DP-200 exam prepares professionals to think holistically about system design. It teaches the importance of balancing data performance, storage efficiency, and operational cost. Engineers who can align these elements create sustainable solutions that deliver value long after deployment.

Role of Data Engineers in the Context of the Exam

The DP-200 exam reflects the evolving responsibilities of data engineers in modern organizations. These professionals act as the link between raw data and actionable insights, transforming fragmented datasets into organized structures that support analytics and business intelligence. The exam is designed to ensure that candidates can design systems capable of handling growing data volumes while maintaining consistency and performance.

A data engineer working within Azure must be proficient in using its native services for storage, processing, and analysis. They must understand how to configure these services so they work seamlessly together. The DP-200 focuses on these practical integration skills, ensuring candidates can deploy production-ready systems. For example, combining Azure SQL Database for structured data and Data Lake Storage for unstructured data allows the engineer to accommodate a wider range of business requirements.

Data engineers are also responsible for maintaining data reliability and quality. The exam covers data validation, error handling, and transformation logic to ensure that data pipelines produce accurate results. Professionals must know how to manage versioning, track changes, and handle schema evolution, especially when dealing with large or frequently updated datasets.
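
Validation and schema-drift handling can be sketched in a few lines. The example below checks each row against an expected schema, routes rows with missing or mistyped columns to a reject list, and reports columns the schema does not know about. It is plain Python under stated assumptions: `ORDERS_SCHEMA`, its columns, and the reject-record shape are hypothetical, and a real pipeline would use the validation features of its processing engine.

```python
from typing import Any

# Hypothetical target schema for an orders feed: column name -> expected Python type
ORDERS_SCHEMA: dict[str, type] = {"order_id": int, "amount": float, "currency": str}


def validate_batch(rows: list[dict[str, Any]], schema: dict[str, type]):
    """Split rows into valid and rejected, and report columns not in the schema (schema drift)."""
    valid: list[dict[str, Any]] = []
    rejected: list[dict[str, Any]] = []
    new_columns: set[str] = set()
    for row in rows:
        missing = [col for col in schema if col not in row]
        # isinstance accepts subclasses, so e.g. a bool would pass as an int here
        wrong_type = [col for col, typ in schema.items()
                      if col in row and not isinstance(row[col], typ)]
        # Extra columns are tolerated but reported so the schema can be reviewed
        new_columns.update(set(row) - set(schema))
        if missing or wrong_type:
            rejected.append({"row": row, "missing": missing, "wrong_type": wrong_type})
        else:
            valid.append(row)
    return valid, rejected, new_columns


if __name__ == "__main__":
    batch = [
        {"order_id": 1, "amount": 19.99, "currency": "EUR"},
        {"order_id": 2, "amount": "n/a", "currency": "EUR"},
        {"order_id": 3, "currency": "USD", "channel": "web"},
    ]
    ok, bad, drift = validate_batch(batch, ORDERS_SCHEMA)
    print(len(ok), len(bad), drift)
```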

Performance optimization is another key responsibility. A certified engineer should be capable of evaluating the efficiency of data systems and applying tuning techniques such as query optimization, caching, and proper indexing. They should also understand when to scale compute or storage resources to maintain system performance during peak workloads.

Through these responsibilities, the DP-200 certification demonstrates that a professional can handle end-to-end data solution management in a cloud environment. It verifies not just technical ability but also strategic decision-making in data architecture and operations.

Deep Dive into Monitoring and Optimization

Monitoring plays a critical role in the success of Azure data solutions. The DP-200 exam assesses how well candidates can implement monitoring strategies to track system performance, identify bottlenecks, and maintain reliability. Azure provides several built-in tools for this purpose, and understanding how to configure and use them effectively is essential.

Azure Monitor serves as the primary service for collecting telemetry data from various Azure resources. It allows data engineers to visualize performance trends, detect anomalies, and respond to system alerts. Setting up alerts helps ensure that issues are detected early, preventing disruptions. Engineers must also be able to configure Log Analytics workspaces to aggregate data from multiple sources and analyze it for deeper insights.

Performance tuning often involves analyzing these logs to identify inefficiencies. Engineers learn to apply partitioning, indexing, and compression techniques to improve query performance. They also become skilled at managing resource allocation, scaling compute clusters, and balancing workloads across nodes. The DP-200 exam ensures that candidates can make data-driven decisions about how to optimize their environments.

Another aspect of optimization involves managing data storage costs without compromising system capabilities. This includes selecting the right storage tiers, implementing data lifecycle policies, and deleting unnecessary data automatically. The goal is to ensure that systems remain both efficient and financially sustainable.
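
Azure Blob Storage lifecycle management policies express age-based rules declaratively in JSON, for example with `daysAfterModificationGreaterThan` conditions on `tierToCool`, `tierToArchive`, and `delete` actions. The Python function below only mirrors that decision logic so the ordering of the rules is easy to see. The function name and the 30/90/365-day thresholds are illustrative assumptions, not recommendations.

```python
def lifecycle_action(days_since_modified: int,
                     cool_after: int = 30,
                     archive_after: int = 90,
                     delete_after: int = 365) -> str:
    """Pick a tier or deletion for a blob based on age (thresholds are illustrative)."""
    # Check the most aggressive action first so older data gets the cheapest treatment
    if days_since_modified > delete_after:
        return "delete"
    if days_since_modified > archive_after:
        return "archive"
    if days_since_modified > cool_after:
        return "cool"
    return "hot"


if __name__ == "__main__":
    for age in (5, 45, 120, 400):
        print(age, lifecycle_action(age))
```

Because every condition is "greater than", a blob that is exactly 30 days old stays in the hot tier in this sketch.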

The DP-200 exam also examines understanding of troubleshooting strategies. Engineers must be able to diagnose failed data pipelines, identify misconfigurations, and resolve security access issues. These scenarios require not just technical knowledge but also logical reasoning to trace problems through interconnected systems.

Career Impact and Professional Growth

The DP-200 certification provides a strong foundation for professionals aiming to advance in data engineering and cloud architecture roles. It validates the ability to work confidently within Azure environments and apply industry-standard methods for managing data solutions. Many of the skills gained from preparing for this exam extend beyond Azure and carry over to other cloud-based data systems.

Earning the certification demonstrates commitment to mastering complex technical concepts and solving real-world challenges. It equips professionals with the confidence to handle responsibilities that involve designing, deploying, and maintaining mission-critical systems. These skills are valuable across a wide range of industries that rely on data-driven decision-making.

Professionals who have completed the DP-200 often find themselves more capable of contributing to organizational strategies. They can recommend data management improvements, optimize workflows, and help teams make informed decisions based on reliable data infrastructure. This ability to connect technical expertise with business goals makes them key contributors to digital transformation initiatives.

Beyond individual development, the certification also fosters collaboration. Data engineers work closely with analysts, architects, and developers, and understanding Azure’s ecosystem helps bridge communication gaps between these roles. Shared understanding of data systems ensures smoother project execution and higher quality outcomes.

The DP-200 certification also encourages a mindset of lifelong learning. Cloud technology evolves rapidly, and professionals who have developed a solid foundation in Azure can adapt more easily to future changes. This adaptability keeps their skills relevant, even as new tools and techniques emerge.

Core Focus Areas of the Exam

The DP-200 exam evaluates a wide range of technical abilities. The key areas include implementing data storage solutions, managing data processing systems, and monitoring data operations. Each of these domains encompasses several layers of understanding, from conceptual design to hands-on implementation. Candidates are expected to know how to configure Azure data services, optimize their performance, and troubleshoot issues efficiently.

Implementing data storage solutions is one of the most significant portions of the exam. Candidates need to understand both relational and non-relational storage systems within Azure. This includes working with Azure SQL Database, Azure Synapse Analytics, and Cosmos DB. Each storage type has specific use cases, and understanding when to apply each one is critical. The exam tests knowledge of configuring these systems for performance, managing data security, and ensuring fault tolerance through replication and backup strategies.

For non-relational storage, familiarity with services such as Azure Data Lake Storage and Blob Storage is essential. These services allow organizations to handle massive volumes of unstructured data. Engineers must know how to configure access controls, manage data partitions, and optimize performance for large-scale workloads.

Managing and developing data processing systems is another key focus. This area includes working with Azure Data Factory, Azure Databricks, and Azure Stream Analytics. Data Factory enables the orchestration of data pipelines, automating the extraction, transformation, and loading (ETL) processes. Engineers are expected to create and manage linked services, configure datasets, and implement scheduled triggers. Understanding how to build reusable and efficient pipelines is crucial to maintaining data flow reliability.

Azure Databricks plays a vital role in the processing domain. It provides a collaborative platform for large-scale data transformation, machine learning, and analytics. Engineers must be able to create clusters, develop notebooks, and manage job execution within Databricks. They also need to understand how to integrate it with other Azure services for seamless data movement and analysis.

Streaming data is another critical topic in the DP-200 exam. Azure Stream Analytics processes data in real time, allowing businesses to react to events as they happen. Candidates should know how to configure inputs and outputs, write queries in the SQL-like Stream Analytics query language (including its built-in windowing functions), and manage jobs that run continuously.
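Windowing is the core idea behind most streaming queries: events are grouped into fixed time buckets and aggregated per bucket. The Python sketch below emulates a tumbling-window count so the mechanics are visible. The device IDs and timestamps are hypothetical. The comment shows a roughly analogous Stream Analytics query, but the sketch uses half-open `[start, end)` windows and does not model Stream Analytics' exact boundary or late-arrival rules.

```python
from collections import defaultdict
from datetime import datetime, timedelta

# Roughly analogous Stream Analytics query (input and field names hypothetical):
#   SELECT DeviceId, System.Timestamp() AS WindowEnd, COUNT(*) AS EventCount
#   FROM input TIMESTAMP BY EventTime
#   GROUP BY DeviceId, TumblingWindow(minute, 5)

EPOCH = datetime(1970, 1, 1)  # fixed origin so windows align on clock boundaries


def tumbling_counts(events, window: timedelta) -> dict:
    """Count events per (device_id, window_end) using fixed, non-overlapping windows.

    events: iterable of (device_id, event_time) tuples with naive UTC datetimes.
    """
    counts = defaultdict(int)
    for device_id, ts in events:
        idx = (ts - EPOCH) // window               # which window the event falls into
        window_end = EPOCH + (idx + 1) * window    # label each window by its end time
        counts[(device_id, window_end)] += 1
    return dict(counts)


if __name__ == "__main__":
    events = [
        ("d1", datetime(2021, 1, 1, 0, 1)),
        ("d1", datetime(2021, 1, 1, 0, 4)),
        ("d1", datetime(2021, 1, 1, 0, 6)),
        ("d2", datetime(2021, 1, 1, 0, 2)),
    ]
    for key, n in sorted(tumbling_counts(events, timedelta(minutes=5)).items()):
        print(key, n)
```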

Monitoring and optimizing data solutions is the final domain of focus. It involves ensuring that systems perform consistently under varying loads. Candidates must demonstrate the ability to use Azure’s monitoring tools to identify and resolve performance issues. This includes configuring Azure Monitor, Log Analytics, and diagnostic settings. Understanding how to interpret metrics, set up alerts, and manage system health proactively is a fundamental skill for any data engineer.
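Azure Monitor metric alert rules combine an aggregation (such as average), a comparison operator, and a threshold evaluated over a time window. The sketch below reproduces that idea in plain Python over a list of hypothetical per-minute CPU percentages. It is not the Azure Monitor API; it only illustrates why averaging over a window suppresses one-off spikes while still catching sustained load.

```python
from statistics import mean


def evaluate_alert(samples: list[float], window: int, threshold: float) -> list[int]:
    """Return indexes at which the average of the last `window` samples exceeds threshold.

    samples are metric values collected at a fixed interval (e.g. one per minute).
    """
    if window <= 0:
        raise ValueError("window must be positive")
    fired = []
    for end in range(window, len(samples) + 1):
        if mean(samples[end - window:end]) > threshold:
            fired.append(end - 1)  # index of the newest sample in the window
    return fired


if __name__ == "__main__":
    cpu = [50, 60, 70, 95, 98, 99, 40]  # hypothetical CPU percentages
    print(evaluate_alert(cpu, window=3, threshold=90))  # [5]
```

Only the window ending at index 5 (95, 98, 99) averages above 90, so a brief spike alone would not raise an alert under these assumed settings.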

The Role of Data Engineers in the Azure Ecosystem

The DP-200 exam represents the growing importance of data engineers in modern organizations. As data becomes the foundation for decision-making, the demand for professionals who can manage and optimize data systems continues to rise. Data engineers are responsible for building and maintaining the infrastructure that allows analysts, scientists, and business users to derive value from data.

Within Azure, a data engineer’s responsibilities extend across multiple services and technologies. They must design storage systems that handle different types of data efficiently, whether it is transactional, analytical, or unstructured. They also develop pipelines that move data seamlessly between systems while maintaining integrity and consistency. Security is another essential area of expertise, as engineers must ensure compliance with governance standards while protecting sensitive information.

The DP-200 exam validates the skills behind these responsibilities by assessing a candidate’s ability to manage complex systems effectively. Engineers who pass this exam demonstrate that they can design architectures that align with both technical requirements and business objectives. This includes balancing performance with scalability and cost, as well as maintaining the flexibility to adapt to changing organizational needs.

Beyond technical ability, the role of a data engineer involves collaboration. Data engineers often work alongside data scientists, developers, and analysts, and a professional with the platform knowledge that DP-200 validates is better placed to communicate across these teams and ensure that data systems support analytical and operational goals. This collaboration is essential to building efficient, cohesive data ecosystems.

Real-World Importance of the Exam Skills

The DP-200 exam emphasizes practical, hands-on skills that directly translate into real-world data engineering tasks. Professionals who prepare for this certification gain experience with problem-solving scenarios that mirror those faced in daily operations. This practical knowledge allows them to apply best practices for performance optimization, cost management, and security implementation.

One of the most valuable skills tested in the DP-200 exam is performance tuning. Engineers learn how to evaluate workloads, analyze bottlenecks, and apply optimization techniques. This may involve partitioning data for faster queries, indexing critical tables, or managing distributed compute resources for parallel processing. The goal is to design systems that can handle increasing data volumes without sacrificing efficiency.
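Partitioning and distribution both decide, from a column value, where a row lives. In an Azure Synapse Analytics dedicated SQL pool, a hash-distributed table assigns each row to one of 60 distributions based on its distribution column, so a column with many evenly spread values keeps parallel work balanced. The sketch below uses SHA-256 as a stand-in hash (the function Synapse actually uses is internal) and hypothetical key values to show how a low-cardinality column produces skew.

```python
import hashlib
from collections import Counter

# A dedicated SQL pool in Azure Synapse Analytics spreads each table across 60 distributions.
NUM_DISTRIBUTIONS = 60


def distribution_for(key: str, n: int = NUM_DISTRIBUTIONS) -> int:
    """Map a distribution-column value to a distribution index in [0, n).

    SHA-256 is an illustrative stand-in, not the hash Synapse uses.
    """
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % n


def skew_ratio(keys: list[str], n: int = NUM_DISTRIBUTIONS) -> float:
    """Rows on the busiest distribution divided by the average rows per distribution.

    Close to 1.0 means rows are spread evenly; a large value means one
    distribution holds far more rows and becomes the bottleneck for parallel queries.
    """
    if not keys:
        raise ValueError("keys must not be empty")
    counts = Counter(distribution_for(k, n) for k in keys)
    return max(counts.values()) / (len(keys) / n)


if __name__ == "__main__":
    customer_ids = [f"customer-{i}" for i in range(60_000)]  # high cardinality
    region_codes = ["WEST"] * 50_000 + ["EAST"] * 10_000      # low cardinality, uneven
    print(f"customer_id skew: {skew_ratio(customer_ids):.2f}")
    print(f"region skew:      {skew_ratio(region_codes):.2f}")
```

With only two distinct region codes, at most two of the 60 distributions receive any rows, which is the kind of bottleneck performance tuning aims to avoid.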

Another key aspect is security management. Data engineers are responsible for ensuring that access controls, encryption, and auditing mechanisms are in place. The exam reinforces identity and access management principles, such as implementing role-based access control, along with encryption of data at rest and in transit. These skills are crucial for maintaining trust in enterprise data environments.
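Role-based access control in Azure grants a role to a principal at a scope, and an assignment at a parent scope (for example, a resource group) is inherited by every resource beneath it. The sketch below models that inheritance check. The role names mirror real built-in Azure roles, but the action strings, principal names, and resource paths are shortened, hypothetical labels rather than real Azure permission strings, and deny assignments and conditions are not modeled.

```python
from dataclasses import dataclass

# Simplified role definitions: role name -> allowed actions (hypothetical action labels).
ROLES = {
    "Storage Blob Data Reader": {"blob/read"},
    "Storage Blob Data Contributor": {"blob/read", "blob/write", "blob/delete"},
}


@dataclass(frozen=True)
class RoleAssignment:
    principal: str  # user, group, or managed identity (hypothetical ID)
    role: str       # key into ROLES
    scope: str      # e.g. "/subscriptions/sub1/resourceGroups/rg-data"


def _covers(assigned_scope: str, target_scope: str) -> bool:
    """True if target_scope equals assigned_scope or sits beneath it (segment-wise)."""
    parent = assigned_scope.rstrip("/").split("/")
    child = target_scope.rstrip("/").split("/")
    return child[: len(parent)] == parent


def is_allowed(assignments: list[RoleAssignment], principal: str,
               action: str, scope: str) -> bool:
    """Grant if any assignment for this principal includes the action at a covering scope."""
    return any(
        a.principal == principal
        and action in ROLES.get(a.role, set())
        and _covers(a.scope, scope)
        for a in assignments
    )


if __name__ == "__main__":
    grants = [RoleAssignment("alice", "Storage Blob Data Reader",
                             "/subscriptions/sub1/resourceGroups/rg-data")]
    lake = "/subscriptions/sub1/resourceGroups/rg-data/providers/Microsoft.Storage/storageAccounts/lake1"
    print(is_allowed(grants, "alice", "blob/read", lake))   # True (inherited from the resource group)
    print(is_allowed(grants, "alice", "blob/write", lake))  # False (reader role has no write)
```

Comparing path segments rather than raw string prefixes keeps an assignment on `rg-data` from accidentally covering a sibling group such as `rg-data2`.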

Data lifecycle management is also covered in depth. Engineers must design strategies for data retention, archival, and deletion, ensuring that storage systems remain efficient and compliant with organizational policies. Knowing when and how to automate these processes is an important part of maintaining system hygiene.
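Azure Blob Storage lifecycle management policies express retention as rules such as "move to the cool tier after N days without modification, move to archive after M days, delete after K days." The sketch below evaluates one such policy in plain Python. The thresholds are hypothetical examples, and the function only decides an action; it does not touch any storage account.

```python
from datetime import date

# Hypothetical policy, in days since the blob was last modified.
# Checked oldest-first so the most aggressive applicable action wins.
POLICY = [
    (365, "delete"),
    (90, "archive"),
    (30, "cool"),
]


def lifecycle_action(last_modified: date, today: date) -> str:
    """Return the action for a blob; 'hot' means leave it where it is."""
    age = (today - last_modified).days
    for min_age, action in POLICY:
        if age > min_age:  # strictly greater than the threshold
            return action
    return "hot"


if __name__ == "__main__":
    today = date(2021, 12, 31)
    for modified in [date(2021, 12, 20), date(2021, 11, 1), date(2021, 8, 1), date(2020, 1, 1)]:
        print(modified, lifecycle_action(modified, today))
```

Running such a policy on a schedule, rather than deleting or tiering data by hand, is what keeps storage costs and retention compliance predictable.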

Monitoring capabilities developed through DP-200 preparation are equally valuable. Engineers who can interpret system metrics and act upon them prevent downtime, optimize costs, and ensure the stability of production environments. By mastering Azure Monitor and Log Analytics, they can establish proactive maintenance strategies that keep data pipelines reliable.

Preparation Approach and Learning Mindset

Preparing for the DP-200 exam requires more than rote memorization. Candidates must build an understanding of how Azure’s components interact within a complete data ecosystem. A successful approach involves hands-on experimentation, conceptual study, and scenario-based problem solving.

Working on practical exercises helps candidates develop confidence with tools like Azure Data Factory and Databricks. Building small pipelines, connecting data sources, and transforming data in real time provide valuable experience that aligns closely with the exam’s objectives.

In addition, reviewing monitoring and security practices strengthens understanding of how systems are maintained after deployment. These topics form an essential part of the DP-200 exam, as they ensure candidates can not only build but also sustain high-performing data environments.

The mindset required for this certification is one of continuous learning. Azure’s services evolve constantly, and professionals must stay informed about new features and updates. A solid foundation in the concepts covered by the DP-200 exam allows engineers to adapt more easily to changes in technology.

Why the DP-200 Exam Remains Important

Although Microsoft has since retired DP-200 and replaced it with DP-203 (Data Engineering on Microsoft Azure), the core knowledge it covered remains deeply relevant. The principles of implementing secure, scalable, and efficient data systems form the backbone of any data engineering practice, and knowing how to architect, manage, and optimize data flows stays valuable as tools and services change.

The exam’s structure, which emphasizes both practical tasks and conceptual understanding, helps ensure that candidates are ready for real-world challenges. It teaches not only how to implement Azure solutions but also how to think critically about design choices. This blend of technical and analytical ability is what differentiates capable data engineers from those with limited hands-on experience.

The DP-200 is particularly valuable for professionals transitioning from traditional data systems to cloud environments. It bridges the gap between on-premises infrastructure and cloud-based architectures, helping professionals understand how to migrate workloads, maintain performance, and ensure continuity.

By mastering the topics in this exam, candidates gain a thorough understanding of cloud data engineering principles that extend across platforms. The focus on scalability, security, and optimization ensures that the knowledge remains applicable as new technologies and services emerge.

Conclusion

The DP-200 exam represents a comprehensive evaluation of a professional’s ability to implement Azure data solutions that meet real business needs. It requires a balance of technical expertise, problem-solving ability, and strategic thinking. Candidates who prepare thoroughly develop the skills to design robust data systems capable of handling complex, large-scale workloads efficiently.

Through its focus on implementation, optimization, and monitoring, the exam ensures that professionals are not only technically proficient but also capable of managing data systems effectively throughout their lifecycle. The certification validates an understanding of how data moves, transforms, and supports decision-making in a cloud environment.

Mastering the skills this certification covers demonstrates readiness to take on challenging data engineering roles and contribute meaningfully to organizational success. It provides a pathway for continuous growth and deeper specialization in cloud-based data technologies. For professionals seeking to solidify their expertise and advance their careers in data management, the knowledge measured by the DP-200 exam remains a vital and valuable foundation.


