Pass IBM C2090-424 Exam in First Attempt Guaranteed!

Get 100% Latest Exam Questions, Accurate & Verified Answers to Pass the Actual Exam!

30 Days Free Updates, Instant Download!

C2090-424 Premium File

- Premium File 64 Questions & Answers. Last Update: Feb 15, 2026

What's Included:

- Latest Questions

- 100% Accurate Answers

- Fast Exam Updates

All IBM C2090-424 certification exam dumps, study guides, and training courses are prepared by industry experts. PrepAway's ETE files provide C2090-424 InfoSphere DataStage v11.3 practice test questions and answers, exam dumps, a study guide, and training courses to help you study and pass hassle-free!

Understanding the Scope of the IBM C2090-424 Exam

The IBM C2090-424 exam evaluates a candidate’s proficiency in InfoSphere DataStage v11.3, focusing on data integration, ETL processes, and system optimization. Preparing for this certification requires a deep understanding of both theoretical principles and practical applications. The exam is designed to test a professional’s ability to manage, configure, and optimize DataStage environments while ensuring security, scalability, and reliability. Understanding the scope of this exam is essential for crafting an effective study plan and achieving a high level of competency. Candidates need to familiarize themselves with a variety of topics, including architecture, system components, performance tuning, troubleshooting, and cloud integration.

DataStage architecture forms the backbone of the certification, encompassing client-server interactions, server management, job sequences, and parallel processing frameworks. Candidates are expected to understand the flow of data through different stages and the correct use of transformation stages to achieve efficient ETL processing. Knowledge of runtime column propagation, job sequencing, and the management of reusable components is critical to ensuring successful data integration workflows. Additionally, metadata management and schema propagation are fundamental for maintaining consistency and integrity across data pipelines. Practical exercises in setting up jobs, configuring environments, and simulating data flows provide a strong foundation for the exam and professional work.

Exam Topics and Their Practical Significance

The IBM C2090-424 exam includes several core areas that reflect real-world responsibilities for DataStage professionals. Security and data protection are vital aspects, requiring candidates to be familiar with authentication methods, role-based access, encryption techniques, and compliance with data governance standards. Network configuration and connectivity are equally important, as candidates must ensure seamless communication between distributed systems and external data sources. Cloud integration has become a critical skill, as many enterprises leverage hybrid or fully cloud-based data platforms. Candidates need to understand virtualized environments, cloud storage connections, and performance implications of cloud workloads. Each of these topics not only prepares candidates for the exam but also equips them with practical skills applicable in modern data engineering roles.

Performance tuning and system optimization are essential for handling large volumes of data efficiently. Candidates must understand parallel job execution, resource allocation, and strategies for minimizing runtime and memory consumption. Optimization extends to troubleshooting job failures, monitoring job execution logs, and identifying bottlenecks. The exam also emphasizes storage solutions, backup practices, and IT service management processes, highlighting the importance of maintaining high system availability and data integrity. Knowledge of these areas ensures that certified professionals can maintain operational efficiency and quickly respond to issues in production environments.

Registration and Exam Logistics

Registering for the IBM C2090-424 exam requires understanding the official procedures and planning the process carefully. Candidates must create an account with the authorized testing platform and provide accurate personal details to ensure a smooth registration experience. Scheduling the exam involves selecting an appropriate testing location or opting for an online proctored environment, depending on availability and preference. Candidates should verify the exam date, time, and venue well in advance and ensure all required documentation is in order. Preparing for the logistics reduces anxiety and allows candidates to focus entirely on content mastery.

Exam centers are secure and standardized, with clear protocols to maintain fairness. Online exams have specific technical requirements, including stable internet connections, compatible devices, and a distraction-free environment. Candidates need to review these requirements carefully to avoid technical disruptions during the exam. Planning the registration and exam day steps ensures that candidates are fully prepared and can concentrate on demonstrating their knowledge and skills without unnecessary distractions.

Exam Format and Question Structure

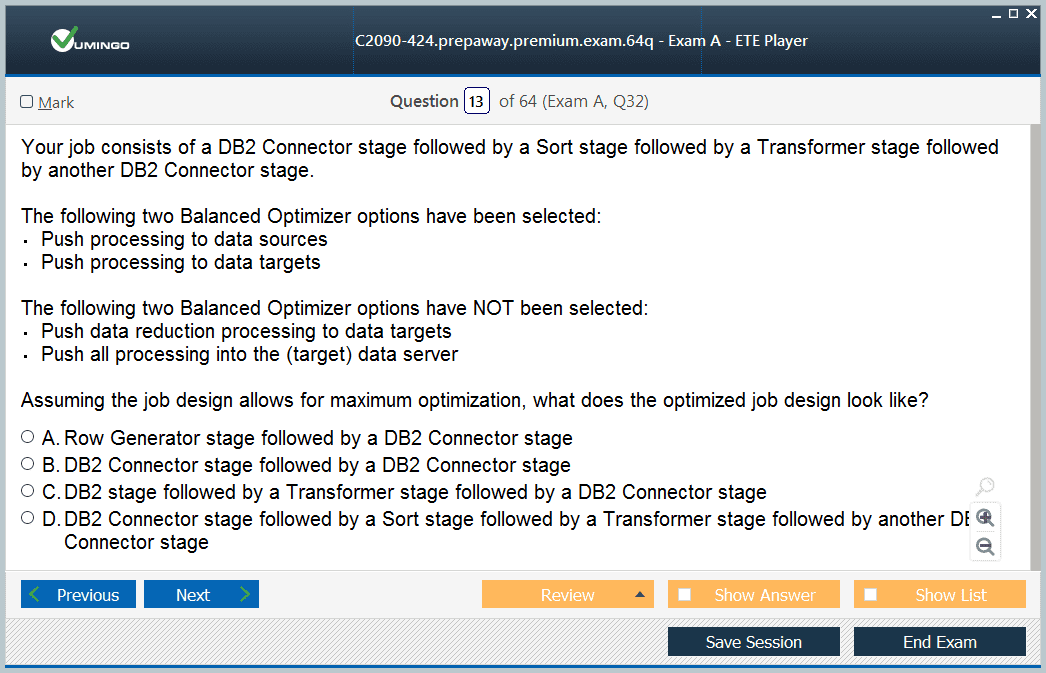

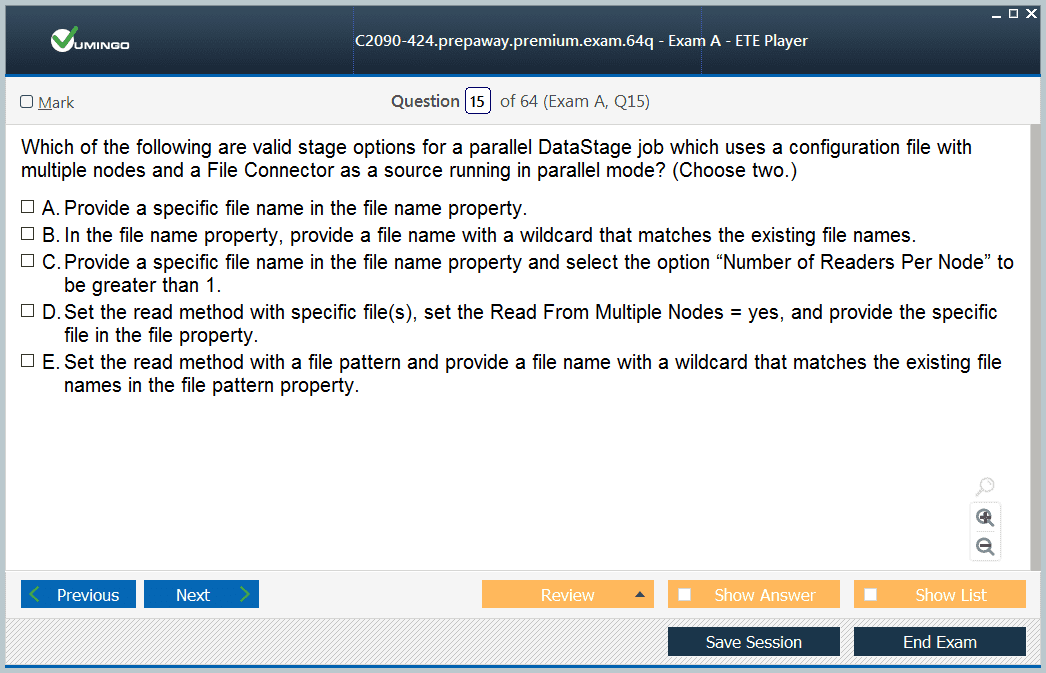

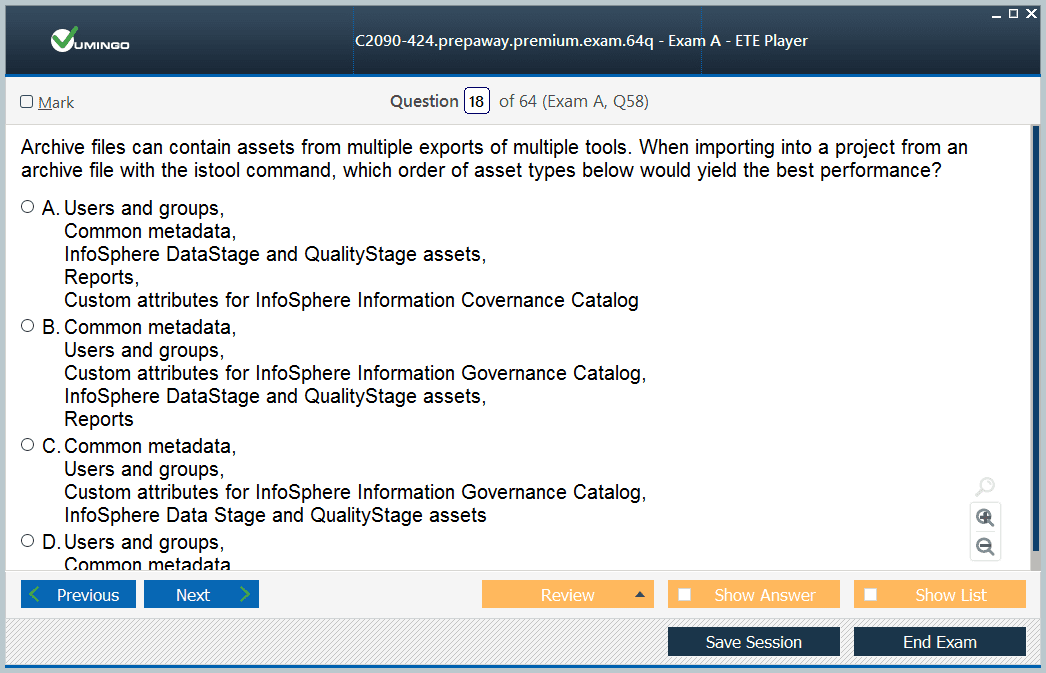

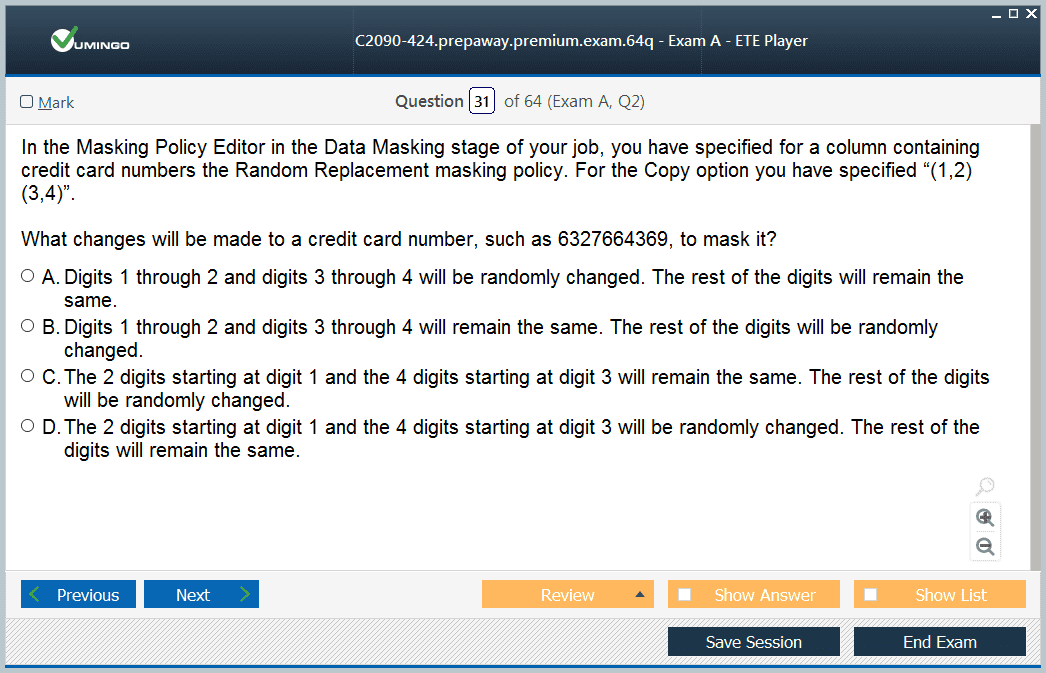

The IBM C2090-424 exam employs a multiple-choice format designed to assess both theoretical knowledge and practical abilities. Questions cover a range of topics, from system architecture and job design to troubleshooting and performance optimization. Each question typically carries equal weight, and the exam duration provides sufficient time to analyze and answer each question thoughtfully. The scoring system emphasizes accuracy, encouraging candidates to focus on understanding concepts rather than memorizing content superficially.

The question format often simulates real-world scenarios where candidates must apply their knowledge to resolve specific challenges. This approach tests practical skills in job design, metadata management, network configuration, and data flow optimization. Candidates may encounter questions that require breaking down complex processes into manageable steps, reflecting the decision-making required in actual data integration tasks. Understanding the exam structure allows candidates to allocate time effectively and ensures a methodical approach to answering questions under timed conditions.

Developing a Structured Study Plan

A comprehensive study plan is crucial for success in the IBM C2090-424 exam. Candidates should start by mapping out each topic area and identifying subtopics that require deeper focus. For instance, system architecture can be divided into server components, client interactions, job sequences, and reusable modules. Performance optimization can be broken down into resource allocation, parallelism, job monitoring, and runtime configuration. By segmenting the content, candidates can focus on mastering each concept systematically while gradually building a complete understanding of the platform.

Practical application of learned concepts is critical. Setting up a test environment allows candidates to design jobs, simulate data flows, manage metadata, and perform troubleshooting exercises. Hands-on practice ensures that theoretical knowledge is reinforced through real-world experience, improving retention and increasing confidence. Simulating common challenges encountered in production environments helps candidates develop problem-solving skills that are directly applicable to their professional roles.

Integrating Security and Compliance Knowledge

Understanding security and compliance within DataStage is vital for both exam success and professional practice. Candidates must be familiar with access control mechanisms, user authentication, and role-based permissions. Protecting sensitive data and ensuring compliance with organizational and regulatory standards are key responsibilities. Knowledge of encryption methods, secure data transfer protocols, and audit procedures ensures that candidates can maintain secure and reliable data workflows. Security scenarios in the exam often test the ability to identify vulnerabilities and implement best practices for safeguarding data integrity and confidentiality.

Cloud Integration and Virtualization

Cloud computing and virtualization are increasingly important in modern data integration environments. Candidates should understand how to configure DataStage for cloud storage solutions, manage virtualized resources, and optimize job execution in hybrid cloud settings. This includes awareness of network latency, resource contention, and scalability considerations. Familiarity with cloud deployment options enhances candidates’ ability to manage distributed environments and ensures that they can leverage cloud capabilities effectively. Exam questions may simulate cloud scenarios, requiring practical understanding of these concepts.

Troubleshooting and System Maintenance

Troubleshooting and system maintenance are core competencies evaluated in the IBM C2090-424 exam. Candidates need to develop systematic approaches for identifying and resolving job failures, configuration errors, and performance bottlenecks. This includes analyzing logs, interpreting error messages, and applying corrective actions. Maintenance tasks such as backup, recovery, and version management are equally important for ensuring system reliability. Mastery of these skills demonstrates practical readiness to handle production-level challenges and maintain operational continuity.

Optimizing Performance and Resource Utilization

Performance tuning involves identifying inefficiencies in job execution and optimizing resource usage. Candidates must understand parallel processing, memory allocation, and job sequencing to achieve optimal runtime performance. Monitoring tools and metrics help identify bottlenecks and guide corrective actions. Efficient performance management ensures that large-scale data processing tasks are executed reliably and quickly, which is crucial in enterprise environments. Exam questions may test candidates on diagnosing performance issues and implementing optimization strategies.

Career Advantages of Certification

Achieving the IBM C2090-424 certification provides tangible benefits in career progression. Certified professionals are recognized for their technical expertise in DataStage and can pursue roles such as ETL developer, data integration specialist, or technical consultant. Mastery of DataStage v11.3 allows candidates to handle complex data workflows, optimize system performance, and troubleshoot issues efficiently. The certification validates skills and knowledge, enhancing employability and opening opportunities for higher responsibility roles.

In addition to professional credibility, certified individuals often experience increased earning potential. The certification demonstrates a recognized level of proficiency, making candidates attractive for specialized projects and leadership opportunities. Knowledge gained through exam preparation equips professionals to contribute effectively to strategic initiatives, data integration projects, and process improvements within organizations.

Practical Preparation Strategies

Effective exam preparation requires balancing theoretical study with practical exercises. Candidates should allocate time to review each topic area thoroughly while reinforcing concepts through hands-on practice. Regularly simulating real-world scenarios, managing jobs, configuring systems, and performing optimizations builds confidence and proficiency. Tracking progress through practice exercises allows candidates to identify weak areas and focus on improving them systematically. Gradual, structured preparation enhances understanding, retention, and readiness for the exam.

Practical exercises also help internalize troubleshooting and problem-solving methods. By applying concepts in a controlled environment, candidates gain insights into system behavior, job dependencies, and error handling. This hands-on approach complements theoretical study, ensuring that candidates are equipped to handle both exam questions and professional challenges effectively.

Long-Term Knowledge Integration

Preparing for the C2090-424 exam is not solely about passing a test; it also involves integrating knowledge for long-term professional growth. Developing a routine of continuous learning and practical application ensures that skills remain relevant and up-to-date. Candidates who engage regularly with job design, data flows, system optimization, and cloud integration cultivate expertise that extends beyond the exam.

Professionals who integrate study topics into real-world scenarios gain deeper insights into DataStage functionality and operational best practices. This approach enhances problem-solving capabilities, builds confidence, and ensures that certified individuals are prepared for a wide range of technical challenges in enterprise environments.

Mastering the IBM C2090-424 exam requires a comprehensive understanding of DataStage v11.3, including architecture, job design, security, cloud integration, performance tuning, troubleshooting, and system maintenance. A structured study plan that combines theoretical knowledge with practical application is essential for success. Candidates benefit from hands-on exercises, scenario simulations, and continuous review to build confidence and competence. Achieving this certification not only validates expertise but also enhances career prospects, providing opportunities for advancement, professional recognition, and increased earning potential.

Deep Dive into DataStage Job Design and Development

Job design is at the heart of mastering DataStage for the C2090-424 exam. Candidates must understand how to construct jobs that efficiently extract, transform, and load data across multiple sources and targets. Each job is composed of stages connected through links, and understanding the role of each stage type is essential. Sequential file stages, database stages, and transformer stages all serve unique purposes, and using them correctly ensures smooth data flow. Parallel jobs add a further layer of design decisions, requiring candidates to understand partitioning, data distribution, and stage-specific performance considerations.

Effective job design begins with proper planning. Candidates should analyze source and target requirements, determine transformation logic, and map out the data flow before constructing jobs. Metadata management plays a critical role in this planning phase, ensuring consistency across jobs and enabling runtime column propagation. Data lineage and schema propagation are key topics that candidates must grasp, as they influence downstream processes and ensure accurate integration. By focusing on these elements, candidates prepare for both the conceptual and practical aspects of the exam.

Metadata Management and Data Governance

Understanding metadata is crucial for maintaining the integrity of data pipelines. Candidates must be familiar with the framework schema, metadata import and sharing, and managing metadata across multiple jobs. Metadata acts as a blueprint for transformations and job execution, allowing DataStage to manage column propagation and dependencies effectively. The exam tests knowledge of how metadata facilitates integration, supports version control, and maintains consistency across environments.

Data governance and security are intertwined with metadata management. Candidates should know how to implement access controls, manage roles, and protect sensitive information during processing. Encryption and secure data transmission methods are key areas of focus, ensuring that data is handled in compliance with organizational and regulatory standards. By integrating metadata management with security practices, candidates demonstrate their ability to create reliable and compliant data workflows.

System Architecture and Parallel Processing

A strong grasp of system architecture is necessary for understanding how DataStage handles large-scale data operations. The exam evaluates knowledge of server components, client-server interactions, and parallel processing frameworks. Parallelism in DataStage allows multiple data streams to be processed simultaneously, improving performance and efficiency. Candidates should understand partitioning strategies, job sequencing, and the nuances of processing large datasets across distributed environments.

Awareness of the underlying architecture helps candidates optimize job performance. They need to know how to allocate resources effectively, monitor job execution, and identify bottlenecks. Understanding the flow of data through multiple stages and nodes ensures that candidates can troubleshoot issues, balance workloads, and maintain high system performance. Mastery of these architectural principles is a key differentiator for success in the exam and professional practice.

Performance Tuning and Optimization

Performance optimization is a recurring theme in the exam and in real-world applications. Candidates must understand how to fine-tune jobs for minimal execution time and efficient resource use. This includes optimizing database queries, configuring memory and processing parameters, and managing parallel execution settings. Monitoring tools within DataStage provide metrics on job performance, helping identify areas for improvement. Candidates should practice analyzing these metrics and applying corrective actions to optimize throughput.

Optimization extends to storage and network considerations. Efficient data movement requires understanding how links, buffers, and pipelines interact with system resources. Candidates should be able to implement strategies for batch processing, caching, and load balancing to achieve optimal results. The exam evaluates practical knowledge of these optimization techniques, and hands-on experience with job tuning is essential for thorough preparation.

Troubleshooting and Issue Resolution

The ability to troubleshoot effectively separates proficient DataStage professionals from others. Candidates must be able to analyze error logs, understand failure messages, and implement solutions to maintain job continuity. Troubleshooting encompasses job failures, configuration errors, and system performance issues. The exam tests both systematic problem-solving approaches and practical knowledge of common pitfalls.

Candidates are encouraged to practice with real-world scenarios, simulating issues like connectivity problems, data type mismatches, or performance degradation. Developing a methodology for diagnosing problems, testing fixes, and validating results is critical. Troubleshooting exercises also help reinforce theoretical knowledge, ensuring that candidates can apply concepts effectively under exam conditions.
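One of the simulated issues mentioned above, a data type mismatch, can be rehearsed outside DataStage with a small check. The following is a minimal Python sketch, not DataStage code: the column names, the `EXPECTED_TYPES` mapping, and the `find_type_mismatches` helper are all hypothetical and exist only to illustrate the diagnose-then-validate habit.

```python
# Hypothetical expected layout for an incoming record (illustration only).
EXPECTED_TYPES = {"order_id": int, "amount": float, "order_date": str}

def find_type_mismatches(row, expected_types):
    """Return a list of human-readable problems found in one row."""
    problems = []
    for column, expected in expected_types.items():
        if column not in row:
            problems.append(f"{column}: missing")
        elif not isinstance(row[column], expected):
            actual = type(row[column]).__name__
            problems.append(f"{column}: expected {expected.__name__}, got {actual}")
    return problems

good_row = {"order_id": 7, "amount": 19.99, "order_date": "2024-01-31"}
bad_row = {"order_id": "7", "amount": 19.99}

print(find_type_mismatches(good_row, EXPECTED_TYPES))
print(find_type_mismatches(bad_row, EXPECTED_TYPES))
```

Running a check like this before and after a fix gives a simple way to confirm that the correction actually resolved the mismatch.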

Cloud Integration and Virtualization Considerations

Modern DataStage deployments often involve cloud environments and virtualized infrastructure. Candidates must understand how to configure jobs to interact with cloud storage, manage virtualized resources, and optimize performance in hybrid or fully cloud-based systems. This includes awareness of latency, bandwidth limitations, and resource allocation in distributed environments.

The exam evaluates the ability to design jobs and system configurations that leverage cloud capabilities efficiently. Candidates should focus on integration strategies, best practices for cloud security, and maintaining data integrity during transfers. Familiarity with virtualization concepts ensures that candidates can deploy scalable, resilient, and high-performing data pipelines that align with contemporary enterprise requirements.

Security Practices and Compliance Requirements

Security is an integral part of DataStage operations and a focus of the C2090-424 exam. Candidates need to understand how to manage user roles, implement access controls, and ensure data encryption during transit and at rest. Compliance with organizational policies and regulatory standards is emphasized, as mishandling sensitive data can lead to operational and legal risks.

Practical exercises in configuring secure jobs, restricting access to metadata, and implementing audit mechanisms help reinforce security concepts. Candidates should be able to identify potential vulnerabilities, apply preventive measures, and validate compliance measures. Mastery of these practices demonstrates readiness to manage enterprise-level data systems securely and effectively.

Exam Registration and Preparation Strategy

Scheduling the exam requires careful planning. Candidates should select a testing environment, whether an authorized test center or an online proctored exam, ensuring all technical and logistical requirements are met. Accurate registration details and adherence to deadlines reduce stress and enable focused preparation.

A structured preparation strategy involves breaking down topics into manageable sections, combining theory with hands-on practice, and reviewing complex concepts through repeated exercises. Practicing job creation, troubleshooting, and optimization in a controlled environment builds confidence and reinforces understanding. Candidates benefit from tracking progress, identifying weak areas, and focusing study sessions on improving those areas.

Practical Study Techniques

To ensure thorough exam readiness, candidates should integrate multiple learning methods. Reviewing theoretical concepts, building jobs in practice environments, analyzing logs, and performing performance tuning exercises form the foundation of an effective study routine. Scenario-based exercises enhance problem-solving skills and reinforce the application of concepts in real-world contexts.

Time management is also essential during preparation. Candidates should allocate dedicated study sessions for each major topic, alternating between theory and hands-on exercises. Regular review of completed exercises helps consolidate learning, while simulated test scenarios allow candidates to experience exam conditions and refine time management strategies.

Leveraging Hands-On Experience

Hands-on practice is critical for internalizing DataStage concepts. Candidates should focus on creating jobs that integrate multiple sources, handle complex transformations, and implement error handling and recovery strategies. Testing jobs with large datasets helps understand system behavior, identify performance bottlenecks, and optimize execution.

Working with metadata and implementing column propagation strengthens understanding of data lineage and ensures consistent results across jobs. Candidates should also practice configuring security settings, managing access controls, and ensuring data integrity during transfers. This practical experience is invaluable for both exam performance and real-world application.

Building Long-Term Expertise

The C2090-424 exam is not only a certification milestone but also a gateway to long-term professional growth. Candidates who integrate learned concepts into daily practice develop advanced expertise in ETL processes, data integration, and system optimization. Continuous learning and exposure to new scenarios enhance problem-solving abilities and reinforce understanding of core principles.

Developing a habit of regularly revisiting job design techniques, performance tuning strategies, and security practices ensures sustained competence. This approach prepares candidates to handle evolving technologies, new features, and complex enterprise environments. Long-term expertise translates to career growth, leadership opportunities, and the ability to manage sophisticated data integration projects effectively.

Integrating Exam Preparation with Career Goals

The skills gained while preparing for the C2090-424 exam align closely with industry requirements. Candidates develop proficiency in ETL processes, system optimization, cloud integration, and security management. These competencies are directly applicable to roles such as ETL developer, data integration specialist, or technical consultant. Certification validates these abilities, increasing employability and opening pathways for advanced positions.

Candidates benefit from aligning exam preparation with professional objectives. Practicing relevant skills, understanding best practices, and applying knowledge in real-world scenarios ensures readiness not only for the exam but also for ongoing career challenges. Certification serves as a benchmark for expertise and demonstrates a commitment to professional development.

Candidates gain in-depth understanding of job design, metadata management, performance optimization, troubleshooting, cloud integration, security, and hands-on application. Structured study routines, scenario-based exercises, and continuous learning contribute to a strong foundation for both exam success and professional competence. Achieving this certification demonstrates expertise in DataStage v11.3 and equips candidates to manage complex data integration environments with confidence.

Advanced Data Transformation Techniques

One of the most critical aspects of the C2090-424 exam is mastering advanced data transformation techniques within DataStage. Candidates need to understand how to implement complex transformations, manipulate datasets efficiently, and apply conditional logic across multiple stages. This includes working with transformer stages, routine integration, and job parameterization. Conditional expressions and derivations allow jobs to dynamically adjust processing based on input values, improving flexibility and efficiency.

Candidates are expected to grasp runtime column propagation, which enables seamless integration of metadata changes without breaking existing jobs. Proper use of derivations, functions, and lookups ensures data integrity and reduces processing errors. By understanding these transformation techniques, candidates can design scalable, robust, and maintainable jobs that reflect real-world enterprise requirements.
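The idea behind conditional derivations and lookups can be sketched in plain Python. This is an illustration of the logic only, not Transformer stage syntax; the `COUNTRY_NAMES` reference data, the column names, and the 1000 threshold are assumptions made up for the example.

```python
# Hypothetical reference data standing in for a lookup's reference link.
COUNTRY_NAMES = {"US": "United States", "DE": "Germany"}

def derive_row(row):
    """Apply transformer-style derivations to one input row."""
    amount = row["amount"]
    return {
        "customer_id": row["customer_id"],
        # Conditional derivation: classify based on an input value.
        "tier": "HIGH" if amount >= 1000 else "STANDARD",
        # Lookup with an explicit default for unmatched keys.
        "country_name": COUNTRY_NAMES.get(row["country"], "UNKNOWN"),
        # Null handling: replace a missing value before it reaches the target.
        "email": row.get("email") or "n/a",
    }

rows = [
    {"customer_id": 1, "amount": 1500, "country": "US", "email": "a@example.com"},
    {"customer_id": 2, "amount": 200, "country": "FR", "email": None},
]
derived = [derive_row(r) for r in rows]
for out in derived:
    print(out)
```

Giving every lookup and nullable column an explicit default, as in the sketch, is one way to keep unmatched or missing values from silently propagating to the target.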

Job Sequencing and Orchestration

Efficient orchestration of multiple jobs is a core requirement for the exam. Candidates need to know how to manage dependencies, schedule jobs, and handle conditional flows. Job sequences in DataStage allow complex workflows to be executed in a controlled manner. These sequences can include triggers, exception handling, and parallel execution branches to ensure smooth operation across large-scale data pipelines.

Understanding job dependencies and sequencing is essential for minimizing runtime failures and optimizing performance. Candidates should practice creating sequences that handle exceptions gracefully, implement notifications, and maintain audit trails. Mastery of these concepts ensures candidates can manage enterprise workflows effectively while demonstrating the ability to plan, execute, and monitor integrated jobs.
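The dependency and exception-handling behaviour described above can be approximated with a short Python sketch. It is a conceptual model rather than a DataStage sequence: the job names, the `run_sequence` helper, and the "skip downstream jobs after a failure" policy are assumptions chosen for illustration. It relies on `graphlib.TopologicalSorter` from the Python standard library (3.9 or later).

```python
from graphlib import TopologicalSorter

def run_sequence(dependencies, actions):
    """Run jobs in dependency order; skip jobs whose upstream job did not succeed.

    dependencies maps each job name to the set of jobs it depends on.
    actions maps each job name to a zero-argument callable.
    """
    status = {}
    for job in TopologicalSorter(dependencies).static_order():
        upstream = dependencies.get(job, ())
        if any(status[u] != "OK" for u in upstream):
            status[job] = "SKIPPED"
            continue
        try:
            actions[job]()
            status[job] = "OK"
        except Exception as exc:
            status[job] = "FAILED"
            # Stand-in for a notification step (mail, ticket, log entry).
            print(f"notify: {job} failed: {exc}")
    return status

def failing_transform():
    raise ValueError("bad input file")

deps = {"transform": {"extract"}, "load": {"transform"}, "audit": {"extract"}}
actions = {
    "extract": lambda: None,
    "transform": failing_transform,
    "load": lambda: None,
    "audit": lambda: None,
}
status = run_sequence(deps, actions)
print(status)
```

The returned status map doubles as a simple audit trail: it records which jobs ran, which failed, and which were skipped because of an upstream failure.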

Performance Monitoring and Optimization

Optimizing job performance goes beyond simple configuration adjustments. Candidates need to monitor resource utilization, identify bottlenecks, and implement strategies for efficient data processing. Partitioning strategies, buffer management, and memory allocation are critical for ensuring jobs run smoothly under varying data volumes.

Parallelism plays a significant role in performance tuning. Understanding the different partitioning methods, such as round-robin, hash, or range-based partitioning, helps distribute workload evenly across processing nodes. Candidates should also be familiar with optimizing database access, reducing data movement, and implementing incremental loads to enhance job efficiency. Monitoring tools within DataStage provide insights into execution metrics, which can be used to fine-tune job performance and prevent resource contention.
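To make the difference between the partitioning methods concrete, here is a minimal Python sketch of round-robin, hash, and range partitioning over a list of rows. It models the concepts only and does not reflect how the DataStage parallel engine is implemented; the function names, the sample `region` and `id` columns, and the range boundaries are assumptions for the example.

```python
import bisect
import zlib

def round_robin(rows, n):
    """Deal rows to partitions in turn, giving near-equal partition sizes."""
    parts = [[] for _ in range(n)]
    for i, row in enumerate(rows):
        parts[i % n].append(row)
    return parts

def hash_partition(rows, key, n):
    """Send rows with the same key value to the same partition."""
    parts = [[] for _ in range(n)]
    for row in rows:
        # crc32 is stable across runs, unlike Python's built-in hash() for strings.
        idx = zlib.crc32(str(row[key]).encode()) % n
        parts[idx].append(row)
    return parts

def range_partition(rows, key, boundaries):
    """Place rows by sorted boundary values; len(boundaries) + 1 partitions."""
    parts = [[] for _ in range(len(boundaries) + 1)]
    for row in rows:
        parts[bisect.bisect_right(boundaries, row[key])].append(row)
    return parts

regions = ["north", "south", "east"]
rows = [{"id": i, "region": regions[i % 3]} for i in range(10)]

rr = round_robin(rows, 3)
hp = hash_partition(rows, "region", 3)
rp = range_partition(rows, "id", [3, 7])
print([len(p) for p in rr], [len(p) for p in hp], [len(p) for p in rp])
```

Round-robin balances row counts but scatters related rows, while hash partitioning keeps rows with the same key together, which matters for key-based operations such as joins and aggregations. Hash partitioning can produce uneven partitions when key values are skewed.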

Troubleshooting and Error Handling

Troubleshooting is a skill tested extensively in the exam. Candidates must know how to identify the root cause of job failures, interpret error logs, and apply corrective measures. Common issues include connectivity errors, data type mismatches, and transformation logic failures. Developing a systematic approach to troubleshooting ensures that candidates can quickly resolve issues and maintain smooth operations.

Error handling strategies are equally important. Candidates should be able to implement checkpoints, exception stages, and retry mechanisms to ensure that jobs continue running despite temporary failures. Incorporating logging and notifications helps maintain transparency and allows teams to respond promptly to issues. Practicing troubleshooting scenarios enhances problem-solving skills and prepares candidates for both exam questions and real-world challenges.
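The combination of retries and checkpoints can be illustrated with a small Python sketch. This is a generic pattern, not a DataStage feature definition: the `run_with_retry` and `run_steps` helpers, the step names, and the choice to retry only on `ConnectionError` are assumptions made for the example.

```python
import time

def run_with_retry(step, retries=3, delay_seconds=0.0):
    """Call step(); retry on ConnectionError up to `retries` attempts."""
    last_error = None
    for attempt in range(1, retries + 1):
        try:
            return step()
        except ConnectionError as exc:
            last_error = exc
            print(f"attempt {attempt} failed: {exc}")
            time.sleep(delay_seconds)
    raise RuntimeError(f"step failed after {retries} attempts") from last_error

def run_steps(steps, completed):
    """Run (name, callable) steps in order, skipping names already checkpointed."""
    for name, step in steps:
        if name in completed:
            continue  # finished in an earlier run; do not repeat it
        run_with_retry(step)
        completed.add(name)  # record the checkpoint only after success
    return completed

calls = {"n": 0}

def flaky_extract():
    # Fails twice with a temporary error, then succeeds.
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("temporary network error")
    return "done"

steps = [("extract", flaky_extract), ("load", lambda: "ok")]
completed = run_steps(steps, set())
print(completed, calls["n"])
```

Passing the same `completed` set into a second call models a restart: steps that already succeeded are skipped, so only unfinished work is repeated.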

Cloud and Virtual Environment Integration

Modern DataStage environments increasingly rely on cloud infrastructure and virtualized environments. Candidates need to understand how to configure jobs to operate efficiently in these contexts. This includes knowledge of cloud storage interactions, data transfer protocols, and virtual resource allocation. Latency, bandwidth, and storage performance all affect job execution, making it critical to design jobs that optimize resource use while maintaining data integrity.

Integration with cloud services also requires understanding security, compliance, and access control mechanisms. Candidates should be familiar with best practices for encrypting data in transit and at rest, managing user permissions, and monitoring cloud resource utilization. These skills ensure that jobs can be executed reliably in distributed, scalable environments, reflecting the demands of modern enterprise data integration.

Security and Compliance in Data Integration

Ensuring data security and compliance is a fundamental part of managing DataStage jobs. Candidates must understand how to enforce access controls, manage sensitive information, and comply with regulatory standards. Security practices include setting up user roles, restricting metadata access, and implementing encryption protocols. Maintaining audit trails and logging system activity also supports compliance and provides transparency in data operations.

Exam questions often test the ability to design secure workflows that prevent unauthorized access and protect sensitive data. Candidates should be familiar with strategies for managing credentials, masking data, and ensuring secure transfers between sources and targets. By integrating security considerations into job design, candidates demonstrate readiness to manage enterprise-level data operations responsibly.
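As a simple illustration of data masking, the Python sketch below hides all but the last few characters of sensitive values before a row moves downstream. It is a generic example and not a DataStage masking function; the `mask_value` and `mask_row` helpers and the `card_number` column are hypothetical.

```python
def mask_value(value, visible=4, mask_char="*"):
    """Mask all but the last `visible` characters of a string."""
    if len(value) <= visible:
        # Too short to reveal anything safely: mask the whole value.
        return mask_char * len(value)
    return mask_char * (len(value) - visible) + value[-visible:]

def mask_row(row, sensitive_columns):
    """Return a copy of the row with sensitive columns masked."""
    return {
        column: mask_value(str(value)) if column in sensitive_columns else value
        for column, value in row.items()
    }

row = {"customer_id": 42, "card_number": "4111111111111111"}
masked = mask_row(row, {"card_number"})
print(masked)
```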

Leveraging Job Parameters and Reusable Components

Job parameterization is an essential technique for building flexible and reusable DataStage jobs. Parameters allow jobs to be adapted for different environments, datasets, or execution conditions without requiring code changes. Candidates should understand how to define and use job parameters effectively, including environment variables, runtime inputs, and default values.

Reusable components, such as shared routines, templates, and custom stages, help maintain consistency and reduce development time. Candidates are expected to know how to create and integrate these components into workflows, ensuring maintainability and scalability. Understanding how to balance reusability with performance optimization is key for both exam preparation and practical job implementation.
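The way defaults, environment variables, and runtime inputs combine can be sketched in Python. The precedence shown here (runtime input over environment value over default) is an assumption chosen for illustration, not a statement of DataStage's documented behaviour, and the parameter names and the `resolve_parameters` helper are hypothetical.

```python
# Hypothetical design-time defaults for a job's parameters.
DEFAULTS = {"SOURCE_DIR": "/data/in", "TARGET_SCHEMA": "staging", "COMMIT_ROWS": "2000"}

def resolve_parameters(defaults, environ, runtime):
    """Resolve parameter values: runtime input > environment value > default."""
    unknown = set(runtime) - set(defaults)
    if unknown:
        # Reject typos early instead of silently ignoring them.
        raise KeyError(f"unknown parameters: {sorted(unknown)}")
    resolved = dict(defaults)
    for name in defaults:
        if name in environ:
            resolved[name] = environ[name]
    resolved.update(runtime)
    return resolved

environ = {"TARGET_SCHEMA": "prod", "HOME": "/home/etl"}
params = resolve_parameters(DEFAULTS, environ, {"COMMIT_ROWS": "500"})
print(params)
```

Because the job logic only ever reads the resolved values, the same design can run against a different schema or directory by changing inputs rather than the job itself.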

Metadata-Driven Development and Runtime Column Propagation

Metadata-driven development enables dynamic job execution by leveraging metadata definitions to guide transformations and data flow. Candidates should understand how to import, manage, and share metadata across multiple jobs. Runtime column propagation allows jobs to adapt automatically when metadata changes, reducing maintenance overhead and minimizing errors.

This approach is particularly valuable for large-scale integration projects where schema changes are frequent. Candidates should practice implementing metadata-driven jobs that maintain accuracy while adapting to evolving source and target structures. Proficiency in this area ensures that candidates can design flexible, robust, and scalable ETL processes.
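The pass-through behavior behind runtime column propagation can be pictured with a small Python sketch. It only mimics the idea and is not how DataStage implements it; the `transform` function and the column names are hypothetical.

```python
def transform(row, derivations):
    """Apply the derivations the job knows about and pass every other column through."""
    out = dict(row)  # propagate all incoming columns, including ones the job never declared
    for column, derive in derivations.items():
        out[column] = derive(row)
    return out


derivations = {"full_name": lambda r: f"{r['first']} {r['last']}"}

# The source originally delivers two columns ...
old = transform({"first": "Ada", "last": "Lovelace"}, derivations)
# ... and later gains a third one; the transformation is unchanged.
new = transform({"first": "Ada", "last": "Lovelace", "region": "EU"}, derivations)
```

The new `region` column reaches the output without any change to the transformation logic, which is the maintenance benefit described above.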

Monitoring and Logging Strategies

Effective monitoring and logging are critical for operational excellence in DataStage environments. Candidates should understand how to set up job logs, track execution metrics, and analyze performance trends. Logs provide insights into failures, bottlenecks, and resource utilization, enabling proactive issue resolution.

Monitoring strategies include setting thresholds for job completion times, resource consumption, and error rates. By analyzing these metrics, candidates can optimize performance, ensure reliability, and maintain compliance with organizational standards. Familiarity with these practices demonstrates the ability to manage enterprise workloads efficiently.
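A threshold check of this kind might look like the following Python sketch. The `JobRun` record, its fields, and the limits are assumptions for illustration; in practice the figures would come from the job log or an operations console.

```python
from dataclasses import dataclass


@dataclass
class JobRun:
    name: str
    elapsed_seconds: float
    rows_read: int
    rows_rejected: int


def check_thresholds(run, max_seconds, max_reject_rate):
    """Return one alert message per threshold that the run exceeded."""
    alerts = []
    if run.elapsed_seconds > max_seconds:
        alerts.append(
            f"{run.name}: elapsed {run.elapsed_seconds:.0f}s exceeds {max_seconds:.0f}s"
        )
    # Guard against division by zero when a run read no rows.
    reject_rate = run.rows_rejected / run.rows_read if run.rows_read else 0.0
    if reject_rate > max_reject_rate:
        alerts.append(
            f"{run.name}: reject rate {reject_rate:.2%} exceeds {max_reject_rate:.2%}"
        )
    return alerts


slow_run = JobRun("load_orders", elapsed_seconds=950.0, rows_read=10_000, rows_rejected=300)
alerts = check_thresholds(slow_run, max_seconds=900, max_reject_rate=0.01)
```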

Preparing with Structured Study Plans

A structured study plan is vital for C2090-424 exam success. Candidates should break down exam topics into manageable sections, focusing on practical exercises, theoretical understanding, and review sessions. Allocating time for hands-on practice, scenario-based exercises, and performance tuning ensures comprehensive coverage of exam objectives.

Candidates benefit from simulating real-world scenarios to apply concepts in practical contexts. Tracking progress, identifying weak areas, and revisiting complex topics enhances retention and confidence. By following a disciplined study routine, candidates develop both the knowledge and the problem-solving skills required to excel in the exam.

Enhancing Exam Readiness Through Practice

Practice is an integral part of preparation. Candidates should build jobs, execute sequences, and troubleshoot simulated errors to gain practical insights. Hands-on experience reinforces theoretical concepts and prepares candidates to handle unexpected challenges. Performance tuning exercises, cloud integration tasks, and security configurations provide exposure to the full spectrum of exam content.

Structured practice sessions help candidates internalize best practices, understand dependencies, and develop a systematic approach to problem-solving. This preparation not only increases exam confidence but also equips candidates with skills that are directly applicable to professional environments.

Professional Growth and Application

The skills acquired while preparing for the C2090-424 exam extend beyond certification. Candidates develop expertise in ETL processes, metadata management, job orchestration, cloud integration, and security practices. These competencies are directly applicable to roles such as data integration specialist, ETL developer, or technical consultant.

Achieving certification demonstrates proficiency in designing, developing, and managing complex data workflows. Professionals gain credibility, enhance their career prospects, and are better positioned to take on challenging projects that involve enterprise-scale data integration. Continuous practice and exposure to evolving technologies ensure long-term competence and career growth.

Integrating Advanced Concepts with Real-World Application

Mastery of DataStage involves integrating advanced concepts into practical workflows. Candidates should focus on designing jobs that are scalable, maintainable, and optimized for performance. Implementing security measures, metadata-driven processes, and reusable components ensures robust, enterprise-ready solutions.

By applying learned skills to real-world scenarios, candidates enhance problem-solving abilities, develop operational efficiency, and gain confidence in managing large-scale data integration tasks. These experiences reinforce exam preparation and prepare candidates for professional challenges that require a comprehensive understanding of DataStage.

Data Quality and Validation Techniques

Data quality is a cornerstone of effective data integration and plays a significant role in the C2090-424 exam. Candidates must understand how to implement validation rules, enforce data standards, and identify anomalies in data streams. Ensuring accurate, complete, and consistent data is essential for reliable reporting and decision-making. DataStage provides tools to define rules for checking formats, ranges, and relationships between fields.

Validation techniques include using Lookup stages, Filter stages, and Transformer derivations to detect inconsistencies. Candidates should practice implementing error-handling mechanisms, such as reject links, that capture invalid records for review while allowing valid data to flow uninterrupted. Developing a systematic approach to data quality ensures that workflows produce trusted results, which is critical for enterprise environments.
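The pattern of routing invalid records aside while valid ones continue can be sketched in Python as follows. The rules, field names, and the `split_valid` helper are hypothetical, and the sketch assumes date fields arrive as ISO-formatted strings.

```python
import re

DATE = re.compile(r"^\d{4}-\d{2}-\d{2}$")

# One rule per check type: format, range, and relationship between fields.
RULES = {
    "order_date has YYYY-MM-DD format":
        lambda r: bool(DATE.match(str(r.get("order_date", "")))),
    "quantity is between 1 and 1000":
        lambda r: isinstance(r.get("quantity"), int) and 1 <= r["quantity"] <= 1000,
    "ship_date is not before order_date":
        lambda r: str(r.get("ship_date", "")) >= str(r.get("order_date", "")),
}


def split_valid(rows):
    """Return (valid rows, rejects); each reject records which rules failed."""
    valid, rejects = [], []
    for row in rows:
        failed = [name for name, rule in RULES.items() if not rule(row)]
        if failed:
            rejects.append({"row": row, "failed_rules": failed})
        else:
            valid.append(row)
    return valid, rejects


rows = [
    {"order_date": "2024-01-05", "ship_date": "2024-01-07", "quantity": 3},
    {"order_date": "05/01/2024", "ship_date": "2024-01-07", "quantity": 3},
    {"order_date": "2024-01-05", "ship_date": "2024-01-02", "quantity": 0},
]
valid, rejects = split_valid(rows)
```

Keeping the failed rule names with each rejected row makes the later review step much faster than a bare reject file.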

Advanced Job Design and Modularization

Designing complex jobs efficiently is key to both exam success and professional application. Candidates should focus on modular job design, breaking down large processes into smaller, reusable components. Modularization improves maintainability, simplifies debugging, and enhances reusability across multiple projects.

Key considerations include designing stages for reusability, parameterizing jobs for flexibility, and separating transformation logic from workflow control. By creating a library of tested components, candidates can streamline development, reduce errors, and enhance scalability. Understanding these design principles ensures jobs remain adaptable to changing requirements and simplifies updates in enterprise systems.

Handling Large Data Volumes and High Throughput

Working with large datasets is a common scenario for DataStage professionals. Candidates need to understand strategies for efficient processing of high-volume data without compromising performance. Techniques include optimizing partitioning methods, tuning buffers, and leveraging parallelism effectively.

Partitioning strategies such as round-robin, hash, modulus, and range partitioning spread the workload across nodes to avoid bottlenecks. Round-robin distributes rows evenly regardless of content, while key-based methods such as hash keep rows with the same key value on the same node, which key-dependent operations such as joins, aggregations, and duplicate removal require. Candidates should also be familiar with optimizing database access, minimizing unnecessary data movement, and implementing incremental loads to reduce processing times. By mastering high-volume processing techniques, candidates demonstrate readiness for enterprise-scale projects and exam questions focused on performance optimization.
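The difference between round-robin and hash partitioning is easy to see in a small Python sketch. This is a conceptual illustration, not the parallel engine's implementation; the function names and the sample rows are made up, and `zlib.crc32` merely stands in for whatever hash function an engine uses.

```python
import zlib


def round_robin_partition(rows, nodes):
    """Deal rows out in turn, so partition sizes differ by at most one."""
    parts = [[] for _ in range(nodes)]
    for i, row in enumerate(rows):
        parts[i % nodes].append(row)
    return parts


def hash_partition(rows, nodes, key):
    """Send every row with the same key value to the same partition."""
    parts = [[] for _ in range(nodes)]
    for row in rows:
        h = zlib.crc32(str(row[key]).encode("utf-8"))
        parts[h % nodes].append(row)
    return parts


rows = [{"cust": c, "amt": i} for i, c in enumerate("ABCABCAB")]
rr = round_robin_partition(rows, 3)
hp = hash_partition(rows, 3, "cust")
```

Round-robin gives balanced partitions but scatters each customer across nodes; hash partitioning keeps each customer together but can produce uneven partitions when some keys are much more frequent than others.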

Metadata Management and Impact Analysis

Managing metadata effectively is critical for maintaining clarity and consistency across jobs. Candidates should understand how to import, export, and share metadata, as well as track changes over time. Metadata-driven development allows jobs to adapt to schema changes automatically, reducing maintenance effort and error potential.

Impact analysis is another essential concept. Candidates need to assess how changes in source or target systems affect dependent jobs and workflows. By conducting thorough impact assessments, they can prevent disruptions and ensure continuity of operations. Mastery of metadata management and impact analysis prepares candidates to handle complex, evolving environments and demonstrates analytical thinking valued in enterprise settings.
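At its core, impact analysis is a walk over a dependency graph. The Python sketch below assumes a hypothetical, hand-written dependency map; in a real environment this information would come from the metadata repository rather than a dictionary.

```python
from collections import deque

# Hypothetical dependency map: asset -> assets that read from it.
DOWNSTREAM = {
    "table:CUSTOMER": ["job:load_customer_dim"],
    "job:load_customer_dim": ["table:DIM_CUSTOMER"],
    "table:DIM_CUSTOMER": ["job:build_sales_mart", "job:customer_report"],
    "job:build_sales_mart": ["table:SALES_MART"],
}


def impacted_assets(changed, downstream):
    """Breadth-first walk collecting everything downstream of the changed asset."""
    seen, queue = set(), deque([changed])
    while queue:
        current = queue.popleft()
        for dependent in downstream.get(current, []):
            if dependent not in seen:
                seen.add(dependent)
                queue.append(dependent)
    return sorted(seen)


affected = impacted_assets("table:DIM_CUSTOMER", DOWNSTREAM)
```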

Cloud and Hybrid Deployment Strategies

Modern data integration increasingly involves hybrid environments, where on-premises systems coexist with cloud platforms. Candidates need to understand deployment strategies that optimize performance and maintain security across these environments. This includes knowledge of data transfer protocols, cloud storage integration, and resource allocation for virtualized environments.

Candidates should practice designing jobs that accommodate cloud-specific constraints, such as network latency and variable throughput. Security considerations, including encryption, user access management, and compliance adherence, are also essential. Understanding cloud deployment strategies ensures that candidates can implement scalable, reliable solutions aligned with enterprise requirements and exam expectations.

Security Best Practices for Data Integration

Security is a critical component of managing enterprise data workflows. Candidates need to understand how to configure access controls, manage credentials, and secure sensitive information. Encryption protocols, role-based access, and logging practices help maintain data confidentiality and integrity.

Security measures should be integrated into job design, ensuring that sensitive data is protected during extraction, transformation, and loading processes. Candidates must also be familiar with audit trails and compliance reporting to satisfy regulatory requirements. By incorporating security best practices, candidates demonstrate the ability to manage enterprise data responsibly and meet industry standards.

Troubleshooting Complex Scenarios

Complex scenarios often arise when multiple jobs interact or when unexpected data anomalies occur. Candidates should develop systematic troubleshooting approaches, starting with log analysis, error tracing, and root cause identification. Understanding common failure points, such as connectivity issues, data type mismatches, and transformation logic errors, is essential.

Advanced troubleshooting involves analyzing job sequences, dependencies, and execution logs to pinpoint issues. Candidates should also practice implementing retry mechanisms, error notifications, and recovery strategies to maintain workflow continuity. Proficiency in troubleshooting ensures that candidates can handle real-world challenges and perform effectively under exam conditions.
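A retry mechanism for transient failures can be sketched as below. This is a generic Python illustration rather than a DataStage feature; `run_with_retry`, the delay values, and the simulated `flaky_extract` task are assumptions. The sleep function is injectable so the example runs without actually waiting.

```python
import time


def run_with_retry(task, attempts=3, base_delay=1.0, sleep=time.sleep):
    """Run task, retrying connection errors with exponentially growing delays."""
    for attempt in range(1, attempts + 1):
        try:
            return task()
        except ConnectionError:
            if attempt == attempts:
                raise  # out of attempts: surface the failure for notification
            sleep(base_delay * 2 ** (attempt - 1))


# Simulated task that fails twice before succeeding.
calls = []


def flaky_extract():
    calls.append(1)
    if len(calls) < 3:
        raise ConnectionError("source not reachable")
    return "extracted"


delays = []
result = run_with_retry(flaky_extract, sleep=delays.append)
```

Only the transient error type is retried; a data type mismatch or a logic error would fail the same way on every attempt, so retrying it would just delay the diagnosis.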

Performance Tuning and Optimization

Performance tuning is a recurring theme in the exam, requiring candidates to balance resource utilization with processing efficiency. Techniques include optimizing parallelism, managing memory and buffer allocations, and fine-tuning stage properties. Candidates must understand how to measure job performance, identify bottlenecks, and implement improvements.

Optimizing database access, minimizing data movement, and leveraging partitioning strategies contribute to faster execution and reduced system load. By focusing on performance tuning, candidates enhance their ability to deliver efficient, scalable solutions. Hands-on practice with tuning exercises is crucial for both exam preparation and professional competency.

Job Scheduling and Workflow Automation

Scheduling jobs and automating workflows are essential skills for managing enterprise environments. Candidates should understand how to configure schedules, handle dependencies, and implement conditional execution paths. Automation ensures timely data processing and reduces manual intervention, improving efficiency and reliability.

Understanding triggers, notifications, and exception handling allows candidates to design robust workflows that can respond dynamically to changing conditions. Proficiency in scheduling and automation ensures that jobs execute consistently, meeting operational requirements and exam objectives. Candidates who master these concepts demonstrate readiness to manage enterprise workloads effectively.
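Dependency handling comes down to running each job only after its prerequisites have finished. The following Python sketch uses the standard library's `graphlib` module (Python 3.9 or later) to derive a valid order; the job names and the dependency map are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical map: job -> jobs that must finish first.
DEPENDS_ON = {
    "extract_orders": set(),
    "extract_customers": set(),
    "build_staging": {"extract_orders", "extract_customers"},
    "load_warehouse": {"build_staging"},
    "send_report": {"load_warehouse"},
}


def execution_order(depends_on):
    """Return one order in which every job runs after its prerequisites."""
    return list(TopologicalSorter(depends_on).static_order())


order = execution_order(DEPENDS_ON)
```

If the dependencies contain a cycle, `static_order()` raises `graphlib.CycleError`, which is a useful early check before a schedule is deployed.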

Leveraging Practice and Simulation for Exam Preparation

Structured practice and simulation are key to mastering the exam content. Candidates should engage in hands-on exercises that mimic real-world scenarios, such as building complex jobs, configuring sequences, and troubleshooting errors. Simulated environments provide a safe space to experiment, learn from mistakes, and reinforce understanding.

Practice sessions should cover advanced transformation techniques, metadata management, job orchestration, cloud integration, and performance optimization. By repeating these exercises, candidates develop familiarity with common challenges and gain confidence in applying concepts under exam conditions. Simulation-based preparation ensures readiness for both theoretical questions and practical problem-solving tasks.

Professional Benefits and Career Advancement

Achieving the C2090-424 certification demonstrates proficiency in DataStage and positions professionals for a wide range of roles, including data integration specialist, ETL developer, and systems analyst. Certified individuals are recognized for their ability to design, develop, and manage complex data workflows efficiently and securely.

Certification enhances career prospects by validating technical skills, improving credibility, and opening opportunities for higher-level responsibilities. Employers value certified professionals for their ability to optimize processes, troubleshoot issues, and implement scalable solutions. This recognition translates into potential salary growth, leadership opportunities, and participation in strategic projects that influence organizational success.

Continuous Learning and Skill Development

Certification is not the endpoint but a milestone in continuous professional development. Candidates are encouraged to stay updated on evolving technologies, new DataStage features, and industry best practices. Ongoing learning ensures that skills remain relevant and adaptable to changing business needs.

Engaging in advanced projects, exploring emerging tools, and integrating new methodologies into workflows enhances expertise and practical understanding. Continuous improvement strengthens problem-solving capabilities, increases operational efficiency, and maintains professional relevance in a rapidly evolving technology landscape.

Integrating Best Practices into Daily Workflows

Mastery of exam topics translates directly into everyday work practices. Candidates should apply best practices in job design, security, performance optimization, and cloud integration to daily operations. This practical application ensures that workflows are efficient, reliable, and maintainable.

Following structured approaches to data transformation, error handling, metadata management, and automation fosters consistency and minimizes operational risk. By internalizing these principles, professionals not only excel in the exam but also contribute to higher-quality, more resilient enterprise systems.

Real-World Application of Skills

The competencies gained from preparing for the C2090-424 exam are directly applicable in real-world data integration projects. Professionals can design scalable ETL processes, optimize resource usage, ensure security compliance, and troubleshoot complex issues effectively.

Applying these skills in practical contexts reinforces theoretical knowledge, develops analytical thinking, and enhances the ability to manage enterprise data operations efficiently. This combination of technical expertise and applied experience positions professionals to excel in both certification assessments and professional roles.

Advanced Data Integration Techniques

A core aspect of mastering the C2090-424 exam is understanding advanced data integration techniques. Candidates should focus on integrating diverse data sources, including relational databases, flat files, APIs, and cloud storage systems. Handling heterogeneous data requires knowledge of connectors, stages, and transformations that allow seamless data flow between systems.

Advanced integration also involves data aggregation, merging, and cleansing to ensure consistency across multiple inputs. Understanding how to design pipelines that accommodate different data formats and schema changes is crucial. Candidates should practice creating workflows that consolidate and transform data efficiently, enabling analytics-ready datasets.

Complex Job Orchestration

Job orchestration becomes essential as projects scale in complexity. Candidates must be able to sequence jobs with dependencies, conditional execution paths, and error-handling mechanisms. Orchestrating jobs ensures that workflows execute in the correct order, with appropriate checkpoints and notifications for failures.

Advanced orchestration techniques include looping, branching, and parallel execution of jobs. Candidates should understand how to implement checkpoints and recovery points, so that a restarted sequence skips the activities that already completed instead of rerunning the entire workflow. Mastery of job orchestration demonstrates an ability to manage enterprise-level workflows efficiently.
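The restart behavior that checkpoints provide can be illustrated with a short Python sketch. It is a conceptual model only, not how sequence jobs store their state; `run_sequence` and the JSON checkpoint file are assumptions for the example.

```python
import json
from pathlib import Path


def run_sequence(steps, checkpoint_file):
    """Run named steps in order, skipping those recorded as done by an earlier run."""
    path = Path(checkpoint_file)
    done = set(json.loads(path.read_text())) if path.exists() else set()
    for name, action in steps:
        if name in done:
            continue  # completed before the failure, so do not rerun
        action()  # if this raises, the step is not recorded and runs again on restart
        done.add(name)
        path.write_text(json.dumps(sorted(done)))
    # Clean finish: remove the checkpoint so the next run starts from the top.
    path.unlink(missing_ok=True)
```

For example, if the second of three steps fails, calling `run_sequence` again with the same checkpoint file skips the first step and resumes at the second.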

Real-Time Data Processing

The increasing demand for near-instantaneous insights makes real-time data processing a critical skill. Candidates should understand the fundamentals of streaming data, message queues, and event-driven processing within DataStage. Real-time integration allows organizations to respond quickly to operational events and business intelligence needs.

Practices include configuring continuous data capture, processing streaming events, and updating target systems in real time. Understanding latency, throughput, and concurrency considerations helps candidates design reliable and responsive workflows. Real-time processing knowledge strengthens both exam readiness and practical application in modern enterprise environments.

Advanced Performance Optimization

Performance optimization is not just about faster processing but also about resource efficiency. Candidates should focus on tuning job execution through buffer management, partitioning strategies, and efficient stage configurations. Knowing how to monitor performance metrics, identify bottlenecks, and apply corrective measures is essential.

Advanced optimization includes balancing workloads across multiple nodes, minimizing disk I/O, and optimizing database interactions. Candidates should also understand the impact of job design choices on system resources, ensuring that large-scale workflows run smoothly. Effective optimization techniques enhance reliability, scalability, and exam preparedness.

Cloud-Based Workflow Design

As cloud adoption increases, understanding cloud-based workflow design is essential. Candidates must grasp how to deploy DataStage jobs in hybrid or fully cloud environments. This involves knowledge of cloud storage, virtualized compute resources, and network configurations to ensure smooth data flow.

Key aspects include data transfer strategies, encryption, access control, and integration with cloud-native services. Candidates should practice designing workflows that handle varying cloud resource availability and minimize costs while maintaining performance. Cloud-based design knowledge demonstrates readiness for modern enterprise deployments and aligns with exam expectations.

Advanced Security Practices

Securing data across complex workflows requires more than basic access control. Candidates need to implement encryption for data at rest and in transit, manage user credentials, and enforce role-based access across all stages. Logging and audit trails should be configured to track data access and modifications.

Security considerations also include compliance with regulations and organizational policies. Understanding potential vulnerabilities, implementing safeguards, and monitoring job execution ensures robust protection. Candidates who can demonstrate secure data handling practices show proficiency in enterprise-grade solutions and readiness for the exam.

Integration Testing and Quality Assurance

Testing and validation are critical for ensuring that workflows perform as expected. Candidates should focus on unit testing individual jobs, integration testing sequences of jobs, and end-to-end testing of full data pipelines. Quality assurance ensures accuracy, completeness, and reliability of the processed data.

Techniques include verifying transformation logic, checking data consistency, and simulating failure scenarios. Automated testing strategies and validation checks reduce the risk of errors in production. Strong testing skills indicate readiness to handle real-world projects and align with exam objectives.
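One simple consistency check is a source-to-target reconciliation. The Python sketch below compares row counts, keys, and a control total; the `reconcile` helper and the column names are hypothetical, and amounts are kept as integers to avoid floating-point noise.

```python
def reconcile(source_rows, target_rows, key, amount):
    """Compare row counts, key coverage, and a control total between two row sets."""
    source_keys = {r[key] for r in source_rows}
    target_keys = {r[key] for r in target_rows}
    return {
        "row_count_matches": len(source_rows) == len(target_rows),
        "missing_keys": sorted(source_keys - target_keys),
        "amount_difference": sum(r[amount] for r in target_rows)
        - sum(r[amount] for r in source_rows),
    }


source = [{"id": 1, "cents": 500}, {"id": 2, "cents": 250}, {"id": 3, "cents": 100}]
target = [{"id": 1, "cents": 500}, {"id": 2, "cents": 250}]
report = reconcile(source, target, key="id", amount="cents")
```

A check like this can run as the last step of a test pipeline, so that a dropped or duplicated row is caught before the load is signed off.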

Troubleshooting and Problem Resolution

Troubleshooting in advanced scenarios requires methodical approaches. Candidates must be able to diagnose issues in multi-job sequences, complex transformations, and large data volumes. Techniques include analyzing logs, monitoring performance metrics, and isolating stages that cause failures.

Understanding dependencies, concurrency issues, and data flow intricacies allows candidates to identify root causes effectively. Implementing retry mechanisms, error handling, and alerts ensures continuity of operations. Mastery of troubleshooting is essential for both certification success and practical application in enterprise environments.

Monitoring and Maintenance of Workflows

Ongoing monitoring ensures that data pipelines continue to function optimally. Candidates should be familiar with setting up alerts, dashboards, and performance metrics to track job execution and detect anomalies early. Regular maintenance includes updating job configurations, validating metadata, and performing system health checks.

By implementing proactive monitoring and maintenance strategies, candidates can ensure workflows remain efficient and reliable over time. This knowledge demonstrates the ability to manage enterprise-grade data operations effectively and meets the practical demands reflected in the exam.

Advanced Metadata and Lineage Management

Metadata and data lineage management provide transparency and traceability across workflows. Candidates must understand how to track data sources, transformations, and outputs, maintaining detailed lineage information. This ensures accountability, facilitates impact analysis, and supports regulatory compliance.

Effective metadata management enables reuse of components, easier troubleshooting, and quicker adaptation to schema changes. Candidates should practice documenting and utilizing metadata to maintain clarity and consistency across complex projects. Proficiency in this area is highly valued both professionally and in exam scenarios.

Professional Growth Through Applied Knowledge

Passing the C2090-424 exam equips professionals with advanced skills in DataStage development, job orchestration, performance optimization, security, and cloud integration. These competencies directly translate to enhanced career opportunities, including roles as data integration specialists, ETL developers, and enterprise solution architects.

The knowledge gained enables professionals to design scalable and efficient workflows, handle large-scale data processing, ensure data security, and maintain high-quality standards in complex environments. Employers recognize the practical expertise demonstrated through certification, leading to improved credibility, higher responsibility, and potential salary growth.

Long-Term Skill Development

Certification encourages ongoing professional growth. Candidates are motivated to stay current with updates, new features, and emerging best practices in data integration. Continuous learning ensures skills remain relevant in evolving technology landscapes, allowing professionals to tackle increasingly complex projects with confidence.

Ongoing development includes exploring advanced transformations, experimenting with cloud-native integrations, refining automation strategies, and optimizing high-volume workflows. This long-term skill-building approach reinforces knowledge, improves problem-solving ability, and sustains professional advancement.

Applying Certification Knowledge in Real Projects

Knowledge acquired through exam preparation has direct applications in real-world projects. Professionals can implement complex data pipelines, perform real-time integration, optimize performance, enforce security standards, and maintain metadata across multiple systems. These capabilities enable efficient, reliable, and scalable enterprise data operations.

By applying theoretical knowledge practically, professionals gain confidence, refine workflows, and adapt solutions to unique business requirements. This integration of certification knowledge into real projects enhances professional credibility and demonstrates readiness to manage enterprise-scale data integration challenges.

Conclusion

Successfully preparing for the C2090-424 exam requires a thorough understanding of advanced data integration, job orchestration, real-time processing, and performance optimization. Candidates must be able to design workflows that efficiently handle diverse data sources, implement robust error handling, and maintain high levels of security and compliance. Mastery of cloud-based workflow design and metadata management ensures that candidates can manage complex, enterprise-scale environments with confidence.

Beyond technical knowledge, the exam emphasizes practical skills such as troubleshooting, monitoring, and maintaining workflows. These competencies ensure that candidates are not only able to pass the exam but also apply their knowledge effectively in real-world projects. The ability to implement, optimize, and secure data pipelines is critical for meeting organizational objectives and supporting data-driven decision-making.

Certification in this domain demonstrates proficiency and reliability, enhancing professional credibility and opening opportunities for career advancement. Candidates gain a competitive edge, enabling them to contribute to strategic initiatives, lead complex projects, and drive efficiency within their organizations. The knowledge acquired also supports long-term skill development, encouraging continuous learning and adaptation to emerging technologies.

Ultimately, preparing for and passing the C2090-424 exam equips professionals with both the theoretical understanding and practical expertise required to excel in data integration roles. The comprehensive preparation process fosters confidence, sharpens analytical and problem-solving abilities, and positions candidates for success in managing enterprise-level data environments efficiently and effectively.
