
Ultimate Guide: Top 7 Tips for Preparing for the AWS Certified SysOps Administrator Associate Exam

Amazon Web Services (AWS) is the market leader in cloud infrastructure and has changed how organizations meet their technology needs. For aspiring cloud professionals who want to establish their credentials in this field, AWS offers a certification pathway that validates expertise at several levels. The framework has four tiers: Foundational, Associate, Professional, and Specialty.

At the associate level, three certifications have long formed the core of AWS competency validation: Solutions Architect Associate, Developer Associate, and SysOps Administrator Associate. Of these, the SysOps Administrator Associate exam is widely regarded as the most demanding for system administrators and operations professionals.

The SysOps Administrator certification distinguishes itself through its emphasis on operational excellence, deployment strategies, configuration management, and comprehensive service administration within cloud environments. Unlike its counterparts that focus primarily on design principles or application development methodologies, this certification demands profound understanding of service implementation, monitoring techniques, troubleshooting procedures, and performance optimization strategies.

This exhaustive examination evaluates candidates' capabilities in managing complex cloud infrastructures, implementing automated deployment processes, configuring monitoring solutions, and maintaining operational stability across distributed systems. The assessment encompasses scenario-based questioning that mirrors real-world challenges faced by system administrators in enterprise environments.

The SOA-C02 version of the exam added hands-on exam labs to the multiple-choice format: candidates worked in live AWS environments to configure services, troubleshoot issues, and implement solutions under timed conditions, reflecting the day-to-day responsibilities of SysOps professionals. AWS paused the exam labs in March 2023, so confirm in the current exam guide whether labs are part of the version you will sit.

Understanding the AWS Certification Ecosystem and Its Strategic Importance

Amazon Web Services has established itself as the predominant force in cloud computing, commanding significant market share and driving innovation across numerous industries. The certification program represents AWS's commitment to maintaining high standards of professional competency while addressing the growing demand for skilled cloud practitioners.

The foundational level serves as an entry point for individuals new to cloud computing concepts, providing essential knowledge about AWS services, pricing models, security fundamentals, and basic architectural principles. This level establishes the groundwork for more advanced certifications and helps professionals understand the broader ecosystem of cloud technologies.

Associate-level certifications build upon foundational knowledge by introducing deeper technical concepts, practical implementation strategies, and service-specific expertise. These credentials validate professionals' ability to design, deploy, and maintain AWS solutions effectively while adhering to best practices and security guidelines.

Professional-level certifications represent the pinnacle of AWS expertise, requiring extensive hands-on experience, advanced architectural understanding, and demonstrated ability to solve complex business challenges through innovative cloud solutions. These certifications are typically pursued by senior engineers, architects, and technical leaders.

Specialty certifications focus on specific domains such as machine learning, database management, security, networking, or data analytics. These targeted credentials allow professionals to demonstrate specialized expertise in rapidly evolving technological areas.

The SysOps Administrator Associate certification occupies a unique position within this framework, bridging the gap between fundamental cloud knowledge and advanced operational expertise. It validates professionals' ability to manage AWS environments effectively, ensuring optimal performance, security, and cost efficiency.

Organizations increasingly recognize the value of certified AWS professionals, often requiring specific certifications for employment opportunities or client engagements. The credential serves as tangible evidence of technical competency and commitment to professional development within the cloud computing domain.

Industry salary surveys regularly report that AWS-certified professionals earn more than their non-certified peers. The certification opens doors to career opportunities across industries and regions, reflecting the global adoption of AWS services.

Comprehensive Analysis of Examination Structure and Requirements

The AWS Certified SysOps Administrator Associate examination represents a meticulously crafted assessment designed to evaluate comprehensive operational knowledge across multiple service domains. The current examination format incorporates both traditional multiple-choice questions and innovative hands-on laboratory scenarios, creating a holistic evaluation framework.

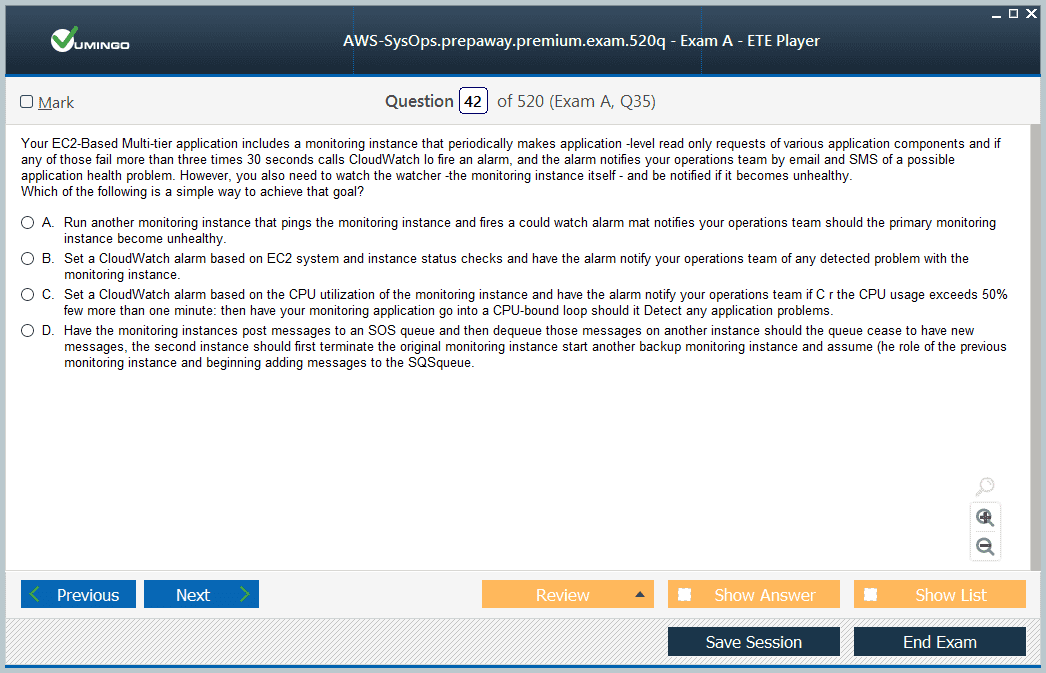

Traditional multiple-choice segments assess theoretical understanding, best practices knowledge, and scenario-based decision-making capabilities. These questions often present complex operational challenges requiring candidates to select optimal solutions from multiple viable alternatives. The questioning methodology emphasizes practical application rather than memorization, demanding deep comprehension of service interactions and dependencies.

Hands-on laboratory components require candidates to perform actual configuration tasks within live AWS environments. These practical exercises simulate real-world operational scenarios, testing candidates' ability to navigate the management console, configure services correctly, implement monitoring solutions, and troubleshoot common issues under time constraints.

The examination blueprint defines the knowledge domains and their relative weighting within the overall assessment. Monitoring, logging, and remediation typically make up the largest portion of the exam, reflecting their importance in operational roles. Configuration management and infrastructure automation form another significant component, reflecting the shift toward infrastructure as code and automated deployment.

High availability and business continuity form another crucial examination area, testing candidates' understanding of disaster recovery planning, backup strategies, fault-tolerant design, and service redundancy. Security and compliance considerations permeate all examination domains, reflecting AWS's emphasis on maintaining a strong security posture across every operational activity.

Networking concepts represent a fundamental requirement, encompassing virtual private cloud configuration, subnet design, routing protocols, load balancing strategies, and content delivery networks. Candidates must demonstrate proficiency in designing secure, scalable network architectures that support diverse application requirements.
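To make subnet design concrete, here is a minimal Python sketch that uses the standard-library `ipaddress` module to carve equal-size subnets out of a VPC CIDR, one per tier and Availability Zone. The `10.0.0.0/16` range, the /24 subnet size, the AZ names, and the tier names are illustrative assumptions. The one AWS-specific detail is that every VPC subnet loses five addresses (the first four and the last) to AWS reservations.

```python
import ipaddress

# AWS reserves the first four addresses and the last address in every VPC subnet.
AWS_RESERVED_PER_SUBNET = 5


def plan_subnets(vpc_cidr, new_prefix, azs, tiers):
    """Assign one subnet per (tier, AZ) pair, carved sequentially from the VPC CIDR."""
    vpc = ipaddress.ip_network(vpc_cidr)
    blocks = list(vpc.subnets(new_prefix=new_prefix))
    needed = len(azs) * len(tiers)
    if needed > len(blocks):
        raise ValueError(f"{vpc_cidr} fits only {len(blocks)} /{new_prefix} subnets, need {needed}")
    pool = iter(blocks)
    return {(tier, az): next(pool) for tier in tiers for az in azs}


def usable_hosts(subnet):
    """Addresses left for network interfaces after AWS's per-subnet reservations."""
    return subnet.num_addresses - AWS_RESERVED_PER_SUBNET


# Illustrative layout: public and private tiers spread across two AZs.
plan = plan_subnets("10.0.0.0/16", 24, ["us-east-1a", "us-east-1b"], ["public", "private"])
for (tier, az), subnet in plan.items():
    print(f"{tier:<8} {az:<11} {subnet}  usable={usable_hosts(subnet)}")
```

Allocating subnets sequentially keeps the layout predictable; leaving unused blocks at the end of the range preserves room for additional AZs or tiers later.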

Storage and data management topics cover various storage services, backup methodologies, data lifecycle policies, and performance optimization techniques. Understanding appropriate storage selection criteria, cost optimization strategies, and data protection mechanisms becomes essential for successful examination completion.
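Data lifecycle policies are easiest to reason about as configuration. The sketch below builds an S3 lifecycle rule as a plain Python dictionary in the shape that S3's lifecycle API accepts (for example, boto3's `put_bucket_lifecycle_configuration(Bucket=..., LifecycleConfiguration=...)`). The `logs/` prefix and the 30/90/365-day schedule are assumptions for illustration, not recommendations.

```python
import json


def lifecycle_rule(prefix, ia_days=30, glacier_days=90, expire_days=365):
    """One S3 lifecycle rule: Standard, then Standard-IA, then Glacier, then delete."""
    # S3 only transitions objects to STANDARD_IA once they are at least 30 days old.
    # Requiring each later step to come strictly after the previous one is this sketch's own check.
    if not (30 <= ia_days < glacier_days < expire_days):
        raise ValueError("expected 30 <= ia_days < glacier_days < expire_days")
    return {
        "ID": f"tier-{prefix.strip('/') or 'all'}",
        "Status": "Enabled",
        "Filter": {"Prefix": prefix},
        "Transitions": [
            {"Days": ia_days, "StorageClass": "STANDARD_IA"},
            {"Days": glacier_days, "StorageClass": "GLACIER"},
        ],
        "Expiration": {"Days": expire_days},
    }


# Shape of the LifecycleConfiguration argument; no AWS call is made here.
config = {"Rules": [lifecycle_rule("logs/")]}
print(json.dumps(config, indent=2))
```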

The examination duration typically spans three hours, providing adequate time for both multiple-choice responses and laboratory completion. However, effective time management becomes crucial given the complexity of hands-on scenarios and the detailed analysis required for challenging questions.

Recent examination statistics indicate that approximately 65-70% of first-time candidates achieve passing scores, highlighting the rigorous nature of the assessment while demonstrating that thorough preparation leads to successful outcomes. The examination undergoes regular updates to reflect evolving AWS services and industry best practices, ensuring continued relevance and accuracy.

Foundation Building and Official Resource Utilization

The journey toward AWS Certified SysOps Administrator Associate certification begins with comprehensive engagement with authoritative documentation sources. Official AWS resources provide the most accurate, up-to-date, and examination-relevant information available to candidates preparing for this challenging assessment.

Amazon maintains extensive documentation repositories covering every aspect of their cloud services ecosystem. These resources undergo continuous updates to reflect service enhancements, feature additions, and evolving best practices. For SysOps certification candidates, these materials serve as the primary knowledge foundation upon which all additional learning builds.

The certification preparation homepage aggregates essential information across all AWS credential programs, providing centralized access to examination guides, study recommendations, and preparation strategies. This comprehensive portal enables candidates to understand the complete certification pathway while focusing specifically on SysOps Administrator requirements.

Dedicated SysOps Administrator certification pages contain detailed examination blueprints, prerequisite recommendations, and scoring methodologies. These official sources eliminate ambiguity regarding examination expectations while providing clear guidance on preparation strategies and timeline recommendations.

Official documentation distinguishes itself through its authoritative nature, technical accuracy, and direct alignment with examination objectives. Unlike third-party resources that may contain outdated information or interpretative variations, AWS documentation represents the definitive source for service capabilities, configuration procedures, and operational best practices.

The documentation architecture follows logical service categorization, enabling systematic exploration of related technologies and their interconnections. This organizational approach facilitates comprehensive understanding of service dependencies and integration possibilities, crucial knowledge areas for SysOps professionals.

Service-specific documentation sections provide exhaustive coverage of configuration options, operational procedures, monitoring capabilities, and troubleshooting methodologies. These detailed resources enable deep technical understanding necessary for successful examination performance and practical professional application.

Regular documentation updates reflect rapid service evolution and feature enhancement cycles characteristic of cloud computing platforms. Candidates must remain current with these changes to ensure their preparation aligns with the most recent examination content and service capabilities.

Decoding Examination Blueprints and Learning Objectives

The AWS Certified SysOps Administrator Associate examination blueprint serves as the authoritative guide for understanding assessment scope, domain weighting, and specific knowledge requirements. This meticulously crafted document outlines the comprehensive skill set expected from certified professionals and provides strategic direction for focused preparation efforts.

Examination domains represent distinct knowledge areas that collectively encompass the full spectrum of SysOps Administrator responsibilities. Each domain carries specific weighting percentages that indicate their relative importance within the overall assessment framework. Understanding these weightings enables candidates to allocate study time proportionally and prioritize high-impact areas.
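Proportional allocation is simple arithmetic: each domain receives total hours × (domain weight ÷ sum of weights). The Python sketch below applies it. The weights are illustrative placeholders, so substitute the percentages published in the current exam guide. The 80-hour budget is also an assumption.

```python
def allocate_hours(weights, total_hours):
    """Split total study hours across domains in proportion to their exam weights."""
    total_weight = sum(weights.values())
    return {domain: round(total_hours * w / total_weight, 1) for domain, w in weights.items()}


# Illustrative weights (percent); replace them with the figures in the current exam guide.
weights = {
    "Monitoring, logging, and remediation": 20,
    "Configuration management and automation": 18,
    "High availability and business continuity": 16,
    "Security and compliance": 16,
    "Networking": 18,
    "Cost optimization": 12,
}
hours = allocate_hours(weights, total_hours=80)
for domain, h in hours.items():
    print(f"{h:5.1f} h  {domain}")
```

Normalizing by the sum of weights means the function still works if the weights you enter do not add up to exactly 100.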

The monitoring, logging, and remediation domain typically commands the largest portion of examination focus, reflecting its central importance in operational roles. This area encompasses service health monitoring, log analysis, alert configuration, automated remediation processes, and performance optimization techniques. Candidates must demonstrate proficiency in implementing comprehensive monitoring solutions that provide actionable insights into system behavior and performance characteristics.

Configuration management and infrastructure automation represent another substantial examination component, emphasizing modern operational practices and efficiency improvements through automation. This domain covers infrastructure as code implementations, configuration management tools, automated deployment processes, and change management procedures.

High availability and business continuity planning constitute critical knowledge areas that test candidates' understanding of resilience design principles, disaster recovery strategies, backup methodologies, and fault tolerance implementation. These concepts extend beyond technical configuration to encompass strategic planning and risk management considerations.

Security and compliance permeate all examination domains, reflecting the paramount importance of maintaining robust security postures in cloud environments. Candidates must understand identity management systems, access control mechanisms, data protection strategies, compliance frameworks, and security monitoring techniques.

Networking concepts form the backbone of effective cloud operations, requiring deep understanding of virtual private clouds, subnet configuration, routing protocols, load balancing strategies, and content delivery mechanisms. These foundational concepts enable effective service connectivity and optimal performance delivery.

Cost optimization strategies represent an increasingly important examination area as organizations seek to maximize cloud investment returns. Candidates must understand pricing models, cost monitoring tools, resource optimization techniques, and financial governance practices.
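Resource optimization often starts with rightsizing: finding instances whose utilization stays well below what they are provisioned for. This Python sketch flags instances whose 95th-percentile CPU utilization falls below a limit. The sample data, instance IDs, and 40% cut-off are hypothetical. In practice the samples would come from CloudWatch `CPUUtilization` datapoints, and AWS Compute Optimizer performs a more thorough version of this analysis.

```python
import statistics


def p95(samples):
    """95th-percentile cut point (statistics.quantiles needs at least two samples)."""
    return statistics.quantiles(samples, n=20)[-1]


def rightsizing_candidates(cpu_by_instance, p95_limit=40.0):
    """Return instances whose p95 CPU utilization (%) stays below p95_limit."""
    return sorted(i for i, samples in cpu_by_instance.items() if p95(samples) < p95_limit)


# Hypothetical hourly CPU averages (%) for two instances.
cpu = {
    "i-0aaa": [5.0] * 10 + [10.0] * 10,
    "i-0bbb": [50.0, 60.0, 70.0, 80.0] * 5,
}
print(rightsizing_candidates(cpu))
```

Using a high percentile rather than the average avoids downsizing an instance that is idle most of the time but regularly peaks.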

The blueprint document includes recommended study resources, reference materials, and additional learning opportunities that supplement core documentation. These curated recommendations provide structured pathways for knowledge acquisition while ensuring comprehensive coverage of examination topics.

Strategic Approach to Official Resource Navigation

Effective utilization of official AWS resources requires systematic navigation strategies that maximize learning efficiency while ensuring comprehensive coverage of examination requirements. The vast scope of available documentation necessitates structured approaches to avoid information overload while maintaining focus on certification-relevant content.

Service documentation follows consistent organizational patterns that facilitate efficient information location and comprehension. Each service page typically includes overview sections, getting started guides, configuration instructions, best practices recommendations, and troubleshooting resources. Understanding this structure enables rapid navigation and targeted information retrieval.

The user guide sections provide comprehensive operational instructions covering service configuration, feature utilization, and administrative procedures. These detailed resources serve as practical references for hands-on learning activities and real-world implementation scenarios.

API reference documentation offers technical specifications for programmatic service interactions, essential knowledge for automation and integration activities. While not always directly examined, this technical depth provides valuable context for understanding service capabilities and limitations.

Developer guides bridge the gap between theoretical service descriptions and practical implementation strategies. These resources often include code examples, architectural patterns, and integration methodologies that enhance understanding of service utilization within broader system contexts.

Best practices documentation synthesizes operational wisdom gained through extensive real-world implementations across diverse customer environments. These curated recommendations provide tested strategies for common challenges and proven approaches to optimal service utilization.

Security-focused documentation sections deserve particular attention given their pervasive importance across all operational activities. These resources detail identity management configurations, access control implementations, data protection strategies, and compliance considerations that form the foundation of secure cloud operations.

The AWS Architecture Center provides additional context through detailed solution examples, reference architectures, and case study analyses. These resources demonstrate practical application of services within realistic business scenarios, enhancing understanding of operational considerations and design trade-offs.

Regular documentation updates require ongoing attention to maintain current knowledge aligned with evolving service capabilities. Subscribing to AWS service announcements, reading monthly feature summaries, and monitoring documentation change logs ensures preparation materials remain current and examination-relevant.

Understanding Examination Format Evolution and Implications

The recent introduction of hands-on laboratory components represents a fundamental shift in AWS certification assessment methodology, moving beyond theoretical knowledge evaluation toward practical competency validation. This evolution reflects industry demands for demonstrable technical skills and real-world problem-solving capabilities.

Laboratory scenarios present candidates with actual AWS environments where they must perform specific operational tasks under time constraints. These practical exercises simulate authentic workplace challenges, requiring candidates to navigate management interfaces, configure services, implement solutions, and verify successful completion.

The hybrid examination format combines multiple-choice questions with hands-on laboratory exercises, creating a comprehensive assessment that evaluates both theoretical understanding and practical application skills. This approach ensures certified professionals possess the technical competency necessary for effective operational performance.

Laboratory exercises typically involve common operational scenarios such as configuring monitoring solutions, implementing automated backup processes, troubleshooting service connectivity issues, or optimizing resource performance. These activities mirror daily responsibilities of SysOps administrators while testing knowledge application under pressure.

The introduction of practical components necessitates enhanced preparation strategies that emphasize hands-on learning alongside traditional study methods. Candidates must develop comfort with AWS management interfaces, command-line tools, and service configuration procedures through extensive practice activities.

Time management assumes greater importance given the complex nature of laboratory exercises and the detailed analysis required for scenario-based multiple-choice questions. Candidates must develop efficient problem-solving approaches that enable completion of all assessment components within allocated timeframes.

The scoring methodology encompasses both question accuracy and laboratory completion success, creating a holistic evaluation framework that reflects comprehensive operational competency. Partial credit may be awarded for laboratory exercises based on configuration accuracy and adherence to specified requirements.

This examination format evolution aligns with industry trends toward practical skill validation and reflects AWS's commitment to maintaining certification relevance within rapidly evolving technological landscapes. Certified professionals demonstrate proven ability to implement solutions effectively rather than simply understanding theoretical concepts.

Leveraging Official Training Resources and Learning Paths

AWS provides extensive official training resources designed to support systematic skill development and certification preparation. These professionally developed materials offer structured learning pathways that align directly with examination objectives while building practical competency through guided instruction.

AWS Training and Certification offers instructor-led courses delivered by certified AWS experts who provide authoritative instruction on service utilization, best practices implementation, and operational procedures. These comprehensive programs combine theoretical instruction with hands-on laboratory exercises that reinforce learning through practical application.

Digital learning platforms provide flexible access to professional-grade training content through self-paced modules, interactive demonstrations, and progressive skill assessments. These resources accommodate diverse learning preferences while maintaining rigorous academic standards and examination alignment.

Official learning paths aggregate related courses and resources into coherent educational sequences that guide candidates through systematic knowledge acquisition. These structured progressions ensure comprehensive coverage of prerequisite concepts before advancing to complex operational scenarios.

AWS Skill Builder represents the primary platform for accessing official training content, offering both free foundational courses and advanced paid programs. The platform includes progress tracking, competency assessments, and personalized learning recommendations based on individual performance and career objectives.

Classroom training sessions provide intensive, focused instruction delivered by expert instructors in collaborative learning environments. These immersive experiences facilitate direct interaction with subject matter experts while enabling peer learning opportunities and networking possibilities.

Virtual classroom options extend access to high-quality instruction regardless of geographical location, combining live expert instruction with interactive technologies that simulate traditional classroom experiences. These sessions often include real-time question and answer opportunities and collaborative problem-solving exercises.

Official training resources undergo continuous updates to reflect service enhancements and evolving industry practices. This ensures candidates receive current, accurate instruction that aligns with the latest examination content and professional requirements.

The investment in official training resources often yields significant returns through improved examination success rates, enhanced practical competency, and accelerated career advancement opportunities. These professionally developed programs provide structured learning approaches that optimize preparation efficiency while building comprehensive operational expertise.

Mastering Examination Blueprint Analysis and Strategic Study Planning

The AWS Certified SysOps Administrator Associate examination blueprint provides meticulous detail regarding the specific knowledge domains and competencies required for certification success. Understanding these domains in granular detail enables candidates to develop targeted preparation strategies that address each area with appropriate depth and focus.

The monitoring, logging, and remediation domain encompasses the largest portion of examination content, typically representing 20-22% of total questions. This emphasis reflects the critical importance of maintaining visibility into system operations, identifying performance anomalies, and implementing corrective measures to ensure optimal service delivery. Within this domain, candidates must demonstrate proficiency in CloudWatch metrics configuration, log aggregation strategies, alarm threshold establishment, and automated response mechanisms.
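As an illustration of alarm configuration, the sketch below builds the keyword arguments for CloudWatch's PutMetricAlarm operation (boto3's `put_metric_alarm`) and simulates the "M out of N" datapoint rule that `EvaluationPeriods` and `DatapointsToAlarm` express. The instance ID, SNS topic ARN, and 80% threshold are hypothetical. The evaluation function is a simplification that ignores missing data and the INSUFFICIENT_DATA state.

```python
def cpu_alarm_params(instance_id, topic_arn, threshold=80.0):
    """Keyword arguments for CloudWatch PutMetricAlarm (boto3: put_metric_alarm)."""
    return {
        "AlarmName": f"high-cpu-{instance_id}",
        "Namespace": "AWS/EC2",
        "MetricName": "CPUUtilization",
        "Dimensions": [{"Name": "InstanceId", "Value": instance_id}],
        "Statistic": "Average",
        "Period": 300,           # seconds aggregated into each datapoint
        "EvaluationPeriods": 3,  # consider the 3 most recent datapoints...
        "DatapointsToAlarm": 2,  # ...and alarm when at least 2 of them breach
        "Threshold": threshold,
        "ComparisonOperator": "GreaterThanThreshold",
        "TreatMissingData": "missing",
        "AlarmActions": [topic_arn],
    }


def alarm_state(datapoints, threshold, evaluation_periods, datapoints_to_alarm):
    """Simplified M-out-of-N evaluation over the most recent datapoints."""
    recent = datapoints[-evaluation_periods:]
    breaching = sum(1 for value in recent if value > threshold)
    return "ALARM" if breaching >= datapoints_to_alarm else "OK"


# Hypothetical instance ID and SNS topic ARN.
params = cpu_alarm_params("i-0123456789abcdef0", "arn:aws:sns:us-east-1:123456789012:ops-alerts")
# With credentials configured: boto3.client("cloudwatch").put_metric_alarm(**params)
print(alarm_state([50, 85, 70, 90], 80, 3, 2))
```

Requiring 2 of 3 breaching datapoints filters out single spikes while still reacting within a few periods.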

Advanced monitoring concepts include custom metric development, cross-service correlation analysis, distributed tracing implementation, and performance baseline establishment. These sophisticated techniques enable proactive identification of potential issues before they impact service availability or user experience. Understanding metric mathematics, statistical analysis methods, and trend identification becomes essential for effective monitoring solution implementation.
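A performance baseline can be as simple as a mean and standard deviation computed over a known-good period. The sketch below flags datapoints outside a band of mean plus or minus k standard deviations, using only the standard library. This is a deliberately simple statistical stand-in: CloudWatch's own anomaly detection trains a model on the metric's history rather than using a fixed band. The latency values and k = 3 are assumptions.

```python
import statistics


def baseline_band(history, k=3.0):
    """Return (low, high) bounds: mean +/- k population standard deviations."""
    mean = statistics.fmean(history)
    sigma = statistics.pstdev(history)
    return mean - k * sigma, mean + k * sigma


def anomalies(values, band):
    """Indexes of values that fall outside the band."""
    low, high = band
    return [i for i, v in enumerate(values) if v < low or v > high]


# Hypothetical median latency (ms) from a known-good week, then new observations.
history = [10, 12, 11, 13, 9, 11, 12, 10]
band = baseline_band(history)
print(band, anomalies([11, 15, 8, 30], band))
```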

Log management strategies extend beyond basic collection to encompass centralized aggregation, structured analysis, retention policies, and security considerations. Candidates must understand various log formats, parsing techniques, search methodologies, and integration possibilities with third-party analysis tools. The ability to extract actionable insights from complex log datasets represents a core competency for operational professionals.
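Structured logs make this kind of analysis straightforward. The sketch below parses JSON-lines log records, counts errors per service, and tolerates malformed lines instead of failing. The `level` and `service` field names and the sample lines are hypothetical; adapt them to your own log schema.

```python
import json
from collections import Counter


def error_counts(lines):
    """Count ERROR records per service in JSON-lines logs; skip unparseable lines."""
    counts, skipped = Counter(), 0
    for line in lines:
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            skipped += 1
            continue
        if not isinstance(record, dict):
            skipped += 1  # valid JSON, but not a log object
            continue
        if record.get("level") == "ERROR":
            counts[record.get("service", "unknown")] += 1
    return counts, skipped


# Hypothetical application log lines using assumed "level" and "service" fields.
logs = [
    '{"level": "ERROR", "service": "api", "msg": "upstream timeout"}',
    '{"level": "INFO", "service": "api", "msg": "request ok"}',
    '{"level": "ERROR", "service": "worker", "msg": "out of memory"}',
    '{"level": "ERROR", "service": "api", "msg": "HTTP 503"}',
    "plain text that is not JSON",
]
counts, skipped = error_counts(logs)
print(counts.most_common(), skipped)
```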

Remediation procedures require an understanding of both manual intervention techniques and automated response mechanisms. Candidates should demonstrate knowledge of incident escalation procedures, service restoration methods, and preventive measures that reduce the likelihood of recurrence.
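Automated remediation is commonly wired as an alarm state change that triggers an event rule, which in turn invokes a remediation action. The sketch below uses an event pattern in the shape Amazon EventBridge uses for CloudWatch alarm state changes, a simplified matcher that supports only exact-value lists and nested objects (a small subset of EventBridge's matching rules), and a hypothetical runbook that escalates any alarm it has no automated fix for. The alarm names and actions are invented for illustration.

```python
# Event pattern in the shape EventBridge uses for CloudWatch alarm state changes.
ALARM_PATTERN = {
    "source": ["aws.cloudwatch"],
    "detail-type": ["CloudWatch Alarm State Change"],
    "detail": {"state": {"value": ["ALARM"]}},
}

# Hypothetical runbook: alarm name -> automated remediation step.
RUNBOOK = {
    "high-cpu-web": "scale out web tier",
    "disk-full-db": "expand volume",
}


def matches(pattern, event):
    """Simplified matching: lists mean 'equals one of these'; dicts recurse."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        elif actual not in expected:
            return False
    return True


def handle(event):
    """Run the automated fix if one exists; otherwise escalate to a human."""
    if not matches(ALARM_PATTERN, event):
        return "ignored"
    name = event["detail"].get("alarmName", "")
    return RUNBOOK.get(name, f"escalate to on-call: {name}")


# Abbreviated example event (real events carry more fields).
event = {
    "source": "aws.cloudwatch",
    "detail-type": "CloudWatch Alarm State Change",
    "detail": {"alarmName": "high-cpu-web", "state": {"value": "ALARM"}},
}
print(handle(event))
```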

Configuration management and infrastructure automation typically account for 16-18% of examination questions, emphasizing modern operational practices that enhance efficiency, reduce human error, and ensure consistent environment management. This domain encompasses infrastructure as code principles, configuration management tools, automated deployment processes, and change management procedures.

Infrastructure as code implementation requires understanding of various templating languages, service orchestration methodologies, and version control integration practices. Candidates must demonstrate ability to create, maintain, and deploy infrastructure definitions that ensure consistent environment provisioning across multiple deployment stages.
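One way to see "consistent provisioning across stages" in practice is a single template whose only per-stage difference is a parameter value. The sketch below defines a minimal CloudFormation template (a versioned S3 bucket) as a Python dictionary and produces the template body and the `Parameters` list that a CreateStack or UpdateStack call would take. The resource, tag, and environment names are illustrative assumptions, and no AWS call is made.

```python
import json

ALLOWED_ENVIRONMENTS = ["dev", "staging", "prod"]

# One template for every stage; only the parameter value differs per deployment.
TEMPLATE = {
    "AWSTemplateFormatVersion": "2010-09-09",
    "Description": "Versioned artifact bucket (illustrative)",
    "Parameters": {
        "Environment": {"Type": "String", "AllowedValues": ALLOWED_ENVIRONMENTS},
    },
    "Resources": {
        "ArtifactBucket": {
            "Type": "AWS::S3::Bucket",
            "Properties": {
                "VersioningConfiguration": {"Status": "Enabled"},
                "Tags": [{"Key": "Environment", "Value": {"Ref": "Environment"}}],
            },
        },
    },
    "Outputs": {"BucketName": {"Value": {"Ref": "ArtifactBucket"}}},
}


def stack_inputs(environment):
    """Template body plus the Parameters list for a CreateStack/UpdateStack call."""
    if environment not in ALLOWED_ENVIRONMENTS:
        raise ValueError(f"unknown environment: {environment}")
    body = json.dumps(TEMPLATE, indent=2)
    parameters = [{"ParameterKey": "Environment", "ParameterValue": environment}]
    return body, parameters


body, params = stack_inputs("staging")
print(params)
```

Keeping the template itself in version control, and varying only parameters, makes every stage reviewable as the same artifact.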

Configuration management extends beyond initial deployment to encompass ongoing maintenance, compliance verification, and drift detection capabilities. Understanding various configuration management approaches, their appropriate application scenarios, and integration possibilities with existing operational workflows becomes crucial for examination success.
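Drift detection comes down to comparing the desired configuration with what is actually deployed. CloudFormation provides this natively (for example, through the `DetectStackDrift` API); the sketch below only illustrates the idea with a recursive dictionary comparison. The instance properties and tag values are hypothetical.

```python
def detect_drift(expected, actual, path=""):
    """List differences between desired and observed configuration dictionaries."""
    diffs = []
    for key in sorted(set(expected) | set(actual)):
        where = f"{path}.{key}" if path else key
        if key not in actual:
            diffs.append(f"{where}: missing (expected {expected[key]!r})")
        elif key not in expected:
            diffs.append(f"{where}: unexpected {actual[key]!r}")
        elif isinstance(expected[key], dict) and isinstance(actual[key], dict):
            diffs.extend(detect_drift(expected[key], actual[key], where))
        elif expected[key] != actual[key]:
            diffs.append(f"{where}: expected {expected[key]!r}, found {actual[key]!r}")
    return diffs


# Hypothetical desired state versus what is running after a manual console change.
desired = {"InstanceType": "t3.micro", "Monitoring": {"Enabled": True}, "Tags": {"Env": "prod"}}
observed = {"InstanceType": "t3.large", "Monitoring": {"Enabled": True}, "Tags": {"Env": "prod", "Owner": "alice"}}
for line in detect_drift(desired, observed):
    print(line)
```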

Automated deployment processes include continuous integration and continuous deployment pipeline implementation, testing strategy development, rollback procedure establishment, and monitoring integration throughout deployment cycles. These practices ensure reliable, repeatable deployment processes that minimize service disruption while maintaining high delivery velocity.
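Rollback procedures are easier to trust when the decision is automatic. The sketch below models a hypothetical deployment gate: a new version goes live, post-deployment error-rate samples are checked, and the previous version is restored if any sample exceeds a limit or no health signal arrives. The version labels, samples, and 1% limit are assumptions; managed services such as AWS CodeDeploy implement richer versions of this with alarm-based rollback and traffic shifting.

```python
from dataclasses import dataclass, field


@dataclass
class Deployer:
    """Hypothetical deployment gate with automatic rollback."""
    live: str
    max_error_rate: float = 0.01
    history: list = field(default_factory=list)

    def deploy(self, candidate, error_rate_samples):
        """Promote candidate, then roll back if health samples are missing or too high."""
        previous = self.live
        self.live = candidate
        if not error_rate_samples or max(error_rate_samples) > self.max_error_rate:
            self.live = previous  # automatic rollback to the last good version
            return False
        self.history.append(previous)  # remember the version we could return to
        return True


d = Deployer(live="v1")
print(d.deploy("v2", [0.002, 0.004]), d.live)  # healthy: v2 stays live
print(d.deploy("v3", [0.003, 0.05]), d.live)   # 5% errors: back to v2
```

Treating a missing health signal as a failure is a conservative choice: a deployment that cannot prove it is healthy does not stay live.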

High availability and business continuity planning represents another substantial domain, typically comprising 14-16% of examination content. This area focuses on designing resilient systems that maintain service availability despite component failures, regional disruptions, or unexpected demand fluctuations.

Disaster recovery planning requires comprehensive understanding of recovery time objectives, recovery point objectives, backup strategies, cross-region replication mechanisms, and failover procedures. Candidates must demonstrate ability to design and implement recovery solutions that meet specific business requirements while optimizing cost and complexity considerations.
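Recovery objectives become concrete once you estimate what a design can actually deliver. In the simplified model below (an assumption, not an AWS formula), worst-case data loss is the backup interval plus the time needed to copy a backup to the recovery Region, and recovery time is the measured restore duration. All figures are hypothetical and expressed in minutes.

```python
from dataclasses import dataclass


@dataclass
class DrDesign:
    """Simplified recovery characteristics of a backup-and-restore design (minutes)."""
    backup_interval: float
    cross_region_copy: float
    restore_duration: float

    def worst_case_rpo(self):
        # Worst case: the primary Region fails just before the next backup finishes
        # copying to the recovery Region, losing one interval plus the copy time.
        return self.backup_interval + self.cross_region_copy

    def meets(self, rpo_target, rto_target):
        return self.worst_case_rpo() <= rpo_target and self.restore_duration <= rto_target


nightly = DrDesign(backup_interval=24 * 60, cross_region_copy=30, restore_duration=120)
hourly = DrDesign(backup_interval=60, cross_region_copy=15, restore_duration=120)
for name, design in [("nightly", nightly), ("hourly", hourly)]:
    print(name, design.worst_case_rpo(), design.meets(rpo_target=90, rto_target=240))
```

Comparing designs this way shows where cost goes: tightening RPO usually means more frequent backups or continuous replication, while tightening RTO means faster restores or warm standby capacity.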

Fault tolerance design encompasses service redundancy, load distribution, health checking mechanisms, and automatic scaling capabilities. Understanding these concepts enables implementation of systems that gracefully handle component failures without impacting overall service availability.
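Health checking with thresholds prevents a single failed probe from pulling a target out of service. The sketch below mimics the idea behind Elastic Load Balancing's `HealthyThresholdCount` and `UnhealthyThresholdCount` settings: a target changes state only after that many consecutive checks disagree with its current state. It is simplified (targets start healthy, and there is no initial or draining state), and the threshold values are assumptions.

```python
class HealthTracker:
    """Flip health state only after N consecutive contrary health-check results."""

    def __init__(self, healthy_threshold=3, unhealthy_threshold=2):
        self.healthy_threshold = healthy_threshold
        self.unhealthy_threshold = unhealthy_threshold
        self.healthy = True
        self.streak = 0  # consecutive results that disagree with the current state

    def record(self, check_passed):
        if check_passed == self.healthy:
            self.streak = 0  # result agrees with current state: reset the streak
        else:
            self.streak += 1
            needed = self.unhealthy_threshold if self.healthy else self.healthy_threshold
            if self.streak >= needed:
                self.healthy = not self.healthy
                self.streak = 0
        return self.healthy


tracker = HealthTracker(healthy_threshold=3, unhealthy_threshold=2)
states = [tracker.record(result) for result in [False, False, True, True, True]]
print(states)
```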

Backup and restore procedures extend beyond simple data protection to include testing methodologies, retention policies, compliance requirements, and cost optimization strategies. Candidates must understand various backup approaches, their appropriate application scenarios, and integration possibilities with broader data management strategies.
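Retention policies are often expressed as a grandfather-father-son scheme. The sketch below decides which daily backups to keep under a hypothetical policy: every backup from the last 7 days, Sunday backups from the last 4 weeks, and first-of-month backups for roughly 12 months (approximated as 31-day months). AWS Backup lifecycle rules express retention differently; this only illustrates the reasoning.

```python
from datetime import date, timedelta


def backups_to_keep(backup_dates, today, daily=7, weekly=4, monthly=12):
    """Return the backup dates retained under a simple grandfather-father-son policy."""
    keep = set()
    for d in backup_dates:
        age = (today - d).days
        if age < 0:
            continue  # ignore dates in the future
        if age < daily:
            keep.add(d)  # son: every recent daily backup
        elif d.weekday() == 6 and age < weekly * 7:
            keep.add(d)  # father: Sunday backups
        elif d.day == 1 and age < monthly * 31:
            keep.add(d)  # grandfather: first-of-month backups
    return sorted(keep)


today = date(2024, 3, 31)
history = [today - timedelta(days=i) for i in range(60)]
kept = backups_to_keep(history, today)
print(len(kept), kept[0], kept[-1])
```

Everything not returned would be eligible for deletion, which is where the cost savings of a tiered retention policy come from.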

Strategic Study Planning and Resource Allocation

Effective preparation for the AWS Certified SysOps Administrator Associate examination requires systematic planning that optimizes learning efficiency while ensuring comprehensive coverage of all required knowledge domains. Strategic study planning involves timeline establishment, resource allocation, progress monitoring, and adaptive adjustment based on evolving understanding and competency development.

Initial preparation phases should focus on foundational knowledge establishment through comprehensive review of official documentation, service overviews, and basic operational procedures. This foundation-building period enables subsequent advanced learning by ensuring solid understanding of core concepts and service capabilities.

The timeline allocation typically requires 8-12 weeks of dedicated preparation for candidates with moderate AWS experience, though individual requirements may vary based on prior knowledge, available study time, and learning preferences. Professional responsibilities and personal commitments must be considered when establishing realistic preparation schedules.

Domain-based study organization enables systematic progression through examination content while maintaining clear focus on specific knowledge areas. This approach facilitates deep understanding development while preventing superficial coverage that may prove inadequate during examination scenarios.

Progressive difficulty escalation builds competency steadily through graduated learning experiences. Starting with fundamental concepts and gradually advancing to complex operational scenarios builds confidence and avoids overwhelming candidates during the early phases of preparation.

Regular progress assessment through practice examinations, hands-on exercises, and knowledge verification activities provides objective measurement of preparation effectiveness. These assessments identify knowledge gaps that require additional attention while reinforcing successfully mastered concepts.

Study group participation or professional mentorship can provide valuable perspectives, alternative learning approaches, and motivation maintenance throughout extended preparation periods. Collaborative learning often reveals different understanding approaches and practical insights that enhance overall comprehension.

Resource diversification across multiple learning modalities accommodates different learning preferences while reinforcing key concepts through varied presentation methods. Combining reading, visual learning, hands-on practice, and interactive exercises creates comprehensive learning experiences that enhance retention and application capability.

Time management skills development becomes crucial given the examination's hybrid format and complex scenario requirements. Practice with timed exercises, laboratory completion under pressure, and efficient problem-solving approaches prepares candidates for actual examination conditions.

Advanced Blueprint Interpretation and Examination Psychology

Successful examination preparation extends beyond content mastery to encompass understanding of assessment psychology, question construction methodologies, and strategic response approaches. The AWS Certified SysOps Administrator Associate examination employs sophisticated questioning techniques that test deep understanding rather than surface-level memorization.

Scenario-based questions present complex operational challenges that require analysis of multiple variables, consideration of various solution approaches, and selection of optimal responses based on specified criteria. These questions often include detailed background information, specific constraints, and multiple seemingly viable alternatives.

Distractor analysis becomes essential for navigating challenging multiple-choice questions where several responses may appear technically correct. Understanding how examination authors construct plausible but incorrect alternatives enables more accurate response selection through systematic elimination processes.

The examination emphasizes practical application knowledge over theoretical memorization, requiring candidates to demonstrate understanding of when and how to apply specific solutions rather than simply recognizing service features. This approach validates real-world competency while ensuring certified professionals can contribute effectively to operational teams.

Laboratory exercises test procedural knowledge and practical implementation skills under realistic constraints. Success requires familiarity with management interfaces, efficient navigation techniques, and systematic approach to complex configuration tasks. Practice with similar environments and procedures builds confidence and competency for these critical examination components.

Time pressure management becomes particularly important given the examination's comprehensive scope and complex laboratory requirements. Developing efficient problem-solving approaches, rapid decision-making capabilities, and systematic verification procedures enables successful completion within allocated timeframes.

Question interpretation skills help candidates understand exactly what examination authors are seeking while avoiding common misunderstanding traps. Careful reading, key term identification, and constraint recognition prevent errors caused by rushed or superficial analysis.

The examination may include questions covering newly released services or recently announced features, requiring candidates to maintain currency with AWS service evolution. Regular review of service announcements, feature updates, and documentation changes ensures preparation materials remain aligned with current examination content.

Understanding examination scoring methodologies helps candidates prioritize efforts toward high-impact areas while ensuring adequate coverage of all domains. The composite scoring approach considers both multiple-choice accuracy and laboratory completion success, requiring balanced competency across all assessment components.

Developing Effective Study Methodologies and Learning Strategies

Successful AWS Certified SysOps Administrator Associate certification preparation requires implementation of proven study methodologies that optimize learning efficiency while ensuring comprehensive knowledge acquisition. Effective strategies accommodate individual learning preferences while maintaining systematic progression through complex technical content.

Active learning approaches engage candidates directly with material through hands-on exercises, practical implementations, and real-world scenario analysis. These methodologies enhance retention and understanding compared to passive reading or video consumption while building practical skills applicable to professional responsibilities.

Spaced repetition techniques optimize long-term retention through strategic review scheduling that reinforces previously learned concepts at optimal intervals. This approach prevents knowledge decay while building cumulative understanding that withstands examination pressure and supports professional application.
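One simple way to implement spaced repetition for flashcards is the Leitner system: a card moves up a box each time you answer it correctly, which lengthens its review interval, and drops back to the first box when you miss it. The sketch below assumes doubling intervals of 1, 2, 4, 8, and 16 days; those values and the `next_review` helper are illustrative choices, not a prescribed schedule.

```python
from datetime import date, timedelta

# Review interval in days for each Leitner box (assumed values; tune to taste).
INTERVALS = [1, 2, 4, 8, 16]


def next_review(box: int, correct: bool, today: date) -> tuple[int, date]:
    """Move a card between Leitner boxes and return (new_box, next_review_date)."""
    if not 0 <= box < len(INTERVALS):
        raise ValueError("box out of range")
    if correct:
        # Promote the card, but never past the last box.
        box = min(box + 1, len(INTERVALS) - 1)
    else:
        # A missed card returns to the first box for frequent review.
        box = 0
    return box, today + timedelta(days=INTERVALS[box])


print(next_review(0, True, date(2024, 1, 1)))
```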

Elaborative interrogation involves systematic questioning of learned concepts to develop deeper understanding and identify knowledge gaps. This technique encourages active engagement with material while building analytical skills necessary for complex problem-solving scenarios.

Interleaving practice combines study of different topics within single sessions rather than focusing exclusively on individual subjects. This approach enhances discrimination capabilities and builds flexibility in applying various concepts to complex scenarios that may involve multiple service domains.
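An interleaved session can be assembled mechanically by taking questions from each topic in round-robin order, so consecutive items come from different service domains. The `interleave` helper and the sample question IDs below are hypothetical.

```python
from itertools import chain, zip_longest

# Sentinel used to pad shorter topic lists; filtered out afterwards.
_MISSING = object()


def interleave(topics: dict[str, list[str]]) -> list[str]:
    """Round-robin questions across topics so consecutive items alternate topics."""
    rounds = zip_longest(*topics.values(), fillvalue=_MISSING)
    return [q for q in chain.from_iterable(rounds) if q is not _MISSING]


session = interleave({"EC2": ["e1", "e2"], "S3": ["s1"], "VPC": ["v1", "v2", "v3"]})
print(session)
```

Once the shortest topic runs out, the remaining items naturally cluster, so topic lists of similar length give the best mixing.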

Concept mapping visualizes relationships between different services, features, and operational procedures. These graphical representations help candidates understand system interdependencies while identifying integration opportunities and potential conflict areas.

Case study analysis involves detailed examination of real-world implementation scenarios, including challenges faced, solutions implemented, and lessons learned. These practical examples provide valuable context for theoretical concepts while demonstrating practical application possibilities.

Self-explanation techniques require candidates to articulate their understanding of concepts in their own words, identifying areas where comprehension may be incomplete or inaccurate. This process enhances understanding while building communication skills valuable in professional environments.

Retrieval practice through frequent testing without reference materials strengthens memory consolidation while identifying areas requiring additional attention. Regular self-assessment provides objective measures of preparation progress while building confidence in mastered areas.

Building Technical Foundation Through Systematic Service Study

The AWS service ecosystem encompasses hundreds of individual services across numerous categories, each with specific capabilities, configuration options, and operational considerations. For SysOps Administrator certification candidates, developing systematic understanding of core services and their operational implications becomes essential for examination success and professional effectiveness.

Compute services form the foundation of most cloud implementations, requiring deep understanding of instance types, sizing strategies, performance optimization, monitoring configurations, and cost management approaches. Amazon Elastic Compute Cloud represents the cornerstone service demanding comprehensive knowledge of launch procedures, security group configuration, network interface management, and instance lifecycle administration.

Auto Scaling capabilities enable dynamic resource adjustment based on demand fluctuations, requiring understanding of scaling policies, metric configuration, cooldown periods, and integration with load balancing solutions. These automated mechanisms ensure optimal resource utilization while maintaining service availability during varying load conditions.
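The intuition behind a target-tracking scaling policy can be checked with a quick calculation: if an average per-instance metric such as CPU utilization sits above the target, capacity needs to grow roughly in proportion. The sketch below is a deliberate simplification that assumes load spreads evenly across instances; it is not AWS's actual scaling algorithm, which also accounts for instance warm-up, cooldowns, and more conservative scale-in.

```python
import math


def estimate_desired_capacity(current: int, metric_value: float, target: float,
                              min_size: int, max_size: int) -> int:
    """Rough target-tracking estimate for an average per-instance metric.

    Assumes the metric scales inversely with capacity, so
    desired ~= current * metric_value / target, rounded up and then
    clamped to the group's minimum and maximum size.
    """
    if target <= 0:
        raise ValueError("target must be positive")
    desired = math.ceil(current * metric_value / target)
    return max(min_size, min(max_size, desired))


# 4 instances averaging 90% CPU with a 60% target -> about 6 instances.
print(estimate_desired_capacity(4, 90, 60, min_size=2, max_size=10))
```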

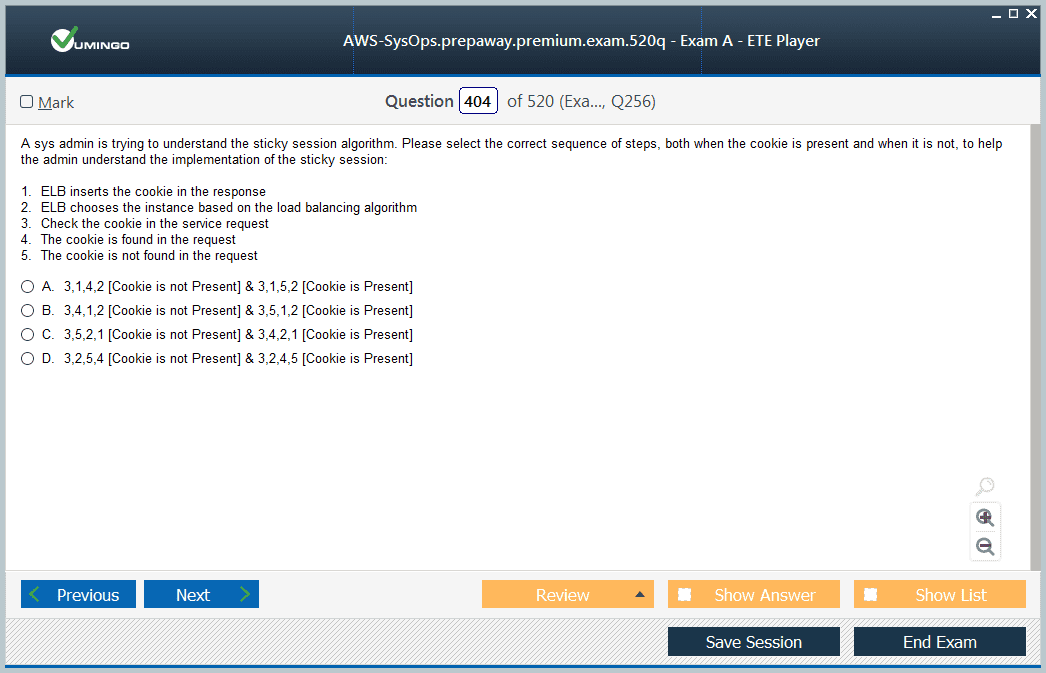

Elastic Load Balancing provides traffic distribution capabilities across multiple compute resources, requiring knowledge of different load balancer types, health checking configurations, SSL termination procedures, and cross-zone distribution strategies. Understanding appropriate load balancer selection criteria and configuration optimization becomes crucial for effective traffic management.

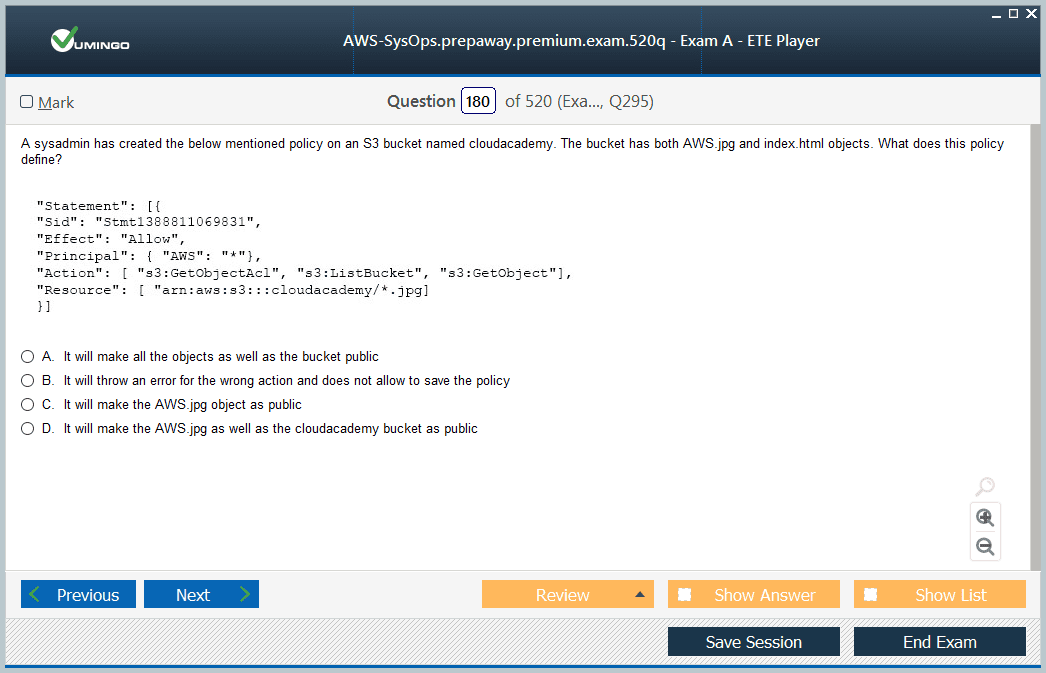

Storage services encompass various solutions optimized for different use cases, performance requirements, and cost considerations. Simple Storage Service represents the foundational object storage platform requiring understanding of bucket configuration, access control mechanisms, lifecycle policies, and cross-region replication strategies.

Elastic Block Store provides persistent storage for compute instances, requiring knowledge of volume types, performance characteristics, snapshot procedures, and encryption capabilities. Understanding appropriate volume selection criteria and performance optimization techniques becomes essential for effective storage management.
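Volume performance is a good example of arithmetic worth internalizing. For General Purpose SSD (gp2) volumes, baseline performance is 3 IOPS per GiB, with a floor of 100 IOPS and a ceiling of 16,000 IOPS, and volumes whose baseline is below 3,000 IOPS can burst to 3,000 IOPS using I/O credits. The helper functions below are illustrative; confirm the figures against the current EBS documentation before relying on them.

```python
def gp2_baseline_iops(size_gib: int) -> int:
    """gp2 baseline: 3 IOPS per GiB, at least 100 and at most 16,000."""
    return max(100, min(16_000, 3 * size_gib))


def gp2_can_burst(size_gib: int) -> bool:
    """A gp2 volume can burst to 3,000 IOPS only if its baseline is lower than that."""
    return gp2_baseline_iops(size_gib) < 3_000


for size in (20, 500, 1_000, 6_000):
    print(size, gp2_baseline_iops(size), gp2_can_burst(size))
```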

Elastic File System offers shared storage capabilities for multiple compute resources, requiring understanding of performance modes, throughput configurations, access control mechanisms, and cross-availability zone mounting procedures.

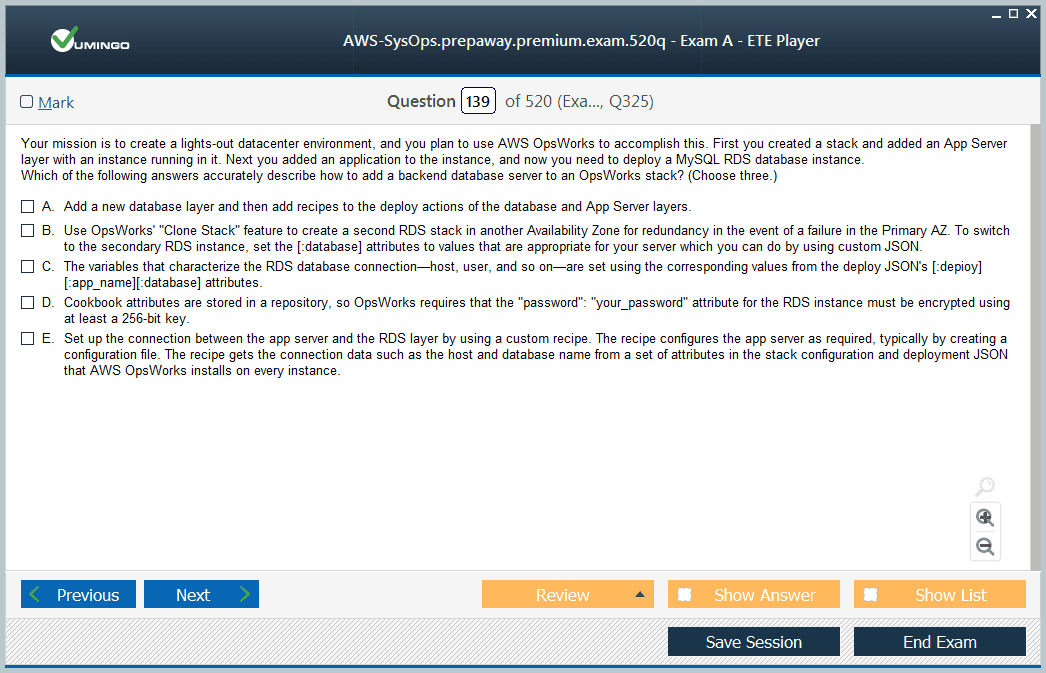

Database services provide managed solutions for various data storage and processing requirements. Amazon Relational Database Service requires understanding of engine selection criteria, instance sizing strategies, backup and recovery procedures, performance monitoring techniques, and security configuration options.

DynamoDB represents the primary NoSQL database offering requiring knowledge of table design principles, capacity planning strategies, index configuration options, and performance optimization techniques. Understanding when to apply relational versus NoSQL solutions becomes important for effective database service selection.

Networking services enable secure, performant connectivity between resources and external systems. Virtual Private Cloud represents the foundational networking service requiring comprehensive understanding of subnet design, routing table configuration, internet gateway management, and network access control list implementation.
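Subnet design can be practiced offline with Python's standard `ipaddress` module. The sketch below carves equal-size subnets out of a VPC CIDR block for each tier in each Availability Zone and reports usable addresses, accounting for the five addresses AWS reserves in every subnet. The tier names, AZ names, and the `plan_subnets` helper are hypothetical examples.

```python
import ipaddress

# AWS reserves five addresses in every subnet (the first four and the last one).
AWS_RESERVED_PER_SUBNET = 5


def plan_subnets(vpc_cidr: str, new_prefix: int, azs: list[str], tiers: list[str]):
    """Carve equal-size subnets from a VPC CIDR, one per (tier, availability zone)."""
    vpc = ipaddress.ip_network(vpc_cidr)
    blocks = vpc.subnets(new_prefix=new_prefix)
    plan = []
    for tier in tiers:
        for az in azs:
            subnet = next(blocks, None)
            if subnet is None:
                needed = len(tiers) * len(azs)
                raise ValueError(f"{vpc_cidr} is too small for {needed} /{new_prefix} subnets")
            usable = subnet.num_addresses - AWS_RESERVED_PER_SUBNET
            plan.append((tier, az, str(subnet), usable))
    return plan


for row in plan_subnets("10.0.0.0/16", 24, ["us-east-1a", "us-east-1b"], ["public", "private"]):
    print(row)
```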

Practical Laboratory Experience and Hands-On Skill Development

The integration of hands-on laboratory components into the AWS Certified SysOps Administrator Associate examination demands extensive practical experience with AWS services and management interfaces. Developing proficiency in real-world service configuration, troubleshooting procedures, and operational tasks requires systematic laboratory practice that simulates actual working conditions and examination scenarios.

Creating effective practice environments involves establishing AWS accounts with appropriate access permissions, service quotas, and cost controls that enable comprehensive learning while preventing unexpected charges. The AWS Free Tier provides substantial resources for initial learning activities, though serious preparation often requires investment in additional resources to explore advanced configurations and enterprise-scale scenarios.

Laboratory environment design should replicate realistic operational scenarios rather than isolated service demonstrations. Complex multi-service implementations provide valuable experience with service integration, dependency management, and system-wide optimization that reflects actual workplace responsibilities and examination expectations.

Sandbox environment establishment enables experimentation without impacting production systems while providing freedom to explore various configuration options, test different approaches, and learn from mistakes without consequences. These isolated environments encourage bold experimentation and deep learning through trial and error processes.

Version control integration for infrastructure configurations enables systematic tracking of changes, collaboration with learning partners, and rollback capabilities when experiments produce undesired outcomes. Understanding infrastructure as code principles while practicing with actual implementations builds valuable professional skills alongside certification preparation.

Documentation of laboratory activities through detailed notes, configuration records, and lessons-learned summaries creates valuable reference materials for examination review while building professional documentation skills. These records facilitate knowledge retention and provide quick reference materials during intensive preparation periods.

Cost monitoring and management throughout laboratory activities develops practical understanding of AWS pricing models, resource optimization techniques, and financial governance practices. This experience provides valuable insights into real-world operational considerations that extend beyond technical configuration knowledge.

Regular environment cleanup and resource termination practices prevent unnecessary charges while building understanding of proper resource lifecycle management. These procedures reflect responsible cloud operation practices that prevent waste and maintain cost efficiency in professional environments.

Developing Proficiency in Service Configuration and Management

Effective SysOps administrators must demonstrate mastery of service configuration procedures that enable optimal performance, security, and cost efficiency across diverse operational scenarios. Laboratory practice should encompass comprehensive configuration exercises that build systematic competency in essential operational tasks.

Compute service configuration encompasses instance selection, security group establishment, key pair management, user data specification, and network interface configuration. Understanding appropriate instance type selection criteria based on workload characteristics, performance requirements, and cost constraints becomes essential for effective resource utilization.

Auto Scaling group configuration requires understanding of launch template creation, scaling policy definition, metric specification, and integration with load balancing solutions. Practice scenarios should include various scaling triggers, cooldown period optimization, and health check configuration to ensure robust automated resource management.

Load balancer configuration involves understanding different load balancer types, target group establishment, health check definition, listener configuration, and SSL certificate management. Laboratory exercises should explore various distribution algorithms, sticky session configuration, and cross-zone distribution strategies.

Storage service configuration covers bucket creation, access policy definition, lifecycle rule establishment, versioning enablement, and cross-region replication setup. Understanding appropriate storage class selection, cost optimization strategies, and data protection mechanisms requires practical experience with various configuration scenarios.
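A lifecycle rule is easier to reason about when written out as data. The sketch below builds a dictionary in the shape accepted by the S3 PutBucketLifecycleConfiguration API (for example, boto3's `put_bucket_lifecycle_configuration`) without calling AWS. The rule ID, the `logs/` prefix, and the day counts are illustrative assumptions. The check that objects are at least 30 days old before moving to STANDARD_IA reflects an S3 rule; the increasing-order check is only a basic sanity check, not the full set of S3 validation rules.

```python
import json


def lifecycle_rule(rule_id: str, prefix: str, ia_after_days: int,
                   glacier_after_days: int, expire_after_days: int) -> dict:
    """Build one lifecycle rule: Standard -> Standard-IA -> Glacier -> expire."""
    if ia_after_days < 30:
        raise ValueError("objects must be at least 30 days old before moving to STANDARD_IA")
    if not ia_after_days < glacier_after_days < expire_after_days:
        raise ValueError("transition and expiration days must be strictly increasing")
    return {
        "ID": rule_id,
        "Filter": {"Prefix": prefix},
        "Status": "Enabled",
        "Transitions": [
            {"Days": ia_after_days, "StorageClass": "STANDARD_IA"},
            {"Days": glacier_after_days, "StorageClass": "GLACIER"},
        ],
        "Expiration": {"Days": expire_after_days},
    }


config = {"Rules": [lifecycle_rule("archive-logs", "logs/", 30, 90, 365)]}
print(json.dumps(config, indent=2))
```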

Database service configuration encompasses instance creation, parameter group customization, backup policy establishment, monitoring configuration, and security group management. Practice activities should include various database engines, performance optimization techniques, and integration with application environments.

Network configuration represents a fundamental skill area requiring understanding of VPC creation, subnet design, routing table configuration, internet gateway establishment, and NAT gateway implementation. Laboratory exercises should encompass complex multi-tier architectures with appropriate security controls and traffic flow optimization.

Security configuration permeates all service areas, requiring understanding of identity and access management, encryption implementation, security group configuration, and compliance monitoring setup. Practice scenarios should emphasize defense in depth principles while maintaining operational efficiency and user accessibility.

Monitoring configuration involves CloudWatch setup, custom metric creation, dashboard design, alarm configuration, and notification mechanism establishment. Understanding metric aggregation, statistical analysis, and trend identification requires practical experience with monitoring solution implementation and optimization.
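Alarm behavior often hinges on the "M out of N" setting: an alarm evaluates the most recent N periods and goes into ALARM when at least M datapoints breach the threshold. The sketch below models that logic for a greater-than-threshold comparison. It is a simplification that ignores CloudWatch's missing-data treatment options, and `alarm_state` is a hypothetical helper, not a CloudWatch API.

```python
def alarm_state(datapoints: list[float], threshold: float,
                evaluation_periods: int, datapoints_to_alarm: int) -> str:
    """Simplified M-out-of-N evaluation for a GreaterThanThreshold alarm.

    Returns "ALARM" if at least datapoints_to_alarm of the last
    evaluation_periods values exceed the threshold, "OK" otherwise, and
    "INSUFFICIENT_DATA" if there are fewer than evaluation_periods values.
    """
    if not 1 <= datapoints_to_alarm <= evaluation_periods:
        raise ValueError("need 1 <= datapoints_to_alarm <= evaluation_periods")
    window = datapoints[-evaluation_periods:]
    if len(window) < evaluation_periods:
        return "INSUFFICIENT_DATA"
    breaching = sum(1 for value in window if value > threshold)
    return "ALARM" if breaching >= datapoints_to_alarm else "OK"


# CPU samples; 3 of the last 5 exceed 80%, so a 3-of-5 alarm fires.
print(alarm_state([50, 85, 90, 40, 88], threshold=80, evaluation_periods=5, datapoints_to_alarm=3))
```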

Advanced Troubleshooting and Problem Resolution Techniques

The AWS Certified SysOps Administrator Associate examination places significant emphasis on troubleshooting capabilities and problem resolution skills that enable rapid identification and correction of operational issues. Laboratory practice must include systematic exposure to common problems, diagnostic procedures, and solution implementation techniques.

Service connectivity troubleshooting requires understanding of network path analysis, security group evaluation, routing table verification, and DNS resolution confirmation. Laboratory scenarios should include various connectivity failures and systematic diagnostic approaches that identify root causes efficiently.
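One step in a connectivity diagnosis is confirming that a security group actually admits the traffic in question. Security groups contain only allow rules, so traffic is permitted if any inbound rule matches its protocol, port, and source. The sketch below models that check with a hypothetical `InboundRule` type, using `"-1"` for all protocols as the EC2 API does. It covers only the security group layer; route tables, network ACLs, and host firewalls must be checked separately.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class InboundRule:
    protocol: str  # "tcp", "udp", or "-1" for all traffic
    from_port: int
    to_port: int
    cidr: str


def sg_allows(rules: list[InboundRule], protocol: str, port: int, source_ip: str) -> bool:
    """Return True if any allow rule matches the protocol, port, and source address."""
    src = ipaddress.ip_address(source_ip)
    for rule in rules:
        all_traffic = rule.protocol == "-1"
        proto_ok = all_traffic or rule.protocol == protocol
        port_ok = all_traffic or rule.from_port <= port <= rule.to_port
        if proto_ok and port_ok and src in ipaddress.ip_network(rule.cidr):
            return True
    # No matching allow rule: the traffic is dropped.
    return False


rules = [
    InboundRule("tcp", 443, 443, "0.0.0.0/0"),
    InboundRule("tcp", 22, 22, "203.0.113.0/24"),
]
print(sg_allows(rules, "tcp", 22, "198.51.100.7"))
```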

Performance troubleshooting encompasses resource utilization analysis, bottleneck identification, scaling configuration verification, and optimization strategy implementation. Understanding performance metrics interpretation, capacity planning principles, and tuning techniques enables effective resolution of performance-related issues.

Security troubleshooting involves access permission analysis, policy evaluation, encryption verification, and compliance status assessment. Practice scenarios should include various security misconfigurations and systematic approaches to identifying and correcting security vulnerabilities.
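Policy evaluation follows a simple precedence that is worth being able to reproduce by hand: an explicit Deny always wins, an Allow grants access only if nothing denies it, and anything not explicitly allowed is implicitly denied. The sketch below models that precedence for identity-policy statements using wildcard matching. It is a deliberate simplification that ignores conditions, resource-based policies, SCPs, and permissions boundaries, and the `evaluate` helper and sample ARNs are hypothetical.

```python
from fnmatch import fnmatchcase


def _matches(patterns, value: str, case_insensitive: bool = False) -> bool:
    """Wildcard match ('*' and '?') against one pattern or a list of patterns."""
    if isinstance(patterns, str):
        patterns = [patterns]
    if case_insensitive:
        return any(fnmatchcase(value.lower(), p.lower()) for p in patterns)
    return any(fnmatchcase(value, p) for p in patterns)


def evaluate(statements: list[dict], action: str, resource: str) -> str:
    """Explicit Deny wins, then any Allow, otherwise an implicit deny."""
    allowed = False
    for stmt in statements:
        # Action names are matched case-insensitively; resource ARNs are not.
        if _matches(stmt["Action"], action, case_insensitive=True) and _matches(stmt["Resource"], resource):
            if stmt["Effect"] == "Deny":
                return "explicitDeny"
            allowed = True
    return "allowed" if allowed else "implicitDeny"


statements = [
    {"Effect": "Allow", "Action": "s3:GetObject", "Resource": "arn:aws:s3:::reports/*"},
    {"Effect": "Deny", "Action": "s3:*", "Resource": "arn:aws:s3:::reports/secret/*"},
]
print(evaluate(statements, "s3:GetObject", "arn:aws:s3:::reports/secret/key.txt"))
```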

Service health troubleshooting requires understanding of health check configuration, auto-scaling behavior analysis, load balancer status verification, and instance health assessment. Laboratory exercises should explore various health-related failures and appropriate diagnostic and corrective procedures.

Data integrity troubleshooting encompasses backup verification, replication status assessment, corruption detection, and recovery procedure implementation. Understanding various data protection mechanisms and their failure modes enables effective data-related problem resolution.

Cost anomaly troubleshooting involves billing analysis, resource utilization assessment, pricing model verification, and optimization opportunity identification. Practice with cost monitoring tools and analysis techniques builds competency in financial governance and optimization activities.

Log analysis troubleshooting requires understanding of log aggregation verification, parsing configuration assessment, search query optimization, and alert mechanism validation. Laboratory scenarios should include various log-related issues and systematic diagnostic approaches.

Application performance troubleshooting encompasses dependency analysis, service integration verification, caching effectiveness assessment, and database performance evaluation. Understanding application-infrastructure interactions enables effective resolution of complex performance issues that span multiple service domains.

Implementation of Automated Operations and Infrastructure Management

Modern cloud operations emphasize automation as a fundamental practice that enhances efficiency, reduces human error, and enables scalable management of complex infrastructure environments. Laboratory practice must include extensive automation implementation that demonstrates understanding of various tools, techniques, and best practices.

Infrastructure as code implementation requires practical experience with template creation, parameter utilization, stack management, and change set analysis. Laboratory exercises should encompass complete environment provisioning, including network infrastructure, security configurations, compute resources, and application deployment procedures.

Configuration management automation involves understanding of agent-based and agentless approaches, policy definition, compliance monitoring, and drift correction mechanisms. Practice scenarios should include various configuration management challenges and systematic approaches to maintaining consistent environment states.

Deployment automation encompasses pipeline creation, testing integration, approval workflows, and rollback procedures. Laboratory activities should include complete deployment pipeline implementation that demonstrates understanding of continuous integration and continuous deployment principles.

Monitoring automation involves alert configuration, notification routing, escalation procedures, and automated response implementation. Understanding various monitoring triggers, response mechanisms, and integration possibilities enables effective automated incident management.

Backup automation requires understanding of schedule configuration, retention policy implementation, cross-region replication setup, and recovery testing procedures. Laboratory exercises should demonstrate comprehensive data protection automation that meets various business requirements and compliance standards.
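A retention policy boils down to a selection rule over timestamps. The sketch below picks which snapshots a cleanup job would delete: anything older than the retention window, except that the most recent few are always kept as a safety margin. The snapshot IDs, dates, and the `snapshots_to_delete` helper are hypothetical; in practice a managed service such as AWS Backup or Amazon Data Lifecycle Manager would normally enforce retention for you.

```python
from datetime import datetime, timedelta, timezone


def snapshots_to_delete(snapshots: dict[str, datetime], retention_days: int,
                        keep_min: int, now: datetime) -> list[str]:
    """Return IDs older than the retention window, oldest first, always
    sparing the keep_min most recent snapshots regardless of age."""
    cutoff = now - timedelta(days=retention_days)
    newest_first = sorted(snapshots, key=lambda sid: snapshots[sid], reverse=True)
    protected = set(newest_first[:keep_min])
    return [sid for sid in reversed(newest_first)
            if sid not in protected and snapshots[sid] < cutoff]


now = datetime(2024, 6, 30, tzinfo=timezone.utc)
snaps = {
    "snap-a": now - timedelta(days=40),
    "snap-b": now - timedelta(days=20),
    "snap-c": now - timedelta(days=10),
    "snap-d": now - timedelta(days=50),
}
print(snapshots_to_delete(snaps, retention_days=30, keep_min=2, now=now))
```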

Scaling automation encompasses policy definition, metric configuration, cooldown optimization, and integration with load balancing solutions. Practice scenarios should include various scaling challenges and systematic approaches to implementing responsive, efficient automated scaling solutions.

Security automation involves vulnerability scanning, compliance monitoring, access review procedures, and incident response automation. Understanding various security automation tools and their appropriate application scenarios enables implementation of comprehensive security management solutions.

Cost optimization automation includes resource scheduling, rightsizing recommendations, unused resource identification, and spending alert configuration. Laboratory practice should demonstrate understanding of various cost management tools and their integration into automated operational workflows.

Efficient Interface Navigation for Accelerated Task Performance

Navigating the AWS Management Console fluently is paramount when time is short in a practical exam setting. Build a mental map of how the console is organized so you can reach services quickly. Rely on the search field: typing a few characters of a service name and selecting the result is much faster than traversing nested menus. Use the console’s recently visited and favorites lists, and keep frequently used service pages open in browser tabs for quick context switching between services. Build muscle memory for window and tab switching (for example, Alt+Tab between windows), and consider arranging windows side by side to monitor resource states in tandem. Practicing repeatable sequences lets you execute tasks quickly without sacrificing precision.

Interface navigation drills might involve launching an EC2 instance, tagging it, and then immediately verifying its security group configuration, all within two or three minutes. These exercises build speed and accuracy together, so that under exam pressure you act fluidly rather than hesitantly. By embedding such drills into your preparation, you turn navigation agility into an ingrained skill that underpins your lab performance.

Strategic Task Decomposition for Complex Scenarios

Laboratory prompts in the SysOps Administrator Associate exam frequently contain multi-part requirements: deploy an Auto Scaling group, then ensure load balancer health, then configure monitoring alarms, and so on. Tackling these demands piecemeal and out of order invites mistakes, so break them down systematically. Begin by parsing the prompt sentence by sentence. Enumerate discrete tasks: for instance, identify that first you must provision resources, then configure scaling or load balancing, then verify metrics, then establish alerts. Determine dependencies, such as how alarms must be associated with metrics that only exist once resources are deployed. Lay out a stepwise hierarchy: deploy resource → tag appropriately → configure networking → attach to load balancer → set up monitoring → test triggers → document outcome.

Visualize this sequence as a flowchart or linear task checklist during practice sessions. This decomposition strategy prevents you from fumbling through tasks in haphazard order or omitting critical steps. It also allows you to triage: if time runs short, at least ensure the foundational layers (deployment and core functionality) are solid, even if secondary refinements must wait. By training this habit of logical breakdown, you avoid confusion and address each requirement with clarity and confidence.
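The dependency ordering described above is a topological sort: each task lists the tasks that must finish first, and any valid order respects those constraints. The sketch below uses the standard library's `graphlib` (Python 3.9 or later) on the example hierarchy from the text; the task names are taken from that example, and the dependency sets are an assumed reading of it.

```python
from graphlib import TopologicalSorter

# Each task maps to the set of tasks that must be completed before it.
lab_tasks = {
    "deploy resources": set(),
    "tag resources": {"deploy resources"},
    "configure networking": {"deploy resources"},
    "attach to load balancer": {"configure networking"},
    "set up monitoring": {"attach to load balancer"},
    "test triggers": {"set up monitoring"},
    "document outcome": {"test triggers", "tag resources"},
}

# static_order() yields every task after all of its prerequisites
# and raises graphlib.CycleError if the dependencies form a loop.
order = list(TopologicalSorter(lab_tasks).static_order())
for step, task in enumerate(order, start=1):
    print(f"{step}. {task}")
```

Where several tasks are ready at once (here, tagging and networking), their relative order is arbitrary, which mirrors the flexibility you have during the lab.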

Rigorous Verification Protocols to Safeguard Configurations

Completing a configuration is insufficient unless you confirm that it functions as intended. Develop a habit of verification immediately after implementing each task. If you spin up an RDS instance, confirm that it is available, then test a connection to its endpoint on the database port and check that its security group allows traffic from your client. If you configure a CloudWatch alarm, simulate the triggering metric or review the alarm’s state to ensure it transitions as expected. For IAM policy changes, test with a stub user or use the IAM policy simulator to confirm enforcement.

Your verification protocol should include endpoint checks, console status reads, metric inspection, log review, and simulated failures to test recovery. For example, after deploying an instance, view its status checks or attempt a login; after configuring an S3 bucket policy, try reading or uploading objects using both authorized and unauthorized credentials. Build an explicit habit: implement a configuration, then immediately validate it. This prevents losing partial credit to misconfigurations or untested behavior and gives you confidence that your work truly meets the requirements.

Error Recovery Techniques to Mitigate Mishaps

Errors are inevitable under time pressure, whether a mistyped ARN, a misconfigured security rule, or a misapplied tag. The key is quick diagnosis and prompt correction. First, learn the common categories of error: authentication failures, denied permissions, missing dependencies, and resource limits. Know where to find error messages quickly in the console or in logs, and interpret them without delay. Keep a mental or written quick-reference of frequent pitfalls and their remedies.

When an error surfaces, avoid making random changes. Instead, apply a structured triage: read the error message carefully, pinpoint the resource most likely at fault, check related configuration panels, validate IDs or policies, make corrective edits, and re-test. For more obscure issues, consult the inline documentation or the console’s troubleshooting hints to speed up diagnosis. In practice drills, deliberately induce errors, such as entering an invalid parameter value or misnaming a resource, and then practice diagnosing and reversing them quickly. This builds resilience and confidence, allowing you to recover from missteps without panicking or wasting precious minutes.
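A quick-reference of pitfalls can be as simple as a lookup from error code to the first thing to check. The sketch below is illustrative and far from exhaustive: the codes shown (`AccessDenied`, `UnauthorizedOperation`, `DependencyViolation`, `InvalidParameterValue`, and quota errors ending in `LimitExceeded`, such as EC2's `InstanceLimitExceeded`) are real AWS error codes, but the advice strings and the `triage` helper are this sketch's own suggestions.

```python
# Illustrative, non-exhaustive map from AWS error codes to a first check.
FIRST_CHECKS = {
    "AccessDenied": "Review the IAM policies on the caller and any resource-based policy.",
    "UnauthorizedOperation": "The caller lacks the EC2 permission; review its IAM policy.",
    "DependencyViolation": "Another resource still references this one; detach or delete it first.",
    "InvalidParameterValue": "Re-read the parameter named in the message and fix its value or format.",
}


def triage(error_code: str) -> str:
    """Return a suggested first diagnostic step for an AWS error code."""
    if error_code in FIRST_CHECKS:
        return FIRST_CHECKS[error_code]
    if error_code.endswith("LimitExceeded"):
        return "A service quota was reached; check Service Quotas or release unused resources."
    return "Unknown code: read the full message, identify the resource, and check its configuration."


print(triage("DependencyViolation"))
```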

Exhaustive Documentation Scanning Under Duress

Even though you’re not permitted external internet access during the actual exam, practicing documentation reading trains your ability to scan quickly. Familiarize yourself with how AWS service documentation is structured: the navigation pane and section headings such as Setup, Examples, and Troubleshooting. During drills, time yourself retrieving a particular piece of information, such as the syntax for a CLI command or the structure of an IAM policy statement, within 30 seconds. Develop strategies such as skimming headings, using page search (Ctrl+F) for keywords, or mentally indexing where code examples reside.

In your preparation, take unfamiliar or arcane services and attempt to locate, within a minute, how to configure them for a particular scenario. This trains your eyes to detect salient phrases like “example policy,” “parameter,” “configuration option,” which allow you to absorb relevant content quickly. This skill translates into exam readiness—should you need to access the integrated docs, you can pinpoint the necessary text swiftly, without meandering through paragraphs of prose. By embedding fast scanning and selective attention into your habits, you advance your capacity to interpret and apply documentation under time‑pressured conditions.

Time‑boxing and Practice Routines for Fluency

Time management is your ally. During mock practice sessions, partition your work into short blocks of perhaps 10 minutes each. Within each block, navigate to the required service, implement one lab task end to end, verify it, and handle any errors. Then immediately debrief yourself: how long did each step take? Where did you hesitate? Which part of navigation slowed you down? Then repeat, aiming to shave off seconds.

Alternate between focused tasks (e.g. only deployment tasks) and composite tasks (deploy plus monitoring plus remediation). Build stamina for mental context shifts under pressure. Periodically simulate full practice exams—set aside a 60‑minute block, apply multiple multi‑step tasks, and push through with conscious pacing—focusing on working steadily rather than sprint‑and‑stall. Over time, your cadence becomes more metered, your decisions more instinctive, and your execution more reliable.
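The debrief step is easier when step durations are recorded rather than estimated. The sketch below uses a small context manager around `time.perf_counter()` to time each practice step and a helper that lists the steps that overran a per-step budget, slowest first. The step names, budget, and the `timed` and `debrief` helpers are hypothetical.

```python
import time
from contextlib import contextmanager

# Recorded durations in seconds, keyed by step name.
timings: dict[str, float] = {}


@contextmanager
def timed(step: str):
    """Record how long the enclosed block takes under the given step name."""
    start = time.perf_counter()
    try:
        yield
    finally:
        timings[step] = time.perf_counter() - start


def debrief(step_seconds: dict[str, float], budget_seconds: float) -> list[str]:
    """Return the steps that exceeded the budget, slowest first."""
    over = {step: t for step, t in step_seconds.items() if t > budget_seconds}
    return sorted(over, key=lambda step: over[step], reverse=True)


with timed("navigate to EC2"):
    pass  # perform the practice step here

print(debrief({"navigate": 45, "configure": 300, "verify": 150}, budget_seconds=120))
```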

Integrative Lab‑Scenario Immersion for Mastery

True fluency emerges when you combine these techniques in immersive, exam-style labs. Create holistic scenarios: provision a load-balanced, auto-scaled web tier; configure health-checked routing; attach monitoring alarms and auto-scaling policies; implement proper IAM roles and policies; then introduce a simulated failure and recover from it on your own; finally, document your solution succinctly. During each scenario, practice navigating the console swiftly, decomposing tasks, verifying continually, recovering from errors quickly, scanning documentation for nuance, and pacing yourself effectively.

After each drill, perform a post-mortem: what slowed you down, where verification caught flaws, where documentation scanning faltered, and which errors tripped you up. Use these insights to adjust the focus of your next session. Over time, these iterative drills sharpen your reflexes, broaden your hands-on experience, and build a robust mental model for approaching any laboratory prompt with composure and dexterity.

Final Thoughts

Preparing for the AWS Certified SysOps Administrator Associate exam is not merely about memorizing service names or mastering console clicks—it’s about cultivating a strategic mindset, operational fluency, and technical confidence under pressure. The journey to certification transforms candidates into methodical problem-solvers capable of managing dynamic, cloud-based environments with precision and foresight. By following the top 7 tips outlined in this guide, you're not only preparing to pass an exam—you’re laying the groundwork for professional resilience and long-term cloud operations success.

The SysOps Administrator certification is often regarded as the most technically challenging among AWS’s associate-level paths. It rigorously assesses your ability to monitor, automate, secure, and troubleshoot infrastructure in a real-world, production-like environment. Unlike the Solutions Architect exam, which leans heavily on design theory and best practices, or the Developer Associate exam, which emphasizes CI/CD pipelines and application lifecycle management, the SysOps track is a pressure test of hands-on operational skill. From configuring high-availability architectures to managing incident responses and interpreting detailed metric data, every element of this certification mirrors responsibilities faced by real SysOps engineers.

That’s why preparation must go beyond passive reading or video tutorials. Success lies in consistent, immersive practice using real AWS environments—configuring alarms in CloudWatch, manipulating IAM roles, orchestrating Auto Scaling Groups, fine-tuning EC2 launch templates, or exploring route tables in VPCs. Simulated exam scenarios and practical labs are indispensable. The lab component of the exam, in particular, demands calm under pressure and the ability to carry out multi-step procedures swiftly and accurately. That skill can only be sharpened through repetition and retrospection.

It’s also important to embrace the mindset of lifelong learning. The AWS ecosystem is a moving target. Services evolve, interfaces are revamped, and new features are continuously added. A SysOps Administrator must remain vigilant and adaptable, not just for the exam, but as a professional standard. The habits you develop during your certification journey—such as reading service documentation, parsing CloudTrail logs, or diving into billing dashboards—will continue to serve you long after you pass the test.

As you refine your knowledge, make it a point to teach or explain what you’ve learned. Whether you write blog posts, participate in community forums, or simply review with a study partner, explaining concepts aloud reinforces understanding. The Feynman Technique, explaining a concept in plain language as if teaching it to a beginner, is a powerful way to detect gaps in comprehension and convert passive familiarity into active mastery.

Most importantly, remember that this is a certification worth earning not just because of the credential it confers, but because of the professional transformation it encourages. The ability to monitor, automate, recover, and optimize infrastructure in cloud environments is a vital skill set that organizations rely on every day. Your ability to think like a SysOps engineer—to troubleshoot calmly, automate wisely, and act decisively—is what truly makes you valuable.

So stay focused, practice often, and build confidence through each lab and scenario. The AWS Certified SysOps Administrator Associate exam is absolutely passable—with the right strategy, tenacity, and a bit of trial and error. And once you earn it, wear it not just as a badge of technical skill, but as proof of your commitment to operational excellence in the evolving world of cloud computing.


