
Ultimate SAS Institute A00-240 Certification Mastery: Complete Professional Preparation Framework

Achieving mastery in SAS Statistical Business Analysis epitomizes a significant professional accomplishment for data analytics specialists intent on refining their skills in advanced regression modeling and complex statistical analysis. The SAS A00-240 certification, issued by the SAS Institute, rigorously assesses an individual's command over intricate statistical methodologies, encompassing regression techniques, model diagnostics, and the synthesis of actionable insights within the SAS software ecosystem.

In contemporary data-centric enterprises, the demand for analytical professionals who can proficiently interpret large datasets, construct predictive models, and execute sophisticated statistical tests continues to grow. The A00-240 certification validates such competencies, emphasizing the critical balance between theoretical knowledge and practical application. Successful candidates demonstrate proficiency not only in the mathematical underpinnings of statistical procedures but also in harnessing SAS's powerful suite of analytical tools to extract meaningful patterns and drive informed decision-making.

This certification journey is not merely an academic exercise but a strategic career investment, preparing analysts to address the multifaceted challenges of today's business environments. It provides a robust foundation for handling real-world scenarios where data variability, noise, and complexity demand advanced modeling approaches combined with interpretative precision.

Core Competencies and Exam Content Structure

The A00-240 certification examination meticulously delineates several pivotal domains integral to statistical business analysis, ensuring candidates exhibit comprehensive expertise across a spectrum of analytical techniques. These core domains include foundational principles of simple and multiple linear regression, logistic regression for classification problems, variable selection methodologies, diagnostic tools for model adequacy assessment, and the nuanced interpretation of output statistics.

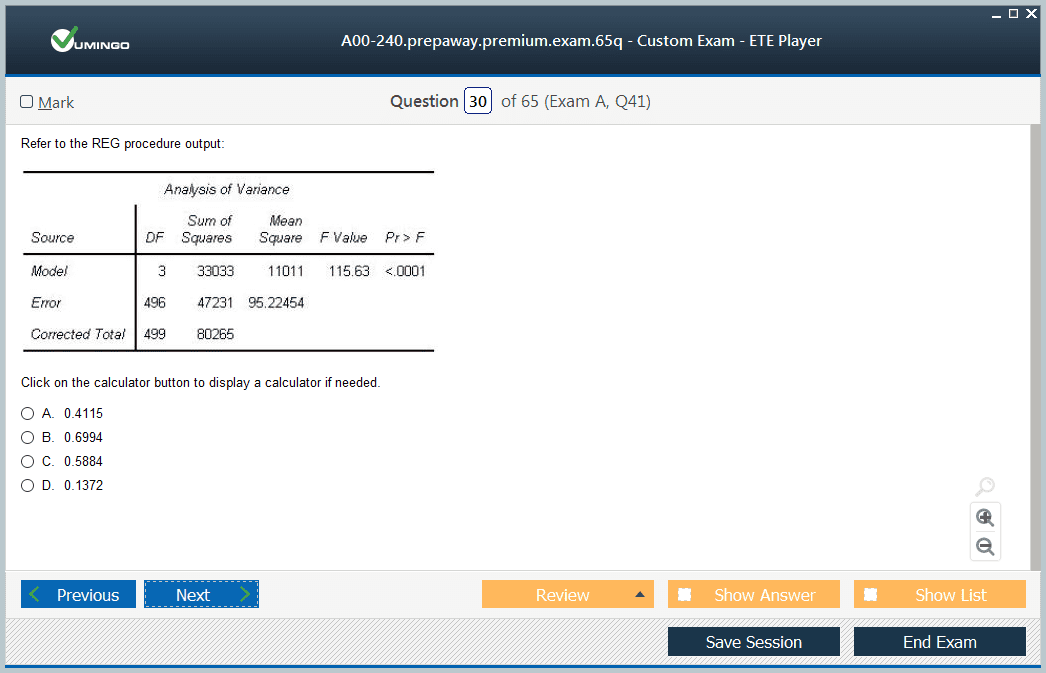

A primary emphasis is placed on linear regression, where candidates must showcase proficiency in constructing models that elucidate relationships between dependent and independent variables, accounting for multicollinearity and heteroscedasticity challenges. The multiple linear regression segment tests the ability to manage datasets with numerous predictors, ensuring appropriate variable selection and transformation to optimize model fit.

Logistic regression, crucial for binary outcome predictions, forms another key pillar of the examination, evaluating candidates' capacity to model probabilities, interpret odds ratios, and validate model performance using techniques such as the Hosmer-Lemeshow test and ROC curves. This area is increasingly relevant in business contexts such as credit scoring, churn analysis, and risk assessment.

In addition to regression techniques, the exam rigorously probes candidates' knowledge of variable selection strategies such as forward selection, backward elimination, and stepwise selection, which favor model parsimony and help limit overfitting. Diagnostic procedures, including residual analysis, leverage statistics, and influence statistics, are essential to ascertain model reliability and identify anomalies or data points exerting disproportionate effects.

Finally, the interpretation section demands the synthesis of statistical output into coherent narratives that translate technical findings into actionable business insights. This skill bridges the gap between analytical rigor and executive decision-making, making the certification invaluable for data professionals aspiring to impact strategic planning.

Strategic Preparation Approaches for Certification Success

The pathway to successfully attaining the SAS A00-240 certification is marked by deliberate, multifaceted preparation strategies that integrate theoretical comprehension with rigorous hands-on practice. Candidates benefit immensely from adopting structured study plans that progressively introduce and deepen their understanding of each core domain, enabling mastery through incremental learning.

Initial preparation phases should focus on reinforcing statistical theory underpinning regression analysis and variable selection. Textbooks and scholarly articles provide the necessary conceptual frameworks, elucidating assumptions, mathematical formulations, and limitations of various models. These resources lay the groundwork for more applied learning stages.

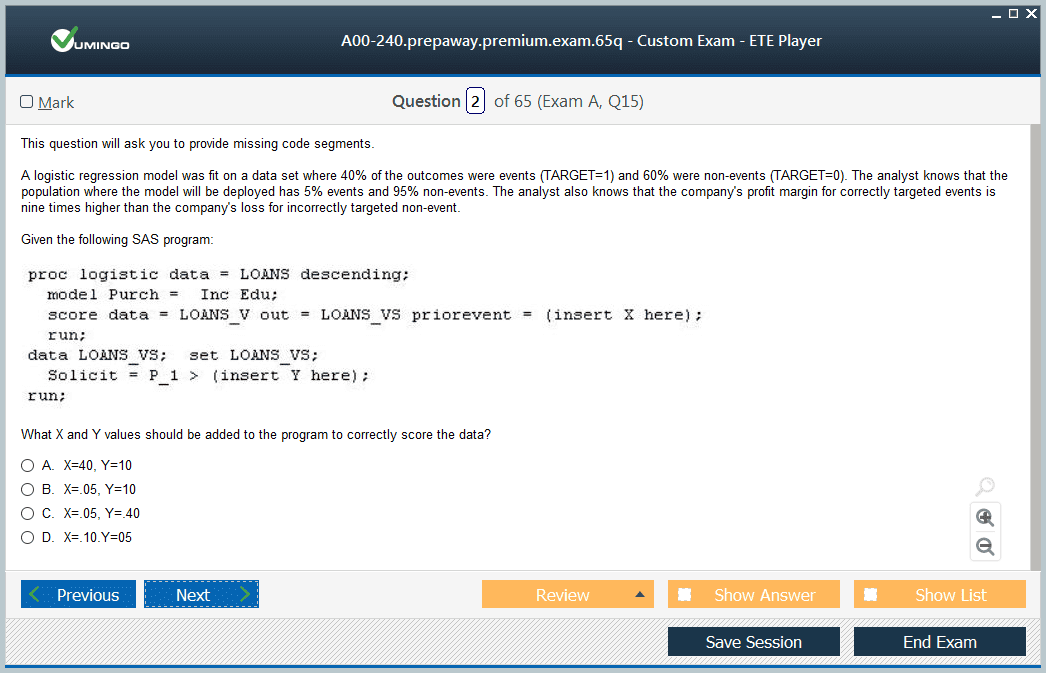

Simultaneously, extensive practice within the SAS environment is imperative. Familiarity with SAS procedures such as PROC REG for linear regression, PROC LOGISTIC for logistic models, and PROC GLM for general linear modeling equips candidates with operational fluency. Engaging in data manipulation tasks, variable transformations, and diagnostic plotting cultivates technical proficiency essential for exam success.

High-quality practice materials, including sample questions and full-length mock exams, simulate the testing environment, improving time management and stress resilience. Repeated exposure to exam-style questions hones problem-solving agility and reinforces memory retention. Candidates are advised to adopt iterative study cycles, where self-assessment guides targeted revision efforts, closing knowledge gaps systematically.

Supplementary learning modalities, such as online tutorials, video lectures, and interactive SAS workshops, further diversify the preparation experience. These tools cater to varying learning preferences and reinforce complex concepts through visual and experiential methods.

Practical Application and Real-World Relevance

One of the hallmark attributes distinguishing the SAS A00-240 certification is its unwavering focus on practical applicability. The exam transcends theoretical knowledge, compelling candidates to apply analytical methodologies in scenarios reflective of authentic business challenges. This pragmatic orientation ensures that certified professionals can immediately contribute to organizational analytics initiatives upon credential acquisition.

In practice, certified statisticians leverage their expertise to optimize marketing efforts by identifying customer segments poised for conversion, predict financial market trends through regression forecasts, and enhance operational efficiencies via risk modeling. These applications necessitate translating numerical outputs into strategic recommendations, requiring nuanced understanding of both data science and business contexts.

Moreover, proficiency in SAS software allows analysts to manage vast datasets, automate complex procedures, and generate comprehensive reports. Mastery of SAS macro programming and data step processing augments efficiency, enabling analysts to streamline workflows and scale analytical operations.

The certification encourages developing critical soft skills such as communication and stakeholder engagement. Analysts must articulate model assumptions, limitations, and implications clearly to non-technical audiences, facilitating data-driven decision-making across organizational hierarchies.

The Evolving Landscape of Statistical Business Analysis and Continuous Learning

Statistical business analysis is a continually evolving discipline, shaped by advancements in machine learning, big data technologies, and cloud computing. The SAS Institute maintains currency in its certification programs, updating exam content and training materials to reflect the latest methodologies and software enhancements, ensuring candidates acquire skills aligned with contemporary industry standards.

Continuous professional development is imperative for maintaining analytical proficiency in this dynamic environment. Certified professionals should engage in ongoing education through workshops, webinars, advanced certifications, and participation in professional analytics forums. This lifelong learning ethos fosters adaptability, equipping analysts to incorporate emerging techniques such as ensemble modeling, time-series forecasting, and natural language processing into their analytical toolkit.

In addition, the integration of SAS with other platforms like Python and R necessitates interdisciplinary knowledge. Modern analysts often require hybrid skill sets combining SAS statistical capabilities with data engineering, visualization, and machine learning expertise to address complex, multifaceted business problems.

Organizational Impact and Career Advancement Opportunities

Obtaining the SAS A00-240 certification significantly enhances both individual career trajectories and organizational analytical capabilities. Certified analysts serve as catalysts for embedding a culture of data-driven decision-making within enterprises, contributing to more precise forecasting, risk mitigation, and strategic planning.

From a corporate perspective, employing SAS-certified statisticians signals a commitment to analytical rigor and operational excellence. Organizations benefit from improved project outcomes, optimized resource allocation, and heightened competitive advantage. Certification status also facilitates compliance with industry standards and regulatory requirements, which increasingly mandate validated analytical competencies.

For professionals, certification opens avenues to higher-responsibility roles, such as senior data analyst, analytics consultant, and quantitative modeler. It provides a competitive differentiator in the job market, often correlating with enhanced remuneration packages and leadership prospects. The certification also lays a foundational pathway toward advanced SAS credentials and broader data science qualifications.

Building a Supportive Professional Network and Leveraging Certification Benefits

Pursuing SAS certification extends beyond the exam, offering entry into a vibrant global community of data professionals. This network provides access to invaluable resources including forums for knowledge exchange, mentorship programs, industry conferences, and collaborative projects.

Active engagement within this community fosters ongoing learning and career development, as members share best practices, troubleshoot complex analytical problems, and discuss emerging trends. Networking opportunities facilitate connections with industry leaders, potential employers, and academic researchers, enriching professional growth.

Additionally, certification holders gain access to SAS-sponsored events and exclusive training, which further enhance skills and industry visibility. This ecosystem supports a continuous feedback loop of learning, innovation, and professional advancement, ensuring certified analysts remain at the forefront of statistical business analysis excellence.

Advanced Examination Preparation Methodologies and Strategic Learning Approaches

Mastering SAS Statistical Business Analysis, particularly in preparation for the rigorous A00-240 certification, demands a well-structured and multifaceted learning strategy that synthesizes theoretical insights with pragmatic skills. This certification serves as a comprehensive benchmark for professionals aiming to demonstrate advanced proficiency in statistical concepts, SAS programming, and data-driven problem-solving. To excel, candidates must adopt preparation methodologies that blend varied learning styles with systematic knowledge reinforcement, ensuring a robust command over the vast syllabus.

Leveraging technology-enhanced learning tools has become indispensable in modern exam preparation. Interactive platforms offering dynamic content engagement foster deeper conceptual understanding, while adaptive learning systems analyze individual performance trends to tailor practice sessions effectively. These cutting-edge resources optimize learning efficiency, transforming preparation from rote memorization to active skill acquisition. Such personalized learning trajectories enable candidates to focus on weaker areas while consolidating strengths, thereby maximizing exam readiness.

The statistical domain encapsulated in the certification exam is multifarious, encompassing intricate techniques that require specialized study tactics. The foundational pillar is regression modeling, where grasping both simple and multiple linear regression forms the cornerstone. Candidates must assimilate knowledge of model assumptions, residual behavior, and transformation techniques that address non-linearity. Proficiency in selecting the correct model type tailored to data characteristics and business objectives underpins successful statistical analysis.

Mastery of Diagnostic Procedures and Model Validation Techniques

A pivotal aspect of SAS Statistical Business Analysis is the ability to critically evaluate model performance through diagnostic procedures. The examination rigorously tests candidates on recognizing assumption violations such as heteroscedasticity, multicollinearity, and autocorrelation, which can compromise model integrity. Beyond theoretical familiarity, exam takers must demonstrate practical skills in employing diagnostic plots, influence statistics, and residual analyses to identify and mitigate such issues.

Corrective strategies, including variable transformation, model respecification, or robust estimation methods, highlight an analyst’s capacity to enhance model robustness. This practical acumen ensures that statistical outputs are not only mathematically sound but also reliable for predictive purposes in complex business scenarios where data imperfections are commonplace.

Model validation emerges as a complementary focus area, emphasizing techniques that verify a model’s generalizability beyond the training dataset. Candidates must understand and apply holdout validation, k-fold cross-validation, and bootstrap resampling methods to quantify model stability. Interpreting validation metrics such as mean squared error and classification accuracy for logistic models, alongside information criteria such as AIC and BIC, enables informed decisions about model selection and deployment.

Strategic Variable Selection and Feature Engineering

Selecting the most pertinent variables to include in a statistical model is a sophisticated task crucial to preventing overfitting and enhancing predictive accuracy. The exam evaluates candidates’ mastery of selection methodologies including forward selection, backward elimination, and stepwise procedures, each with nuanced advantages and caveats.

An advanced understanding extends to regularization techniques such as ridge regression and LASSO, which impose penalties that shrink coefficients towards zero. LASSO can set less informative coefficients exactly to zero, facilitating simpler and more interpretable models. Additionally, candidates must grasp information criteria like the Akaike and Bayesian Information Criteria, which aid in balancing model complexity against fit.

Feature engineering—the art of transforming raw data into meaningful predictors—is also a critical competency. Analysts must demonstrate skills in handling missing data through imputation methods, detecting and treating outliers, and performing variable transformations like logarithmic scaling or polynomial terms to capture complex relationships. Mastery in these areas significantly impacts model performance and reliability.

Proficiency in Advanced Modeling Techniques

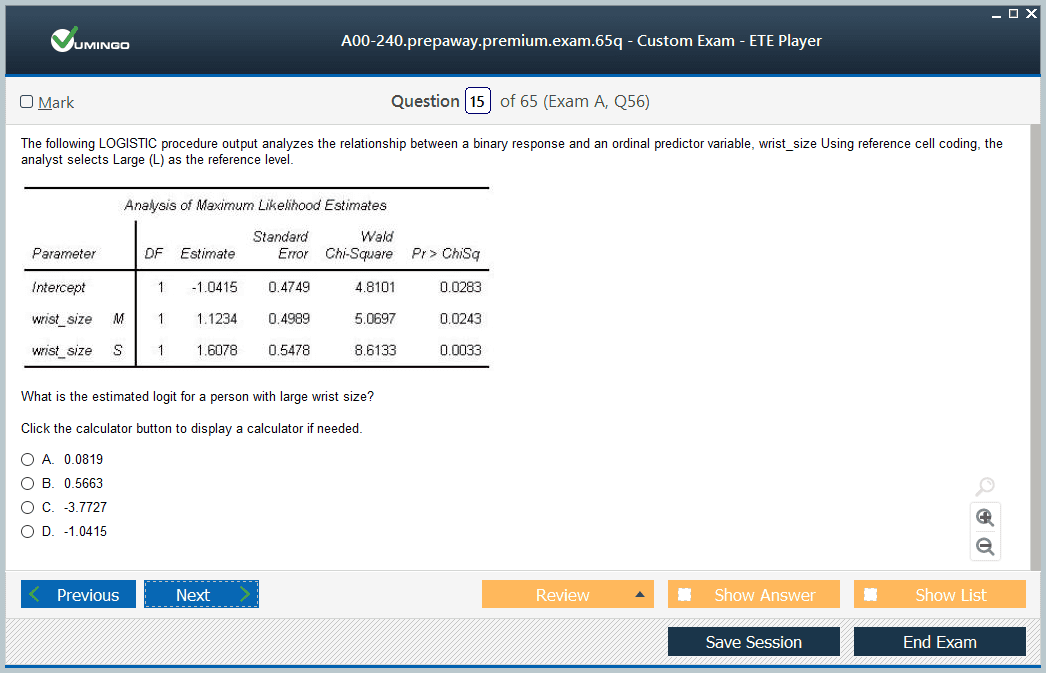

While the exam foregrounds foundational regression analysis, it also explores sophisticated modeling paradigms addressing diverse analytical needs. Logistic regression models, essential for binary classification problems, demand a thorough grasp of log-odds interpretation, odds ratio calculations, and model fit assessment via likelihood ratio tests.

Beyond logistic models, candidates are expected to understand polynomial regression approaches that model non-linear relationships by incorporating higher-degree terms, enabling nuanced curve fitting in complex datasets. Interaction effects modeling, which examines how the relationship between a predictor and the response changes with the level of another variable, represents another advanced concept tested in the examination.

These advanced modeling skills empower analysts to construct flexible and insightful models that align closely with real-world data structures, thereby enhancing predictive accuracy and interpretability within business contexts.

Comprehensive Data Preparation and Exploratory Analysis

Data preparation underpins every successful statistical model. The examination tests candidates on their ability to cleanse datasets, detect anomalies, and prepare features conducive to robust analysis. This includes practices such as normalization, standardization, handling categorical variables through encoding, and addressing missing values strategically.

Exploratory data analysis (EDA) is equally emphasized, requiring candidates to proficiently summarize data characteristics through descriptive statistics, visualize distributions with histograms and boxplots, and assess variable relationships using correlation matrices and scatterplots. These exploratory techniques provide critical insights that inform subsequent modeling decisions, helping analysts identify trends, patterns, and potential data issues early in the process.

Proficiency in SAS tools for data wrangling and visualization, including procedures like PROC MEANS, PROC UNIVARIATE, and PROC SGPLOT, is integral to effective preparation and application.

SAS Programming Skills and Practical Software Competence

SAS programming proficiency forms the backbone of the certification, as candidates must demonstrate the ability to translate statistical theory into executable code. The exam evaluates competence in data step programming, macro utilization for automation, and applying statistical procedures accurately.

Candidates are expected to write efficient SAS code to manipulate data sets, generate summary statistics, and run advanced analytical models using procedures such as PROC REG, PROC LOGISTIC, and PROC GLMSELECT. Mastery of debugging techniques and understanding SAS log messages for error detection significantly enhance programming effectiveness and reduce development time.

Hands-on practice within the SAS environment not only consolidates theoretical learning but also builds confidence to tackle complex exam questions that simulate real-world analytical challenges.

Integrating Business Context and Communication Skills

Effective statistical analysis transcends numerical computation; it requires situating insights within the relevant business milieu. The exam underscores the importance of contextual understanding, prompting candidates to interpret results in alignment with organizational goals, operational constraints, and market dynamics.

Communicating statistical findings clearly and persuasively to diverse audiences, including non-technical stakeholders, is a critical professional skill. Candidates must be able to craft coherent narratives that link analytical results to strategic recommendations, facilitating data-driven decision-making.

Developing this competency involves practicing report writing, presentation skills, and visualization techniques that highlight key findings while avoiding technical jargon, thus ensuring the utility and impact of analytical work.

Time Management, Error Correction, and Continuous Self-Assessment

Given the exam’s comprehensive scope and time-bound nature, effective time management is paramount. Candidates must hone the ability to allocate appropriate time to each section, balancing speed with accuracy. Practice under simulated exam conditions helps build stamina and reduce anxiety.

Moreover, cultivating error recognition skills is essential. The exam often incorporates deliberately flawed data or code snippets, challenging candidates to identify and rectify mistakes. This evaluative ability reflects real-world scenarios where analytical vigilance prevents costly errors.

Continuous self-assessment through regular practice exams and progress tracking allows candidates to identify weaknesses and refine their study focus dynamically. Utilizing analytics dashboards or study journals to monitor performance trends supports adaptive learning, thereby enhancing preparedness and confidence for the certification challenge.

Comprehensive Content Mastery Strategies for Statistical Analysis Excellence

Achieving true mastery in statistical business analysis demands a systematic and holistic approach to content comprehension, where theoretical principles seamlessly intertwine with practical application prowess. The A00-240 certification examination embodies this philosophy by testing candidates across an extensive spectrum of statistical concepts and methodologies, necessitating comprehensive preparation that spans foundational theories to complex analytical techniques. Effective mastery strategies integrate deep conceptual learning, iterative hands-on exercises, and strategic review mechanisms designed to reinforce retention and application skills, enabling candidates to confidently tackle diverse analytical challenges.

At the core of this mastery lies a profound understanding of regression analysis, a fundamental pillar underpinning advanced statistical modeling. The simple linear regression paradigm serves as an entry point, where candidates must grasp the nature of correlation coefficients, interpret slopes and intercepts, and conduct hypothesis testing on regression parameters. Crucially, appreciating the assumptions that sustain regression validity—linearity between independent and dependent variables, independence of observations, homoscedasticity or constant variance of residuals, and normality of errors—is imperative to building sound models. Mastery of these assumptions informs model diagnostics and guides appropriate remedial actions when violations arise.
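
The slope and intercept described above follow directly from sums of squares and cross-products. The following is a minimal sketch in plain Python rather than SAS; the function names and the small advertising/sales data set are hypothetical and chosen only for illustration.

```python
def fit_simple_ols(x, y):
    """Return (intercept, slope) of the least-squares line y = b0 + b1 * x."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    # Sums of squares and cross-products around the means.
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return intercept, slope


def r_squared(x, y, intercept, slope):
    """Share of the variation in y explained by the fitted line."""
    y_bar = sum(y) / len(y)
    sse = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    sst = sum((yi - y_bar) ** 2 for yi in y)
    return 1.0 - sse / sst


# Hypothetical data: advertising spend (x) and sales (y).
ad_spend = [1.0, 2.0, 3.0, 4.0, 5.0]
sales = [2.1, 3.9, 6.2, 7.8, 10.1]

intercept, slope = fit_simple_ols(ad_spend, sales)
fit_quality = r_squared(ad_spend, sales, intercept, slope)
```

With these numbers the slope is 1.99, read as the expected change in sales per one-unit increase in spend, and the intercept is 0.05.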

Advanced Regression Techniques: Multiple and Polynomial Regression

Building upon simple linear regression, multiple regression techniques elevate analytical capacity by accommodating several predictor variables simultaneously, capturing more intricate relationships within data. Candidates must adeptly construct models incorporating multiple independent variables while vigilantly assessing multicollinearity, a condition in which highly intercorrelated predictors inflate the variance of the coefficient estimates. Understanding diagnostic measures such as the Variance Inflation Factor (VIF) becomes critical here. Furthermore, interpreting outputs including partial correlation coefficients, adjusted R-squared values that compensate for model complexity, and standardized regression coefficients for comparing variable influence enhances analytical precision.
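
To make the Variance Inflation Factor concrete, here is a small sketch for the special case of exactly two predictors. In that case the R-squared from regressing one predictor on the other equals their squared Pearson correlation, so VIF = 1 / (1 - r^2). With more predictors, each VIF requires regressing that predictor on all the others, which this sketch does not attempt. The function names and data are hypothetical.

```python
import math


def pearson_r(a, b):
    """Pearson correlation between two equal-length sequences."""
    n = len(a)
    a_bar, b_bar = sum(a) / n, sum(b) / n
    cov = sum((ai - a_bar) * (bi - b_bar) for ai, bi in zip(a, b))
    var_a = sum((ai - a_bar) ** 2 for ai in a)
    var_b = sum((bi - b_bar) ** 2 for bi in b)
    return cov / math.sqrt(var_a * var_b)


def vif_two_predictors(x1, x2):
    """VIF shared by both predictors when the model has exactly two."""
    r = pearson_r(x1, x2)
    return 1.0 / (1.0 - r ** 2)


# Hypothetical predictors with correlation 0.8.
x1 = [1, 2, 3, 4, 5]
x2 = [2, 1, 4, 3, 5]
vif = vif_two_predictors(x1, x2)  # 1 / (1 - 0.64), roughly 2.78
```

A frequently quoted rule of thumb treats VIF values above about 10 as a sign of serious multicollinearity, although the cutoff is a convention rather than a formal test.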

Polynomial regression techniques expand this toolkit by enabling the modeling of non-linear relationships through higher-degree terms and interaction effects. Recognizing when data patterns necessitate quadratic, cubic, or even higher-order polynomial transformations facilitates nuanced model fitting. Interaction terms, representing the combined effect of two or more variables, provide additional flexibility, capturing conditional relationships that linear models overlook. Candidates must not only execute these transformations but also interpret polynomial coefficients carefully, as their meaning diverges from linear counterparts.

Specialized Modeling: Logistic Regression and Model Diagnostics

For scenarios involving binary outcome variables, logistic regression emerges as a specialized technique requiring mastery of unique interpretative frameworks. Candidates must understand the calculation and implication of odds ratios, log-odds transformations, and probability estimations. The concept of maximum likelihood estimation, which underpins parameter determination in logistic models, is central to grasping model behavior. Evaluating model adequacy using goodness-of-fit statistics such as the Hosmer-Lemeshow test, and interpreting pseudo R-squared values, ensures a robust assessment of logistic regression outputs.
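
The link between log-odds, odds ratios, and probabilities can be sketched in a few lines. The coefficients below are hypothetical and are not output from any fitted model; the slope is set to ln(2) so that the odds ratio comes out as 2.

```python
import math


def predicted_probability(intercept, coefs, values):
    """Turn a linear predictor (the log-odds) into a probability."""
    log_odds = intercept + sum(b * v for b, v in zip(coefs, values))
    return 1.0 / (1.0 + math.exp(-log_odds))


def odds_ratio(coef, delta=1.0):
    """Multiplicative change in the odds for a `delta` increase in a predictor."""
    return math.exp(coef * delta)


# Hypothetical coefficients for a single predictor.
b0, b1 = -2.0, math.log(2)

p_at_0 = predicted_probability(b0, [b1], [0.0])
p_at_1 = predicted_probability(b0, [b1], [1.0])

# The ratio of the two odds reproduces exp(b1).
odds_0 = p_at_0 / (1.0 - p_at_0)
odds_1 = p_at_1 / (1.0 - p_at_1)
ratio = odds_1 / odds_0
```

The odds double for each one-unit increase in the predictor, while the change in probability depends on where on the curve the observation sits.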

Equally vital is mastery of model diagnostic procedures that safeguard analytical integrity. Residual analysis examines standardized residuals, leverage statistics identify observations with unusual predictor values, and influence measures like Cook’s distance, DFFITS, and DFBETAS quantify how much a single observation changes the fitted model. Together these tools empower analysts to detect anomalies or outliers that unduly sway model results. Understanding the implications of these diagnostics enables candidates to refine models, enhancing reliability and predictive power.
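
As an illustration of leverage and influence, the sketch below computes hat values and Cook's distance for a simple (one-predictor) regression, where the leverage has the closed form h_i = 1/n + (x_i - x_bar)^2 / Sxx. The function name and data are hypothetical; a multiple regression would need the full hat matrix instead.

```python
def cooks_distance_simple(x, y):
    """Return (leverages, cooks_d) for the simple regression of y on x."""
    n = len(x)
    p = 2  # parameters estimated: intercept and slope
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    intercept = y_bar - slope * x_bar
    residuals = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]
    mse = sum(e ** 2 for e in residuals) / (n - p)
    # Leverage grows with the distance of x_i from the mean of x.
    leverages = [1.0 / n + (xi - x_bar) ** 2 / sxx for xi in x]
    # Cook's distance combines residual size with leverage.
    cooks_d = [
        (e ** 2 / (p * mse)) * (h / (1.0 - h) ** 2)
        for e, h in zip(residuals, leverages)
    ]
    return leverages, cooks_d


# Hypothetical data: the last point sits far from the others in x.
x = [1.0, 2.0, 3.0, 4.0, 10.0]
y = [1.1, 1.9, 3.2, 3.9, 4.0]
leverages, cooks_d = cooks_distance_simple(x, y)
most_influential = cooks_d.index(max(cooks_d))
```

Here the last observation has the largest leverage and by far the largest Cook's distance, even though its raw residual is not the largest. The leverages sum to the number of estimated parameters, which is a quick sanity check.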

Variable Selection Strategies and Cross-Validation Techniques

Optimizing model performance hinges on judicious variable selection methodologies that balance model simplicity with explanatory strength. Forward selection approaches initiate with null models, incrementally incorporating predictors based on statistical significance, while backward elimination starts with comprehensive models, pruning insignificant variables systematically. Stepwise selection synthesizes both paradigms, offering a dynamic route to optimal variable subsets. Familiarity with information criteria such as Akaike Information Criterion (AIC) and Bayesian Information Criterion (BIC) provides quantitative guidance in model comparison and variable selection.

Complementing variable selection are cross-validation techniques that assess model generalizability beyond the sample data. K-fold cross-validation partitions data into subsets, iteratively training and validating models to estimate predictive accuracy robustly. Leave-one-out cross-validation represents an extreme form, training on all but one observation in each iteration and validating on the one held out, while bootstrap methods resample data with replacement to quantify variability. Understanding the bias-variance trade-off inherent in these approaches guides candidates in selecting validation strategies that minimize overfitting while preserving predictive fidelity.
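
The partitioning step of k-fold cross-validation can be sketched as an index generator. This is a minimal illustration with a hypothetical function name; it does not shuffle, so in practice the row order would normally be randomized first. Setting k equal to the number of observations gives leave-one-out cross-validation.

```python
def k_fold_indices(n, k):
    """Yield (train_indices, test_indices) for each of k contiguous folds."""
    # Spread any remainder over the first n % k folds.
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n))
        yield train, test
        start += size


# Ten observations split into three folds of sizes 4, 3, and 3.
folds = list(k_fold_indices(10, 3))

# Leave-one-out: one fold per observation.
loo_folds = list(k_fold_indices(5, 5))
```

Each observation appears in exactly one test fold, so every row is used for validation once and for training k - 1 times.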

Data Transformation, Outlier Treatment, and Handling Missing Data

Practical data challenges often necessitate variable transformations to align with model assumptions. Logarithmic transformations stabilize variance and linearize exponential relationships, while square root and Box-Cox transformations address varying degrees of non-linearity and heteroscedasticity. Selecting appropriate transformations demands analytical discernment, balancing interpretability with model performance.
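
The Box-Cox family mentioned above includes the logarithm as a limiting case. A minimal sketch, with a hypothetical function name, assuming strictly positive inputs and a user-supplied lambda (estimating the best lambda from the data is a separate step not shown here):

```python
import math


def box_cox(values, lam):
    """Box-Cox transform: (v**lam - 1) / lam, or log(v) when lam == 0."""
    if any(v <= 0 for v in values):
        raise ValueError("Box-Cox requires strictly positive values")
    if lam == 0:
        return [math.log(v) for v in values]
    return [(v ** lam - 1.0) / lam for v in values]


# Hypothetical right-skewed values.
revenue = [1.0, 4.0, 9.0, 100.0]
log_scale = box_cox(revenue, 0)      # natural log
sqrt_like = box_cox(revenue, 0.5)    # a rescaled square root
unchanged = box_cox(revenue, 1)      # shifts each value down by 1
```

A lambda of 0.5 behaves like a square root and a lambda of 1 leaves the shape of the data unchanged, which is why the family covers the transformations listed in the paragraph above.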

Outliers, extreme data points that diverge markedly from other observations, can distort statistical inference if unaddressed. Employing statistical detection methods such as z-scores, modified z-scores (based on the median and the median absolute deviation, and therefore less distorted by the extreme values themselves), and interquartile range (IQR) criteria allows for objective identification. Candidates must weigh the decision to retain, transform, or exclude outliers based on their impact on model accuracy and relevance to the underlying population.
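
The three detection rules can be compared side by side. This sketch uses Python's `statistics` module; the function names, the data, and the cutoffs (3 for z-scores, 3.5 with the 0.6745 scaling constant for modified z-scores, 1.5 times the IQR for the fences) are commonly quoted conventions assumed here, not values taken from the exam.

```python
import statistics


def z_score_outliers(values, threshold=3.0):
    """Flag values more than `threshold` sample SDs from the mean."""
    mean = statistics.mean(values)
    sd = statistics.stdev(values)
    return [v for v in values if abs(v - mean) / sd > threshold]


def modified_z_outliers(values, threshold=3.5):
    """Flag values using the median and the median absolute deviation."""
    median = statistics.median(values)
    mad = statistics.median([abs(v - median) for v in values])
    if mad == 0:
        return []  # no spread around the median; the score is undefined
    return [v for v in values if 0.6745 * abs(v - median) / mad > threshold]


def iqr_outliers(values, k=1.5):
    """Flag values beyond k * IQR outside the first and third quartiles."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < lower or v > upper]


# Hypothetical small sample with one extreme value.
data = [10, 12, 11, 13, 12, 11, 10, 95]
by_z = z_score_outliers(data)
by_modified_z = modified_z_outliers(data)
by_iqr = iqr_outliers(data)
```

In this sample the plain z-score rule flags nothing, because the extreme value inflates the mean and standard deviation it is judged against, while the median-based and IQR rules both flag 95.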

Missing data, ubiquitous in real-world datasets, pose unique analytical challenges. Techniques range from complete case analysis, which discards records with missing values, to mean imputation, which replaces missing entries with the average of the observed values. More sophisticated approaches like multiple imputation generate several plausible replacements for each missing entry and pool the resulting analyses, preserving data integrity, reflecting the uncertainty due to missingness, and minimizing bias. Understanding the nature of missingness, whether Missing Completely at Random (MCAR), Missing at Random (MAR), or Not Missing at Random (NMAR), guides the selection of appropriate handling methods.
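
The two simplest strategies can be contrasted in a short sketch. Missing values are represented as `None`, and the function names and records are hypothetical. Multiple imputation is deliberately left out, since it needs a model for the missing values.

```python
def complete_cases(rows):
    """Keep only the rows in which every field is observed."""
    return [row for row in rows if all(v is not None for v in row)]


def mean_impute(column):
    """Replace missing entries with the mean of the observed entries."""
    observed = [v for v in column if v is not None]
    fill = sum(observed) / len(observed)
    return [fill if v is None else v for v in column]


# Hypothetical records: (age, income).
records = [(34, 52000.0), (41, None), (29, 48000.0), (None, 61000.0)]

kept = complete_cases(records)               # two rows survive
incomes = mean_impute([r[1] for r in records])
```

Complete case analysis halves this sample, while mean imputation keeps every row but pulls the imputed values to the center and so understates the variance. Both are reasonable only under fairly strong assumptions about why the values are missing.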

Categorical Variable Modeling and Model Comparison

Incorporating categorical predictors into regression models necessitates specialized encoding methods. Creating dummy variables transforms categorical data into binary indicators, facilitating inclusion in linear frameworks. Strategic selection of reference categories shapes interpretation, while advanced coding schemes such as contrast and effect coding offer nuanced analytical flexibility. Interaction modeling involving categorical variables further enriches explanatory models by capturing context-dependent effects.
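
The difference between dummy (reference) coding and effect coding is easiest to see on a tiny example. The sketch below uses hypothetical function names and column prefixes (`is_`, `eff_`) and builds one indicator per non-reference level.

```python
def dummy_code(values, reference):
    """Reference coding: the reference level is all zeros."""
    levels = sorted(set(values) - {reference})
    return [{f"is_{lvl}": int(v == lvl) for lvl in levels} for v in values]


def effect_code(values, reference):
    """Effect coding: the reference level is -1 in every column."""
    levels = sorted(set(values) - {reference})
    return [
        {f"eff_{lvl}": -1 if v == reference else int(v == lvl) for lvl in levels}
        for v in values
    ]


# Hypothetical categorical predictor with three levels.
region = ["north", "south", "east"]
dummies = dummy_code(region, reference="east")
effects = effect_code(region, reference="east")
```

Under dummy coding each coefficient compares a level with the reference category. Under effect coding each coefficient compares a level with the average across levels, which changes the interpretation of the intercept as well.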

Model comparison methodologies enable analysts to discern the best-fitting models from competing alternatives. Information criteria like AIC and BIC balance goodness-of-fit against model complexity, embodying the parsimony principle that favors simpler models with adequate explanatory power. Adjusted R-squared values complement these criteria by penalizing excessive predictors, ensuring model efficiency without compromising accuracy.
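
For least-squares models, one common way to write these criteria uses the residual sum of squares (RSS), the sample size n, and the number of estimated parameters k. The sketch below assumes that form, which is correct up to an additive constant that cancels when comparing models fitted to the same data; the function names and the two candidate models are hypothetical.

```python
import math


def aic_least_squares(rss, n, k):
    """AIC for a least-squares fit; k counts parameters incl. the intercept."""
    return n * math.log(rss / n) + 2 * k


def bic_least_squares(rss, n, k):
    """BIC uses a log(n) penalty per parameter instead of 2."""
    return n * math.log(rss / n) + k * math.log(n)


def adjusted_r_squared(r2, n, p):
    """Adjusted R-squared; p counts predictors, excluding the intercept."""
    return 1.0 - (1.0 - r2) * (n - 1) / (n - p - 1)


# Hypothetical candidates fitted to the same 100 observations.
n = 100
small = {"rss": 250.0, "k": 3}
large = {"rss": 245.0, "k": 6}

aic_small = aic_least_squares(small["rss"], n, small["k"])
aic_large = aic_least_squares(large["rss"], n, large["k"])
prefer_small = aic_small < aic_large
```

The larger model fits slightly better, but the reduction in RSS does not pay for three extra parameters, so both criteria favor the smaller model. Lower values are better for AIC and BIC; higher is better for adjusted R-squared.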

Rigorous Assumption Testing and Advanced Analytical Techniques

Verifying that regression models meet underlying assumptions is paramount to valid inference. Normality of residuals is assessed via formal tests such as Shapiro-Wilk and Kolmogorov-Smirnov, which detect departures from Gaussian distributions. Homoscedasticity—the constancy of error variance—is evaluated through Breusch-Pagan and White tests, while independence of residuals, critical in time series or clustered data, is examined with Durbin-Watson statistics.
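
Of the tests named above, the Durbin-Watson statistic is simple enough to compute by hand: it is the sum of squared successive differences of the residuals divided by the sum of squared residuals. A minimal sketch with a hypothetical function name and made-up residual sequences:

```python
def durbin_watson(residuals):
    """Durbin-Watson statistic for residuals in time (or sequence) order."""
    numerator = sum(
        (residuals[t] - residuals[t - 1]) ** 2
        for t in range(1, len(residuals))
    )
    denominator = sum(e ** 2 for e in residuals)
    return numerator / denominator


# Residuals that flip sign every step: negative autocorrelation.
alternating = durbin_watson([1.0, -1.0, 1.0, -1.0])   # 3.0

# Residuals that stay on one side in runs: positive autocorrelation.
clustered = durbin_watson([1.0, 1.0, -1.0, -1.0])     # 1.0
```

The statistic ranges from 0 to 4. Values near 2 are consistent with no first-order autocorrelation, values well below 2 point to positive autocorrelation, and values well above 2 point to negative autocorrelation.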

Beyond traditional regression frameworks, advanced analytical methods enhance robustness and flexibility. Regularization techniques such as ridge regression and LASSO incorporate penalty terms that mitigate multicollinearity and promote sparsity in predictor selection, respectively. Robust regression methodologies accommodate violations of assumptions and outliers, safeguarding model reliability. Non-parametric methods, which eschew stringent distributional assumptions, provide alternatives for modeling complex, nonlinear relationships in heterogeneous data landscapes.
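
The different behavior of the two penalties shows up even with a single predictor. The sketch below assumes centered x and y (so no intercept is needed) and the objective scalings stated in the docstrings, under which both estimators have closed forms. The function names and data are hypothetical, and real problems with several predictors need an iterative solver.

```python
import math


def ols_slope(x, y):
    """Unpenalized least-squares slope for centered data."""
    return sum(xi * yi for xi, yi in zip(x, y)) / sum(xi * xi for xi in x)


def ridge_slope(x, y, lam):
    """Minimizes 0.5 * sum((y - b*x)^2) + 0.5 * lam * b^2."""
    sxy = sum(xi * yi for xi, yi in zip(x, y))
    sxx = sum(xi * xi for xi in x)
    return sxy / (sxx + lam)


def lasso_slope(x, y, lam):
    """Minimizes 0.5 * sum((y - b*x)^2) + lam * |b| (soft-thresholding)."""
    sxy = sum(xi * yi for xi, yi in zip(x, y))
    sxx = sum(xi * xi for xi in x)
    shrunk = max(abs(sxy) - lam, 0.0)
    return math.copysign(shrunk, sxy) / sxx


# Hypothetical centered predictor and response.
x = [-2.0, -1.0, 0.0, 1.0, 2.0]
y = [-3.92, -2.12, 0.18, 1.78, 4.08]

b_ols = ols_slope(x, y)            # about 1.99
b_ridge = ridge_slope(x, y, 10.0)  # shrunk, but never exactly zero
b_lasso = lasso_slope(x, y, 25.0)  # penalty exceeds |Sxy|, so exactly zero
```

Ridge scales the coefficient down smoothly, while LASSO subtracts a fixed amount and drops the coefficient to exactly zero once the penalty is large enough. That is the sparsity property referred to in the paragraph above.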

Professional Implementation Framework for Real-World Statistical Applications

Transitioning from theoretical knowledge to practical application embodies a pivotal phase in the professional evolution of statistical analysts. The A00-240 certification examination rigorously evaluates candidates’ abilities to synthesize statistical concepts with real-world business challenges, requiring not only technical proficiency but also keen insight into contextual application and problem-solving acumen. A well-constructed professional implementation framework unites analytical skills, business understanding, and strategic execution to deliver impactful and actionable insights that drive organizational success.

Business Problem Identification and Analytical Formulation

A cornerstone of effective statistical analysis lies in precise business problem identification and formulation. Analysts must adeptly translate broad, often ambiguous business questions into sharply defined analytical objectives that can be empirically investigated through statistical methods. This translation demands a comprehensive understanding of the organizational environment, stakeholder expectations, and the constraints posed by available data and resources. Crafting clear, measurable objectives sets the foundation for targeted analysis, ensuring alignment with strategic goals and facilitating meaningful outcome measurement.

Business problem formulation also involves delineating success criteria, defining key performance indicators, and establishing the scope and limitations of the analysis. By engaging stakeholders early in this process, analysts ensure that the analytical approach remains relevant and actionable, bridging the gap between statistical rigor and business utility. This phase often requires iterative dialogue, refining problem statements to balance ambition with feasibility.

Data Acquisition, Preparation, and Quality Assurance

The integrity and suitability of data significantly influence the validity of any statistical analysis. Data acquisition involves identifying reliable sources, understanding collection methodologies, and assessing data provenance to ensure relevance and accuracy. Analysts must navigate a complex landscape of structured and unstructured data, often integrating disparate datasets to create a comprehensive analytical foundation.

Subsequent data preparation workflows are critical, encompassing cleaning, transformation, and validation processes. These activities address common data issues such as missing values, inconsistencies, duplicate entries, and anomalies, which, if uncorrected, can skew results and erode model credibility. Implementing rigorous quality assurance protocols, including automated validation checks and manual reviews, guarantees data integrity and supports reproducibility.

Documentation of data provenance and preparation steps establishes transparency and facilitates future audits or model retraining. Analysts benefit from leveraging robust data management tools and adhering to industry best practices for data governance, ensuring compliance with organizational policies and regulatory standards.

Exploratory Data Analysis and Insight Extraction

Exploratory Data Analysis (EDA) serves as an indispensable precursor to formal modeling, enabling analysts to uncover intrinsic data characteristics and potential relationships. Employing descriptive statistics such as measures of central tendency and dispersion provides a quantitative snapshot of data distribution, variability, and central values. Visualization techniques—including histograms, box plots, scatterplots, and heatmaps—offer intuitive graphical representations that reveal trends, clusters, and outliers.

Correlation analyses quantify dependencies between variables, with Pearson coefficients capturing linear association and rank-based measures such as Spearman capturing monotonic nonlinear association, guiding feature selection and model specification. Advanced EDA may involve dimensionality reduction techniques like Principal Component Analysis (PCA) to identify underlying latent structures and reduce complexity. Systematic EDA mitigates risks of mis-specification by informing model choice and parameter tuning, ultimately enhancing predictive accuracy and interpretability.

Iterative Model Development and Validation

Effective model development integrates theoretical statistical principles with pragmatic considerations such as computational efficiency, interpretability, and relevance to business goals. Analysts adopt iterative modeling workflows, progressively refining models based on diagnostic feedback and validation outcomes. This cyclical process includes selecting appropriate regression techniques, specifying variables, and testing alternative model forms to balance complexity and parsimony.

Documentation plays a pivotal role in ensuring reproducibility and knowledge transfer, encompassing code annotations, model assumptions, and rationale for methodological choices. Collaborative development environments enhance transparency and facilitate peer review.

Robust validation procedures assess model generalizability and predictive performance using multiple approaches. Holdout validation partitions data into training and test sets to evaluate out-of-sample accuracy. Cross-validation techniques, such as k-fold and leave-one-out methods, provide comprehensive performance metrics by averaging results across multiple partitions. Bootstrap methods further quantify variability by resampling with replacement. Combining these validation strategies offers nuanced insight into model stability and robustness, critical for real-world deployment.
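The k-fold procedure described above can be sketched in a few lines. This is a minimal illustration under stated assumptions: the helper names (`fit_line`, `k_fold_indices`, `cross_validated_mse`) are hypothetical, the model is a one-predictor least-squares line, and the data are a noiseless synthetic line, so the cross-validated error should be essentially zero.

```python
import random


def fit_line(xs, ys):
    """Ordinary least squares for one predictor; returns (intercept, slope)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def k_fold_indices(n, k, seed=0):
    """Shuffle the indices 0..n-1 and deal them into k disjoint folds."""
    indices = list(range(n))
    random.Random(seed).shuffle(indices)
    return [indices[i::k] for i in range(k)]


def cross_validated_mse(xs, ys, k=5, seed=0):
    """Average held-out mean squared error across the k folds."""
    fold_errors = []
    for test_idx in k_fold_indices(len(xs), k, seed):
        held_out = set(test_idx)
        train_idx = [i for i in range(len(xs)) if i not in held_out]
        intercept, slope = fit_line(
            [xs[i] for i in train_idx], [ys[i] for i in train_idx]
        )
        squared = [(ys[i] - (intercept + slope * xs[i])) ** 2 for i in test_idx]
        fold_errors.append(sum(squared) / len(squared))
    return sum(fold_errors) / k


# Synthetic, noiseless data (an assumption made purely for illustration).
xs = [float(i) for i in range(20)]
ys = [2.0 + 3.0 * x for x in xs]
cv_error = cross_validated_mse(xs, ys, k=5)
```

Leave-one-out validation is the special case where `k` equals the number of observations.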

Communication of Statistical Results to Stakeholders

The value of statistical analysis culminates in the effective communication of results to diverse audiences. Analysts must translate complex technical findings into clear, actionable insights that resonate with non-technical stakeholders. Tailoring communication to audience needs involves simplifying jargon, focusing on business implications, and contextualizing results within strategic objectives.

Visualization tools—ranging from simple charts to interactive dashboards—enhance comprehension by distilling data into intuitive formats. Storytelling techniques guide narrative flow, emphasizing key findings, uncertainties, and recommended actions. Effective communication fosters informed decision-making, aligns analytical outcomes with organizational priorities, and supports stakeholder buy-in.

Training sessions and workshops may complement reporting, empowering business users to interpret data independently and promoting data-driven cultures.

Implementation Planning, Monitoring, and Maintenance

Strategic implementation planning ensures the smooth transition of analytical models from development to operational use. Analysts must consider resource allocation, timeline constraints, integration with existing IT infrastructures, and alignment with organizational workflows. Realistic planning addresses potential bottlenecks, such as data latency, system compatibility, and user adoption challenges.

Change management principles guide the introduction of new analytical tools, fostering stakeholder engagement and mitigating resistance. Continuous performance monitoring tracks model accuracy and relevance, detecting degradation due to evolving data patterns or business conditions. Automated monitoring systems facilitate real-time alerts, enabling prompt intervention.

Routine maintenance activities—including model recalibration, retraining, and documentation updates—preserve analytical efficacy and organizational trust. Establishing clear protocols and accountability ensures sustained model performance and compliance.

Ethical Standards, Collaborative Practices, and Professional Development

Ethical considerations permeate all facets of statistical analysis, encompassing responsible data use, privacy protection, and unbiased methodology application. Adherence to ethical guidelines fosters transparency, respects stakeholder rights, and safeguards against misuse or misinterpretation of analytical outputs. Analysts must disclose methodological limitations, acknowledge uncertainties, and avoid overstatement of findings.

Collaborative workflows enhance analytical quality through shared expertise, version control, and coordinated documentation. Embracing project management methodologies streamlines team interactions, facilitates knowledge exchange, and accelerates problem resolution. Leveraging collaborative technologies supports remote and interdisciplinary teams, enhancing productivity and innovation.

Ongoing professional development is vital amid rapidly evolving analytical landscapes. Staying abreast of emerging methodologies, industry trends, and technological advancements empowers analysts to maintain competitive edge. Engagement with professional communities, certifications, and continuing education nurtures expertise and fosters career advancement.

Risk management frameworks identify potential pitfalls—including data quality issues, modeling errors, and stakeholder misalignment—and implement mitigation strategies. Contingency planning ensures analytical projects adapt fluidly to unforeseen challenges, safeguarding project success and organizational value.

Advanced Optimization Techniques for Statistical Model Enhancement

Achieving excellence in statistical analysis hinges on the continuous refinement and optimization of analytical models. The A00-240 certification examination rigorously tests candidates on their proficiency in advanced optimization techniques essential for enhancing model accuracy, robustness, and interpretability. Optimization in this context involves strategic improvements that balance predictive performance with computational efficiency and business relevance. Analysts must master diverse optimization strategies, tailoring approaches to the idiosyncrasies of specific datasets and analytical objectives.

Performance optimization begins with a critical evaluation of algorithmic choices. Selecting appropriate statistical or machine learning models requires understanding the trade-offs between accuracy, computational resource consumption, and model explainability. For instance, while complex ensemble methods may offer superior predictive power, they may sacrifice interpretability and increase computational overhead. Conversely, simpler linear models afford clarity but may underfit complex data structures. Proficient analysts judiciously weigh these factors to identify the most effective methodology within given constraints.

Sophisticated Feature Engineering and Hyperparameter Tuning

Feature engineering remains a linchpin in crafting highly predictive statistical models. Advanced techniques transcend mere data cleaning and basic transformation, encompassing the creation of interaction terms that capture synergistic effects between variables and polynomial features that model nonlinear relationships. Incorporating domain knowledge enriches this process, enabling the construction of predictors that resonate with business realities and augment model explanatory power.

Hyperparameter optimization further refines model performance by systematically adjusting algorithmic settings to identify optimal configurations. Traditional methods such as grid search exhaustively evaluate parameter combinations but can become computationally prohibitive in high-dimensional spaces. Random search offers efficiency gains by evaluating a fixed number of configurations drawn at random from the parameter space rather than enumerating every combination. More sophisticated Bayesian optimization fits a probabilistic surrogate model to past evaluations and uses it to choose the next configuration to try, often reaching good hyperparameters in fewer iterations. Understanding these approaches allows analysts to deploy computational resources effectively while maintaining analytical rigor.
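To make the contrast between grid search and random search concrete, here is a small sketch. Everything in it is an assumption for illustration: `validation_loss` is a hypothetical stand-in for whatever validation metric a real model would produce, and the parameter names `alpha` and `depth` are invented.

```python
import itertools
import random


def validation_loss(alpha, depth):
    """Hypothetical validation error surface with its minimum at alpha=0.1, depth=4."""
    return (alpha - 0.1) ** 2 + 0.01 * (depth - 4) ** 2


def grid_search(alphas, depths):
    """Evaluate every combination on the grid and return the best one."""
    return min(
        itertools.product(alphas, depths), key=lambda p: validation_loss(*p)
    )


def random_search(n_trials, seed=0):
    """Evaluate n_trials configurations sampled at random and return the best one."""
    rng = random.Random(seed)
    candidates = [
        (rng.uniform(0.0, 1.0), rng.randint(1, 8)) for _ in range(n_trials)
    ]
    return min(candidates, key=lambda p: validation_loss(*p))


best_on_grid = grid_search([0.01, 0.1, 1.0], [2, 4, 6])  # 9 evaluations
best_random = random_search(n_trials=50)                  # 50 evaluations
```

The grid cost grows multiplicatively with each added parameter, whereas the random search budget is fixed by `n_trials` regardless of how many parameters are tuned.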

Ensemble Methods and Automated Model Selection Frameworks

Ensemble modeling represents a pinnacle of advanced statistical methodology, combining multiple predictive models to achieve better generalization than a single constituent model typically delivers. Bagging fits the same base learner to multiple bootstrap samples and aggregates the resulting predictions, which reduces variance and stabilizes forecasts. Boosting trains models sequentially, with each new model concentrating on the observations its predecessors predicted poorly, enhancing overall accuracy. Stacking employs meta-models to integrate diverse base learners, synthesizing their strengths into a cohesive predictive system.
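A minimal sketch of bagging, assuming a one-predictor least-squares line as the base learner: each model is fitted to a bootstrap resample and the predictions are averaged. The function names and the synthetic data are hypothetical and serve only to show the mechanics.

```python
import random


def fit_line(xs, ys):
    """Ordinary least squares for one predictor; returns (intercept, slope)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def bagged_predict(xs, ys, x_new, n_models=25, seed=0):
    """Average the predictions of lines fitted to bootstrap resamples."""
    rng = random.Random(seed)
    n = len(xs)
    predictions = []
    for _ in range(n_models):
        # Bootstrap: draw n observations with replacement.
        idx = [rng.randrange(n) for _ in range(n)]
        boot_x = [xs[i] for i in idx]
        boot_y = [ys[i] for i in idx]
        if len(set(boot_x)) < 2:
            continue  # degenerate resample, a line cannot be fitted
        intercept, slope = fit_line(boot_x, boot_y)
        predictions.append(intercept + slope * x_new)
    return sum(predictions) / len(predictions)


# Synthetic, noiseless data (an assumption made purely for illustration).
xs = [float(i) for i in range(20)]
ys = [2.0 + 3.0 * x for x in xs]
prediction = bagged_predict(xs, ys, x_new=10.0)
```

With noiseless data every resample recovers the same line, so the averaged prediction matches the true value; the variance reduction only becomes visible once the targets contain noise.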

Complementing ensemble methods, automated model selection frameworks streamline analytical workflows by reducing manual intervention. Automated procedures for variable selection, hyperparameter tuning, and model comparison facilitate exhaustive evaluation of alternative models. Such automation enhances consistency, reproducibility, and scalability, particularly valuable in enterprise settings with voluminous data and tight deadlines. However, practitioners must remain vigilant regarding automation pitfalls, such as overfitting through excessive parameter tweaking, necessitating thoughtful implementation.

Enhanced Validation Techniques and Bias-Variance Trade-off Analysis

Robust model validation underpins confidence in statistical predictions. Beyond traditional k-fold cross-validation, advanced strategies cater to specific data characteristics and analytical demands. Stratified cross-validation preserves class proportions within folds, critical for imbalanced datasets. Time series cross-validation respects temporal ordering, preventing leakage of future information into training data. Nested cross-validation tunes hyperparameters in an inner loop and estimates performance in an outer loop, so that the reported error is not optimistically biased by the tuning itself.
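The temporal-ordering constraint can be illustrated with an expanding-window splitter, in which every training window ends strictly before its test window begins. The function name and its parameters are hypothetical; this is one common scheme among several, not a prescribed one.

```python
def expanding_window_splits(n_obs, n_splits, test_size):
    """Return (train_indices, test_indices) pairs that never train on the future."""
    splits = []
    for k in range(n_splits):
        # Test windows are laid out back to back at the end of the series.
        test_end = n_obs - (n_splits - 1 - k) * test_size
        test_start = test_end - test_size
        if test_start <= 0:
            raise ValueError("not enough observations for the requested splits")
        train = list(range(0, test_start))
        test = list(range(test_start, test_end))
        splits.append((train, test))
    return splits


# Ten time-ordered observations, three splits of two test points each.
splits = expanding_window_splits(n_obs=10, n_splits=3, test_size=2)
# First split trains on indices 0-3 and tests on 4-5; the last tests on 8-9.
```

Shuffled k-fold would violate this ordering, because later observations could end up in the training set of an earlier test point.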

Central to model optimization is the bias-variance trade-off, a conceptual framework dissecting prediction error into bias, variance, and irreducible noise. High-bias models tend to underfit, oversimplifying data patterns, whereas high-variance models overfit training data, failing to generalize. Mastery of this trade-off guides the selection of model complexity and regularization parameters, facilitating balance between flexibility and generalizability.
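For squared-error loss the decomposition can be written out explicitly. The notation here is an assumption introduced for illustration: the data follow $y = f(x) + \varepsilon$ with $\mathbb{E}[\varepsilon] = 0$ and $\operatorname{Var}(\varepsilon) = \sigma^2$, $\hat{f}$ is the fitted model, and the expectation is taken over training samples and noise at a fixed point $x$.

```latex
\mathbb{E}\big[(y - \hat{f}(x))^2\big]
  = \underbrace{\big(\mathbb{E}[\hat{f}(x)] - f(x)\big)^2}_{\text{bias}^2}
  + \underbrace{\mathbb{E}\big[(\hat{f}(x) - \mathbb{E}[\hat{f}(x)])^2\big]}_{\text{variance}}
  + \underbrace{\sigma^2}_{\text{irreducible noise}}
```

Increasing model flexibility typically lowers the first term and raises the second, while the third term is unaffected by any modeling choice.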

Regularization Techniques and Model Interpretation Approaches

Regularization methods serve as powerful tools to combat overfitting and multicollinearity, enhancing model robustness and interpretability. Ridge regression imposes an L2 penalty that shrinks coefficients toward zero without eliminating them, reducing variance at the cost of some bias. LASSO applies an L1 penalty that can drive some coefficients exactly to zero, thereby performing variable selection and promoting model sparsity. The elastic net combines both penalties, offering a flexible balance suited for correlated predictors.
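The different behavior of the penalties is easiest to see in the special case of an orthonormal design, where each penalized coefficient is a simple function of its least-squares estimate. The sketch below assumes that special case and the objective scaling 0.5 * RSS + penalty, with an L1 penalty of `lam * |beta|` and an L2 penalty of `0.5 * lam * beta**2`; the function names are hypothetical, and general designs require an iterative solver instead.

```python
def ridge_shrink(beta_ols, lam):
    """Ridge under an orthonormal design: proportional shrinkage, never exactly zero."""
    return beta_ols / (1.0 + lam)


def lasso_soft_threshold(beta_ols, lam):
    """LASSO under an orthonormal design: shift toward zero, clip at zero."""
    magnitude = max(abs(beta_ols) - lam, 0.0)
    return magnitude if beta_ols >= 0 else -magnitude


def elastic_net_shrink(beta_ols, lam_l1, lam_l2):
    """Elastic net under an orthonormal design: soft-threshold, then ridge-shrink."""
    return lasso_soft_threshold(beta_ols, lam_l1) / (1.0 + lam_l2)


# Illustrative least-squares estimates (made-up values).
small, large = 0.3, -2.0
ridge_small = ridge_shrink(small, 0.5)          # shrunk but still nonzero
lasso_small = lasso_soft_threshold(small, 0.5)  # removed from the model
lasso_large = lasso_soft_threshold(large, 0.5)  # kept, moved toward zero by 0.5
```

This is why LASSO performs variable selection and ridge does not: soft-thresholding maps every estimate smaller in magnitude than the penalty to zero, whereas proportional shrinkage only rescales it.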

Interpreting complex models, especially those deemed black-boxes, remains critical for extracting actionable business insights. Model-agnostic interpretation tools such as SHAP (SHapley Additive exPlanations) values decompose predictions into contributions from individual features, enabling granular understanding. LIME (Local Interpretable Model-agnostic Explanations) generates locally faithful approximations to explain specific predictions. Permutation importance quantifies feature relevance by measuring prediction degradation when feature values are randomized. Proficiency in these techniques bridges the gap between sophisticated modeling and practical decision-making.
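Of the three techniques, permutation importance is the simplest to sketch without any library. The example below is illustrative only: `model` is a hypothetical fitted predictor that uses the first feature and ignores the second, and the data are synthetic.

```python
import random


def mean_squared_error(model, rows, targets):
    return sum((model(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)


def permutation_importance(model, rows, targets, feature, n_repeats=20, seed=0):
    """Average increase in MSE after shuffling one feature column."""
    rng = random.Random(seed)
    baseline = mean_squared_error(model, rows, targets)
    increases = []
    for _ in range(n_repeats):
        column = [r[feature] for r in rows]
        rng.shuffle(column)
        # Rebuild each row with the shuffled value in place of the original.
        permuted = [
            r[:feature] + [v] + r[feature + 1:] for r, v in zip(rows, column)
        ]
        increases.append(mean_squared_error(model, permuted, targets) - baseline)
    return sum(increases) / n_repeats


def model(row):
    """Hypothetical fitted model: depends on feature 0 only."""
    return 3.0 * row[0]


# Synthetic data (an assumption): the target is driven by feature 0 alone.
rows = [[float(i), float((i * 7) % 10)] for i in range(10)]
targets = [3.0 * r[0] for r in rows]

importance_used = permutation_importance(model, rows, targets, feature=0)
importance_ignored = permutation_importance(model, rows, targets, feature=1)
```

Shuffling the feature the model relies on degrades predictions, while shuffling the ignored feature leaves the error unchanged, so its importance is zero.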

Computational Efficiency and Continuous Monitoring Frameworks

Optimization extends to computational strategies that enhance analytical efficiency and scalability. Vectorization exploits matrix operations to replace iterative loops, accelerating calculations dramatically. Parallel processing distributes computations across multiple cores or nodes, expediting large-scale model training. Efficient memory management minimizes resource bottlenecks, enabling handling of vast datasets without degradation.

Sustaining model performance post-deployment requires continuous monitoring frameworks. Statistical process control charts detect shifts in model behavior indicative of drift. Drift detection algorithms automate identification of data distribution changes, triggering model retraining or recalibration. Performance tracking systems maintain comprehensive logs of key metrics, supporting proactive maintenance and ensuring sustained business value.
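A control-chart style monitor can be sketched as follows, assuming a Shewhart-type rule with limits set three standard deviations either side of a baseline mean. The error values are invented for illustration, and real monitoring would usually add further run rules and a longer baseline.

```python
from statistics import mean, stdev


def control_limits(baseline, n_sigma=3.0):
    """Lower and upper limits derived from a stable baseline period."""
    center = mean(baseline)
    spread = stdev(baseline)
    return center - n_sigma * spread, center + n_sigma * spread


def out_of_control(values, limits):
    """Positions of monitored values that fall outside the limits."""
    lower, upper = limits
    return [i for i, v in enumerate(values) if v < lower or v > upper]


# Hypothetical weekly error rates: a stable baseline, then recent observations.
baseline_error = [0.10, 0.11, 0.09, 0.10, 0.12, 0.10, 0.09, 0.11]
recent_error = [0.10, 0.11, 0.19, 0.20]

limits = control_limits(baseline_error)
flagged = out_of_control(recent_error, limits)  # positions 2 and 3 exceed the upper limit
```

Flagged positions would be the trigger for investigation, recalibration, or retraining.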

Reproducibility and Version Control in Statistical Analysis

Reproducibility is a foundational pillar in statistical analysis, safeguarding scientific integrity by allowing others to verify, replicate, and build upon your work. Employing robust version control systems such as Git enables precise tracking of code alterations, fosters seamless collaboration among geographically dispersed teams, and preserves a meticulous history of methodological evolution. This approach ensures that every decision, from the choice of preprocessing steps to model refinement, remains traceable and transparent. The union of reproducible code and version history cultivates trust and knowledge continuity, even as projects scale or personnel transition.

Version control paired with structured branching workflows like feature branches or experiment-specific versions lets analysts maintain isolation for distinct analytical threads while preserving a coherent mainline. Visualization of diffs highlights parameter adjustments and algorithm tweaks, and integration with collaborative platforms enables peer commentary, code reviews, and automated testing pipelines. By embedding consistent checkpoints and systematic naming conventions, reproducibility becomes inherent rather than an afterthought, setting a foundation for enduring analytical rigor.

Computational Notebooks as Narrative and Code Vessels

Computational notebooks fuse code, outputs, visual artifacts, and narrative exposition into a unified medium. This amalgamation enhances transparency, enabling analysts to chronicle the analytic journey in a literate programming style. Embedded narrative clarifies context, such as why a particular transformation was applied or why a specific model was chosen. Inline visualizations reveal intermediate results dynamically, inviting exploratory inquiry and facilitating debugging. Sharing notebooks fosters knowledge transfer, allowing collaborators to reproduce plots, re-run simulations, and revisit intermediate states even amid evolving datasets or experimental conditions.

Notebooks support modularity and experimentation. Sections can be rerun without reinitializing the entire pipeline, enabling quick iteration and hypothesis testing. Environment-specific metadata, like kernel versions or dependency snapshots, ensures consistency across users. Analysts can export narrative reports or live dashboards directly from notebooks, bridging exploratory analysis and storytelling for stakeholders. The notebook becomes both a lab journal and a communication bridge between data practitioners and decision-makers.

Environment Management for Consistent Analytical Ecosystems

Ensuring that computational environments remain consistent across machines is paramount for reproducible outcomes. Leveraging environment management tools such as virtual environments, containerization solutions, or dependency management systems guarantees that analyses run in identical ecosystems, reducing issues stemming from conflicting packages or outdated versions. Pinning versions of libraries, frameworks, and runtime interpreters helps to avoid inadvertent variation introduced by updates, deprecations, or dependency clashes.

Containerization technologies encapsulate the entire runtime environment, including operating system libraries, system-level requirements, analytical packages, and even custom toolchains. This encapsulation yields portable, immutable analysis stacks. Whether re-executing on a coworker’s workstation, a high-performance cluster, or in the cloud, the preserved environment makes it far more likely that code behaves identically and outputs remain consistent.

Environment management also aids in long-term preservation. Archival of container images or dependency manifests allows future revisit, verification, or replication of results even years later. Teams benefit from well-defined, version-controlled environments that form a critical backbone for reproducible science, analytical governance, and collaborative development.

Rigorous Experimental Design and A/B Testing Frameworks

Establishing sound experimentation frameworks, particularly A/B testing, is essential for quantitatively assessing analytical improvements and business interventions. Designing experiments with deliberate stratification, proper randomization, and clear control groups prevents bias and elevates internal validity. Random assignment of subjects or samples ensures that the only systematic difference between experimental arms is the treatment under evaluation.

Prior to launching experiments, conducting statistical power analysis is indispensable. It calculates the sample size required to detect an effect of interest at a pre-specified power and significance level. This prevents underpowered studies that risk false negatives and overpowered designs that waste resources. By aligning power analysis with anticipated effect sizes and acceptable Type I (false positive) and Type II (false negative) error thresholds, analysts balance rigor with efficiency.
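As a worked example of such a calculation, the sketch below uses the normal-approximation formula for comparing two group means with equal variance, n per group = 2 * ((z_(1 - alpha/2) + z_power) * sd / effect)^2. The function name and the inputs are assumptions for illustration; an exact t-based calculation, such as the one PROC POWER performs in SAS, usually gives a slightly larger answer.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_group(effect, sd, alpha=0.05, power=0.80):
    """Approximate per-group n for a two-sided, two-sample comparison of means."""
    if effect == 0:
        raise ValueError("effect must be nonzero")
    standard_normal = NormalDist()
    z_alpha = standard_normal.inv_cdf(1 - alpha / 2)  # two-sided critical value
    z_power = standard_normal.inv_cdf(power)          # quantile for the target power
    return ceil(2 * ((z_alpha + z_power) * sd / effect) ** 2)


# Detecting a difference of half a standard deviation at 5% significance, 80% power.
n_medium = sample_size_per_group(effect=0.5, sd=1.0)   # 63 per group
n_small = sample_size_per_group(effect=0.25, sd=1.0)   # roughly four times as many
```

Halving the detectable effect roughly quadruples the required sample, which is why the anticipated effect size dominates experiment cost.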

Moreover, when performing multiple comparisons such as testing numerous metrics or subgroup effects, adjustments like Bonferroni correction or controlling the false discovery rate guard against inflated Type I risk. By correcting p-value thresholds or applying procedures such as the Benjamini-Hochberg adjustment, the integrity of experimental conclusions is preserved even in the presence of numerous hypotheses.
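Both adjustments are short enough to sketch directly. The p-values below are invented for illustration and the function names are hypothetical; the Benjamini-Hochberg version implements the standard step-up rule, rejecting every hypothesis up to the largest rank k whose ordered p-value is at most k * alpha / m.

```python
def bonferroni_reject(p_values, alpha=0.05):
    """Reject only p-values at or below alpha divided by the number of tests."""
    m = len(p_values)
    return [p <= alpha / m for p in p_values]


def benjamini_hochberg_reject(p_values, alpha=0.05):
    """Step-up procedure controlling the false discovery rate at level alpha."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    largest_rank = 0
    for rank, i in enumerate(order, start=1):
        if p_values[i] <= rank * alpha / m:
            largest_rank = rank
    reject = [False] * m
    # Reject every hypothesis ranked at or below the largest passing rank.
    for i in order[:largest_rank]:
        reject[i] = True
    return reject


# Hypothetical p-values from five metrics in one experiment.
p_values = [0.045, 0.001, 0.20, 0.012, 0.025]

bonferroni = bonferroni_reject(p_values)   # only the 0.001 result survives
fdr = benjamini_hochberg_reject(p_values)  # 0.001, 0.012 and 0.025 survive
```

Bonferroni controls the chance of any false positive and is therefore stricter; Benjamini-Hochberg tolerates a controlled proportion of false discoveries and retains more findings.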

Experimentation frameworks also support continuous optimization. Incremental testing, multivariate designs, and adaptive experimentation enable organizations to make data-informed decisions rapidly. Mastery of these frameworks ensures statistical robustness while fostering agility in product development and strategic planning.

Scalable Analytical Infrastructure via Cloud and Distributed Systems

Incorporating emerging technological paradigms dramatically enhances analytical capacity, adaptability, and competitive advantage. Cloud computing platforms deliver on-demand infrastructure provisioning, scaling computational resources fluidly to match workload demands. High-performance virtual machines, serverless functions, managed database services, and elastic clusters empower analysts to handle large-scale simulations, model training, or real-time inference without upfront hardware investments.

Complementing cloud elasticity, distributed computing frameworks such as MapReduce-style engines or parallel processing libraries enable efficient handling of massive datasets. By slicing tasks into smaller jobs executed across multiple nodes concurrently, throughput increases and bottlenecks ease. Analysts can distribute data preprocessing, feature engineering, and model training workflows over clusters or managed services, reducing latency and unlocking new classes of insights from expansive or streaming data sources.

Cloud-native orchestration further streamlines deployment. Container clustering services, workflow schedulers, and serverless pipelines allow analytic routines to run reliably and repeatably at scale. Infrastructure-as-code methodologies capture deployments in versioned scripts, tying reproducibility of environment to reproducibility of analysis. This harmonizes infrastructure, code, and strategy into an integrated, scalable, and transparent system, essential for modern data teams operating at velocity.

Specialized Analytical Toolkits and Automated Workflows

Emerging on the technological frontier are specialized tools that amplify analytical potential and reduce manual overhead. Advanced visualization platforms empower compelling narrative through interactive dashboards, geospatial mapping, and multivariate rendering, enhancing stakeholder engagement and interpretability. These tools often integrate dynamic filtering, drill-down capabilities, and custom theming, elevating the clarity of insights.

Automated machine learning platforms democratize the modeling process by automating tasks such as feature selection, hyperparameter tuning, and algorithm selection. By orchestrating search strategies, ensembling, cross-validation, and model evaluation automatically, these platforms accelerate experimental cycles and surface performant models more swiftly. Integration into reproducible pipelines ensures easy comparison between automatically generated models and custom-built alternatives.

Other specialized toolkits include feature stores, model monitoring dashboards, and reproducibility report generators. Feature stores centralize reusable derived data artifacts. Monitoring systems track model drift, performance degradation, and operational anomalies. Reproducibility report generators compile comprehensive records of data lineage, parameter versions, and runtime summaries. These tools form an ecosystem that supports responsible, scalable, and transparent analytical deployment.

As these systems evolve, seamless interoperability becomes a priority. Analysts can automate workflows across data ingestion, transformation, training, deployment, and monitoring, freeing up cognitive bandwidth for strategic exploration and innovation.

Continuous Innovation through Technological Vigilance

Remaining attuned to rapidly evolving technologies enables analysts to stay ahead and cultivate innovation. Exploring nascent paradigms such as distributed ledger-backed provenance tracking, container-driven reproducible deployment, graph-based data lineage visualization, or federated learning models provides avenues to pioneer new methodologies. From adopting infrastructure-as-code trends to deepening use of interactive notebooks with cloud backends, analysts continuously optimize workflows and expand possibilities.

Embracing rare or emerging tools also sharpens responsiveness. Lightweight workflow orchestrators, portability-focused container systems, or compact dependency lock-file tools may not yet be ubiquitous, but they can substantially streamline analytical cycles, reduce friction, and future-proof pipelines. Encouraging analysts to experiment in sandbox environments invites discovery and prepares organizations to adopt breakthroughs early without destabilizing core systems.

By weaving reproducibility, experimentation, and integration of cutting-edge technologies into the analytical fabric, teams achieve not only rigor but also adaptability. Version control, narrative-rich notebooks, managed environments, robust experimentation, scalable infrastructure, specialized tools, and forward-looking innovation practices coalesce into an environment where analysis is reproducible, extensible, and impactful.

This synergy transforms sporadic insights into enduring intelligence. Analysts operate within reproducible pipelines that evolve systematically, experiments yield credible evidence, and infrastructure supports dynamic scaling. Analytical frameworks become living organisms capable of growth, adaptation, and reflection, anchored by integrity and propelled by innovation. In this ecosystem, delivering reliable results and maintaining competitive advantage become inseparable ambitions, manifesting through meticulous design, technological fluency, and relentless curiosity.

Final Thoughts

The SAS Institute A00-240 certification, officially known as the SAS Certified Statistical Business Analyst Using SAS 9: Regression and Modeling credential, stands as a prestigious benchmark for professionals aiming to establish their expertise in statistical analysis, predictive modeling, and the application of advanced analytics using SAS software. Completing this certification journey is not merely about passing an exam—it represents the culmination of disciplined study, deep conceptual understanding, and practical problem-solving ability across a range of data science challenges.

Achieving mastery over the A00-240 syllabus demonstrates a candidate's ability to manipulate data, interpret analytical results, and develop robust statistical models that inform actionable business insights. This isn’t just academic knowledge. It’s a reflection of applied competence in real-world data environments, where outcomes influence strategic decisions, profitability, risk mitigation, and innovation. In today’s data-driven economy, being certified by SAS Institute positions professionals as not just proficient users, but as analytical thinkers capable of leveraging SAS tools to their fullest potential.

The preparation for A00-240 often requires a well-structured approach. Aspirants benefit immensely from a balanced framework that incorporates theoretical study, hands-on practice, mock testing, and real-world applications. Relying solely on memorization or short-term learning techniques will fall short—this certification demands not only accuracy but also an analytical mindset. The exam tests conceptual fluency in regression analysis, model selection, ANOVA, logistic regression, and statistical inference—all areas that require critical thinking, not rote recall.

A successful preparation strategy involves mastering the nuances of PROC REG, PROC GLM, and PROC LOGISTIC, understanding how to interpret statistical outputs, manage multicollinearity, and select appropriate model diagnostics. More importantly, it involves learning how to connect these outputs back to tangible business scenarios—what does a p-value really mean for a marketing strategy? How should an odds ratio influence pricing models? These are the types of insights that separate a certified SAS business analyst from a typical data technician.

However, the journey does not end once the certificate is earned. In fact, earning the A00-240 should be viewed as a powerful launchpad rather than a finish line. With the certification in hand, professionals are well-positioned to dive deeper into the analytics field—progressing into areas like machine learning, data engineering, optimization, and artificial intelligence. The SAS ecosystem is rich with advanced certifications and specialized tools, from SAS Viya to predictive analytics servers and AI-integrated platforms.

Moreover, this certification significantly boosts professional credibility. Employers across industries—from healthcare and finance to retail and logistics—recognize the SAS A00-240 credential as a marker of analytical maturity and technical reliability. Whether pursuing a promotion, transitioning into a new role, or stepping into the competitive freelance analytics space, being SAS-certified opens doors and enhances professional narratives in a highly saturated market.

