
Exam 70-463 Preparation: Essential Study Materials and Insights

Implementing a data warehouse begins with a clear understanding of its architecture, including dimension tables and fact tables. Dimension tables provide context to the business metrics, offering descriptive information that helps in slicing and dicing data. Fact tables store quantitative data for analysis and are linked to dimension tables through key relationships. Mastering the design of these tables is essential because the efficiency of queries, aggregations, and reports depends heavily on proper table structuring. This includes determining granularity, handling slowly changing dimensions, and planning for star and snowflake schema implementations. The interplay between fact and dimension tables also impacts indexing strategies and overall system performance.
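A minimal star schema makes the fact/dimension split concrete. The sketch below uses Python's built-in `sqlite3` as a stand-in for SQL Server; the table and column names (`DimProduct`, `DimDate`, `FactSales`) are hypothetical. The fact table's grain is one row per product per day, and queries slice its measures by dimension attributes.

```python
import sqlite3

# In-memory database standing in for the warehouse (illustrative only).
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE DimProduct (
    ProductKey  INTEGER PRIMARY KEY,   -- surrogate key
    ProductCode TEXT NOT NULL,         -- business (natural) key
    Category    TEXT NOT NULL
);
CREATE TABLE DimDate (
    DateKey INTEGER PRIMARY KEY,       -- e.g. 20240115
    Year    INTEGER NOT NULL,
    Month   INTEGER NOT NULL
);
-- Grain: one row per product per day.
CREATE TABLE FactSales (
    DateKey    INTEGER NOT NULL REFERENCES DimDate(DateKey),
    ProductKey INTEGER NOT NULL REFERENCES DimProduct(ProductKey),
    Quantity   INTEGER NOT NULL,
    Amount     REAL    NOT NULL
);
""")
conn.executemany("INSERT INTO DimProduct VALUES (?, ?, ?)",
                 [(1, "P-100", "Bikes"), (2, "P-200", "Helmets")])
conn.executemany("INSERT INTO DimDate VALUES (?, ?, ?)",
                 [(20240115, 2024, 1), (20240210, 2024, 2)])
conn.executemany("INSERT INTO FactSales VALUES (?, ?, ?, ?)",
                 [(20240115, 1, 2, 1000.0),
                  (20240115, 2, 5, 250.0),
                  (20240210, 1, 1, 500.0)])

# Slice the fact measures by attributes from two dimensions.
rows = conn.execute("""
    SELECT d.Month, p.Category, SUM(f.Amount)
    FROM FactSales AS f
    JOIN DimDate    AS d ON d.DateKey = f.DateKey
    JOIN DimProduct AS p ON p.ProductKey = f.ProductKey
    GROUP BY d.Month, p.Category
    ORDER BY d.Month, p.Category
""").fetchall()
print(rows)  # [(1, 'Bikes', 1000.0), (1, 'Helmets', 250.0), (2, 'Bikes', 500.0)]
```

A snowflake schema would further normalize `DimProduct` (for example, moving `Category` into its own table), trading an extra join for less redundancy.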

Extracting and Transforming Data

A significant part of data warehouse implementation is the extraction, transformation, and loading of data from multiple sources. Integration Services provides tools to design robust ETL processes, including connection management, data flow design, and transformation components. Designing data flows involves mapping source data to target structures, applying transformations to clean or aggregate data, and ensuring that error handling is incorporated. This phase often requires implementing complex transformations such as derived columns, conditional splits, and lookups, ensuring data integrity and consistency throughout the process. Understanding how these components interact is critical for managing large datasets efficiently.
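The three transformations named above can be sketched in plain Python to show what each one does to a row. This is not SSIS code; the row layout, the `customer_keys` reference set, and the output names are hypothetical.

```python
# Hypothetical source rows and a lookup reference set.
source_rows = [
    {"order_id": 1, "customer_code": "C01", "qty": 3, "unit_price": 10.0},
    {"order_id": 2, "customer_code": "C99", "qty": 1, "unit_price": 99.0},
    {"order_id": 3, "customer_code": "C02", "qty": 0, "unit_price": 5.0},
]
customer_keys = {"C01": 101, "C02": 102}  # business key -> surrogate key

matched, no_match, zero_qty = [], [], []
for row in source_rows:
    # Derived column: compute a new column from existing ones.
    row = {**row, "line_total": row["qty"] * row["unit_price"]}
    # Conditional split: route rows to different outputs by a predicate.
    if row["qty"] <= 0:
        zero_qty.append(row)
        continue
    # Lookup: resolve the surrogate key; unmatched rows go to a separate
    # output instead of failing the whole flow.
    key = customer_keys.get(row["customer_code"])
    if key is None:
        no_match.append(row)
    else:
        matched.append({**row, "customer_key": key})
```

Routing unmatched rows to their own output mirrors the choice SSIS offers for a Lookup's no-match rows, which keeps one bad reference from stopping the load.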

Loading and Managing Data

Once data is transformed, it must be efficiently loaded into the warehouse. Control flow in ETL packages coordinates tasks such as executing SQL commands, performing file operations, and managing dependencies between processes. Using variables and parameters within packages enhances flexibility and allows for dynamic execution based on runtime conditions. Proper use of package templates, checkpoints, and error handling mechanisms ensures reliability and enables the resumption of tasks in case of failures. The loading process also involves designing incremental data loads, handling late-arriving data, and implementing partitioned data structures to optimize query performance.
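In SSIS, checkpoints are configured through package properties such as `CheckpointFileName`, `CheckpointUsage`, and `SaveCheckpoints`. The Python sketch below only mimics the idea under that assumption: completed task names are written to a checkpoint file, a rerun skips them, and the file is deleted after a fully successful run. The task names are hypothetical.

```python
import json
import os
import tempfile

def run_package(tasks, checkpoint_path):
    """Run (name, callable) tasks in order, resuming from a checkpoint file.

    A task that raises stops the run and leaves the checkpoint in place, so the
    next run restarts after the last successful task. On success the file is
    removed so that a fresh run starts from the beginning.
    """
    done = []
    if os.path.exists(checkpoint_path):
        with open(checkpoint_path) as f:
            done = json.load(f)
    executed = []
    for name, task in tasks:
        if name in done:
            continue  # completed during an earlier, failed run
        task()
        executed.append(name)
        done.append(name)
        with open(checkpoint_path, "w") as f:
            json.dump(done, f)
    if os.path.exists(checkpoint_path):
        os.remove(checkpoint_path)
    return executed

attempts = {"load": 0}
def flaky_load():
    attempts["load"] += 1
    if attempts["load"] == 1:
        raise RuntimeError("simulated failure")

tasks = [("truncate_staging", lambda: None),
         ("load_staging", flaky_load),
         ("merge", lambda: None)]
path = os.path.join(tempfile.mkdtemp(), "package.chk")
try:
    run_package(tasks, path)       # fails in load_staging
except RuntimeError:
    pass
second_run = run_package(tasks, path)
print(second_run)  # ['load_staging', 'merge']
```

Note that `truncate_staging` is not repeated on the second run; a real package needs its restartable tasks to be safe to rerun from that point.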

Configuring and Deploying Solutions

Deploying ETL packages involves configuring execution environments, security settings, and monitoring mechanisms. Troubleshooting integration processes requires understanding logging, auditing, and event handling features to quickly identify and resolve issues. Administrators must ensure that deployed packages run consistently, handle exceptions properly, and maintain security compliance. Execution monitoring and performance tuning are critical to ensuring that data is delivered accurately and efficiently to the warehouse. Familiarity with different deployment models and execution options allows for flexibility in meeting business requirements while maintaining control over the ETL process.

Data Quality and Master Data Management

Data quality and master data management are essential for reliable reporting and analytics. Data Quality Services provides tools for profiling, cleansing, and standardizing incoming data, while Master Data Services centralizes the management of key business entities. Implementing these services ensures consistency and accuracy across multiple data sources and warehouse applications. It allows the creation of reusable and governable data resources that support enterprise-level analytics. Data profiling, cleansing rules, and knowledge base creation are essential activities to maintain high data quality. Understanding how to integrate these services with ETL packages and warehouse structures is vital for building robust, maintainable solutions.

Integration of Components

Effective data warehouse solutions require seamless integration between design, ETL, and data quality components. Dimension and fact tables must be designed to support intended analyses while accommodating future changes. ETL processes must be optimized to handle varying data volumes and transformations efficiently. Data quality rules must be applied consistently to prevent inaccurate or inconsistent data from entering the warehouse. Mastery of these integrations ensures that each component complements the others, resulting in a system that is scalable, maintainable, and capable of supporting complex analytical queries.

Practical Implementation Considerations

Hands-on experience with real data sets is critical for mastering warehouse implementation. Creating sample ETL packages, simulating various data scenarios, and testing error handling improve understanding of complex workflows. Performance testing, optimization of queries, and monitoring of package execution highlight potential bottlenecks and help develop strategies for mitigating them. Applying appropriate indexing strategies, partitioning, and aggregation techniques enhances system performance. Implementing reusable components and scripts increases development efficiency and ensures consistency across multiple packages and projects.

Advanced ETL Techniques

Advanced ETL techniques, including handling slowly changing dimensions, incremental loads, and dynamic transformations, are essential for efficient warehouse operation. Scripts and custom components may be required for specific business logic or complex data transformations. Implementing error handling, logging, and auditing ensures data integrity and traceability. Understanding the implications of transaction control, checkpoint usage, and parallel execution allows ETL processes to scale effectively. These techniques ensure that the warehouse remains performant and reliable as data volumes and complexity grow.

Monitoring and Optimization

Monitoring ETL processes and warehouse performance is critical for ongoing reliability. Using logging and auditing mechanisms helps detect errors and performance bottlenecks. Optimization strategies include tuning transformations, managing memory allocation, and adjusting execution flow to maximize throughput. Identifying potential failures in advance and implementing proactive solutions prevents downtime and data inconsistencies. Continuous performance evaluation ensures that warehouse solutions remain efficient and meet business requirements.

Comprehensive Knowledge and Integration

Building a successful data warehouse solution requires comprehensive knowledge of all components, their configuration, and interactions. Understanding dimension and fact table design, ETL workflows, data quality, and master data management is essential. Mastery of Integration Services, including data and control flows, variables, parameters, and deployment models, provides the foundation for efficient ETL execution. Integrating these components with data quality and governance frameworks ensures consistent, accurate, and usable data throughout the warehouse.

Preparing for Implementation Scenarios

Candidates should focus on practical exercises that replicate real-world data scenarios. This includes designing dimension and fact tables, creating ETL packages with complex transformations, implementing data quality rules, and managing master data. Testing incremental loads, handling late-arriving data, and troubleshooting execution errors provide insight into operational challenges. Developing a systematic approach to problem-solving, package optimization, and integration ensures readiness for handling large-scale warehouse implementations.

Holistic Approach to Data Warehouse Solutions

The key to successful warehouse implementation is a holistic approach that connects design, ETL, and data management components. Dimension tables must align with fact tables to support analytical queries efficiently. ETL processes must handle diverse data sources while maintaining quality and integrity. Data quality and master data services ensure consistent, accurate, and reliable data for reporting and analysis. Understanding the relationships and dependencies between all components ensures that warehouse solutions are scalable, maintainable, and capable of supporting evolving business requirements.

Preparation for implementing a data warehouse involves more than memorizing features or following tutorials. It requires a deep understanding of data warehouse architecture, ETL processes, data quality management, and master data governance. Practical experience, hands-on exercises, and scenario-based testing solidify theoretical knowledge and ensure proficiency. By focusing on each component individually and understanding how they interact, candidates can build robust, efficient, and reliable data warehouse solutions capable of supporting complex business intelligence and analytical needs.

Advanced Data Flow Design

Designing efficient data flows is a cornerstone of implementing a data warehouse. A data flow defines the movement of data from source systems to destination structures while applying necessary transformations. This process involves selecting appropriate source connections, mapping fields to target tables, and applying transformations to clean, aggregate, or reshape the data. Efficient data flow design also considers the order of operations, memory allocation, and parallel execution to maximize performance. Understanding how different transformation components, such as lookups, derived columns, conditional splits, and aggregations, interact within the flow is crucial for designing robust ETL solutions that scale with growing data volumes.

Connection Management and Source Integration

Managing connections to various data sources is an essential part of ETL processes. Connection managers provide a centralized way to define, configure, and reuse source and destination connections. They support diverse source types, including relational databases, flat files, and external services. Proper connection management ensures consistency, simplifies package maintenance, and facilitates dynamic configurations during runtime. It also allows for error handling and logging to capture connectivity issues, helping maintain data flow integrity. Integrating multiple sources efficiently requires knowledge of data source behaviors, transaction handling, and the implications of batch versus real-time extraction strategies.

Transformation Techniques

Transformations modify data as it moves from source to destination to meet analytical or reporting requirements. Basic transformations include data type conversions, trimming whitespace, and applying conditional logic. More advanced transformations involve handling slowly changing dimensions, implementing surrogate keys, aggregating measures, and resolving duplicate records. Script components and custom logic enable specialized transformations that cannot be accomplished with built-in tools. Understanding when to use each transformation type and optimizing them for performance is critical to ensuring the ETL process is both accurate and efficient.
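Surrogate key assignment can be sketched as a mapping from business keys to warehouse-generated integers. This Python sketch assumes an in-memory `key_map` (hypothetical); in SQL Server the same job is usually done by an `IDENTITY` column or a sequence. Existing keys never change, and new members get the next free value.

```python
def assign_surrogate_keys(key_map, business_keys):
    """Return a surrogate key per business key, minting new keys as needed.

    key_map: existing business key -> surrogate key mapping (updated in place).
    New keys continue from the current maximum, so existing keys stay stable.
    """
    next_key = max(key_map.values(), default=0) + 1
    result = []
    for bk in business_keys:
        if bk not in key_map:
            key_map[bk] = next_key
            next_key += 1
        result.append(key_map[bk])
    return result

existing = {"CUST-A": 1, "CUST-B": 2}
keys = assign_surrogate_keys(existing, ["CUST-B", "CUST-C", "CUST-A", "CUST-C"])
print(keys)  # [2, 3, 1, 3]
```

Because duplicate business keys in the same batch resolve to one surrogate key, the same step also helps with resolving duplicate records.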

Implementing Control Flow

Control flow orchestrates the sequence of tasks within ETL packages. It defines dependencies, execution order, and conditional processing, ensuring that tasks execute in a controlled and predictable manner. Using sequence containers, loops, and precedence constraints allows for modular, maintainable packages. Control flow also manages transactions, checkpoints, and error handling, providing resilience against failures and enabling recovery without reprocessing the entire data set. Mastery of control flow design is essential for coordinating complex ETL processes and ensuring that dependencies between tasks do not compromise data integrity or performance.
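Precedence constraints can be modelled as edges labelled with the outcome that must occur before the next task runs. The Python sketch below is an assumption-laden illustration, not SSIS: it orders tasks with the standard library's `graphlib` (Python 3.9+) and requires all incoming constraints to be met (logical AND). Task names are hypothetical.

```python
from graphlib import TopologicalSorter  # Python 3.9+

def run_control_flow(tasks, constraints):
    """Run tasks honouring (predecessor, successor, outcome) constraints.

    outcome is "success" or "failure"; a successor runs only if every
    constraint pointing at it is satisfied. Returns name -> status.
    """
    graph = {name: set() for name in tasks}
    for pred, succ, _ in constraints:
        graph[succ].add(pred)
    status = {}
    for name in TopologicalSorter(graph).static_order():
        incoming = [(p, o) for p, s, o in constraints if s == name]
        if all(status.get(p) == o for p, o in incoming):
            try:
                tasks[name]()
                status[name] = "success"
            except Exception:
                status[name] = "failure"
        else:
            status[name] = "skipped"
    return status

def failing_load():
    raise RuntimeError("load failed")

status = run_control_flow(
    {"extract": lambda: None, "load": failing_load,
     "notify_failure": lambda: None, "archive": lambda: None},
    [("extract", "load", "success"),
     ("load", "notify_failure", "failure"),   # runs only when load fails
     ("load", "archive", "success")],         # skipped when load fails
)
print(status)
```

Here `notify_failure` runs and `archive` is skipped, which is the typical pattern for routing a package down an alternate branch when a task fails.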

Error Handling and Logging

Comprehensive error handling and logging are vital for maintaining data quality and operational reliability. Packages should capture errors at both the data flow and control flow levels, redirecting invalid data to error-handling destinations for review and correction. Logging mechanisms record package execution details, including task durations, success or failure status, and exceptions encountered. These logs provide visibility into the ETL process, facilitate troubleshooting, and help optimize performance by identifying bottlenecks. Designing robust logging and error handling is especially important for large-scale data warehouse implementations where undetected errors can have significant downstream impacts.
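Redirecting bad rows instead of failing the whole load can be sketched as follows. This is plain Python, not an SSIS error output; the column names and sample data are hypothetical. Each rejected row keeps its position and an error description so it can be reviewed and corrected later.

```python
from datetime import date

def convert_rows(raw_rows):
    """Convert raw text rows, redirecting rows that fail conversion."""
    good, errors = [], []
    for line_no, raw in enumerate(raw_rows, start=1):
        try:
            order_date = date.fromisoformat(raw["order_date"])
            amount = float(raw["amount"])
            good.append({"order_date": order_date, "amount": amount})
        except (KeyError, ValueError) as exc:
            # Keep the original row plus a reason, like an error output.
            errors.append({"line": line_no, "row": raw, "error": str(exc)})
    return good, errors

good, errors = convert_rows([
    {"order_date": "2024-01-15", "amount": "19.99"},
    {"order_date": "2024-13-01", "amount": "5.00"},   # invalid month
    {"order_date": "2024-02-01", "amount": "n/a"},    # not a number
])
print(len(good), [e["line"] for e in errors])  # 1 [2, 3]
```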

Deployment and Configuration

Deploying ETL solutions involves configuring execution environments, security settings, and scheduling mechanisms. Packages must be tested for consistent behavior across different environments, ensuring that variables, parameters, and connection configurations are correctly applied. SQL Server 2012 and later support two deployment models: the project deployment model, which deploys a whole project to the SSIS catalog (the SSISDB database) for centralized management, and the legacy package deployment model, which deploys individual packages to the file system or the msdb database. Proper deployment also includes managing security access, versioning, and backup strategies to prevent unauthorized changes and ensure business continuity. Configuration flexibility allows packages to adapt to changes in source systems or business requirements without requiring extensive redesign.

Incremental Loading Strategies

Incremental loading improves ETL efficiency by processing only data that has changed since the last load. Techniques include using change tracking, timestamp comparisons, and source-specific mechanisms to identify new or modified records. Incremental loads reduce resource consumption, minimize execution time, and allow for more frequent data refreshes. Designing incremental processes requires careful planning to maintain referential integrity, handle late-arriving data, and avoid duplication. Implementing reusable components and templates for incremental loads ensures consistency across multiple packages and data sources, enabling scalable warehouse operations.
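The timestamp-comparison technique can be sketched with a stored high-water mark. This Python sketch uses `sqlite3` as a stand-in source and assumes a hypothetical `Orders` table with an ISO-8601 `ModifiedAt` column; in practice the watermark would be persisted in a control table between runs.

```python
import sqlite3

src = sqlite3.connect(":memory:")
src.execute("CREATE TABLE Orders (OrderID INTEGER PRIMARY KEY, Amount REAL, ModifiedAt TEXT)")
src.executemany("INSERT INTO Orders VALUES (?, ?, ?)", [
    (1, 10.0, "2024-01-01T08:00:00"),
    (2, 20.0, "2024-01-02T09:30:00"),
    (3, 30.0, "2024-01-03T11:15:00"),
])

def extract_changes(conn, last_watermark):
    """Return rows modified after the watermark, plus the new watermark."""
    rows = conn.execute(
        "SELECT OrderID, Amount, ModifiedAt FROM Orders "
        "WHERE ModifiedAt > ? ORDER BY ModifiedAt",
        (last_watermark,),
    ).fetchall()
    new_watermark = rows[-1][2] if rows else last_watermark
    return rows, new_watermark

# First incremental run: everything after the previous load.
batch, watermark = extract_changes(src, "2024-01-01T23:59:59")

# A later change to an old row is picked up by the next run.
src.execute("UPDATE Orders SET Amount = 12.0, ModifiedAt = '2024-01-04T07:00:00' "
            "WHERE OrderID = 1")
batch2, watermark = extract_changes(src, watermark)
```

A strict `>` comparison can miss rows committed later with a timestamp equal to the watermark, which is one reason SQL Server's change tracking or change data capture is often preferred when available.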

Performance Optimization

Optimizing ETL performance is critical for large-scale data warehouse environments. This includes tuning transformations, parallelizing data flows, and managing memory usage. Indexing strategies, partitioning, and batch processing can significantly improve load times and query performance. Performance monitoring involves analyzing task execution times, identifying bottlenecks, and applying optimization techniques such as adjusting buffer sizes, optimizing lookup operations, and reducing unnecessary data movement. Continuous performance assessment ensures that ETL processes remain efficient as data volume and complexity increase, maintaining the responsiveness of analytical queries.

Data Quality Integration

Integrating data quality management into ETL processes ensures that warehouse data is accurate, consistent, and reliable. Data profiling identifies anomalies, inconsistencies, and missing values. Cleansing operations correct or standardize data before it reaches target structures, reducing downstream errors and improving analytical reliability. Master data management supports consistent definitions of key business entities across multiple systems, enabling accurate reporting and analysis. Embedding data quality checks into ETL workflows prevents propagation of incorrect data, ensures compliance with governance standards, and enhances overall trust in the warehouse environment.
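Data Quality Services performs profiling and cleansing through knowledge bases; the Python sketch below only illustrates the underlying ideas with hypothetical data. It profiles a column (null ratio, distinct count, most frequent value), flags values that fail a deliberately simple email pattern, and standardizes country variants through a small synonym map.

```python
import re
from collections import Counter

rows = [
    {"email": "ann@example.com", "country": "US"},
    {"email": None,              "country": "usa"},
    {"email": "bob[at]example",  "country": "US"},
    {"email": "ann@example.com", "country": "U.S."},
]

EMAIL = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")  # deliberately simple check

def profile(rows, column):
    """Basic column profile: null ratio, distinct count, most common value."""
    values = [r[column] for r in rows]
    present = [v for v in values if v is not None]
    return {
        "null_ratio": 1 - len(present) / len(values),
        "distinct": len(set(present)),
        "top": Counter(present).most_common(1),
    }

email_profile = profile(rows, "email")
invalid_emails = [r["email"] for r in rows
                  if r["email"] is not None and not EMAIL.match(r["email"])]

# Cleansing: map known variants to a standard value (a tiny stand-in for a
# knowledge-base domain with synonyms).
COUNTRY_SYNONYMS = {"usa": "US", "u.s.": "US", "us": "US"}
cleansed = [{**r, "country": COUNTRY_SYNONYMS.get(r["country"].lower(), r["country"])}
            for r in rows]
```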

Automation and Scheduling

Automation and scheduling are necessary for operational efficiency. ETL processes should be scheduled to run at optimal times, considering source system availability and business requirements. Automated execution reduces manual intervention, ensures timely data delivery, and maintains consistency across multiple runs. Integration with job scheduling systems or orchestration tools allows for monitoring, retry mechanisms, and notification on failure. Proper scheduling strategies also help balance workloads, reduce resource contention, and maintain predictable system performance.

Advanced Scripting and Custom Components

Complex business logic often requires advanced scripting and custom ETL components. Script tasks and script components enable manipulation of data that cannot be handled by standard transformations, such as specialized calculations, integration with external services, or dynamic metadata handling. Developing reusable custom components enhances maintainability and ensures consistency across multiple packages. Understanding how to debug, test, and optimize scripts is essential for reliable ETL execution and effective integration with standard transformations.

Comprehensive ETL Strategy

A successful data warehouse implementation relies on a comprehensive ETL strategy that integrates data extraction, transformation, and loading with quality management, error handling, and performance optimization. Designing modular, reusable components, leveraging parallel processing, and implementing robust logging and auditing ensure reliability and scalability. Aligning ETL workflows with business requirements, source system constraints, and target reporting needs ensures that the warehouse supports analytical objectives effectively.

Real-World Implementation Practices

Practical experience reinforces theoretical knowledge. Building sample ETL packages, simulating various data scenarios, and testing error handling help identify potential issues early. Performance testing, optimization, and monitoring enhance understanding of real-world challenges. Implementing reusable templates and modular design patterns accelerates development and simplifies maintenance. Documenting processes, transformations, and data lineage ensures clarity for future updates and collaboration across teams.

Integration of Data Management and ETL

Integrating master data management and data quality processes with ETL workflows provides a unified approach to maintaining accurate, consistent, and reliable data. Dimension and fact table designs align with quality rules, cleansing operations, and governance standards. ETL processes incorporate validation, error handling, and logging to enforce these standards consistently. This integration ensures that analytical outputs are based on trustworthy data and reduces the risk of inconsistencies across reporting and decision-making systems.

Future-Proofing Data Warehouse Solutions

Future-proofing a data warehouse involves designing flexible, scalable ETL processes that accommodate growth in data volume, complexity, and business requirements. This includes using modular package design, dynamic configuration, parameterization, and automated monitoring. Anticipating changes in source systems, integrating new data sources, and planning for schema evolution ensure long-term maintainability. Proactive performance tuning and periodic review of data quality rules sustain efficiency and reliability over time.

Continuous Learning and Skill Development

Mastery of data warehouse implementation requires continuous learning and practical skill development. Keeping abreast of new features, best practices, and optimization techniques ensures that ETL processes remain efficient and relevant. Hands-on practice with complex transformations, data profiling, incremental loads, and error handling strengthens understanding. Documenting lessons learned, analyzing performance metrics, and refining workflows contribute to building expertise and preparing for complex implementation scenarios.

Holistic Understanding of Data Warehouse Ecosystem

A holistic understanding encompasses dimension and fact table design, ETL execution, data quality management, and master data governance. Each component is interdependent, and successful implementation requires knowledge of how changes in one area affect the others. Designing with scalability, maintainability, and reliability in mind ensures that the data warehouse can support advanced analytics, reporting, and decision-making. Integrating these components seamlessly results in a cohesive system that meets business intelligence objectives efficiently.

Developing a high-performing data warehouse requires attention to architectural design, ETL process efficiency, data quality, and operational management. By integrating robust control flows, advanced transformations, comprehensive error handling, and automated execution, organizations can ensure accurate and timely data delivery. A deep understanding of data modeling, ETL principles, and governance frameworks supports scalable, maintainable, and reliable warehouse solutions capable of adapting to evolving business needs.

Advanced Control Flow Management

Control flow is the backbone of orchestrating ETL processes within a data warehouse environment. It manages the sequence and dependencies of tasks, enabling complex workflows to execute reliably. Tasks can be grouped into sequence containers for modularity, loops can handle repetitive operations, and precedence constraints ensure that execution follows defined conditions. Utilizing these components allows developers to implement transactional controls, checkpoints for resuming interrupted packages, and structured error handling. Mastery of control flow is essential for building robust, maintainable, and scalable ETL processes that handle complex data movements efficiently.

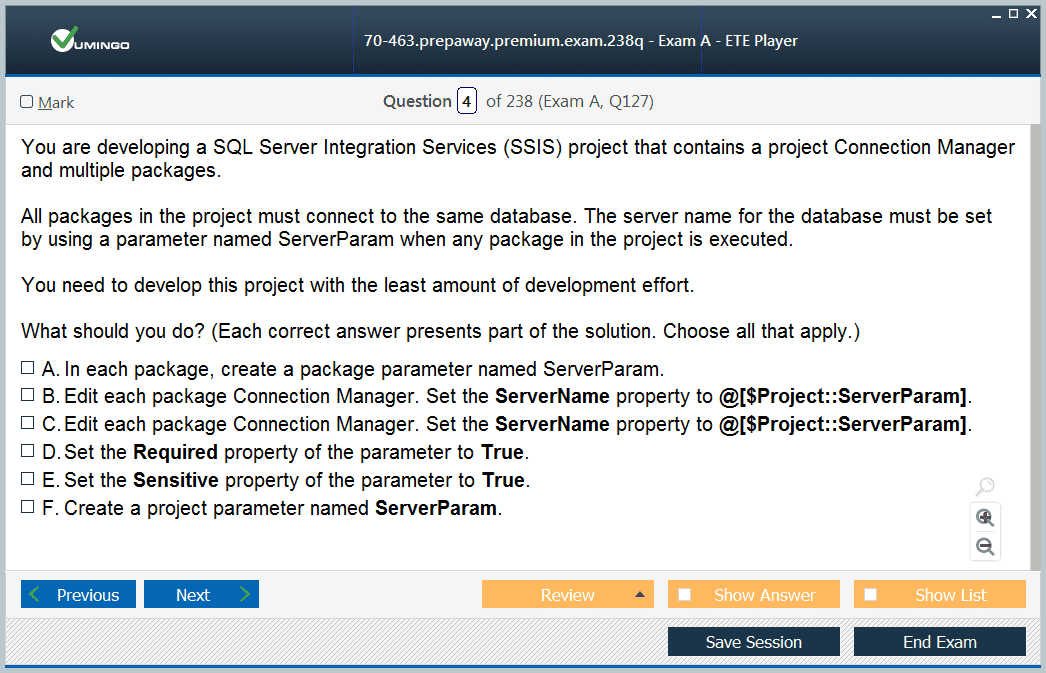

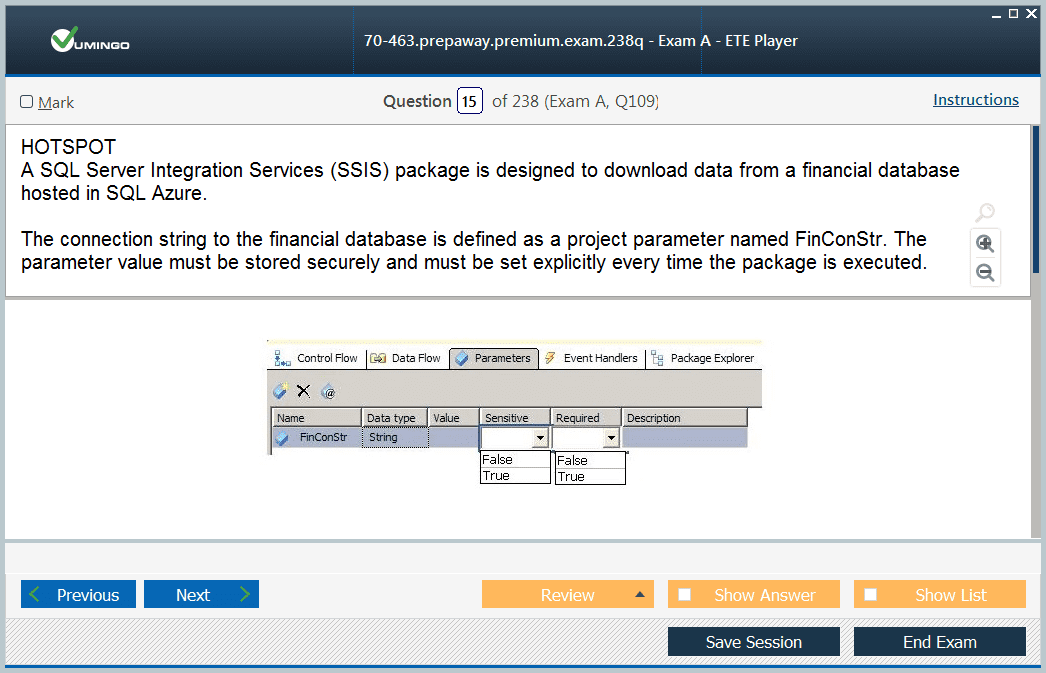

Variables and Parameters in ETL

Variables and parameters are fundamental for dynamic and reusable ETL solutions. Variables store values that change during package execution, such as counters, temporary results, or environment-specific information. Parameters allow external input to packages, enabling configuration without altering package design. Effective use of variables and parameters supports modular ETL design, facilitates incremental loads, and enhances adaptability to different deployment environments. Understanding scope, data types, and lifetime of variables is crucial for controlling flow logic, maintaining package flexibility, and ensuring consistent execution across multiple environments.
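The difference between parameters and variables can be sketched with a small model. It is loosely based, as an assumption, on how the SSIS catalog lets a parameter take its design-time default, a value mapped from an environment, or a value set for a single execution; the `PackageContext` class and all names are hypothetical. Parameters are read-only inputs, while variables hold state that changes during the run.

```python
from dataclasses import dataclass, field

@dataclass
class PackageContext:
    """Hypothetical model of a package's parameters and variables."""
    defaults: dict                                    # design-time parameter defaults
    environment: dict = field(default_factory=dict)   # per-environment values
    overrides: dict = field(default_factory=dict)     # set for one execution
    variables: dict = field(default_factory=dict)     # runtime state

    def param(self, name):
        # Most specific source wins: execution override, then environment,
        # then the design-time default. Parameters are read-only at run time.
        for source in (self.overrides, self.environment, self.defaults):
            if name in source:
                return source[name]
        raise KeyError(f"unknown parameter: {name}")

ctx = PackageContext(
    defaults={"ServerName": "localhost", "BatchSize": 1000},
    environment={"ServerName": "prod-dw01"},
    overrides={"BatchSize": 5000},
)
ctx.variables["RowCount"] = 0
for _ in range(3):                      # variables change during execution
    ctx.variables["RowCount"] += ctx.param("BatchSize")

print(ctx.param("ServerName"), ctx.variables["RowCount"])  # prod-dw01 15000
```

The same package definition runs unchanged against development or production; only the environment mapping differs.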

Data Extraction Techniques

Extracting data from heterogeneous sources is a critical component of a data warehouse implementation. Extraction strategies must consider source system capabilities, data volume, and frequency of changes. Options include full extraction, incremental extraction, and change data capture. Full extraction reads entire datasets, while incremental approaches capture only new or modified data, optimizing performance and reducing system load. Change data capture (CDC) records inserts, updates, and deletes as they are committed at the source (in SQL Server, by reading the transaction log), so changes can be consumed with low latency. Knowing when and how to apply each extraction method ensures accuracy, minimizes resource usage, and maintains synchronization between source systems and the data warehouse.
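When a source offers neither timestamps nor CDC, one fallback is to compare successive full snapshots. This is a different technique from log-based CDC, shown here only to illustrate how changes are classified; the snapshot contents are hypothetical and keyed by business key.

```python
def diff_snapshots(previous, current):
    """Classify changes between two keyed snapshots (key -> row tuple)."""
    inserts = {k: v for k, v in current.items() if k not in previous}
    deletes = {k: v for k, v in previous.items() if k not in current}
    updates = {k: v for k, v in current.items()
               if k in previous and previous[k] != v}
    return inserts, updates, deletes

yesterday = {1: ("Ann", "Oslo"), 2: ("Bob", "Rome"), 3: ("Cy", "Lima")}
today     = {1: ("Ann", "Oslo"), 2: ("Bob", "Paris"), 4: ("Di", "Kyiv")}
inserts, updates, deletes = diff_snapshots(yesterday, today)
print(sorted(inserts), sorted(updates), sorted(deletes))  # [4] [2] [3]
```

Snapshot comparison reads the full dataset on every run, so it costs as much as a full extraction on the source side; its benefit is that only the changed rows flow into the warehouse.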

Transformation Logic and Optimization

Transformations apply rules and calculations to raw data to meet analytical requirements. Common transformations include data type conversion, conditional splitting, aggregation, lookups, and handling slowly changing dimensions. Complex scenarios may require script components or custom transformations to address specialized business logic. Optimizing transformations involves reducing unnecessary processing, using caching strategies for lookups, minimizing row-by-row operations, and applying parallel execution where possible. Efficient transformation logic ensures that data is prepared accurately and delivered to the warehouse in a timely manner, supporting responsive analytical queries.
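The benefit of caching lookups is easy to see by counting round trips. In SSIS the Lookup transformation offers full, partial, and no-cache modes; this Python sketch (using `sqlite3` and a hypothetical `DimCustomer` table) only contrasts the two extremes.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE DimCustomer "
             "(CustomerKey INTEGER PRIMARY KEY, CustomerCode TEXT UNIQUE)")
conn.executemany("INSERT INTO DimCustomer (CustomerCode) VALUES (?)",
                 [("C01",), ("C02",), ("C03",)])

incoming = ["C02", "C01", "C02", "C03", "C02", "C09"]

# No cache: one query per incoming row (row-by-row round trips).
queries = 0
uncached = []
for code in incoming:
    queries += 1
    hit = conn.execute("SELECT CustomerKey FROM DimCustomer WHERE CustomerCode = ?",
                       (code,)).fetchone()
    uncached.append(hit[0] if hit else None)

# Full cache: load the reference set once, then resolve keys in memory.
cache = dict(conn.execute("SELECT CustomerCode, CustomerKey FROM DimCustomer"))
cached = [cache.get(code) for code in incoming]
```

The full cache turns six queries into one, at the cost of holding the reference set in memory and not seeing rows added to it after the cache was loaded.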

Loading Strategies and Data Integration

Data loading involves moving transformed data into warehouse structures, such as dimension and fact tables. Strategies include full refresh, incremental load, and partition switching. Full refresh replaces existing data, while incremental loads append or update data based on change detection mechanisms. Partition switching moves an entire partition between tables as a metadata-only operation, so a large batch can be loaded into a separate staging table and then switched into the fact table with minimal downtime and resource usage. Designing loading workflows requires balancing performance, data integrity, and operational constraints. Proper sequencing of extraction, transformation, and loading ensures that dependencies are respected and that data is available for analytics without disruption.

Performance Tuning and Resource Management

Performance tuning is essential to handle large datasets and complex ETL processes. Techniques include optimizing buffer sizes, configuring parallel execution, indexing source and target tables, and partitioning data. Monitoring resource utilization, analyzing bottlenecks, and applying tuning methods such as minimizing blocking transformations or reducing memory-intensive operations improve throughput. Efficient resource management ensures ETL packages can process data within required time windows while maintaining stability and avoiding excessive load on source or destination systems.

Error Handling and Data Validation

Robust error handling ensures the reliability of ETL operations. Errors can occur at the data flow, transformation, or control flow level. Redirecting erroneous rows to error destinations, logging failures, and implementing retry logic allow developers to manage exceptions without disrupting the overall process. Data validation verifies consistency, completeness, and conformity to business rules, catching issues early in the ETL pipeline. Combining error handling with validation ensures high data quality and reduces the risk of incorrect reporting or analysis.
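Retry logic is worth applying only to transient failures such as a dropped connection. The Python sketch below retries with exponential backoff and re-raises after the last attempt so the surrounding error handling still sees the failure; `with_retry` and its parameters are hypothetical, and `sleep` is injectable so the delays can be observed.

```python
import time

def with_retry(operation, attempts=3, base_delay=0.5,
               retry_on=(ConnectionError,), sleep=time.sleep):
    """Call operation, retrying transient failures with exponential backoff."""
    for attempt in range(1, attempts + 1):
        try:
            return operation()
        except retry_on:
            if attempt == attempts:
                raise  # give up: let the caller's error handling take over
            sleep(base_delay * 2 ** (attempt - 1))

calls = {"n": 0}
def flaky_extract():
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("source unavailable")
    return ["row1", "row2"]

delays = []
rows = with_retry(flaky_extract, sleep=delays.append)
print(rows, delays)  # ['row1', 'row2'] [0.5, 1.0]
```

Errors outside `retry_on`, such as a data conversion failure, propagate immediately, since retrying them would only repeat the same failure.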

Logging and Auditing Practices

Logging and auditing provide visibility into ETL operations and support troubleshooting, compliance, and performance analysis. Logging captures package execution details, including task completion times, row counts, errors, and warnings. Auditing tracks changes, user interactions, and operational events. Comprehensive logging and auditing facilitate proactive monitoring, allow root cause analysis of failures, and enable accountability for data processing activities. This practice is critical for operational transparency and continuous improvement of data warehouse processes.
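SSIS provides its own log providers and, under the project deployment model, catalog logging. The Python sketch below only shows what an audit record typically captures: task name, status, row count, duration, and any error. The `audit_log` list is a hypothetical stand-in for an audit table.

```python
import logging
import time
from contextlib import contextmanager

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
log = logging.getLogger("etl")
audit_log = []  # stand-in for an audit table

@contextmanager
def audited_task(name):
    """Record status, row count, duration and error (if any) for one task."""
    record = {"task": name, "rows": 0, "status": "running"}
    start = time.perf_counter()
    try:
        yield record
        record["status"] = "succeeded"
    except Exception as exc:
        record["status"] = "failed"
        record["error"] = str(exc)
        raise
    finally:
        record["seconds"] = round(time.perf_counter() - start, 3)
        audit_log.append(record)
        log.info("task=%s status=%s rows=%d seconds=%.3f",
                 name, record["status"], record["rows"], record["seconds"])

with audited_task("load_fact_sales") as rec:
    rec["rows"] = len([("2024-01-15", 19.99), ("2024-01-16", 5.00)])

try:
    with audited_task("load_dim_customer") as rec:
        raise ValueError("lookup failed")
except ValueError:
    pass
```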

Incremental Load Management

Incremental load processes minimize resource consumption and reduce execution time by processing only new or updated records. Techniques include using timestamps, change tracking, and delta queries. Proper implementation maintains referential integrity, prevents duplication, and supports historical data management. Incremental loads allow frequent refresh cycles, enabling near real-time analytics and timely reporting. Reusable templates and standardized patterns enhance maintainability and consistency across multiple packages and workflows.
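Applying a delta without creating duplicates usually means an upsert keyed on the business key. In SQL Server this is typically a `MERGE` statement or an `UPDATE` followed by an `INSERT`; the Python sketch below uses SQLite's `INSERT ... ON CONFLICT DO UPDATE` (SQLite 3.24 or later) as a stand-in, with a hypothetical `DimProduct` table. Replaying the same batch leaves the table unchanged.

```python
import sqlite3

dw = sqlite3.connect(":memory:")
dw.execute("""CREATE TABLE DimProduct (
    ProductCode TEXT PRIMARY KEY,
    Name        TEXT NOT NULL,
    ListPrice   REAL NOT NULL)""")

def apply_delta(conn, delta_rows):
    """Insert new rows and update changed ones; replays do not duplicate."""
    conn.executemany("""
        INSERT INTO DimProduct (ProductCode, Name, ListPrice)
        VALUES (?, ?, ?)
        ON CONFLICT(ProductCode) DO UPDATE SET
            Name = excluded.Name,
            ListPrice = excluded.ListPrice
    """, delta_rows)
    conn.commit()

apply_delta(dw, [("P-100", "Road Bike", 999.0), ("P-200", "Helmet", 49.0)])
apply_delta(dw, [("P-200", "Helmet", 44.0), ("P-300", "Gloves", 19.0)])
apply_delta(dw, [("P-200", "Helmet", 44.0), ("P-300", "Gloves", 19.0)])  # replayed batch
result = dw.execute("SELECT * FROM DimProduct ORDER BY ProductCode").fetchall()
print(result)
```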

Data Quality Assurance

Ensuring data quality is central to a reliable data warehouse. Data profiling identifies anomalies, outliers, and inconsistencies. Cleansing operations correct errors, standardize formats, and apply business rules to produce accurate data. Integrating data quality checks within ETL processes prevents propagation of flawed data into analytics and reporting layers. Implementing validation, deduplication, and enrichment during the ETL pipeline improves reliability and ensures that downstream business intelligence activities are based on trustworthy information.

Master Data Management Integration

Master data management (MDM) ensures that critical business entities are consistently defined and maintained across multiple systems. Integrating MDM with ETL workflows ensures that dimension tables and reference data are accurate and aligned with governance policies. MDM supports hierarchies, relationships, and versioning, enabling standardized analytical reporting. Effective integration of MDM enhances consistency, reduces redundancy, and supports enterprise-wide decision-making.

Security and Access Control

Security is a fundamental aspect of ETL and data warehouse operations. Packages must enforce appropriate access controls for sensitive data, ensuring that only authorized users can execute or modify processes. Role-based security, encryption of sensitive information, and secure configuration of connections and components are critical for compliance and risk management. Managing security effectively protects data integrity and prevents unauthorized access or misuse during extraction, transformation, and loading.

Automation and Scheduling

Automating ETL execution ensures timely and consistent data availability. Scheduling mechanisms trigger packages at predefined intervals, balancing system load and business requirements. Automation reduces manual intervention, ensures repeatable processes, and supports continuous integration with source systems. Scheduling strategies also accommodate dependencies, maintenance windows, and resource constraints, enabling efficient operation of complex ETL workflows.

Custom Components and Advanced Scripting

Custom components and scripts extend ETL functionality to address unique business logic or source-specific challenges. Script tasks and components allow developers to implement calculations, transformations, or integrations not supported by standard components. Reusable scripts and custom modules improve maintainability and efficiency across multiple packages. Advanced scripting skills enable precise control over data handling, error management, and dynamic behavior within ETL workflows.

Scalability and Future-Proofing

Designing ETL processes with scalability ensures that the data warehouse can accommodate growing data volumes and evolving business requirements. Techniques include modular package design, parameterization, dynamic configurations, and partitioned loading strategies. Anticipating schema changes, integrating new sources, and optimizing performance ensure long-term maintainability. Scalable designs support business growth, maintain system responsiveness, and reduce the need for major redesigns as data complexity increases.

Holistic Data Warehouse Approach

A holistic approach integrates control flow, data transformation, loading, data quality, master data management, and security into a cohesive ETL strategy. Understanding interdependencies ensures that processes complement each other and maintain data integrity. Designing with flexibility, maintainability, and reliability in mind supports advanced analytics, reporting, and decision-making. A well-integrated data warehouse ecosystem ensures consistent, accurate, and timely information delivery for business intelligence objectives.

Continuous Learning and Best Practices

Continuous learning and application of best practices strengthen ETL expertise. Hands-on experience with real-world scenarios, incremental loads, complex transformations, and error handling builds competence. Documenting lessons learned, analyzing performance metrics, and refining workflows enhance the efficiency and reliability of ETL processes. Staying updated with emerging techniques, tools, and optimizations ensures that data warehouse solutions remain effective and aligned with business needs.

Mastering advanced ETL techniques involves a combination of control flow management, transformation logic, incremental loading, data quality, and automation. Each component plays a critical role in building a reliable and efficient data warehouse. A deep understanding of variables, parameters, error handling, logging, security, and scalable design principles ensures that data is accurately captured, transformed, and made available for analysis. By integrating these practices, organizations can develop robust, maintainable, and high-performing ETL solutions that support strategic decision-making and operational efficiency.

Advanced Data Flow Design

Data flow design is crucial in implementing an efficient data warehouse. It involves defining the path that data takes from source to destination, including transformations, lookups, and aggregations. Proper design ensures minimal latency, prevents data bottlenecks, and maximizes throughput. Understanding the interaction between source data types, transformations, and destination requirements allows the creation of flexible pipelines that can handle various data volumes and structures. Optimizing buffer sizes, minimizing blocking transformations, and leveraging parallel execution are critical techniques to enhance data flow performance.

Connection Management and Source Integration

Connection management involves configuring and maintaining reliable connections to diverse data sources. A data warehouse may receive data from relational databases, flat files, or cloud-based systems. Properly defining connection managers ensures secure, efficient, and reusable access across multiple packages. Understanding connection properties, transaction settings, and error handling within connections reduces failures and improves ETL reliability. Integration strategies should account for differences in data formats, encoding, and source system performance.

Transformation Strategies

Transformations are applied to cleanse, standardize, and enrich data before it is loaded into warehouse structures. These include conditional splits, lookups, derived columns, and data type conversions. More complex transformations may involve custom scripts for specialized business rules. Implementing transformations efficiently requires knowing their performance implications. Non-blocking (synchronous) transformations such as Derived Column and Conditional Split process rows inside the existing buffers, whereas blocking transformations such as Sort and Aggregate must read all of their input before producing any output. Lookup caching modes and overall memory usage also matter. Combining transformations in a structured, logical sequence ensures data integrity and prepares datasets for analytic processing.
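
In Integration Services these transformations are configured as data flow components rather than written as code. The Python sketch below only mimics their row-level logic, assuming rows arrive as dictionaries; the column names (`first_name`, `country_code`, and so on) and the reference table are hypothetical.

```python
from typing import Iterable

# Hypothetical reference data standing in for a Lookup transformation's cache.
COUNTRY_LOOKUP = {"US": "United States", "DE": "Germany"}


def transform(rows: Iterable[dict]) -> tuple[list[dict], list[dict]]:
    """Return (matched, unmatched) rows after derive, lookup, and split steps."""
    matched, unmatched = [], []
    for row in rows:
        out = dict(row)
        # Derived Column: build a new column from existing ones.
        out["full_name"] = f"{row['first_name'].strip()} {row['last_name'].strip()}"
        # Lookup: enrich the row from reference data (None when there is no match).
        out["country_name"] = COUNTRY_LOOKUP.get(row["country_code"].upper())
        # Conditional Split: route each row to an output based on a condition.
        if out["country_name"] is None:
            unmatched.append(out)
        else:
            matched.append(out)
    return matched, unmatched


rows = [
    {"first_name": " Ana ", "last_name": "Diaz", "country_code": "us"},
    {"first_name": "Li", "last_name": "Wei", "country_code": "XX"},
]
good, bad = transform(rows)
print(good[0]["full_name"], good[0]["country_name"])  # Ana Diaz United States
print(len(bad))  # 1
```

In a real package, the Lookup transformation can send unmatched rows to its own no-match output; the separate split step here just makes the routing explicit.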

Handling Slowly Changing Dimensions

Slowly changing dimensions (SCDs) are a key challenge in data warehouse design because they track changes in dimension attributes over time. Type 1 overwrites the old value and keeps no history. Type 2 preserves history by inserting a new row, with a new surrogate key, for each change. Type 3 keeps limited history by storing the previous value in an additional column. Designing and implementing SCDs requires careful attention to surrogate key generation, row versioning (for example, effective dates or a current-row flag), and indexing, so that historical analysis is accurate and queries return the expected results. Proper SCD implementation preserves the integrity of trend analysis and historical reporting.
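
The following sketch shows Type 1 and Type 2 handling on an in-memory dimension. It assumes a hypothetical customer dimension where `email` is treated as a Type 1 attribute and `city` as a Type 2 attribute, with half-open validity ranges (`valid_from` inclusive, `valid_to` exclusive). It illustrates the logic only and is not the Slowly Changing Dimension transformation itself.

```python
from dataclasses import dataclass, replace
from datetime import date
from typing import Optional


@dataclass
class DimRow:
    surrogate_key: int
    customer_id: str          # business (natural) key
    email: str                # treated as Type 1: overwritten in place
    city: str                 # treated as Type 2: a change creates a new version
    valid_from: date
    valid_to: Optional[date]  # None marks the current version
    is_current: bool


def apply_change(dim: list[DimRow], customer_id: str, email: str, city: str,
                 as_of: date) -> None:
    """Apply one incoming source record to the in-memory dimension."""
    current = next((r for r in dim if r.customer_id == customer_id and r.is_current), None)
    next_key = max((r.surrogate_key for r in dim), default=0) + 1
    if current is None:
        # New member: insert its first version.
        dim.append(DimRow(next_key, customer_id, email, city, as_of, None, True))
        return
    for r in dim:  # Type 1: overwrite on every version, keeping no history
        if r.customer_id == customer_id:
            r.email = email
    if current.city != city:  # Type 2: expire the current row, then add a new version
        current.valid_to, current.is_current = as_of, False
        dim.append(replace(current, surrogate_key=next_key, city=city,
                           valid_from=as_of, valid_to=None, is_current=True))


dim: list[DimRow] = []
apply_change(dim, "C1", "a@x.com", "Austin", date(2024, 1, 1))
apply_change(dim, "C1", "b@x.com", "Denver", date(2024, 6, 1))
for r in dim:
    print(r.surrogate_key, r.email, r.city, r.valid_to, r.is_current)
# 1 b@x.com Austin 2024-06-01 False
# 2 b@x.com Denver None True
```

Overwriting the Type 1 attribute on outdated versions as well as the current one is a design choice; some designs update only the current row.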

Script Tasks and Custom Transformations

Script tasks and components allow advanced logic that cannot be handled by built-in transformations. They are useful for dynamic calculations, complex string manipulations, or integrating with external systems. Effective use of scripting requires understanding the object model, debugging techniques, and best practices to maintain performance and readability. Scripts enhance flexibility and enable ETL processes to adapt to unique business requirements without extensive redesign of packages.

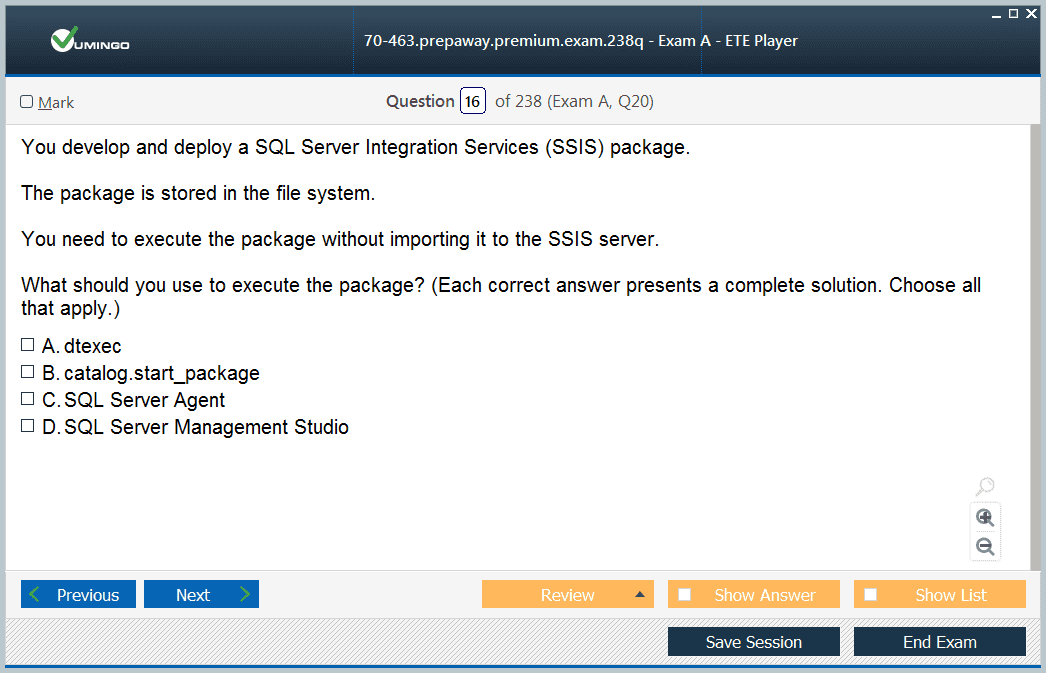

Execution and Package Management

Managing the execution of ETL packages involves scheduling, dependency handling, and monitoring. Packages can be executed manually, through automated schedules, or triggered by events. Proper package management ensures that dependencies are respected, resources are allocated efficiently, and failures are detected and resolved promptly. Techniques like checkpoints, transaction handling, and logging help in recovering from interruptions and maintaining consistency in data processing.
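
Integration Services enables checkpoints through package properties such as `CheckpointFileName`, `CheckpointUsage`, and `SaveCheckpoints`. The Python sketch below only imitates the idea: record each completed task in a file and skip those tasks on restart. The JSON file format and task names are assumptions for illustration.

```python
import json
import tempfile
from pathlib import Path
from typing import Callable


def run_with_checkpoint(tasks: list[tuple[str, Callable[[], None]]], checkpoint: Path) -> None:
    """Run tasks in order, skipping any that a previous failed run already completed."""
    done: list[str] = json.loads(checkpoint.read_text()) if checkpoint.exists() else []
    for name, task in tasks:
        if name in done:
            continue  # finished before the earlier failure; do not rerun
        task()  # if this raises, the checkpoint file keeps the progress so far
        done.append(name)
        checkpoint.write_text(json.dumps(done))
    checkpoint.unlink(missing_ok=True)  # a clean run removes the file so the next run starts fresh


calls: list[str] = []
attempts = {"load": 0}


def extract() -> None:
    calls.append("extract")


def load() -> None:
    attempts["load"] += 1
    if attempts["load"] == 1:
        raise RuntimeError("simulated failure on the first attempt")
    calls.append("load")


cp = Path(tempfile.mkdtemp()) / "package.checkpoint.json"
tasks = [("extract", extract), ("load", load)]
try:
    run_with_checkpoint(tasks, cp)
except RuntimeError:
    pass  # the first run fails partway through
run_with_checkpoint(tasks, cp)  # the restart skips "extract"
print(calls)  # ['extract', 'load']
```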

Logging and Monitoring

Logging captures details about package execution, including task durations, errors, warnings, and processed row counts. Monitoring tracks performance, identifies bottlenecks, and confirms that processing meets service level agreements (SLAs). Combining logging and monitoring enables proactive management of ETL workflows, facilitating troubleshooting, auditing, and continuous optimization. Detailed logs also support compliance and reporting requirements by providing a historical record of data processing activities.
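
Integration Services provides built-in log providers and, from SQL Server 2012, execution logging in the Integration Services catalog. The sketch below is only a conceptual stand-in that records status, row count, and duration per task with Python's `logging` module; `RUN_LOG` is a hypothetical substitute for a persistent log table.

```python
import logging
import time
from typing import Callable

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("etl")
RUN_LOG: list[dict] = []  # hypothetical stand-in for a persistent log table


def logged_task(name: str, task: Callable[[], int]) -> int:
    """Run a task that returns its row count; record status, rows, and duration."""
    entry = {"task": name, "status": "Succeeded", "rows": 0}
    start = time.perf_counter()
    try:
        entry["rows"] = task()
        return entry["rows"]
    except Exception as exc:
        entry["status"] = "Failed"
        log.error("%s failed: %s", name, exc)
        raise
    finally:
        # Runs on success and failure alike, so every execution is recorded.
        entry["seconds"] = round(time.perf_counter() - start, 3)
        RUN_LOG.append(entry)
        log.info("%s %s rows=%d seconds=%.3f",
                 name, entry["status"], entry["rows"], entry["seconds"])


logged_task("load_dim_customer", lambda: 1250)
try:
    logged_task("load_fact_sales", lambda: 1 // 0)
except ZeroDivisionError:
    pass
print([(e["task"], e["status"], e["rows"]) for e in RUN_LOG])
# [('load_dim_customer', 'Succeeded', 1250), ('load_fact_sales', 'Failed', 0)]
```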

Incremental and Delta Loading

Incremental and delta loading techniques minimize processing time by capturing only new or modified records. Approaches include timestamp-based extraction, change data capture, and triggers in source systems. Proper implementation requires understanding dependencies between tables, ensuring referential integrity, and handling updates, inserts, and deletes correctly. Incremental strategies reduce load on both source and target systems, allowing more frequent refreshes and near real-time availability of data for reporting.
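
A common timestamp-based approach stores a high-water mark per source table and extracts only rows modified after it. The sketch below uses an in-memory SQLite database so it runs anywhere; the table names `src_orders` and `etl_watermark` are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE src_orders (order_id INTEGER PRIMARY KEY, amount REAL, modified_at TEXT);
    CREATE TABLE etl_watermark (table_name TEXT PRIMARY KEY, last_value TEXT);
    INSERT INTO etl_watermark VALUES ('src_orders', '1900-01-01T00:00:00');
""")


def extract_incremental(conn: sqlite3.Connection) -> list[tuple]:
    """Return rows modified since the stored watermark, then advance the watermark."""
    (last,) = conn.execute(
        "SELECT last_value FROM etl_watermark WHERE table_name = 'src_orders'").fetchone()
    rows = conn.execute(
        "SELECT order_id, amount, modified_at FROM src_orders "
        "WHERE modified_at > ? ORDER BY modified_at", (last,)).fetchall()
    if rows:
        # The last row has the highest timestamp because of the ORDER BY.
        conn.execute("UPDATE etl_watermark SET last_value = ? WHERE table_name = 'src_orders'",
                     (rows[-1][2],))
        conn.commit()
    return rows


conn.executemany("INSERT INTO src_orders VALUES (?, ?, ?)",
                 [(1, 10.0, "2024-01-01T08:00:00"), (2, 20.0, "2024-01-02T09:00:00")])
print(len(extract_incremental(conn)))  # 2
conn.execute("UPDATE src_orders SET amount = 25.0, modified_at = '2024-01-03T10:00:00' "
             "WHERE order_id = 2")
print(extract_incremental(conn))  # [(2, 25.0, '2024-01-03T10:00:00')]
```

A strict `>` comparison can miss rows that are committed late with a timestamp equal to the stored watermark, and timestamps alone do not reveal deletes; change data capture avoids both problems.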

Data Quality Integration

Data quality management ensures that only accurate and consistent data enters the warehouse. Techniques include profiling data to detect anomalies, standardizing formats, and deduplicating records. Embedding data quality operations within ETL processes prevents propagation of errors and enhances the reliability of analytical outputs. High-quality data improves decision-making and increases confidence in business intelligence outputs.
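
Standardization and deduplication can be sketched as two small steps: normalize formats so that equivalent values compare equal, then keep one record per key. The synonym rules and the choice of `email` as the matching key are assumptions; real matching often uses fuzzy comparison rather than exact keys.

```python
import re

# Hypothetical synonym rules; a real project might keep these in a DQS knowledge base.
STATE_SYNONYMS = {"calif": "CA", "california": "CA", "wash": "WA", "washington": "WA"}


def standardize(rec: dict) -> dict:
    """Normalize formats so equivalent values compare equal."""
    state = rec["state"].strip().lower().rstrip(".")
    return {
        **rec,
        "email": rec["email"].strip().lower(),
        "state": STATE_SYNONYMS.get(state, state.upper()),
        "phone": re.sub(r"\D", "", rec["phone"]),  # keep digits only
    }


def deduplicate(records: list[dict], key: str = "email") -> list[dict]:
    """Standardize, then keep the first record seen for each key value."""
    seen: set = set()
    unique = []
    for rec in map(standardize, records):
        if rec[key] not in seen:
            seen.add(rec[key])
            unique.append(rec)
    return unique


records = [
    {"email": " Ann@Example.com", "state": "Calif.", "phone": "(555) 123-4567"},
    {"email": "ann@example.com ", "state": "CA", "phone": "555.123.4567"},
    {"email": "bo@example.com", "state": "wa", "phone": "555-987-6543"},
]
clean = deduplicate(records)
print(len(clean), clean[0]["state"], clean[0]["phone"])  # 2 CA 5551234567
```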

Master Data Management Alignment

Aligning ETL processes with master data management ensures that core business entities, such as customers or products, are consistent across all systems. This reduces redundancy, enforces standard definitions, and allows accurate reporting and analysis. Integration with Master Data Services (MDS) supports hierarchical structures, data versioning, and consistent application of rules across multiple data marts. Proper alignment reduces inconsistencies and strengthens enterprise-wide analytics.

Auditing and Compliance

Auditing within ETL processes provides a mechanism to track changes, user actions, and processing history. This includes capturing execution details, error occurrences, and data modifications. Auditing supports compliance requirements, enables accountability, and helps identify areas for process improvement. By combining auditing with logging, organizations can maintain comprehensive oversight of their data warehouse operations.

Security Practices

Security in ETL operations ensures that sensitive data is protected from unauthorized access. Techniques include role-based access control, encryption of sensitive data, secure handling of connection strings, and validation of user permissions. Implementing security best practices protects both data and ETL processes from misuse or breaches, maintaining the integrity and confidentiality of enterprise information.

Error Handling and Recovery

Robust error handling strategies prevent ETL failures from causing data inconsistencies. Techniques include redirecting error rows, implementing retries, and capturing detailed error information for diagnostics. Recovery mechanisms such as checkpoints and transactions allow a failed package to restart from the task that failed instead of rerunning every task. Checkpoints work at the control-flow level, however, so a failed data flow task is rerun from its beginning. Effective error handling reduces downtime and ensures data accuracy.
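
The sketch below shows two of these techniques in plain Python: redirecting rows that fail a conversion to an error list (similar in spirit to a component's error output) and retrying an operation that raises a transient `ConnectionError`. The column names, retry count, and linear backoff are illustrative assumptions.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def convert_rows(rows: list[dict]) -> tuple[list[dict], list[dict]]:
    """Rows that fail conversion are redirected with diagnostics instead of failing the load."""
    good, errors = [], []
    for row in rows:
        try:
            good.append({**row, "amount": float(row["amount"])})
        except (ValueError, TypeError) as exc:
            errors.append({**row, "error": str(exc)})  # keep the reason for diagnostics
    return good, errors


def with_retries(op: Callable[[], T], attempts: int = 3, delay_s: float = 0.5) -> T:
    """Retry a transient operation; the final attempt lets any error propagate."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts):
        try:
            return op()
        except ConnectionError:
            time.sleep(delay_s * attempt)  # simple linear backoff
    return op()


good, errors = convert_rows([{"id": 1, "amount": "12.50"}, {"id": 2, "amount": "n/a"}])
print(len(good), errors[0]["id"])  # 1 2

calls = {"n": 0}


def flaky() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("timeout")
    return "ok"


result = with_retries(flaky, delay_s=0)
print(result)  # ok
```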

Performance Tuning Techniques

Performance tuning involves optimizing transformations, buffer management, parallelism, and resource allocation. Identifying bottlenecks, reducing blocking operations, and applying best practices for indexing and caching can dramatically improve ETL efficiency. Continuous monitoring and iterative tuning ensure that data loads complete within acceptable time windows while maintaining stability.

Parallelism and Partitioning

Leveraging parallelism and data partitioning allows ETL processes to handle large datasets efficiently. Partitioned tables, parallel data flows, and concurrent task execution reduce processing time and resource contention. Effective design ensures that workloads are distributed evenly and that dependencies are managed to prevent conflicts. These strategies are essential for high-volume data warehouse environments.
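
Within a package, Integration Services controls how many tasks run at once through the `MaxConcurrentExecutables` property. The Python sketch below illustrates the same idea outside the product: each worker loads one month's slice, so workers never write to the same partition, and a bounded pool limits resource contention. The staged data and the month-based split are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from datetime import date

# Hypothetical staged rows: (order_date, amount), two per month for six months.
STAGED = [(date(2024, m, d), float(m)) for m in range(1, 7) for d in (1, 15)]


def load_partition(month: int) -> tuple[int, int]:
    """Load one month's slice and return (month, rows loaded)."""
    rows = [r for r in STAGED if r[0].month == month]
    # A real loader would bulk-insert `rows` into the matching table partition here.
    return month, len(rows)


with ThreadPoolExecutor(max_workers=3) as pool:  # cap concurrency to limit contention
    loaded = dict(pool.map(load_partition, range(1, 7)))

print(loaded)  # {1: 2, 2: 2, 3: 2, 4: 2, 5: 2, 6: 2}
```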

Integration Testing and Validation

Integration testing ensures that all ETL components work together as intended. This includes verifying data accuracy, completeness, and consistency across transformations and loads. Validation processes test edge cases, confirm referential integrity, and ensure compliance with business rules. Comprehensive testing reduces the risk of errors in production and supports reliable decision-making.
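
Many validation checks reduce to queries that should return true after a load. The sketch below runs three such checks (row counts, measure totals, and orphaned foreign keys) against an in-memory SQLite database; the schema is hypothetical and the sample data deliberately contains one orphaned fact row.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE stg_sales (sale_id INTEGER, customer_id TEXT, amount REAL);
    CREATE TABLE dim_customer (customer_key INTEGER PRIMARY KEY, customer_id TEXT);
    CREATE TABLE fact_sales (sale_id INTEGER, customer_key INTEGER, amount REAL);
    INSERT INTO stg_sales VALUES (1, 'C1', 10.0), (2, 'C2', 5.0);
    INSERT INTO dim_customer VALUES (1, 'C1');
    INSERT INTO fact_sales VALUES (1, 1, 10.0), (2, 99, 5.0);
""")

CHECKS = {
    # Completeness: every staged row should reach the fact table.
    "row_count_matches":
        "SELECT (SELECT COUNT(*) FROM stg_sales) = (SELECT COUNT(*) FROM fact_sales)",
    # Accuracy: measure totals should reconcile between staging and the warehouse.
    "amount_total_matches":
        "SELECT (SELECT ROUND(SUM(amount), 2) FROM stg_sales)"
        " = (SELECT ROUND(SUM(amount), 2) FROM fact_sales)",
    # Referential integrity: no fact row may reference a missing dimension member.
    "no_orphan_keys":
        "SELECT COUNT(*) = 0 FROM fact_sales f"
        " LEFT JOIN dim_customer d ON d.customer_key = f.customer_key"
        " WHERE d.customer_key IS NULL",
}


def run_checks(conn: sqlite3.Connection) -> dict[str, bool]:
    return {name: bool(conn.execute(sql).fetchone()[0]) for name, sql in CHECKS.items()}


print(run_checks(conn))
# {'row_count_matches': True, 'amount_total_matches': True, 'no_orphan_keys': False}
```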

Automation and Orchestration

Automation of ETL processes enables repeatable, reliable execution and reduces manual intervention. Orchestration tools schedule, monitor, and trigger packages based on dependencies or events. Automation improves efficiency, ensures adherence to processing windows, and supports continuous integration of new data sources or business requirements.

Documentation and Best Practices

Maintaining detailed documentation of ETL processes, transformations, dependencies, and configurations is critical for maintainability and knowledge transfer. Following best practices in design, naming conventions, modularization, and error handling improves readability, reduces technical debt, and facilitates collaboration among development teams. Proper documentation also supports troubleshooting and enhances long-term sustainability of data warehouse solutions.

Continuous Improvement and Optimization

Continuous improvement focuses on analyzing ETL performance, identifying inefficiencies, and implementing optimizations. This includes refining transformation logic, tuning data flows, improving resource usage, and updating packages to accommodate evolving business needs. Iterative improvement ensures that ETL workflows remain efficient, reliable, and aligned with analytical objectives over time.

Comprehensive ETL Strategies

Developing comprehensive ETL strategies for a data warehouse involves mastering control flow, transformation logic, incremental loading, data quality, and performance optimization. Integrating scripting, error handling, security, logging, and automation ensures robust, maintainable, and high-performing solutions. A holistic approach aligns ETL processes with business objectives, supports accurate reporting and analytics, and maintains data integrity across all stages of the warehouse lifecycle. By focusing on these practices, organizations can build scalable, efficient, and reliable data warehouses that deliver actionable insights consistently.

Data Loading Strategies

Efficient data loading is a cornerstone of implementing a data warehouse. This process involves moving transformed and cleansed data into fact and dimension tables in a manner that ensures accuracy, consistency, and minimal disruption to production systems. Selecting appropriate loading techniques, such as full loads, incremental loads, or delta loads, depends on the nature of the data, frequency of updates, and system capacity. Each approach has its own advantages, with incremental and delta loads often favored for their ability to reduce system overhead and enable near real-time data availability.

Incremental Load Design

Incremental loading focuses on extracting only new or modified records from source systems. This requires the identification of key indicators such as timestamps, version numbers, or change tracking flags. Proper design ensures that the ETL process captures all relevant changes without introducing duplicates or missing updates. Implementation may involve query-based filters, change data capture mechanisms, or event-driven triggers. Managing incremental loads also involves handling dependencies between tables and maintaining referential integrity to ensure that related data remains consistent across fact and dimension tables.
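
When a source has no reliable timestamps or change tracking, one fallback is to compare it with the target by business key and detect changed attributes through a hash. The sketch below classifies rows into inserts, updates, and deletes; the key column name `id` and the use of SHA-256 over JSON are assumptions made for illustration.

```python
import hashlib
import json


def row_hash(row: dict) -> str:
    """Hash the non-key attributes so changed rows can be detected cheaply."""
    payload = json.dumps({k: v for k, v in row.items() if k != "id"}, sort_keys=True)
    return hashlib.sha256(payload.encode()).hexdigest()


def classify_changes(source: list[dict], target: list[dict]) -> dict[str, list]:
    """Split the source into inserts and updates, and list target keys to delete."""
    src = {r["id"]: r for r in source}
    tgt = {r["id"]: row_hash(r) for r in target}
    return {
        "insert": [r for k, r in src.items() if k not in tgt],
        "update": [r for k, r in src.items() if k in tgt and row_hash(r) != tgt[k]],
        "delete": [k for k in tgt if k not in src],
    }


source = [{"id": 1, "name": "Ann"}, {"id": 2, "name": "Bob B."}, {"id": 4, "name": "Dee"}]
target = [{"id": 1, "name": "Ann"}, {"id": 2, "name": "Bob"}, {"id": 3, "name": "Cy"}]
changes = classify_changes(source, target)
print({k: len(v) for k, v in changes.items()})  # {'insert': 1, 'update': 1, 'delete': 1}
```

A full comparison reads every row on both sides, so it trades extraction efficiency for independence from source-side change indicators.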

Data Transformation and Enrichment

Transformations applied during loading refine data to meet analytical requirements. This includes calculations, data type conversions, aggregations, and derivation of business metrics. Enrichment may involve integrating reference data, performing lookups, or applying rules to standardize values across different sources. Effective transformation design requires understanding the logical and physical schema of the target warehouse, performance implications, and the need for data consistency. Complex transformations often require scripting or custom components to meet specific business logic.

Fact Table Loading

Fact tables store transactional or measurable events within a data warehouse. Loading fact tables efficiently involves managing large volumes of data, ensuring accurate aggregation, and maintaining historical consistency. Techniques such as partitioned loading, batch processing, and parallelism are commonly applied to optimize performance. Surrogate key assignment needs special attention: each fact row should reference the dimension version that was current when the event occurred, so that foreign key relationships and slowly changing dimension history remain accurate.
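
The point-in-time surrogate key lookup can be sketched as follows, assuming a Type 2 dimension with half-open validity ranges (`valid_from` inclusive, `valid_to` exclusive). Mapping unmatched business keys to a `-1` "unknown" member is a hypothetical convention; some designs insert an inferred member instead.

```python
from datetime import date
from typing import NamedTuple, Optional


class CustomerVersion(NamedTuple):
    customer_key: int          # surrogate key
    customer_id: str           # business key
    valid_from: date
    valid_to: Optional[date]   # None marks the current version


UNKNOWN_MEMBER_KEY = -1  # hypothetical key for facts whose dimension member is missing


def lookup_key(dim: list[CustomerVersion], customer_id: str, event_date: date) -> int:
    """Return the surrogate key of the version valid on the event date."""
    for v in dim:
        if (v.customer_id == customer_id and v.valid_from <= event_date
                and (v.valid_to is None or event_date < v.valid_to)):
            return v.customer_key
    return UNKNOWN_MEMBER_KEY


dim = [
    CustomerVersion(1, "C1", date(2024, 1, 1), date(2024, 6, 1)),
    CustomerVersion(2, "C1", date(2024, 6, 1), None),
]
sales = [("C1", date(2024, 3, 15), 40.0), ("C1", date(2024, 7, 2), 15.0),
         ("C9", date(2024, 7, 2), 5.0)]
fact_rows = [(lookup_key(dim, cid, d), d, amt) for cid, d, amt in sales]
print([k for k, _, _ in fact_rows])  # [1, 2, -1]
```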

Dimension Table Loading

Dimension tables provide context for fact data and often contain descriptive attributes about business entities such as customers, products, or locations. Dimension loading involves managing primary keys, handling hierarchical relationships, and implementing mechanisms for tracking changes. Slowly changing dimension strategies, including Type 1, Type 2, and Type 3, are critical to preserving historical accuracy while allowing current attribute values to be updated. Properly loaded dimensions support efficient queries, aggregations, and reporting across multiple business scenarios.

Control Flow Management

Control flow orchestrates the sequence of tasks within ETL packages, managing dependencies, conditional execution, and looping operations. Designing effective control flow ensures that tasks execute in the correct order, error handling is applied consistently, and parallel execution is leveraged when possible. Control flow structures such as sequence containers, loops, and precedence constraints facilitate modular, maintainable, and efficient ETL processes. They also provide a framework for implementing checkpoints, retries, and transaction handling to maintain data integrity during execution.
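
Success precedence constraints form a dependency graph, and a valid execution order is a topological order of that graph. The sketch below uses Python's standard `graphlib` module (Python 3.9 and later) on a hypothetical package; tasks in the same batch have no unmet constraints and could run in parallel. It models only success constraints, not failure or completion constraints or expression-based conditions.

```python
from graphlib import TopologicalSorter

# Hypothetical package: each task maps to the tasks that must succeed before it runs.
PRECEDENCE = {
    "truncate_staging": set(),
    "load_dim_customer": {"truncate_staging"},
    "load_dim_product": {"truncate_staging"},
    "load_fact_sales": {"load_dim_customer", "load_dim_product"},
}

sorter = TopologicalSorter(PRECEDENCE)
sorter.prepare()
batches = []
while sorter.is_active():
    ready = sorted(sorter.get_ready())  # every task whose predecessors have finished
    batches.append(ready)
    for task in ready:
        # A real runner would execute the task here and stop on failure.
        sorter.done(task)

print(batches)
# [['truncate_staging'], ['load_dim_customer', 'load_dim_product'], ['load_fact_sales']]
```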

Using Variables and Parameters

Variables and parameters enable dynamic and flexible ETL processes. Variables store temporary values that can control execution logic, store intermediate results, or influence transformations. Parameters allow external configuration of packages, enabling the same ETL process to be reused across different environments or data sources. Effective use of variables and parameters reduces hard-coding, simplifies maintenance, and enhances reusability of ETL packages. Understanding scope, data types, and proper initialization ensures consistent behavior across complex workflows.

Error Handling and Exception Management

Robust error handling prevents failures in one part of the ETL process from cascading and affecting overall data integrity. Techniques include redirecting error rows, implementing conditional logic for error correction, and capturing detailed diagnostic information. Exception management involves monitoring runtime conditions, logging error details, and providing mechanisms for automated recovery or notifications. This ensures that errors are addressed promptly, reduces downtime, and supports audit and compliance requirements.

Logging and Monitoring

Logging captures execution metrics such as task durations, error counts, and row processing statistics. Monitoring leverages these logs to identify performance bottlenecks, track execution trends, and ensure adherence to service level agreements. Detailed logging combined with real-time monitoring provides visibility into ETL operations, supporting proactive troubleshooting, resource management, and performance optimization. It also serves as a foundation for auditing, compliance, and historical analysis of data processing activities.

Package Deployment and Configuration

Deploying ETL packages involves moving them from development to testing and production environments while ensuring consistent behavior. Proper deployment strategies include configuration management, environment-specific parameters, and validation of connections and dependencies. Configuration techniques enable flexible deployment across multiple environments without modifying the package logic. Examples include XML configuration files in the package deployment model, and project parameters mapped to environments in the Integration Services catalog (SSISDB) in the project deployment model introduced in SQL Server 2012. This reduces errors, simplifies maintenance, and ensures reliable execution in production.
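
The layering behind environment-specific configuration can be sketched as a merge in which later layers override earlier ones: design-time defaults, then environment values, then runtime overrides. The parameter names, server names, and precedence order here are hypothetical and only loosely analogous to catalog environments.

```python
from typing import Optional

# Hypothetical design-time defaults, analogous to package or project parameter defaults.
DEFAULTS = {"SourceServer": "localhost", "BatchSize": 10_000, "Environment": "DEV"}

# Hypothetical per-environment overrides, analogous to environment variables in a catalog.
ENVIRONMENTS = {
    "TEST": {"SourceServer": "test-sql01", "Environment": "TEST"},
    "PROD": {"SourceServer": "prod-sql01", "BatchSize": 50_000, "Environment": "PROD"},
}


def resolve_parameters(env: str, runtime: Optional[dict] = None) -> dict:
    """Layer defaults, then environment values, then runtime overrides."""
    if env != "DEV" and env not in ENVIRONMENTS:
        raise KeyError(f"unknown environment: {env}")
    return {**DEFAULTS, **ENVIRONMENTS.get(env, {}), **(runtime or {})}


params = resolve_parameters("PROD", {"BatchSize": 20_000})
print(params["SourceServer"], params["BatchSize"])  # prod-sql01 20000
```

Keeping environment values outside the package means promoting a package from test to production changes only configuration, never logic.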

Data Quality Integration

Incorporating data quality measures into ETL processes enhances the reliability and accuracy of the warehouse. Techniques include data profiling, standardization, deduplication, and validation against reference data. Data cleansing operations can be embedded directly into transformation pipelines or managed through dedicated data quality tools. Maintaining high-quality data reduces downstream errors, supports trustworthy analytics, and ensures that business intelligence outputs reflect accurate insights.

Master Data Management Alignment

Aligning ETL workflows with master data management practices ensures consistent representation of critical business entities across the warehouse. Centralized management of customer, product, and other core data supports accuracy, reduces duplication, and simplifies integration across multiple fact and dimension tables. Master data alignment enables hierarchical modeling, version tracking, and enforcement of business rules, which collectively enhance reporting consistency and analytic accuracy.

Performance Optimization

Optimizing ETL performance involves balancing resource utilization, minimizing processing time, and preventing system bottlenecks. Strategies include parallel execution of tasks, partitioned data loads, efficient memory management, and minimizing blocking transformations. Continuous monitoring and profiling allow identification of slow operations and enable targeted tuning to improve throughput. Effective optimization ensures that large datasets are processed efficiently while maintaining accuracy and reliability.

Automation and Scheduling

Automation reduces manual intervention, ensures repeatable processes, and supports predictable delivery of data to the warehouse. Scheduling mechanisms trigger ETL packages based on time, dependencies, or events. Automation frameworks provide centralized control over execution, simplify operational management, and allow for the integration of alerts, notifications, and escalation procedures. Automated ETL enhances consistency, reduces human error, and enables adherence to business timelines.

Security and Compliance

Security measures protect sensitive data and ensure that ETL operations are performed within controlled access boundaries. Implementing role-based permissions, encrypting connections, and validating user access are essential practices. Compliance with organizational and regulatory standards is reinforced through secure handling of sensitive information, comprehensive logging, and audit-ready reporting of ETL activities. Secure ETL operations maintain both data confidentiality and operational integrity.

Testing and Validation

Testing ETL processes ensures that data is accurately extracted, transformed, and loaded according to defined business rules. Validation includes verifying data completeness, accuracy, consistency, and adherence to schema constraints. Unit tests, integration tests, and regression testing help identify defects early, confirm correct behavior, and prevent errors from reaching production environments. Comprehensive testing reduces risks, improves reliability, and supports ongoing maintenance and enhancement of ETL workflows.

Continuous Improvement and Optimization

Continuous improvement involves analyzing ETL performance, identifying inefficiencies, and implementing refinements. This includes reviewing transformation logic, enhancing parallel execution, tuning queries, and optimizing resource utilization. Iterative improvements allow ETL processes to adapt to changing business needs, handle growing data volumes, and maintain high performance. Continuous refinement ensures that the data warehouse remains a reliable and efficient source of business intelligence over time.

Holistic ETL Management

A holistic approach integrates design, transformation, loading, quality, security, and monitoring into a unified framework. Coordinating all aspects of ETL ensures consistency, accuracy, and efficiency across the entire data warehouse lifecycle. Holistic management reduces duplication, streamlines operations, and enables a proactive response to issues, while supporting scalability and adaptability. By addressing all components collectively, organizations can build robust and reliable data warehouse solutions that provide actionable insights consistently.

Strategic Data Warehouse Planning

Strategic planning involves aligning ETL and data warehouse design with business goals and analytics requirements. It requires anticipating growth, selecting appropriate architecture, and implementing scalable ETL frameworks. Strategic planning ensures that data flows are optimized, quality is maintained, and reporting requirements are supported efficiently. A well-planned warehouse enables timely decision-making, enhances analytical capabilities, and provides a foundation for enterprise-wide intelligence.

Knowledge Management and Documentation

Maintaining comprehensive documentation of ETL workflows, transformations, dependencies, and operational procedures is essential for sustainability and knowledge transfer. Documentation supports troubleshooting, onboarding of new team members, and auditing processes. Standardized practices, naming conventions, and clearly defined workflows improve maintainability and reduce technical debt. Effective knowledge management ensures that the warehouse can be operated reliably over the long term, even as personnel or business requirements change.

Integration with Analytical Tools

Data warehouses provide the foundation for business intelligence, reporting, and analytics. Ensuring that ETL processes deliver data in formats suitable for analytical tools maximizes usability. This includes structuring fact and dimension tables for efficient querying, applying necessary aggregations, and maintaining historical records. Integration with analytics tools enhances decision-making by providing timely, accurate, and comprehensive insights derived from high-quality warehouse data.

Comprehensive ETL and Data Warehouse Implementation

Successful implementation of a data warehouse requires mastery of ETL design, execution, and optimization. Effective strategies encompass data flow, transformation, incremental loading, error handling, logging, monitoring, and performance tuning. Incorporating data quality, master data alignment, security, and compliance ensures that the warehouse produces reliable and accurate insights. Automation, testing, and continuous improvement enhance operational efficiency and adaptability. A comprehensive, holistic approach ensures that all aspects of ETL and warehouse management are coordinated, scalable, and aligned with organizational objectives. By integrating these practices, a data warehouse becomes a robust, dependable platform for analytics, enabling informed decision-making and supporting enterprise-wide intelligence initiatives.

Advanced Dimension Design

Designing dimensions involves more than simply creating tables with attributes. Each dimension must accurately represent business entities, support analytical requirements, and enable efficient querying. Key aspects include defining hierarchies, establishing attribute relationships, and implementing slowly changing dimension strategies. Hierarchies allow for multi-level aggregation and drill-down analysis, while attribute relationships optimize query performance by clarifying dependencies. Slowly changing dimensions are crucial for capturing historical changes without overwriting past data, with strategies ranging from updating existing rows to creating new versioned records. Proper dimension design directly impacts the accuracy, performance, and usability of the entire data warehouse.

Fact Table Architecture

Fact tables store measurable business events and often contain large volumes of data. Designing fact tables requires attention to granularity, indexing, and partitioning strategies. Granularity defines the level of detail captured and affects both storage requirements and query performance. Partitioning can improve data loading efficiency and query performance by segmenting large datasets into manageable chunks. Fact tables also maintain foreign key relationships with dimensions, which must be managed carefully to ensure referential integrity. Columnstore indexing and aggregations are additional techniques to enhance query performance for analytical workloads.
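
In SQL Server, a partition function declared `AS RANGE LEFT` or `AS RANGE RIGHT` decides which partition receives a value equal to a boundary. The Python sketch below mirrors that rule with `bisect` so it can be checked without a server; the monthly boundary dates are hypothetical. Against a real table, the built-in `$PARTITION` function reports the partition number directly.

```python
from bisect import bisect_left, bisect_right
from datetime import date

# Hypothetical monthly boundaries for a fact table partitioned by order date.
# Three boundary values define four partitions.
BOUNDARIES = [date(2024, 2, 1), date(2024, 3, 1), date(2024, 4, 1)]


def partition_number(value: date, boundaries: list[date], range_right: bool = True) -> int:
    """Return the 1-based partition a value falls in."""
    # RANGE RIGHT: a boundary value belongs to the partition on its right.
    # RANGE LEFT: a boundary value belongs to the partition on its left.
    idx = bisect_right(boundaries, value) if range_right else bisect_left(boundaries, value)
    return idx + 1


print(partition_number(date(2024, 1, 15), BOUNDARIES))                    # 1
print(partition_number(date(2024, 2, 1), BOUNDARIES))                     # 2
print(partition_number(date(2024, 2, 1), BOUNDARIES, range_right=False))  # 1
print(partition_number(date(2024, 5, 1), BOUNDARIES))                     # 4
```

For date keys, `RANGE RIGHT` with first-of-month boundaries keeps each month in a single partition, which is one reason it is a common choice.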

Integration Services for ETL

Integration Services is central to extracting, transforming, and loading data efficiently. ETL processes must handle various source systems, apply the necessary transformations, and load data into the warehouse while maintaining data integrity. Designing effective data flows involves balancing transformations, managing memory usage, and optimizing task execution. Common operations include merging, splitting, aggregating, and cleansing data to ensure the warehouse contains accurate and consistent information. Script tasks and custom components provide flexibility for complex business rules that built-in transformations cannot handle.

Data Flow Optimization

Optimizing data flows involves reducing bottlenecks, leveraging parallel execution, and minimizing memory overhead. Proper configuration of buffers (the DefaultBufferMaxRows and DefaultBufferSize properties of the Data Flow task), batch sizes, and commit intervals (for example, the Rows per batch and Maximum insert commit size options of an OLE DB destination using fast load) ensures high throughput. Transformations should be sequenced efficiently to avoid unnecessary blocking operations. Incremental loads and delta processing reduce the amount of data processed, further improving performance. Continuously monitoring data flow performance and adjusting these settings to data volume and complexity helps maintain consistent ETL efficiency.
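
As a rough model, the data flow engine fits as many rows into a buffer as `DefaultBufferMaxRows` allows, unless the estimated row width times that row count would exceed `DefaultBufferSize`. The sketch below encodes this simplified rule with the SQL Server 2012 defaults (10,000 rows and 10 MB); the real engine also applies minimum sizes and estimates row width from column metadata, so treat the numbers as approximate.

```python
DEFAULT_BUFFER_MAX_ROWS = 10_000          # default DefaultBufferMaxRows
DEFAULT_BUFFER_SIZE = 10 * 1024 * 1024    # default DefaultBufferSize: 10 MB (10,485,760 bytes)


def rows_per_buffer(estimated_row_bytes: int,
                    max_rows: int = DEFAULT_BUFFER_MAX_ROWS,
                    buffer_bytes: int = DEFAULT_BUFFER_SIZE) -> int:
    """Simplified estimate: the row cap applies unless the byte cap is reached first."""
    if estimated_row_bytes <= 0:
        raise ValueError("estimated row size must be positive")
    return max(1, min(max_rows, buffer_bytes // estimated_row_bytes))  # at least one row


print(rows_per_buffer(200))    # 10000 (the row cap wins)
print(rows_per_buffer(4_000))  # 2621 (the byte cap wins)
```

Wide rows, such as those carrying long string columns, shrink the number of rows per buffer, which is why removing unused columns early in the data flow often improves throughput.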

Control Flow and Package Management

Control flow coordinates the sequence and execution of ETL tasks. Containers, loops, and precedence constraints manage dependencies, error handling, and task execution order. Packages must be designed to allow modularity and reusability, with parameters and variables providing flexibility for different environments and datasets. Checkpoints and transaction handling ensure recoverability and prevent partial data loads. Proper control flow design improves maintainability, reliability, and scalability of ETL processes.

Error Handling and Logging

Robust error handling captures exceptions, prevents process failure propagation, and provides detailed diagnostic information. Redirecting error rows, applying conditional logic, and capturing logs allow for proactive correction and auditing. Logging includes execution metrics, error counts, and data statistics, supporting performance monitoring and compliance reporting. Centralized logging and monitoring systems enable rapid identification of issues, trend analysis, and continuous process improvement.

Deployment and Configuration

Deploying ETL packages requires careful management of environment-specific settings, dependencies, and security considerations. Parameterized configurations and centralized management reduce the risk of errors and simplify transitions across development, testing, and production environments. Validation of packages prior to execution ensures correctness and prevents operational issues. Deployment strategies should also consider rollback plans and monitoring to handle unexpected failures.

Data Quality and Cleansing

Data quality integration is essential for maintaining reliable and accurate analytical data. Cleansing operations include standardization, deduplication, validation against reference datasets, and enforcement of business rules. Automated data profiling identifies anomalies, inconsistencies, and missing data. Integrating data quality measures into ETL processes ensures that the warehouse remains a trusted source of information. Reusable knowledge bases, such as those maintained in Data Quality Services (DQS), allow consistent application of rules across multiple datasets and projects.

Master Data Management

Master data management ensures consistency of critical business entities such as customers, products, and suppliers. Centralized management of these entities allows for accurate reporting and analytics across multiple fact and dimension tables. Integration of master data into ETL workflows helps enforce standardized attributes, maintain hierarchies, and track changes over time. Effective master data management improves decision-making and reduces data redundancy, supporting enterprise-wide analytical initiatives.

Performance Tuning

Optimizing ETL and warehouse performance involves indexing strategies, partitioning, parallel execution, and efficient memory management. Fact and dimension tables should be designed for both loading efficiency and query performance. Query optimization, incremental data processing, and caching mechanisms further improve responsiveness. Regular monitoring and tuning of ETL workflows help identify and resolve bottlenecks, ensuring consistent performance even as data volumes grow.

Automation and Scheduling

Automation reduces manual intervention and ensures reliable, repeatable ETL execution. Scheduling mechanisms trigger processes based on time, events, or dependencies, while monitoring and alerting frameworks ensure timely response to failures. Automation also supports batch processing, incremental loads, and integration with downstream reporting systems. By minimizing human involvement, automation reduces errors, improves consistency, and allows resources to focus on analytical tasks rather than routine data management.

Security Considerations

Securing ETL processes and warehouse data is critical. Role-based access, encryption, and authentication mechanisms ensure that sensitive data is protected and only accessible by authorized users. Security measures extend to both execution environments and stored data, supporting compliance with organizational and regulatory requirements. Logging and audit trails further reinforce accountability and facilitate monitoring of data access and process execution.

Testing and Validation

Comprehensive testing ensures that ETL processes produce accurate, complete, and consistent data. Unit tests verify individual transformations, integration tests check interactions between components, and end-to-end tests validate overall workflows. Data validation includes ensuring consistency with source systems, verifying calculated measures, and confirming adherence to business rules. Ongoing testing during development and after deployment helps maintain the reliability and accuracy of the warehouse over time.

Continuous Improvement

Continuous improvement involves analyzing ETL processes, identifying inefficiencies, and refining workflows. Monitoring execution metrics, adjusting transformations, and optimizing control flow enhance efficiency and reduce processing time. Iterative improvements allow the warehouse to adapt to evolving business needs and growing data volumes. Regular evaluation of architecture, process design, and performance metrics ensures that the data warehouse remains a scalable and reliable analytical resource.

Integration with Analytics

The ultimate purpose of a data warehouse is to support analytical and business intelligence needs. Ensuring that ETL processes deliver clean, consistent, and well-structured data enhances the value of downstream analytics. Fact and dimension tables should support diverse queries, aggregations, and reporting requirements. Timely and accurate data enables informed decision-making and supports strategic initiatives, providing tangible business benefits.

Knowledge Management and Documentation

Documenting ETL processes, transformations, dependencies, and operational procedures is essential for maintainability and knowledge transfer. Clear documentation allows new team members to understand workflows quickly, supports troubleshooting, and ensures continuity when personnel changes occur. Standardized conventions and detailed notes reduce ambiguity, streamline maintenance, and facilitate auditing. Effective knowledge management ensures the long-term sustainability of data warehouse operations.

Holistic Data Warehouse Management

A holistic approach integrates dimension and fact design, ETL execution, data quality, security, monitoring, and analytics into a unified framework. Coordinating all aspects of warehouse management ensures consistency, efficiency, and reliability. This approach reduces redundancy, simplifies maintenance, and provides a scalable foundation for future growth. A well-managed warehouse supports high-quality analytics, strategic insights, and operational excellence, making it a critical component of an organization’s data infrastructure.

Strategic Planning and Architecture

Strategic planning aligns ETL processes and warehouse design with business objectives. It involves anticipating growth, selecting appropriate architectures, and designing scalable and flexible data pipelines. Proper planning ensures that ETL processes can handle increasing data volumes, maintain performance, and support emerging analytical needs. Strategic architecture planning enhances the warehouse’s ability to deliver timely, accurate, and actionable insights.

Advanced Data Warehouse Implementation

Advanced data warehouse implementation requires mastery across multiple domains including dimension and fact table design, ETL processes, data quality, master data management, security, testing, and performance optimization. Effective design of dimensions and fact tables ensures accurate representation and efficient querying. ETL processes must be optimized for data flow, control, error handling, logging, and automation. Incorporating data quality and master data management maintains consistency and reliability, while security and compliance measures protect sensitive information. Continuous improvement, strategic planning, and holistic management ensure that the warehouse remains scalable, maintainable, and aligned with business goals. Integrating these elements provides a robust foundation for analytical insights, informed decision-making, and enterprise-wide intelligence, making the data warehouse a central asset in supporting operational and strategic initiatives.

Advanced ETL Techniques

ETL processes form the backbone of a data warehouse, and mastering advanced techniques ensures both efficiency and accuracy. Data extraction must account for heterogeneous sources, handling structured, semi-structured, and unstructured data efficiently. Transformations must implement complex business logic, data type conversions, and aggregations. Incremental loading is critical because full reloads of large datasets are resource-intensive. Change data capture records inserted, updated, and deleted rows at the source, so each run processes only what has changed. Optimized ETL pipelines keep data fresh while maintaining system performance and reliability.
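A simpler alternative to change data capture is a high-water-mark incremental extract. The previous run's largest modification timestamp is stored, and only newer rows are selected. The sketch below uses Python's built-in `sqlite3` as a stand-in source. The table `src_customer` and its `modified_at` column are hypothetical. SQL Server's native CDC is enabled per table (for example with `sys.sp_cdc_enable_table`) and is not shown here.

```python
import sqlite3

def extract_incremental(conn, last_watermark):
    """Return rows modified after last_watermark, plus the watermark to store for the next run."""
    rows = conn.execute(
        "SELECT customer_id, name, modified_at FROM src_customer "
        "WHERE modified_at > ? ORDER BY modified_at",
        (last_watermark,),
    ).fetchall()
    # Keep the old watermark when nothing changed, so the next run starts from the same point.
    new_watermark = rows[-1][2] if rows else last_watermark
    return rows, new_watermark

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE src_customer (customer_id INTEGER, name TEXT, modified_at TEXT)")
conn.executemany(
    "INSERT INTO src_customer VALUES (?, ?, ?)",
    [(1, "Contoso", "2024-01-01T08:00:00"),
     (2, "Fabrikam", "2024-01-02T09:30:00"),
     (3, "Northwind", "2024-01-03T11:15:00")],
)
# ISO-8601 strings compare correctly as text, so they work as a watermark here.
rows, watermark = extract_incremental(conn, "2024-01-01T12:00:00")
```

Unlike CDC, a timestamp watermark cannot detect rows deleted at the source. That gap is one reason to prefer CDC when deletes matter.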

Dimension Table Enhancements

Dimension tables require careful design to accommodate business requirements and historical analysis. Surrogate keys provide stable identifiers even when natural keys change in the source system. Slowly changing dimension strategies determine how attribute changes are recorded: Type 1 overwrites the old value, while Type 2 inserts a new row version so that history is preserved. Complex hierarchies should be defined to support multiple drill-down paths for analysis. Attribute relationships and aggregations improve query performance by letting the system understand how attributes depend on one another. Conformed dimensions standardize common attributes across different fact tables, ensuring consistency in analysis and reporting.
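A Type 2 slowly changing dimension with surrogate keys can be sketched in plain Python. When a tracked attribute changes, the current row is expired and a new version receives the next surrogate key. The column names (`customer_sk`, `customer_id`, `city`) and the in-memory list standing in for the dimension table are illustrative assumptions.

```python
from datetime import date

def apply_scd2(dimension, incoming, load_date, tracked=("city",)):
    """Apply Type 2 changes in place: expire the current version, then append a new one."""
    next_key = max((row["customer_sk"] for row in dimension), default=0) + 1
    current = {row["customer_id"]: row for row in dimension if row["is_current"]}
    for record in incoming:
        existing = current.get(record["customer_id"])
        if existing and all(existing[col] == record[col] for col in tracked):
            continue  # tracked attributes unchanged: nothing to do
        if existing:
            existing["is_current"] = False  # close out the old version
            existing["end_date"] = load_date
        new_row = {
            "customer_sk": next_key,  # surrogate key, independent of the natural key
            "customer_id": record["customer_id"],
            **{col: record[col] for col in tracked},
            "start_date": load_date,
            "end_date": None,
            "is_current": True,
        }
        dimension.append(new_row)
        current[record["customer_id"]] = new_row
        next_key += 1
    return dimension

dim_customer = []
apply_scd2(dim_customer, [{"customer_id": "C1", "city": "Seattle"}], date(2024, 1, 1))
apply_scd2(dim_customer, [{"customer_id": "C1", "city": "Portland"}], date(2024, 6, 1))
```

Facts loaded before June 2024 keep pointing at the Seattle version, which is what preserves historical context.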

Fact Table Optimization

Fact tables need to be optimized for both storage and query efficiency. Choosing the appropriate granularity impacts the detail level of analysis and storage requirements. Columnstore indexes, partitioning, and aggregation tables enhance query performance, particularly for large datasets. Fact tables must also maintain referential integrity with dimensions, ensuring accurate joins for analytics. Effective indexing and partitioning strategies reduce query times while supporting high-volume data loads, allowing the warehouse to scale efficiently.
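Referential integrity between facts and dimensions is typically enforced during the load. Each natural key is looked up to find its surrogate key. This sketch assumes a common convention, not something the text prescribes: rows whose dimension member is not found are mapped to a reserved "unknown" member (`-1` here). They are also collected for review rather than dropped. The lookup dictionaries and column names are hypothetical.

```python
UNKNOWN_MEMBER_KEY = -1  # hypothetical convention: a dimension row reserved for "unknown"

def build_fact_rows(sales, product_lookup, date_lookup):
    """Replace natural keys with surrogate keys; route lookup misses to the unknown member."""
    facts, misses = [], []
    for sale in sales:
        product_sk = product_lookup.get(sale["product_code"], UNKNOWN_MEMBER_KEY)
        date_sk = date_lookup.get(sale["order_date"], UNKNOWN_MEMBER_KEY)
        if UNKNOWN_MEMBER_KEY in (product_sk, date_sk):
            misses.append(sale)  # keep for review or late-arriving dimension handling
        facts.append({
            "product_sk": product_sk,
            "date_sk": date_sk,
            "quantity": sale["quantity"],
            "sales_amount": sale["sales_amount"],
        })
    return facts, misses

product_lookup = {"BK-100": 1, "BK-200": 2}   # natural key -> surrogate key
date_lookup = {"2024-05-01": 20240501}        # date -> yyyymmdd date key
sales = [
    {"product_code": "BK-100", "order_date": "2024-05-01", "quantity": 2, "sales_amount": 39.98},
    {"product_code": "BK-999", "order_date": "2024-05-01", "quantity": 1, "sales_amount": 12.50},
]
facts, misses = build_fact_rows(sales, product_lookup, date_lookup)
```

Because every fact row references an existing dimension row, inner joins in reports do not silently drop sales.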

Integration Services Workflows

Integration Services provides tools to design and execute ETL workflows. Control flow orchestrates task execution, while data flow handles row-level data transformation. Containers and loops manage dependencies and repetitive tasks. Script tasks and custom components make it possible to implement complex logic not covered by standard transformations. Parameterization enables flexible execution across different environments without modifying package design. Logging and auditing within the workflow capture execution details, errors, and performance metrics for monitoring and troubleshooting.
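Control flow in Integration Services links tasks with precedence constraints that fire on Success, Failure, or Completion. The plain-Python sketch below imitates that behavior to show how a failure path can branch to a notification task. It is a conceptual model, not the SSIS object model. It simplifies by assuming each task has at most one incoming constraint, and the task names are hypothetical.

```python
def run_control_flow(tasks, constraints, start):
    """Execute tasks starting at `start`, following precedence constraints by outcome.

    tasks: task name -> callable (raising an exception means the task failed)
    constraints: (from_task, to_task, condition) with condition in
                 {"Success", "Failure", "Completion"}
    """
    executed = []
    pending = [start]
    while pending:
        name = pending.pop(0)
        try:
            tasks[name]()
            outcome = "Success"
        except Exception:
            outcome = "Failure"
        executed.append((name, outcome))
        for source, target, condition in constraints:
            # "Completion" fires regardless of outcome; otherwise the outcome must match.
            if source == name and condition in (outcome, "Completion"):
                pending.append(target)
    return executed

def extract():
    pass  # placeholder for a data flow task

def load():
    raise RuntimeError("target database unavailable")  # simulated failure

def notify():
    pass  # placeholder for a notification task such as Send Mail

def archive():
    pass

tasks = {"Extract": extract, "Load": load, "Notify": notify, "Archive": archive}
constraints = [
    ("Extract", "Load", "Success"),
    ("Load", "Archive", "Success"),
    ("Load", "Notify", "Failure"),
]
result = run_control_flow(tasks, constraints, "Extract")
```

Here the failed load skips the archive step and runs the notification instead.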

Data Flow Optimization

Optimizing data flow involves configuring buffers, minimizing blocking transformations such as Sort and Aggregate, and enabling parallelism. Buffer sizing and batch processing influence memory usage and throughput. Transformations should be sequenced logically, for example by filtering rows early so that later components process less data. Incremental loading reduces the volume of processed data, shortening execution time. Regular monitoring and tuning of data flow parameters keep ETL operations efficient even as data volume and complexity increase.
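Buffer-based, filter-early processing can be illustrated with a batching generator. In SSIS the buffer size is controlled by Data Flow Task properties such as `DefaultBufferMaxRows` and `DefaultBufferSize`. This Python sketch only mimics the idea: rows move in fixed-size batches, and a filter runs before the row-by-row transformation. The row layout and the `status` filter are assumptions.

```python
from itertools import islice

def buffers(rows, max_rows):
    """Yield lists of at most max_rows rows, similar in spirit to data flow buffers."""
    iterator = iter(rows)
    while True:
        batch = list(islice(iterator, max_rows))
        if not batch:
            return
        yield batch

def run_pipeline(rows, max_rows=3):
    """Process rows batch by batch; return (rows loaded, batches processed)."""
    loaded = batches = 0
    for batch in buffers(rows, max_rows):
        # Filter first so the later transformation touches fewer rows.
        active = [r for r in batch if r["status"] == "active"]
        # Non-blocking, row-by-row transformation (comparable to a Derived Column).
        transformed = [{**r, "name": r["name"].strip().upper()} for r in active]
        loaded += len(transformed)  # stand-in for a bulk insert of one batch
        batches += 1
    return loaded, batches

rows = [{"name": f" c{i} ", "status": "active" if i % 2 == 0 else "inactive"} for i in range(7)]
print(run_pipeline(rows, max_rows=3))
```

A blocking step such as a sort would need every batch before emitting anything, which is why such steps are best avoided or pushed into the source query.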

Error Handling and Recovery

Robust error handling ensures that ETL processes do not fail silently and allows recovery without data loss. Redirecting error rows, applying conditional logic, and capturing detailed logs provide actionable information for correction. Checkpoints let a failed package restart from the point of failure, reducing the need to reprocess entire datasets. Error handling strategies include row-level validation, exception handling, and notifications that support proactive problem resolution and operational reliability.
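Row-level error redirection resembles setting an SSIS component's error output to "Redirect row". Valid rows continue down the default path. Rows that fail conversion or validation go to a separate output together with a reason. The Python sketch below assumes string-typed input columns named `quantity` and `order_date`, which are hypothetical.

```python
from datetime import date

def validate_and_redirect(rows):
    """Split rows into a default output and an error output carrying an error description."""
    good, errors = [], []
    for row in rows:
        try:
            quantity = int(row["quantity"])
            if quantity < 0:
                raise ValueError("negative quantity")
            order_date = date.fromisoformat(row["order_date"])
        except (KeyError, ValueError) as exc:
            # Redirect instead of failing the whole load; keep the original values for correction.
            errors.append({**row, "error_description": f"{type(exc).__name__}: {exc}"})
            continue
        good.append({**row, "quantity": quantity, "order_date": order_date})
    return good, errors

good, errors = validate_and_redirect([
    {"order_id": 1, "quantity": "3", "order_date": "2024-02-01"},
    {"order_id": 2, "quantity": "x", "order_date": "2024-02-01"},
    {"order_id": 3, "quantity": "-1", "order_date": "2024-02-01"},
    {"order_id": 4, "quantity": "2", "order_date": "2024-13-01"},
])
```

The error rows would typically be written to an error table, so they can be corrected and reloaded without rerunning the good rows.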

Data Quality Integration

Maintaining data quality within ETL processes is essential for analytics reliability. Data cleansing includes standardization, validation, and deduplication. Profiling identifies anomalies, missing values, and inconsistencies. Establishing reusable rules and knowledge bases ensures consistent data treatment across multiple processes. High-quality data improves analytical outcomes, decision-making accuracy, and overall confidence in warehouse information. Integrating data quality operations into ETL workflows streamlines maintenance and reduces downstream errors.
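Profiling, standardization and deduplication can be combined in a small sketch. The profile reports blank and distinct counts per column. The cleansing step applies a reusable mapping rule (loosely comparable to a domain rule in a Data Quality Services knowledge base) before deduplicating on a standardized key. The country mapping and the choice of email as the match key are hypothetical.

```python
def profile(rows, columns):
    """Report blank (missing or whitespace-only) and distinct value counts per column."""
    report = {}
    for col in columns:
        values = [row.get(col) for row in rows]
        blanks = sum(1 for v in values if v is None or str(v).strip() == "")
        report[col] = {"blank": blanks, "distinct": len(set(values))}
    return report

# Hypothetical reusable standardization rule for a "country" domain.
COUNTRY_RULES = {"USA": "US", "UNITED STATES": "US"}

def cleanse(rows):
    """Standardize values, then drop rows with no email or a duplicate standardized email."""
    seen, result = set(), []
    for row in rows:
        email = (row.get("email") or "").strip().lower()
        country = (row.get("country") or "").strip().upper()
        country = COUNTRY_RULES.get(country, country)
        if not email or email in seen:
            continue  # invalid (missing match key) or duplicate
        seen.add(email)
        result.append({"email": email, "country": country})
    return result

rows = [
    {"email": "Ann@Contoso.com ", "country": "usa"},
    {"email": "ann@contoso.com", "country": "United States"},
    {"email": None, "country": "US"},
    {"email": "bob@fabrikam.com", "country": " us "},
]
print(profile(rows, ["email", "country"]))
print(cleanse(rows))
```

Standardizing before deduplicating matters here: the first two rows only match once case and whitespace are normalized.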

Master Data Management

Master data management standardizes critical business entities and provides a single source of truth. Proper integration into ETL ensures consistency across all fact and dimension tables. Managing hierarchies, relationships, and attributes centrally reduces redundancy and enhances reporting accuracy. Versioning of master data allows tracking changes over time, ensuring historical accuracy while supporting current operational needs. Effective master data management strengthens data governance and supports enterprise-wide analytics.
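Versioned master data can be sketched as dated versions per entity with an "as of" lookup. A historical fact is then resolved against the master record that was valid at the time. This is an illustrative Python model, not the Master Data Services API. The entity code `P-100` and the `category` attribute are hypothetical.

```python
from bisect import bisect_right
from datetime import date

class MasterEntityHistory:
    """Store dated versions of master-data attributes and answer as-of queries."""

    def __init__(self):
        self._versions = {}  # entity code -> list of (effective_date, attributes), sorted by date

    def publish(self, code, effective_date, attributes):
        versions = self._versions.setdefault(code, [])
        versions.append((effective_date, dict(attributes)))
        versions.sort(key=lambda item: item[0])

    def as_of(self, code, when):
        """Return the attributes in effect on `when`, or None if no version existed yet."""
        versions = self._versions.get(code, [])
        dates = [effective for effective, _ in versions]
        index = bisect_right(dates, when)  # versions effective on or before `when`
        return dict(versions[index - 1][1]) if index else None

history = MasterEntityHistory()
history.publish("P-100", date(2023, 1, 1), {"category": "Bikes"})
history.publish("P-100", date(2024, 1, 1), {"category": "Road Bikes"})
print(history.as_of("P-100", date(2023, 6, 30)))
```

Current reporting reads the latest version, while historical analysis uses the version in effect on the transaction date.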

Performance Tuning

Performance tuning spans ETL execution, data storage, and query optimization. Partitioning, indexing, and parallel processing improve data load times and query responsiveness. Efficient memory usage and minimizing blocking transformations prevent bottlenecks. Monitoring execution metrics allows identification of slow-performing components, enabling targeted optimization. Continuous tuning ensures ETL workflows and warehouse structures can accommodate growing data volumes and increasing user queries without degradation in performance.
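Partitioning works because each row maps deterministically to one partition through the partition function's boundary values. The Python function below mimics how a SQL Server partition function assigns partition numbers (the same value `$PARTITION` returns). With `RANGE RIGHT`, a boundary value belongs to the partition on its right; with `RANGE LEFT`, to the one on its left. N boundaries produce N+1 partitions. The monthly boundaries are a hypothetical example.

```python
from bisect import bisect_left, bisect_right
from datetime import date

def partition_number(value, boundaries, range_right=True):
    """Return the 1-based partition number for value, given sorted boundary values.

    RANGE RIGHT: each boundary value belongs to the partition on its right.
    RANGE LEFT:  each boundary value belongs to the partition on its left.
    """
    if range_right:
        return bisect_right(boundaries, value) + 1
    return bisect_left(boundaries, value) + 1

# Monthly boundaries for a hypothetical fact table partitioned on order date.
boundaries = [date(2024, 1, 1), date(2024, 2, 1), date(2024, 3, 1)]
for d in (date(2023, 12, 31), date(2024, 1, 1), date(2024, 2, 15)):
    print(d, partition_number(d, boundaries))
```

`RANGE RIGHT` is a common choice for date keys, because each month's first day then starts a new partition. That suits partition elimination in queries and sliding-window loads.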

Security and Compliance

Data security is a critical aspect of warehouse management. Implementing role-based access control, encryption, and authentication mechanisms protects sensitive information. Security measures extend to ETL execution, storage, and reporting environments. Auditing and logging track data access and changes, supporting compliance with organizational policies and regulatory requirements. A secure warehouse architecture ensures data integrity and prevents unauthorized access while enabling authorized users to perform analytics safely.
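Role-based access control with auditing reduces to two steps: checking a requested permission against the user's roles, and recording every decision. In SQL Server this is done with database roles, `GRANT` statements and auditing features. The Python sketch below only models the logic. The role names and permission strings are hypothetical, and access is denied unless a role explicitly grants it.

```python
# Hypothetical roles; in SQL Server these would correspond to database roles and GRANTs.
ROLE_PERMISSIONS = {
    "etl_operator": {"load:fact_sales", "read:staging"},
    "analyst": {"read:fact_sales", "read:dim_customer"},
    "auditor": {"read:audit_log"},
}

def is_allowed(user_roles, permission):
    """Deny by default: allow only if one of the user's roles grants the permission."""
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in user_roles)

def audited_access(user, user_roles, permission, audit_log):
    """Check access and append the decision to the audit log, whether allowed or denied."""
    allowed = is_allowed(user_roles, permission)
    audit_log.append({"user": user, "permission": permission, "allowed": allowed})
    return allowed

audit_log = []
audited_access("kim", ["analyst"], "read:fact_sales", audit_log)
audited_access("kim", ["analyst"], "load:fact_sales", audit_log)
```

Logging denied requests as well as granted ones is what makes the audit trail useful for compliance reviews.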

Deployment Strategies

Deploying ETL packages requires careful management of configuration, dependencies, and environment-specific settings. Parameterized packages reduce manual intervention and allow seamless transitions across development, testing, and production. Validation before deployment ensures correctness and reduces operational issues. Deployment strategies should include rollback plans and monitoring to handle unexpected failures. Centralized configuration management improves maintainability, consistency, and operational reliability of ETL workflows.
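Environment-specific configuration with pre-deployment validation can be sketched as follows. Package defaults are merged with per-environment overrides, and deployment is refused if required values are missing or invalid. In the SSIS project deployment model this role is played by catalog environments and environment variables. The parameter names and connection strings below are hypothetical.

```python
REQUIRED = ("SourceConnection", "TargetConnection", "BatchSize")

DEFAULTS = {"BatchSize": "10000"}  # hypothetical package-level default

# Hypothetical environment-specific overrides.
ENVIRONMENTS = {
    "dev": {"SourceConnection": "Server=dev-sql;Database=Sales",
            "TargetConnection": "Server=dev-sql;Database=DW",
            "BatchSize": "1000"},
    "prod": {"SourceConnection": "Server=prod-sql;Database=Sales",
             "TargetConnection": "Server=prod-dw;Database=DW"},
}

def resolve_parameters(environment):
    """Merge defaults with environment overrides and validate them before deployment."""
    if environment not in ENVIRONMENTS:
        raise KeyError(f"unknown environment: {environment}")
    params = {**DEFAULTS, **ENVIRONMENTS[environment]}
    missing = [name for name in REQUIRED if not params.get(name)]
    if missing:
        raise ValueError(f"{environment}: missing parameters {missing}")
    if not params["BatchSize"].isdigit() or int(params["BatchSize"]) <= 0:
        raise ValueError(f"{environment}: BatchSize must be a positive integer")
    params["BatchSize"] = int(params["BatchSize"])
    return params

print(resolve_parameters("prod"))
```

The package design stays identical across environments; only the resolved values differ.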

Testing and Validation

Thorough testing verifies that ETL processes produce accurate and complete data. Unit tests evaluate individual transformations, while integration and end-to-end tests confirm proper interactions between components. Data validation ensures compliance with business rules, accuracy of calculated measures, and consistency with source systems. Continuous testing during development and after deployment supports reliability and maintains confidence in the data warehouse outputs.
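A unit test isolates one transformation and checks it against known inputs and expected outputs. The sketch below tests a hypothetical derived-column calculation, line total after a percentage discount rounded to cents. It uses pytest-style test functions (running them under pytest is an assumption; they can also be called directly).

```python
from decimal import Decimal

def derive_line_total(row):
    """Hypothetical derived column: quantity * unit_price less a percentage discount, in cents."""
    gross = Decimal(row["quantity"]) * Decimal(row["unit_price"])
    discount = gross * Decimal(row.get("discount_pct", "0")) / 100
    return (gross - discount).quantize(Decimal("0.01"))

def test_without_discount():
    assert derive_line_total({"quantity": "3", "unit_price": "19.99"}) == Decimal("59.97")

def test_with_discount():
    row = {"quantity": "2", "unit_price": "50.00", "discount_pct": "10"}
    assert derive_line_total(row) == Decimal("90.00")
```

Keeping transformation logic in small, testable functions lets these tests run on every change, while integration and end-to-end tests cover the full workflow.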

Automation and Scheduling

Automation streamlines ETL execution, reduces manual effort, and ensures repeatability. Scheduling allows jobs to be triggered by time, events, or dependencies, supporting batch processing and incremental loads. Automated alerts and monitoring facilitate rapid response to failures. By reducing manual intervention, automation increases reliability, ensures consistent execution, and frees resources for analytical tasks rather than operational overhead.
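Dependency-based triggering with retries and alerts can be sketched using Python's `graphlib.TopologicalSorter` (standard library, Python 3.9+). Jobs run in dependency order. A failing job is retried and raises an alert once retries are exhausted, and its dependents are skipped. Time-based schedules, such as SQL Server Agent job schedules, are outside this sketch. The job names are hypothetical.

```python
from graphlib import TopologicalSorter

def run_schedule(jobs, dependencies, max_retries=1, alert=print):
    """Run jobs in dependency order with retries; skip jobs whose prerequisites did not succeed.

    jobs: name -> callable; dependencies: name -> set of prerequisite job names.
    """
    graph = {name: dependencies.get(name, set()) for name in jobs}
    status = {}
    for name in TopologicalSorter(graph).static_order():
        if any(status.get(dep) != "succeeded" for dep in graph[name]):
            status[name] = "skipped"  # a prerequisite failed or was skipped
            continue
        for attempt in range(1 + max_retries):
            try:
                jobs[name]()
                status[name] = "succeeded"
                break
            except Exception as exc:
                status[name] = "failed"
                if attempt == max_retries:
                    alert(f"{name} failed after {attempt + 1} attempts: {exc}")
    return status

calls = {"extract": 0}

def flaky_extract():
    calls["extract"] += 1
    if calls["extract"] == 1:
        raise ConnectionError("source busy")  # transient failure, succeeds on retry

def load():
    pass

def report():
    raise RuntimeError("report failed")  # persistent failure

def archive():
    pass

jobs = {"extract": flaky_extract, "load": load, "report": report, "archive": archive}
dependencies = {"load": {"extract"}, "report": {"load"}, "archive": {"report"}}
alerts = []
status = run_schedule(jobs, dependencies, max_retries=1, alert=alerts.append)
```

The transient extract failure is absorbed by the retry, while the persistent report failure produces a single alert and stops the downstream archive job.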

Monitoring and Reporting

Monitoring ETL processes ensures early detection of performance issues, errors, or data anomalies. Metrics include execution time, throughput, error counts, and resource utilization. Centralized monitoring dashboards provide visibility into all ETL operations, supporting proactive management. Integration with reporting systems allows tracking data quality, audit compliance, and operational performance. Effective monitoring ensures ongoing reliability and helps optimize warehouse operations.
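The monitoring metrics listed above can be aggregated from execution records into per-package averages, throughput and error counts, and slow packages can be flagged against a threshold. In SSIS such data can be read from the SSISDB catalog (for example the `catalog.executions` view). Here the log entries are hypothetical dictionaries.

```python
from collections import defaultdict

def summarize_runs(log_entries):
    """Aggregate average duration, rows per second, and error counts per package."""
    totals = defaultdict(lambda: {"runs": 0, "seconds": 0.0, "rows": 0, "errors": 0})
    for entry in log_entries:
        stats = totals[entry["package"]]
        stats["runs"] += 1
        stats["seconds"] += entry["duration_s"]
        stats["rows"] += entry["rows"]
        stats["errors"] += entry["errors"]
    return {
        package: {
            "avg_seconds": s["seconds"] / s["runs"],
            "rows_per_second": s["rows"] / s["seconds"] if s["seconds"] else 0.0,
            "errors": s["errors"],
        }
        for package, s in totals.items()
    }

def flag_slow(summary, threshold_seconds):
    """Return packages whose average duration exceeds the threshold."""
    return sorted(p for p, s in summary.items() if s["avg_seconds"] > threshold_seconds)

log_entries = [
    {"package": "LoadSales.dtsx", "duration_s": 120.0, "rows": 60000, "errors": 0},
    {"package": "LoadSales.dtsx", "duration_s": 180.0, "rows": 60000, "errors": 2},
    {"package": "LoadCustomer.dtsx", "duration_s": 30.0, "rows": 3000, "errors": 0},
]
summary = summarize_runs(log_entries)
print(flag_slow(summary, threshold_seconds=60))
```

Tracking these figures over time shows trends such as throughput falling as volumes grow, not just individual failures.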

Analytics Enablement

The data warehouse serves as the foundation for analytics and business intelligence. Clean, structured, and high-quality data enables accurate reporting, trend analysis, and predictive modeling. Properly designed fact and dimension tables support complex queries and aggregations. Timely access to reliable data empowers informed decision-making and enhances operational and strategic initiatives. The integration of analytics into warehouse design maximizes the business value of stored data.
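The kind of aggregation a star schema supports is a fact table joined to its dimensions and grouped by dimension attributes. The sketch runs one such query against an in-memory SQLite database from Python's standard library; the schema and sample values are hypothetical. The same query shape applies to SQL Server.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE dim_product (product_sk INTEGER PRIMARY KEY, category TEXT);
CREATE TABLE dim_date (date_sk INTEGER PRIMARY KEY, calendar_year INTEGER, month_num INTEGER);
CREATE TABLE fact_sales (
    product_sk INTEGER REFERENCES dim_product(product_sk),
    date_sk INTEGER REFERENCES dim_date(date_sk),
    sales_amount REAL
);
INSERT INTO dim_product VALUES (1, 'Bikes'), (2, 'Accessories');
INSERT INTO dim_date VALUES (20240115, 2024, 1), (20240210, 2024, 2);
INSERT INTO fact_sales VALUES (1, 20240115, 500.0), (2, 20240115, 25.0),
                              (1, 20240210, 750.0), (2, 20240210, 40.0);
""")

# Slice the fact measure by attributes from two dimensions.
query = """
SELECT d.calendar_year, d.month_num, p.category, SUM(f.sales_amount) AS total_sales
FROM fact_sales AS f
JOIN dim_product AS p ON p.product_sk = f.product_sk
JOIN dim_date AS d ON d.date_sk = f.date_sk
GROUP BY d.calendar_year, d.month_num, p.category
ORDER BY d.calendar_year, d.month_num, p.category
"""
rows = conn.execute(query).fetchall()
for row in rows:
    print(row)
```

Because measures live in one narrow table and descriptive attributes in the dimensions, new slices such as by quarter or by region only need different grouping columns, not a new table design.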

Knowledge Management and Documentation

Documenting ETL processes, data flows, and warehouse architecture ensures maintainability and knowledge transfer. Clear, structured documentation helps new team members understand workflows, supports troubleshooting, and facilitates auditing. Consistent documentation standards improve operational efficiency and reduce risk. Knowledge management ensures long-term sustainability and enhances collaboration among teams responsible for warehouse maintenance and analytics.

Scalability and Future-Proofing

Scalability involves designing ETL processes and warehouse structures to handle growing data volumes and evolving business requirements. Flexible architectures, partitioning, indexing, and optimized workflows enable the warehouse to scale without compromising performance. Future-proofing ensures that new sources, analytics requirements, or business rules can be integrated without major redesigns. Planning for scalability ensures long-term value and operational efficiency of the data warehouse infrastructure.

Continuous Improvement and Optimization

Continuous improvement evaluates ETL processes, warehouse design, and analytics workflows to identify inefficiencies and areas for enhancement. Regular performance reviews, tuning, and workflow optimization ensure that the warehouse remains responsive and reliable. Iterative enhancements allow adaptation to changing business needs and technological advances. A focus on continuous improvement maximizes efficiency, reliability, and business impact of the data warehouse environment.

Conclusion

Comprehensive mastery of data warehouse implementation involves expertise across dimension and fact table design, advanced ETL processes, data quality integration, master data management, performance tuning, automation, monitoring, and security. Each component contributes to a cohesive system that delivers accurate, timely, and actionable insights. Optimized ETL processes, robust error handling, and continuous monitoring ensure operational reliability. Data quality and master data strategies maintain consistency and trustworthiness. Security measures protect sensitive information, while scalability and continuous improvement strategies support long-term sustainability. When all elements are integrated effectively, the data warehouse becomes a powerful analytical engine, enabling informed decision-making, supporting strategic initiatives, and providing measurable business value.
