2V0-41.20 Exam Prep: Step-by-Step Guide to Earning Your VCP-NV 2021

The NSX-T platform is designed to provide a comprehensive network virtualization solution that decouples networking services from the underlying physical infrastructure. It offers a complete suite of capabilities for building, managing, and securing virtual networks in a data center environment. NSX-T focuses on flexibility and scalability, supporting both traditional and modern applications across various deployment models. Candidates preparing for the 2V0-41.20 exam need a deep understanding of the platform’s architecture, including its components and the roles they play in delivering network services.

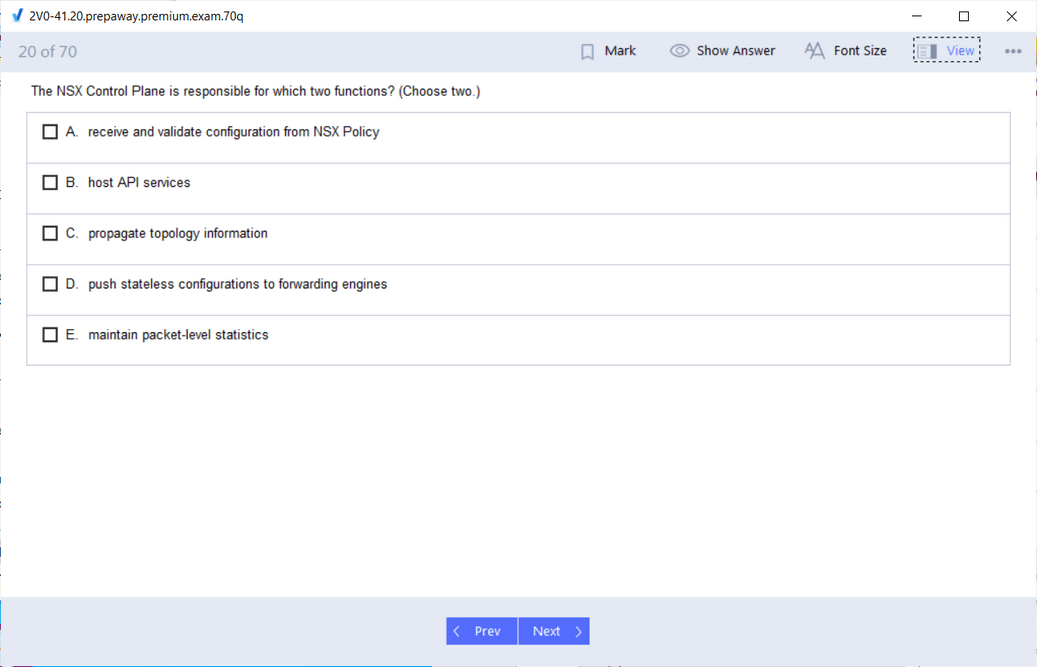

The architecture of NSX-T is divided into three major planes: the management plane, the control plane, and the data plane. The management plane is responsible for configuring and monitoring the network environment, managing policies, and handling automation tasks. The control plane propagates network information and ensures consistent communication between different network elements. The data plane carries the actual network traffic, applying policies and executing the forwarding rules defined by the control plane. Familiarity with how these planes interact is essential for successfully implementing and troubleshooting NSX-T environments.

Core Components and Features

NSX-T includes multiple components that work together to deliver advanced networking and security functionality. Key components include the NSX Manager, which provides the user interface and REST API for configuration and management; the central control plane, which since NSX-T 2.4 runs inside the NSX Manager appliances rather than on separate NSX Controller VMs; and the NSX Edge, which provides routing, load balancing, and gateway services. Candidates should understand the deployment options, configuration settings, and operational behavior of these components to effectively plan and manage virtual networks.

NSX-T also introduces logical switching and routing capabilities that allow networks to be provisioned and managed independently of physical hardware. Logical switches enable segment isolation and simplified network topology, while logical routers allow seamless communication between segments. Knowledge of distributed firewall rules, security policies, and micro-segmentation techniques is critical, as these features form the backbone of NSX-T’s security model.

Preparing for the Exam

Effective preparation for the 2V0-41.20 exam requires a combination of theoretical knowledge and practical skills. Candidates should start by reviewing the official NSX-T documentation and exam blueprint to identify key topics and objectives. Understanding the scope of the exam and the weight of different domains helps prioritize study efforts and ensures comprehensive coverage of all essential areas.

Creating a structured study plan is highly recommended. Break down the content into manageable segments, focusing on one topic at a time, and progressively build a deeper understanding. Key domains often include network virtualization concepts, NSX-T architecture, logical switching and routing, security features, and operational tasks. Combining reading with hands-on practice enhances comprehension and retention.

Hands-On Lab Practice

Practical experience is crucial for mastering NSX-T and passing the 2V0-41.20 exam. Setting up a lab environment allows candidates to explore configurations, test scenarios, and troubleshoot issues in a controlled setting. Labs can include creating logical switches and routers, implementing distributed firewall rules, configuring Edge services, and deploying security policies. This experiential learning helps reinforce theoretical concepts and prepares candidates for real-world tasks.

While working in a lab, it is important to simulate complex network topologies and use case scenarios. Testing failover, redundancy, and advanced security configurations ensures a comprehensive understanding of the platform. Documenting lab exercises and results also aids in reviewing concepts and identifying areas that require further study.

Study Techniques and Learning Strategies

Engaging with peers through study groups or online forums can significantly enhance preparation. Discussing challenging concepts, solving problems collaboratively, and sharing practical experiences provide new perspectives and reinforce understanding. Learning from others’ approaches and troubleshooting methods can expose candidates to scenarios they might not encounter in a solo study routine.

Using multiple study resources in combination can improve knowledge retention. Exam guides, official documentation, and practice exercises should be integrated into a structured plan. Mapping study material to the exam blueprint ensures that all critical topics are covered. Regular self-assessment through quizzes or scenario-based exercises helps track progress and highlights areas needing additional focus.

Focus Areas for the Exam

A strong understanding of the control plane and data plane functions is critical. Candidates should know how NSX-T distributes network information, manages routing tables, and applies forwarding rules. Security is another major focus, including distributed firewall rules, micro-segmentation strategies, and policy enforcement mechanisms. Understanding these concepts not only supports exam preparation but also equips candidates for operational tasks in virtualized network environments.

NSX-T Edge services, such as load balancing, VPN, and NAT, are also essential topics. Candidates should be able to configure and manage these services, understand their integration with the NSX-T environment, and troubleshoot related issues. Knowledge of Edge clusters, high availability, and scaling options is important to ensure smooth operations in complex network deployments.

Automation and operational tasks are another critical area. Candidates should understand how to manage NSX-T using APIs, scripts, and automation tools. Knowledge of monitoring, logging, and troubleshooting techniques is essential for maintaining a healthy environment and addressing potential issues proactively. Familiarity with backup, restore, and upgrade procedures is also important to demonstrate full operational competence.

Practical Tips for Mastery

Consistent study and hands-on practice are key to exam success. Candidates should allocate regular study periods, review material frequently, and practice configurations repeatedly in a lab setting. Combining theoretical study with practical exercises ensures a solid understanding of both concepts and application.

Documenting learning progress, key configurations, and troubleshooting steps can reinforce knowledge. Creating diagrams, flowcharts, and notes for network topologies and policy structures provides a quick reference and aids in understanding complex relationships. Revisiting these materials periodically strengthens retention and builds confidence for exam day.

Networking with other learners and professionals can provide additional insights. Sharing experiences, discussing troubleshooting approaches, and exploring alternative configurations can enhance understanding and highlight best practices. Exposure to a variety of scenarios helps candidates anticipate challenges and prepares them for problem-solving under exam conditions.

Integrating Knowledge and Skills

The 2V0-41.20 exam tests both knowledge and practical ability. Success requires integrating theoretical understanding with hands-on expertise. Candidates should be comfortable navigating NSX-T interfaces, interpreting configuration options, implementing security policies, and troubleshooting network issues. This combination of skills ensures not only passing the exam but also being ready to manage real-world NSX-T deployments effectively.

Building confidence through repeated practice and structured study is essential. As candidates progress, they should focus on areas of difficulty, revisit concepts that are less familiar, and validate understanding through lab exercises. This iterative approach ensures a well-rounded preparation that covers all critical aspects of NSX-T.

Preparing for the 2V0-41.20 exam involves understanding the NSX-T platform, gaining hands-on experience, and applying structured study techniques. By focusing on core components, security features, logical networking, Edge services, and operational tasks, candidates can develop a comprehensive skill set. Consistent practice, collaborative learning, and systematic review enhance both knowledge retention and confidence. With dedication and a practical approach, candidates can successfully navigate the exam and gain proficiency in managing NSX-T environments.

Advanced Networking Concepts in NSX-T

A thorough understanding of networking concepts is essential for mastering NSX-T and preparing for the 2V0-41.20 exam. Candidates should focus on how logical networks are created and managed, including the configuration of segments, transport zones, and overlay networks. Logical segments act as isolated broadcast domains, allowing for secure and efficient traffic flow within virtualized environments. Overlay networks enable communication across physical hosts without dependency on the underlying physical topology. Knowledge of these concepts is crucial for implementing scalable and flexible network designs.
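The cost of overlay encapsulation can be made concrete with some header arithmetic. The sketch below assumes Geneve over IPv4 with no VLAN tag on the inner frame. The Geneve options length is left as a parameter because the options NSX-T actually inserts are not specified here. VMware documents a minimum underlay MTU of 1600 bytes for overlay traffic, which leaves headroom above the base figure.

```python
# Rough underlay MTU arithmetic for Geneve-encapsulated overlay traffic.
# Header sizes are the standard fixed sizes; the Geneve options length is an
# assumption to replace with what your environment actually uses.

INNER_ETHERNET = 14   # inner Ethernet header carried inside the tunnel (no 802.1Q tag)
GENEVE_BASE = 8       # fixed Geneve header (RFC 8926)
OUTER_UDP = 8         # outer UDP header (Geneve uses UDP port 6081)
OUTER_IPV4 = 20       # outer IPv4 header without IP options


def required_underlay_mtu(inner_ip_mtu: int = 1500, geneve_options: int = 0) -> int:
    """Return the minimum IP MTU the physical network must carry.

    The outer Ethernet header is not counted because an interface MTU
    describes the IP packet size, not the full Ethernet frame.
    """
    return inner_ip_mtu + INNER_ETHERNET + GENEVE_BASE + geneve_options + OUTER_UDP + OUTER_IPV4


if __name__ == "__main__":
    print(required_underlay_mtu())                   # 1550
    print(required_underlay_mtu(geneve_options=40))  # 1590
```

A standard 1500-byte guest MTU therefore needs at least 1550 bytes underneath before any options are added, which is why a default 1500-byte physical network is not sufficient for overlay segments.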

Routing in NSX-T is handled through both distributed and centralized mechanisms. Distributed routing allows traffic to be routed at the hypervisor level, reducing latency and improving efficiency, while centralized routing through Edge nodes handles north-south traffic and external connectivity. Understanding the deployment and configuration of Tier-0 and Tier-1 gateways (logical routers), route advertisement and redistribution, and high availability is critical for ensuring proper network operations. Candidates should also be familiar with the dynamic routing protocols available on the Tier-0 gateway (BGP, plus OSPFv2 from NSX-T 3.1.1 onward), along with their configuration options and use cases.
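A routing-table lookup determines whether a packet stays on the distributed router or heads north through the Tier-0. The following is a generic longest-prefix-match sketch, not NSX-T's internal implementation. The table entries and next-hop labels are hypothetical, loosely modeled on a Tier-1 distributed router with two connected segments and a default route toward the Tier-0.

```python
import ipaddress

# Hypothetical forwarding table for a Tier-1 distributed router (DR).
# Prefixes and next-hop labels are illustrative only.
ROUTES = {
    "10.10.1.0/24": "connected: web segment",
    "10.10.2.0/24": "connected: app segment",
    "10.10.0.0/16": "static: hypothetical summary route",
    "0.0.0.0/0": "default: Tier-0 via router link",
}


def lookup(dst: str, routes: dict = ROUTES) -> str:
    """Return the next hop of the most specific prefix that contains dst."""
    addr = ipaddress.ip_address(dst)
    best_net, best_hop = None, None
    for prefix, next_hop in routes.items():
        net = ipaddress.ip_network(prefix)
        # Longest prefix wins: a /24 beats a /16, which beats the /0 default.
        if addr in net and (best_net is None or net.prefixlen > best_net.prefixlen):
            best_net, best_hop = net, next_hop
    if best_hop is None:
        raise LookupError(f"no route to {dst}")
    return best_hop


print(lookup("10.10.2.15"))  # connected: app segment
print(lookup("8.8.8.8"))     # default: Tier-0 via router link
```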

Security and Micro-Segmentation

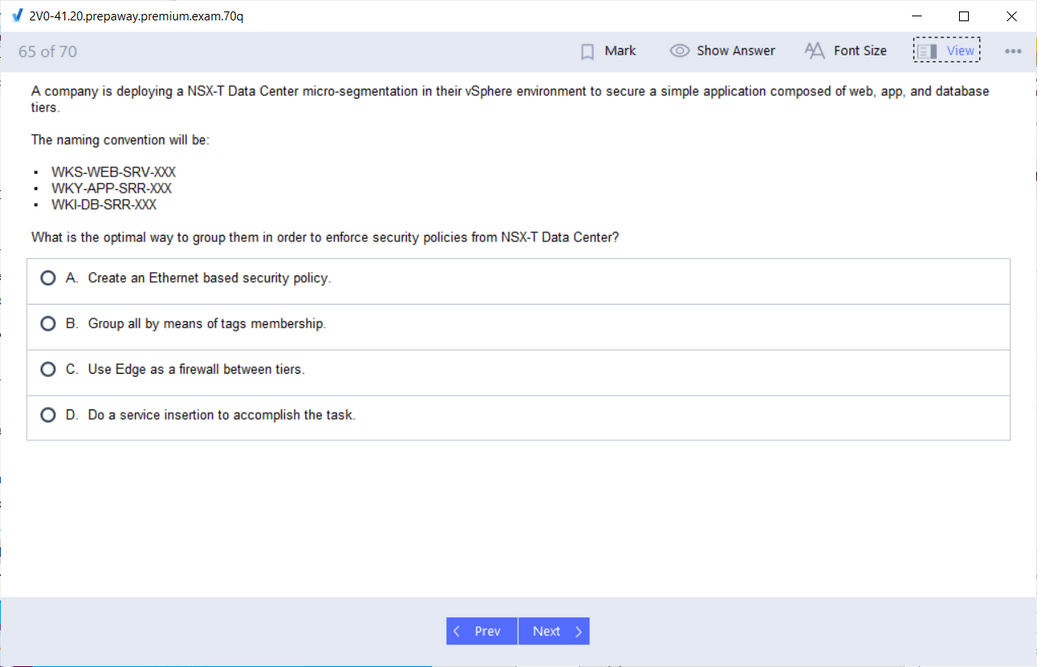

Security is a fundamental aspect of NSX-T. Micro-segmentation allows fine-grained control over east-west traffic, enabling policies to be applied at the virtual machine level. Distributed firewalls enforce these policies consistently across all workloads, regardless of their location. Candidates should understand the types of firewall rules, policy inheritance, and the effect of rule ordering on traffic flow. In addition to firewall configuration, knowledge of service insertion, identity-based firewalling, and security groups is important for implementing dynamic security policies.
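Rule ordering matters because the distributed firewall evaluates rules top-down and applies the first match. The simplified model below illustrates that behavior. The group names, services, and `Rule` structure are hypothetical. Real rules also match on applied-to scope, direction, and context profiles. The default action is a parameter because the default rule is configurable.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional, Tuple


@dataclass(frozen=True)
class Rule:
    name: str
    src: Optional[str]      # source group name; None means "any"
    dst: Optional[str]      # destination group name; None means "any"
    service: Optional[str]  # service name; None means "any"
    action: str             # "ALLOW", "DROP" or "REJECT"


def evaluate(rules, src_groups: FrozenSet[str], dst_groups: FrozenSet[str],
             service: str, default_action: str = "ALLOW") -> Tuple[str, str]:
    """Return (rule name, action) of the first rule that matches, top-down."""
    for rule in rules:
        if rule.src is not None and rule.src not in src_groups:
            continue
        if rule.dst is not None and rule.dst not in dst_groups:
            continue
        if rule.service is not None and rule.service != service:
            continue
        return rule.name, rule.action  # first match wins; later rules are never consulted
    return "default", default_action


allow_https = Rule("web-to-app-https", "web", "app", "HTTPS", "ALLOW")
deny_app = Rule("deny-all-to-app", None, "app", None, "DROP")
flow = dict(src_groups=frozenset({"web"}), dst_groups=frozenset({"app"}), service="HTTPS")

print(evaluate([allow_https, deny_app], **flow))  # ('web-to-app-https', 'ALLOW')
print(evaluate([deny_app, allow_https], **flow))  # ('deny-all-to-app', 'DROP')
```

The same two rules produce opposite outcomes depending only on their order, which is why a broad deny placed above a specific allow silently blocks legitimate traffic.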

NSX-T also integrates advanced security features such as intrusion detection, threat intelligence, and logging for monitoring network activity. Understanding how to configure and interpret these features ensures that network traffic is secure and compliant with organizational policies. Security troubleshooting skills are necessary to identify misconfigurations or unexpected traffic behavior.

Load Balancing and Edge Services

NSX-T Edge services provide critical functions such as load balancing, VPN, NAT, and DHCP. Configuring load balancers involves creating pools, virtual servers, and monitors to distribute traffic efficiently across backend servers. Candidates should understand the differences between Layer 4 and Layer 7 load balancing, persistence methods, and health monitoring techniques.
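The interaction between a pool, its members, and a health monitor can be sketched as a round-robin selector that skips members the monitor has marked down. This is a conceptual model with a hypothetical class and member names. It is not the NSX-T load balancer's implementation or API.

```python
from typing import List


class RoundRobinPool:
    """Round-robin member selection that skips members marked down by a monitor."""

    def __init__(self, members: List[str]):
        self.members = list(members)
        self.healthy = set(self.members)
        self._next = 0

    def mark_down(self, member: str) -> None:  # e.g. after failed health checks
        self.healthy.discard(member)

    def mark_up(self, member: str) -> None:    # e.g. after the monitor succeeds again
        if member in self.members:
            self.healthy.add(member)

    def pick(self) -> str:
        # Try each member at most once per call, starting where the last call stopped.
        for _ in range(len(self.members)):
            member = self.members[self._next]
            self._next = (self._next + 1) % len(self.members)
            if member in self.healthy:
                return member
        raise RuntimeError("no healthy pool members")


pool = RoundRobinPool(["web-01", "web-02", "web-03"])
print([pool.pick() for _ in range(4)])  # ['web-01', 'web-02', 'web-03', 'web-01']
pool.mark_down("web-02")
print([pool.pick() for _ in range(3)])  # ['web-03', 'web-01', 'web-03']
```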

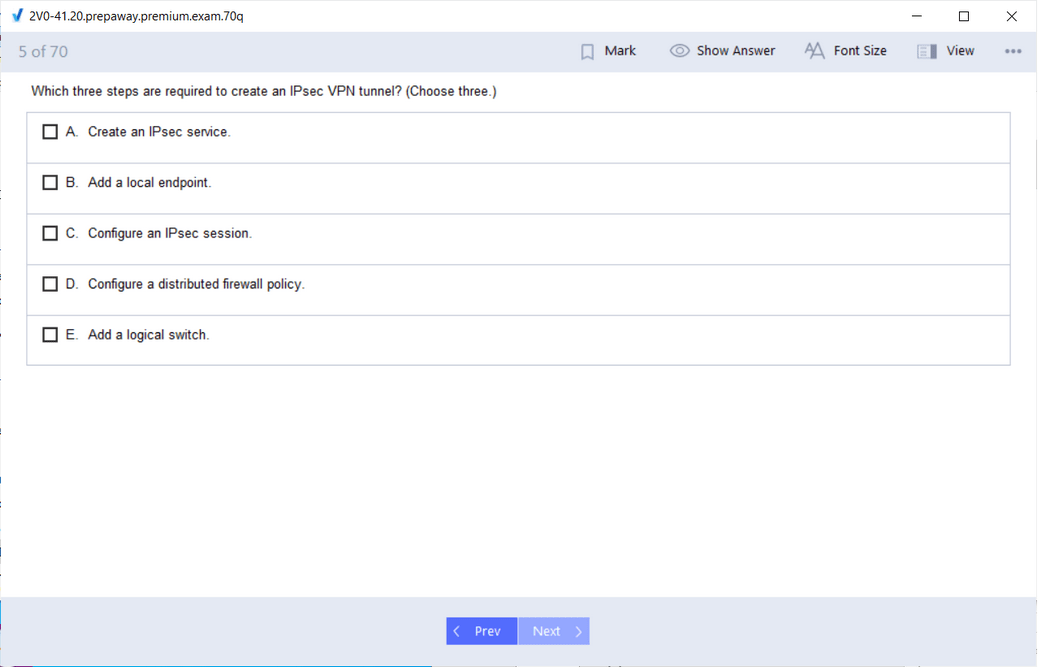

VPN and NAT services provide secure connectivity and address translation between internal and external networks. Configuring IPSec VPNs (policy-based or route-based), L2 VPNs, and NAT rules requires an understanding of encryption protocols, tunneling methods, and network addressing. Edge clusters provide scalability and high availability for these services, ensuring that critical network functions remain operational under load or failure conditions.
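A first-match translation function shows the difference between source and destination NAT. In this simplified sketch, the rule structure is hypothetical and the addresses come from documentation ranges. It ignores ports, traffic direction, and the priority fields that real NAT rules on a gateway carry.

```python
import ipaddress
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass(frozen=True)
class NatRule:
    action: str      # "SNAT" rewrites the source, "DNAT" rewrites the destination
    match: str       # network compared against the source (SNAT) or destination (DNAT)
    translated: str  # address written into the packet on a match


def translate(src: str, dst: str, rules: Iterable[NatRule]) -> Tuple[str, str]:
    """Apply the first matching rule and return the (source, destination) pair."""
    for rule in rules:
        net = ipaddress.ip_network(rule.match)
        if rule.action == "SNAT" and ipaddress.ip_address(src) in net:
            return rule.translated, dst
        if rule.action == "DNAT" and ipaddress.ip_address(dst) in net:
            return src, rule.translated
    return src, dst  # no rule matched: packet passes untranslated


RULES = [
    NatRule("SNAT", "172.16.10.0/24", "203.0.113.10"),   # outbound: hide internal hosts
    NatRule("DNAT", "203.0.113.20/32", "172.16.20.10"),  # inbound: publish one server
]

print(translate("172.16.10.5", "198.51.100.7", RULES))   # ('203.0.113.10', '198.51.100.7')
print(translate("198.51.100.7", "203.0.113.20", RULES))  # ('198.51.100.7', '172.16.20.10')
```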

Automation and Operational Management

Automation plays a significant role in managing NSX-T at scale. Candidates should be familiar with API-based automation, scripting, and integration with configuration management tools. Automation allows for repeatable deployments, rapid configuration changes, and consistent application of policies across multiple environments. Understanding the structure of NSX-T REST APIs, common endpoints, and authentication methods is important for creating automated workflows.
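A minimal sketch of an authenticated call follows. It assumes HTTP basic authentication and uses the Policy API path `/policy/api/v1/infra/segments` to list segments. The manager hostname and credentials are placeholders. The request is only built, not sent, so the sketch runs without a live NSX Manager. Session-based authentication is an alternative for longer-running scripts.

```python
import base64
import urllib.request


def build_get(manager: str, path: str, username: str, password: str) -> urllib.request.Request:
    """Build (but do not send) a GET request carrying an HTTP basic auth header."""
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    return urllib.request.Request(
        url=f"https://{manager}{path}",
        headers={"Authorization": f"Basic {token}", "Accept": "application/json"},
        method="GET",
    )


# Placeholder hostname and credentials; replace before use.
req = build_get("nsx-mgr.example.local", "/policy/api/v1/infra/segments", "admin", "changeme")
print(req.full_url)      # https://nsx-mgr.example.local/policy/api/v1/infra/segments
print(req.get_method())  # GET
# Sending it would be urllib.request.urlopen(req); lab managers with self-signed
# certificates also need an appropriate SSL context.
```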

Operational management also involves monitoring, logging, and troubleshooting. NSX-T provides dashboards, syslog integration, and alerting mechanisms to track the health and performance of network components. Candidates should know how to interpret logs, monitor traffic patterns, and diagnose issues related to connectivity, performance, and policy enforcement. Knowledge of backup and restore procedures ensures that configurations can be recovered in case of failure, supporting continuity of operations.

Troubleshooting and Optimization

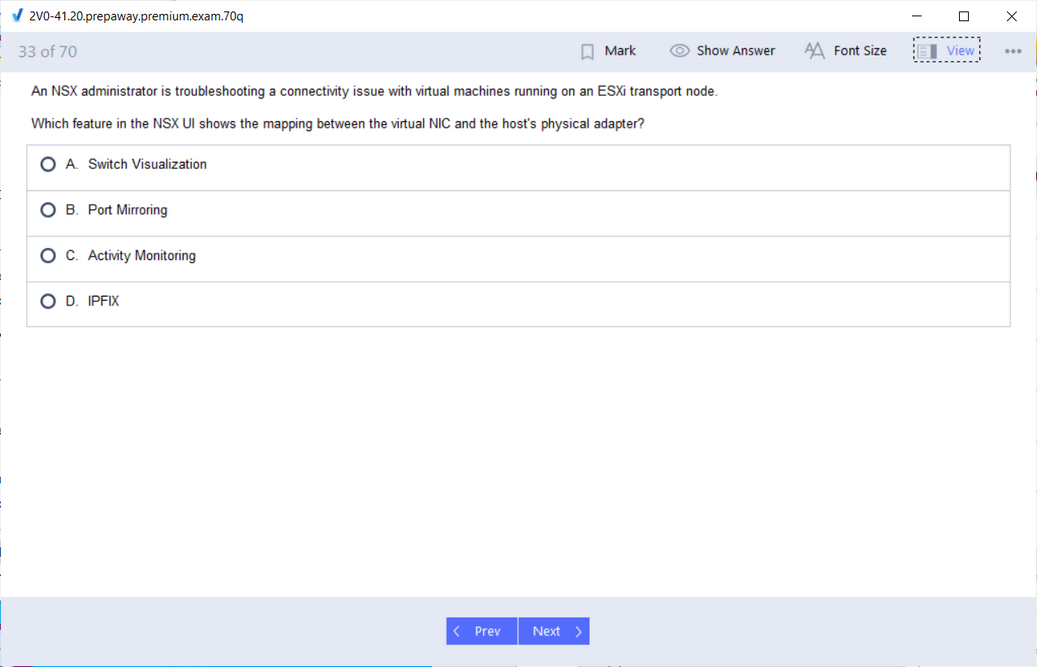

Proficiency in troubleshooting is vital for both exam success and real-world operations. Candidates should be able to identify misconfigurations in logical switches, routers, firewalls, and Edge services. Common troubleshooting steps include verifying connectivity, checking configuration consistency, reviewing logs, and tracing packet paths with tools such as Traceflow in a lab environment. Understanding the relationship between the control plane, data plane, and management plane is essential for isolating and resolving issues effectively.

Optimization of NSX-T deployments ensures efficient use of resources and maintains high performance. This includes tuning overlay networks, managing routing tables, and optimizing firewall rule sets. Knowledge of monitoring metrics such as latency, throughput, and CPU utilization of NSX components helps in proactive performance management. Candidates should be able to recommend best practices for deployment, scale, and configuration to maintain stability and performance in virtualized networks.

Hands-On Scenario Practice

Scenario-based practice is critical for comprehensive exam preparation. Candidates should work through lab exercises that simulate real-world problems, such as configuring multi-tiered networks, implementing micro-segmentation, and deploying Edge services. Each scenario should include planning, configuration, verification, and troubleshooting steps. Practicing in a controlled lab environment allows candidates to gain confidence and experience without impacting production systems.

Lab exercises should also cover complex scenarios such as integrating NSX-T with external networks, configuring dynamic routing protocols, and implementing security policies that adapt to changing workloads. Repeatedly performing these exercises builds muscle memory and ensures that candidates are prepared to answer exam questions that test both knowledge and applied skills.

Integrating Knowledge Across Domains

Success in the 2V0-41.20 exam requires a holistic understanding of NSX-T. Candidates must integrate knowledge of networking, security, Edge services, automation, and operational management to solve problems effectively. Studying each domain individually is important, but understanding how they interact provides the context needed for real-world implementation. For example, changes in routing configuration can affect firewall policies, and load balancing settings may influence traffic flow across segments. Recognizing these interdependencies is crucial for both exam readiness and practical expertise.

Candidates should also practice scenario analysis, where multiple aspects of the NSX-T environment are evaluated together. This could involve troubleshooting a network issue while considering routing, firewall, and Edge configurations simultaneously. Integrating skills across domains ensures a deeper comprehension of the platform and improves the ability to respond to complex questions in the exam.

Exam Readiness and Confidence

Building confidence comes from combining theoretical study with practical experience. Regular self-assessment through lab exercises, scenario practice, and review of key concepts helps reinforce learning. Tracking progress, identifying weak areas, and revisiting challenging topics ensures a comprehensive understanding. Structured preparation provides the foundation for success, reducing anxiety and increasing readiness for the exam.

In addition to technical skills, time management and exam strategy are important. Familiarity with the format of the exam, types of questions, and time allocation allows candidates to navigate the exam efficiently. Practicing under timed conditions and simulating exam scenarios in a lab environment builds both speed and accuracy.

Preparing for the 2V0-41.20 exam involves mastering NSX-T networking, security, Edge services, automation, and operational management. Candidates must combine theoretical knowledge with practical lab experience, scenario practice, and collaborative learning to achieve competence. By integrating understanding across different domains, practicing troubleshooting, and building confidence through consistent study, candidates are well-prepared to succeed in the exam and effectively manage NSX-T environments. This approach ensures not only exam readiness but also the ability to handle complex, real-world network virtualization challenges.

Advanced NSX-T Services and Integration

NSX-T provides a wide range of services that go beyond basic networking, offering advanced capabilities for both internal and external network integration. Understanding these services is crucial for the 2V0-41.20 exam. Candidates need to be familiar with advanced routing, bridging, and integration techniques that connect virtual networks with external networks. This includes configuring Tier-0 and Tier-1 routers to manage north-south and east-west traffic, implementing dynamic routing protocols, and optimizing route distribution for performance and redundancy.

Edge services in NSX-T allow for centralized management of network functions such as load balancing, NAT, and VPN. Load balancing involves distributing client requests efficiently across multiple backend servers while maintaining session persistence and monitoring server health. Candidates should understand both Layer 4 and Layer 7 load balancing features, as well as the configuration of monitors, pools, and virtual servers. NAT services translate internal IP addresses to external addresses and vice versa, supporting connectivity across different network segments. VPN configuration, including IPSec (site-to-site) and L2 VPNs, provides secure communication channels and requires knowledge of encryption methods and tunnel management.

Security Operations and Policy Enforcement

Security operations are a critical aspect of NSX-T. The platform’s distributed firewall enforces security policies at the virtual machine level, providing granular control over traffic. Candidates must understand how to create and manage firewall rules, apply policies to security groups, and use tags for dynamic rule assignment. Micro-segmentation allows for strict control over east-west traffic, reducing the attack surface and ensuring compliance with organizational security requirements.
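Tag-driven group membership lets new workloads pick up the right policy automatically. The sketch below models a group whose criterion is a single tag scope and value. The VM names, the (scope, tag) pairs, and the helper function are hypothetical. Real NSX-T groups support richer criteria combined with AND/OR operators.

```python
from dataclasses import dataclass, field
from typing import List, Set, Tuple


@dataclass
class VM:
    name: str
    tags: Set[Tuple[str, str]] = field(default_factory=set)  # (scope, tag) pairs


def group_members(vms: List[VM], scope: str, tag: str) -> List[str]:
    """Names of VMs whose tags satisfy the group's single (scope, tag) criterion."""
    return sorted(vm.name for vm in vms if (scope, tag) in vm.tags)


inventory = [VM("web-01", {("tier", "web")}), VM("db-01", {("tier", "db")})]
print(group_members(inventory, "tier", "web"))  # ['web-01']

# A newly deployed, tagged VM joins the group (and the rules that reference it)
# without any change to the firewall rules themselves.
inventory.append(VM("web-02", {("tier", "web")}))
print(group_members(inventory, "tier", "web"))  # ['web-01', 'web-02']
```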

Additional security features include service insertion, which integrates third-party security services into NSX-T, and identity-based firewalling, which applies policies based on user identity, such as Active Directory group membership. Monitoring and logging are also essential components, enabling administrators to detect anomalies, analyze traffic patterns, and respond to security events. Understanding how to interpret alerts and logs is critical for effective operational management and exam preparation.

Automation and Scripting

Automation is a key capability in managing NSX-T at scale. Candidates should be familiar with the use of REST APIs, PowerCLI, and scripting for repetitive tasks such as creating logical segments, deploying routers, and applying security policies. Automation ensures consistency, reduces the potential for human error, and allows for rapid deployment of network configurations.
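Automation often delivers consistency by comparing the desired configuration with what currently exists and acting only on the difference. The function below is a generic sketch of that comparison. The segment names and attributes are hypothetical. In practice the "actual" side would come from API calls rather than a literal dictionary.

```python
from typing import Dict, List, Tuple


def plan(desired: Dict[str, dict], actual: Dict[str, dict]) -> Tuple[List[str], List[str], List[str]]:
    """Return (to_create, to_update, to_delete) object names."""
    to_create = sorted(desired.keys() - actual.keys())
    to_delete = sorted(actual.keys() - desired.keys())
    to_update = sorted(
        name for name in desired.keys() & actual.keys() if desired[name] != actual[name]
    )
    return to_create, to_update, to_delete


desired = {"web": {"gateway": "10.10.1.1/24"}, "app": {"gateway": "10.10.2.1/24"}}
actual = {"web": {"gateway": "10.10.9.1/24"}, "legacy": {"gateway": "10.10.99.1/24"}}
print(plan(desired, actual))  # (['app'], ['web'], ['legacy'])
```

Running the same plan twice against an unchanged environment yields three empty lists, which is what makes this style of automation safe to repeat. A production workflow would usually require confirmation before acting on the delete list.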

Understanding the structure of NSX-T APIs, authentication methods, and common endpoints is important for creating automated workflows. Candidates should also know how to use automation to monitor system health, perform configuration backups, and manage upgrades. Integrating automation into operational procedures not only streamlines management but also demonstrates proficiency in modern network administration practices.

Operational Management and Monitoring

Operational management in NSX-T involves continuous monitoring and maintenance of the environment to ensure optimal performance. Candidates should be able to monitor the health of NSX components, including the NSX Manager cluster nodes (which also host the central control plane) and Edge nodes. Key metrics include CPU usage, memory utilization, network throughput, and latency. Monitoring tools and dashboards provide real-time visibility into network performance and help identify potential issues before they affect operations.

Logging and alerting mechanisms are essential for proactive management. Candidates should understand how to configure syslog, interpret event logs, and respond to alerts. Troubleshooting techniques include verifying connectivity, analyzing policy application, and checking routing configurations. Operational readiness also involves performing backup and restore procedures, ensuring that network configurations can be recovered in the event of failure.
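When syslog output is forwarded to a collector, a script can triage messages by severity. Per RFC 5424, the leading PRI value encodes facility * 8 + severity, so the severity is PRI modulo 8. The sample lines below are invented. The sketch assumes each line starts with the PRI field.

```python
import re
from typing import Iterable, List

# RFC 5424 severity names, indexed by numeric severity (0 = most severe).
SEVERITIES = ["emerg", "alert", "crit", "err", "warning", "notice", "info", "debug"]
_PRI = re.compile(r"^<(\d{1,3})>")


def severity(line: str) -> str:
    """Decode the severity name from a syslog line's leading <PRI> field."""
    match = _PRI.match(line)
    if match is None or int(match.group(1)) > 191:
        raise ValueError(f"no valid PRI field: {line!r}")
    return SEVERITIES[int(match.group(1)) % 8]


def alerts(lines: Iterable[str], threshold: str = "err") -> List[str]:
    """Keep lines whose severity is at least as severe as the threshold."""
    limit = SEVERITIES.index(threshold)
    return [line for line in lines if SEVERITIES.index(severity(line)) <= limit]


# Invented sample lines: 131 = local0 (16) * 8 + err (3); 134 = local0 * 8 + info (6).
sample = [
    "<131>1 2021-05-01T10:00:00Z nsx-edge-01 example - - - sample error message",
    "<134>1 2021-05-01T10:00:05Z nsx-edge-01 example - - - sample info message",
]
print([severity(line) for line in sample])  # ['err', 'info']
print(len(alerts(sample)))                  # 1
```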

Troubleshooting Complex Scenarios

Effective troubleshooting requires a deep understanding of NSX-T architecture and the interdependencies between components. Candidates should be able to analyze network problems involving logical switches, routers, distributed firewall rules, and Edge services. Identifying the root cause often involves examining the control plane, data plane, and management plane to pinpoint where issues originate.

Scenario-based practice enhances troubleshooting skills. Candidates should simulate problems such as routing loops, firewall misconfigurations, and load balancing failures in a lab environment. Practicing end-to-end resolution of these issues strengthens understanding and builds confidence in handling real-world challenges. Understanding the impact of each configuration change and how it affects traffic flow is crucial for both exam success and operational competence.

Integration with External Systems

NSX-T is designed to integrate seamlessly with external systems such as public clouds, third-party security tools, and orchestration platforms. Candidates should understand the methods for connecting NSX-T to external networks, including Layer 2 bridging, BGP and OSPF routing, and VPN tunnels. Integration with security and monitoring tools enhances visibility and control, allowing administrators to enforce consistent policies across hybrid environments.

Orchestration platforms can automate NSX-T workflows, enabling rapid provisioning and consistent policy application. Knowledge of integration points, API interactions, and automation best practices ensures that candidates can deploy and manage complex environments efficiently. Understanding these integrations also supports scalability, operational efficiency, and alignment with organizational requirements.

High Availability and Scalability

High availability is a critical consideration in NSX-T deployments. Candidates should understand how to configure Edge clusters and a three-node NSX Manager cluster, which provides redundancy for both the management plane and the central control plane, to ensure continuous service. Equal-cost multipath (ECMP) routing across multiple Edge nodes and distributed routing enhance resilience and maintain network performance during hardware or software failures.
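ECMP spreads flows rather than individual packets. Every packet of a flow hashes to the same path, so a flow stays on one Edge node while different flows are distributed across the set. The sketch below illustrates the idea with a generic hash over the 5-tuple. The hash function and Edge names are assumptions, not the algorithm NSX-T or a physical router actually uses.

```python
import hashlib
from typing import Sequence, Tuple

Flow = Tuple[str, str, str, int, int]  # (src IP, dst IP, protocol, src port, dst port)


def pick_path(flow: Flow, next_hops: Sequence[str]) -> str:
    """Map a flow to one of several equal-cost next hops by hashing its 5-tuple."""
    key = "|".join(str(part) for part in flow).encode()
    bucket = int.from_bytes(hashlib.sha256(key).digest()[:4], "big")
    return next_hops[bucket % len(next_hops)]


edges = ["edge-01", "edge-02"]  # hypothetical Edge node names
flow = ("10.10.1.25", "198.51.100.7", "tcp", 51515, 443)
print(pick_path(flow, edges))
# The same flow always lands on the same Edge node.
assert pick_path(flow, edges) == pick_path(flow, edges)
```

If an Edge node fails and is removed from `next_hops`, its flows rehash onto the survivors. Simple modulo hashing can also move some flows that were on healthy nodes, which is one reason production implementations use more elaborate schemes.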

Scalability considerations include designing logical networks, configuring transport zones, and managing segment and router capacity. Efficient design ensures that the environment can grow without impacting performance or manageability. Candidates should be able to plan for scaling both control plane and data plane resources, applying best practices for cluster sizing, placement, and redundancy.

Exam Preparation Strategies

Preparation for the 2V0-41.20 exam requires integrating knowledge of NSX-T components, services, and operational procedures. Candidates should use structured study plans that combine reading official guides, reviewing architecture diagrams, and performing hands-on lab exercises. Focusing on scenario-based exercises helps bridge theoretical understanding with practical application.

Time management and review strategies are important for comprehensive preparation. Allocating time to cover each domain, revisiting complex topics, and simulating exam conditions builds confidence and readiness. Candidates should track progress, identify weak areas, and reinforce understanding through repeated practice and problem-solving exercises.

Mastering the 2V0-41.20 exam requires an in-depth understanding of NSX-T networking, security, Edge services, automation, and operational management. Candidates must integrate theoretical knowledge with practical lab experience, scenario-based troubleshooting, and understanding of high availability and scalability. Consistent study, hands-on practice, and structured review ensure preparedness for both the exam and real-world NSX-T environments, enabling candidates to deploy, manage, and optimize network virtualization effectively.

Comprehensive NSX-T Architecture and Operations

Understanding the full architecture of NSX-T is critical for the 2V0-41.20 exam. Candidates should be familiar with how the management plane, control plane, and data plane work together to deliver network services. The management plane handles configuration, policy definition, and monitoring, while the control plane distributes routing and switching information to maintain consistent network behavior across all nodes. The data plane is responsible for actual packet forwarding, enforcing policies, and maintaining network isolation between workloads. Grasping the interactions between these planes allows candidates to troubleshoot effectively and design efficient virtual networks.

NSX-T uses a distributed architecture that enhances scalability and resilience. Each hypervisor host participates in the data plane, enabling distributed routing and firewalling directly at the host level. This reduces latency and increases efficiency for east-west traffic. Edge nodes handle north-south traffic and services such as NAT, VPN, and load balancing. Candidates must understand deployment topologies, placement strategies, and resource allocation to ensure high availability and optimal performance.

Logical Networking and Routing

Logical networking in NSX-T abstracts physical network infrastructure, providing flexible and isolated virtual networks. Segments, or logical switches, create Layer 2 domains that are independent of the underlying physical network. Transport zones define the scope of segments and determine whether they use overlay or VLAN-backed networks. Candidates should understand how to configure segments, map them to transport zones, and integrate them with physical networks where necessary.
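As a concrete example, the function below builds the kind of JSON body sent with a PATCH to `/policy/api/v1/infra/segments/<segment-id>` in the NSX-T Policy API to create an overlay segment attached to a Tier-1 gateway. The field names follow the Policy API Segment object, but verify them against the API reference for your version. The IDs and addresses are hypothetical placeholders.

```python
import ipaddress
import json


def segment_body(name: str, gateway_cidr: str, tier1_id: str, tz_id: str) -> dict:
    """Build a Policy API Segment body for an overlay segment behind a Tier-1."""
    iface = ipaddress.ip_interface(gateway_cidr)  # e.g. "10.10.1.1/24"; raises on bad input
    if iface.ip == iface.network.network_address:
        raise ValueError("gateway_address must be a host address, not the network address")
    return {
        "display_name": name,
        "subnets": [{"gateway_address": str(iface)}],
        # Attaches the segment to a Tier-1 gateway (hypothetical ID).
        "connectivity_path": f"/infra/tier-1s/{tier1_id}",
        # Places the segment in an overlay transport zone (hypothetical ID).
        "transport_zone_path": (
            f"/infra/sites/default/enforcement-points/default/transport-zones/{tz_id}"
        ),
    }


body = segment_body("web", "10.10.1.1/24", "t1-app", "overlay-tz-id")
print(json.dumps(body, indent=2))
```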

Routing in NSX-T is accomplished using Tier-0 and Tier-1 logical routers. Tier-0 routers connect the NSX-T environment to external networks, while Tier-1 routers connect logical segments to each other and to an upstream Tier-0 router. Distributed routing allows traffic to be processed locally at the hypervisor level, improving efficiency and reducing bottlenecks. Candidates need to understand how to configure static routes, dynamic routing protocols, route advertisement from Tier-1 to Tier-0 routers, and route redistribution on the Tier-0 into dynamic routing to maintain connectivity and performance.
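Route advertisement is configured on the Tier-1 itself. The sketch below builds a Policy API body for a PATCH to `/policy/api/v1/infra/tier-1s/<tier-1-id>` that links the Tier-1 to a Tier-0 and selects which route types to advertise. The advertisement values shown are only a subset of those the API accepts. Treat the list and the IDs as assumptions to check against your version's API reference.

```python
import json
from typing import Iterable

# Subset of Tier-1 route advertisement types; check the API reference for the full list.
KNOWN_TYPES = {"TIER1_CONNECTED", "TIER1_STATIC_ROUTES", "TIER1_NAT", "TIER1_LB_VIP"}


def tier1_body(name: str, tier0_id: str, advertise: Iterable[str]) -> dict:
    """Build a Tier-1 body that links to a Tier-0 and sets route advertisement."""
    types = sorted(set(advertise))
    unknown = set(types) - KNOWN_TYPES
    if unknown:
        raise ValueError(f"unrecognized advertisement types: {sorted(unknown)}")
    return {
        "display_name": name,
        "tier0_path": f"/infra/tier-0s/{tier0_id}",  # upstream Tier-0 (hypothetical ID)
        "route_advertisement_types": types,
    }


print(json.dumps(tier1_body("t1-app", "t0-prod", ["TIER1_CONNECTED", "TIER1_NAT"]), indent=2))
```

Advertising only connected and NAT routes keeps the Tier-0 routing table limited to what external peers actually need to reach.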

Security and Micro-Segmentation

Security is a central component of NSX-T. The distributed firewall enforces policies at the virtual machine interface level, allowing micro-segmentation and granular control over east-west traffic. Security groups and tags enable dynamic policy assignment, ensuring that new workloads inherit the correct security settings automatically. Candidates should understand how to create, organize, and apply firewall rules, including the evaluation order and precedence of rules in complex environments.
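Precedence across a large rule base can be pictured as a sort key. In the Policy API model, distributed firewall policies belong to categories evaluated in the order Ethernet, Emergency, Infrastructure, Environment, Application. Within a category, policies and then rules are taken in sequence order, and the first matching rule wins. The sketch below assumes that ordering and uses hypothetical rule names and sequence numbers.

```python
from typing import Dict, List

CATEGORY_ORDER = ["Ethernet", "Emergency", "Infrastructure", "Environment", "Application"]


def evaluation_order(rules: List[Dict]) -> List[str]:
    """Return rule names in the order the distributed firewall would evaluate them."""
    ranked = sorted(
        rules,
        key=lambda r: (CATEGORY_ORDER.index(r["category"]), r["policy_seq"], r["rule_seq"]),
    )
    return [r["name"] for r in ranked]


rules = [
    {"name": "app-web-allow", "category": "Application", "policy_seq": 10, "rule_seq": 1},
    {"name": "quarantine-drop", "category": "Emergency", "policy_seq": 10, "rule_seq": 1},
    {"name": "dns-ntp-allow", "category": "Infrastructure", "policy_seq": 10, "rule_seq": 2},
    {"name": "prod-dev-deny", "category": "Environment", "policy_seq": 10, "rule_seq": 1},
    {"name": "ad-allow", "category": "Infrastructure", "policy_seq": 10, "rule_seq": 1},
]
print(evaluation_order(rules))
# ['quarantine-drop', 'ad-allow', 'dns-ntp-allow', 'prod-dev-deny', 'app-web-allow']
```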

NSX-T also supports advanced security features such as service insertion for third-party security solutions and identity-based firewalling that applies policies based on user identity. Understanding how to configure logging, monitoring, and alerts ensures that administrators can detect anomalies and respond to security incidents. Familiarity with these mechanisms is essential for operational management and exam readiness.

Edge Services and Load Balancing

Edge nodes provide critical network services that extend the functionality of NSX-T. Load balancing distributes traffic across multiple backend servers to optimize performance and availability. Candidates should understand how to configure virtual servers, pools, monitors, and persistence methods. Both Layer 4 and Layer 7 load balancing configurations need to be mastered to handle different application requirements.
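Persistence changes the selection step. Once a client has been sent to a member, later connections from that client return to the same member. The sketch below models source-IP persistence with a simple lookup table on top of round-robin selection. The class, member names, and client addresses are hypothetical. It omits the entry timeouts and health checks a real persistence profile would apply.

```python
from typing import Dict, List


class SourceIpPersistence:
    """Round-robin selection with a source-IP persistence table (no expiry)."""

    def __init__(self, members: List[str]):
        if not members:
            raise ValueError("pool needs at least one member")
        self.members = list(members)
        self.table: Dict[str, str] = {}
        self._next = 0

    def pick(self, client_ip: str) -> str:
        if client_ip in self.table:  # returning client: reuse the recorded member
            return self.table[client_ip]
        member = self.members[self._next % len(self.members)]
        self._next += 1
        self.table[client_ip] = member  # remember the choice for future connections
        return member


lb = SourceIpPersistence(["app-01", "app-02"])
print(lb.pick("198.51.100.7"), lb.pick("198.51.100.8"), lb.pick("198.51.100.7"))
# app-01 app-02 app-01
```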

NAT and VPN services enable connectivity between internal and external networks. Configuring NAT requires understanding address translation, mapping rules, and interaction with routing policies. VPN setup includes IPSec (site-to-site) tunnels, L2 VPNs, and the associated encryption protocols. Candidates should also know how to configure high availability for Edge nodes to ensure service continuity in case of failures.

Automation and API Integration

Automation is essential for scaling NSX-T environments efficiently. Candidates should be proficient with REST APIs, scripting, and integration with orchestration tools to automate network provisioning, security policy deployment, and operational tasks. Understanding how to authenticate, navigate endpoints, and execute workflows through the API allows candidates to manage large environments consistently and reduce manual errors.
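Large environments return list results in pages. NSX-T list responses carry a `results` array and, when more data remains, an opaque `cursor` value that is passed back on the next request. The loop below assumes that response shape and takes a `fetch_page` callable so it can run against a stub. In a real script, `fetch_page` would issue the GET with the cursor as a query parameter.

```python
from typing import Callable, Dict, List, Optional


def list_all(fetch_page: Callable[[Optional[str]], Dict]) -> List[Dict]:
    """Follow cursors until a page arrives without one, collecting every result."""
    results: List[Dict] = []
    cursor: Optional[str] = None
    while True:
        page = fetch_page(cursor)
        results.extend(page.get("results", []))
        cursor = page.get("cursor")
        if not cursor:  # missing or empty cursor: last page reached
            return results


# Stub standing in for the API: two pages of hypothetical segments.
PAGES = {
    None: {"results": [{"id": "web"}, {"id": "app"}], "cursor": "c1"},
    "c1": {"results": [{"id": "db"}]},
}
print([item["id"] for item in list_all(lambda cursor: PAGES[cursor])])  # ['web', 'app', 'db']
```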

Automation can also be applied to monitoring and alerting. Scripts can check system health, generate reports, and trigger notifications for specific conditions. This proactive approach enhances operational efficiency and ensures rapid response to potential issues. Familiarity with these automation techniques demonstrates practical competence and strengthens exam readiness.
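A health-check script often reduces to comparing collected metrics against thresholds and reporting breaches. In the sketch below, the node names, metric names, and threshold values are hypothetical. The samples are hard-coded where a real script would gather them through the API or a monitoring system.

```python
from typing import Dict, List, Tuple

# Hypothetical alert thresholds, in percent.
THRESHOLDS = {"cpu_pct": 80.0, "mem_pct": 85.0}


def breaches(samples: Dict[str, Dict[str, float]],
             thresholds: Dict[str, float] = THRESHOLDS) -> List[Tuple[str, str, float]]:
    """Return (node, metric, value) for every metric above its threshold."""
    found = []
    for node, metrics in sorted(samples.items()):
        for metric, value in sorted(metrics.items()):
            if metric in thresholds and value > thresholds[metric]:
                found.append((node, metric, value))
    return found


samples = {
    "edge-01": {"cpu_pct": 92.0, "mem_pct": 40.0},
    "mgr-01": {"cpu_pct": 30.0, "mem_pct": 90.0},
}
for node, metric, value in breaches(samples):
    print(f"ALERT {node}: {metric}={value}")  # a real script might send a notification here
```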

Monitoring, Logging, and Troubleshooting

Operational monitoring is key to maintaining a stable NSX-T environment. Candidates should be able to monitor the health of NSX components, including the NSX Manager cluster nodes (which also host the central control plane) and Edge nodes. Metrics such as CPU and memory utilization, network throughput, latency, and flow table usage help identify potential bottlenecks or failures. Logs provide detailed insights into traffic behavior, configuration changes, and security events.

Troubleshooting in NSX-T requires a methodical approach. Candidates should verify connectivity, review firewall policies, analyze routing tables, and inspect Edge services configurations. Scenario-based troubleshooting exercises in a lab environment help develop critical thinking skills and reinforce understanding of component interactions. Understanding how changes in one area affect the overall network is vital for effective problem resolution.

Lab-Based Practice and Scenario Simulations

Practical, hands-on experience is one of the most effective ways to prepare for the 2V0-41.20 exam. Candidates should build lab environments that simulate realistic network topologies, including multiple segments, routers, Edge nodes, and security policies. Practicing common administrative tasks, such as segment creation, router configuration, firewall rule application, and load balancer deployment, reinforces theoretical knowledge.

Scenario simulations provide an opportunity to integrate skills across different domains. Candidates should practice troubleshooting multi-tiered networks, resolving routing conflicts, and managing dynamic security policies. Documenting lab exercises and reviewing outcomes ensures that knowledge is retained and that candidates are prepared for complex exam scenarios.

High Availability, Redundancy, and Scalability

High availability is essential in NSX-T deployments. Candidates should understand how to deploy redundant Edge nodes, deploy the three-node NSX Manager cluster that hosts both the management and central control planes, and implement failover mechanisms for critical services. Load balancing and distributed routing contribute to resiliency and performance optimization. Planning for resource allocation and capacity ensures that the environment can scale efficiently without compromising stability.
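Why three manager nodes? The usual reason is majority quorum: a cluster stays fully operational only while a strict majority of its nodes is available. The sketch below shows that general arithmetic. It assumes simple majority voting and is not a description of NSX Manager's internal clustering implementation.

```python
def majority(cluster_size: int) -> int:
    """Smallest number of nodes that forms a strict majority."""
    return cluster_size // 2 + 1


def tolerated_failures(cluster_size: int) -> int:
    """How many nodes can fail while a majority still remains."""
    return cluster_size - majority(cluster_size)


for size in (1, 2, 3, 4, 5):
    print(size, majority(size), tolerated_failures(size))
```

A three-node cluster tolerates one failure. A fourth node adds cost without raising fault tolerance, which is why odd cluster sizes are the norm.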

Scalability considerations also include segment and router design, transport zone configuration, and workload placement. Understanding the impact of design decisions on performance and manageability is crucial. Candidates should be able to recommend best practices for scaling both the control plane and data plane, ensuring that NSX-T environments can support growing network demands.

Integrating Knowledge for Exam Success

Exam success requires synthesizing knowledge across multiple NSX-T domains. Candidates must integrate networking, security, Edge services, automation, and operational management to solve problems holistically. Scenario-based practice is especially valuable for understanding interdependencies between components and ensuring readiness for complex questions.

Candidates should focus on reinforcing weak areas, practicing hands-on labs, and reviewing core concepts. Using diagrams, flowcharts, and notes helps visualize network topologies, security policies, and operational workflows. Regular review, combined with scenario simulations, ensures a well-rounded understanding and builds confidence for the exam.

Preparing for Complex Use Cases

The exam evaluates not only theoretical knowledge but also practical problem-solving skills. Candidates should work through use cases that combine multiple elements, such as integrating Edge services with distributed routing and micro-segmentation policies. Understanding how each component affects traffic flow, security enforcement, and connectivity is critical.

Practicing complex scenarios strengthens analytical skills and prepares candidates to tackle unfamiliar challenges on the exam. Repetition, documentation, and reflection on lab exercises deepen understanding and promote retention of concepts. This approach ensures that candidates are capable of both answering exam questions and applying their knowledge in operational environments.

Mastering NSX-T for the 2V0-41.20 exam involves understanding advanced networking, security, Edge services, automation, monitoring, and troubleshooting. Candidates must combine structured study with hands-on labs, scenario simulations, and continuous review to develop a comprehensive skill set. By integrating knowledge across all domains, practicing real-world scenarios, and reinforcing concepts through repetition, candidates achieve the confidence and competence required for exam success and effective management of NSX-T environments.

Comprehensive Understanding of NSX-T Security

Security within NSX-T is a foundational component of managing virtual networks. Candidates preparing for the 2V0-41.20 exam should develop a deep understanding of micro-segmentation, distributed firewall policies, and advanced security features. Micro-segmentation enables the isolation of workloads within the data center, allowing policies to be applied at the virtual machine interface level. This level of granularity ensures that traffic between applications or workloads is controlled, minimizing potential attack surfaces. Security groups and dynamic membership based on VM attributes or tags provide automation and flexibility in policy enforcement.
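Dynamic membership means that policy follows tags rather than IP addresses. The minimal sketch below models NSX-T-style tags as (scope, tag) pairs and computes group membership from them. The `VM` class, the `members` function, and the tag values are hypothetical.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Tuple

Tag = Tuple[str, str]  # (scope, tag), e.g. ("tier", "web")


@dataclass(frozen=True)
class VM:
    name: str
    tags: FrozenSet[Tag] = frozenset()


def members(vms: List[VM], required: Tag) -> List[str]:
    """Names of VMs carrying the required tag, i.e. the dynamic group's members."""
    return sorted(vm.name for vm in vms if required in vm.tags)


inventory = [
    VM("web-01", frozenset({("tier", "web"), ("env", "prod")})),
    VM("app-01", frozenset({("tier", "app")})),
    VM("web-02", frozenset({("tier", "web")})),
]
web_group = members(inventory, ("tier", "web"))

# Re-tagging app-01 as web moves it into the group with no firewall rule edits.
inventory[1] = VM("app-01", frozenset({("tier", "web")}))
web_group_after = members(inventory, ("tier", "web"))
```

`web_group` is `["web-01", "web-02"]`. After re-tagging, `web_group_after` also contains `app-01`. This is the automation benefit the paragraph describes.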

The distributed firewall in NSX-T enforces policies consistently across all hosts, ensuring that rules apply regardless of workload location. Rules are evaluated top-down, and the first matching rule wins. Layer 3 rules are processed in category order (Emergency, Infrastructure, Environment, Application), so candidates should understand this evaluation order, how policies inherit from categories, and how to structure firewall rules to avoid conflicts. Service insertion allows third-party security tools to operate within NSX-T environments, providing capabilities such as intrusion detection or advanced threat prevention. Identity-based firewalling applies policies based on Active Directory user and group membership, which further enhances security and operational efficiency. Logging and monitoring provide visibility into traffic patterns and potential security events, which is essential for proactive threat detection and resolution.
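The following sketch illustrates first-match evaluation across Layer 3 categories. Groups are reduced to single names and services to labels, and the `Rule` model is hypothetical. Ethernet (Layer 2) rules, which NSX-T evaluates separately before these categories, are left out. The default action is a parameter because the default Layer 3 rule allows traffic out of the box, while many designs change it to drop.

```python
from dataclasses import dataclass
from typing import List

# Layer 3 DFW categories in evaluation order (Ethernet/L2 omitted in this sketch).
CATEGORY_ORDER = ["Emergency", "Infrastructure", "Environment", "Application"]


@dataclass
class Rule:
    name: str
    category: str
    sequence: int   # position within its category; lower is evaluated first
    src: str        # group name or "ANY" (simplified)
    dst: str
    service: str    # service label or "ANY"
    action: str     # "ALLOW", "DROP", or "REJECT"


def evaluate(rules: List[Rule], src: str, dst: str, service: str,
             default_action: str = "ALLOW") -> str:
    """Return the action of the first matching rule, or the default rule."""
    ordered = sorted(rules, key=lambda r: (CATEGORY_ORDER.index(r.category), r.sequence))
    for rule in ordered:
        if rule.src in ("ANY", src) and rule.dst in ("ANY", dst) and rule.service in ("ANY", service):
            return f"{rule.action} ({rule.name})"
    return f"{default_action} (default rule)"


rules = [
    Rule("allow-web", "Application", 10, "ANY", "web", "HTTPS", "ALLOW"),
    Rule("quarantine", "Emergency", 10, "quarantined", "ANY", "ANY", "DROP"),
    Rule("block-dev-to-prod", "Environment", 10, "dev", "prod", "ANY", "DROP"),
]
```

Even though `allow-web` is listed first, a quarantined source reaching the web tier is dropped because the Emergency category is evaluated before Application.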

Advanced Routing and Logical Networking

Routing and logical networking are central to NSX-T functionality. Candidates should have a thorough understanding of overlay and VLAN-backed transport zones, segment creation, and logical switching. Logical switches act as isolated broadcast domains, while transport zones define the scope of these segments and determine connectivity methods. The ability to design and implement logical networks allows for scalable, flexible, and secure data center topologies.

Tier-0 and Tier-1 logical routers (gateways) are crucial components in managing routing within NSX-T. Tier-0 gateways handle north-south traffic and provide connectivity to external networks. Tier-1 gateways connect application or tenant segments and link northbound to a Tier-0. Distributed routing allows routing decisions to be processed at the hypervisor level, which keeps east-west traffic local, reduces latency, and increases performance. Candidates should understand route advertisement from Tier-1 to Tier-0, route redistribution from Tier-0 into the physical network, static routes, and dynamic routing with BGP. OSPFv2 support on Tier-0 arrived in NSX-T 3.1.1, later than the 3.0 release this exam is based on. Mastery of routing concepts ensures that candidates can design efficient and resilient network topologies.
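Every routing table, whether on a Tier-0 service router or a hypervisor's distributed router, selects routes by longest prefix match. In the minimal sketch below, the prefixes and next-hop descriptions are hypothetical and represent a typical Tier-0 view with a default route, a summary toward a Tier-1, and a more specific connected segment.

```python
import ipaddress
from typing import List, Optional, Tuple

Route = Tuple[str, str]  # (prefix, next-hop description)


def lookup(routes: List[Route], dst: str) -> Optional[str]:
    """Return the next hop of the most specific prefix containing dst."""
    addr = ipaddress.ip_address(dst)
    best, best_len = None, -1
    for prefix, next_hop in routes:
        net = ipaddress.ip_network(prefix)
        if addr in net and net.prefixlen > best_len:
            best, best_len = next_hop, net.prefixlen
    return best


table = [
    ("0.0.0.0/0", "uplink to physical router 192.0.2.1"),
    ("172.16.0.0/16", "Tier-1 router link"),
    ("172.16.10.0/24", "connected segment web-seg"),
]
```

For example, `lookup(table, "172.16.10.5")` returns the connected segment, `172.16.20.5` falls back to the /16 via the Tier-1, and any other address follows the default route north.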

Edge Services and High Availability

Edge services provide essential network functions such as load balancing, NAT, VPN, and DHCP. Understanding how to configure and manage these services is critical for operational competence and exam readiness. Load balancing distributes client requests across multiple backend servers, improving performance and reliability. Candidates should know how to set up virtual servers, pools, monitors, and persistence profiles. Both Layer 4 and Layer 7 load balancing configurations are important for handling various application scenarios.
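Round robin is the simplest pool algorithm. The minimal sketch below adds a health-monitor flag so that members marked down are skipped. The class, its method names, and the member addresses are hypothetical, and the sketch is an illustration of the idea rather than the Edge load balancer's implementation.

```python
import itertools
from typing import Dict, List


class RoundRobinPool:
    """Round-robin selection that skips members a health monitor marked down."""

    def __init__(self, members: List[str]):
        self.members = list(members)
        self.healthy: Dict[str, bool] = {m: True for m in self.members}
        self._cycle = itertools.cycle(self.members)

    def mark(self, member: str, up: bool) -> None:
        # Called by a (hypothetical) health monitor after each probe.
        self.healthy[member] = up

    def pick(self) -> str:
        # Try each member at most once per selection.
        for _ in range(len(self.members)):
            member = next(self._cycle)
            if self.healthy[member]:
                return member
        raise RuntimeError("no healthy pool members")


pool = RoundRobinPool(["10.0.1.11", "10.0.1.12", "10.0.1.13"])
first_round = [pool.pick() for _ in range(3)]
pool.mark("10.0.1.12", False)
degraded = [pool.pick() for _ in range(4)]
```

After `10.0.1.12` fails its health check, traffic alternates between the two remaining members.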

NAT and VPN services facilitate connectivity between internal virtual networks and external resources. Candidates should understand address translation rules, VPN tunnel configurations, encryption protocols, and methods for maintaining secure communication. High availability and redundancy within Edge clusters ensure that these services remain operational under load or in the event of hardware failure. Understanding cluster configuration, failover mechanisms, and load distribution is essential for maintaining continuity and service reliability.
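Active-standby gateways on an Edge cluster offer a preemptive or non-preemptive failover mode. The sketch below models the decision for a two-node pair under simplified assumptions. The function and its arguments are hypothetical, and health is reduced to a boolean per node.

```python
from typing import Dict, List, Optional


def choose_active(nodes: List[str], preferred: str, current: Optional[str],
                  healthy: Dict[str, bool], preemptive: bool) -> Optional[str]:
    """Pick the active Edge node for an active-standby pair (simplified model)."""
    up = [n for n in nodes if healthy.get(n, False)]
    if not up:
        return None                      # both nodes down: service outage
    if preemptive and preferred in up:
        return preferred                 # preemptive: always return to the preferred node
    if current in up:
        return current                   # non-preemptive: keep the current active
    return preferred if preferred in up else up[0]


pair = ["edge-01", "edge-02"]
# edge-01 failed, so edge-02 took over; now edge-01 has recovered.
recovered = {"edge-01": True, "edge-02": True}
non_pre = choose_active(pair, "edge-01", "edge-02", recovered, preemptive=False)
pre = choose_active(pair, "edge-01", "edge-02", recovered, preemptive=True)
```

In non-preemptive mode, `edge-02` stays active and avoids a second traffic disruption. In preemptive mode, service moves back to `edge-01`.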

Automation and Operational Efficiency

Automation is a key aspect of managing NSX-T efficiently. Candidates should be proficient with REST APIs, scripting, and orchestration tools to automate configuration tasks, policy enforcement, and operational monitoring. Automation reduces manual errors, ensures consistency, and allows rapid deployment of network services across multiple environments. Candidates should understand API authentication, common endpoints, and workflow creation to manage NSX-T environments effectively.

Operational efficiency also involves monitoring and troubleshooting. Candidates should be able to use dashboards, alerts, and logs to maintain system health, track network performance, and identify issues proactively. Understanding metrics such as CPU utilization, memory consumption, flow table usage, and network throughput is critical for optimizing performance. Monitoring traffic patterns, verifying policy application, and diagnosing potential failures help ensure the environment remains stable and secure.

Troubleshooting and Scenario-Based Practice

Effective troubleshooting requires a comprehensive understanding of the NSX-T architecture. Candidates should be able to identify and resolve issues involving logical switches, routers, distributed firewall rules, and Edge services. Analyzing the control plane, data plane, and management plane helps isolate root causes and implement solutions efficiently.

Scenario-based practice is highly beneficial for exam preparation. Candidates should simulate network failures, routing misconfigurations, security policy issues, and Edge service disruptions in a lab environment. Practicing end-to-end resolution of these scenarios reinforces conceptual knowledge and builds confidence in applying skills in real-world situations. Documenting exercises and reviewing outcomes ensures retention of key concepts and strengthens problem-solving capabilities.

Monitoring and Maintaining NSX-T Environments

Ongoing monitoring and maintenance are critical for ensuring NSX-T environments operate optimally. Candidates should be able to track the health of NSX Manager nodes (which also run the central control plane), Edge nodes, and workloads. Monitoring CPU, memory, latency, throughput, and flow table usage provides insight into system performance and potential bottlenecks. Logs and alerts offer detailed information on network traffic, configuration changes, and security events.

Troubleshooting requires methodical steps, including verifying connectivity, analyzing firewall rules, and checking routing configurations. Proficiency in operational tasks, including backup, restore, and upgrade procedures, ensures that configurations can be recovered and environments maintained efficiently. Understanding the interactions between control, management, and data planes is crucial for effective problem resolution.

Integrating Knowledge Across Domains

Success in the 2V0-41.20 exam relies on integrating networking, security, Edge services, automation, and operational management knowledge. Candidates must understand how changes in one domain impact others. For example, modifying a routing configuration can affect firewall rules, load balancing, and traffic flow. Recognizing these interdependencies is critical for designing robust environments and for performing troubleshooting effectively.

Scenario-based exercises that combine multiple domains strengthen analytical thinking. Candidates should practice configuring multi-tier networks, implementing micro-segmentation policies, deploying Edge services, and resolving complex network issues. Integrating these skills ensures candidates are prepared for both the exam and operational responsibilities in a virtualized network environment.

Scaling and High Performance

Scalability and performance optimization are essential considerations in NSX-T deployments. Candidates should understand how to design networks that can grow without compromising performance, including segment sizing, router deployment, and transport zone configuration. High availability strategies, such as Edge clusters, NSX Manager cluster redundancy (the managers also host the control plane), and failover mechanisms, ensure continuous service delivery.

Optimizing distributed routing, firewall rule evaluation, and resource allocation contributes to overall system performance. Candidates should be able to assess network metrics, adjust configurations, and implement best practices to maintain efficient operations. Understanding the impact of scaling decisions on both control and data planes ensures environments remain resilient and performant.

Exam Strategy and Readiness

Preparation for the 2V0-41.20 exam involves a structured approach combining study, hands-on labs, and scenario practice. Candidates should prioritize the official exam blueprint, focusing on critical topics such as logical networking, routing, security, Edge services, automation, and troubleshooting. Integrating these areas through practical exercises reinforces comprehension and builds confidence.

Time management, progress tracking, and focused review are essential strategies. Candidates should allocate sufficient time to cover each domain, revisit challenging topics, and simulate exam conditions through timed lab exercises. Combining theoretical understanding with practical application ensures readiness for complex questions that test both knowledge and applied skills.

Mastering NSX-T for the 2V0-41.20 exam requires a deep understanding of advanced networking, security, Edge services, automation, monitoring, and troubleshooting. Candidates must combine structured study with hands-on lab exercises, scenario-based practice, and knowledge integration across domains. Focusing on high availability, scalability, and operational efficiency prepares candidates for both the exam and real-world deployments. By practicing problem-solving, reinforcing concepts, and applying theoretical knowledge in practical scenarios, candidates develop the expertise needed to manage and optimize NSX-T environments effectively.

Deep Dive into NSX-T Components

NSX-T is built on a multi-layered architecture that integrates management, control, and data planes to provide comprehensive network virtualization. For the 2V0-41.20 exam, candidates need to understand the role and interaction of each component. The management plane oversees configuration, policy enforcement, and monitoring, providing administrators with tools to manage network resources efficiently. It is responsible for distributing configuration changes to the control plane and ensuring consistency across all NSX-T components.

The control plane is responsible for propagating routing and switching information to the hypervisors and Edge nodes. It ensures that the network topology and forwarding rules are consistent, enabling efficient traffic management and network scalability. Candidates should understand the mechanisms used for route calculation, logical forwarding, and synchronization between control plane nodes. The data plane executes packet forwarding and applies security policies at the host level. Distributed firewall and routing functions operate within the data plane, ensuring low latency and high performance for east-west traffic.

Logical Switching and Segmentation

Logical switches provide the foundation for network segmentation in NSX-T. Segments act as isolated Layer 2 networks, independent of physical network boundaries, allowing virtual machines to communicate securely within a defined scope. Candidates should understand segment creation, assignment to transport zones, and integration with overlay and VLAN-backed networks. Transport zones define the scope of network traffic and determine which hosts can participate in a segment. Understanding these concepts allows candidates to design scalable, flexible, and secure network topologies.

Overlay networking enables connectivity across multiple hosts without relying on the physical infrastructure. It provides encapsulation and isolation, allowing workloads to move freely while maintaining consistent IP addressing and policy enforcement. Mastery of overlay and VLAN-backed networking is essential for designing and managing multi-tiered virtual networks.

Advanced Routing Concepts

NSX-T uses both distributed and centralized routing to manage traffic efficiently. Distributed routing enables hypervisor-level packet forwarding, reducing the load on centralized routers and minimizing latency for east-west traffic. Tier-0 routers provide connectivity to external networks and handle north-south traffic, while Tier-1 routers connect logical segments and attach them to a Tier-0. Candidates should understand route advertisement from Tier-1 to Tier-0, route redistribution from Tier-0 into BGP, and static route configuration. OSPF is available on Tier-0 only from NSX-T 3.1.1 onward.

Routing decisions in NSX-T impact traffic flow, firewall policy application, and Edge service operation. Candidates must understand how routing interacts with security policies, load balancing, and high availability configurations to design robust and efficient virtual networks.

Security and Policy Implementation

Security in NSX-T is integrated at multiple levels, providing granular control over traffic. Micro-segmentation allows policies to be applied to individual workloads, controlling east-west traffic and minimizing exposure to potential threats. Candidates should be able to create and manage firewall rules, security groups, and tag-based policy assignments. Understanding rule evaluation order, inheritance, and the effect of dynamic membership on policy enforcement is critical for effective security management.

Advanced security features include identity-based firewalling and service insertion for third-party security solutions. These capabilities enable context-aware policy enforcement and integration with additional security tools. Logging and monitoring are essential to track traffic patterns, detect anomalies, and respond to potential threats. Candidates must understand how to configure alerts, analyze logs, and implement operational procedures for ongoing security management.

Edge Services and Load Balancing

Edge nodes provide essential services that extend NSX-T functionality. Load balancing distributes traffic across backend servers to ensure high availability and optimal performance. Candidates should understand virtual server configuration, pool setup, health monitoring, and persistence options. Both Layer 4 and Layer 7 load balancing are critical for handling diverse application traffic patterns.
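Persistence keeps a client pinned to the same backend. The minimal sketch below illustrates source-IP persistence with a lookup table that is seeded by hashing the client address. The class name and the hashing scheme are assumptions made for illustration. A real persistence profile also ages entries out after a timeout, which this sketch omits.

```python
import hashlib
from typing import Dict, List


class SourceIpPersistence:
    """Sticky client-to-member mapping illustrating source-IP persistence."""

    def __init__(self, members: List[str]):
        self.members = list(members)
        self.table: Dict[str, str] = {}  # persistence table: client IP -> member

    def pick(self, client_ip: str) -> str:
        if client_ip not in self.table:
            # First request from this client: choose a member deterministically.
            digest = hashlib.sha256(client_ip.encode()).digest()
            index = int.from_bytes(digest[:4], "big") % len(self.members)
            self.table[client_ip] = self.members[index]
        return self.table[client_ip]


lb = SourceIpPersistence(["10.0.1.11", "10.0.1.12", "10.0.1.13"])
first = lb.pick("198.51.100.7")
again = lb.pick("198.51.100.7")
```

Repeat requests from `198.51.100.7` land on the same member. This matters for applications that keep session state on the server.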

NAT and VPN services facilitate connectivity between internal and external networks. Configuring NAT involves defining address translation rules, while VPN requires understanding tunnel configuration, encryption methods, and authentication protocols. High availability for Edge services ensures continuous operation, and candidates must be able to implement cluster configurations and failover mechanisms to maintain service continuity.

Automation and API Management

Automation is a key factor in managing large NSX-T environments efficiently. Candidates should be familiar with REST APIs, scripting, and orchestration integration to automate network deployment, policy application, and operational monitoring. Automation reduces errors, ensures consistency, and accelerates deployment across multiple environments. Candidates should understand API endpoints, authentication, and common workflows to implement automation effectively.
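Large inventories rarely fit in one API response. NSX-T list calls typically return a `results` array and, when more items remain, a `cursor` value that is passed back on the next request. The sketch below wraps that loop in a generator. The `fetch_page` callable is a hypothetical stand-in for the actual HTTP call, which keeps the pagination logic testable offline.

```python
from typing import Callable, Dict, Iterator, Optional


def iterate_results(fetch_page: Callable[[Optional[str]], Dict]) -> Iterator[Dict]:
    """Yield every item across pages, following the cursor until it is absent."""
    cursor: Optional[str] = None
    while True:
        page = fetch_page(cursor)
        yield from page.get("results", [])
        cursor = page.get("cursor")
        if not cursor:
            break


# Fake two-page response standing in for real API calls.
pages = {
    None: {"results": [{"id": "seg-a"}, {"id": "seg-b"}], "cursor": "2"},
    "2": {"results": [{"id": "seg-c"}]},
}
ids = [item["id"] for item in iterate_results(lambda c: pages[c])]
```

Swapping in a real `fetch_page` that adds `?cursor=...` to the request URL would leave the loop unchanged.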

Automated monitoring and alerting enable proactive management of the environment. Scripts can check system health, generate reports, and trigger notifications for predefined conditions. Understanding how to integrate automation with operational processes improves efficiency and ensures rapid response to potential issues.

Operational Monitoring and Troubleshooting

Maintaining NSX-T environments requires continuous monitoring and troubleshooting. Candidates should be able to track the health of NSX Manager nodes (which also run the central control plane), Edge nodes, and workloads. Key performance metrics include CPU and memory utilization, network throughput, latency, and flow table usage. Monitoring logs and alerts provides visibility into configuration changes, traffic patterns, and security events.

Troubleshooting requires a methodical approach, including verifying connectivity, analyzing firewall policies, and inspecting routing configurations. Scenario-based lab exercises strengthen troubleshooting skills, allowing candidates to practice resolving complex network issues, misconfigurations, and service disruptions. Documenting troubleshooting steps and outcomes reinforces learning and improves operational readiness.

Scenario-Based Lab Exercises

Hands-on lab practice is essential for exam preparation. Candidates should build complex topologies with multiple segments, Tier-0 and Tier-1 routers, distributed firewall rules, and Edge services. Lab exercises should include real-world scenarios such as routing conflicts, firewall misconfigurations, load balancing failures, and VPN setup. Practicing end-to-end resolution of these scenarios enhances understanding and builds confidence.

Documenting lab activities, reviewing outcomes, and analyzing mistakes ensures that knowledge is retained. Scenario practice also prepares candidates to approach unfamiliar problems systematically, which is critical for both the exam and real-world operations.

High Availability, Redundancy, and Scalability

High availability and redundancy are critical aspects of NSX-T design. Candidates should understand how to configure redundant Edge nodes in Edge clusters and how to deploy the three-node NSX Manager cluster, which provides failover for both the management and central control planes. Distributed routing, load balancing, and segment design all contribute to resiliency. Knowledge of failover mechanisms, cluster placement, and resource allocation ensures that critical services remain operational during failures.

Scalability involves planning for segment growth, router capacity, transport zone expansion, and workload placement. Candidates must design NSX-T environments that can grow without impacting performance or manageability. Optimizing routing, firewall rules, and Edge services ensures that the environment maintains high performance and reliability as demand increases.

Integrating Knowledge for Exam Success

Success in the 2V0-41.20 exam requires integrating knowledge across multiple domains. Candidates must understand how networking, security, Edge services, automation, and operational management interact. Scenario-based exercises that combine multiple elements reinforce this integrated understanding and improve problem-solving skills. Candidates should focus on identifying interdependencies, analyzing the impact of changes, and applying best practices to maintain consistent performance and security.

Regular review, diagramming network topologies, and mapping policies help visualize complex interactions. Practicing end-to-end scenarios ensures that candidates can apply theoretical knowledge in practical situations, building confidence for the exam.

Preparing for Complex Network Deployments

The exam evaluates both conceptual understanding and practical application. Candidates should practice configuring multi-tiered networks, implementing micro-segmentation policies, deploying Edge services, and troubleshooting complex scenarios. Understanding the interrelation between components and their effect on traffic flow, security enforcement, and service availability is essential.

Repetition, documentation, and reflection on lab exercises reinforce learning. Candidates should simulate network failures, policy misconfigurations, and service disruptions to develop robust troubleshooting and operational skills. This preparation ensures readiness for both the exam and managing real-world NSX-T environments.

Mastery of NSX-T for the 2V0-41.20 exam involves a comprehensive understanding of architecture, logical networking, routing, security, Edge services, automation, monitoring, troubleshooting, and scalability. Candidates must combine structured study, hands-on lab exercises, scenario-based practice, and integration of knowledge across domains. Focusing on high availability, operational efficiency, and problem-solving skills prepares candidates to succeed in the exam and manage complex, virtualized network environments effectively.

Mastering NSX-T Control and Data Planes

A deep understanding of NSX-T control and data planes is essential for preparing for the 2V0-41.20 exam. The control plane is responsible for distributing network information across all hypervisors and Edge nodes. It ensures that routing tables, logical switches, and policy rules are consistent, allowing the network to operate efficiently and reliably. In NSX-T 3.x, the central control plane (CCP) runs on the NSX Manager cluster nodes, and a local control plane (LCP) on each transport node receives and applies its updates. Candidates should understand how CCP and LCP communicate, synchronize state, and propagate updates. Knowledge of clustering, redundancy, and failover mechanisms within the control plane ensures operational continuity and high availability.

The data plane carries actual network traffic and enforces security policies at the virtual machine level. Distributed routing allows packets to be processed locally on hypervisors, reducing latency and offloading centralized routers. Candidates should be able to analyze data plane behavior, understand encapsulation techniques like Geneve, and troubleshoot traffic issues related to misconfigurations or resource constraints. Understanding the interplay between control and data planes allows candidates to identify and resolve network issues effectively.

Logical Networking and Segment Design

Logical networking is at the core of NSX-T functionality. Segments act as isolated Layer 2 domains, providing separation between workloads. Transport zones define the reach of segments and specify whether they use overlay or VLAN-backed transport. Candidates must understand how to create, configure, and manage segments and transport zones to design scalable and efficient virtual networks.

Overlay networks allow for connectivity across multiple hosts without relying on the underlying physical network. Candidates should grasp encapsulation, MTU considerations, and the impact of overlay on performance. VLAN-backed networks provide direct integration with physical switches, and understanding when to use each approach is critical for both exam preparation and real-world deployments. Proper segment design ensures security, performance, and operational manageability.
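The MTU requirement follows from simple arithmetic. Geneve wraps the entire inner Ethernet frame in a Geneve header, a UDP header, and an outer IP header, and the underlay's IP MTU has to absorb all of that. The sketch below assumes an IPv4 underlay. Geneve options are variable-length, and NSX uses them, which is why VMware specifies an underlay MTU of at least 1600 bytes instead of the bare minimum computed here.

```python
# Header sizes in bytes.
INNER_ETHERNET = 14   # inner Ethernet header carried inside the tunnel
DOT1Q_TAG = 4         # optional 802.1Q tag on the inner frame
GENEVE_BASE = 8       # fixed Geneve header, before variable-length options
OUTER_UDP = 8
OUTER_IPV4 = 20       # would be 40 for an IPv6 underlay


def required_underlay_mtu(inner_ip_mtu: int = 1500, geneve_options: int = 0,
                          inner_vlan_tag: bool = False) -> int:
    """Underlay IP MTU needed to carry a full-size inner frame without fragmentation."""
    inner_frame = inner_ip_mtu + INNER_ETHERNET + (DOT1Q_TAG if inner_vlan_tag else 0)
    # The outer Ethernet header is not counted: it is not part of the IP MTU.
    return inner_frame + GENEVE_BASE + geneve_options + OUTER_UDP + OUTER_IPV4


baseline = required_underlay_mtu()       # 1500 + 14 + 8 + 8 + 20
headroom = 1600 - baseline               # bytes left for Geneve options at MTU 1600
```

A 1500-byte guest MTU needs at least 1550 bytes of underlay MTU before any Geneve options are added. A 1600-byte underlay therefore leaves 50 bytes for options. If the underlay stays at 1500, large packets will be fragmented or dropped.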

Advanced Routing and Tiered Architecture

Routing in NSX-T is handled through a tiered structure involving Tier-0 and Tier-1 routers. Tier-0 routers manage north-south traffic and external connectivity, while Tier-1 routers connect segments and attach them northbound to a Tier-0. Distributed routing improves performance by processing packets at the host level, so east-west traffic between segments does not have to hairpin through a centralized router. Candidates should understand static routing, BGP (plus OSPF on Tier-0 from NSX-T 3.1.1), route advertisement from Tier-1 to Tier-0, and route redistribution from Tier-0 to the physical network.
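Two separate filters decide whether a Tier-1 prefix reaches the physical network. The first is Tier-1 route advertisement toward the Tier-0, and the second is Tier-0 redistribution into BGP. The sketch below models both steps as set-membership filters. The type labels are simplified stand-ins for the real advertisement and redistribution categories (connected segments, static routes, NAT IPs, LB VIPs, and others), and all prefixes are hypothetical.

```python
from typing import List, Set, Tuple

Route = Tuple[str, str]  # (prefix, route type label), simplified


def filter_routes(routes: List[Route], enabled_types: Set[str]) -> List[Route]:
    """Keep only routes whose type is enabled at this stage."""
    return [(prefix, rtype) for prefix, rtype in routes if rtype in enabled_types]


t1_routes = [
    ("172.16.10.0/24", "connected"),
    ("172.16.99.0/24", "static"),
    ("203.0.113.10/32", "nat"),
]

# Step 1: Tier-1 advertises only connected segments and NAT IPs to the Tier-0.
to_t0 = filter_routes(t1_routes, {"connected", "nat"})

# Step 2: the Tier-0 table combines learned Tier-1 routes with its own uplink subnet,
# then redistributes only the selected types into BGP.
t0_table = [(p, "tier1_" + t) for p, t in to_t0] + [("192.0.2.0/28", "tier0_connected")]
to_bgp = filter_routes(t0_table, {"tier1_connected", "tier1_nat"})
```

The static route never leaves the Tier-1, and the Tier-0 uplink subnet is not redistributed. A missing prefix upstream can therefore result from either step.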

Routing policies impact security enforcement, traffic flow, and Edge services. Candidates need to be able to troubleshoot routing issues, analyze adjacency and route propagation, and ensure that traffic flows optimally across both overlay and physical networks. Mastery of routing concepts ensures candidates can design robust, high-performance network architectures.

Security Policies and Micro-Segmentation

NSX-T provides advanced security through micro-segmentation and distributed firewall policies. Candidates must understand how to create, organize, and apply rules that control traffic between workloads. Dynamic security groups allow for policy automation, reducing administrative overhead while maintaining security compliance. Identity-based firewalling and service insertion extend security capabilities, enabling integration with third-party security tools and context-aware policy enforcement.

Understanding firewall evaluation order, rule inheritance, and logging is crucial for effective troubleshooting. Candidates should be able to monitor traffic flows, analyze logs, and respond to potential security incidents. These skills ensure that security policies are applied consistently and that environments remain secure even as workloads scale or move between hosts.

Edge Services and Network Functions

Edge nodes provide essential network services such as load balancing, NAT, VPN, and DHCP. Load balancing distributes client requests across multiple backend servers to maintain performance and reliability. Candidates should understand pool creation, virtual server configuration, health monitoring, and persistence settings. Both Layer 4 and Layer 7 load balancing capabilities must be mastered for managing application traffic efficiently.

NAT and VPN provide connectivity to external networks. Candidates should understand translation rules, tunnel setup, encryption, and authentication protocols. High availability for Edge services ensures continuous operation, with redundancy and failover mechanisms minimizing service disruption. Knowledge of service configuration, monitoring, and troubleshooting is essential for operational competence.

Automation and Orchestration

Automation enhances the efficiency and reliability of NSX-T management. Candidates should be proficient with REST APIs, scripting, and orchestration tools to automate repetitive tasks, enforce policies, and monitor system health. Automation ensures consistency, reduces human error, and allows rapid deployment of complex network configurations. Candidates must understand how to authenticate, navigate API endpoints, and implement workflows for provisioning, monitoring, and managing network resources.
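As an alternative to sending credentials on every call, NSX-T supports session-based authentication. A POST to `/api/session/create` with form fields `j_username` and `j_password` returns a `JSESSIONID` cookie and an `X-XSRF-TOKEN` header, and both are then sent on later requests. The sketch below only builds the two requests. The hostname, credentials, cookie, and token values are placeholders, and the response handling is left out.

```python
import urllib.parse
import urllib.request


def build_session_create(manager: str, user: str, password: str) -> urllib.request.Request:
    """Build (but do not send) the session-creation POST."""
    body = urllib.parse.urlencode({"j_username": user, "j_password": password}).encode()
    return urllib.request.Request(
        url=f"https://{manager}/api/session/create",
        data=body,
        method="POST",
        headers={"Content-Type": "application/x-www-form-urlencoded"},
    )


def build_authenticated_get(manager: str, path: str, session_cookie: str,
                            xsrf_token: str) -> urllib.request.Request:
    """Build a GET that reuses the session cookie and XSRF token from the POST response."""
    return urllib.request.Request(
        url=f"https://{manager}{path}",
        method="GET",
        headers={"Cookie": session_cookie, "X-XSRF-TOKEN": xsrf_token,
                 "Accept": "application/json"},
    )


login = build_session_create("nsx-mgr.example.local", "admin", "p@ss")
follow_up = build_authenticated_get("nsx-mgr.example.local", "/policy/api/v1/infra/tier-1s",
                                    "JSESSIONID=placeholder", "placeholder-token")
```

One session can serve an entire automation run, which reduces authentication overhead when a workflow issues many calls.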

Integration with orchestration platforms allows seamless deployment of virtual networks, Edge services, and security policies. Automation also supports proactive monitoring, enabling administrators to detect anomalies, generate alerts, and trigger corrective actions. Mastery of automation tools ensures that candidates can manage NSX-T environments efficiently and at scale.

Monitoring, Logging, and Operational Management

Continuous monitoring and operational management are key to maintaining a stable NSX-T environment. Candidates should be able to track the health of NSX Manager nodes (which also run the central control plane), Edge nodes, and virtual workloads. Key metrics include CPU and memory usage, flow table capacity, network throughput, and latency. Logging provides visibility into configuration changes, traffic patterns, and security events.

Troubleshooting involves verifying connectivity, analyzing policy application, and diagnosing routing or service issues. Candidates should practice scenario-based troubleshooting in a lab environment, simulating network failures, firewall misconfigurations, and Edge service disruptions. Documenting troubleshooting steps reinforces learning and prepares candidates for exam scenarios that test analytical and operational skills.

Lab-Based Scenario Practice

Hands-on practice is essential for mastering NSX-T. Candidates should build lab environments with multiple segments, tiered routers, distributed firewall rules, and Edge services. Lab exercises should include realistic scenarios such as route failures, policy conflicts, and service disruptions. Practicing configuration, monitoring, and troubleshooting in these scenarios develops both conceptual understanding and practical skills.

Documenting lab exercises and reviewing outcomes improves knowledge retention. Scenario-based practice allows candidates to develop systematic approaches to solving complex network issues, ensuring readiness for both the exam and real-world operations.

High Availability, Redundancy, and Scalability

High availability and redundancy are fundamental to NSX-T design. Candidates must understand how to configure Edge clusters, the three-node NSX Manager cluster (which provides management and control plane redundancy), and data plane failover. Distributed routing and load balancing contribute to resiliency, ensuring continuous service delivery. Knowledge of resource allocation, cluster placement, and failover mechanisms is essential for maintaining operational reliability.

Scalability involves designing segments, routers, and transport zones to accommodate growth without affecting performance. Candidates should understand the impact of scaling on data plane efficiency, control plane synchronization, and policy enforcement. Optimizing configurations for both performance and manageability ensures that NSX-T environments can support growing workloads effectively.

Integrating Knowledge for Exam Readiness

Exam success requires integrating knowledge across all NSX-T domains. Candidates must understand how networking, security, Edge services, automation, and operational management interact. Scenario-based practice reinforces this integrated understanding and improves problem-solving skills. Candidates should focus on identifying interdependencies, analyzing configuration impacts, and applying best practices to maintain consistent network performance and security.

Visual tools such as diagrams and flowcharts help map complex network topologies, policy hierarchies, and traffic flows. Regular review, hands-on labs, and scenario exercises ensure that candidates are prepared to handle complex exam questions and operational challenges confidently.

Preparing for Complex Deployment Scenarios

Candidates should focus on complex use cases that combine multiple NSX-T features. For example, implementing multi-tier networks with micro-segmentation, load balancing, and routing requires understanding the interactions between components. Troubleshooting such environments strengthens analytical skills and ensures readiness for real-world scenarios.

Repetition, lab practice, and documentation of outcomes reinforce learning. By simulating failures, misconfigurations, and service disruptions, candidates develop a systematic approach to problem-solving. This preparation builds confidence for the exam and prepares candidates for managing sophisticated NSX-T environments.

Mastering NSX-T for the 2V0-41.20 exam involves comprehensive knowledge of architecture, logical networking, routing, security, Edge services, automation, monitoring, troubleshooting, high availability, and scalability. Candidates must combine structured study, scenario-based labs, and knowledge integration across domains. Practicing problem-solving, reinforcing concepts, and applying theoretical knowledge in practical scenarios ensures that candidates are fully prepared for both the exam and operational management of complex NSX-T environments.

In-Depth Understanding of NSX-T Management Plane

The management plane in NSX-T is responsible for configuration, policy definition, and centralized monitoring. It provides the administrative interface (UI and REST API) through which network and security configurations are defined and then propagated across the environment; enforcement itself happens in the data plane. For candidates preparing for the 2V0-41.20 exam, it is crucial to understand how the management plane communicates with the control and data planes. The management plane persists the desired configuration, validates changes, and provides operational insights through dashboards and logging. Understanding its role is essential for troubleshooting, capacity planning, and maintaining overall network health.

High availability within the management plane ensures that administrative operations continue uninterrupted when a node fails. Candidates should know how to configure and monitor the NSX Manager cluster, including node roles, redundancy mechanisms, and failover behavior. Knowledge of API integration with the management plane allows automation of repetitive tasks and monitoring workflows, increasing operational efficiency and reducing manual errors.

Control Plane Functionality and Distributed Architecture

The control plane is central to NSX-T’s distributed architecture, handling the distribution of network state and routing information. Candidates must understand how control plane nodes synchronize and propagate changes so that hypervisors and Edge nodes hold consistent network information. The control plane computes the runtime state, such as distributed routing tables and firewall rule sets, that transport nodes use to make forwarding and policy decisions locally, which makes it critical for exam readiness.

Control plane redundancy and clustering are essential to maintain network stability. Candidates should be familiar with leader election, state synchronization, and failover procedures. Knowing how control plane failures affect routing, firewall rules, and segment connectivity allows candidates to troubleshoot effectively and design resilient environments. The control plane’s integration with the data plane ensures that routing and security policies are applied locally at the hypervisor level, reducing latency and increasing efficiency for east-west traffic.
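One way to reason about control plane redundancy is that NSX-T spreads (shards) responsibility for logical objects across the control plane instances, and a failed node's objects must be taken over by the survivors. The sketch below is a hypothetical illustration only: the real sharding algorithm is not described here, and the simple hash-modulo scheme, node names, and function names are assumptions chosen for clarity.

```python
import hashlib
from typing import Dict, Iterable, List


def shard_owner(object_id: str, nodes: List[str]) -> str:
    """Map a logical object to one control-plane node (hypothetical hash-mod scheme)."""
    if not nodes:
        raise ValueError("no healthy control-plane nodes")
    digest = hashlib.sha256(object_id.encode()).digest()
    index = int.from_bytes(digest[:4], "big") % len(nodes)
    return sorted(nodes)[index]  # sort so the result does not depend on list order


def assign(objects: Iterable[str], nodes: List[str]) -> Dict[str, str]:
    """Compute the owner of every logical object for the given healthy nodes."""
    return {obj: shard_owner(obj, nodes) for obj in objects}
```

Calling `assign` with three nodes and again with one node removed shows every object still has an owner and none point at the failed node. A plain modulo scheme also moves objects between surviving nodes; consistent hashing is the usual way to limit that churn.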

Data Plane Operations and Performance Optimization

The data plane executes the forwarding of network traffic and enforces security policies at the host level. Distributed routing and distributed firewalling occur in the data plane, allowing traffic to be processed locally on hypervisors, which reduces reliance on centralized components and improves performance. Candidates should understand encapsulation protocols, packet flow, and flow table management. Knowledge of traffic inspection, firewall evaluation order, and policy application ensures accurate and efficient enforcement of rules across all workloads.
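Distributed firewalling is stateful: only the first packet of a connection is evaluated against the rule set, and later packets (including replies) match an entry in a connection table. The sketch below is a toy model of that idea, not NSX-T's datapath; the class name, the 5-tuple layout, and the `rule_lookup` callback are hypothetical.

```python
from typing import Callable, Dict, Tuple

# (src_ip, dst_ip, protocol, src_port, dst_port)
FiveTuple = Tuple[str, str, str, int, int]


class StatefulFilter:
    """Toy per-host stateful firewall with a connection (flow) table."""

    def __init__(self, rule_lookup: Callable[[FiveTuple], str]):
        self.rule_lookup = rule_lookup          # full rule-set evaluation
        self.conn_table: Dict[FiveTuple, str] = {}
        self.rule_evaluations = 0               # how often the slow path ran

    def process(self, pkt: FiveTuple) -> str:
        reverse = (pkt[1], pkt[0], pkt[2], pkt[4], pkt[3])
        # Fast path: packet belongs to a known flow in either direction.
        for key in (pkt, reverse):
            if key in self.conn_table:
                return self.conn_table[key]
        # Slow path: evaluate rules once, then remember allowed flows.
        self.rule_evaluations += 1
        action = self.rule_lookup(pkt)
        if action == "ALLOW":
            self.conn_table[pkt] = action
        return action
```

With a lookup that allows only destination port 443, the reply from the server (source port 443) is still allowed because it matches the existing flow, and the rule set is evaluated just once for the whole connection.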

Performance monitoring in the data plane includes analyzing CPU utilization, memory consumption, throughput, and latency. Understanding these metrics allows candidates to identify bottlenecks, optimize routing paths, and improve firewall efficiency. Troubleshooting data plane issues often requires correlating information from both the control and management planes to identify root causes and implement corrective actions effectively.

Logical Networking and Overlay Technologies

Logical networking abstracts physical infrastructure to provide flexible and isolated virtual networks. Segments define Layer 2 domains, while transport zones determine the scope of connectivity and define whether overlay or VLAN-backed networks are used. Overlay networking allows workloads to communicate across multiple hosts independently of the physical network. Candidates should understand Geneve encapsulation, MTU considerations, and how overlays interact with physical network components to ensure optimal connectivity and performance.
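The MTU requirement follows from simple arithmetic on the Geneve headers added around each guest frame. The sketch below adds up the inner Ethernet header, Geneve base header, variable Geneve options (carried in 4-byte multiples), UDP, and outer IP header to get the minimum IP MTU the underlay must carry. The function name and defaults are illustrative; VMware guidance for NSX-T 3.x cites 1600 bytes as the minimum and recommends 1700 to leave headroom for options.

```python
OUTER_IPV4 = 20      # outer IPv4 header, bytes
OUTER_IPV6 = 40      # outer IPv6 header, bytes
UDP = 8              # outer UDP header
GENEVE_BASE = 8      # fixed Geneve header, excluding options
INNER_ETHERNET = 14  # encapsulated guest Ethernet header
DOT1Q_TAG = 4        # optional 802.1Q tag on the inner frame


def required_underlay_mtu(inner_ip_mtu: int = 1500, geneve_option_bytes: int = 0,
                          inner_vlan_tagged: bool = False, outer_ipv6: bool = False) -> int:
    """Minimum underlay IP MTU needed to carry guest packets without fragmentation."""
    if geneve_option_bytes % 4:
        raise ValueError("Geneve options are carried in 4-byte multiples")
    outer_ip = OUTER_IPV6 if outer_ipv6 else OUTER_IPV4
    inner_l2 = INNER_ETHERNET + (DOT1Q_TAG if inner_vlan_tagged else 0)
    return inner_ip_mtu + inner_l2 + GENEVE_BASE + geneve_option_bytes + UDP + outer_ip
```

A 1500-byte guest MTU with no options needs 1550 bytes on an IPv4 underlay; with 48 bytes of options it needs 1598, which is why 1600 is treated as a floor rather than a comfortable target.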

Designing logical networks involves segment planning, IP addressing, and routing integration. Candidates should be able to create and manage segments, assign them to transport zones, and integrate overlay and VLAN-backed networks when necessary. Proper design ensures scalability, security, and maintainability while simplifying operational management.
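Segment IP planning is often done by carving one supernet into equal-sized subnets and giving each segment a gateway address. The sketch below uses Python's standard `ipaddress` module; the choice of the first usable host as the gateway and the CIDR string format (for example `10.10.0.1/24`) are assumptions for illustration, and the function name is hypothetical.

```python
import ipaddress
from typing import Dict, List


def plan_segments(supernet: str, names: List[str], prefixlen: int = 24) -> Dict[str, str]:
    """Assign each segment a subnet from the supernet; gateway = first usable host."""
    subnets = ipaddress.ip_network(supernet).subnets(new_prefix=prefixlen)
    plan = {}
    for name in names:
        try:
            subnet = next(subnets)
        except StopIteration:
            raise ValueError(f"{supernet} cannot hold {len(names)} /{prefixlen} segments") from None
        gateway = next(subnet.hosts())
        plan[name] = f"{gateway}/{subnet.prefixlen}"
    return plan
```

For example, `plan_segments("10.10.0.0/16", ["web", "app", "db"])` yields gateways `10.10.0.1/24`, `10.10.1.1/24`, and `10.10.2.1/24`, while asking a /23 for three /24 segments fails early instead of producing overlapping ranges.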

Routing Architecture and Tiered Network Design

NSX-T routing is organized into a two-tier architecture of Tier-0 and Tier-1 gateways (logical routers). Tier-0 gateways connect to external networks and handle north-south traffic, while Tier-1 gateways connect logical segments, route east-west traffic between them, and uplink to a Tier-0 gateway for external reachability. Distributed routing allows packet forwarding to occur at the hypervisor level, reducing latency and improving performance. Candidates should understand static and dynamic routing, including BGP configuration (OSPFv2 support on Tier-0 gateways was added in NSX-T 3.1.1), route redistribution, and routing policy application.
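Whatever the source of a route (connected segment, static, or BGP), forwarding ultimately comes down to a longest-prefix-match lookup. The sketch below shows that lookup with Python's `ipaddress` module; the route table contents and next-hop labels are hypothetical and stand in for what a distributed router instance might hold.

```python
import ipaddress
from typing import Dict, Optional


def lookup(routes: Dict[str, str], destination: str) -> Optional[str]:
    """Return the next hop of the most specific route containing the destination."""
    dst = ipaddress.ip_address(destination)
    best = None
    for prefix, next_hop in routes.items():
        net = ipaddress.ip_network(prefix)
        if dst in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, next_hop)
    return best[1] if best else None


routes = {
    "0.0.0.0/0": "tier-0 uplink",           # default route toward the physical network
    "10.10.0.0/16": "tier-1-a",             # summary route for a tenant's segments
    "10.10.5.0/24": "segment-web (connected)",
}
```

A lookup for `10.10.5.20` picks the /24, `10.10.9.1` falls back to the /16, and `8.8.8.8` takes the default route, which is why an overly broad or missing prefix is a common cause of traffic taking an unexpected path.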

Routing decisions impact security enforcement, traffic flow, and Edge service functionality. Candidates must know how to troubleshoot routing issues, analyze route propagation, and optimize routing paths for high performance. Mastery of routing concepts is essential for designing robust networks that can scale efficiently and maintain connectivity under diverse operational scenarios.

Security Design and Policy Management

Security in NSX-T is integrated at multiple levels, providing granular control over traffic flows. Micro-segmentation allows administrators to enforce policies at the individual workload level, controlling east-west traffic and reducing attack surfaces. Security groups with dynamic membership automate policy assignment and ensure consistent application across workloads. Identity-based firewalling and service insertion extend security capabilities, enabling integration with third-party security tools and context-aware policy enforcement.

Candidates must understand firewall rule evaluation order (categories and policy sections processed top-down, with the first matching rule applied), logging, and monitoring. Tracking traffic, analyzing security events, and responding to anomalies ensures that policies remain effective as environments grow or workloads move. Understanding these mechanisms allows candidates to implement secure, scalable networks while maintaining operational efficiency.
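First-match evaluation across ordered categories explains why a broad Emergency rule overrides a specific Application rule. The sketch below models only the Layer 3 distributed firewall categories in the order Emergency, Infrastructure, Environment, Application, with a configurable default rule at the end; the Layer 2 Ethernet category, applied-to scoping, and contextual profiles are deliberately left out, and all class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Set, Tuple

CATEGORY_ORDER = ["Emergency", "Infrastructure", "Environment", "Application"]


@dataclass
class Rule:
    name: str
    category: str
    sources: Set[str]        # group names; "ANY" matches everything
    destinations: Set[str]
    services: Set[str]
    action: str              # "ALLOW", "DROP" or "REJECT"
    sequence: int = 0        # position within its category


def _matches(field: Set[str], values: Set[str]) -> bool:
    return "ANY" in field or bool(field & values)


def evaluate(rules: List[Rule], src_groups: Set[str], dst_groups: Set[str],
             service: str, default_action: str = "ALLOW") -> Tuple[str, str]:
    """Return (rule name, action) of the first matching rule, else the default rule."""
    ordered = sorted(rules, key=lambda r: (CATEGORY_ORDER.index(r.category), r.sequence))
    for rule in ordered:
        if (_matches(rule.sources, src_groups)
                and _matches(rule.destinations, dst_groups)
                and _matches(rule.services, {service})):
            return rule.name, rule.action
    return "default", default_action


rules = [
    Rule("allow-web-to-db", "Application", {"web"}, {"db"}, {"MySQL"}, "ALLOW", 10),
    Rule("quarantine", "Emergency", {"quarantine"}, {"ANY"}, {"ANY"}, "DROP", 10),
]
```

A web VM that is also tagged into the quarantine group is dropped by the Emergency rule even though an Application rule explicitly allows its MySQL traffic; unmatched traffic falls through to the default rule, whose action decides whether the policy is allow-list or deny-list.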

Edge Node Services and High Availability

Edge nodes provide essential services including load balancing, NAT, VPN, and DHCP. Load balancing distributes client requests across multiple backend servers, enhancing availability and performance. Candidates should know how to configure pools, virtual servers, health monitors, and persistence profiles. Both Layer 4 and Layer 7 load balancing methods are critical for handling diverse application traffic efficiently.
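The interaction between pools, health monitors, and persistence can be modeled in a few lines. The sketch below is a hypothetical toy, not the NSX-T load balancer: it uses round-robin selection that skips members a health monitor has marked down, plus a simple source-IP persistence table that is abandoned when the sticky member fails.

```python
import itertools
from typing import Dict, List


class Pool:
    """Toy load-balancer pool: round-robin, health-aware, source-IP persistence."""

    def __init__(self, members: List[str]):
        self.members = list(members)
        self.healthy: Dict[str, bool] = {m: True for m in self.members}
        self._cursor = itertools.cycle(self.members)
        self._sticky: Dict[str, str] = {}     # client IP -> chosen member

    def mark(self, member: str, is_up: bool) -> None:
        """Record a health monitor result for one member."""
        self.healthy[member] = is_up

    def pick(self) -> str:
        """Round-robin over members, skipping those marked down."""
        for _ in range(len(self.members)):
            member = next(self._cursor)
            if self.healthy[member]:
                return member
        raise RuntimeError("no healthy pool members")

    def pick_for(self, client_ip: str) -> str:
        """Keep a client on the same member while that member stays healthy."""
        member = self._sticky.get(client_ip)
        if member is None or not self.healthy[member]:
            member = self.pick()
            self._sticky[client_ip] = member
        return member
```

With members `a`, `b`, `c` and `b` marked down, successive `pick()` calls return `a`, `c`, `a`, `c`; a client pinned to a member that later fails is moved to the next healthy one.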

NAT and VPN services provide connectivity between internal networks and external resources. Candidates should understand source and destination NAT translation rules, IPSec and Layer 2 VPN tunnel configuration, and the encryption and authentication (IKE) settings these tunnels use. Configuring high availability for Edge nodes ensures that critical services remain operational even during hardware or network failures. Knowledge of clustering, failover mechanisms, and redundancy planning is essential for maintaining service continuity.
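Source NAT (SNAT) rewrites the source address of traffic leaving the internal network, while destination NAT (DNAT) rewrites the destination of traffic arriving at a published address. The sketch below applies the first matching rule of each kind to a single packet; the rule dictionaries and function name are hypothetical, and it omits the connection state a real NAT keeps to reverse the translation for reply traffic.

```python
import ipaddress
from typing import Dict, List


def apply_nat(packet: Dict[str, str], dnat: List[Dict[str, str]],
              snat: List[Dict[str, str]]) -> Dict[str, str]:
    """Rewrite addresses using the first matching DNAT and SNAT rule (simplified)."""
    result = dict(packet)
    for rule in dnat:  # inbound: published address -> internal server
        if ipaddress.ip_address(result["dst"]) in ipaddress.ip_network(rule["match"]):
            result["dst"] = rule["translated"]
            break
    for rule in snat:  # outbound: internal range -> external address
        if ipaddress.ip_address(result["src"]) in ipaddress.ip_network(rule["match"]):
            result["src"] = rule["translated"]
            break
    return result


dnat_rules = [{"match": "203.0.113.10/32", "translated": "10.10.0.20"}]
snat_rules = [{"match": "10.10.0.0/16", "translated": "203.0.113.1"}]
```

An inbound request to `203.0.113.10` is steered to `10.10.0.20`, and an outbound packet from `10.10.1.5` leaves with source `203.0.113.1`; checking which rule matched first is a quick way to debug unexpected translations.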

Automation and Integration with Operational Workflows

Automation in NSX-T enables efficient management of large environments. Candidates should be proficient in using REST APIs, scripting, and orchestration tools to automate network provisioning, policy deployment, and operational monitoring. Automation reduces errors, ensures consistency, and accelerates deployment across multiple environments. Candidates should understand API endpoints, authentication methods, and workflow implementation to manage NSX-T effectively.
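As an illustration of API-driven provisioning, the sketch below builds (but does not send) a declarative request that creates or updates a segment through the NSX-T Policy API. It assumes the endpoint `PATCH /policy/api/v1/infra/segments/<segment-id>`, the fields `display_name`, `connectivity_path`, `transport_zone_path`, and `subnets[].gateway_address`, and HTTP basic authentication; verify these against the API reference for your NSX-T version. The manager hostname, IDs, and credentials are hypothetical.

```python
import base64
import json
import urllib.request


def build_segment_request(manager: str, segment_id: str, tier1_id: str,
                          gateway_cidr: str, tz_path: str,
                          user: str, password: str) -> urllib.request.Request:
    """Build a PATCH request that declares a segment attached to a Tier-1 gateway."""
    body = {
        "display_name": segment_id,
        "connectivity_path": f"/infra/tier-1s/{tier1_id}",  # attach to this Tier-1
        "transport_zone_path": tz_path,
        "subnets": [{"gateway_address": gateway_cidr}],      # e.g. "10.10.0.1/24"
    }
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        url=f"https://{manager}/policy/api/v1/infra/segments/{segment_id}",
        data=json.dumps(body).encode(),
        method="PATCH",
        headers={"Content-Type": "application/json", "Authorization": f"Basic {token}"},
    )
```

Sending it with `urllib.request.urlopen(req)` requires the manager's certificate to be trusted by the client. Because PATCH against the Policy API is declarative, re-running the same request describes the same desired state, which makes scripted provisioning safer to repeat.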

Automation also supports proactive monitoring and alerting. Scripts can check system health, generate performance reports, and trigger notifications for predefined conditions. Integration with orchestration platforms allows seamless deployment of networks, Edge services, and security policies, improving operational efficiency and reliability.

Monitoring, Logging, and Troubleshooting Techniques

Operational monitoring is vital for maintaining a stable NSX-T environment. Candidates should be able to track the health of managers, controllers, Edge nodes, and workloads. Monitoring CPU usage, memory consumption, flow table utilization, network throughput, and latency provides insight into system performance. Logs provide detailed information on configuration changes, traffic patterns, and security events.
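A monitoring script typically reduces collected metrics to a short list of alerts. The sketch below compares per-node metrics against thresholds and also flags metrics that were not reported, since a missing value often signals a collection or connectivity problem. The metric names, threshold values, and node names are hypothetical; how the metrics are collected (API, SNMP, or another tool) is left out.

```python
from typing import Dict, List

# Hypothetical thresholds, in percent utilization.
DEFAULT_THRESHOLDS = {"cpu_pct": 80.0, "mem_pct": 85.0, "disk_pct": 80.0}


def check_health(nodes: Dict[str, Dict[str, float]],
                 thresholds: Dict[str, float] = DEFAULT_THRESHOLDS) -> List[str]:
    """Return one alert string per metric that is missing or at/above its threshold."""
    alerts = []
    for node, metrics in sorted(nodes.items()):
        for metric, limit in thresholds.items():
            value = metrics.get(metric)
            if value is None:
                alerts.append(f"{node}: {metric} missing")
            elif value >= limit:
                alerts.append(f"{node}: {metric}={value:g} >= {limit:g}")
    return alerts
```

Feeding it an Edge node at 92% CPU and a manager node that did not report disk usage produces two alerts, one per condition, which can then be routed to a notification channel.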

Effective troubleshooting requires a systematic approach, including verifying connectivity, analyzing firewall rules, and inspecting routing configurations. Scenario-based lab exercises strengthen analytical and operational skills, allowing candidates to resolve network misconfigurations, routing conflicts, and service disruptions efficiently. Documentation of troubleshooting steps reinforces learning and ensures knowledge retention.

Scenario-Based Lab Practice and Knowledge Integration

Hands-on lab exercises are critical for exam preparation. Candidates should build complex topologies with multiple segments, tiered routers, distributed firewall policies, and Edge services. Practice scenarios should include route failures, policy misconfigurations, and service disruptions to develop troubleshooting skills. Integrating theoretical knowledge with practical exercises ensures readiness for the exam and real-world network management.

Documenting lab outcomes, reviewing mistakes, and analyzing results helps reinforce learning. Scenario practice improves problem-solving skills, enabling candidates to handle unfamiliar challenges systematically. Combining multiple NSX-T domains in lab exercises ensures a comprehensive understanding of network, security, routing, and operational interdependencies.

High Availability, Redundancy, and Scalability Planning

Ensuring high availability and redundancy is a key aspect of NSX-T design. Candidates should understand Edge clustering, redundancy of the NSX Manager cluster (which also hosts the control plane in NSX-T 2.4 and later), and management plane failover. Distributed routing, load balancing, and segment design contribute to overall resiliency, minimizing service disruption. Knowledge of resource allocation, cluster placement, and failover behavior is critical for maintaining operational reliability.

Scalability requires careful planning of segments, routers, transport zones, and workload placement. Candidates should understand the impact of scaling on data plane efficiency, control plane synchronization, and policy enforcement. Optimizing configurations for performance, security, and manageability ensures that NSX-T environments can grow efficiently while maintaining stability and operational effectiveness.

Integrating Skills for Exam Readiness

Success in the 2V0-41.20 exam requires the integration of knowledge across all NSX-T domains. Candidates must understand how networking, security, Edge services, automation, monitoring, and operational management interact. Scenario-based exercises reinforce this integration, allowing candidates to identify dependencies, assess impacts, and implement best practices.

Visualizing network topologies, policy hierarchies, and traffic flows through diagrams and flowcharts aids in comprehension. Regular review, practical lab exercises, and scenario simulations prepare candidates to approach complex exam questions with confidence.

Preparing for Advanced Deployment and Troubleshooting Scenarios

The exam evaluates both conceptual understanding and applied skills. Candidates should focus on complex scenarios that combine multiple NSX-T features, including multi-tier networks, micro-segmentation, Edge services, and routing integration. Practicing troubleshooting and operational management in these scenarios builds analytical skills and ensures readiness for real-world challenges.

Repetition, scenario practice, and documentation of outcomes reinforce understanding. Simulating failures, policy conflicts, and service disruptions develops systematic problem-solving approaches. This preparation ensures candidates are fully equipped to succeed in the exam and effectively manage NSX-T environments.

Conclusion