Pass F5 101 Exam in First Attempt Guaranteed!

Get 100% Latest Exam Questions, Accurate & Verified Answers to Pass the Actual Exam!

30 Days Free Updates, Instant Download!

101 Premium Bundle

- Premium File 460 Questions & Answers. Last update: Feb 11, 2026

- Training Course 132 Video Lectures

- Study Guide 423 Pages

Includes question types found on the actual exam such as drag and drop, simulation, type-in and fill-in-the-blank.

Based on real-life scenarios similar to those encountered in the exam, allowing you to learn by working with real equipment.

Developed by IT experts who have passed the exam in the past. Covers in-depth knowledge required for exam preparation.

All F5 101 certification exam dumps, study guides, and training courses are prepared by industry experts. PrepAway's ETE files provide the 101 Application Delivery Fundamentals practice test questions and answers, and the exam dumps, study guide, and training courses help you study and pass hassle-free!

F5 101 Application Delivery Fundamentals: Ultimate Professional Certification Manual

The F5 101 Application Delivery Fundamentals certification represents a critical stepping stone for IT professionals seeking to master application delivery technologies in modern network environments. This foundational credential validates your knowledge of load balancing, traffic management, and application security principles that form the backbone of enterprise infrastructure. Aspiring candidates must develop a comprehensive study strategy that encompasses both theoretical concepts and practical implementation scenarios. The certification journey requires dedication, structured learning paths, and access to quality study materials that align with current exam objectives. Success demands commitment to understanding complex networking concepts and their real-world applications in production environments.

Many professionals pursuing the F5 101 certification find value in exploring complementary security credentials that enhance their overall expertise. For instance, understanding CCSP exam preparation strategies provides insights into cloud security principles that frequently intersect with application delivery concepts. This cross-disciplinary approach strengthens your ability to design secure, scalable application infrastructures that meet enterprise requirements. Combining multiple certification paths creates well-rounded professionals capable of addressing diverse infrastructure challenges. The synergy between different credential types amplifies professional value in competitive job markets.

Application Delivery Controller Fundamentals and Their Critical Role

Application Delivery Controllers serve as the nerve center of modern application infrastructure, managing traffic flow, ensuring availability, and optimizing performance across distributed environments. These sophisticated devices operate at multiple layers of the network stack, making intelligent decisions based on application-specific requirements and real-time conditions. Understanding ADC functionality requires mastery of concepts including virtual servers, pools, nodes, and health monitors that work together to deliver seamless application experiences. The F5 BIG-IP platform exemplifies industry-leading ADC technology, offering granular control over application traffic through its advanced feature set. Candidates must grasp how ADCs differ from traditional load balancers, particularly regarding application-layer awareness and programmability.

Professionals transitioning into application delivery roles often benefit from established cybersecurity foundations that inform security-conscious infrastructure design. Learning how to become a network security engineer provides valuable context for understanding the security implications of application delivery decisions. This knowledge proves essential when configuring ADCs to protect against modern threats while maintaining optimal performance. Security-minded application delivery professionals create infrastructure that balances accessibility with protection. The integration of security principles into application delivery design represents best practice in modern infrastructure management.

Load Balancing Algorithms and Their Practical Application in Production Environments

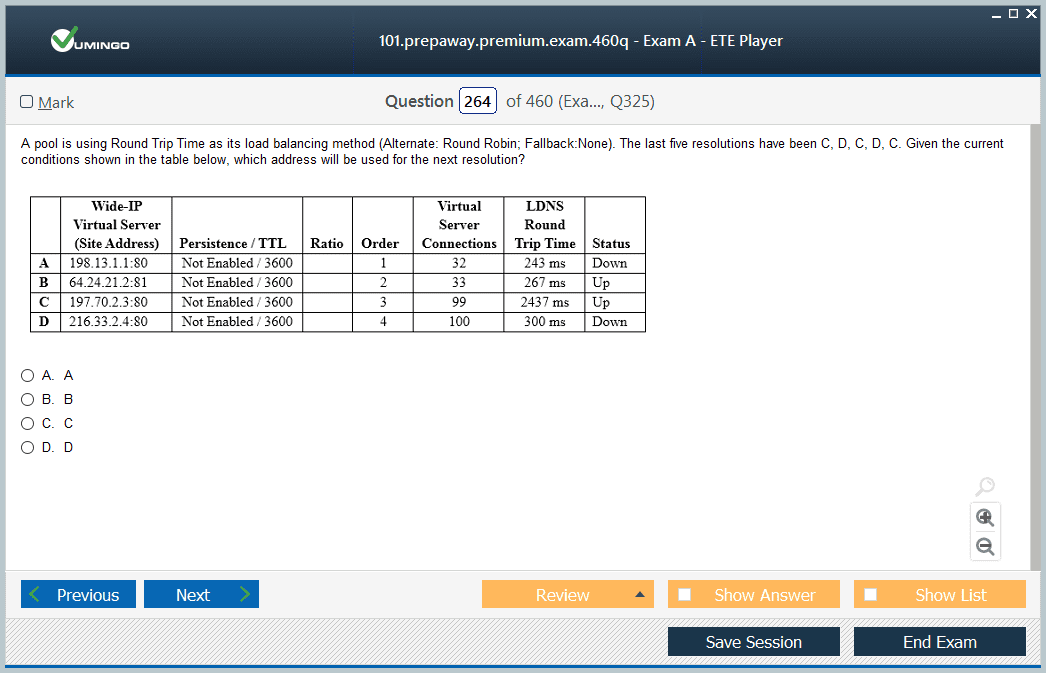

Load balancing algorithms determine how traffic distributes across multiple servers, directly impacting application performance, resource utilization, and user experience throughout the infrastructure. The F5 101 exam extensively covers algorithms including round-robin, least connections, ratio-based distribution, and dynamic methods that adapt to real-time server conditions. Each algorithm serves specific use cases, with round-robin providing simple distribution for homogeneous server pools, while least connections proves optimal for applications with varying session durations. Advanced algorithms incorporate server performance metrics, geographic proximity, and application-specific parameters to make sophisticated routing decisions that optimize overall system performance. Understanding algorithm selection criteria empowers professionals to optimize application delivery based on unique business requirements and technical constraints.
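The selection logic behind three of these algorithms can be sketched in a few lines of Python. This is a minimal illustration, not how any ADC implements them: the function names and the `web1`..`web3` member names are hypothetical, and real ratio schedulers typically interleave members rather than sending consecutive requests to the same one.

```python
from itertools import cycle


def round_robin(members):
    """Return an endless rotation over members, ignoring current load."""
    return cycle(members)


def least_connections(active):
    """Pick the member with the fewest active connections.

    `active` maps member name -> current connection count; ties go to the
    first member in insertion order.
    """
    return min(active, key=active.get)


def ratio_schedule(ratios):
    """Expand ratio weights into one rotation, e.g. {"a": 2, "b": 1} -> a, a, b."""
    return [member for member, weight in ratios.items() for _ in range(weight)]


members = ["web1", "web2", "web3"]  # hypothetical pool members
rr = round_robin(members)
first_four = [next(rr) for _ in range(4)]

picked = least_connections({"web1": 12, "web2": 3, "web3": 7})
rotation = ratio_schedule({"web1": 3, "web2": 1})
```

Round-robin and ratio need no runtime state from the servers, while least connections depends on a live connection count, which is why it suits workloads with uneven session lengths.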

Organizations implementing comprehensive security frameworks often integrate application delivery decisions within broader governance structures that ensure consistent policy application. Exploring ISO 27001 lead implementer training reveals how application delivery configurations must align with organizational security policies and compliance requirements. This holistic perspective ensures that performance optimization never compromises security posture or regulatory compliance. Professionals who understand both technical implementation and governance frameworks deliver superior infrastructure solutions. The alignment of technical decisions with organizational policies creates sustainable, compliant infrastructure that withstands audits and changing requirements.

Health Monitoring Mechanisms for Ensuring Application Availability and Performance

Health monitoring forms the foundation of high-availability application delivery, enabling ADCs to detect and respond to server failures before users experience service disruptions. F5 platforms support multiple monitoring methods including ICMP ping, TCP connection attempts, HTTP content verification, and custom scripted checks that validate application-specific functionality. Passive monitoring observes actual user traffic patterns, while active monitoring proactively tests server responsiveness at configured intervals to maintain continuous awareness. Understanding monitor configuration parameters such as intervals, timeouts, and retry counts proves critical for balancing responsiveness against network overhead and resource consumption. Advanced monitoring strategies incorporate application-layer verification, ensuring servers not only respond but deliver correct functionality to end users.
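The interaction between interval and timeout can be illustrated with a small simulation. This is a sketch under stated assumptions: `MonitorState` and `recommended_timeout` are hypothetical names, the state is only re-evaluated when a probe result arrives, and the "three intervals plus one second" timeout is a commonly cited guideline for BIG-IP monitors rather than something taken from the text above.

```python
from dataclasses import dataclass


@dataclass
class MonitorState:
    interval: int              # seconds between probes
    timeout: int               # seconds without a success before marking down
    last_success: float = 0.0
    up: bool = True

    def record(self, now: float, success: bool) -> bool:
        """Record one probe result at time `now` and return the up/down state."""
        if success:
            self.last_success = now
            self.up = True
        elif now - self.last_success >= self.timeout:
            self.up = False
        return self.up


def recommended_timeout(interval: int) -> int:
    """Rule of thumb (assumption): tolerate three missed probes plus one second."""
    return 3 * interval + 1


state = MonitorState(interval=5, timeout=recommended_timeout(5))
# One successful probe at t=5, then failures at t=10, 15, 20, and 25.
probes = [(5, True), (10, False), (15, False), (20, False), (25, False)]
history = [state.record(t, ok) for t, ok in probes]
```

A shorter timeout detects failures sooner but risks marking a briefly slow server down; a longer one is more tolerant at the cost of sending traffic to a dead member for longer.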

Security professionals pursuing ethical hacking certifications develop complementary skills for identifying vulnerabilities in application delivery configurations and infrastructure implementations. Reviewing the ultimate guide to pass EC-Council Certified Ethical Hacker exam highlights techniques for testing ADC security, which informed administrators can apply to strengthen their implementations. This defensive perspective enhances overall system resilience against evolving threat landscapes. Administrators who understand attacker methodologies design more robust security controls. The combination of operational expertise and security awareness creates infrastructure that resists both accidental failures and deliberate attacks.

Virtual Server Configuration and Traffic Management Strategies for Enterprise Applications

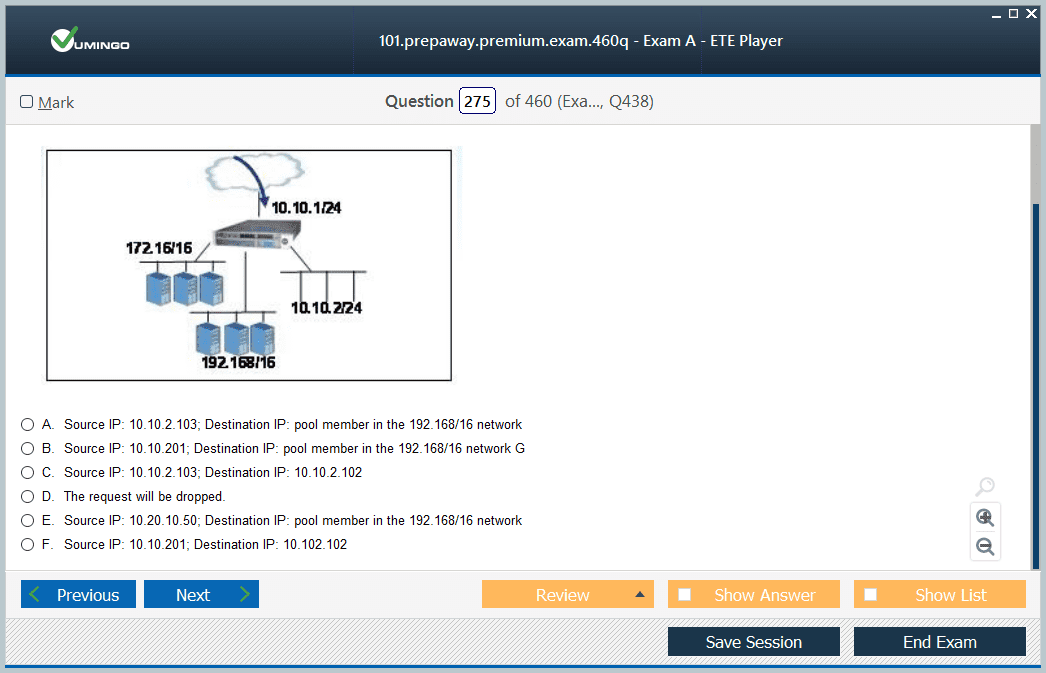

Virtual servers represent the primary interface through which clients access applications managed by F5 ADCs, abstracting backend complexity while presenting unified service endpoints. Each virtual server configuration includes critical parameters such as IP address, port, protocol, and the associated pool whose members handle actual requests. Understanding virtual server types, including Standard, Forwarding, and Performance (Layer 4) variants, enables administrators to select appropriate configurations for specific application requirements. Traffic policies applied at the virtual server level enable sophisticated request routing based on HTTP headers, URI patterns, client characteristics, and other request attributes. Mastering virtual server configuration empowers professionals to implement complex application delivery scenarios efficiently while maintaining performance and security.

The value proposition of cybersecurity certifications extends beyond technical knowledge to career advancement and professional credibility in competitive markets. Examining the true value of ethical hacker certification reveals parallels with F5 certification benefits, including enhanced earning potential and expanded career opportunities. Both credential types validate specialized expertise that organizations actively seek when building technical teams. Certified professionals command premium compensation and receive preferential consideration during hiring processes. The investment in certification preparation delivers measurable returns throughout professional careers.

Session Persistence Methods and Their Impact on Application Functionality

Session persistence ensures that user requests consistently reach the same backend server, maintaining application state across multiple interactions throughout user sessions. F5 platforms support various persistence methods including source address affinity, cookie-based tracking, SSL session ID persistence, and application-specific mechanisms. Understanding when to implement persistence versus allowing pure load distribution requires analyzing application architecture and state management approaches. Cookie persistence offers flexibility and reliability but introduces client-side dependencies, while source IP persistence provides simplicity at the cost of potential distribution imbalances. Advanced persistence configurations incorporate fallback methods and timeout values that balance state maintenance against resource optimization and failover capabilities.
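Source address affinity with a timeout can be sketched as a lookup table that is consulted before the load balancing decision. The `SourceAffinity` class, its round-robin fallback, and the member names are hypothetical and only illustrate the idea; they are not how BIG-IP stores persistence records.

```python
class SourceAffinity:
    """Keep each client IP on one member until its record idles out."""

    def __init__(self, members, timeout):
        self.members = members
        self.timeout = timeout      # idle seconds before a record expires
        self.table = {}             # client_ip -> (member, last_seen)
        self._next = 0

    def _balance(self):
        """Fallback when no valid record exists: plain round-robin."""
        member = self.members[self._next % len(self.members)]
        self._next += 1
        return member

    def pick(self, client_ip, now):
        entry = self.table.get(client_ip)
        if entry and now - entry[1] < self.timeout:
            member = entry[0]           # record still valid: reuse it
        else:
            member = self._balance()    # no record or expired: rebalance
        self.table[client_ip] = (member, now)
        return member


lb = SourceAffinity(["app1", "app2", "app3"], timeout=180)
a = lb.pick("198.51.100.7", now=0)
b = lb.pick("203.0.113.9", now=10)
c = lb.pick("198.51.100.7", now=100)   # within the timeout: same member as `a`
d = lb.pick("198.51.100.7", now=400)   # idle for 300 s: record expired
```

Because the key is the client address, many users behind one NAT gateway would all land on the same member, which is the distribution imbalance mentioned above.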

Machine learning concepts increasingly influence modern IT infrastructure decisions, including application delivery optimization and performance tuning methodologies. Investigating the backward elimination process in machine learning demonstrates analytical approaches applicable to identifying critical configuration parameters that most impact application performance. This data-driven methodology enhances decision-making quality by replacing intuition with empirical analysis. Professionals who apply analytical frameworks to infrastructure optimization achieve superior results. The intersection of machine learning concepts and traditional infrastructure management represents evolving best practices.

Traffic Management Policies for Implementing Complex Routing Logic

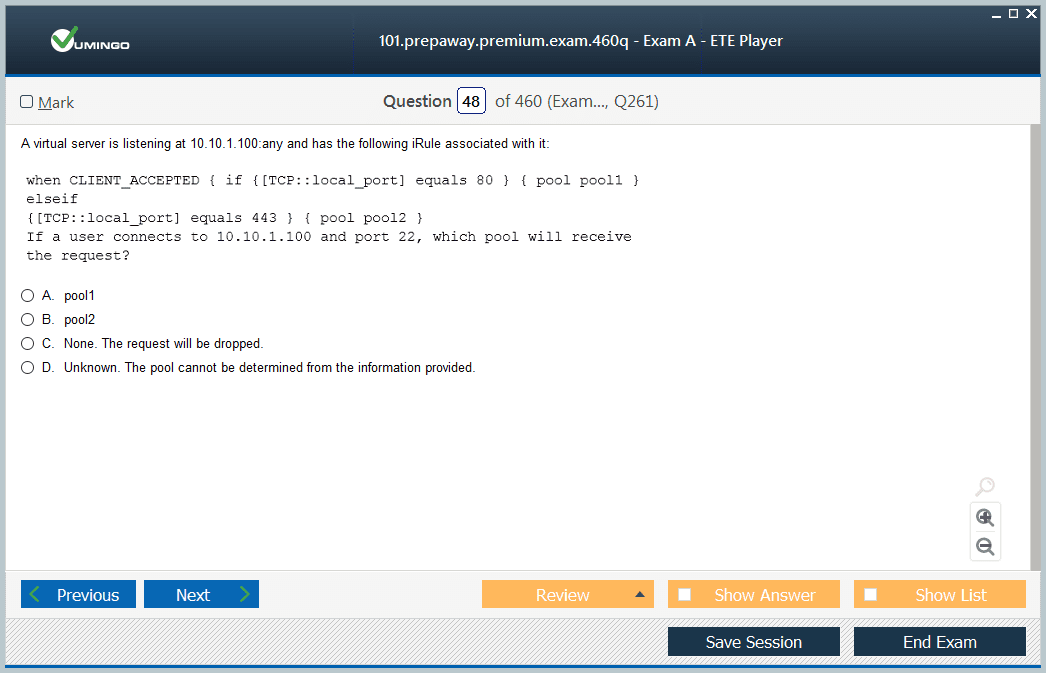

Traffic management policies enable administrators to implement sophisticated routing decisions based on comprehensive request analysis beyond simple load distribution algorithms. F5 iRules provide programmatic control through Tcl scripting, allowing inspection and manipulation of traffic at various processing stages. Policy-based routing can direct requests to specific server pools based on HTTP headers, URI patterns, client geography, time of day, or any accessible request attribute. Understanding policy precedence and processing order proves essential for implementing complex logic without unintended consequences or performance degradation. Modern F5 platforms augment traditional iRules with Local Traffic Policies, which offer GUI-based configuration for common scenarios and simplify administrative tasks.
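Why processing order matters can be shown with a first-match rule list. This is a Python sketch of the concept only, not iRules or Local Traffic Policy syntax; `Rule`, `route`, and the pool names are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Rule:
    name: str
    matches: Callable[[dict], bool]   # predicate over a request dict
    pool: str


def route(request, rules, default_pool):
    """Return the pool of the first rule whose condition matches."""
    for rule in rules:
        if rule.matches(request):
            return rule.pool
    return default_pool


rules = [
    Rule("api", lambda r: r["path"].startswith("/api/"), "api_pool"),
    Rule("mobile", lambda r: "Mobile" in r["headers"].get("User-Agent", ""), "mobile_pool"),
]

# This request satisfies both rules; only the ordering decides the outcome.
request = {"path": "/api/v1/items", "headers": {"User-Agent": "Mobile Safari"}}
chosen = route(request, rules, default_pool="web_pool")
swapped = route(request, list(reversed(rules)), default_pool="web_pool")
```

Reversing the list sends the same request to a different pool, which is the kind of unintended consequence that careful precedence planning avoids.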

Ensemble methods in machine learning share conceptual similarities with load balancing approaches in application delivery, distributing workload for improved outcomes. Exploring bagging in machine learning implementation reveals parallel strategies for distributing workload across multiple processors to improve overall system performance and reliability. These cross-domain insights enrich professional perspective and enable creative problem-solving approaches. Professionals who recognize patterns across different technology domains develop innovative solutions. The application of concepts from diverse fields strengthens analytical capabilities and expands solution possibilities.

SSL Offloading and Encryption Management for Performance Optimization

SSL offloading transfers encryption processing from backend servers to the ADC, reducing server computational burden while centralizing certificate management functions. F5 platforms excel at high-performance SSL processing, supporting thousands of concurrent encrypted connections through dedicated hardware acceleration components. Understanding SSL profiles, cipher suites, and protocol versions enables administrators to balance security requirements against performance constraints and client compatibility. Client-side and server-side SSL processing can be independently configured, allowing encrypted external communications while using unencrypted backend traffic within trusted network segments. Certificate management features including automated renewal, wildcard certificates, and Subject Alternative Names simplify administrative overhead while maintaining security.
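The idea of separate client-side and server-side TLS settings can be sketched with Python's standard `ssl` module. This is an analogy for the concept, not BIG-IP SSL profile configuration; the cipher string and the commented-out certificate file names are illustrative assumptions.

```python
import ssl

# Client-facing context: the listener that terminates TLS from users.
client_side = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
client_side.minimum_version = ssl.TLSVersion.TLSv1_2   # refuse older protocols
client_side.set_ciphers("ECDHE+AESGCM")                # restrict TLS 1.2 suites
# client_side.load_cert_chain("server.crt", "server.key")  # hypothetical files

# Server-facing context: used only if traffic is re-encrypted to the backend.
# With pure offloading this context is skipped and the backend sees plain HTTP.
server_side = ssl.create_default_context()
server_side.minimum_version = ssl.TLSVersion.TLSv1_2

offered = [c["name"] for c in client_side.get_ciphers()]
```

Raising the minimum version or narrowing the cipher list improves security but can lock out older clients, which is the trade-off described above.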

Statistical regression models underlying machine learning applications share mathematical foundations with traffic prediction algorithms used in application delivery systems. Studying backward elimination method in machine learning illuminates systematic approaches to identifying significant variables, which translates to determining critical factors affecting application performance. This analytical rigor improves troubleshooting effectiveness and root cause identification. Professionals who understand statistical analysis methods approach problems more systematically. The application of quantitative analysis to infrastructure management elevates decision quality beyond subjective assessment.

Connection Pooling and Backend Server Resource Management

Connection pooling maintains persistent connections between ADCs and backend servers, reducing overhead associated with repeated connection establishment and teardown operations. This optimization proves particularly valuable for applications utilizing database connections or other resource-intensive protocols that benefit from connection reuse. F5 platforms intelligently manage connection pools, balancing the performance benefits of connection reuse against resource consumption from maintaining idle connections. Understanding pool member configuration including connection limits, ratio weights, and priority groups enables precise control over traffic distribution and resource allocation. Dynamic pool member management responds to changing conditions, automatically adjusting traffic distribution based on server health, capacity, and performance metrics.
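Priority groups can be illustrated with a simplified selection function: traffic goes to the highest-priority available members, and lower-priority groups are added only when too few remain. This is a sketch of one reading of that behavior; `active_members` and the tuple layout are hypothetical.

```python
def active_members(members, min_active):
    """Select members by priority group.

    `members` is a list of (name, priority, available) tuples. Groups are
    added from highest to lowest priority until at least `min_active`
    available members have been collected.
    """
    selected = []
    for priority in sorted({p for _, p, _ in members}, reverse=True):
        selected += [name for name, p, up in members if p == priority and up]
        if len(selected) >= min_active:
            break
    return selected


healthy = [("a", 10, True), ("b", 10, True), ("c", 5, True), ("d", 5, True)]
degraded = [("a", 10, True), ("b", 10, False), ("c", 5, True), ("d", 5, True)]

normal = active_members(healthy, min_active=2)
fallback = active_members(degraded, min_active=2)
```

In the degraded case the standby group joins the rotation automatically once the primary group drops below the threshold.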

Predictive analytics capabilities increasingly inform infrastructure capacity planning and performance optimization decisions across modern data centers. Reviewing simple linear regression in machine learning provides foundational concepts applicable to forecasting traffic patterns and resource requirements in application delivery environments. These quantitative methods support proactive infrastructure scaling before capacity constraints impact user experience. Professionals who leverage predictive analytics anticipate problems before they occur. The transition from reactive to proactive infrastructure management represents operational maturity.

Application Security Features Integrated Within Modern ADC Platforms

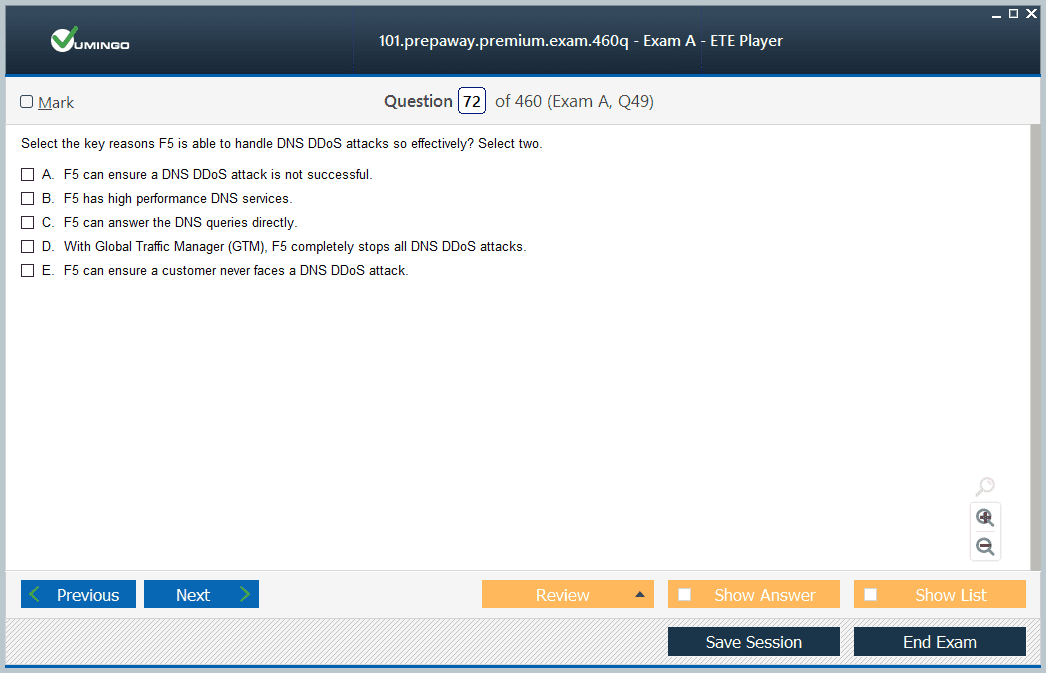

Modern ADCs incorporate comprehensive security features that protect applications from diverse threats while maintaining performance and availability standards. Web Application Firewall functionality inspects HTTP traffic for malicious patterns, blocking attacks including SQL injection, cross-site scripting, and protocol violations. DDoS mitigation capabilities detect and mitigate volumetric attacks, protecting both the ADC infrastructure and backend applications from resource exhaustion. Bot detection distinguishes legitimate users from automated threats, preventing credential stuffing, content scraping, and resource exhaustion attacks. Understanding security policy configuration, including positive and negative security models, enables administrators to balance protection against false positives. Integration with threat intelligence feeds provides dynamic protection against emerging threats identified through global monitoring.
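The difference between negative and positive security models can be sketched as follows. The signatures and parameter rules are deliberately tiny, hypothetical examples; a production WAF policy is far more extensive.

```python
import re

# Negative model: block requests that match known-bad signatures.
NEGATIVE_SIGNATURES = [
    re.compile(r"(?i)<script\b"),
    re.compile(r"(?i)\bunion\s+select\b"),
]

# Positive model: allow only parameters that match an expected format.
POSITIVE_RULES = {
    "id": re.compile(r"\d{1,10}"),
    "lang": re.compile(r"[a-z]{2}"),
}


def negative_model_allows(params):
    """Allow unless any parameter value matches a known attack signature."""
    return not any(
        sig.search(value) for value in params.values() for sig in NEGATIVE_SIGNATURES
    )


def positive_model_allows(params):
    """Allow only if every parameter is known and fully matches its rule."""
    return all(
        name in POSITIVE_RULES and POSITIVE_RULES[name].fullmatch(value) is not None
        for name, value in params.items()
    )


benign = {"id": "42", "lang": "en"}
known_attack = {"id": "1 UNION SELECT password FROM users"}
unknown_attack = {"id": "1 OR 1=1"}   # not covered by the signatures above
```

The negative model lets `unknown_attack` through because no signature covers it, while the positive model rejects it. The cost is that the positive model also rejects any legitimate parameter nobody remembered to declare, which is where false positives come from.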

Artificial intelligence applications increasingly rely on properly annotated training data to achieve desired accuracy levels in production systems. Examining how image annotation contributes to machine learning foundations reveals parallels with how proper traffic classification enables ADCs to make intelligent routing decisions. Both domains depend on accurate categorization for optimal performance and desired outcomes. Professionals who understand classification principles apply them effectively across different contexts. The fundamental importance of accurate categorization transcends specific technology implementations.

High Availability Architectures and Failover Mechanisms

High availability configurations ensure continuous application access despite component failures through redundant ADC deployments and automated failover mechanisms. F5 platforms support active-standby and active-active deployment models, each offering distinct advantages regarding resource utilization and complexity. Configuration synchronization maintains consistency across device pairs, ensuring seamless failover without service disruption or configuration drift. Understanding connection mirroring and persistence mirroring capabilities enables administrators to determine appropriate trade-offs between failover speed and system overhead. Failure detection mechanisms such as network failover heartbeats and hard-wired failover, combined with connection state synchronization, ensure rapid detection of and response to failures. Implementing geographically dispersed high availability architectures provides protection against site-level disasters while enabling localized traffic distribution.

Risk management certifications provide valuable frameworks for assessing and mitigating infrastructure vulnerabilities through systematic analysis methodologies. Investigating career excellence in IT risk management with CRISC certification reveals systematic approaches to evaluating application delivery risks and implementing appropriate controls. This structured methodology enhances decision-making quality by ensuring comprehensive risk consideration. Professionals who apply formal risk management frameworks make better-informed infrastructure decisions. The integration of risk analysis into technical planning creates more resilient systems.

Performance Optimization Techniques for Maximum Application Responsiveness

Performance optimization encompasses numerous techniques that collectively minimize latency and maximize throughput for delivered applications across diverse network conditions. TCP optimization features including window scaling, selective acknowledgments, and congestion control algorithms reduce protocol overhead and accelerate data transfer. HTTP compression reduces bandwidth consumption and improves page load times, particularly for text-based content transmitted across constrained networks. Content caching stores frequently accessed resources on the ADC, eliminating backend server processing for repeated requests and reducing origin load. Connection multiplexing consolidates multiple client connections into fewer backend connections, reducing server resource consumption and improving scalability. Understanding when to apply each optimization technique based on application characteristics and network conditions maximizes performance gains.
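The bandwidth effect of HTTP compression on text content is easy to demonstrate with Python's standard `gzip` module. The sample body below is an artificial, highly repetitive HTML fragment, so the ratio it produces is better than typical real pages would see.

```python
import gzip

# Artificial, repetitive text payload standing in for an HTML response body.
body = ("<li class='item'>Application delivery</li>\n" * 200).encode("utf-8")

compressed = gzip.compress(body)
ratio = len(compressed) / len(body)   # fraction of the original size

# Decompression restores the exact original bytes.
restored = gzip.decompress(compressed)
```

Already-compressed formats such as JPEG images or video gain little from this, which is why compression is usually enabled only for text-based content types.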

Online education platforms increasingly provide accessible, cost-effective training for IT professionals pursuing certification goals across diverse technology domains. Exploring affordable online education websites to watch identifies valuable resources for supplementing official F5 training materials with diverse learning approaches. This multi-source strategy accommodates different learning styles and budgets while providing comprehensive coverage. Professionals who leverage diverse learning resources develop deeper understanding than single-source approaches enable. The democratization of education through online platforms expands access to professional development opportunities.

Monitoring and Analytics for Proactive Infrastructure Management

Comprehensive monitoring and analytics capabilities enable administrators to understand application delivery performance, identify emerging issues, and optimize configurations based on empirical data. F5 platforms provide extensive logging and statistics covering traffic volumes, connection counts, server response times, and numerous other metrics. Real-time dashboards visualize current system status, while historical trending reveals patterns and capacity planning requirements for future growth. Integration with external monitoring platforms including SNMP, syslog, and modern observability tools enables centralized infrastructure management across heterogeneous environments. Understanding which metrics most strongly correlate with application performance and user experience focuses monitoring efforts on actionable intelligence. Advanced analytics including traffic pattern analysis and anomaly detection provide early warning of potential issues.

Agile methodologies increasingly influence IT project management, including infrastructure deployment and optimization initiatives across organizations. Reviewing story points in Agile estimation provides frameworks for planning certification study efforts and infrastructure implementation projects with appropriate scope and timeline estimates. These project management skills complement technical expertise and improve delivery predictability. Professionals who combine technical skills with project management capabilities advance more rapidly. The integration of agile practices into infrastructure management improves responsiveness and stakeholder satisfaction.

API-Based Automation and Infrastructure as Code Integration

Modern application delivery management increasingly relies on automation and programmatic configuration through comprehensive APIs. F5 platforms provide REST APIs that enable integration with orchestration tools, configuration management systems, and custom automation scripts. Understanding API authentication, endpoint structure, and request formatting enables administrators to automate repetitive tasks and implement dynamic configuration changes. Infrastructure as Code approaches, which treat ADC configuration as version-controlled code, provide consistency, repeatability, and audit trails for changes. Integration with CI/CD pipelines enables application delivery configuration to evolve alongside application code, maintaining alignment between infrastructure and software. Terraform providers, Ansible modules, and other ecosystem tools simplify automated F5 management through familiar interfaces.
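Authentication, endpoint structure, and request formatting come together when building a single API call. The sketch below only constructs the request and never sends it. The `/mgmt/tm/ltm/pool` path and the payload shape follow the commonly documented iControl REST layout, but treat both as assumptions to verify against the API reference for your version; the host, credentials, and `build_pool_request` helper are hypothetical.

```python
import base64
import json
import urllib.request


def build_pool_request(host, user, password, pool_name, members):
    """Build (but do not send) a POST request that would create a pool."""
    payload = {"name": pool_name, "members": [{"name": m} for m in members]}
    credentials = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        url=f"https://{host}/mgmt/tm/ltm/pool",        # assumed endpoint path
        data=json.dumps(payload).encode("utf-8"),
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {credentials}",   # HTTP Basic auth
        },
    )


req = build_pool_request(
    host="bigip.example.com",          # hypothetical management address
    user="admin",
    password="example-password",       # placeholder; load secrets from a vault
    pool_name="web_pool",
    members=["10.0.0.11:80", "10.0.0.12:80"],
)
body = json.loads(req.data)
```

Keeping request construction in a pure function like this makes it straightforward to unit test automation code without touching a live device.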

Marketing professionals and IT specialists both face skepticism about their respective fields' complexity and value from outside observers. Examining whether digital marketing is overrated reveals common misconceptions that parallel assumptions about application delivery being simple load balancing. Both domains involve sophisticated expertise that outsiders frequently underestimate based on superficial understanding. Professionals who educate stakeholders about domain complexity gain greater organizational support. The challenge of communicating technical complexity to non-technical audiences transcends specific professional domains.

IPv6 Support and Dual-Stack Implementation Strategies

IPv6 adoption continues accelerating, requiring application delivery infrastructure to support both legacy IPv4 and modern IPv6 addressing schemes. F5 platforms provide comprehensive dual-stack capabilities including IPv6 virtual servers, pool members, and NAT64 translation for mixed environments. Understanding IPv6 addressing, routing, and protocol differences compared to IPv4 ensures proper configuration and troubleshooting in production environments. Transition mechanisms including tunneling and translation enable gradual migration from IPv4-only to dual-stack or IPv6-only deployments. Proper IPv6 implementation positions infrastructure for long-term scalability while maintaining backward compatibility with existing systems. As IPv6 adoption increases, proficiency in dual-stack deployment becomes increasingly critical for application delivery professionals.
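NAT64 translation relies on embedding an IPv4 address in the low 32 bits of an IPv6 prefix. The mapping can be sketched with Python's `ipaddress` module, assuming the well-known prefix `64:ff9b::/96` from RFC 6052; the helper names are hypothetical, and a deployment may use a network-specific prefix instead.

```python
import ipaddress

# RFC 6052 well-known prefix; deployments may use a network-specific one.
NAT64_PREFIX = ipaddress.IPv6Network("64:ff9b::/96")


def to_nat64(ipv4: str) -> ipaddress.IPv6Address:
    """Embed an IPv4 address in the low 32 bits of the NAT64 prefix."""
    v4 = ipaddress.IPv4Address(ipv4)
    return ipaddress.IPv6Address(int(NAT64_PREFIX.network_address) | int(v4))


def from_nat64(ipv6: str) -> ipaddress.IPv4Address:
    """Recover the embedded IPv4 address from a NAT64-mapped address."""
    v6 = ipaddress.IPv6Address(ipv6)
    if v6 not in NAT64_PREFIX:
        raise ValueError("address is outside the NAT64 prefix")
    return ipaddress.IPv4Address(int(v6) & 0xFFFFFFFF)


mapped = to_nat64("192.0.2.1")
original = from_nat64(str(mapped))
```

This lets an IPv6-only client reach an IPv4-only server: the client connects to the mapped address and the translator extracts the real IPv4 destination.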

Standardized testing preparation strategies applicable to academic assessments translate effectively to professional certification study approaches across domains. Comparing SAT math versus ACT math demonstrates how understanding exam formats and question types improves performance, whether for academic entrance or professional credentialing. These test-taking strategies enhance certification success rates by optimizing time allocation and answer strategies. Professionals who approach certification exams strategically achieve better outcomes than raw knowledge alone enables. The application of test-taking strategies represents practical wisdom complementing technical preparation.

DNS Integration and Global Server Load Balancing

DNS integration enables ADCs to participate in global traffic management, directing users to optimal application instances based on geography, server health, and capacity. F5 BIG-IP DNS, formerly known as Global Traffic Manager (GTM), provides sophisticated DNS-based load balancing that considers multiple factors when resolving queries. Understanding DNS resolution flow, including authoritative nameservers, delegation, and caching, enables proper GTM configuration and troubleshooting. Health-based DNS responses automatically redirect traffic away from failed sites, providing geographic failover capabilities without user intervention. Topology-based routing directs users to the nearest datacenters, reducing latency and improving user experience across distributed deployments. Integration with application-layer health monitoring ensures DNS directs users only to fully functional application instances.
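Topology-based resolution combined with health-based failover can be sketched as a preference list per client region. The `resolve` function, the site names, and the region mapping are hypothetical; real GSLB decisions weigh more inputs, such as capacity and measured latency.

```python
def resolve(client_region, sites, preference):
    """Return the VIP of the first healthy site in the client's preference list."""
    for name in preference.get(client_region, []):
        if sites[name]["healthy"]:
            return sites[name]["vip"]
    # No preferred site is healthy (or region unknown): use any healthy site.
    for site in sites.values():
        if site["healthy"]:
            return site["vip"]
    return None


sites = {
    "fra": {"vip": "192.0.2.10", "healthy": False},      # simulated outage
    "nyc": {"vip": "198.51.100.10", "healthy": True},
}
preference = {"eu": ["fra", "nyc"], "us": ["nyc", "fra"]}

eu_answer = resolve("eu", sites, preference)
us_answer = resolve("us", sites, preference)
```

European clients would normally receive the Frankfurt address; with that site marked unhealthy, the answer shifts to New York without any client-side change, subject to DNS caching and TTLs.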

Military aptitude assessments demonstrate how standardized scoring systems evaluate diverse skill sets for appropriate role placement within organizations. Investigating how ASVAB scores impact enlistment reveals parallels with how certification scores validate technical competency for specific professional roles. Both systems match individual capabilities with organizational requirements to optimize placement. Professionals who understand assessment methodologies prepare more effectively for credentialing examinations. The standardization of competency validation enables efficient matching of skills to opportunities.

Troubleshooting Methodologies for Rapid Issue Resolution

Effective troubleshooting requires systematic approaches that quickly isolate problems within complex application delivery environments containing multiple potential failure points. Understanding traffic flow through virtual servers, pools, and backend servers enables administrators to identify failure points methodically. Packet capture and analysis tools reveal protocol-level issues that higher-level monitoring might miss in complex interactions. Log analysis identifies patterns correlating with performance degradation or failures, enabling root cause identification. Baseline performance metrics provide reference points for identifying abnormal behavior during incident investigation. Methodical elimination of variables through configuration changes and component isolation narrows problem scope efficiently. Documentation of issue symptoms, analysis steps, and resolutions builds institutional knowledge for future reference.

Medical school admissions exams require comprehensive preparation across diverse subject areas, similar to the broad technical knowledge required for certification success. Reviewing MCAT reproduction and development practice questions illustrates the value of targeted practice in challenging subject areas, which applies equally to mastering difficult F5 concepts through focused study. This deliberate practice approach accelerates skill development by concentrating effort on weak areas. Professionals who identify and address knowledge gaps systematically achieve better outcomes. The strategic allocation of study time to challenging topics maximizes preparation efficiency.

Capacity Planning and Resource Forecasting for Scalable Infrastructure

Capacity planning ensures application delivery infrastructure scales appropriately with business growth and changing traffic patterns over time. Understanding current resource utilization, including CPU, memory, network bandwidth, and connection counts, provides a baseline for future projections. Traffic trending analysis reveals growth patterns and seasonal variations requiring capacity adjustments to maintain performance. Performance testing under load identifies bottlenecks and maximum capacity thresholds before they impact production users. Hardware selection balances current requirements against anticipated growth, avoiding both over-provisioning waste and under-provisioning risks. Virtual edition deployments in cloud environments enable dynamic scaling in response to demand changes. Proper capacity planning prevents performance degradation as traffic grows while optimizing infrastructure investment.
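Traffic trending can be turned into a rough forecast with an ordinary least-squares line. The monthly figures and the 30,000-connection capacity limit below are invented for illustration, and a straight line ignores the seasonal variation mentioned above.

```python
def linear_fit(xs, ys):
    """Ordinary least-squares fit; returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Hypothetical peak concurrent connections observed in months 1 through 4.
months = [1, 2, 3, 4]
peak_connections = [10000, 12000, 14000, 16000]
capacity_limit = 30000   # assumed platform limit, for illustration only

slope, intercept = linear_fit(months, peak_connections)
month_at_capacity = (capacity_limit - intercept) / slope
```

With this data the trend line reaches the assumed limit in month 11, which gives a planning horizon for ordering hardware or adding virtual edition instances.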

Standardized test preparation requires understanding examination structure and content scope before developing study strategies for optimal results. Examining comprehensive PSAT insights reveals parallels with analyzing F5 exam blueprints to identify critical study areas and allocate preparation time effectively. This strategic approach maximizes certification success probability by focusing effort appropriately. Professionals who understand exam structures prepare more efficiently than those who study randomly. The importance of strategic preparation transcends specific examination types.

Mental Preparation and Exam Success Strategies for Certification Achievement

Mental preparation significantly impacts certification exam performance beyond pure technical knowledge and study time invested. Developing consistent study routines builds familiarity with material through spaced repetition and active recall techniques. Practice exams simulate testing conditions, reducing anxiety while identifying knowledge gaps that require additional focus before the examination. Time management strategies during exams ensure adequate attention to all questions without rushing or dwelling excessively on difficult items. Stress reduction techniques including proper sleep, nutrition, and exercise optimize cognitive performance during preparation and examination periods. Understanding exam format, question types, and scoring methodology removes uncertainty and enables a strategic approach to answering. Confidence building through thorough preparation and realistic self-assessment transforms exam anxiety into focused performance.

Mathematical sections of standardized tests demand both content knowledge and test-taking strategies for optimal performance under time constraints. Exploring mental preparation for SAT math success provides insights into managing test anxiety and maintaining focus during challenging assessments. These psychological strategies apply equally to professional certification examinations requiring sustained concentration. Professionals who manage examination stress effectively demonstrate their knowledge more completely. The mental preparation component of certification success often receives insufficient attention despite significant impact.

Continued Professional Development Beyond Initial Certification Achievement

The F5 101 certification marks the beginning of professional development in application delivery rather than its conclusion. Advanced certifications, including F5 201 and specialist credentials, deepen expertise in specific technology areas and solutions. Hands-on experience implementing and managing production F5 deployments provides practical knowledge that supplements theoretical certification preparation. Community participation through user groups, forums, and conferences exposes professionals to diverse implementation approaches and emerging best practices. Because platforms, protocols, and security threats change rapidly, continuous learning is required to stay relevant. Cross-training in complementary technologies, including network security, cloud platforms, and automation tools, increases professional versatility and value.

Graduate school admissions exams assess analytical and communication skills essential for academic success beyond narrow content knowledge. Investigating GMAT verbal preparation techniques demonstrates how developing strong communication abilities enhances professional effectiveness beyond pure technical knowledge. These well-rounded skills differentiate exceptional IT professionals from merely competent technicians. Professionals who communicate effectively advance more rapidly and influence organizational decisions. The combination of technical expertise and communication skills creates maximum professional impact.

Enterprise Hardware Solutions and Certification Preparation Resources

Enterprise hardware manufacturers provide specialized certifications validating expertise in their product ecosystems, complementing broader application delivery knowledge with vendor-specific skills. Vendor-specific credentials demonstrate proficiency with particular platforms, architectures, and management tools that organizations deploy in production environments. Understanding vendor certification pathways helps professionals align their development with career goals and market demand in their region. Many employers specifically seek candidates holding certifications from manufacturers whose products comprise their infrastructure to ensure operational expertise. Preparation resources vary significantly across vendors, requiring research to identify high-quality materials that accurately reflect current exam objectives.

Professionals seeking comprehensive preparation materials often explore specialized certification resources that provide vendor-specific content and practice examinations. Accessing HP certification exams provides insights into enterprise hardware vendor credentialing programs that validate expertise with specific product families and solutions. These specialized credentials complement general infrastructure knowledge with practical implementation skills for specific platforms. Professionals holding both general and vendor-specific credentials demonstrate comprehensive expertise. The combination of broad knowledge and deep specialization maximizes professional marketability.

Human Resources Professional Development and Cross-Functional Expertise

Modern IT professionals increasingly benefit from cross-functional knowledge that enables effective collaboration with non-technical stakeholders across organizations. Human resources certifications validate expertise in talent management, organizational development, and compliance areas that intersect with IT operations. Understanding HR perspectives improves IT professionals' ability to communicate technical concepts to business audiences and participate in strategic planning. Security professionals particularly benefit from HR knowledge when implementing access controls, conducting security awareness training, and managing insider threat risks. Cross-functional expertise differentiates professionals capable of bridging technical and business domains. Organizations increasingly value employees who combine deep technical skills with broader business understanding.

Exploring diverse certification domains broadens professional perspective and reveals transferable concepts that apply across disciplines. Reviewing HRCI certification exams demonstrates how human resources professionals pursue credentialing similar to IT specialists, with analogous study requirements and career benefits. This parallel structure across professional domains highlights the universal value of validated expertise. Professionals who understand multiple disciplines contribute more effectively to organizational success. Recognizing common patterns across different fields strengthens strategic thinking.

Global Telecommunications Equipment Vendor Certification Programs

Major telecommunications equipment vendors maintain extensive certification programs validating expertise with their networking, security, and cloud infrastructure solutions. These credentials span entry-level through expert tiers, enabling professionals to demonstrate progressive skill development across career stages. Vendor certifications often focus on specific product lines, technologies, or solution areas rather than general networking concepts. Understanding vendor-specific architectures, management interfaces, and operational practices proves essential for professionals working in environments that deploy a particular manufacturer's equipment. Many vendor certification programs include hands-on lab components that ensure practical competency beyond theoretical knowledge. Geographic regions sometimes exhibit a preference for particular vendors, making regional market awareness valuable for career planning.

Telecommunications infrastructure increasingly underpins application delivery systems, making networking vendor expertise valuable for F5 professionals working in complex environments. Investigating Huawei certification exams reveals another major vendor's credentialing approach, offering alternatives or complements to other networking certifications. These parallel certification programs show that there are multiple paths to demonstrating networking expertise. Professionals who understand multiple vendor platforms increase their versatility and employment opportunities. Diversifying vendor knowledge reduces dependence on a single manufacturer's ecosystem.

Administrative Professional Credentials and Organizational Efficiency

Administrative professional certifications validate expertise in office management, communication, technology utilization, and organizational operations across business functions. While seemingly distant from application delivery, these credentials highlight transferable skills including project coordination, documentation, and stakeholder communication that benefit technical professionals. Understanding administrative best practices improves IT professionals' effectiveness in creating documentation, managing projects, and coordinating with diverse teams. Many IT roles involve significant administrative responsibilities beyond pure technical work, making these complementary skills valuable. Cross-training in administrative practices positions technical professionals for management roles requiring both technical and operational expertise.

Professional development extends beyond narrow technical specialization to encompass broader business and operational skills that enhance overall effectiveness. Examining IAAP certification exams illustrates how administrative professionals pursue structured credentialing, demonstrating the universal value of validated expertise across diverse career fields. This pattern reinforces the importance of formal credentials across professional domains. Professionals who develop well-rounded skill sets tend to advance more successfully than narrow specialists. Integrating diverse capabilities makes them more valuable contributors to their organizations.

Privacy and Data Protection Specialist Certifications

Privacy and data protection certifications address growing organizational focus on compliance with regulations including GDPR, CCPA, and industry-specific requirements. These credentials validate expertise in privacy program development, data governance, risk assessment, and regulatory compliance activities. Application delivery professionals benefit from privacy knowledge when implementing solutions that process personal information, requiring appropriate security controls and data handling practices. Understanding privacy principles enables better collaboration with legal and compliance teams during solution design and implementation. Privacy expertise increasingly differentiates professionals as organizations elevate data protection priorities in response to regulations. The intersection of privacy, security, and application delivery creates opportunities for professionals combining expertise across these domains.

Data protection increasingly influences infrastructure design decisions, requiring technical professionals to understand regulatory requirements and privacy principles beyond security. Exploring IAPP certification exams provides insights into specialized privacy credentials that complement security-focused certifications like those from F5. These privacy-specific credentials validate expertise in emerging organizational priorities. Professionals who understand both technical implementation and regulatory requirements deliver more compliant solutions. The integration of privacy knowledge into technical practice becomes increasingly critical.

Microsoft Enterprise Administration and Cloud Platform Expertise

Microsoft enterprise administration certifications validate expertise managing complex Microsoft 365 environments including Exchange, SharePoint, Teams, and integrated security services. Enterprise administrators coordinate multiple workloads, ensuring seamless integration and optimal performance across the Microsoft cloud ecosystem. Understanding Microsoft's service architecture, management interfaces, and operational best practices enables professionals to support organizations heavily invested in Microsoft platforms. These credentials demonstrate capability to manage user identities, implement security policies, monitor service health, and optimize license utilization. Many organizations standardize on Microsoft solutions, creating strong demand for administrators holding current credentials. Integration between Microsoft cloud services and on-premises infrastructure requires hybrid expertise combining cloud and traditional IT skills.

Cloud platform expertise increasingly complements on-premises application delivery knowledge as organizations adopt hybrid architectures spanning multiple environments. Reviewing the Microsoft 365 Certified Enterprise Administrator Expert credential reveals advanced credentialing that demonstrates comprehensive cloud platform mastery. These expert-level credentials validate the ability to manage complex, enterprise-scale deployments. Professionals holding advanced certifications gain access to senior-level opportunities and responsibilities. The progression from associate to expert credentials demonstrates a clear professional development trajectory.

Messaging Infrastructure Administration and Communication Platform Management

Messaging administrator certifications focus specifically on email systems, including Exchange Online, hybrid deployments, and related communication services. Messaging infrastructure is a critical organizational dependency, requiring specialized expertise to ensure reliability, security, and compliance. Understanding message routing, mailbox management, anti-spam and anti-malware protection, and compliance features enables administrators to operate robust email environments. Integration with application delivery infrastructure occurs through load balancing, SSL offloading, and security filtering of email traffic. Specialized messaging expertise complements broader infrastructure knowledge, particularly in organizations where email is the primary communication channel. Migration from on-premises to cloud messaging solutions creates demand for professionals skilled in both environments.

Communication platform management increasingly involves cloud-based services requiring specialized knowledge distinct from general IT infrastructure administration. Investigating Microsoft 365 Certified Messaging Administrator Associate demonstrates focused expertise validation within specific solution domains. These specialized credentials recognize depth of knowledge in particular technology areas. Professionals who specialize in high-demand platforms command premium compensation. The focus on specific solutions enables development of deep expertise.

Modern Desktop Management in Cloud-First Enterprise Environments

Modern desktop administrator certifications address endpoint management using cloud-based tools including Microsoft Intune, Windows Autopilot, and integrated security services. Desktop management has evolved from traditional imaging and software distribution to cloud-driven policy application and zero-touch deployment. Modern device management requires expertise in identity integration, conditional access policies, application deployment, and security baseline implementation. Modern desktop approaches support diverse device types, including Windows, macOS, iOS, and Android, within unified management frameworks. Integration with application delivery infrastructure occurs through client security posture assessment and conditional application access. Organizations transitioning from traditional desktop management to modern approaches require professionals skilled in cloud-based endpoint management.

Endpoint management intersects with application delivery when implementing security controls and ensuring consistent client configurations across diverse devices. Examining Microsoft 365 Certified Modern Desktop Administrator Associate reveals specialized credentials for contemporary device management approaches. These modern credentials reflect evolving best practices in endpoint administration. Professionals who master current management paradigms position themselves for career longevity. The shift from traditional to modern management represents fundamental transformation in IT operations.

Security Administration Across Integrated Cloud Platforms

Security administrator certifications validate expertise implementing and managing security controls across integrated cloud platforms protecting organizational assets. Modern security administration encompasses identity protection, threat management, information protection, and compliance monitoring within cloud environments. Understanding security architecture, incident response, and security operations enables administrators to protect organizations against evolving threats. Integration with application delivery occurs through identity-based access controls, threat intelligence sharing, and coordinated incident response. Security administrators work closely with application delivery teams to implement defense-in-depth strategies protecting both infrastructure and applications. Growing threat landscapes and regulatory requirements create strong demand for security professionals with current credentials and practical experience.

Comprehensive security expertise increasingly requires cloud platform specialization as organizations migrate critical workloads to hosted environments. Reviewing Microsoft 365 Certified Security Administrator Associate illustrates focused security credentialing within specific cloud ecosystems. These platform-specific security credentials validate expertise with particular security tool sets. Professionals who understand platform-specific security capabilities implement more effective controls. The specialization in security domains creates valuable expertise in high-demand areas.

Collaboration Platform Administration for Remote Work Enablement

Teams administrator certifications focus on Microsoft Teams deployment, configuration, and optimization for organizational collaboration in distributed work environments. Teams has evolved from a simple chat application into a comprehensive collaboration platform combining voice, video, chat, and integrated applications. Understanding Teams architecture, voice routing, meeting policies, and compliance features enables administrators to support diverse collaboration scenarios. Network integration, including quality of service, bandwidth management, and firewall configuration, relates directly to application delivery expertise. Teams administrators coordinate with network, security, and application delivery teams to ensure an optimal user experience. Remote work trends accelerated Teams adoption, creating demand for specialized administrators supporting this critical communication platform.

Collaboration platforms increasingly depend on proper network infrastructure and application delivery optimization for acceptable performance in production environments. Investigating Microsoft 365 Certified Teams Administrator Associate reveals specialized expertise requirements for modern collaboration tools. These credentials validate capability to manage platforms enabling distributed workforce productivity. Professionals specializing in collaboration platforms address critical business needs. The importance of collaboration expertise increased dramatically with remote work adoption.

Cloud Fundamentals and Platform Entry-Level Certifications

Cloud fundamentals certifications provide entry points for professionals transitioning into cloud technologies, validating basic knowledge of cloud concepts, services, and architectural principles. These credentials cover core topics including infrastructure as a service, platform as a service, software as a service, and shared responsibility models. Understanding cloud pricing, security, compliance, and service level agreements provides a foundation for deeper specialization in specific platforms. Fundamentals certifications require less extensive preparation than advanced credentials, making them accessible starting points for career development. Many professionals pursue fundamentals certifications on multiple cloud platforms to maintain a vendor-neutral perspective across providers. These entry-level credentials signal commitment to developing cloud expertise and provide structure for continued learning.

Foundation-level cloud certifications provide accessible entry points for expanding expertise beyond traditional infrastructure into modern platforms. Exploring Microsoft Certified Azure Fundamentals demonstrates introductory credentialing options that establish baseline cloud knowledge. These fundamentals credentials create pathways to more advanced specializations. Professionals beginning cloud journeys benefit from structured fundamental knowledge. The establishment of core concepts through fundamentals certifications accelerates subsequent advanced learning.

Business Application Platform Fundamentals and Low-Code Solutions

Business application platform fundamentals certifications validate understanding of customer engagement, finance, operations, and other business-focused cloud applications. These credentials address platforms that enable rapid application development through low-code or no-code approaches accessible to business users. Understanding business application capabilities, integration patterns, and deployment models helps professionals support organizations implementing these solutions. Business application platforms increasingly integrate with traditional infrastructure through APIs, data synchronization, and identity federation mechanisms. Technical professionals supporting business application deployments require sufficient platform knowledge to enable integration and troubleshooting. Fundamentals certifications provide an accessible introduction to business application technologies without requiring extensive platform-specific experience.

Business-focused cloud platforms increasingly integrate with traditional infrastructure, requiring IT professionals to understand multiple technology domains simultaneously. Reviewing Microsoft Certified Dynamics 365 Fundamentals illustrates business application credentialing distinct from infrastructure-focused certifications. These business application credentials recognize different expertise areas within cloud ecosystems. Professionals who understand both technical infrastructure and business applications deliver more comprehensive solutions. The convergence of technical and business platforms requires broader professional knowledge.

Cloud Infrastructure Administration and Resource Management

Cloud infrastructure administrator certifications validate expertise deploying and managing virtual machines, storage, networking, and identity services in cloud environments. Administrators manage resource lifecycle including provisioning, scaling, monitoring, and optimization to ensure performance and cost effectiveness. Understanding infrastructure as code, automation, and DevOps practices enables modern cloud management approaches that improve consistency. Integration with on-premises infrastructure through hybrid networking and identity federation requires comprehensive understanding of both environments. Cloud administrators coordinate with application teams, security specialists, and business stakeholders to deliver reliable infrastructure services. Strong demand exists for cloud administrators with practical experience and current certifications as organizations expand cloud adoption.

Cloud infrastructure skills increasingly complement traditional on-premises expertise as organizations adopt hybrid architectures spanning multiple environments. Investigating Microsoft Certified Azure Administrator Associate reveals practical cloud infrastructure management credentialing. These administrator-level credentials validate operational expertise with cloud platforms. Professionals who master cloud administration address critical organizational needs. The transition from on-premises to cloud infrastructure requires deliberate skill development.

Artificial Intelligence Service Integration and Machine Learning Operations

AI engineer certifications validate expertise implementing and managing artificial intelligence and machine learning services within cloud platforms. AI engineers design solutions that use cognitive services, custom machine learning models, and the integrated AI capabilities these platforms provide. Understanding data preparation, model training, deployment, and monitoring enables professionals to operationalize AI solutions that deliver business value. Integration with application delivery infrastructure occurs through API management, authentication, and capacity planning for AI workloads. AI expertise increasingly differentiates professionals as organizations seek to derive insights and automation from data assets. The combination of cloud expertise, programming skills, and AI knowledge positions professionals for high-demand roles in emerging technology areas.

Artificial intelligence capabilities increasingly integrate with traditional applications, requiring infrastructure professionals to understand AI service deployment and management. Examining Microsoft Certified Azure AI Engineer Associate demonstrates specialized AI implementation credentials. These AI-focused credentials validate expertise in emerging technology domains. Professionals who develop AI expertise position themselves for future opportunities. The integration of AI capabilities into mainstream applications accelerates demand for AI skills.

Data Platform Engineering and Analytics Infrastructure

Data engineer certifications validate expertise designing and implementing data storage, processing, and analytics solutions in cloud environments. Data engineers build pipelines that ingest, transform, and prepare data for analysis and machine learning applications. Understanding data lake architectures, data warehousing, streaming analytics, and data security enables comprehensive data platform implementation. Integration with application delivery occurs through API-based data access, caching strategies, and performance optimization for data-intensive operations. Organizations increasingly recognize data as a strategic asset, creating demand for engineers capable of building robust data platforms. Data engineering combines programming skills, infrastructure knowledge, and an understanding of analytics requirements to deliver valuable business capabilities.

Data platform expertise is an increasingly critical specialization as organizations develop data-driven decision-making capabilities across business functions. Reviewing the Microsoft Certified Azure Data Engineer Associate credential illustrates data-focused credentialing distinct from general infrastructure administration. These data engineering credentials validate specialized expertise in data management. Professionals who master data platforms address fundamental business needs. The growing importance of data analytics drives demand for data engineering expertise.

Contact Center Platform Specialist Certifications and Customer Experience Solutions

Contact center platform certifications validate expertise implementing and managing cloud-based customer engagement solutions that integrate voice, digital channels, and workflow automation. These specialized credentials demonstrate proficiency with platforms that route customer interactions, provide agent tools, and deliver analytics for contact center optimization. Understanding omnichannel routing, workforce management integration, and quality management capabilities enables professionals to support customer service operations effectively. Integration with enterprise infrastructure occurs through network quality of service, security controls, and identity management systems. Organizations increasingly deploy cloud-based contact center solutions, creating demand for specialists who understand both the platform and underlying infrastructure requirements.

Cloud contact center solutions require specialized knowledge distinct from traditional telephony or generic cloud platforms used in enterprise environments. Accessing GCP GCX exam preparation materials provides insights into vendor-specific contact center credentialing programs. These specialized credentials validate deep expertise in customer engagement platforms. Professionals who specialize in contact center technologies address specific organizational needs. The evolution toward cloud-based contact centers creates new specialization opportunities.

Automated Routing and Campaign Management Expertise

Automated routing and campaign management certifications focus on configuring intelligent routing algorithms, outbound campaign strategies, and customer journey optimization within contact center platforms. These credentials validate ability to design routing strategies that balance customer experience, agent efficiency, and business objectives simultaneously. Understanding skills-based routing, predictive dialing, progressive campaigns, and compliance requirements enables professionals to implement sophisticated contact center operations. Integration with CRM systems, analytics platforms, and business intelligence tools provides comprehensive customer engagement capabilities. Specialists in routing and campaign management combine technical platform knowledge with business process understanding. Organizations seeking to optimize customer interactions require professionals who can translate business requirements into effective platform configurations.
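As a rough illustration of the skills-based routing idea mentioned above, the following Python sketch picks the longest-idle available agent who holds every skill an interaction requires. The `Agent` class, the `route` function, and the sample skill names are hypothetical and do not correspond to any vendor's platform API; real platforms layer priorities, proficiency levels, and queue timeouts on top of this basic rule.

```python
from dataclasses import dataclass


@dataclass
class Agent:
    # Hypothetical agent record, not a platform data model.
    name: str
    skills: frozenset
    available: bool = True
    idle_seconds: int = 0


def route(required_skills, agents):
    """Return the longest-idle available agent with all required skills.

    Returns None when no agent qualifies, in which case a real system
    would typically keep the interaction queued.
    """
    needed = frozenset(required_skills)
    candidates = [a for a in agents if a.available and needed <= a.skills]
    if not candidates:
        return None
    return max(candidates, key=lambda a: a.idle_seconds)


agents = [
    Agent("ana", frozenset({"billing", "spanish"}), True, 120),
    Agent("ben", frozenset({"billing"}), True, 300),
    Agent("cy", frozenset({"billing", "spanish"}), False, 900),
]

chosen = route({"billing", "spanish"}, agents)
print(chosen.name if chosen else "queued")
```

Choosing the longest-idle agent is only one possible tie-breaking rule; it spreads work evenly, whereas a rule based on proficiency would favor customer experience over agent fairness.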

Routing optimization is a specialized domain requiring both technical proficiency and business process understanding for successful implementation. Exploring GCX ARC exam resources demonstrates focused expertise validation within the contact center routing specialization. These routing-specific credentials recognize niche expertise within the broader contact center domain. Professionals who master routing optimization deliver measurable business value. The sophistication of modern routing capabilities creates specialization opportunities.

Customer Data Platform Integration and Analytics

Customer data platform certifications validate expertise integrating customer information across multiple systems, enabling unified customer views and personalized engagement strategies. These credentials demonstrate ability to configure data collection, identity resolution, segmentation, and activation capabilities that power customer experience initiatives. Understanding data privacy requirements, consent management, and regulatory compliance ensures responsible customer data handling in marketing applications. Integration with contact center platforms, marketing automation, and analytics tools creates comprehensive customer engagement ecosystems. Customer data specialists bridge technical implementation and marketing strategy, requiring both technical skills and business acumen. Organizations prioritizing customer experience increasingly invest in customer data platforms, creating demand for integration specialists.

Customer data platform expertise combines technical integration skills with marketing and privacy knowledge for comprehensive customer engagement solutions. Investigating GCX GCD exam materials reveals specialized credentialing for customer data platform professionals. These data-focused credentials validate expertise in emerging marketing technology areas. Professionals who master customer data platforms contribute directly to revenue generation. The increasing importance of customer data creates valuable specialization opportunities.

Scripting and Customization for Contact Center Solutions

Scripting certifications validate expertise in creating custom logic, automations, and integrations within contact center platforms using platform-specific languages. These credentials demonstrate the ability to extend platform capabilities beyond standard configuration through custom code development. Understanding scripting syntax, platform APIs, and integration patterns enables professionals to implement complex business logic and workflows. Custom scripting enables integration with external systems, data enrichment, and specialized routing logic unavailable through standard configuration. Professionals with scripting expertise deliver solutions for unique business requirements that standard platform features alone cannot meet. Organizations with complex requirements seek specialists capable of customizing platforms to meet specific needs.

Platform customization capabilities significantly expand solution possibilities beyond the standard configuration options available through administrative interfaces. Reviewing the GCX SCR exam demonstrates how scripting expertise is validated within contact center platforms. These development-focused credentials recognize programming skills within specialized contexts. Professionals who combine platform knowledge with programming capabilities maximize platform value. The ability to customize platforms differentiates advanced practitioners from basic administrators.

Workforce Management and Optimization

Workforce management certifications validate expertise in forecasting contact volumes, scheduling agents, and optimizing staffing levels to balance service levels and costs. These credentials demonstrate understanding of statistical forecasting, schedule optimization, real-time management, and performance analytics for contact centers. Understanding workforce management principles enables professionals to help organizations maintain service levels while controlling labor costs effectively. Integration with contact center platforms provides data for forecasting and real-time adherence monitoring throughout operational periods. Workforce management specialists combine analytical skills, operational understanding, and technology proficiency to optimize contact center operations. Organizations seeking operational efficiency require professionals who can apply workforce optimization principles using specialized tools.
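The trade-off between service levels and staffing cost described above can be made concrete with the Erlang C queueing formula, a widely used textbook model for contact center staffing. The Python sketch below is a minimal version under that model's usual assumptions (random arrivals, no abandoned contacts, identical agents); the function names and the sample figures are illustrative and are not drawn from any certification syllabus or vendor tool.

```python
import math


def erlang_c(agents, traffic):
    """Probability that a contact has to wait (Erlang C).

    traffic is the offered load in Erlangs (arrival rate x handle time).
    """
    if agents <= traffic:
        return 1.0  # unstable queue: everyone waits
    term = 1.0   # traffic**k / k! for k = 0
    total = 1.0  # running sum for k = 0 .. agents-1
    for k in range(1, agents):
        term *= traffic / k
        total += term
    last = term * traffic / agents             # traffic**N / N!
    top = last * agents / (agents - traffic)
    return top / (total + top)


def service_level(agents, traffic, handle_seconds, target_seconds):
    """Fraction of contacts answered within target_seconds."""
    wait = erlang_c(agents, traffic)
    return 1.0 - wait * math.exp(-(agents - traffic) * target_seconds / handle_seconds)


def min_agents(contacts_per_hour, handle_seconds, target_seconds, goal):
    """Smallest agent count whose modeled service level meets the goal."""
    traffic = contacts_per_hour * handle_seconds / 3600
    n = math.floor(traffic) + 1
    while service_level(n, traffic, handle_seconds, target_seconds) < goal:
        n += 1
    return n


# Hypothetical forecast: 200 contacts/hour, 180 s average handle time,
# goal of 80% answered within 20 s.
print(min_agents(200, 180, 20, 0.80))  # 14
```

In this example 13 agents fall just short of the 80% goal and 14 clear it comfortably, which shows how sensitive service level is to a single agent near the staffing threshold.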

Workforce optimization is a critical operational capability for contact centers managing large agent populations and fluctuating demand patterns. The GCX WFM exam is one example of specialized workforce management credentialing. These operational credentials validate expertise in contact center staffing optimization. Professionals who master workforce management directly affect operational efficiency and costs. The complexity of modern workforce management creates specialized career paths.

Advanced Cisco Development and Automation

Advanced Cisco development certifications validate expertise in creating automation solutions, integrating network systems, and developing applications that interact with Cisco infrastructure. These credentials demonstrate proficiency with APIs, software development practices, and automation frameworks used in modern network environments. Understanding network programmability, controller platforms, and orchestration tools enables professionals to automate operational tasks and create custom integrations. Development expertise transforms network management from manual configuration to automated, programmable infrastructure that scales efficiently. Organizations adopting network automation require professionals who combine networking knowledge with software development skills. The convergence of networking and software development creates new career paths.
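The move from manual configuration to programmable infrastructure can be illustrated by generating device configuration from structured data rather than typing it by hand. The sketch below uses only the Python standard library. The interface names, descriptions, and addresses are hypothetical, the output is generic IOS-style text for illustration, and nothing here calls a real Cisco API.

```python
from string import Template

# Generic IOS-style interface stanza; the placeholders are filled from data.
INTERFACE_TEMPLATE = Template(
    "interface $name\n"
    " description $description\n"
    " ip address $address $mask\n"
    " no shutdown\n"
)


def render_interfaces(interfaces):
    """Render one configuration stanza per interface record."""
    return "!\n".join(INTERFACE_TEMPLATE.substitute(record) for record in interfaces)


# Hypothetical inventory data; the addresses come from documentation ranges.
INVENTORY = [
    {"name": "GigabitEthernet0/1", "description": "uplink-to-core",
     "address": "192.0.2.1", "mask": "255.255.255.252"},
    {"name": "GigabitEthernet0/2", "description": "server-segment",
     "address": "198.51.100.1", "mask": "255.255.255.0"},
]

if __name__ == "__main__":
    print(render_interfaces(INVENTORY))
```

Keeping the inventory as data means the same template can produce consistent configuration for any number of devices, which is where automation begins to scale better than manual entry.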

Network programmability increasingly requires software development skills beyond traditional networking expertise as infrastructure becomes code-defined. Cisco 350-901 DEVCOR tutorials show what advanced development credentialing looks like for networking professionals. These development-focused credentials validate programming expertise within networking contexts. Professionals who combine networking and development skills address modern infrastructure requirements. The evolution toward programmable infrastructure requires expanded skill sets.

Expert-Level Routing and Switching

Expert-level routing and switching certifications represent pinnacle achievements in networking credentialing, validating comprehensive expertise across diverse networking technologies. These credentials demonstrate mastery of complex routing protocols, switching technologies, network design, and troubleshooting methodologies. Understanding enterprise and service provider networking scenarios enables professionals to design and support large-scale, mission-critical networks. Expert certifications require extensive preparation, including hands-on lab examinations that test practical troubleshooting and configuration skills. Professionals holding expert certifications access premium compensation and prestigious career opportunities in networking. The rigorous requirements create an exclusive community of highly skilled networking professionals.

Expert certifications represent career milestones demonstrating exceptional technical proficiency recognized throughout the networking industry. Reviewing Cisco 400-101 CCIE Routing and Switching tutorials illustrates the comprehensive preparation that expert credentials require. These elite certifications distinguish top networking professionals from general practitioners. Professionals who achieve expert status demonstrate sustained commitment to excellence. The prestige of expert certifications provides long-term career benefits.

Expert-Level Security Credentials

Expert-level security certifications validate comprehensive expertise across diverse security technologies, threat mitigation strategies, and secure network design principles. These credentials demonstrate mastery of security architecture, cryptography, identity services, and advanced threat protection technologies. Understanding both proactive security design and reactive incident response enables professionals to protect organizations comprehensively. Expert security certifications require extensive preparation, including practical lab examinations that test real-world security scenarios. Security professionals holding expert credentials access specialized roles protecting critical infrastructure and sensitive data. The increasing importance of cybersecurity elevates the value of expert security credentials.

Expert security credentials represent the highest achievement levels in specialized security domains and are recognized globally across industries. Investigating Cisco 400-251 CCIE Security tutorials shows the rigorous preparation that security expertise requires. These advanced credentials validate exceptional security proficiency. Professionals holding expert security certifications command premium compensation. The critical importance of security expertise drives demand for expert practitioners.

Customer Success and Adoption Specialization

Customer success certifications validate expertise in driving product adoption, customer retention, and value realization for technology solutions. These credentials demonstrate understanding of customer lifecycle management, adoption strategies, and success metrics measuring business outcomes. Understanding customer success principles enables professionals to help customers maximize return on technology investments through effective utilization. Customer success specialists combine technical knowledge, business acumen, and communication skills to bridge vendors and customers effectively. Organizations increasingly invest in customer success programs to reduce churn and expand accounts. The focus on customer outcomes rather than product features represents evolving sales and support approaches.

Customer success expertise combines technical understanding with business consulting capabilities to drive product adoption and renewal. Examining Cisco 820-605 tutorials reveals customer success credentialing for technology professionals. These business-focused credentials recognize skills beyond traditional technical expertise. Professionals who master customer success principles contribute directly to revenue retention. The growing importance of recurring revenue models elevates customer success specialization.

Application Virtualization and Delivery

Application virtualization certifications validate expertise in delivering applications through virtual desktop infrastructure and application streaming technologies. These credentials demonstrate proficiency with platforms that centralize application management while providing user access across diverse devices. Understanding virtual desktop architecture, profile management, performance optimization, and user experience monitoring enables effective virtual application delivery. Integration with application delivery controllers optimizes virtual desktop traffic and ensures a responsive user experience. Virtualization specialists enable organizations to deliver applications consistently across distributed workforces without traditional endpoint management complexities. The shift toward remote work accelerates virtual application delivery adoption.

Virtual application delivery technologies enable flexible work arrangements and centralized application management that reduces endpoint complexity. Reviewing Citrix 1Y0-203 CCA-V tutorials demonstrates virtualization credentialing pathways. These virtualization credentials validate specialized delivery expertise. Professionals who master virtual delivery technologies address modern workplace requirements. The increasing prevalence of remote work drives demand for virtualization expertise.

Expert-Level Virtualization Architecture

Expert-level virtualization certifications validate comprehensive expertise in designing and implementing large-scale virtual desktop and application delivery solutions. These credentials demonstrate mastery of architecture design, performance optimization, disaster recovery, and multi-site deployment strategies. Understanding complex virtualization scenarios enables professionals to support enterprise-scale deployments serving thousands of concurrent users. Expert virtualization specialists design solutions balancing performance, availability, security, and cost considerations across diverse requirements. Organizations with significant virtualization investments require expert-level architects who can ensure optimal design and implementation. The complexity of enterprise virtualization creates demand for specialized expertise.

Expert virtualization credentials represent advanced specialization in delivering applications and desktops through centralized infrastructure. Investigating Citrix 1Y0-401 CCE-V tutorials illustrates expert-level virtualization credentialing. These advanced credentials validate comprehensive virtualization mastery. Professionals achieving expert virtualization status access specialized architectural roles. The sophistication of modern virtualization deployments requires expert-level skills.

Container Orchestration Administration

Container orchestration certifications validate expertise in deploying and managing containerized applications using Kubernetes and related technologies. These credentials demonstrate proficiency with container concepts, cluster architecture, workload deployment, and operational management. Understanding container orchestration enables professionals to support modern application deployment patterns emphasizing portability and scalability. Container platforms increasingly host production workloads, which requires specialized operational expertise to ensure reliability and performance. Professionals with container expertise support organizations adopting cloud-native application architectures. The industry-wide adoption of containers creates strong demand for Kubernetes skills.

Container technologies fundamentally transform application deployment and management compared to traditional virtualization approaches. Exploring CNCF CKA Certified Kubernetes Administrator tutorials demonstrates container orchestration credentialing. These modern credentials validate expertise with emerging infrastructure paradigms. Professionals who master container orchestration position themselves for contemporary opportunities. The rapid adoption of containers creates urgent skill development needs.

Container Application Development

Container application development certifications validate expertise in building applications designed specifically for containerized deployment on Kubernetes platforms. These credentials demonstrate understanding of containerization principles, microservices architecture, and Kubernetes-native application patterns. Understanding container-native development enables professionals to create applications that fully leverage container orchestration capabilities. Developer-focused container certifications complement administrator credentials by addressing application design rather than platform operations. Organizations building cloud-native applications require developers who understand both application development and container deployment platforms. The convergence of development and operations creates demand for hybrid skills.

Application development for container platforms requires different approaches than traditional monolithic application development patterns. Reviewing CNCF CKAD Certified Kubernetes Application Developer tutorials illustrates development-focused container credentials. These developer credentials recognize application-building expertise within container ecosystems. Professionals who master container-native development create modern, scalable applications. The shift toward microservices architecture drives container development skill requirements.

Hardware and Software Troubleshooting

Hardware and software troubleshooting certifications validate foundational IT skills including hardware component identification, operating system installation, and basic troubleshooting methodologies. These entry-level credentials demonstrate competency with desktop and laptop computers, peripherals, and end-user support scenarios. Understanding hardware architecture, operating system fundamentals, and troubleshooting processes provides the foundation for IT career development. Entry-level certifications enable career entry for individuals without prior professional IT experience through validated baseline skills. Many IT professionals begin careers with foundational certifications before specializing in particular domains. The accessibility of entry-level credentials creates pathways into IT careers.

Foundational IT skills provide essential baseline knowledge supporting progression into specialized domains throughout IT careers. Investigating CompTIA 220-1001 (CompTIA A+) tutorials demonstrates entry-level IT credentialing. These foundational credentials validate essential IT skills. Professionals beginning IT careers benefit from structured foundational knowledge. Establishing core competencies through entry-level credentials enables subsequent specialization.

Operating System and Software Management

Operating system and software management certifications validate expertise configuring operating systems, installing software, managing security, and troubleshooting operational issues. These credentials demonstrate proficiency with Windows environments, security best practices, and software troubleshooting methodologies. Understanding operating system architecture, software deployment, and basic networking enables support of business workstations and user productivity. Entry-level certifications combined with practical experience create career pathways into specialized IT roles. Organizations require support professionals maintaining end-user computing environments and resolving common issues. The fundamental nature of operating system skills ensures ongoing relevance across IT domains.

Operating system expertise represents essential knowledge for IT professionals across diverse specializations and career levels. Examining CompTIA 220-1002 (CompTIA A+) tutorials illustrates operating system credentialing. These system administration credentials validate practical operational skills. Professionals who master operating systems build foundations for advanced specializations. The universal applicability of operating system knowledge ensures career-long value.

Conclusion